By Renjie

Note: The following analysis is based on the open-source v19.15.2.2-stable.

ClickHouse is a popular open-source columnar storage analytic database featuring excellent storage compression ratios and query performance. I have been studying ClickHouse recently. I believe storage is the key to the core competitiveness and applicable scenarios of a database. Therefore, the most important MergeTree storage kernel in ClickHouse will be analyzed in a series of articles. This article introduces the MergeTree storage format and analyzes the retrieval performance of MergeTree storage.

When it comes to MergeTree, it is reminiscent of the data structure LSM-Tree. LSM-Tree is often used to solve the performance problem of random disk writing. MergeTree has the same core idea as LSM-Tree. The MergeTree storage structure sorts the written data first and then stores it. Orderly data storage has two core advantages:

A series of MergeTree table engines can be found in the ClickHouse official documents , including the basic MergeTree, ReplacingMergeTree, CollapsingMergeTree, and VersionedCollapsingMergeTree, which support data deduplication and SummingMergeTree and AggregatingMergeTree, which support data aggregation. However, these MergeTree table engines with "special capabilities" are the same as the basic MergeTree table engine in terms of storage. They all add "additional merge logic" in the data merging process. This will be explained further in subsequent articles about the asynchronous Merge mechanism of MergeTree.

The following shows the test table DDL of a POC user to help understand the table storage structure. The kernel design of MergeTree storage will be analyzed based on this table. The PARTITION BY assertion shows that the user has created data partitions by hourly granularity in each partition. The data in each data partition is stored in sequence with (action_id, scene_id, time_ts, level, uid) as the sort key.

CREATE TABLE user_action_log (

`time` DateTime DEFAULT CAST('1970-01-01 08:00:00', 'DateTime') COMMENT 'Log time',

`action_id` UInt16 DEFAULT CAST(0, 'UInt16') COMMENT 'Log behavior type id',

`action_name` String DEFAULT '' COMMENT 'Log behavior type name',

`region_name` String DEFAULT '' COMMENT 'Region name',

`uid` UInt64 DEFAULT CAST(0, 'UInt64') COMMENT 'User id',

`level` UInt32 DEFAULT CAST(0, 'UInt32') COMMENT 'Current level',

`trans_no` String DEFAULT '' COMMENT 'Transaction serial number',

`ext_head` String DEFAULT '' COMMENT 'Extended log head',

`avatar_id` UInt32 DEFAULT CAST(0, 'UInt32') COMMENT 'Avatar id',

`scene_id` UInt32 DEFAULT CAST(0, 'UInt32') COMMENT 'Scene id',

`time_ts` UInt64 DEFAULT CAST(0, 'UInt64') COMMENT 'Second timestamp',

index avatar_id_minmax (avatar_id) type minmax granularity 3

) ENGINE = MergeTree()

PARTITION BY (toYYYYMMDD(time), toHour(time), region_name)

ORDER BY (action_id, scene_id, time_ts, level, uid)

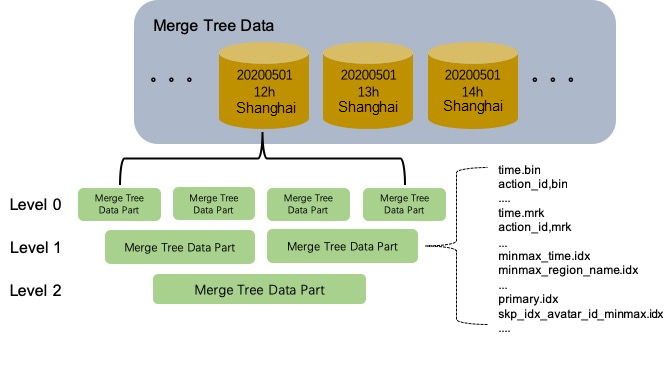

PRIMARY KEY (action_id, scene_id, time_ts, level);The following figure shows the MergeTree storage structure logic of the table:

In the storage structure of the MergeTree table, each data partition is independent of each other with no logical connections. A single partition contains multiple MergeTree Data Parts. Once these Data Parts are generated, they are immutable. The generation and destruction of Data Parts are mainly related to writing and asynchronous Merge. The write link of the MergeTree table is an extreme batch load process, and the Data Part does not support the single append insert. A new MergeTree Data Part is generated for each batch insert operation. If a record is inserted one at a time, an independent Data Part is generated for that record, which is unacceptable. Generally, when using the MergeTree table engine, aggregation needs to be performed at the client-side for batch writing. Alternatively, you can create a Distributed table based on the MergeTree table to proxy the writing and query of the MergeTree table. The Distributed table caches the written data by default and asynchronously forwards the data to the MergeTree table when exceeding a specific time or volume of cache data. The MergeTree storage engine is not friendly to scenarios with high real-time data visibility requirements.

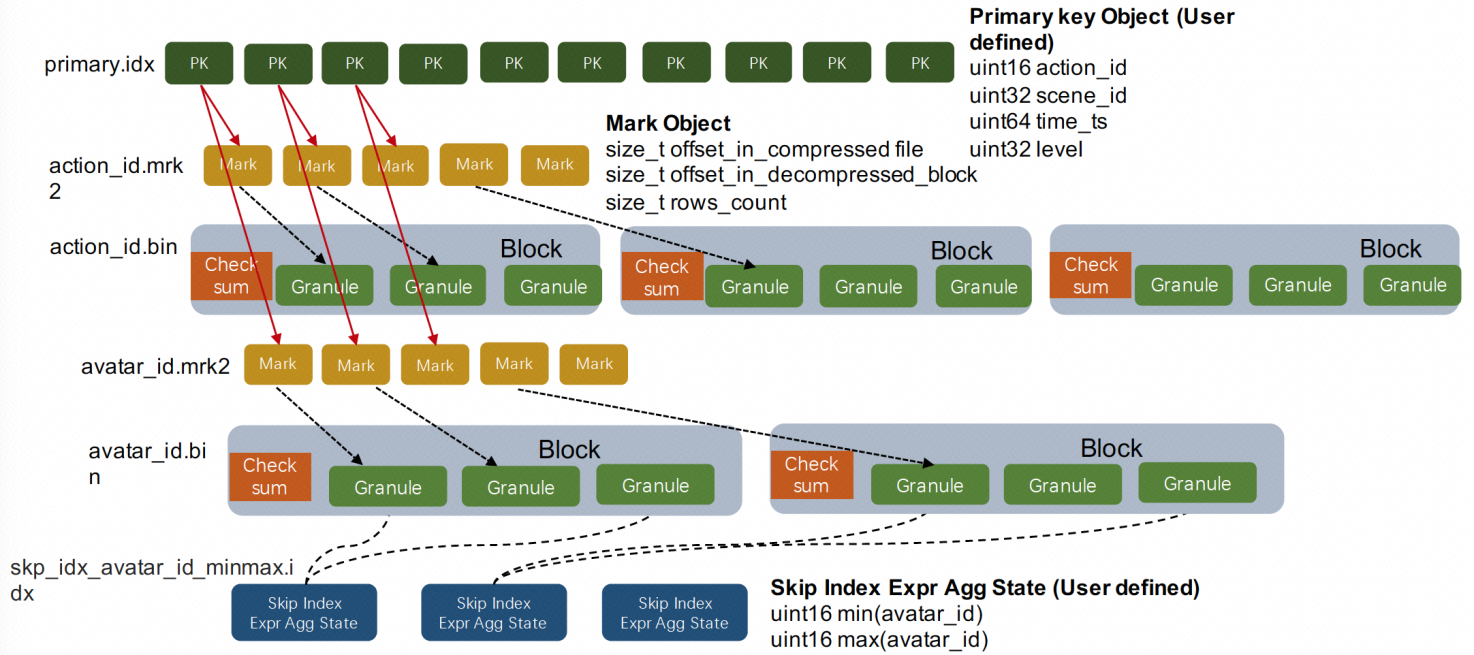

The preceding figure shows some of the core disk files in the MergeTree Data Part. Only the storage files in the action_id and avatar_id columns are shown. There are three main functional categories:

1. Data Files: action_id.bin, avatar_id.bin, and others are column-store files after the single column is compressed by block. ClickHouse adopts an extreme column-store mode. To be more specific, a single column of data may correspond to multiple column-store files. For example, a nullable column-store file is generated when declaring a Nullable field, and a column-store file of array size is generated when declaring an Array field. When dictionary compression is performed, the dictionary Key becomes a separate column-store file. Tips: When the special identifier of Null value is not needed, do not declare the Nullable. This is an extremely simplified design idea for ClickHouse.

2. Files with Mark Identifiers: action_id.mrk2, avatar_id.mrk2, and others are Mark identifiers in the column-store files. The Mark identifier is related to two important concepts in MergeTree columnar storage, namely, Granule and Block.

index_granularity_bytes parameter in the current version also affects the number of rows contained by a Granule. This parameter ensures that the sum size of all columns in a Granule does not exceed the specified value. In earlier versions, a major problem with the fixed-length Granule setting is that data in MergeTree is indexed by Granule. The size of data reads from the storage will increase significantly because of the coarse granularity in analyzing super-large wide tables. The parameters need to be set with caution.min_compress_block_size parameter. It checks whether the current Block size has reached the set value when the data in a Granule is written in a Block. If so, the current Block is compressed and then written to the disk.3. Primary Key Index: primary.idx is the primary key index of the table. The definition of the primary key index by ClickHouse is slightly different from traditional databases. The primary key index of ClickHouse does not include primary key deduplication, but it is capable of quickly finding the primary key rows. It stores the primary key value of the start row in each Granule, while the data in MergeTree storage is strictly sorted according to the primary key. So, when the primary key condition is given for a query, the possible Granular Range where data lies can be determined according to the primary key index. Together with the above Mark identifier, the position interval of the data in the column-store file can be determined. The primary key index of ClickHouse is a rough index reaching a relative balance between index construction cost and indexing efficiency. By default, the primary key sequence of MergeTree is consistent with the Order By sequence. However, users can define the primary key sequence as part of the prefixes of the Order By sequence.

4. Partition Key Index: minmax_regionidx and minmax_region_name.idx are the partition key indexes of the table. MergeTree storage counts the maximum and minimum partition key values in each Data Part. When a user query contains partition key conditions, irrelevant Data Parts can be excluded. This is a common partition pruning technology in OLAP scenarios.

5. Skipping Index: skp_idx_avatar_id_minmax.idx is the MinMax index defined by the user in the avatar_id column. The skipping index in MergeTree is a rough index of local aggregation. The granularity parameter needs to be set when defining the skipping index. Here, the granularity parameter specifies how many Granules of data are aggregated to generate index information. Users also need to configure the corresponding aggregation function for the index. Minmax, set, bloom_filter, and ngrambf_v1 are common aggregation functions. The aggregation function counts the column values in several consecutive Granules to generate index information. The skipping index is similar to the primary key index. Since the data is sorted by primary key, the primary key index counts the MinMax value of the primary key sequence in each Granule. The skipping index provides a wider variety of aggregation functions that supplements the primary key index. In addition, both indexes must be designed for specific business scenarios based on the understanding of indexing principles.

This section analyzes the data query process on the MergeTree table engine combined with the source code of ClickHouse. It is roughly divided into two parts: index retrieval and data scanning. The index retrieval is executed serially in each MergeTree Data Part, but the retrieval between the Data Parts is not associated at all. While in data scanning, the columnar storage scanning at the bottom layer in each Data Part is executed in parallel. Similarly, there is no association between the columnar storage scanning in each Data Part.

First, MergeTree storage extracts the KeyCondition of the partition key and the primary key in the query when receiving a select query. The following three methods are implemented on the KeyCondition to determine the Mark Range that may meet the filtering condition. As mentioned in the previous section, the column-store data in the MergeTree Data Part is indexed by Granularity by the Mark identifier groups, and the Mark Range indicates the subscript range in the Mark identifier groups that meet the query condition.

/// Whether the condition is feasible in the key range.

/// left_key and right_key must contain all fields in the sort_descr in the appropriate order.

/// data_types - the types of the key columns.

bool mayBeTrueInRange(size_t used_key_size, const Field * left_key, const Field * right_key, const DataTypes & data_types) const;

/// Whether the condition is feasible in the direct product of single column ranges specified by `parallelogram`.

bool mayBeTrueInParallelogram(const std::vector<Range> & parallelogram, const DataTypes & data_types) const;

/// Is the condition valid in a semi-infinite (not limited to the right) key range.

/// left_key must contain all the fields in the sort_descr in the appropriate order.

bool mayBeTrueAfter(size_t used_key_size, const Field * left_key, const DataTypes & data_types) const;In the index retrieval process, prune irrelevant data partitions with the partition key KeyCondition first. Then, select the rough Mark Ranges using the primary key index. Finally, filter the Mark Ranges generated by the primary key index with skipping index. The algorithm for the primary key index to pick out rough Mark Ranges is a process of constantly splitting Mark Ranges. The returned result is a set of Mark Ranges. The initial Mark Range covers the entire MergeTree Data Part. For each splitting, the newly split Mark Ranges are split into finer Mark Ranges by certain granularity, and those that do not meet the specified conditions are excluded. When the Mark Range reaches a certain granularity, the splitting is finished. This is a simple and efficient rough filtering algorithm.

Before using the skipping index to filter the Mark Ranges returned by the primary key index, the IndexCondition should be constructed for each skipping index. Different skipping index aggregation functions have different IndexCondition implementations, but the interface for determining whether a Mark Range meets the conditions is similar to KeyCondition.

After the index filtering in the previous section, the obtained set of Mark Ranges needs to be scanned. This section briefly introduces how data Sampling in MergeTree is implemented. It is not implemented during data scanning but during index retrieval, which intends to achieve greater sample efficiency. When creating a table, users can specify a column or expression in the primary key as the Sampling key. ClickHouse decides the value of the Sampling key must be numeric, and the system values are randomly and evenly distributed. If the value type of the Sampling key is Uint32 with the sample ratio set to 0.1, the sample will be converted to a filter condition in index retrieval: the value of the Sampling key is less than Uint32::max * 0.1. Users must be aware of this detail when using the Sampling function, otherwise, Sampling deviation may occur. Generally, the Sampling key is recommended to be randomly scattered by the column value and a Hash function.

MergeTree provides three different modes for data scanning:

The following describes several key performance optimizations in the Normal mode:

After a deep insight into the ClickHouse source code and its kernel implementation, I think ClickHouse is not a perfect analytic database yet, but it has many ultimate performance optimization designs. All these designs originate from Yandex's real analysis scenarios and can solve some business problems practically with massive data. I believe ClickHouse can bring users the best performance experience in some business scenarios.

Alibaba Cloud has launched the ClickHouse cloud hosting product. You can visit the product homepage for more information. Clickhouse product link: https://www.alibabacloud.com/product/clickhouse

ApsaraDB - July 7, 2021

ApsaraDB - July 29, 2022

ApsaraDB - May 7, 2021

ApsaraDB - May 7, 2021

ApsaraDB - July 8, 2021

ApsaraDB - July 7, 2021

ApsaraDB for ClickHouse

ApsaraDB for ClickHouse

ApsaraDB for ClickHouse is a distributed column-oriented database service that provides real-time analysis.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn More ApsaraDB for OceanBase

ApsaraDB for OceanBase

A financial-grade distributed relational database that features high stability, high scalability, and high performance.

Learn More ApsaraDB for Cassandra

ApsaraDB for Cassandra

A database engine fully compatible with Apache Cassandra with enterprise-level SLA assurance.

Learn MoreMore Posts by ApsaraDB