The data volume on data platforms in various industries is growing in the data age. The requirements regarding users' personalized operation are also being highlighted. Therefore, the user tag system has emerged as a basic service for personalized operation. Nowadays, real-time, precise marketing is required in almost all industries, including the Internet, gaming, and education. You can locate target groups by generating user profiles and filtering users with a combination of conditions in marketing. For example:

Let's take a typical target crowd selection scenario in e-commerce platforms as an example. The following table describes how to collect, tag, and clean potential customer information in the apparel industry:

In the table above, the first column is the unique identifier of the users, which is often used as the primary key. The other columns are all tag columns.

If the company wants to launch a high-end male sports product, the selection conditions are listed below:

The table above shows the typical table structure for crowd selection, with the first column displaying the user ID and all the others as tag columns and query conditions. The crowd selection service faces some common pain points:

This article provides an in-depth analysis and description of how ClickHouse builds a crowd selection system, why ClickHouse is important, and its comparative advantages.

This article uses open-source Elasticsearch (ES) as an example to compare ClickHouse in terms of crowd selection. The open-source ES is an efficient search engine. ES can perform complex combination operations and data aggregation with its excellent indexing technology. ClickHouse is a popular open-source columnar storage AnalyticDB. Its core feature is its high storage compression ratio and query performance, especially large table queries. Therefore, compared with ClickHouse, ES has the necessary ca pabilities for crowd selection, but it still has the following three deficiencies:

Open-source Elasticsearch (ES) uses Lucene as the underlying storage, which mainly includes row storage (storefailed), columnar storage (docvalues), and inverted indexes (invertindex). The _source field in row storage controls raw doc data storage. When writing data, ES regards the entire json structure of raw doc data as a string and stores it as a _source field. Hence, the _source field occupies a large storage space, and update operation is not supported after _source field is disabled. Indexing is also an indispensable part of ES. By default, all columns are indexed in ES. Specific columns can be set to be unindexed, but query will not be supported on the unindexed columns. In the crowd selection scenario, the conditions for filtering tags are arbitrary, varied, and change constantly. It is unrealistic not to index on any tag columns. Therefore, for a large, wide table with hundreds of columns, the storage cost must be doubled with a full-column index.

ClickHouse is a complete columnar storage database. Since it does not rely on indexes for query or force index building, no additional index files are retained. At the same time, the number of ClickHouse storage data copies can be adjusted to minimize costs.

Indexes provide efficient query performance for ES, but index construction is complicated and time-consuming. Each time, the index creation requires scanning and sorting the data in a column to generate an index file. However, in the crowd selection business, the crowd information is increasing continuously. The correspondingly constant updating of tags will force ES to recreate the indexes frequently, which will be a huge burden to ES performance.

ClickHouse queries are index-independent, and no index creation is required. Therefore, for newly added data, index update and maintenance are not included by ClickHouse.

Open-source ES does not completely support SQL, and the json format of query requests is complex. At the same time, ES lacks optimization for the execution strategy of multi-condition filtering and aggregation. Let's take sorting out of the target group of a high-end male sports product like the example mentioned above. The following SQL statements are available: SELECT user_id FROM whatever_table WHERE city_level = 'first-tier city' AND gender = 'male' AND is_like_sports = 'yes ';

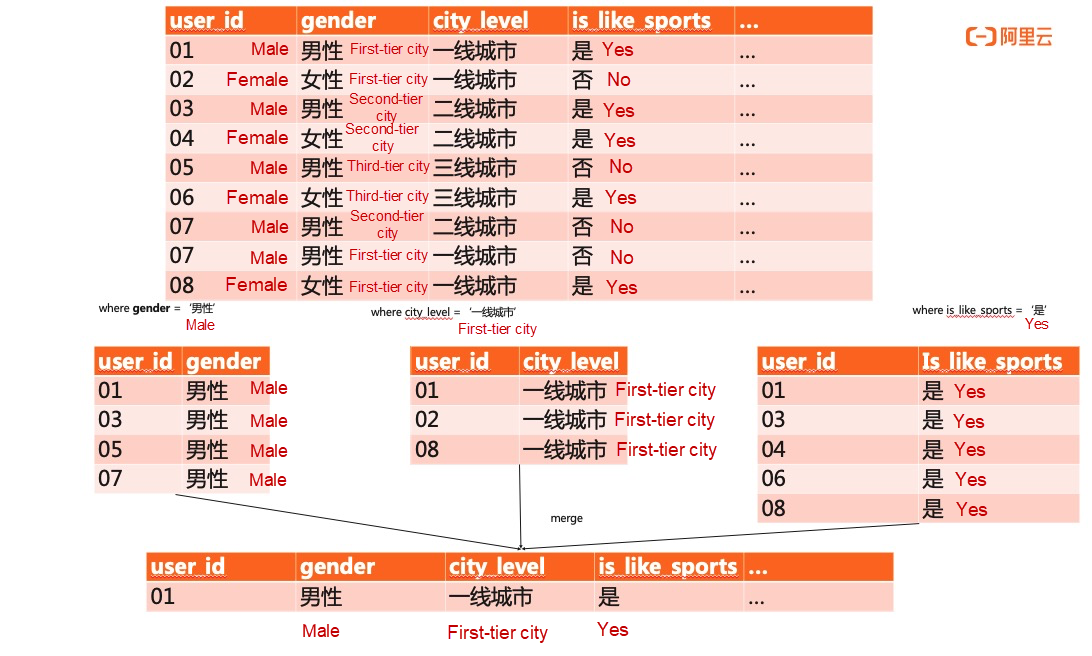

For the preceding SQL statements, ES performs three index scans on the three tags and then merges the results of the three scans, as shown in the following figure:

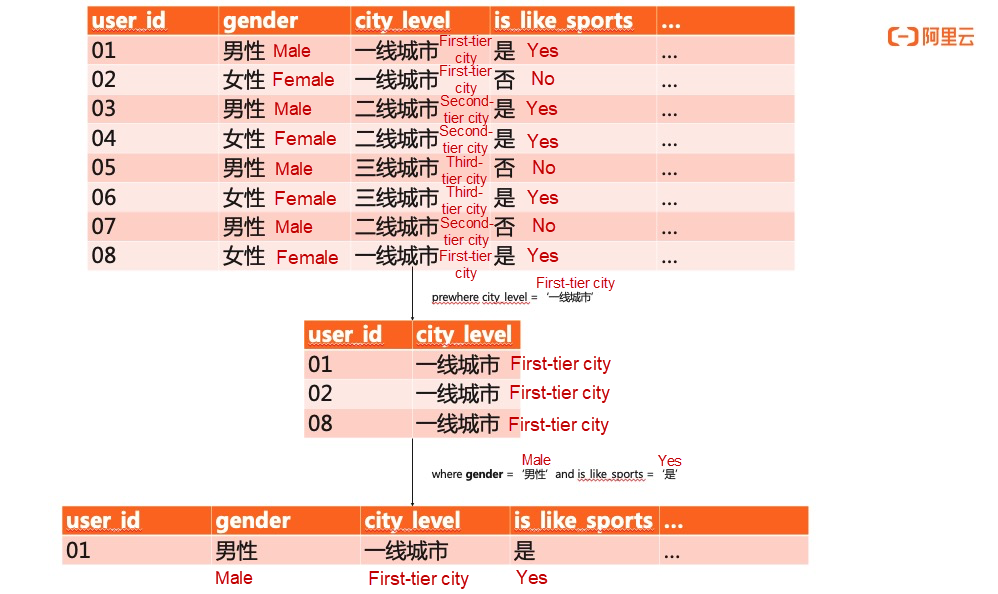

The execution of ClickHouse is more elegant. ClickHouse adopts a standard SQL statement, which is simple and useful. When the where statement is executed, a layer will be generated automatically to execute the PreWhere statement. Therefore, the second scan is performed based on the result of the first scan, as shown in the following figure:

ClickHouse optimizes the filtering process of complex multi-condition filtering scenarios. The scanned data volume is smaller with more efficient performance than ES.

After the model selection, the next thing is building a crowd selection system based on ClickHouse. After reviewing the service description at the beginning of the article and the typical SQL statements in the above part (SELECT user_id FROM whatever_table WHERE city_level = 'first-tier city' AND gender = 'male' AND is_like_sports = 'yes'; ), the requirements of the crowd selection business on database capabilities are listed below:

How can we make full use of ClickHouse in the crowd selection scenario while avoiding its disadvantages and addressing the needs listed above?

The asynchronous Update mechanism of ClickHouse should be prioritized. The execution of Update by ClickHouse is inefficient. Once a Data Part is generated by the MergeTree storage in the ClickHouse kernel, this Data Part cannot be changed. Therefore, at the MergeTree storage kernel level, ClickHouse is inept at updating and deleting data. Bare Update operations are not supported by ClickHouse and thus are added to the Alter Table.

ALTER TABLE [db.]table UPDATE column1 = expr1 [, ...] WHERE filter_expr;So when an Update operation (above) is performed and responded, the ClickHouse kernel only does the following two things:

Despite the extreme complexity, the workflow of asynchronous threads are summarized below:

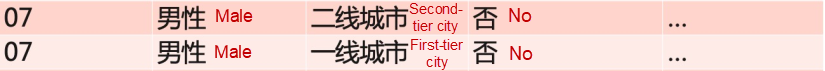

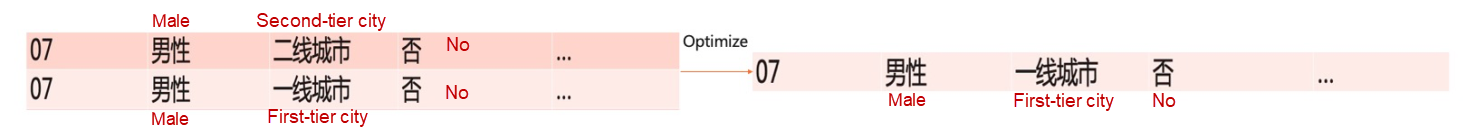

This is how ClickHouse executes the Update command. Frequent Update operations are disastrous for ClickHouse. Therefore, the update statement is substituted with the insert statement. New data from that user needs to be inserted to update tags of a specified user. For example, update the data of user 07 in the table:

Consequently, there may be multiple records for each user. For the crowd selection scenario, the query results are misled by the same user's disordered and redundant information. Thus, the requirements for precise selection cannot be met. The next section describes how to use primary keys to eliminate duplication in ClickHouse. You must update the same user's data by overwriting the existing data with the newly inserted data.

MergeTree is the most important and core storage kernel in ClickHouse. MergeTree is similar to LSM-Tree in terms of the idea. The complexity is difficult to explain clearly in a single article, so the implementation principles will not be explained here. This section of the article focuses on the crowd selection scenario and describes how to use the AggregatingMergeTree, the MergeTree variant, and the data aggregation effects using AggregatingMergeTree. Derived from MergeTree, AggregatingMergeTree is identical to the basic MergeTree in terms of storage. However, for the former, "additional merge logic" is added in the data merge process. AggregatingMergeTree substitutes a single row that stores a series of aggregated functions state for all rows with the same primary key (within a data fragment.) Let's take the table architecture from the beginning of this article as an example. The following shows how to create a table using the AggregatingMergeTree table engine:

CREATE TABLE IF NOT EXISTS whatever_table ON CLUSTER default

(

user_id UInt64,

city_level SimpleAggregateFunction(anyLast, Nullable(Enum('First-tier city' = 0, 'Second-tier city' = 1, 'Third-tier city' = 2, 'Fourth-tier city' = 3))),

gender SimpleAggregateFunction(anyLast, Nullable(Enum('Female' = 0, 'Male' = 1))),

interest_sports SimpleAggregateFunction(anyLast, Nullable(Enum('No' = 0, 'Yes' = 1))),

reg_date SimpleAggregateFunction(anyLast, Datetime),

comment_like_cnt SimpleAggregateFunction(anyLast, Nullable(UInt32)),

last30d_share_cnt SimpleAggregateFunction(anyLast, Nullable(UInt32)),

user_like_consume_trend_type SimpleAggregateFunction(anyLast, Nullable(String)),

province SimpleAggregateFunction(anyLast, Nullable(String)),

last_access_version SimpleAggregateFunction(anyLast, Nullable(String)),

others SimpleAggregateFunction(anyLast,Array(String))

)ENGINE = AggregatingMergeTree() partition by toYYYYMMDD(reg_date) ORDER BY user_id;Based on the preceding table creating statement, each row of data except for the primary key (user) is altered into a pre-aggregated state by AggregatingMergeTree in combination with anyLast function. The anyLast aggregation function declares that the aggregation policy is to retain the last updated data.

The previous section describes how to select a table engine and an aggregation function for crowd selection scenarios. However, AggregatingMergeTree does not guarantee that the results of any query are aggregated, and no flag is provided to check the aggregation state and progress. Therefore, the optimize command needs to be manually issued to force the aggregation to ensure the data is aggregated before the query. Besides, for convenience, the optimize command can be set to be issued periodically. For example, you can issue the optimize command every ten minutes. The execution cycle of the optimize command can be determined on real-time business requirements and computing resources. If the data volume is too large for the optimize command to take effect, the optimize command can be issued in parallel at the partition level. After the optimize operation takes effect, the deduplication logic can be implemented.

import java.sql.*;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.util.concurrent.TimeoutException;

public class Main {

private static final String DATE_FORMAT = "yyyy-MM-dd HH:mm:ss";

private static final SimpleDateFormat SIMPLE_DATE_FORMAT = new SimpleDateFormat(DATE_FORMAT);

public static void main(String[] args) throws ClassNotFoundException, SQLException, InterruptedException, ParseException {

String url = "your url";

String username = "your username";

String password = "your password";

Class.forName("ru.yandex.clickhouse.ClickHouseDriver");

String connectionStr = "jdbc:clickhouse://" + url + ":8123";

try {

Connection connection = DriverManager.getConnection(connectionStr, username, password);

Statement stmt = connection.createStatement();

// Create a local table

String createLocalTableDDL = "CREATE TABLE IF NOT EXISTS whatever_table ON CLUSTER default " +

"(user_id UInt64, " +

"city_level SimpleAggregateFunction(anyLast, Nullable(Enum('First-tier city' = 0, 'Second-tier city' = 1, 'Third-tier city' = 2, 'Fourth-tier city' = 3))), " +

"gender SimpleAggregateFunction(anyLast, Nullable(Enum('Female' = 0, 'Male' = 1)))," +

"interest_sports SimpleAggregateFunction(anyLast, Nullable(Enum('No' = 0, 'Yes' = 1)))," +

"reg_date SimpleAggregateFunction(anyLast, Datetime)) " +

"comment_like_cnt SimpleAggregateFunction(anyLast, Nullable(UInt32)),\n" +

"last30d_share_cnt SimpleAggregateFunction(anyLast, Nullable(UInt32)),\n" +

"user_like_consume_trend_type SimpleAggregateFunction(anyLast, Nullable(String)),\n" +

"province SimpleAggregateFunction(anyLast, Nullable(String)),\n" +

"last_access_version SimpleAggregateFunction(anyLast, Nullable(String)),\n" +

"others SimpleAggregateFunction(anyLast, Array(String)),\n" +

"ENGINE = AggregatingMergeTree() PARTITION by toYYYYMM(reg_date) ORDER BY user_id;";

stmt.execute(createLocalTableDDL);

System.out.println("create local table done.");

// Create a distributed table

String createDistributedTableDDL = "CREATE TABLE IF NOT EXISTS whatever_table_dist ON cluster default " +

"AS default.whatever_table " +

"ENGINE = Distributed(default, default, whatever_table, intHash64(user_id));";

stmt.execute(createDistributedTableDDL);

System.out.println("create distributed table done");

// Insert mock data

String insertSQL = "INSERT INTO whatever_table(\n" +

"\tuser_id,\n" +

"\tcity_level,\n" +

"\tgender,\n" +

"\tinterest_sports,\n" +

"\treg_date,\n" +

"\tcomment_like_cnt,\n" +

"\tlast30d_share_cnt,\n" +

"\tuser_like_consume_trend_type,\n" +

"\tprovince,\n" +

"\tlast_access_version,\n" +

"\tothers\n" +

"\t)SELECT\n" +

" number as user_id,\n" +

" toUInt32(rand(11)%4) as city_level,\n" +

" toUInt32(rand(30)%2) as gender,\n" +

" toUInt32(rand(28)%2) as interest_sports,\n" +

" (toDateTime('2020-01-01 00:00:00') + rand(1)%(3600*24*30*4)) as reg_date,\n" +

" toUInt32(rand(15)%10) as comment_like_cnt,\n" +

" toUInt32(rand(16)%10) as last30d_share_cnt,\n" +

"randomPrintableASCII(64) as user_like_consume_trend_type,\n" +

"randomPrintableASCII(64) as province,\n" +

"randomPrintableASCII(64) as last_access_version,\n" +

"[randomPrintableASCII(64)] as others\n" +

" FROM numbers(100000);\n";

stmt.execute(insertSQL);

System.out.println("Mock data and insert done.");

System.out.println("Select count(user_id)...");

ResultSet rs = stmt.executeQuery("select count(user_id) from whatever_table_dist");

while (rs.next()) {

int count = rs.getInt(1);

System.out.println("user_id count: " + count);

}

// Merge data

String optimizeSQL = "OPTIMIZE table whatever_table final;";

// If the data merge time is too long, execute the optimize command in parallel at the partition level.

String optimizeByPartitionSQL = "OPTIMIZE table whatever_table PARTITION 202001 final;";

try {

stmt.execute(optimizeByPartitionSQL);

}catch (SQLTimeoutException e){

// View merge progress

// String checkMergeSQL = "select * from system.merges where database = 'default' and table = 'whatever_table ';";

Thread.sleep(60*1000);

}

// Crowd Selection(city_level='First-tier city',gender='Male',interest_sports='Yes', reg_date<='2020-01-31 23:59:59')

String selectSQL = "SELECT user_id from whatever_table_dist where city_level=0 and gender=1 and interest_sports=1 and reg_date <= NOW();";

rs = stmt.executeQuery(selectSQL);

while (rs.next()) {

int user_id = rs.getInt(1);

System.out.println("Got suitable user: " + user_id);

}

} catch (Exception e) {

e.printStackTrace();

}

}

}Alibaba Cloud has launched the ClickHouse cloud hosting product. You can visit the product homepage for more information ApsaraDB for ClickHouse .

Alibaba Clouder - December 21, 2020

Alibaba Cloud MaxCompute - December 6, 2021

Hologres - June 16, 2022

ApsaraDB - December 21, 2022

ApsaraDB - December 21, 2022

Alibaba Cloud Serverless - July 17, 2023

Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Personalized Content Recommendation Solution

Personalized Content Recommendation Solution

Help media companies build a discovery service for their customers to find the most appropriate content.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn MoreMore Posts by ApsaraDB