By Renjie

This article describes the DDL syntax, several typical scenarios, and features of the secondary index in detail. The author hopes to help users gain a deeper understanding of ClickHouse in OLAP scenarios and elaborate on applicable search scenarios for secondary indexes.

The secondary index feature of ClickHouse was officially released recently. It makes up for the insufficient multi-dimensional point query capability in massive data analysis scenarios. While supporting users, the author found that most of them do not know how to optimize the single-table query performance of ClickHouse. The author therefore takes this opportunity to introduce several methods for optimizing single-table analysis and query performance in ClickHouse. These methods cover most of the common techniques for accelerating storage-layer scanning in the OLAP field.

After solving performance optimization problems in various business scenarios, the author found that ClickHouse could not yet solve the multi-dimensional search problem: a point query often wastes huge I/O and CPU resources. Therefore, ClickHouse now provides an exclusive secondary index feature to solve this problem.

Before introducing the optimization technologies for OLAP storage scanning performance, the author describes a simple cost model and some background knowledge of OLAP. This article uses the simplest cost model to calculate the overhead of OLAP storage scanning: the amount of data read from disk. In a column-based storage and computing engine such as ClickHouse, data is compressed, computed, and transferred by column in blocks, and data can only be read block by block at specified locations. Even when a user queries one specific record by UID, the amount of data read from disk is amplified to the block size multiplied by the number of columns. Optimization factors such as BlockCache and PageCache are not considered in this article because the effect of caching is not stable.
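The cost model above can be written down as a few lines of Python. This is only a sketch: the function name and the sample numbers (8,192-row blocks, 50 columns, 8 bytes per value) are assumptions for illustration, and compression is ignored.

```python
def bytes_read_for_point_query(block_rows, num_columns, avg_value_bytes, matching_blocks=1):
    """Estimate bytes scanned from disk when whole blocks are read for every column."""
    return matching_blocks * block_rows * num_columns * avg_value_bytes

# Hypothetical table: 8192-row blocks, 50 columns, 8 bytes per value (uncompressed).
point_query_bytes = bytes_read_for_point_query(8192, 50, 8)

# Bytes of the single row the user actually asked for.
useful_bytes = 50 * 8

# Read amplification: how much more data is read than is needed.
amplification = point_query_bytes // useful_bytes
```

Under these assumptions, a point query for one row reads a full block of every column, so the amplification equals the number of rows in a block.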

ClickHouse relies on the sort key as the main technology for storage scanning acceleration. It means the data in each DataPart at the storage layer is stored in a strict order based on the sort key. This ordered storage mode lays the basis for "skip" scanning of ClickHouse and the high compression ratio of duplicate data. If you are unfamiliar with the MergeTree Storage Structure of ClickHouse, please refer to the previous article, ClickHouse Kernel Analysis - Storage Structure and Query Acceleration of MergeTree.

CREATE TABLE order_info

(

`oid` UInt64, --Order ID

`buyer_nick` String, --Buyer ID

`seller_nick` String, --Seller ID

`payment` Decimal64(4), --Payment amount

`order_status` UInt8, --Order status

...

`gmt_order_create` DateTime, --Order creation time

`gmt_order_pay` DateTime, --Payment time

`gmt_update_time` DateTime, --Record update time

INDEX oid_idx (oid) TYPE minmax GRANULARITY 32

)

ENGINE = MergeTree()

PARTITION BY toYYYYMMDD(gmt_order_create) --Partition by day

ORDER BY (seller_nick, gmt_order_create, oid) --Sort key

PRIMARY KEY (seller_nick, gmt_order_create) --Primary key

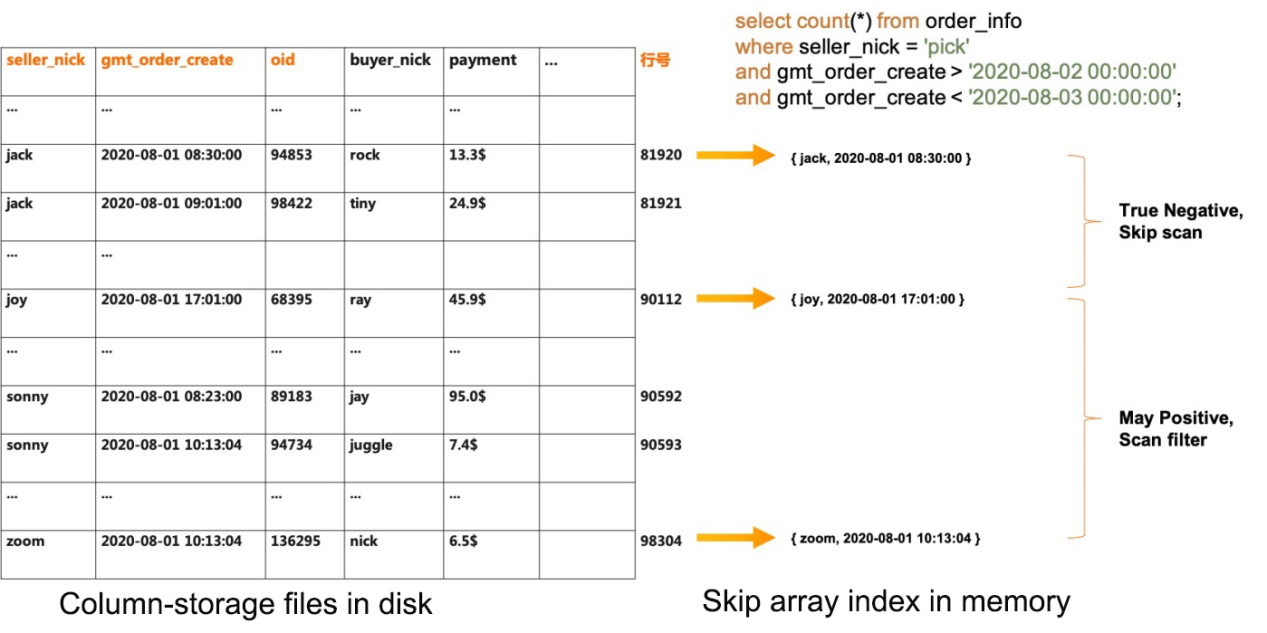

SETTINGS index_granularity = 8192;Take a simple order scenario as an example. The table structure is listed above. ORDER BY defines that the records in the data file will be stored in an absolute order based on the combined sort key of the seller ID, order creation time, and order ID. PRIMARY KEY and index_granularity define the index structure on the sort key. ClickHouse constructs a "skip array" for each ordered data file as the index, where the records are extracted from the original data file at a certain interval. In other words, a row of the record is abstracted by every index_granularity. At the same time, only the seller_nick and gmt_order_create prefix columns in PRIMARY KEY are retained. As shown in the following figure, with this memory-based "skip array" as the index, the optimizer can exclude rows irrelevant to the query quickly, reducing the amount of data by disk scanning. The reason why the oid column is not put in PRIMARY KEY is related to the set value of index_granularity.
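The "skip array" idea can be illustrated with a small Python sketch. It is not ClickHouse code: the helper names, the toy rows, and the granularity of 4 are assumptions chosen to keep the example readable.

```python
from bisect import bisect_left, bisect_right

def build_sparse_index(sorted_rows, index_granularity, key_len):
    """Keep the primary-key prefix of every index_granularity-th row."""
    return [row[:key_len] for row in sorted_rows[::index_granularity]]

def granules_for_seller(marks, seller):
    """Return the granule numbers that may contain rows of `seller`."""
    firsts = [mark[0] for mark in marks]
    # The granule just before the first mark >= seller may hold the seller in its tail.
    lo = max(bisect_left(firsts, seller) - 1, 0)
    hi = bisect_right(firsts, seller)
    return range(lo, hi)

# Toy rows (seller_nick, gmt_order_create, oid), already sorted by the sort key.
rows = [
    ("a", 1, 100), ("a", 2, 101), ("a", 3, 102), ("a", 4, 103),
    ("a", 5, 104), ("b", 1, 105), ("b", 2, 106), ("b", 3, 107),
    ("c", 1, 108), ("c", 2, 109), ("c", 3, 110), ("c", 4, 111),
]

# index_granularity = 4, PRIMARY KEY = first two columns of the sort key.
marks = build_sparse_index(rows, 4, 2)
granules_b = list(granules_for_seller(marks, "b"))
granules_a = list(granules_for_seller(marks, "a"))
```

Only the second group of four rows can contain seller "b", so the other groups do not have to be read. The selection is conservative: a granule is kept whenever the marks cannot prove that it is irrelevant.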

When migrating binlog data from MySQL databases to ClickHouse for analysis, many users copy the primary key definitions from MySQL. As a result, the sort key index in ClickHouse plays almost no role, and query performance depends mainly on the powerful parallel data scanning capability and the efficient column-oriented computing engine of ClickHouse. This also reflects the absolute performance advantage of ClickHouse in OLAP scenarios: query performance is still good even without an effective index. In business systems, MySQL databases focus on transactional updates of a single record, so the primary key can be defined simply and clearly as an object identifier (OID). However, in OLAP scenarios, queries need to scan and analyze large amounts of data. ClickHouse uses the sort key index for "skip" scanning, so users should try to put fields that remain unchanged during the lifecycle of a business record into the sort key when creating a table. Generally, columns with a smaller distinct count are placed first.

In the business scenario described in the previous section, when querying the total order volume of all sellers at a certain time, the "skip" array index defined in PRIMARY KEY is not so effective. The sample query is listed below:

select count(*)

from order_info where

gmt_order_create > '2020-08-02 00:00:00'

and gmt_order_create < '2020-08-04 15:00:00';

There is a fatal problem with the primary key index in ClickHouse. When the dispersion (distinct count) of the prefix column is very large, the "skip" acceleration effect of filtering conditions on subsequent columns is weakened. For example, if two adjacent tuples in the "skip array" are ('a', 1) and ('a', 10086), the value range of the second column in the corresponding row interval is [1, 10086]. If the adjacent tuples are ('a', 1) and ('b', 10086), the value range of the second column becomes (-inf, +inf). In that case, the interval cannot be skipped based on filtering conditions on the second column.
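The reasoning about adjacent tuples can be expressed as a short Python sketch. The function names are hypothetical; the two tuple pairs are the ones used in the text.

```python
import math

def second_column_range(left_mark, right_mark):
    """Possible values of the second key column between two adjacent index marks."""
    if left_mark[0] == right_mark[0]:
        # Same prefix value: rows in between are ordered by the second column.
        return (left_mark[1], right_mark[1])
    # The prefix changes inside the interval, so nothing is known about the second column.
    return (-math.inf, math.inf)

def can_skip(left_mark, right_mark, lo, hi):
    """True if no row in the interval can satisfy lo <= second_column <= hi."""
    low, high = second_column_range(left_mark, right_mark)
    return high < lo or low > hi

same_prefix = second_column_range(("a", 1), ("a", 10086))
changed_prefix = second_column_range(("a", 1), ("b", 10086))
```

With a filter such as 20000 <= second_column <= 30000, the first interval can be skipped and the second cannot, which is why a high-cardinality prefix column weakens the index for later columns.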

If this is the case, PARTITION BY is required for optimization. Data in different partitions of ClickHouse is physically isolated, and the data lifecycle of each partition is managed independently. PARTITION BY defines the "ordered state" across multiple DataPart files (data partitions), while ORDER BY mentioned in the previous section defines the "ordered state" of records within a single DataPart file. Each DataPart file belongs to only one data partition and keeps the MinMax values of the PARTITION BY columns in memory. In the preceding query, the optimizer quickly prunes irrelevant DataPart files based on the minimum and maximum values of gmt_order_create in each DataPart. This pruning provides more efficient data filtering than the sort key index.

Pro Tip: If the business side needs to perform aggregate analysis by ranges of both the order creation time and the payment time, how should the partition key be designed? The two time columns are correlated in business terms, and the difference between them is bounded by business constraints. In this case, PARTITION BY can be designed like this:

(toYYYYMMDD(gmt_order_create), (gmt_order_pay - gmt_order_create)/3600/240)

Now, a MinMax pruning index is available on both the gmt_order_create and gmt_order_pay columns in each DataPart. When designing data partitions, pay attention to two issues:

1) PARTITION BY physically isolates DataParts, so the number of DataParts at the underlying layer becomes huge if the partitions are too fine-grained. This affects the performance of large-scale queries to a certain extent, so the granularity of PARTITION BY should be controlled.

2) PARTITION BY and ORDER BY are both technologies that store data in an ordered state. When defining them, it is better to use different columns. In particular, the first column of ORDER BY should not be used for PARTITION BY. Time columns are generally more suitable for PARTITION BY.
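The DataPart pruning described in this section can be sketched in Python as follows. The DataPart class, the day_part helper, and the daily parts are made up for illustration; only the comparison against the MinMax values reflects the mechanism in the text.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass
class DataPart:
    name: str
    min_create: datetime  # minimum gmt_order_create in the part
    max_create: datetime  # maximum gmt_order_create in the part

def day_part(day):
    """Hypothetical part holding one full day of August 2020."""
    return DataPart(
        f"202008{day:02d}",
        datetime(2020, 8, day, 0, 0, 0),
        datetime(2020, 8, day, 23, 59, 59),
    )

def prune_parts(parts, start, end):
    """Keep parts whose [min, max] range overlaps the open interval (start, end)."""
    return [p for p in parts if p.max_create > start and p.min_create < end]

parts = [day_part(d) for d in range(1, 6)]

# gmt_order_create > '2020-08-02 00:00:00' and gmt_order_create < '2020-08-04 15:00:00'
kept = prune_parts(parts, datetime(2020, 8, 2), datetime(2020, 8, 4, 15))
kept_names = [p.name for p in kept]
```

Only the in-memory MinMax values are consulted here, so the parts for August 1 and August 5 are excluded without reading any data file.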

Users can define custom indexes to accelerate query analysis in open-source ClickHouse. However, in practice, most users do not understand the principle of the "skip index" and wonder why a query is not accelerated, or is even slowed down, after the index is created. The "skip index" is not a real index. General indexes aggregate the data or row numbers by the index key. The "skip index" of ClickHouse does neither; it is only a means to accelerate block filtering. Take the index "INDEX oid_idx (oid) TYPE minmax GRANULARITY 32" as an example. It collects MinMax statistics for every 32 column-storage blocks of the oid column and stores the results in separate index files. Without the oid skip index, the following query needs to scan at least five days of data files to find the rows with the matching oid. With the skip index, the query scans the oid index files first and checks whether each MinMax range covers the target value. When the primary table is scanned later, irrelevant blocks are skipped. This is the Block Meta Scan technology commonly used in OLAP.

select *

from order_info where

gmt_order_create > '2020-08-02 00:00:00'

and gmt_order_create < '2020-08-07 00:00:00'

and oid = 726495;

When the MinMax ranges of the oid column-storage blocks are large, the skip index cannot accelerate queries and may even slow them down. In the sample business scenario in this article, the oid skip index is useful. As described in the previous section, data in the same DataPart is sorted by seller ID and order creation time, so the MinMax ranges of all oid column-storage blocks in the same DataPart overlap for the most part. However, the MergeTree of ClickHouse also has an implicit ordered state: multiple DataParts in the same partition are ordered by write time. The oid in a business system is an auto-increment sequence, so the oid of data written to ClickHouse also increases over time. As a result, the MinMax ranges of oid column-storage blocks in different DataParts are staggered for the most part.
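The difference between staggered and overlapping MinMax ranges can be seen in a small Python sketch. The block contents and a granularity of 2 (instead of 32) are assumptions that keep the example short.

```python
def build_minmax_index(blocks, granularity):
    """Store one (min, max) entry per `granularity` consecutive column blocks."""
    index = []
    for i in range(0, len(blocks), granularity):
        values = [v for block in blocks[i:i + granularity] for v in block]
        index.append((min(values), max(values)))
    return index

def groups_to_scan(index, target):
    """Block groups whose MinMax range covers the target value."""
    return [i for i, (lo, hi) in enumerate(index) if lo <= target <= hi]

# oid grows with write time: the ranges are staggered and one group can be skipped.
staggered = build_minmax_index([[1, 2], [3, 4], [5, 6], [7, 8]], 2)
staggered_hits = groups_to_scan(staggered, 6)

# oid is scattered inside every block: the ranges overlap and nothing can be skipped.
overlapping = build_minmax_index([[1, 8], [2, 7], [3, 6], [4, 5]], 2)
overlapping_hits = groups_to_scan(overlapping, 6)
```

In the overlapping case, the index files are read but no block group is excluded, which is the situation in which a skip index adds cost without saving any scanning.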

The acceleration that a skip index brings to queries is a constant factor, while the index scan time is proportional to the data volume. In addition to the MinMax type, skip indexes of the set type are provided and apply to scenarios where column values are localized in terms of write time. The bloom_filter, ngrambf_v1, and tokenbf_v1 types use Bloom filters to generate highly compressed signatures for complete strings or for the tokens obtained by tokenizing a string column. These signatures are used to exclude blocks, which accelerates queries in long-string scenarios.
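To make the signature idea concrete, here is a minimal token Bloom filter in Python. It is a sketch only: the class, the filter size, the SHA-256 based hashing, and the sample strings are assumptions, and the real index types use their own parameters and hash functions.

```python
import hashlib
import re

class TokenBloomFilter:
    def __init__(self, size_bits=1024, num_hashes=3):
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = 0  # bitmap stored in a Python int

    def _positions(self, token):
        # Derive num_hashes bit positions from seeded SHA-256 digests.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{token}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, token):
        for pos in self._positions(token):
            self.bits |= 1 << pos

    def might_contain(self, token):
        # False means "definitely absent"; True means "possibly present".
        return all((self.bits >> pos) & 1 for pos in self._positions(token))

def tokenize(text):
    """Split a string into alphanumeric tokens."""
    return [t for t in re.split(r"[^0-9A-Za-z]+", text) if t]

def build_block_filter(strings):
    """Build one compact signature for all strings of a column block."""
    block_filter = TokenBloomFilter()
    for s in strings:
        for token in tokenize(s):
            block_filter.add(token)
    return block_filter

block_filter = build_block_filter(["GET /api/order?id=42 timeout", "POST /api/pay ok"])
```

A block can be excluded only when might_contain returns False for the searched token. A True result may be a false positive, so the block still has to be read and checked.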

All the performance optimization technologies discussed in the preceding sections rely on the orderliness of data to accelerate the scanning of blocks that meet the specified conditions. This means the data to be queried should be localized. In normal business scenarios, as long as the data that meets the query conditions is localized, the three methods above can accelerate queries. When designing a business system, we should deliberately create more locality. For example, if the oid in examples is in the form of "toYYYYMMDDhh(gmt_order_create) + seller ID + increasing sequence", then the data for internal filtering of DataPart is localized. Therefore, query processing will be faster. Now, think about the following issue.

In OLAP scenarios, the most difficult problem is that the data that meets the query conditions is not "localized" at all. The data rows may be a few, but they are scattered. Each column-storage block has one or two records that meet the conditions. If this is the case, due to the column storage mode, the system reads the entire block if one record in the block meets the condition. As a result, an extremely large amount of data needs to be read from the disk. However, this is an extreme case. In many cases, some column-storage blocks contain no matching records, and others contain just a few matching records. Records are randomly scattered without order. In this kind of scenario, a Lucene-like inverted index can be used for query conditions to locate the row number of target records. However, indexes incur additional storage and construction costs. Thus, two-phase scanning can be used.
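The role of an inverted index in this scattered case can be sketched in Python. The column contents, the block size of 10 rows, and the function names are assumptions for illustration; a Lucene-like index is far more elaborate.

```python
from collections import defaultdict

def build_inverted_index(column_values):
    """Map each distinct value to the ascending list of row numbers that hold it."""
    postings = defaultdict(list)
    for row_number, value in enumerate(column_values):
        postings[value].append(row_number)
    return postings

def blocks_to_read(row_numbers, block_rows):
    """Column blocks that contain at least one of the given rows."""
    return sorted({r // block_rows for r in row_numbers})

# Hypothetical buyer column with 40 rows; "bob" appears in two scattered rows.
buyers = ["other"] * 40
buyers[3] = "bob"
buyers[27] = "bob"

index = build_inverted_index(buyers)
bob_rows = index["bob"]
bob_blocks = blocks_to_read(bob_rows, 10)
```

With the row numbers known in advance, only the blocks that actually contain matching rows are read. The price is the extra storage and construction cost of the index.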

Let's take the following query as an example. Generally, the storage-layer scan reads the data of all columns for the last five days. Then, the computing engine filters the target rows based on the order_status column. In a two-phase scanning framework, the prewhere expression is pushed down to the storage-layer scanning process for execution. The order_status column-storage blocks are scanned first to check whether any records meet the condition. Then, the other columns are read only for blocks that contain matching records. If no records in a block meet the condition, the block data of the other columns is skipped.

--General case

select *

from order_info

where order_status = 2 -- Order cancelled

and gmt_order_create > '2020-08-02 00:00:00'

and gmt_order_create < '2020-08-07 00:00:00';

--Two-phase scanning

select *

from order_info

prewhere order_status = 2 -- Order cancelled

where gmt_order_create > '2020-08-02 00:00:00'

and gmt_order_create < '2020-08-07 00:00:00';

Two-phase scanning scans the columns that have a high filtering rate first and then scans the blocks of other columns as needed. This acceleration method can reduce I/O significantly in OLAP scenarios of wide table analysis with hundreds of columns. However, its limit is also clear: at least all data of a single column needs to be scanned. When using the prewhere expression for acceleration, choose the columns with the highest filtering rate and the least amount of data based on the data distribution. In the extreme case mentioned above, try to use the materialized views of ClickHouse. Materialized views support pre-aggregation and allow data to be stored in order by a different sort key. The Z-order technology can also be used.
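The I/O saving of two-phase scanning can be counted with a small Python simulation. The function and the three tiny column blocks are hypothetical; the sketch only models which blocks get read.

```python
def two_phase_scan(filter_blocks, other_columns, predicate):
    """Return (matching rows, number of column blocks read)."""
    blocks_read = 0
    rows = []
    for block_no, filter_block in enumerate(filter_blocks):
        blocks_read += 1  # phase 1: the filter column block is always read
        hits = [i for i, value in enumerate(filter_block) if predicate(value)]
        if not hits:
            continue  # phase 2 skipped: no row in this block matches
        other_blocks = [column[block_no] for column in other_columns]
        blocks_read += len(other_blocks)  # phase 2: read the remaining columns
        for i in hits:
            rows.append((filter_block[i], *(block[i] for block in other_blocks)))
    return rows, blocks_read

# Three blocks of two rows each for the columns order_status, oid, and payment.
order_status = [[1, 1], [2, 1], [1, 1]]
oid = [[10, 11], [12, 13], [14, 15]]
payment = [[5, 6], [7, 8], [9, 10]]

rows, blocks_read = two_phase_scan(order_status, [oid, payment], lambda s: s == 2)
full_scan_blocks = len(order_status) * 3  # a plain scan reads every block of every column
```

In this toy case, five blocks are read instead of nine. The filter column is still scanned completely, which is the limit described above.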

The previous four sections describe four different query acceleration technologies in ClickHouse. If the data that meets the query conditions is localized, the first three low-cost methods are recommended. If the data is scattered, the prewhere expression can reduce the I/O of multi-column analysis. These four methods can also be combined. This article introduces them separately to convey the main ideas.

Let's continue with the issue from the previous section. When the data is scattered and only a few target records exist, the I/O problem remains even if the prewhere expression is used for two-phase scanning. A simple example is querying the purchase records of a specific buyer ID (buyer_nick). Buyer IDs are scattered throughout the table, and a full scan of the buyer ID column is too expensive. Alibaba Cloud ClickHouse provides the secondary index feature to solve this problem. The question is, what standard decides whether the number of results is small? In column-storage systems, a column-storage block contains nearly 10,000 rows. If the number of records that meet the query condition is an order of magnitude smaller than the number of column-storage blocks (that is, the filtering rate exceeds 100,000:1), secondary indexes can bring their performance advantages into play.
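The rule of thumb above can be turned into a quick check. The function below is a hypothetical helper, not part of any ClickHouse API, and it assumes blocks of 10,000 rows as in the text.

```python
def secondary_index_is_promising(total_rows, matching_rows, block_rows=10_000):
    """True if matches are at least an order of magnitude fewer than column blocks."""
    num_blocks = total_rows / block_rows
    return matching_rows * 10 <= num_blocks

# Hypothetical table with one billion rows, that is, 100,000 column blocks.
few_matches = secondary_index_is_promising(1_000_000_000, 5_000)       # rate 200,000:1
many_matches = secondary_index_is_promising(1_000_000_000, 1_000_000)  # rate 1,000:1
```

With 5,000 matching rows, most blocks contain no match at all, so locating rows through an index avoids most of the scan. With one million matching rows, nearly every block contains matches and the scan-based methods are the better fit.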

The secondary index feature of ClickHouse is designed to compete with the multi-dimensional search capability of Elasticsearch. It supports conditional INTERSECT, EXCEPT, and UNION searches across multiple index columns. Compared with the multi-dimensional search capability of Elasticsearch, the secondary index feature is easy to use. The features of secondary indexes are summarized below:

General Index

The following statement provides an example of how to define secondary indexes when a table is created.

CREATE TABLE index_test

(

id UInt64,

d DateTime,

x UInt64,

y UInt64,

tag String,

KEY tag_idx tag TYPE range, --Single-column index

KEY d_idx toStartOfHour(d) TYPE range, --Index on expression

KEY combo_idx (toStartOfHour(d), x, y) TYPE range --Multi-column composite index

) ENGINE = MergeTree() ORDER BY id;

The following data definition language (DDL) statements provide examples of how to modify secondary indexes.

--Delete index definition

Alter table index_test DROP KEY tag_idx;

--Add index definition

Alter table index_test ADD KEY tag_idx tag TYPE range;

--Clear index files in the data partition

Alter table index_test CLEAR KEY tag_idx tag in partition partition_expr;

--Reconstruct index files in the data partition

Alter table index_test MATERIALIZE KEY tag_idx tag in partition partition_expr;

Multi-column indexes are intended to reduce index merges in a specific query pattern. Users can create multi-column indexes for workloads that require particularly high queries per second (QPS) to maximize the query performance. Indexes on expressions facilitate the adjustment of the query granularity and work in the following typical scenarios:

1) Queries filter on the time column only at hourly granularity. In this case, users can create an index on the toStartOfHour(time) expression, which is built faster than an index on the time column itself. In addition, filtering conditions on the time column are automatically transformed and pushed down to the index.

2) The ID column consists of universally unique identifiers (UUIDs). A UUID is a string sequence that guarantees the uniqueness of an object. If an index is created directly on the ID column, the index file can be extremely large. In this case, users can use a prefix function to truncate the UUID before indexing. For example, prefix8(id) keeps the first 8 bytes. Other prefix functions, such as prefix4 and prefix16, work the same way, and prefixUTF4, prefixUTF8, and prefixUTF16 are used to truncate UTF-encoded strings.

After an index is created on an expression, query conditions on the source column can also be pushed down to the index without rewriting queries. Similarly, after an index is created on the source column, the optimizer can still push down the index when a filtering condition applies an expression to that column.
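How a condition on the time column might be transformed into a lookup on an hourly expression index can be sketched in Python. This is an assumption about the general idea, not the optimizer's actual implementation, and the helper names are hypothetical.

```python
from datetime import datetime, timedelta

def to_start_of_hour(ts):
    """Python counterpart of toStartOfHour for a datetime value."""
    return ts.replace(minute=0, second=0, microsecond=0)

def hour_keys_for_range(start, end):
    """Index keys of toStartOfHour(d) that may hold rows with start <= d < end."""
    keys = []
    hour = to_start_of_hour(start)
    while hour < end:
        keys.append(hour)
        hour += timedelta(hours=1)
    return keys

# Condition on the source column: d >= '2020-08-02 10:30:00' and d < '2020-08-02 13:00:00'
keys = hour_keys_for_range(datetime(2020, 8, 2, 10, 30), datetime(2020, 8, 2, 13, 0))
```

The index returns every row of the selected hours, including rows between 10:00 and 10:30, so the original condition on d still has to be re-checked on the rows that the index locates.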

IN set clause pushdown is a typical scenario of associative search. In a common scenario, user attributes are stored in a wide table, while user behaviors are stored in another independent table. To search for specific users, the user IDs that meet specific conditions are first filtered and aggregated from the behavior table. These user IDs are then used to retrieve detailed records from the attribute table. In such IN subquery scenarios, ClickHouse can also push down indexes automatically to accelerate queries.
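The two steps of this associative search can be mimicked in Python. The tables, the index layout (user ID to row numbers), and the function name are invented for illustration only.

```python
def lookup_in_set(index, user_ids):
    """Union the row numbers found in the index for every ID of the IN set."""
    rows = set()
    for uid in user_ids:
        rows.update(index.get(uid, ()))
    return sorted(rows)

# Step 1: the subquery on the behavior table produces the set of user IDs.
behaviors = [(1, "buy"), (2, "view"), (3, "buy"), (1, "buy")]
buyers = {uid for uid, action in behaviors if action == "buy"}

# Step 2: a hypothetical secondary index on the attribute table maps user ID to row numbers.
attribute_index = {1: [0], 2: [5], 3: [9]}
attribute_rows = lookup_in_set(attribute_index, buyers)
```

Each ID of the IN set becomes one point lookup in the index, and the resulting row numbers are merged, so the wide attribute table does not have to be scanned.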

The multi-value index mainly accelerates queries with has(), hasAll(), and hasAny() conditions on array columns. Array columns are commonly used in tag analysis: each record carries tags that are stored in an array column. In the past, the tag column could only be filtered by brute-force scanning. The secondary index feature of ClickHouse provides multi-value indexes to solve this problem. The index definition example is listed below:

CREATE TABLE index_test

(

id UInt64,

tags Array(String),

KEY tag_idx tags TYPE array --Multi-value index

) ENGINE = MergeTree() ORDER BY id;

--Contain one single tag

select * from index_test where has(tags, 'aaa');

--Contain all tags

select * from index_test where hasAll(tags, ['aaa', 'bbb']);

--Contain any tag

select * from index_test where hasAny(tags, ['aaa', 'ccc']);

The dictionary index accelerates queries in scenarios where two array columns are used to simulate a map. The key and value columns are two separate array columns whose elements are mapped to each other by position. The secondary index feature of ClickHouse provides query functions and an index type for this kind of scenario. The index definition example is listed below:

CREATE TABLE index_test

(

id UInt64,

keys Array(String),

vals Array(UInt32),

KEY kv_idx (keys, vals) TYPE map -- Dictionary index

) ENGINE = MergeTree() ORDER BY id;

-- Match when the value mapped to key 'aaa' is equal to 32, that is, ['aaa'] = 32

select * from index_test where hasPairEQ(keys, vals, ('aaa', 32));

-- Match when the value mapped to key 'aaa' is greater than 32, that is, ['aaa'] > 32

select * from index_test where hasPairGT(keys, vals, ('aaa', 32));

-- Match when the value mapped to key 'aaa' is no less than 32, that is, ['aaa'] >= 32

select * from index_test where hasPairGTE(keys, vals, ('aaa', 32));

-- Match when the value mapped to key 'aaa' is less than 32, that is, ['aaa'] < 32

select * from index_test where hasPairLT(keys, vals, ('aaa', 32));

-- Match when the value mapped to key 'aaa' is no greater than 32, that is, ['aaa'] <= 32

select * from index_test where hasPairLTE(keys, vals, ('aaa', 32));

The author has tested the construction performance and the index compression ratio of ClickHouse secondary indexes in a variety of scenarios, mainly in comparison with the inverted index and the BKD index of Lucene 8.7.0. Elasticsearch uses Lucene indexes as its underlying indexes. The performance data here is for reference only and does not represent the end-to-end performance of Elasticsearch or of the ClickHouse secondary index feature. Secondary indexes of ClickHouse are constructed during DataPart merge operations. In some situations, ClickHouse MergeTree suffers from write amplification: one record may be merged multiple times. At the same time, the merge operation is completely asynchronous.

trace_id Scenario

Method for Mocking the Data:

substringUTF8(cast (generateUUIDv4() as String), 1, 16)

Data Size: 100 million rows (1.5 GB for ClickHouse data files)

Construction Time: 65.32s for ClickHouse vs. 487.255s for Lucene

Index File Size: 1.4 GB in ClickHouse vs. 1.3 GB in Lucene

Method for Mocking the Data:

cast((10000000 + rand(number) % 1000) as String)

Data Size: 100 million rows (316 MB for ClickHouse data files)

Construction Time: 37.19s for ClickHouse vs. 46.279s for Lucene

Index File Size: 160 MB in ClickHouse vs. 163 MB in Lucene

Method for Mocking the Data:

toFloat64(rand(100000000))

Data Size: 100 million rows (564 MB for ClickHouse data files)

Construction Time: 32.20s for ClickHouse vs. 86.456s for Lucene BKD

Index File Size: 801 MB in ClickHouse vs. 755 MB in Lucene BKD

Method for Mocking the Data:

rand(1000)

Data Size: 100 million rows (275 MB for ClickHouse data files)

Construction Time: 12.81s for ClickHouse vs. 78.0s for Lucene BKD

Index File Size: 160 MB in ClickHouse vs. 184 MB in Lucene BKD

The secondary index feature is designed to improve the performance of ClickHouse in search scenarios. In analysis scenarios, ClickHouse has already provided abundant technologies. The author hopes users will have a deeper understanding of OLAP query optimization after reading this article. You are welcome to use secondary indexes to solve multi-dimensional search problems and give feedback on your experience.
