Released by ELK Geek

MongoDB and Elasticsearch are two popular databases and have been subject to many debates between supporters of the two technologies. However, this article only represents the author's individual experience rather than opinions of any group. This article covers the following two topics:

MongoDB is positioned to compete with relational databases. However, in almost all projects, the data of core business systems is still stored in traditional relational databases rather than MongoDB.

As a player in the logistics and express delivery industry, the company has an extensive and complex business system. Massive business data is produced every day by a large number of users. The business data undergoes a variety of status changes during its lifecycle. To facilitate log tracking and analysis, the system operation logging project was established, and based on the original average daily data volume, MongoDB was selected to store operation log data.

The operation logging system records the following two types of data:

1) Primary change data that describes who performs operations, what operations are performed, which system modules are operated on, when the operations are performed, what data numbers are involved, and what operation tracking numbers are assigned.

{
  "dataId": 1,
  "traceId": "abc",
  "moduleCode": "crm_01",
  "operateTime": "2019-11-11 12:12:12",
  "operationId": 100,
  "operationName": "Zhang San",
  "departmentId": 1000,
  "departmentName": "Account Department",
  "operationContent": "Visit clients"
}

2) Secondary change data that describes the actual values before and after a change. Changes to multiple fields in one row of data result in multiple data entries. Therefore, a large number of such data entries are recorded.

[

{

"dataId": 1,

"traceId": "abc",

"moduleCode": "crm_01",

"operateTime": "2019-11-11 12:12:12",

"operationId": 100,

"operationName": "Zhang San",

"departmentId": 1000,

"departmentName": "Account Department",

"operationContent": "Visit clients",

"beforeValue": "20",

"afterValue": "30",

"columnName": "customerType"

},

{

"dataId": 1,

"traceId": "abc",

"moduleCode": "crm_01",

"operateTime": "2019-11-11 12:12:12",

"operationId": 100,

"operationName": "Zhang San",

"departmentId": 1000,

"departmentName": "Account Department",

"operationContent": "Visit clients",

"beforeValue": "2019-11-02",

"afterValue": "2019-11-10",

"columnName": "lastVisitDate"

}

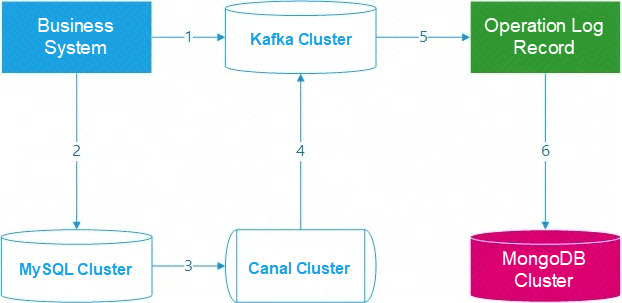

]The project architecture is as follows:

Figure: Workflow of the operation logging system

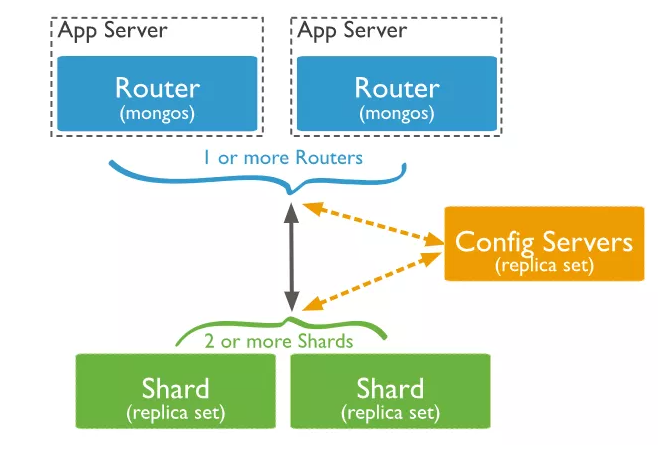

Cluster architecture:

1) Each server is configured with 8 cores, 32 GB of memory, and a 500 GB solid-state drive (SSD).

2) Three router servers are deployed.

3) Three configuration servers are deployed.

4) Nine shard servers are deployed.

5) Three shards are designed for primary operation records.

6) Three shards are designed for secondary operation records.

Fans of MongoDB may suspect that we were not using MongoDB properly, our O&M capabilities were insufficient, or we were under the influence of an Elasticsearch expert. In fact, we shifted from MongoDB to Elasticsearch based on actual scenario requirements rather than any technical bias. The reasons are described in the following sections.

1) MongoDB uses B-Tree as its index structure. Compound indexes follow the leftmost-prefix rule: an index is effective only when the query filters on a leading prefix of the indexed fields, in the order in which the index defines them. This is beneficial for some applications, but can also be fatal in the complex business scenarios we are faced with today.

2) Queries for operation log records in the business system involve many filter criteria that can be arbitrarily combined. Neither MongoDB nor a relational database handles this well. To support it, you would have to create a large number of compound B-Tree indexes, one for each combination, which is not practical.

3) In addition, primary and secondary records contain a lot of character-type data. Therefore, both exact-match queries and full-text search are required to query this kind of data. In these respects, MongoDB provides inadequate functions and poor performance, leading to frequent timeouts in business system queries. By contrast, Elasticsearch is a very suitable solution.
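To make the "arbitrarily combined filter criteria" concrete, here is a minimal Python sketch that assembles an Elasticsearch bool query from whichever criteria the caller supplies. The function name `build_log_query` is hypothetical, and the sketch assumes that moduleCode is mapped as a keyword field, operationContent as a text field, and operateTime as a date field whose format matches the strings passed in. None of these mappings are stated in the article.

```python
from typing import Any, Dict, List, Optional


def build_log_query(
    module_code: Optional[str] = None,
    operation_id: Optional[int] = None,
    department_id: Optional[int] = None,
    time_from: Optional[str] = None,
    time_to: Optional[str] = None,
    content_text: Optional[str] = None,
) -> Dict[str, Any]:
    """Build a search body from any subset of criteria (hypothetical helper)."""
    filters: List[Dict[str, Any]] = []  # exact-match clauses, not scored
    must: List[Dict[str, Any]] = []     # full-text clauses, scored

    if module_code is not None:
        filters.append({"term": {"moduleCode": module_code}})
    if operation_id is not None:
        filters.append({"term": {"operationId": operation_id}})
    if department_id is not None:
        filters.append({"term": {"departmentId": department_id}})

    if time_from is not None or time_to is not None:
        bounds: Dict[str, str] = {}
        if time_from is not None:
            bounds["gte"] = time_from
        if time_to is not None:
            bounds["lte"] = time_to
        filters.append({"range": {"operateTime": bounds}})

    if content_text is not None:
        # Assumes operationContent is an analyzed text field.
        must.append({"match": {"operationContent": content_text}})

    bool_clause: Dict[str, Any] = {}
    if filters:
        bool_clause["filter"] = filters
    if must:
        bool_clause["must"] = must

    if not bool_clause:
        return {"query": {"match_all": {}}}
    return {"query": {"bool": bool_clause}}
```

Each criterion becomes an independent clause, so adding or dropping a filter does not require a new index definition. With compound B-Tree indexes, the usable index depends on which leading fields the query happens to include.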

1) In terms of sharding and replica implementation, you have to bind collection data in MongoDB to specific instances during design. This means you have to finalize the allocation of nodes for shards and replica sets during cluster configuration. This is essentially the same as sharding traditional relational databases. In fact, this mode is widely used by the clusters of many data products, such as Redis Cluster and ClickHouse. By contrast, Elasticsearch clusters are not bound to shards or replica sets and can be adjusted as needed. In addition, Elasticsearch makes it easy to adopt different performance configurations for different nodes.

2) Operation logs accumulate rapidly, with over 10 million new entries every day. As a result, you have to scale out the MongoDB cluster frequently, and this process is much more complicated than it is in Elasticsearch.

3) Each MongoDB collection contains more than 1 billion data records. At this scale, even a simple query in MongoDB performs worse than a query served by inverted indexes in Elasticsearch.

4) The company has different levels of experience with the Elasticsearch and MongoDB technology stacks. Elasticsearch is widely used in many projects including core projects, so the company is accustomed to the technologies and O&M of Elasticsearch. By contrast, MongoDB has few uses outside core business scenarios, yet no one wants to risk using it in core projects. This leaves MongoDB in a very awkward position.

MongoDB and Elasticsearch are both document-oriented databases. MongoDB's BSON (Binary JSON) format is similar to JavaScript Object Notation (JSON), and the _id field in MongoDB (an ObjectId by default) plays the same role as the _id field in Elasticsearch. Therefore, the data model essentially remains unchanged when primary and secondary data is migrated to the Elasticsearch platform.
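As a rough illustration of how little the model changes, the following Python sketch splits a MongoDB-style document into an Elasticsearch document ID and a document body. The helper name `to_es_document` is hypothetical. The sketch assumes the MongoDB identifier is reused as the Elasticsearch _id, which the article does not confirm. Elasticsearch treats _id as document metadata rather than a body field, so the sketch removes it from the body.

```python
from typing import Any, Dict, Tuple


def to_es_document(mongo_doc: Dict[str, Any]) -> Tuple[str, Dict[str, Any]]:
    """Return (es_id, body) for a MongoDB-style document (hypothetical helper)."""
    body = dict(mongo_doc)          # shallow copy, so the input is not mutated
    es_id = str(body.pop("_id"))    # an ObjectId converts to its hex string via str()
    return es_id, body
```

All other fields, such as dataId, traceId, and moduleCode, pass through unchanged.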

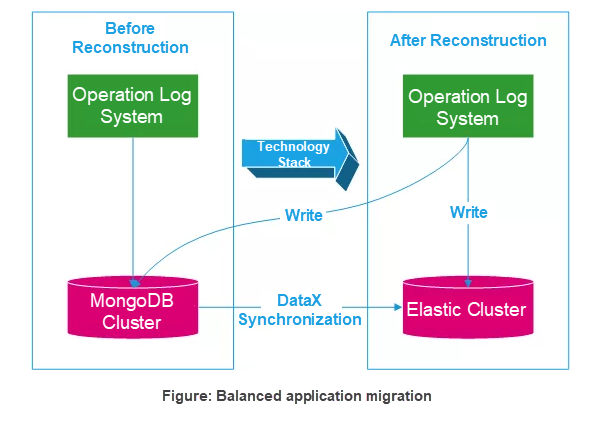

Migration between heterogeneous data systems involves two parts:

1) Migrate the application system at the upper layer. This involves shifting from MongoDB-oriented syntax rules to Elasticsearch-oriented ones.

2) Migrate data at the lower layer from MongoDB to Elasticsearch.

The original MongoDB cluster consisted of 15 servers, of which nine were data servers. How many servers does the Elasticsearch cluster need after migration? Use the following simple calculation. Assume that a MongoDB collection in the production environment contains 1 billion data entries. First, synchronize 1 million data entries from MongoDB to Elasticsearch in the test environment and measure the disk usage. If the 1 million data entries occupy 1 GB of disk space, the 1 billion entries require about 1 TB of disk space in the production environment. Then, add some redundancy based on expected business growth. According to this preliminary evaluation, the Elasticsearch cluster needs three servers, each configured with 8 cores, 16 GB of memory, and a 2 TB hard disk drive (HDD). Consequently, the number of servers is reduced from 15 to 3, significantly reducing hardware costs.
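The sizing estimate is a linear extrapolation from a measured sample. The Python sketch below spells out the arithmetic. The function name `estimate_disk_gb`, the replica count, and the headroom factor are illustrative assumptions, not values from the article.

```python
def estimate_disk_gb(
    sample_docs: int,
    sample_gb: float,
    total_docs: int,
    replicas: int = 1,      # assumed number of replica copies per primary shard
    headroom: float = 1.5,  # assumed growth/safety factor
) -> float:
    """Scale the disk usage of a test sample up to the production data volume."""
    if sample_docs <= 0:
        raise ValueError("sample_docs must be positive")
    primary_gb = sample_gb * (total_docs / sample_docs)
    # Every replica stores a full copy of the primary data.
    return primary_gb * (1 + replicas) * headroom
```

For instance, if a sample of 1 million entries measured 1 GB, then `estimate_disk_gb(1_000_000, 1.0, 1_000_000_000, replicas=0, headroom=1.0)` gives 1000.0 GB of primary data for 1 billion entries. With the assumed defaults of one replica and 1.5x headroom it gives 3000.0 GB. A real estimate should also account for mappings and compression, which this sketch ignores.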

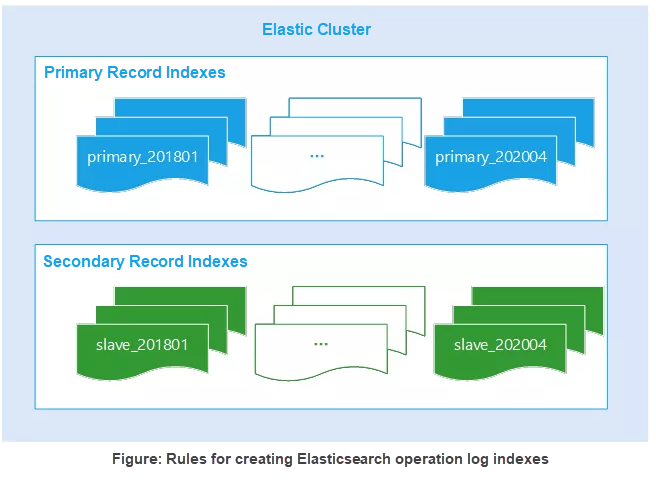

System operation logs are time-series data that requires no modification once fully written. Operation log records are queried most frequently in the month when they are generated and are seldom queried afterward. Based on this evaluation, indexes for core data are created on a monthly basis. You must specify an operation time period when you make a service query so that the backend can determine which indexes to query. Elasticsearch search APIs accept multiple indexes in one request, so a query that spans several months can be served by a single search. For non-core data, yearly indexes are sufficient.
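A minimal Python sketch of how a backend might turn the requested time period into a list of monthly index names. The function name `monthly_indices` and the naming pattern `<prefix>-YYYY.MM` are assumptions for illustration. The article does not state the actual index names.

```python
from datetime import date
from typing import List


def monthly_indices(prefix: str, start: date, end: date) -> List[str]:
    """List the monthly index names that cover [start, end], inclusive."""
    if start > end:
        raise ValueError("start must not be after end")
    names: List[str] = []
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        names.append(f"{prefix}-{year:04d}.{month:02d}")
        month += 1
        if month > 12:  # roll over into January of the next year
            year, month = year + 1, 1
    return names
```

Joining the result with commas, for example `",".join(monthly_indices("oplog-master", date(2019, 11, 20), date(2020, 1, 5)))`, yields a multi-index target that can be placed in the path of a search request. The prefix `oplog-master` is likewise hypothetical.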

Elasticsearch is not a relational database and does not have a transaction mechanism. All the data of the operation log system is sourced from the Kafka cluster and is consumed in sequence. Therefore, pay special attention to the following two scenarios:

In Elasticsearch, index data is updated according to a near-real-time refresh mechanism. Therefore, data cannot be queried through search APIs immediately after it is submitted. In this case, how can we update primary record data to secondary records? In addition, the same data ID or trace ID may be used in multiple primary records due to a lack of standardization across business departments.

Primary data is correlated to secondary data by the dataId and traceId fields. Therefore, a data update based on the update_by_query command will be invalid and incorrect if primary data and secondary data arrive at the operation log system at the same time. In addition, primary data and secondary data may be correlated to each other on a many-to-many basis, and therefore the dataId and traceId fields are not the unique identifiers of a record.

In fact, Elasticsearch is also a NoSQL database that supports key-value lookups by document ID. Therefore, you can create an Elasticsearch index to serve as an intermediate cache that holds primary data or secondary data, whichever arrives first. The _id of each document in this index is composed of the dataId and traceId fields. This allows you to find the ID of the primary or secondary data record by using the intermediate ID. The primary index data model is structured as follows, where the detailId field is an array of the _id values of the corresponding records in the secondary index.

{
  "dataId": 1,
  "traceId": "abc",
  "moduleCode": "crm_01",
  "operationId": 100,
  "operationName": "Zhang San",
  "departmentId": 1000,
  "departmentName": "Account Department",
  "operationContent": "Visit clients",
  "detailId": [1, 2, 3, 4, 5, 6]
}

As mentioned above, primary records and secondary records are both stored on a Kafka partition. This allows you to call the following core Elasticsearch APIs to process data in batches:

# Query records in secondary indexes in bulk

_mget

# Insert in bulk

bulk

# Delete intermediate temporary indexes in bulk

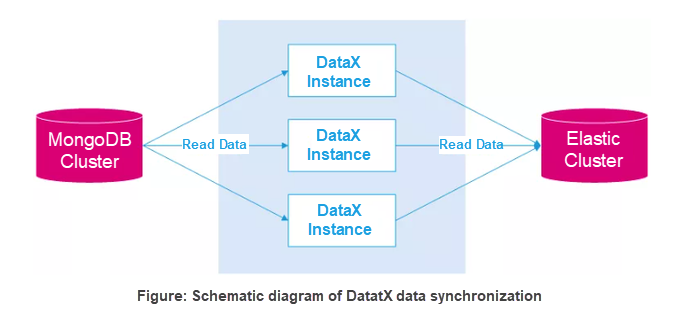

_delete_by_query Here, we use DataX as the data synchronization tool based on the following factors:

Therefore, the migration must be processed at an appropriate speed. An excessively fast migration leads to performance problems for the MongoDB cluster, while an excessively slow migration prolongs the project and increases O&M costs and complexity. If this is not an issue, you can select Hadoop as an intermediate platform for migration.
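The speed trade-off can be illustrated with a simple throttle that pauses after each batch so that the copy never exceeds a target rate. This Python sketch is not DataX code or configuration. The names `pause_for_rate` and `copy_throttled`, and the `write_batch` callback, are hypothetical stand-ins for whatever reads from MongoDB and writes to Elasticsearch.

```python
import time
from typing import Any, Callable, Iterable, List


def pause_for_rate(batch_size: int, elapsed_s: float, max_docs_per_s: float) -> float:
    """Seconds to wait so that this batch stays within max_docs_per_s."""
    if max_docs_per_s <= 0:
        raise ValueError("max_docs_per_s must be positive")
    min_duration = batch_size / max_docs_per_s
    return max(0.0, min_duration - elapsed_s)


def copy_throttled(
    batches: Iterable[List[Any]],
    write_batch: Callable[[List[Any]], None],
    max_docs_per_s: float,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Write batches one by one, pausing to respect the rate limit."""
    total = 0
    for batch in batches:
        started = time.monotonic()
        write_batch(batch)
        elapsed = time.monotonic() - started
        sleep(pause_for_rate(len(batch), elapsed, max_docs_per_s))
        total += len(batch)
    return total
```

Raising `max_docs_per_s` shortens the migration but puts more read load on the source cluster. Lowering it does the opposite, which is the balance the paragraph above describes.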

Temporarily apply the following index settings during synchronization, and then revert them once the data is synchronized:

"index.number_of_replicas": 0,
"index.refresh_interval": "30s",
"index.translog.flush_threshold_size": "1024M",
"index.translog.durability": "async",
"index.translog.sync_interval": "5s"

The operation log project is built with Spring Boot, with the following custom configuration items added:

# Whether the application writes to MongoDB
writeflag.mongodb: true

# Whether the application writes to Elasticsearch
writeflag.elasticsearch: true

Project-based modifications:
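One plausible reading of the two write flags is a dual-write switch: during the transition the application writes to both stores, and either side can be turned off through configuration. The article does not show the application code, so the Python sketch below is an assumption about how such flags could be consumed. It is written in Python for illustration rather than as Spring Boot code, and `make_dual_writer` and the writer callbacks are hypothetical.

```python
from typing import Any, Callable, Dict, List

Writer = Callable[[Dict[str, Any]], None]


def make_dual_writer(
    flags: Dict[str, bool],
    mongo_writer: Writer,
    es_writer: Writer,
) -> Writer:
    """Return a writer that forwards each record to every enabled store."""
    targets: List[Writer] = []
    if flags.get("writeflag.mongodb", False):
        targets.append(mongo_writer)
    if flags.get("writeflag.elasticsearch", False):
        targets.append(es_writer)

    def write(record: Dict[str, Any]) -> None:
        # Forward the same record to each enabled target, in order.
        for target in targets:
            target(record)

    return write
```

With both flags set to true, every record reaches both stores, which allows the Elasticsearch results to be verified against MongoDB before the MongoDB flag is switched off.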

By replacing MongoDB with Elasticsearch as the storage layer, we can use three Elasticsearch servers to do the work of 15 MongoDB servers, significantly reducing corporate costs each month. In addition, query performance improved by more than 10 times, and the system provides better support for a variety of queries. This is a great help to the business department, O&M team, and company leaders.

The whole project took several months, and many colleagues participated in its design, R&D, data migration, testing, data verification, and stress testing. This technical solution was not designed all at once. Instead, it is the result of trial and error. Elasticsearch has many excellent technical features. Only flexible use can maximize its power.

Li Meng is an Elastic Stack user and a certified Elasticsearch engineer. Since his first explorations into Elasticsearch in 2012, he has gained in-depth experience in the development, architecture, and operation and maintenance (O&M) of the Elastic Stack and has carried out a variety of large and mid-sized projects. He provides enterprises with Elastic Stack consulting, training, and tuning services. He has years of practical experience and is an expert in various technical fields, such as big data, machine learning, and system architecture.

Declaration: This article is reproduced with authorization from Li Meng, the original author. The author reserves the right to hold users legally liable in the case of unauthorized use.
