Over the past two years, Internet of Vehicles (IoV) technology has been widely publicized and actively promoted by governments, research institutes, and major Internet companies. IoV devices are installed in one of two ways: pre-assembly or post-assembly. In the pre-assembly model, the vehicle is equipped with an IoV device before it leaves the factory, a process dominated by the vehicle manufacturer. It may also involve collaboration between a vehicle manufacturer and an IoV solution provider, such as the collaboration between SAIC and Alibaba. In the post-assembly model, the IoV device is typically connected to the OBD port of the vehicle. The IoV solution uses an intelligent terminal (that is, an IoV device) to collect raw data from the CAN bus through the OBD port, diagnose and analyze the data, record driving information, parse the data (various sensor values from the electronic control system), and output it through the serial port for users to access, analyze, and use. The vehicle data collected by the IoV device is presented in the IoV mobile app.

First of all, let's roughly summarize the characteristics of the IoV industry:

Startup companies typically choose in-house IDCs from the very start, when they have a small number of users and only a few servers. As their products mature, the number of users surges to the million level in less than two years, and the IDC grows to hundreds of physical machines and thousands of virtual machines. The problems grow as well, and researching and planning the next-generation application architecture and infrastructure becomes an urgent task. The new application architecture is expected to handle surging users and explosive traffic while ensuring a pleasant user experience; the infrastructure must be highly reliable, stable, and secure while keeping costs low.

It is difficult for traditional in-house IDC solutions to achieve this goal, except at a very high cost. In contrast, cloud computing can address these problems from all aspects, so we decided to migrate to the cloud. However, there are many cloud computing vendors, both Chinese and foreign, and choosing the most suitable one can be a challenging process. After our investigation and analysis, we felt that Alibaba Cloud was the best choice for our business needs.

After deciding on Alibaba Cloud, the next step is to consider how to migrate to the cloud. This article series aims to share some of the details of the migration process.

Before migrating to the cloud, we must fully understand our business and application architecture. We must then understand the features of the cloud products to determine which products can be used directly and what adjustments our applications or architecture require. Let's analyze the smart IoV platform architecture.

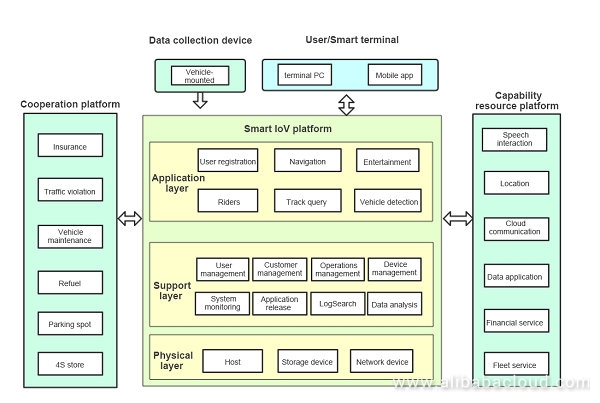

The figure above shows the company's business architecture. It consists of three major business platforms, the core of which is the IoV platform, followed by the capability resource platform and the third-party cooperation platform.

IoV platform: consists of the application layer, support layer, and physical layer. The application layer implements user registration, user logon, navigation, vehicle friends, vehicle detection, track query, and other entertainment functions. These are the core functions of the app, followed by support layer functions, such as operations management systems, user management systems, vehicle management systems, and other auxiliary O&M systems and tools.

Capability resource platform: provides resources and capabilities for customers and partners as an open platform, for example, fleet services, data applications, and location services.

Third-party cooperation platform: provides insurance, violation inquiry, parking space search, 4S shop services, and other functions by calling third-party platform interfaces.

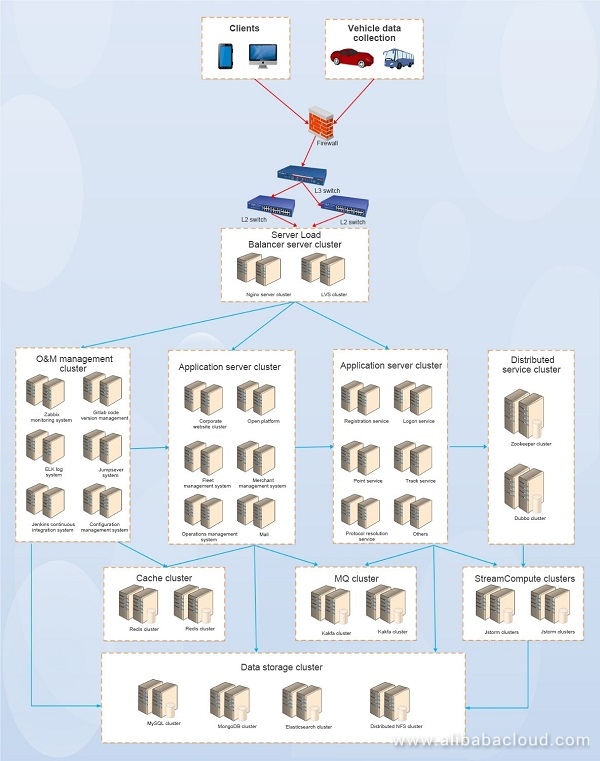

The figure above shows the application architecture, consisting of the client access layer, Server Load Balancer cluster, application server cluster, cache cluster, message queue (MQ) cluster, distributed service cluster, data storage cluster, and O&M control cluster.

A typical IoV system is data intensive, and its success depends heavily on how effectively data can be collected and processed.

At the front of the applications in a traditional IDC sits a firewall, which provides access control and protects against common attacks. Its defense capabilities are limited because it is not a high-end device. During the company's rapid development, the firewall was replaced twice: once when the number of users reached 100,000 and again when it reached 1 million. Services were interrupted each time, which hurt user experience. There was no way around this, because the business started with few users and the initial system was designed for 100,000 users. It took about one year for the user base to grow from 0 to 100,000, but only seven months to grow from 100,000 to 1 million. To keep up with surging demand, the firewall must now be replaced again to support 5 million users. This pattern is unsustainable for two reasons. First, hardware is expensive: after investing hundreds of thousands, you may have to replace the equipment within a year because of business growth, which is costly and laborious. Second, the firewall is the entrance to all services. If it fails, services inevitably break down, so services are interrupted whether the firewall fails or is being replaced.

The Layer-4 Server Load Balancer cluster uses the LVS server, which provides load balancing for protocol parsing and data processing at the back end. Because each protocol parsing service processes at most 10,000 vehicles per second, many data collection servers sit behind the LVS server so that a large number of vehicles can be online simultaneously.
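The sizing behind this layer is simple arithmetic, sketched below. Only the 10,000 vehicles per second per parsing service comes from the text; the function name, the incoming load figure, and the one spare server are hypothetical choices made for illustration.

```python
import math


def collectors_needed(vehicles_per_second: int,
                      per_service_capacity: int = 10_000,
                      spare: int = 1) -> int:
    """Estimate how many protocol-parsing servers to place behind the load balancer.

    per_service_capacity is the per-service ceiling quoted in the article.
    spare is an assumed number of extra servers kept for failover (not from the article).
    """
    if vehicles_per_second < 0:
        raise ValueError("vehicles_per_second must be non-negative")
    return math.ceil(vehicles_per_second / per_service_capacity) + spare


if __name__ == "__main__":
    # Hypothetical load: 250,000 vehicles reporting per second.
    print(collectors_needed(250_000))
```

In a fixed IDC this number has to be chosen for the worst expected peak, because adding servers later takes days rather than minutes.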

The Layer-7 Server Load Balancer cluster uses Nginx servers, which provide load balancing and reverse proxying for the back-end web application servers. In addition, Nginx supports regular expressions and other features.

The bottleneck is the expansion of IDC network bandwidth. You must apply for an expansion, and it takes 1 to 2 days to complete the internal approval process and then the operator's process. This prevents fast bandwidth expansion, so sudden traffic growth cannot be handled. On the other hand, purchasing a lot of bandwidth that sits idle for long periods wastes resources; high-quality network bandwidth is quite expensive in China. As the company's O&M personnel, it is our responsibility and obligation, and a measure of our ability, to help increase income, reduce expenditure, and make every penny count.

All application servers use CentOS 7. The running environment is mainly Java or PHP. Node.js is also used.

Java: CentOS 7, JDK 1.7, and Tomcat 7

PHP: CentOS 7 and PHP 5.6.11

Node.js: CentOS 7 and Node.js 8.9.3

Currently, we use Java, PHP, and Python as application development languages and Tomcat, Nginx, and Node.js as web environments. Because application releases are not highly automated, upgrades are released mostly with scripts. Applications are usually released and upgraded in the middle of the night, which requires a lot of overtime. The heavy, repetitive O&M workload diminishes any sense of accomplishment. O&M engineers spend most of their time either solving problems or releasing upgrades, and have no time to improve their skills. They lose their sense of direction, which increases staff turnover. A vicious circle is inevitable unless this problem is solved.

The distributed service cluster uses the Dubbo + ZooKeeper distributed service framework. The number of ZooKeeper servers should be odd, because leader election requires a majority of the servers.
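The reason for preferring an odd server count follows from majority arithmetic, as the short sketch below shows. The function names are illustrative; the only fact relied on is that a ZooKeeper ensemble stays available while a strict majority of its servers is up.

```python
def quorum_size(servers: int) -> int:
    """Smallest strict majority of an ensemble with the given number of servers."""
    return servers // 2 + 1


def tolerated_failures(servers: int) -> int:
    """How many servers can fail while a majority is still reachable."""
    return servers - quorum_size(servers)


if __name__ == "__main__":
    for n in (3, 4, 5, 6):
        print(f"{n} servers: quorum={quorum_size(n)}, "
              f"tolerated failures={tolerated_failures(n)}")
```

Four servers tolerate the same single failure as three, and six tolerate the same two failures as five, so the extra even-numbered server adds cost without adding fault tolerance.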

Dubbo is an open source distributed service framework from Alibaba and a popular choice for Java. However, the lack of monitoring software for Dubbo makes troubleshooting difficult. A robust link tracking and monitoring system would make distributed applications much easier to operate.

The cache clusters run Redis 3.0. The architecture contains 10 Redis cache clusters, each with 60 GB to 300 GB of memory. A cache server is typically a dedicated host that is memory-heavy with low CPU overhead. Because data persistence demands high disk I/O capability, solid-state drives (SSDs) are recommended.

The biggest pain point with caching is O&M. Redis cluster failures are frequently caused by disk I/O bottlenecks, and the clusters must be resized online frequently because the number of users grows rapidly. In addition, the Redis clusters are operated and maintained manually, which means a heavy workload and frequent operator errors. Redis clusters have caused countless failures. The problem is compounded by the applications' strong dependency on the cache: the entire application crashes when the Redis clusters fail. That is a flaw in the application system design.
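One common way to loosen this kind of hard dependency is a cache-aside read that degrades to the backing store when the cache is unreachable. The sketch below is not the platform's actual code: `FlakyCache`, `CacheUnavailable`, and `load_vehicle_status` are hypothetical stand-ins, and a plain dictionary plays the role of the database.

```python
class CacheUnavailable(Exception):
    """Raised by the stand-in cache when it cannot serve requests."""


class FlakyCache:
    """Hypothetical in-memory stand-in for a Redis client that can be switched off."""

    def __init__(self):
        self.data = {}
        self.down = False

    def get(self, key):
        if self.down:
            raise CacheUnavailable(key)
        return self.data.get(key)

    def set(self, key, value):
        if self.down:
            raise CacheUnavailable(key)
        self.data[key] = value


def load_vehicle_status(vehicle_id, cache, db):
    """Cache-aside read that falls back to the database if the cache fails."""
    key = f"vehicle:{vehicle_id}"
    try:
        cached = cache.get(key)
        if cached is not None:
            return cached
    except CacheUnavailable:
        pass  # Degrade: treat a cache outage like a cache miss.
    value = db[vehicle_id]
    try:
        cache.set(key, value)
    except CacheUnavailable:
        pass  # Serving the request matters more than refilling the cache.
    return value


if __name__ == "__main__":
    db = {"V1": "ignition_on"}
    cache = FlakyCache()
    print(load_vehicle_status("V1", cache, db))  # miss, then cached
    cache.down = True
    print(load_vehicle_status("V1", cache, db))  # still answered from the database
```

A fallback like this only helps if the database can absorb the extra reads, so in practice it would usually be paired with rate limiting or request shedding.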

Requests become congested when a huge number of them arrive concurrently. For example, when a large volume of insert and update requests reach the MySQL instance simultaneously, they cause massive row locks and table locks, and the accumulation of excessive requests may trigger the "too many connections" error. Putting requests into an MQ allows them to be processed asynchronously, which reduces the system workload. In this architecture, open source Kafka is used as the MQ. It is a distributed messaging system based on Pub/Sub that features high throughput and supports both real-time and offline data processing.

However, Kafka has been a serious pain point. As open source software, it has been responsible for several of our past failures. Version 0.8.1 has a bug in the topic deletion function. Version 0.9 has a client bug: when multiple partitions and consumers exist, a partition may become congested during rebalancing. Version 0.10 uses a different consumption mode from Version 0.8, so we had to rewrite the consumer program. In summary, we have encountered too many failures caused by Kafka bugs. Small and medium-sized enterprises lack the technical capabilities to fix bugs in such software, which leaves them passive and helpless.
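The asynchronous buffering that the message queue provides can be illustrated with a minimal in-process sketch. It uses Python's standard `queue` module rather than Kafka, and `run_consumer`, `demo`, the batch size, and the record fields are assumptions made for the example: producers enqueue writes without waiting, and a single consumer turns them into a few batched writes instead of hundreds of concurrent ones.

```python
import queue
import threading


def run_consumer(q, write_batch, batch_size=100):
    """Drain the queue and write records in batches until a None sentinel arrives."""
    batch = []
    while True:
        item = q.get()
        if item is None:
            break
        batch.append(item)
        if len(batch) >= batch_size:
            write_batch(batch)
            batch = []
    if batch:
        write_batch(batch)  # Flush whatever is left after the sentinel.


def demo(n_records=250, batch_size=100):
    """Enqueue n_records vehicle updates and return the sizes of the written batches."""
    q = queue.Queue(maxsize=1000)  # A bounded queue applies back-pressure to producers.
    batches = []
    worker = threading.Thread(
        target=run_consumer,
        args=(q, lambda b: batches.append(list(b)), batch_size),
    )
    worker.start()
    for i in range(n_records):
        q.put({"vehicle_id": i, "speed": 60})
    q.put(None)  # Tell the consumer that no more records are coming.
    worker.join()
    return [len(b) for b in batches]


if __name__ == "__main__":
    print(demo())
```

In this sketch the stand-in for the database sees three batched writes rather than 250 individual ones, which is the effect that keeps connection counts and lock contention down.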

StreamCompute uses JStorm, which was open-sourced by Alibaba, to process and analyze real-time data. This architecture uses two StreamCompute clusters. Each cluster has eight high-performance servers and two supervisor nodes (one active and one standby for high availability). StreamCompute is mainly used for real-time computing such as vehicle alarms and trip tracking.

The data storage cluster includes a database cluster and a distributed file system.

The database cluster includes multiple databases, for example, a MySQL cluster, a MongoDB cluster, and an Elasticsearch cluster.

We currently have dozens of database clusters. Some use a high-availability architecture with one master and two slaves; others use a dual-master architecture. MySQL is mainly used for business databases. As our business and user base have grown rapidly, our demands on database performance have kept increasing. We moved from high-end virtual machines (VMs) to high-end physical machines, and then to SSDs when the local I/O capability of the physical machines could no longer meet our requirements. But what can we do when a single database server at its maximum configuration can no longer meet our needs in the near future? Which solutions, such as Distributed Relational Database Service (DRDS), should the database team learn about in advance?

We currently have three MongoDB clusters, mainly used to store raw data from vehicles and parsed data, such as vehicle status, ignition, alarms, and tracks. Each cluster is a replica set, usually with three nodes. One serves as the master node and processes client requests. The others are slave nodes that replicate data from the master node.

Elasticsearch is a Lucene-based search server. It provides a distributed, multi-tenant full-text search engine with a RESTful web interface. Developed in Java, Elasticsearch is open source software released under the Apache license and a popular enterprise-level search engine. In this architecture, the Elasticsearch cluster uses three nodes, all of which are candidates for the master node. The cluster is mainly used for track query, information retrieval, and the log system.

Because massive numbers of application pictures and user-uploaded pictures must be saved, we need a high-performance file storage system. We use a self-built distributed NFS.

However, the self-built distributed NFS is barely scalable because of our limited investment in hardware. In addition, scaling it requires downtime, which seriously affects business, and the access rate decreases as the number of clients increases. This pain point impairs user experience and must be solved.

With a complex system architecture and a large number of servers, we need appropriate O&M control software to improve O&M efficiency.

Monitoring: The open source Zabbix monitoring system is used.

GitLab is used for code hosting.

The open source Jumpserver bastion host is used to audit operations by O&M engineers and improve the security of user logon.

Log query and management: The open source ELK log system is used.

As an open source tool for continuous integration, Jenkins is used for continuous deployment, including code building, automated deployment, and automated testing.

Based on Java, it manages application configuration in centralized mode.

Although the current O&M system is fairly standard, most of the O&M tools are open source services that meet only part of our functional requirements. As O&M control requirements increase, we must become familiar with more and more open source services. Because O&M management is not unified, O&M engineers usually need to know many O&M systems, which makes it difficult for beginners.

As the user scale increases, more and more problems with this architecture are exposed.

Most O&M tasks are performed manually with scripts. O&M is insufficiently automated because, with the rapid development of our business, O&M engineers spend most of their time either upgrading applications or dealing with system failures and have no time to automate O&M. In addition, it is difficult to recruit O&M talent, probably because the pay is uncompetitive. In summary, various factors slow our progress in O&M automation, and there are signs of a vicious circle.

A feature of the Internet of Vehicles (IoV) industry is that the number of online vehicles surges during morning and evening peak hours and on holidays, and then stabilizes. The morning and evening peaks last six hours in total, and traffic is normal during the other 18 hours. Peak traffic is usually three to five times the normal traffic. Scaling a traditional IDC usually takes several days, so it is impossible to respond to a sudden surge in traffic. To ensure system stability and user experience, we have to provision several times the resources we normally need, which leaves resource utilization below 30% and wastes a huge amount of resources.
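The waste can be estimated with a small back-of-the-envelope model. The six peak hours and the three-to-five-times multiplier come from the text; the `headroom` safety factor and the idea of measuring load in units of normal-hour traffic are assumptions added here to make the arithmetic explicit.

```python
def average_utilization(peak_hours: float,
                        peak_multiplier: float,
                        headroom: float = 1.0,
                        hours_per_day: float = 24.0) -> float:
    """Average daily utilization of a fleet sized for peak traffic.

    Load is measured in units of normal-hour traffic. Capacity is fixed at
    peak_multiplier * headroom, where headroom is an assumed safety factor
    on top of the expected peak (1.0 means no safety margin).
    """
    normal_hours = hours_per_day - peak_hours
    provisioned = peak_multiplier * headroom
    consumed = peak_hours * peak_multiplier + normal_hours * 1.0
    return consumed / (hours_per_day * provisioned)


if __name__ == "__main__":
    for multiplier in (3, 5):
        for headroom in (1.0, 2.0):
            u = average_utilization(6, multiplier, headroom)
            print(f"peak x{multiplier}, headroom x{headroom}: {u:.0%}")
```

Under this simplified model, even a fleet sized exactly for the peak averages only 40% to 50% utilization, and any safety margin on top pushes the figure lower, which is in line with the sub-30% utilization described above.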

Most of our O&M control software is open source and comes in many varieties (for example, Jumpserver, the Zabbix monitoring system, Jenkins for continuous integration, and Ansible for automated O&M), each of which requires its own logon accounts. Managing so many accounts is inconvenient, and O&M engineers must operate and be familiar with a variety of open source software. For example, the Zabbix monitoring system handles monitoring alarms well when the data volume is small. However, when the number of metrics surges as servers are added, database performance becomes inadequate, resulting in frequent alarm delays and more false alarms. Some custom monitoring requirements and metrics must be developed separately. The sheer variety of O&M tools therefore makes O&M complex and tedious.

When our applications were launched in the early stage, the system had a simple design without sufficient horizontal scaling capabilities. When the business volume exploded, many resources could not be scaled promptly, which resulted in system failures and impaired user experience. For example, in the early stage we used the self-built NFS to store user profile pictures, pictures of driver licenses, and pictures shared to WeChat Moments. For various reasons we did not invest enough resources, which led to a series of problems after a period of time, such as insufficient storage space, degraded read-write performance, and user access latency. The biggest problem is the long cycle of hardware scaling. It usually takes 5 to 10 days from making a purchase request to completing the hardware scaling because of purchase approval, shipping, acceptance, and mounting in the IDC.

The underlying infrastructure of a traditional IDC is usually self-built, and many factors make it unstable. For example, enterprises attach little importance to hardware investment and use cheap devices; engineers lack the skills to build a sufficiently stable infrastructure architecture; and ISP network connectivity is unstable, with no Border Gateway Protocol (BGP) access. In addition, our IDC lost power unexpectedly three times in one year, each time causing a large-scale system breakdown. Because of the unstable underlying infrastructure, inexplicable failures occur frequently and cannot be located promptly. Unexpected problems can occur at any time, and we live in fear of them every day.

With the rapid development of the company and the growth of the user scale, we have become a likely target for malicious actors. One day at about 15:00, a large-scale DDoS attack hit us and instantly brought down our firewall and system. Our business was down for several hours, and we could do nothing about it. Our poor security protection capabilities are also a matter of cost: we cannot afford high-end firewalls or expensive ISP bandwidth.

