Disclaimer: This is a translated work of Qinxia's 漫谈分布式系统. All rights reserved to the original author.

In earlier articles, we spent a lot of space on distributed systems in the big data field. Using various methods, we solved scalability, optimized performance, and climbed out of the CAP quagmire. Finally, we can store data and compute on it to get the results we want.

However, these results are typically stored as files. Data must deliver value, and files are not a good form for that, especially in big data scenarios.

Graphical applications (such as BI reports) are the real way to directly reflect the value of data. Such applications usually require data to be organized in a structured manner and to respond rapidly.

Structured organization is easy, and Hive can provide it, but rapid response is difficult.

The previous article mentioned that KE's resident Spark session plus Parquet files can deliver good performance, but its scalability, completeness, and concurrency still have significant shortcomings, so it can only be used in specific scenarios.

We need a distributed online database that can respond with low latency, the way MySQL does on a single machine.

We introduced the three parts of Hadoop earlier: HDFS, MapReduce, and YARN. Together, they provide the most basic distributed storage and computing capabilities.

With these basic capabilities in place, other, more specialized scenarios can be built on top of them, including the distributed online database discussed here.

HBase is one such implementation. It is no exaggeration to call HBase the most direct descendant of Hadoop in the whole ecosystem, as the following code package diagram shows:

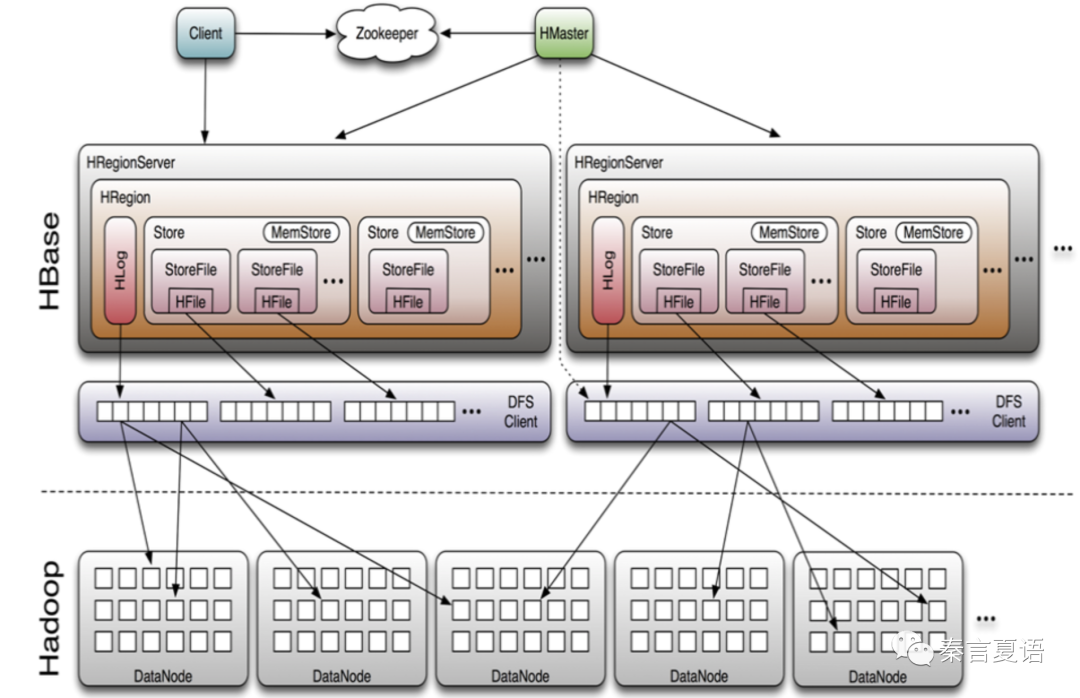

Look at the architecture diagram of HBase:

The diagram shows that HBase reuses HDFS as its entire storage layer. Data storage is handed over completely to HDFS, so HBase does not have to worry about the scalability and reliability of storage.

Therefore, HBase can focus on the service layer: providing the read and write services of a distributed database.

An obvious way to improve performance is to read and write data in memory. However, in big data scenarios there is inevitably far more data than memory can hold, and the rest can only be stored in HDFS.

Therefore, data in memory and data in HDFS must be merged to present a complete view of the data.

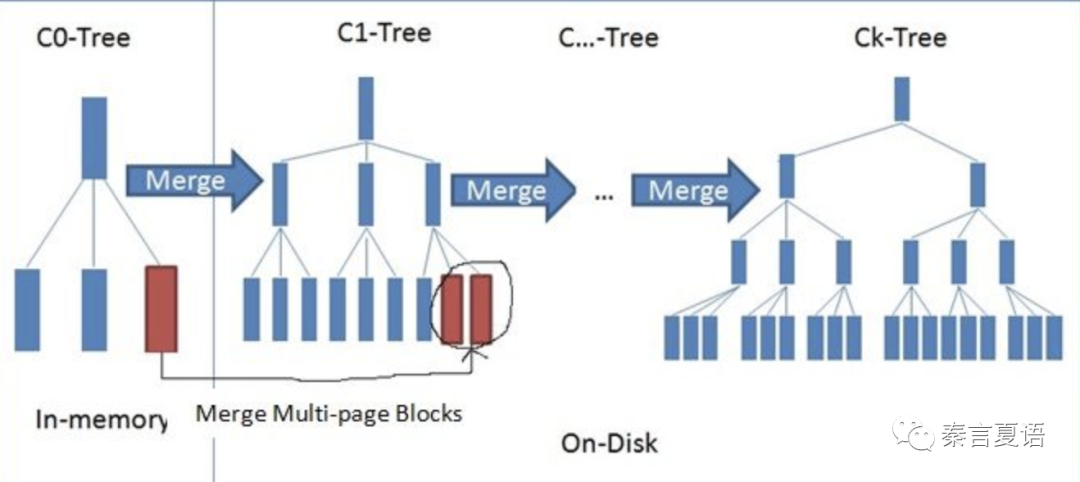

Taking writes as an example, HBase uses the common Log-Structured Merge Tree (LSM tree) design: writes go to memory first, are flushed to disk as sorted files, and are merged level by level to keep the data organized.

Reads work similarly: both the data in memory and the data on disk must be checked.
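To make the write and read paths concrete, here is a toy LSM store in Python. It is a minimal sketch under simplifying assumptions, not HBase code: the class `ToyLSM` and its `flush_threshold` are hypothetical, a plain dict stands in for the MemStore (HBase keeps it sorted), and in-memory sorted lists stand in for HFiles on HDFS.

```python
from typing import Optional


class ToyLSM:
    """Toy LSM store: a mutable in-memory buffer plus immutable, sorted "files"."""

    def __init__(self, flush_threshold: int = 3) -> None:
        self.memstore: dict[str, str] = {}            # write buffer (plays the role of MemStore)
        self.files: list[list[tuple[str, str]]] = []  # flushed files, oldest first (stand-ins for HFiles)
        self.flush_threshold = flush_threshold        # hypothetical flush trigger

    def put(self, key: str, value: str) -> None:
        # Writes only touch memory; files already written are never modified.
        self.memstore[key] = value
        if len(self.memstore) >= self.flush_threshold:
            self.flush()

    def flush(self) -> None:
        # Persist the buffer as a new sorted, immutable file and start an empty buffer.
        if self.memstore:
            self.files.append(sorted(self.memstore.items()))
            self.memstore = {}

    def get(self, key: str) -> Optional[str]:
        # Check memory first, then files from newest to oldest, so the newest value wins.
        if key in self.memstore:
            return self.memstore[key]
        for f in reversed(self.files):
            for k, v in f:
                if k == key:
                    return v
        return None


db = ToyLSM(flush_threshold=2)
db.put("r1", "a")
db.put("r2", "b")  # reaching the threshold flushes both rows into a "file"
db.put("r1", "c")  # newer value for r1, held only in memory
print(db.get("r1"), db.get("r2"), len(db.files))  # prints: c b 1
```

Every read may have to consult memory plus several files, which is exactly the cost the following paragraphs try to contain.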

Continuous writes keep flushing data to disk, producing a large number of files and significantly dragging down read performance. HBase uses Bloom filters to skip data files that cannot contain the requested row, but this only treats the symptom, not the root cause.
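A Bloom filter answers either "definitely not in this file" or "possibly in this file", which lets a read skip files that cannot hold the requested row key. The Python sketch below is illustrative only: the class and function names, the bit-array size, the number of hash functions, and the salted SHA-256 hashing are arbitrary choices, not HBase's implementation.

```python
import hashlib


class BloomFilter:
    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)  # one byte per bit, for simplicity

    def _positions(self, key: str):
        # Derive k bit positions by hashing the key with k different salts.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos] = 1

    def might_contain(self, key: str) -> bool:
        # False means definitely absent; True means possibly present (false positives happen).
        return all(self.bits[pos] for pos in self._positions(key))


def files_to_read(files, key):
    """Keep only the (name, bloom) files whose filter says the key may be present."""
    return [name for name, bloom in files if bloom.might_contain(key)]


f1, f2 = BloomFilter(), BloomFilter()
f1.add("row-001")
f2.add("row-002")
candidates = files_to_read([("f1", f1), ("f2", f2)], "row-001")
print(candidates)  # "f1" is always included; "f2" only on a rare false positive
```

False negatives cannot happen, so skipping a file is always safe. But every file that might hold the key still has to be read, which is why the file count itself must also be reduced.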

Therefore, once certain conditions are met, a compaction merges files to reduce their number. File merging is expensive, so compaction is divided into two levels: minor compaction, which merges a few smaller files into a larger one, and major compaction, which merges all the files of a column family within a Region into a single file.

Parameters control the trigger conditions of both types. As the names suggest, minor compaction runs far more often than major compaction. The latter is usually scheduled during off-peak business hours (such as early morning) to avoid affecting production workloads.
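The two trigger conditions can be sketched as a simple selection function. The constant names are hypothetical and their values illustrative; the comments point to the real HBase settings they loosely resemble. HBase's actual selection policies also weigh file sizes against each other, so treat this as a simplification.

```python
from dataclasses import dataclass

# Illustrative thresholds (hypothetical values for this sketch).
MIN_FILES_FOR_MINOR = 3                 # compare hbase.hstore.compactionThreshold
MAX_FILES_PER_MINOR = 3                 # compare hbase.hstore.compaction.max
MAJOR_INTERVAL_SECONDS = 7 * 24 * 3600  # compare hbase.hregion.majorcompaction


@dataclass
class StoreFile:
    name: str
    size_mb: int


def choose_compaction(files, last_major_ts, now_ts):
    """Return ("major", files), ("minor", files) or ("none", [])."""
    if files and now_ts - last_major_ts >= MAJOR_INTERVAL_SECONDS:
        # Major: rewrite every file into one. Expensive, so it runs rarely.
        return "major", list(files)
    if len(files) >= MIN_FILES_FOR_MINOR:
        # Minor: merge a bounded number of the smallest files. Cheap, so it runs often.
        smallest = sorted(files, key=lambda f: f.size_mb)[:MAX_FILES_PER_MINOR]
        return "minor", smallest
    return "none", []


files = [StoreFile(f"hfile-{i}", size) for i, size in enumerate([5, 300, 8, 6])]
kind, chosen = choose_compaction(files, last_major_ts=0, now_ts=3600)
print(kind, [f.name for f in chosen])  # prints: minor ['hfile-0', 'hfile-3', 'hfile-2']
```

The large 300 MB file is left alone by the minor compaction; only the periodic major compaction rewrites it.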

In addition, HDFS is an append-only file system that does not support modifying data in place. Yet as a database, HBase must fully support create, read, update, and delete operations.

Therefore, the only reliable approach is to append insert, update, and delete operations to files and merge them in code when reading. For example, if a row is inserted and later deleted, the read treats it as non-existent.
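The read-time merge can be sketched in Python as follows. Assumptions for illustration: each appended operation is a hypothetical `(timestamp, row_key, value)` tuple, and a delete is recorded as a marker whose value is `None` (markers of this kind are often called tombstones). The newest operation for a row decides what a read returns.

```python
from typing import Optional


def read_row(operations, row_key: str) -> Optional[str]:
    """Merge appended operations for one row at read time.

    operations: (timestamp, row_key, value) tuples in any order; value None marks a delete.
    Returns the newest value, or None if the row was deleted or never written.
    """
    latest_ts, latest_value = -1, None
    for ts, key, value in operations:
        if key == row_key and ts > latest_ts:
            latest_ts, latest_value = ts, value
    return latest_value


log = [
    (1, "user#42", "alice"),   # insert
    (2, "user#42", "alicia"),  # update: appended, not written in place
    (3, "user#42", None),      # delete: appended as a marker
    (1, "user#7", "bob"),
]
print(read_row(log, "user#42"), read_row(log, "user#7"))  # prints: None bob
```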

This works logically, but as write operations accumulate, reads carry a heavier and heavier burden. Therefore, these write operations must also be merged physically.

Because HDFS is append-only, there is only one way to merge physically: generate a new file, then replace the old files with it.

Since both this and compaction involve file merging, it is natural to combine them: the physical merging of write operations is performed during major compaction.
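A major compaction can be sketched as a function that reads all files, keeps only the newest entry per key, physically drops keys whose newest entry is a delete marker, and writes one new file that then replaces the old ones. The sketch assumes a hypothetical `(timestamp, key, value)` layout with `None` as the delete marker and keeps a single version per key, whereas HBase can be configured to retain several.

```python
def major_compact(files):
    """Merge several immutable files into one new file.

    Each file is a list of (timestamp, key, value) entries; value None is a delete marker.
    Returns a new list sorted by key. The caller swaps it in for the old files,
    because append-only storage cannot rewrite them in place.
    """
    newest = {}
    for f in files:
        for ts, key, value in f:
            if key not in newest or ts > newest[key][0]:
                newest[key] = (ts, value)
    # Physically drop deleted keys and emit the survivors in key order.
    return [(ts, key, value) for key, (ts, value) in sorted(newest.items()) if value is not None]


store = [
    [(1, "a", "v1"), (1, "b", "v1")],
    [(2, "a", "v2"), (2, "b", None)],
    [(3, "c", "v1")],
]
store = [major_compact(store)]  # replace all old files with the single new one
print(store)  # prints: [[(2, 'a', 'v2'), (3, 'c', 'v1')]]
```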

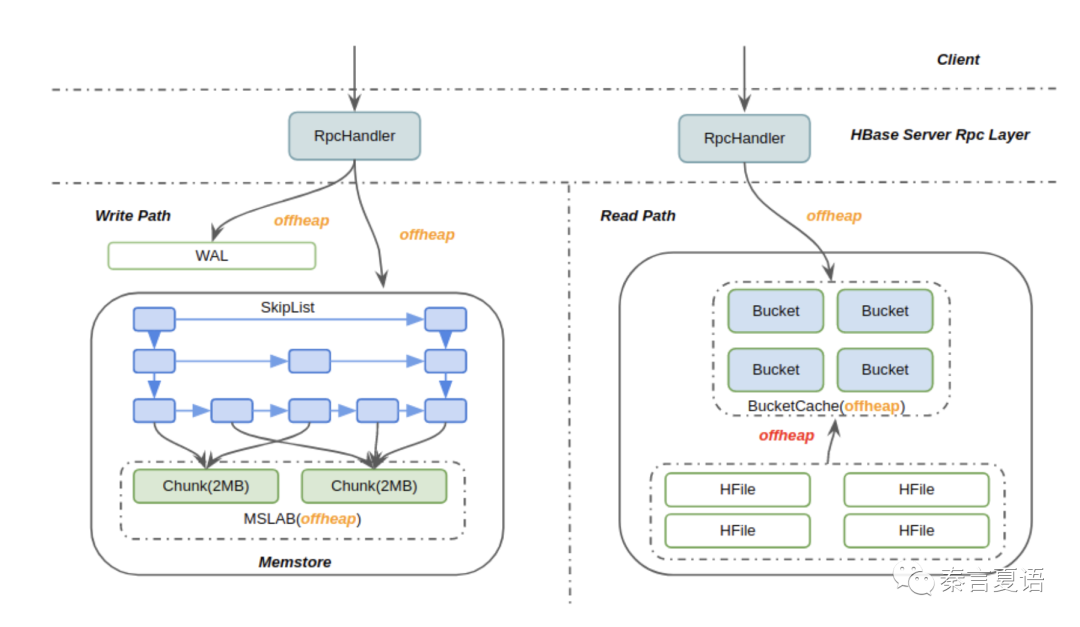

As mentioned above, HBase uses memory to accelerate reads and writes: MemStore serves as the write cache and BlockCache as the read cache. However, because HBase runs on the JVM, keeping large caches on the Java heap causes problems, such as long garbage collection pauses and limits on how large the caches can grow.

The natural remedy is off-heap memory, and HBase does provide off-heap options for more efficient memory management and larger caches. The general read/write path is shown in the following figure, so I won't go into detail here.

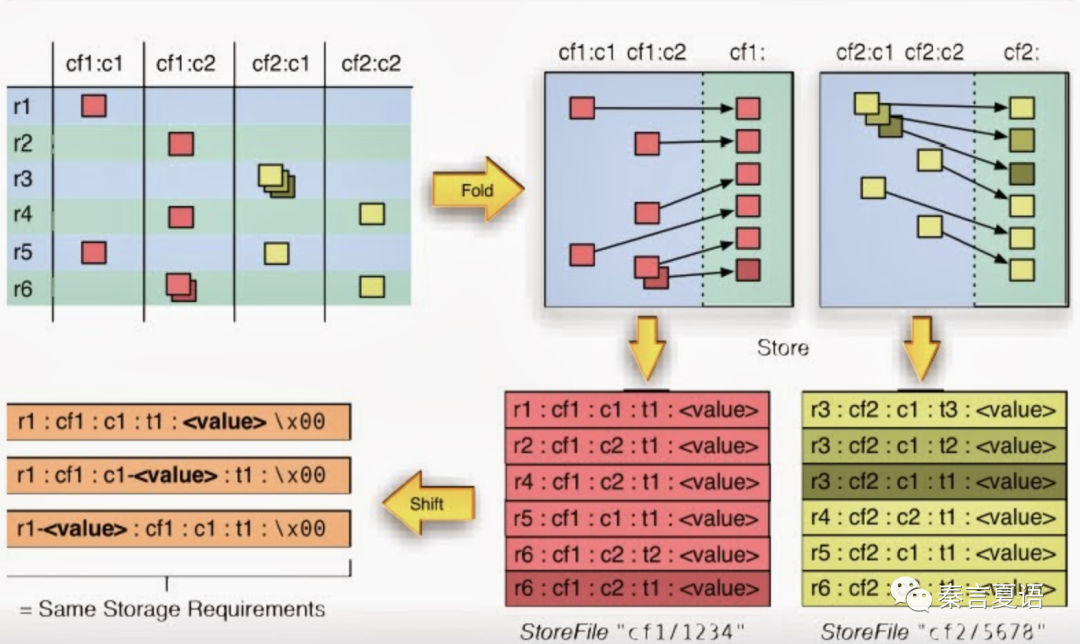

Queries often retrieve the same columns from many rows, so storing each column's values together lets them be read sequentially, which improves performance significantly. Moreover, values in the same column are naturally similar and compress better. This is why more and more data stores use columnar storage.

In big data scenarios, the variety of data stored in HBase, and how it changes over time, can be hard to predict. Therefore, a flexible, NoSQL-style schema is necessary.

Combining these two needs, HBase introduces the concept of the column family. The schema is defined at the column family level, and you can dynamically insert columns that were not predefined into a column family, which provides flexibility. Data storage is also organized by column family, which brings performance and cost advantages.
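The column family model can be pictured as nested maps: row key, then column family, then column qualifier, then value. In this hypothetical `ToyTable` sketch, the families are fixed when the table is created, new qualifiers can be added freely, and each family's data lives in its own store, mirroring the idea that HBase organizes storage by column family.

```python
from collections import defaultdict


class ToyTable:
    def __init__(self, families):
        # The schema fixes only the column families, not the columns inside them.
        self.families = set(families)
        # One separate store per family: family -> row key -> qualifier -> value.
        self.stores = {f: defaultdict(dict) for f in self.families}

    def put(self, row_key, family, qualifier, value):
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        # Any qualifier is accepted without being declared in advance.
        self.stores[family][row_key][qualifier] = value

    def get(self, row_key):
        # Reassemble the row from each family's separate store.
        return {
            f: dict(self.stores[f][row_key])
            for f in sorted(self.families)
            if row_key in self.stores[f]
        }


t = ToyTable(["info", "stats"])
t.put("user#1", "info", "name", "alice")
t.put("user#1", "info", "nickname", "ali")  # new column, no schema change
t.put("user#1", "stats", "logins", "17")
print(t.get("user#1"))
# prints: {'info': {'name': 'alice', 'nickname': 'ali'}, 'stats': {'logins': '17'}}
```

A query that needs only `stats` would touch only that family's store, which is where the performance and cost advantages come from.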

This design achieved the best of both worlds. However, it forced HBase to make trade-offs in design (trade-offs are everywhere).

Since there is no predefined schema at the column level, HBase does not natively support secondary indexes.

As a result, efficient queries must specify the primary key (called the row key in HBase). Filters on other columns cannot benefit from indexes, so they have to scan the data row by row.

Therefore, reads in HBase are usually range scans over row keys, which makes row key design crucial.
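Because rows are sorted by row key, a query that knows a row key prefix can jump to the start of its range and stop at the end, while a filter on any other column must examine every row. The Python sketch below uses the standard `bisect` module over a sorted list; the `user#date` key layout and sample data are hypothetical.

```python
import bisect

# Rows kept sorted by row key, as HBase does; the "user#date" key layout is hypothetical.
rows = sorted([
    ("u001#2024-01-01", {"city": "Hangzhou"}),
    ("u001#2024-01-02", {"city": "Beijing"}),
    ("u002#2024-01-01", {"city": "Hangzhou"}),
    ("u003#2024-01-05", {"city": "Shanghai"}),
])
keys = [k for k, _ in rows]


def prefix_scan(prefix):
    """Range scan on the row key: touch only rows that start with a non-empty prefix."""
    start = bisect.bisect_left(keys, prefix)
    # Exclusive upper bound for every key with this prefix: bump the last character.
    stop = prefix[:-1] + chr(ord(prefix[-1]) + 1)
    end = bisect.bisect_left(keys, stop)
    return rows[start:end]


def column_filter(column, value):
    """No secondary index on non-key columns: every row has to be examined."""
    return [(k, cols) for k, cols in rows if cols.get(column) == value]


print([k for k, _ in prefix_scan("u001#")])
# prints: ['u001#2024-01-01', 'u001#2024-01-02']
print([k for k, _ in column_filter("city", "Hangzhou")])
# prints: ['u001#2024-01-01', 'u002#2024-01-01']
```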

A good row key design makes queries efficient. For example, including the values of frequently queried columns in the row key significantly narrows the range of data to scan. A good design also distributes data more evenly and avoids hot spots.
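The sketch below combines both ideas in a hypothetical key layout `salt|user_id|timestamp`. A frequently queried field (`user_id`) is part of the key, so one user's events can be fetched with a single prefix scan. A small hash-derived prefix (often called salting or hashing) comes first, so that users spread over `NUM_BUCKETS` key ranges instead of every new write landing in the same Region, as happens when keys start with an increasing timestamp. Names and the bucket count are assumptions for illustration.

```python
import hashlib

NUM_BUCKETS = 8  # hypothetical: roughly how many regions writes should spread across


def salt_for(user_id: str) -> int:
    # md5 is used only to spread keys evenly, not for security.
    return int(hashlib.md5(user_id.encode()).hexdigest(), 16) % NUM_BUCKETS


def make_row_key(user_id: str, event_ts: int) -> str:
    """Composite row key: salt | user_id | zero-padded timestamp."""
    # Zero-padding keeps lexicographic (byte) order equal to numeric order.
    return f"{salt_for(user_id):02d}|{user_id}|{event_ts:013d}"


def user_prefix(user_id: str) -> str:
    """Prefix that selects all events of one user with a single range scan."""
    return f"{salt_for(user_id):02d}|{user_id}|"


key = make_row_key("u42", 1700000000000)
print(key.startswith(user_prefix("u42")))  # prints: True
```

The trade-off is that a query spanning all users by time range now needs one scan per bucket.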

Of course, secondary indexes are still in demand, so several implementations have emerged. A typical approach maintains the index in a separate, special HBase table and uses coprocessors to create and update index entries automatically.

For example, Apache Phoenix (a well-known SQL-on-HBase framework) supports secondary indexes. (HBase does not provide a SQL API, which is why projects like Phoenix exist to improve development efficiency.)
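The index-table approach can be sketched as a second sorted structure whose keys are the indexed value, a separator, and the data row key, updated on every write; this is roughly the job that a coprocessor (or Phoenix) takes on. Everything here, including `IndexedTable` and the `\x00` separator, is hypothetical, and the sketch ignores keeping the two tables consistent under failures, which real implementations have to handle.

```python
import bisect


class IndexedTable:
    def __init__(self, indexed_column: str) -> None:
        self.indexed_column = indexed_column
        self.data = {}        # data table: row key -> {column: value}
        self.index_keys = []  # index table: sorted "value\x00rowkey" entries

    def put(self, row_key: str, columns: dict) -> None:
        old = self.data.get(row_key, {}).get(self.indexed_column)
        if old is not None:
            # Remove the stale index entry before the indexed value may change.
            self.index_keys.remove(f"{old}\x00{row_key}")
        self.data[row_key] = {**self.data.get(row_key, {}), **columns}
        new = self.data[row_key].get(self.indexed_column)
        if new is not None:
            bisect.insort(self.index_keys, f"{new}\x00{row_key}")

    def find_by_value(self, value: str) -> list:
        # Prefix scan on the index table yields the matching data row keys.
        prefix = f"{value}\x00"
        i = bisect.bisect_left(self.index_keys, prefix)
        hits = []
        while i < len(self.index_keys) and self.index_keys[i].startswith(prefix):
            hits.append(self.index_keys[i].split("\x00", 1)[1])
            i += 1
        return hits


t = IndexedTable("city")
t.put("u1", {"city": "Hangzhou"})
t.put("u2", {"city": "Beijing"})
t.put("u3", {"city": "Hangzhou"})
t.put("u3", {"city": "Shanghai"})  # the index entry follows the value change
print(t.find_by_value("Hangzhou"))  # prints: ['u1']
```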

As the HBase architecture diagram above shows, a Region is the smallest unit in which HBase organizes data at the service level (not the physical level), and a RegionServer (RS) is the service that handles reads and writes in HBase. Each RS serves reads and writes for many Regions.

RS and Region always keep a 1:n relationship; in other words, each Region is served by exactly one RS at any given time. Whatever data we look for, the metadata managed by HMaster leads us to a single RS, and the data is then read and written through that RS.
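Locating the one RS for a row key can be sketched as a binary search over sorted Region start keys followed by a lookup in an assignment map. The Region boundaries, names, and assignments below are hypothetical; real HBase clients look up Region locations in the `hbase:meta` table and cache them.

```python
import bisect

# Hypothetical Regions: contiguous, non-overlapping row key ranges sorted by start key.
# "" as the first start key means "from the beginning of the table".
region_start_keys = ["", "g", "n", "t"]
region_names = ["region-1", "region-2", "region-3", "region-4"]

# Each Region is served by exactly one RS; one RS serves many Regions (1:n).
assignment = {
    "region-1": "rs-a",
    "region-2": "rs-b",
    "region-3": "rs-a",
    "region-4": "rs-c",
}


def locate(row_key: str) -> tuple:
    """Return (region, region_server) responsible for row_key."""
    # The owning Region is the last one whose start key is <= row_key.
    idx = bisect.bisect_right(region_start_keys, row_key) - 1
    region = region_names[idx]
    return region, assignment[region]


print(locate("apple"), locate("go"), locate("zebra"))
# prints: ('region-1', 'rs-a') ('region-2', 'rs-b') ('region-4', 'rs-c')
```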

As discussed in the article, Learning about Distributed Systems – Part 9: An Exploration of Data Consistency, HBase is a CP system and chooses consistency at the expense of availability.

When an RS fails, all the Regions it serves become unavailable until they are reassigned to other RSs. During this process, the data in these Regions cannot be accessed, which means local availability problems occur.

Fortunately, since HDFS is used to save data, the process of Region transfer only involves service migration. No data migration is involved, so it is relatively fast. Under normal circumstances, the impact on availability is relatively controllable.
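The following Python sketch illustrates why the transfer is cheap: recovering from an RS failure only rewrites the Region-to-RS mapping, and the new RS opens the Region's files where they already sit in HDFS. The function name and the round-robin choice of target are hypothetical simplifications; HBase's real recovery also involves steps such as replaying the write-ahead log.

```python
def reassign_regions(assignment: dict, dead_rs: str, live_rs: list) -> dict:
    """Move every Region served by dead_rs to a live RS, round-robin.

    Only the region -> server mapping changes; no data files are copied.
    Until this completes, the dead server's Regions are unavailable.
    """
    if not live_rs:
        raise RuntimeError("no live RegionServer available")
    new_assignment = dict(assignment)
    orphans = sorted(r for r, rs in assignment.items() if rs == dead_rs)
    for i, region in enumerate(orphans):
        new_assignment[region] = live_rs[i % len(live_rs)]
    return new_assignment


before = {"region-1": "rs-a", "region-2": "rs-b", "region-3": "rs-a"}
after = reassign_regions(before, dead_rs="rs-a", live_rs=["rs-b", "rs-c"])
print(after)  # prints: {'region-1': 'rs-b', 'region-2': 'rs-b', 'region-3': 'rs-c'}
```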

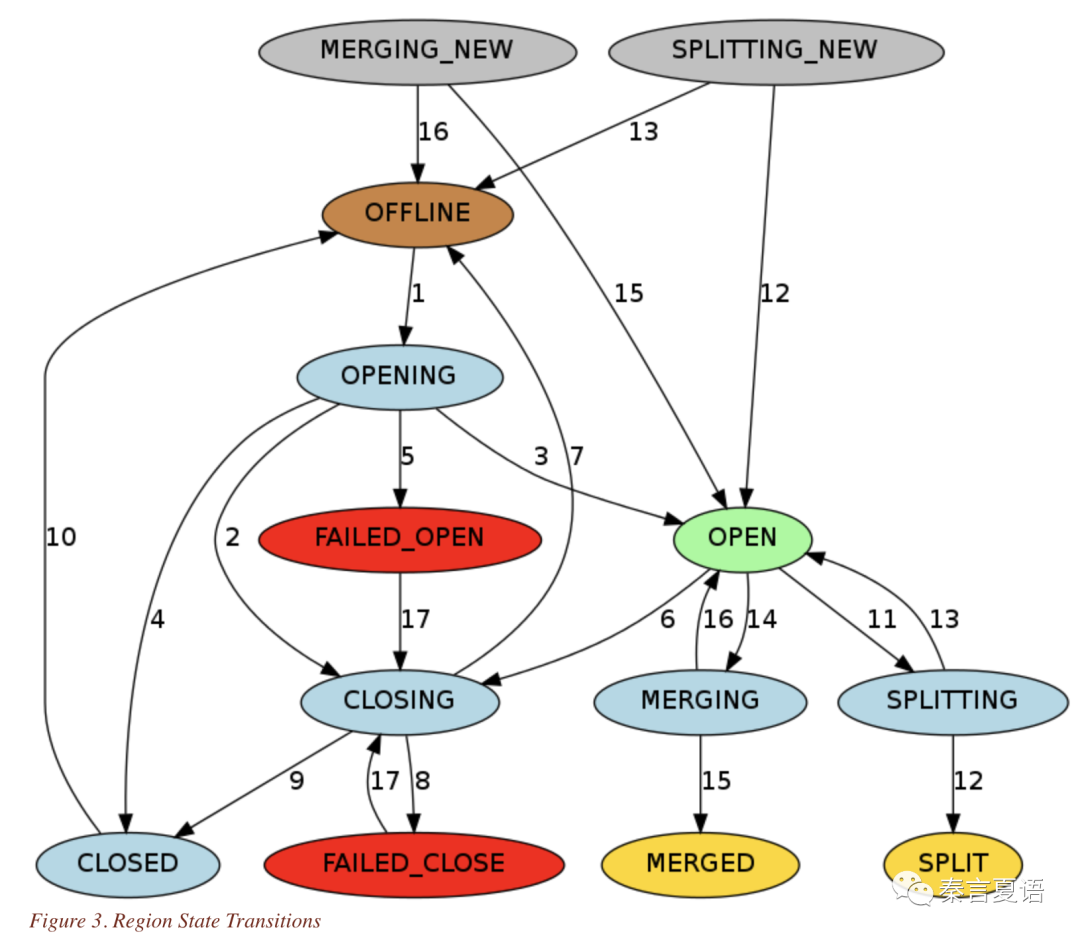

In addition, for performance reasons, Regions undergo operations such as split and merge, which also lead to Region migration. As the following Region state machine shows, a Region becomes (briefly) unavailable in many situations.

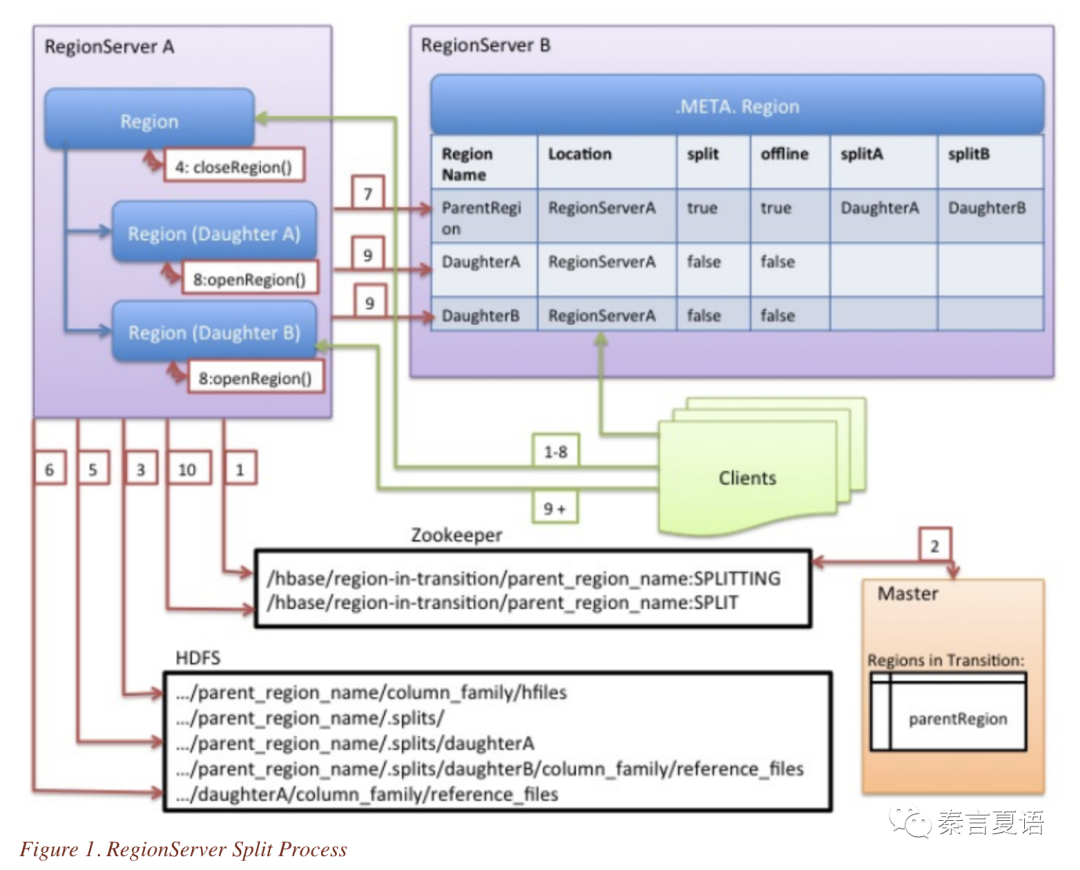

Take Region split as an example: the whole process is quite complicated.

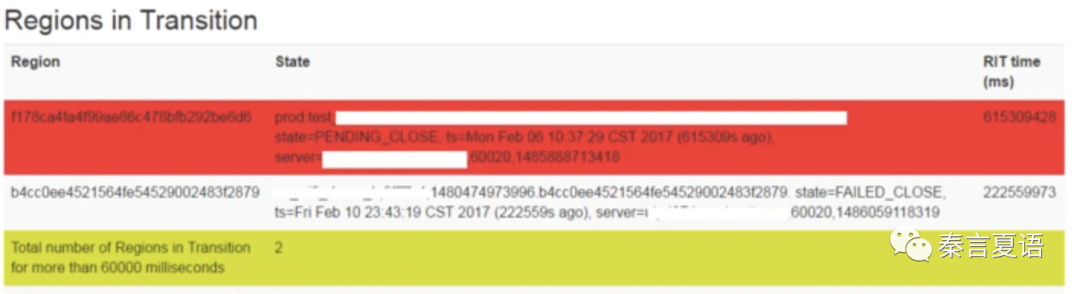
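At its core, a split chooses a split key and turns one row key range into two daughter ranges. The Python sketch shows only that range arithmetic, taking the middle stored row key as the split point. This is a hypothetical simplification: HBase derives the split point from its store files and wraps the change in the multi-step procedure shown in the figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    start_key: str  # inclusive
    end_key: str    # exclusive; "" means "to the end of the table"


def split_region(parent: Region, row_keys: list) -> tuple:
    """Split parent at its middle row key and return the two daughter Regions.

    row_keys are the keys currently stored in the parent, assumed sorted and unique.
    """
    if len(row_keys) < 2:
        raise ValueError("need at least two rows to split")
    split_key = row_keys[len(row_keys) // 2]
    daughter_a = Region(parent.start_key, split_key)
    daughter_b = Region(split_key, parent.end_key)
    return daughter_a, daughter_b


parent = Region("", "")
a, b = split_region(parent, ["a1", "b7", "k2", "m9", "x3"])
print(a, b)
# prints: Region(start_key='', end_key='k2') Region(start_key='k2', end_key='')
```

The arithmetic is trivial; the complexity in the figure comes from making the switch from parent to daughters safe while the Region is briefly offline.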

The Region transitions described above complete quickly when all goes well. When they don't, a Region can be stuck for a long time or even permanently. Many readers have probably run into Regions in Transition (RIT) that do not recover automatically for a long time.

This is the price of the CAP trade-off: it can be mitigated as much as possible but never completely avoided. Still, don't rush into the arms of an AP system because of these problems; data inconsistency may cause you even bigger headaches.

That's all for this article. Thanks to HBase, our data can now be served directly to business applications.

Of course, HBase is not a silver bullet. Apart from the shortcomings described above, HBase does not support multi-row transactions, SQL, or high-throughput OLAP analysis.

HBase is well suited to random reads and range queries over massive amounts of data, and it is a good choice if you need to keep multiple versions of data. Beyond these scenarios, you may need to turn to other systems, such as ClickHouse, Greenplum, DynamoDB, Cassandra, and Elasticsearch (ES), as mentioned in previous articles in this series.

This is a carefully conceived series of 20-30 articles. I hope to give everyone a core grasp of the distributed system in a storytelling way. Stay tuned for the next one!

