With DataX-On-Hadoop, you can upload Hadoop data to MaxCompute and ApsaraDB for RDS using multiple MapReduce tasks without the need to install and deploy DataX software in advance.

DataX is an offline data synchronization tool and platform widely used in Alibaba Group. It efficiently synchronizes data among MySQL, Oracle, HDFS, Hive, OceanBase, HBase, Table Store, MaxCompute, and other heterogeneous data sources. The DataX synchronization engine splits, schedules, and runs tasks internally without depending on the Hadoop environment. For more information about DataX, visit https://github.com/alibaba/DataX

DataX-On-Hadoop is the implementation of DataX in the Hadoop scheduling environment. DataX-On-Hadoop uses the Hadoop task scheduler to schedule DataX tasks to a Hadoop execution cluster, on which each task is executed based on the process of Reader -> Channel -> Writer. This means that you can upload Hadoop data to MaxCompute and ApsaraDB for RDS through multiple MapReduce tasks without the need to install and deploy DataX software in advance or prepare an additional execution cluster for DataX. However, you still have access to the plug-in logic, throttling, and robust retry functions of DataX.

Currently, DataX-On-Hadoop supports uploading HDFS data to MaxCompute on the public cloud.

How to Run DataX-On-Hadoop

The procedure is as follows:

Submit an Alibaba Cloud ticket to apply for the DataX-On-Hadoop software package. The software package is a Hadoop MapReduce JAR package.

Run the following command on the Hadoop client to submit a MapReduce task. The only part you need to write yourself is the job configuration file, which has the same format as a common DataX configuration file. Here, the ./bvt_case/speed.json file is used as an example.
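For orientation, the sketch below generates a job file in the common DataX layout (one reader, one writer, and a speed setting). The key names follow the open-source DataX hdfsreader and odpswriter plug-in documentation as best understood here; the DataX-On-Hadoop package may expect different or additional keys. The helper name build_job and every value (paths, project, table, column names, endpoint placeholders) are hypothetical.

```python
import json


def build_job(default_fs, hdfs_path, columns, project, table, channels=2):
    """Build a job description in the common DataX layout.

    columns is a list of (name, type) pairs in file order.
    All key names are assumptions based on the open-source DataX plug-ins.
    """
    reader = {
        "name": "hdfsreader",
        "parameter": {
            "defaultFS": default_fs,
            "path": hdfs_path,
            "fileType": "text",
            "fieldDelimiter": ",",
            "encoding": "UTF-8",
            # Text files are addressed by column position.
            "column": [
                {"index": i, "type": col_type}
                for i, (_, col_type) in enumerate(columns)
            ],
        },
    }
    writer = {
        "name": "odpswriter",
        "parameter": {
            "project": project,
            "table": table,
            "column": [name for name, _ in columns],
            # Placeholders: supply your own credentials and endpoint.
            "accessId": "<accessId>",
            "accessKey": "<accessKey>",
            "odpsServer": "<odpsServer>",
            "truncate": False,
        },
    }
    return {
        "job": {
            # "channel" controls how many parallel channels DataX uses.
            "setting": {"speed": {"channel": channels}},
            "content": [{"reader": reader, "writer": writer}],
        }
    }


if __name__ == "__main__":
    job = build_job(
        default_fs="hdfs://namenode:8020",   # hypothetical NameNode address
        hdfs_path="/data/example/*",         # hypothetical HDFS path
        columns=[("id", "long"), ("name", "string")],
        project="example_project",
        table="example_table",
    )
    print(json.dumps(job, indent=2))
```

Redirecting the printed JSON to a file gives a configuration that can be passed as the last argument of the submit command.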

./bin/hadoop jar datax-jar-with-dependencies.jar com.alibaba.datax.hdfs.odps.mr.HdfsToOdpsMRJob ./bvt_case/speed.json

This blog article describes how to migrate data from Hadoop Hive to Alibaba Cloud MaxCompute.

Before migrating data from Hadoop Hive, ensure that your Hadoop cluster works properly. The following Hadoop environments are supported:

Hive script:

CREATE TABLE IF NOT EXISTS hive_sale(
  create_time timestamp,
  category STRING,
  brand STRING,
  buyer_id STRING,
  trans_num BIGINT,
  trans_amount DOUBLE,
  click_cnt BIGINT
)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' lines terminated by '\n';

insert into hive_sale values
('2019-04-14','外套','品牌A','lilei',3,500.6,7),
('2019-04-15','生鲜','品牌B','lilei',1,303,8),
('2019-04-16','外套','品牌C','hanmeimei',2,510,2),
('2019-04-17','卫浴','品牌A','hanmeimei',1,442.5,1),
('2019-04-18','生鲜','品牌D','hanmeimei',2,234,3),
('2019-04-19','外套','品牌B','jimmy',9,2000,7),
('2019-04-20','生鲜','品牌A','jimmy',5,45.1,5),
('2019-04-21','外套','品牌E','jimmy',5,100.2,4),
('2019-04-22','生鲜','品牌G','peiqi',10,5560,7),
('2019-04-23','卫浴','品牌F','peiqi',1,445.6,2),
('2019-04-24','外套','品牌A','ray',3,777,3),
('2019-04-25','卫浴','品牌G','ray',3,122,3),
('2019-04-26','外套','品牌C','ray',1,62,7);

Log on to the Hadoop cluster, create a Hive SQL script, and run Hive commands to initialize the script.

hive -f hive_data.sql

In this article, we continue with HUE (Hadoop User Experience), an open-source web interface that makes many operations simpler and easier to complete.

We have been looking at various ways of making Big Data analytics more productive and efficient through better cluster management and easy-to-use interfaces. In this article, we continue to walk through HUE, or Hadoop User Experience, discussing several of its features and how you can make the most of the interface. In the previous article, Diving into Big Data: Hadoop User Experience, we looked at how to access Hue and the prerequisites for accessing Hadoop components through it. This article focuses on making file operations easier with Hue, using the editors, and creating and scheduling workflows.

Beyond creating directories and uploading files, which we covered in the previous article, there are further features to explore. The File Browser lets you perform more advanced file operations like the following:

Let's take a look at the above mentioned functions using File Browser.

Redis is now a major component used in many Big Data applications. Redis is a favorable alternative to traditional relational database services becaus.

We are already living in the era of Big Data. Big Data technology and products are ubiquitous in every aspect of our lives. From online banking to smart homes, Big Data has proven enormously useful across a wide range of use cases.

Redis, a high-performance key-value database, has become an essential element in Big Data applications. As a NoSQL database, Redis helps enterprises make sense of their data by making database scaling more convenient and cost-effective. Cloud providers across the globe, including Alibaba Cloud, now offer a wide variety of Redis-related products for Big Data applications, such as Alibaba Cloud ApsaraDB for Redis.

This article introduces two methods of combining Redis with other Big Data technologies, specifically Hadoop and ELK.

Prominent in the world of Big Data, Hadoop is a distributed computing platform. With its high availability, scalability, fault tolerance, and low cost, it has become a standard for Big Data systems. However, Hadoop's HDFS storage system is poorly suited to serving end-user applications directly (for example, using a user's browser history to recommend news articles or products). The common practice is therefore to send offline computing results to user-facing storage systems such as Redis and HBase.

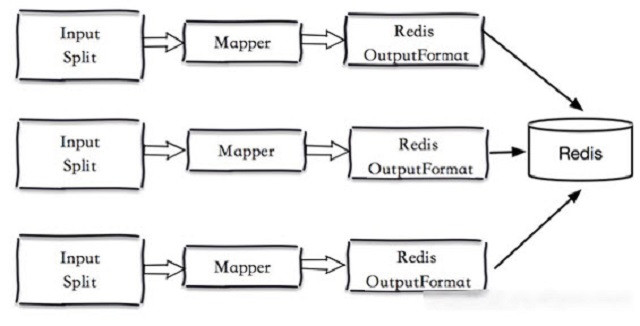

Even though it is not suitable for serving end users directly, Hadoop is versatile in that it supports custom OutputFormat implementations. If you need customized output, you extend OutputFormat with a Redis-specific implementation that maps each output record to a Redis write.

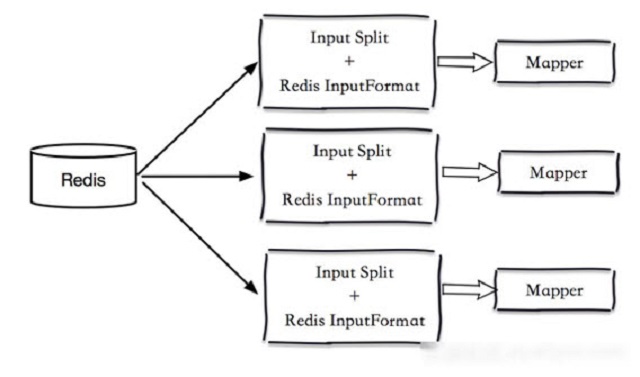
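The core of such a mapping is turning each (key, value) result record into a Redis command. The sketch below is not a Hadoop OutputFormat (that would be written in Java against the Hadoop API); it only illustrates the per-record encoding step, in Python, by emitting commands in the Redis wire protocol (RESP). The function names, the `rec:` key prefix, and the sample records are hypothetical.

```python
def resp_command(*parts):
    """Encode one Redis command as a RESP array of bulk strings."""
    out = [b"*%d\r\n" % len(parts)]
    for part in parts:
        data = part if isinstance(part, bytes) else str(part).encode("utf-8")
        # Bulk string lengths are counted in bytes, not characters.
        out.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(out)


def records_to_resp(records, key_prefix="rec:"):
    """Map (user_id, value) result records to one SET command each."""
    for user_id, value in records:
        yield resp_command("SET", f"{key_prefix}{user_id}", value)


if __name__ == "__main__":
    import sys

    # Hypothetical offline results: user id -> recommended item ids.
    results = [("lilei", "item1,item7"), ("jimmy", "item3")]
    for command in records_to_resp(results):
        sys.stdout.buffer.write(command)
```

Output in this format can be piped into `redis-cli --pipe` for bulk loading. A Java RecordWriter inside a custom OutputFormat would perform the same record-to-command mapping through a Redis client instead.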

There are also rarer situations where Redis is the input source rather than the output target; for these, Hadoop provides custom InputFormat support as well.

When you choose to use Redis, you can decide between the Master-Slave version and the cluster version according to the scale of your results.

This course is designed to help users who want to understand big data technology, as well as data processing through cloud products. Through learning, users can understand the advantages and architecture of E-MapReduce products, and be able to start using E-MapReduce, such as using offline analysis with Hadoop or Hive.

This course aims to help users who want to understand Big Data technology and data processing through cloud products. By the end, users will understand the concept of Big Data and what Hadoop is, which provides a firm foundation for later study of Big Data technology.

This course aims to help users who want to understand Big Data technology and data processing through cloud products. By the end, users will understand the storage mechanism of HDFS, advanced applications of HDFS, and the HDFS read and write process, and will grasp the ideas behind MapReduce and the task execution process of YARN, so as to make better use of Hadoop.

Through this course, you can understand what Big Data is, what Hadoop is, the basic structure of Hadoop, and how to prepare for building a Hadoop cluster on Alibaba Cloud.

Through this course, you will learn about the SSH protocol, the installation of Java and Hadoop on ECS, the construction of a Hadoop cluster, and the use of Hadoop.

This topic describes how to use the data synchronization feature of DataWorks to migrate data from Hadoop Distributed File System (HDFS) to MaxCompute. Data synchronization between MaxCompute and Hadoop or Spark is supported.

Before data migration, make sure that your Hadoop cluster works properly. You can use Alibaba Cloud E-MapReduce (EMR) to automatically create a Hadoop cluster. For more information, see Create a cluster.

The version information of EMR Hadoop is as follows:

The Hadoop cluster is deployed in a classic network in the China (Hangzhou) region. A public IP address and a private IP address are configured for the Elastic Compute Service (ECS) instance in the primary instance group. High Availability is set to No for the cluster.

This topic describes how to configure a Hadoop MapReduce job.

A project is created. For more information, see Manage projects.

Supports data migration and data synchronization between data engines such as relational databases, NoSQL, and OLAP.

Heterogeneous database migration has always been a pain point of database migration. Traditionally, it is difficult to assess compatibility and workload in a short time, and equally difficult to automatically produce a transformation scheme and sort out the relationships between the database and its applications. ADAM reduces the difficulty of heterogeneous database migration by providing compatibility assessment, structure transformation suggestions, migration workload evaluation, and application transformation assessment and suggestions.
