This tutorial discusses the preprocessing stage of the machine learning pipeline. It covers the ways in which data is handled, paying special attention to the techniques of standardization, normalization, feature selection and extraction, and data reduction. Last, it goes over how you can implement these techniques in a simple way.

This is part of a series of blog tutorials that discuss the basics of machine learning.

Data in the real world can be messy or incomplete, often riddled with errors. Finding insights or solving problems with such data can be extremely tedious and difficult. Therefore, it is important that data is converted into a format that is understandable before it can be processed. This is essentially what data preprocessing is. It is an important piece of the puzzle because it helps in removing various kinds of inconsistencies from the data.

Some ways that data can be handled during preprocessing include:

Generally speaking, standardization can be considered the process of implementing or developing standards based on a consensus. These standards help to maximize compatibility, repeatability, and quality, among other things. In machine learning, standardization serves as a means of putting a data metric on the same scale throughout the data, typically by rescaling each feature to a mean of 0 and a standard deviation of 1. This technique can be applied to various kinds of data, such as pixel values for images or, more specifically, medical data. Many machine learning algorithms, such as support-vector machines and k-nearest neighbors, are sensitive to the scale of their input features and therefore work best on standardized data.
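As a minimal sketch of the idea, the snippet below standardizes a single feature by subtracting its mean and dividing by its standard deviation. It assumes the common z-score definition and uses the population standard deviation; the function name `standardize` and the sample values are illustrative only and do not come from any particular library.

```python
from statistics import fmean, pstdev


def standardize(values):
    """Rescale a list of numbers to zero mean and unit standard deviation."""
    mean = fmean(values)
    std = pstdev(values)  # population standard deviation (an assumption)
    if std == 0:
        # A constant feature has no spread, so map every value to 0.0.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


# Hypothetical feature: heights in centimeters.
heights_cm = [150.0, 160.0, 170.0, 180.0, 190.0]
z_scores = standardize(heights_cm)
print(z_scores)
```

After this transformation, features that were measured in different units can be compared on the same scale.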

Normalization is the process of reducing unwanted variation either within or between arrays of data. Min-max scaling is one very common normalization technique used in data preprocessing. This approach bounds a data feature into a range, usually either between 0 and 1 or between -1 and 1. This helps to create a standardized range, which makes comparisons easier and more objective. Consider the following example: you need to compare temperatures from cities around the world. One issue is that temperatures recorded in NYC are in Fahrenheit, but those in Poland are listed in Celsius. At the most fundamental level, the normalization process takes the data from each city and converts the temperatures to the same unit of measure. Compared to standardization, min-max normalization produces smaller standard deviations because of the bounded range, which can dampen the influence of outliers on a model. Keep in mind, however, that the minimum and maximum values define the range, so a single extreme value can compress all other values into a narrow band.
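The temperature example can be sketched as follows. This is only an illustration under stated assumptions: the readings are made up, and the helper names `fahrenheit_to_celsius` and `min_max_scale` are hypothetical. The readings are first converted to a common unit and then min-max scaled into a target range.

```python
def fahrenheit_to_celsius(temp_f):
    """Convert a Fahrenheit reading to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Linearly map values so the smallest becomes new_min and the largest new_max."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no range to stretch.
        return [new_min for _ in values]
    scale = (new_max - new_min) / (hi - lo)
    return [new_min + (v - lo) * scale for v in values]


# Made-up readings: NYC in Fahrenheit, a Polish city in Celsius.
nyc_f = [32.0, 50.0, 86.0]
poland_c = [-5.0, 10.0, 25.0]

# Step 1: bring everything to the same unit.
all_c = [fahrenheit_to_celsius(t) for t in nyc_f] + poland_c

# Step 2: bound the combined feature to the range [0, 1].
scaled = min_max_scale(all_c)
print(scaled)
```

Passing `new_min=-1.0` and `new_max=1.0` gives the other commonly used range.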

Feature selection and extraction are techniques in which either important new features are built from the existing features (extraction) or the most relevant features are chosen from a pool of variables (selection).

The techniques of extraction and selection are quite different from each other. Extraction creates new features from other features, whereas selection returns a subset of the most relevant features from many features. The selection technique is generally used for scenarios where there are many features, whereas the extraction technique is used when there are few features. A very important use of feature selection is in text analysis, where there are many features but very few samples (these samples are the records themselves), and an important use of feature extraction is in image processing.
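To make the difference concrete, here is a small sketch with made-up data. Selection is illustrated with a simple variance threshold, which is just one of many possible selection criteria and is an assumption of this example. Extraction is illustrated by deriving a new feature (body mass index) from two existing ones. The function names and the dataset are hypothetical.

```python
from statistics import pvariance


def select_by_variance(rows, names, threshold=0.0):
    """Selection: keep only the columns whose variance exceeds the threshold."""
    columns = list(zip(*rows))
    keep = [i for i, col in enumerate(columns) if pvariance(col) > threshold]
    kept_names = [names[i] for i in keep]
    kept_rows = [[row[i] for i in keep] for row in rows]
    return kept_names, kept_rows


def extract_bmi(rows, height_idx, weight_idx):
    """Extraction: build a new feature from two existing features."""
    return [row[weight_idx] / (row[height_idx] / 100.0) ** 2 for row in rows]


names = ["height_cm", "weight_kg", "sensor_flag"]
rows = [
    [170.0, 65.0, 1.0],
    [180.0, 80.0, 1.0],
    [160.0, 50.0, 1.0],
]

# "sensor_flag" is constant, so it carries no information and is dropped.
kept_names, kept_rows = select_by_variance(rows, names)

# A new feature computed from height and weight.
bmi = extract_bmi(rows, height_idx=0, weight_idx=1)
print(kept_names, bmi)
```

Selection returns a subset of the original columns unchanged, while extraction produces values that did not exist in the original table.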

These techniques are mostly used because they offer the following benefits:

Developing a machine learning model on large amounts of data can be a computationally heavy and tedious task; therefore, data reduction is a crucial step in data preprocessing. The process of data reduction can also make data analysis and the mining of huge amounts of data easier and simpler. Data reduction is an important process no matter the machine learning algorithm.

Data reduction is sometimes thought to have a negative impact on the final results of machine learning algorithms, but this is simply not true. The process of data reduction does not remove data, but rather converts the data format from one type to another for better performance. The following are some ways in which data is reduced:

To learn more, you can refer to How to Use Preprocessing Techniques.

This article discusses how and where you can find public data to use in machine learning pipelines that you can then use in a variety of applications.

The goal of the article is to help you find a dataset from public data that you can use for your machine learning pipeline, whether it be for a machine learning demo, proof-of-concept, or research project. It may not always be possible to collect your own data, but by using public data, you can create machine learning pipelines that can be useful for a large number of applications.

Machine learning requires data. Without data you cannot be sure a machine learning model works. However, the data you need may not always be readily available.

Data may not have been collected or labeled yet, or may not be readily available for machine learning model development because of technological, budgetary, privacy, or security concerns. Especially in business contexts, stakeholders want to see how a machine learning system will work before investing the time and money in collecting, labeling, and moving data into such a system. This makes finding substitute data necessary.

This article sheds some light on how to find and use public data for various machine learning applications, such as machine learning demos, proofs-of-concept, or research projects. It specifically looks into where you can find data for almost any use case, problems with synthetic data, and the potential issues with using public data. In this article, the term "public data" refers to any data posted openly on the Internet and available for use by anyone who complies with the licensing terms of the data. This definition goes beyond the typical scope of "open data", which usually refers only to government-released data.

One solution to these data needs is to generate synthetic data, or fake data to use layman's terms. Sometimes this is safe. But synthetic data is usually inappropriate for machine learning use cases because most datasets are too complex to fake correctly. More to the point, using synthetic data can also lead to misunderstandings during the development phase about how your machine learning model will perform with the intended data as you move onwards.

In a professional context, using synthetic data is especially risky. If a model trained with synthetic data performs worse than a model trained with the intended data, stakeholders may dismiss your work even though the model would, in reality, have met their needs. If a model trained with synthetic data performs better than a model trained with the intended data, you create unrealistic expectations. Generally, you rarely know how the performance of your model will change when it is trained with a different dataset until you train it with that dataset.

Thus, using synthetic data creates a burden to communicate that any discussions of model performance are purely speculative. Model performance on substitute data is speculative as well, of course, but a model trained on a well-chosen substitute dataset will perform more like the actual model trained on the intended data than a model trained on synthetic data will.

If you feel you understand the intended data well enough to generate an essentially perfect synthetic dataset, then it is pointless to use machine learning, since you can already predict the outcomes. That is, the data you use for training should be random and used to see what the possible outcomes of this data are, not to confirm what you already clearly know.

It is sometimes necessary to use synthetic data. It can be useful to use synthetic data in the following scenarios:

Be cautious when algorithmically generating data to demonstrate model training on large datasets. Many machine learning algorithms made for training models on large datasets are considerably optimized. These algorithms may detect simplistic (or overly noisy) data and train much faster than on real data.

Methodologies for generating synthetic data at large scale are beyond the scope of this guide. Google has released code to generate large-scale datasets. This code is well-suited for use cases like benchmarking the performance of schema-specific queries or data processing jobs. If the process you are testing is agnostic to the actual data values, it is usually safe to test with synthetic data.

This post features a basic introduction to machine learning (ML). You don’t need any prior knowledge about ML to get the best out of this article. Before getting started, let’s address this question: "Is ML so important that I really need to read this post?"

After introducing Machine Learning and discussing the various techniques used to deliver its capabilities, let’s move on to its applications in related fields: big data, artificial intelligence (AI), and deep thinking.

Before 2010, Machine Learning applications played a significant role in specific fields, such as license plate recognition, cyber-attack prevention, and handwritten character recognition. After 2010, a significant number of Machine Learning applications were coupled with big data, which then provided the optimal environment for Machine Learning applications.

The magic of big data mainly revolves around how big data can make highly accurate predictions. For example, Google used big data to predict the outbreak of H1N1 in specific U.S. cities. For the 2014 World Cup, Baidu accurately predicted match results, from the elimination round to the final game. This is amazing, but what gives big data such power?

At the heart of big data is its ability to extract value from data, and Machine Learning is a key technology that makes this possible. For Machine Learning, more data enables more accurate models. At the same time, the computing time needed by complex algorithms requires distributed computing, in-memory computing, and other technologies. Therefore, the rise of Machine Learning is inextricably intertwined with big data.

However, big data is not the same as Machine Learning. Big data includes distributed computing, memory database, multi-dimensional analysis, and other

A census is an official survey of a population that records the details of individuals in various aspects. Through census data, we can measure the correlation of certain characteristics of the population, such as the impact of education on income level. This assessment can be made based on other attributes such as age, geographical location, and gender. In this article, we will show you how to set up the Alibaba Cloud Machine Learning Platform for AI product to perform a similar experiment using census data.

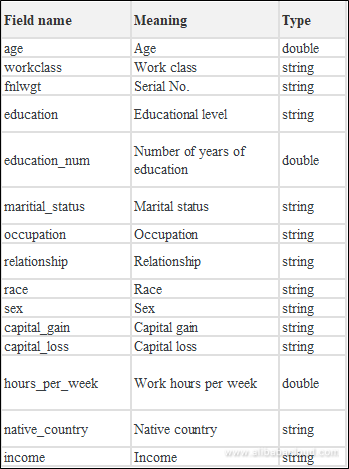

Data source: The UCI open-source dataset Adult is a census result for a certain region in the United States, with a total of 32,561 instances. The detailed fields are as follows:

Upload the data to MaxCompute via the machine learning IDE or the command-line tool Tunnel. Read the data through the Read Table component (Data source - Demographics in the figure). Then right-click the component to view the data, as shown below.

Through the full table statistics and numerical distribution statistics (data view and histogram component in the experiment), it can be determined whether a piece of data conforms to the Poisson distribution or the Gaussian distribution, and whether it is continuous or discrete.
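Outside of the platform's visual components, the same kind of check can be sketched in a few lines of code. The example below is only an illustration with made-up ages: it counts how many values fall into equal-width bins, which is the information a histogram displays, and tests whether a column contains only whole numbers as a rough hint that it is discrete. The function names are hypothetical and do not correspond to any platform component.

```python
def histogram(values, bins):
    """Count how many values fall into each of `bins` equal-width intervals."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant data
    counts = [0] * bins
    for v in values:
        # The maximum value is placed in the last bin.
        idx = min(int((v - lo) / width), bins - 1)
        counts[idx] += 1
    return counts


def looks_discrete(values):
    """Rough hint: a column of whole numbers is likely discrete."""
    return all(float(v).is_integer() for v in values)


# Made-up sample of an "age" column.
ages = [25, 38, 28, 44, 18, 34, 29, 63, 24, 55]
age_counts = histogram(ages, bins=3)
print(age_counts, looks_discrete(ages))
```

The shape of the bin counts (for example, symmetric around the center versus skewed toward small values) gives a first impression of the distribution before any formal test is applied.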

Each component of Alibaba Cloud Machine Learning provides result visualization. The figure below is the output of the histogram component of the numerical statistics, in which the distribution of each input record can be clearly seen.

This course is the second class in the Alibaba Cloud Machine Learning Algorithm QuickStart series. It mainly introduces the basic concepts of Bayesian probability and the principles of the Naive Bayes classifier method, as well as the evaluation metrics for a Naive Bayes classifier model. It explains and demonstrates the complete process of building a Naive Bayes classifier model with PAI, and prepares you for the knowledge covered in subsequent machine learning courses.

Heart disease causes 33% of deaths in the world. Data mining has become extremely important for heart disease prediction and treatment. It uses the relevant health exam indicators and analyzes their influences on heart disease. This session introduces how to use Alibaba Cloud Machine Learning Platform For AI to create a heart disease prediction model based on the data collected from heart disease patients.

How to use Alibaba Cloud advanced machine learning platform for AI (PAI) to quickly apply the linear regression model in machine learning to properly solve business-related prediction problems.

This topic describes how to use the NGC-based Real-time Acceleration Platform for Integrated Data Science (RAPIDS) libraries that are installed on a GPU instance to accelerate tasks for data science and machine learning as well as improve the efficiency of computing resources.

RAPIDS is an open-source suite of data processing and machine learning libraries developed by NVIDIA that enables GPU-acceleration for data science workflows and machine learning. For more information about RAPIDS, visit the RAPIDS website.

NVIDIA GPU Cloud (NGC) is a deep learning ecosystem developed by NVIDIA to provide developers with free access to deep learning and machine learning software stacks to build corresponding development environments. The NGC website provides RAPIDS Docker images, which come pre-installed with development environments.

JupyterLab is an interactive development environment that makes it easy to browse, edit, and run code files on your servers.

Dask is a lightweight big data framework that can improve the efficiency of parallel computing.

This topic provides code that is modified based on the NVIDIA RAPIDS Demo code and dataset and demonstrates how to use RAPIDS to accelerate an end-to-end task from the Extract-Transform-Load (ETL) phase to the Machine Learning (ML) Training phase on a GPU instance. The RAPIDS cuDF library is used in the ETL phase whereas the XGBoost model is used in the ML Training phase. The example code is based on the Dask framework and runs on a single machine.

Sampling data is generated in the weighted mode. The weight column must be of the Double or Int type. Sampling is performed based on the value of the weight column. For example, data with a col value of 1.2 has a higher probability of being sampled than data with a col value of 1.0.
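The effect of a weight column can be illustrated with a small sketch. This is not the component's implementation: it assumes sampling with replacement and uses Python's standard `random.choices`, and the rows, the `weighted_sample` helper, and the seed are hypothetical.

```python
import random


def weighted_sample(rows, weight_key, k, seed=None):
    """Draw k rows with replacement, with probability proportional to the weight column."""
    rng = random.Random(seed)
    weights = [float(row[weight_key]) for row in rows]
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    return rng.choices(rows, weights=weights, k=k)


# Two made-up rows whose weight column is named "col", as in the example above.
rows = [{"id": "a", "col": 1.0}, {"id": "b", "col": 1.2}]

sample = weighted_sample(rows, "col", k=20000, seed=42)
count_a = sum(1 for row in sample if row["id"] == "a")
count_b = len(sample) - count_a
print(count_a, count_b)
```

Over many draws, the row with the weight 1.2 appears more often than the row with the weight 1.0, in roughly a 1.2 to 1.0 ratio.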
