By Si Luo.

This article brings you the highlights of Alibaba CIO Academy's 9th public live broadcast on fighting the pandemic with technology, entitled "The Crown Jewel of Artificial Intelligence: DAMO Academy's Language Technology." The guest speaker in this live broadcast was Si Luo, the head of Alibaba DAMO Academy's Language Technology Laboratory, a distinguished member of the ACM, and a senior researcher at Alibaba. He gave a lecture entitled "Building a Language Bridge for Business" and introduced the current development and trends of natural language research and DAMO Academy's achievements in natural language intelligence.

What is natural language intelligence? Natural language intelligence research aims to achieve effective communication between people and computers through language. It is a science that integrates linguistics, psychology, computer science, mathematics, and statistics and spans a wide range of disciplines, including the analysis, extraction, comprehension, transformation, and generation of natural languages and formal languages. Currently, artificial intelligence (AI) is undoubtedly one of the most popular areas of research. Si Luo divided the development of artificial intelligence into four stages: computational intelligence, perceptual intelligence, cognitive intelligence, and creative intelligence.

Figure 1 - Stages of Artificial Intelligence Development

Computational intelligence is the basic stage of AI. It refers to the ability to use the massive computing and storage capabilities of computers or machines to complete tasks that are beyond human capabilities. Google's AlphaGo falls into this category. The next stage is perceptual intelligence, which can pinpoint target information in large volumes of unstructured information, such as finding people's names, places, and organizations in news articles. Going a step further, cognitive intelligence attempts to identify the interrelationships among the important pieces of information identified by perceptual intelligence and then reason about them, for example, by determining the occurrence, development, climax, and end of important events from a large number of news reports. Another example of cognitive intelligence is the use of computer vision to track a person's trajectory and activities at different times and in different scenarios. Building on these stages, the most advanced stage is creative intelligence, which can generate new, complex ideas that are meaningful and logically sound. As of 2020, computers cannot write novels with a high degree of internal consistency. Only when computers can conduct very complicated scientific work, such as mathematical reasoning or physics research, can they be said to have creative intelligence. Perceptual intelligence, cognitive intelligence, and creative intelligence all involve extensive semantic comprehension and common-sense reasoning. Natural language processing is therefore essential to realizing the advanced stages of artificial intelligence, and it is also a very challenging undertaking.

In 2019, important progress was made in natural language intelligence research. Breakthroughs in deep language modeling drove advances in key natural language technologies. Public cloud NLP (natural language processing) technology shifted from generic functions to customized services. Natural language technology was increasingly integrated into industries and scenarios, where it is creating greater value. In 2020, we expect natural language intelligence to continue to make progress in these areas. As a technology-driven company, Alibaba naturally strives to be a pioneer in natural language intelligence. This is why DAMO Academy set up its Language Technology Laboratory, which focuses on natural language intelligence.

Since it was founded two years ago, Alibaba DAMO Academy has continuously developed and improved its technology ecosystem. It has achieved excellent results in various domestic and international technical evaluations, including taking first place in the ACM CIKM Cup International Competition for Personalized E-Commerce Search in 2016, first place in the English Entity Classification Competition for Information Extraction held by the U.S. National Institute of Standards and Technology in 2017, first place at the 2019 Semantic Evaluation (SemEval) in four categories (including RumorEval and Toponym Resolution), and first place on the renowned GLUE language model benchmark in March 2020. Alibaba DAMO Academy participated in these evaluations to assess, verify, and improve its technologies. This allows us to apply our research results to the natural language technology platform and empower Alibaba's internal applications and partners to create greater business value.

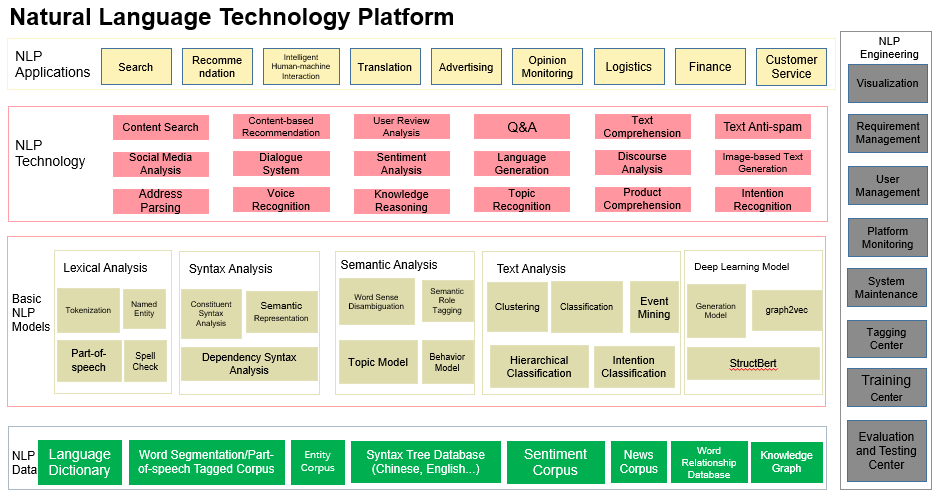

Relying on its technical strength, DAMO Academy has built a natural language technology platform as shown in Figure 2. The bottom layer of the platform is the data layer that collects massive volumes of data, including language dictionaries, word segmentation/part-of-speech tagging corpora, entity corpora, sentiment corpora, and more. Built on top of the data layer is the complete set of basic NLP algorithms, including basic lexical analysis, syntax analysis, semantic analysis, text analysis, and deep modeling. The vertical NLP technologies above the basic algorithms include search, content recommendation, sentiment analysis, and product comprehension. Finally, the NLP vertical technologies are combined to support complex NLP applications, such as search, recommendation, translation, and advertising.

Figure 2 - DAMO Academy Language Lab's Natural Language Technology Platform

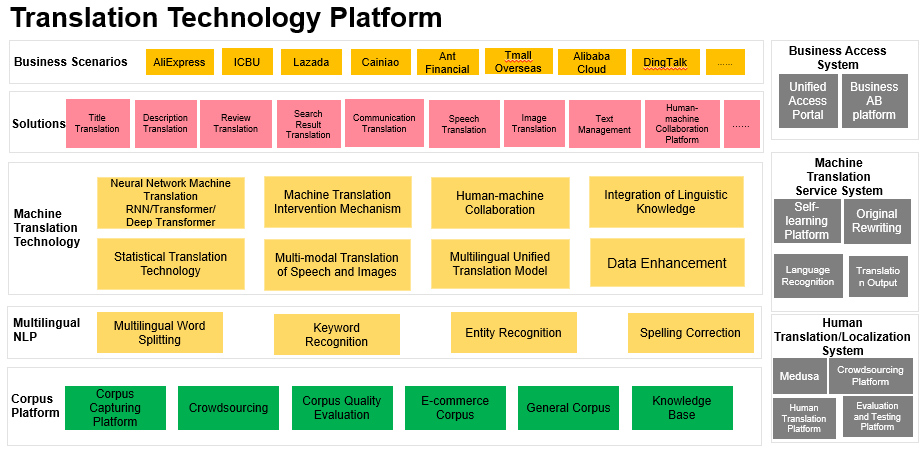

One very important branch of natural language processing is translation technology. This is used to eliminate the language barrier in business exchange and communication. The corpus platform is an important part of translation technology and covers corpus crawling, crowdsourcing, corpus quality evaluation, and other such functions. Above the corpus platform, there is the multi-language NLP technology that encompasses multi-language word segmentation, keyword recognition, entity recognition, and spelling error correction. Built on top of the multi-language NLP technology, machine translation technology mainly includes statistical translation technology, a multi-language unified translation model, and neural network machine translation. With the support of machine translation, translation technology can support various cross-language solutions and be applied in different business scenarios, such as title translation in e-commerce, product description translation, and review translation. Translation technology also plays a prominent role in promoting the internationalization of many Alibaba businesses, including AliExpress, ICBU, Cainiao, and Alibaba Cloud.

Figure 3 - Translation Technology Platform

Alibaba's machine translation technology underpins a wide range of international application scenarios within Alibaba. It supports more than 70 businesses and over 170 application scenarios and is called 1 billion times per day. While accumulating a large amount of corpus data, the technology supports many important tasks through its core neural network machine translation engine and has generated hundreds of millions of dollars in business value. Machine translation is not intended to replace manual translation. In many cases, a combined man-machine solution is used to improve efficiency and complete work faster. Developed out of a massive number of application scenarios, this technology can support more scenarios in a globalized world and create greater value by breaking down language barriers.

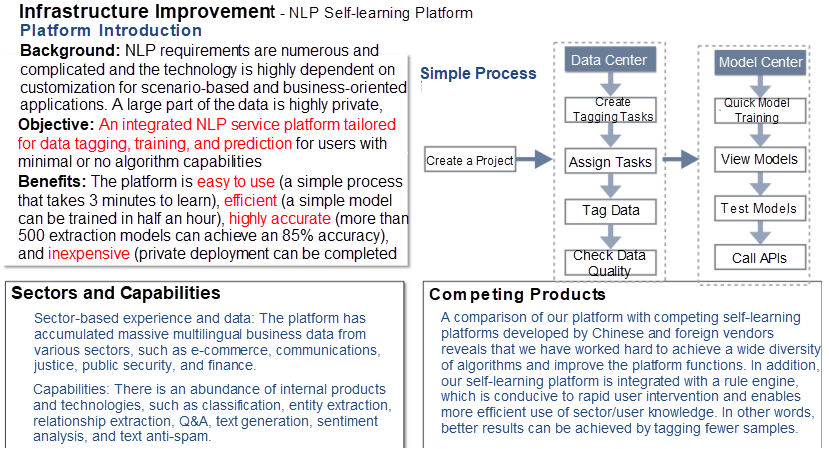

The natural language technology platform and translation technology platform described above are both general-purpose NLP platforms that support their corresponding work with generic models. While working to empower our partners, however, Alibaba has found that the needs of companies are usually diverse and highly dependent on customization for specific scenarios and industries. Most of their data is highly private, and they lack platform-based solutions. Many of Alibaba's partners need to use algorithms but cannot develop them themselves. Therefore, we must provide an integrated platform for NLP data tagging, model training, and prediction services, tailored for partners with little experience in algorithm design. To meet the needs of real-world scenarios, the language lab designed the NLP self-learning platform. The platform starts with data tagging and provides a relatively complete data center to help perform tagging and data quality control. Users can use the NLP self-learning platform to train models closely aligned with specific industry scenarios, empower their businesses, and create business value. The NLP self-learning platform is convenient and easy to use, combining low cost with high accuracy, and it allows partners to benefit commercially from NLP technology without any in-house algorithm expertise. This is why it is so important to make a basic NLP platform accessible, and it is also why many vendors are offering similar NLP platforms to accelerate business empowerment.

Figure 4 - NLP Self-Learning Platform

Currently, the atomic capabilities of the academy's NLP self-learning platform have evolved from text extraction and text classification functions to more advanced short text matching and relation extraction functions, and other complex atomic functions. We also support scenario-based applications, including sentiment analysis and product review analysis in the e-commerce sector, and are testing intricate applications, such as contract review.

With the text extraction function, we can extract information from plain text and retrieve important elements. For instance, we can train models to automatically extract the title of a contract, the names of party A and party B, the recipient's bank and account number, and other key information from a contract. Our solution for resumes extracts key information such as the applicant's name, school, major, and past work experience. Text classification is a very important feature of natural language technology, and many important applications can be regarded as special types of text classification. For instance, short text classification recognizes short text strings and classifies them into categories such as pornography, advertising, abuse, and politics. Another example is classifying user reviews to determine whether they are positive or negative. Relation extraction and short text matching are two examples of more complex atomic functions. Through relation extraction, we can identify important entities, extract the relationships between them, and determine the connections among multiple entities. Short text matching can be applied in many scenarios. For example, in a credit card replacement service, an application built on the NLP self-learning platform can match users' questions to determine their true intentions and business needs.
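To make short text matching concrete, here is a minimal sketch that maps a user's question to the closest of several standard questions. It assumes that cosine similarity over character bigrams is an acceptable stand-in for the trained matching model a platform would actually use; the intent names and the `STANDARD_QUESTIONS` table are hypothetical.

```python
import math
from collections import Counter


def char_bigrams(text: str) -> Counter:
    """Count character bigrams after lowercasing and removing whitespace."""
    s = "".join(text.lower().split())
    return Counter(s[i:i + 2] for i in range(len(s) - 1))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def match(query: str, candidates: dict) -> tuple:
    """Return (intent, score) for the standard question most similar to the query."""
    q = char_bigrams(query)
    scores = {intent: cosine(q, char_bigrams(text)) for intent, text in candidates.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Hypothetical intents and standard questions for a credit card service.
STANDARD_QUESTIONS = {
    "replace_lost_card": "My credit card was lost, how do I get a replacement?",
    "replace_damaged_card": "My credit card is damaged and needs to be replaced.",
    "replacement_status": "Where is my replacement card and when will it arrive?",
}

intent, score = match("I lost my credit card, can I get a new one?", STANDARD_QUESTIONS)
print(intent, round(score, 2))
```

Character bigrams are used here only because they need no tokenizer and work the same way on Chinese text; a production matcher would learn semantic similarity instead of relying on surface overlap.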

For the product review scenario, the language laboratory has trained many models based on the tagged data from Alibaba's internal platforms and achieved great results. By creating models tailored for different industries and conducting multi-dimensional analysis on product review text, DAMO Academy's NLP self-learning platform now supports a product review parsing model that can categorize user reviews.
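A classical baseline for categorizing reviews is multinomial Naive Bayes. The sketch below is a generic illustration, not the model behind the product review parsing feature: it assumes whitespace tokenization, add-one smoothing, and a tiny hypothetical set of tagged reviews.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Multinomial Naive Bayes with add-alpha (Laplace) smoothing."""

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha
        self.class_counts = Counter()            # documents per label
        self.word_counts = defaultdict(Counter)  # token frequencies per label
        self.vocab = set()

    def fit(self, docs, labels):
        for doc, label in zip(docs, labels):
            tokens = doc.lower().split()
            self.class_counts[label] += 1
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def log_score(self, tokens, label):
        """log P(label) + sum of log P(token | label), smoothed over the vocabulary."""
        total_docs = sum(self.class_counts.values())
        total_words = sum(self.word_counts[label].values())
        denom = total_words + self.alpha * len(self.vocab)
        score = math.log(self.class_counts[label] / total_docs)
        for t in tokens:
            score += math.log((self.word_counts[label][t] + self.alpha) / denom)
        return score

    def predict(self, doc):
        tokens = doc.lower().split()
        return max(self.class_counts, key=lambda label: self.log_score(tokens, label))


# Hypothetical tagged reviews.
reviews = [
    "great quality fast shipping",
    "love it works great",
    "terrible quality broke fast",
    "bad product waste of money",
]
labels = ["positive", "positive", "negative", "negative"]
model = NaiveBayes().fit(reviews, labels)
print(model.predict("works great"), model.predict("terrible waste"))
```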

With the technology platform in place, it is very important to construct foundational language models. Language models describe the representation, sequence, structure, meaning, and generation process of natural languages and explain how to perform language-related application tasks. Traditionally, language models are constructed in two ways. The first approach is the linguistic method, which relies on linguistic grammar (context dependency) to describe the representation and meaning of formal languages. This method can effectively explain how the grammar of a language comes into being. However, languages develop dynamically, and new expressions, new grammar, and new meanings are constantly emerging, so a strict rule-based method is subject to great limitations. The other approach is the data-driven method, which uses statistical learning or deep learning on large corpora and related application tasks to learn the representation and structure of a language. Because it learns continuously from changing language data and massive corpora, this method can be applied to a broad range of scenarios and applications. Si Luo believes that models integrating linguistic knowledge, general knowledge, domain-specific knowledge, and massive multi-modal data represent the future of natural language intelligence. Today, data-driven language models, including statistical language models and neural network language models, are the main focus of model development.

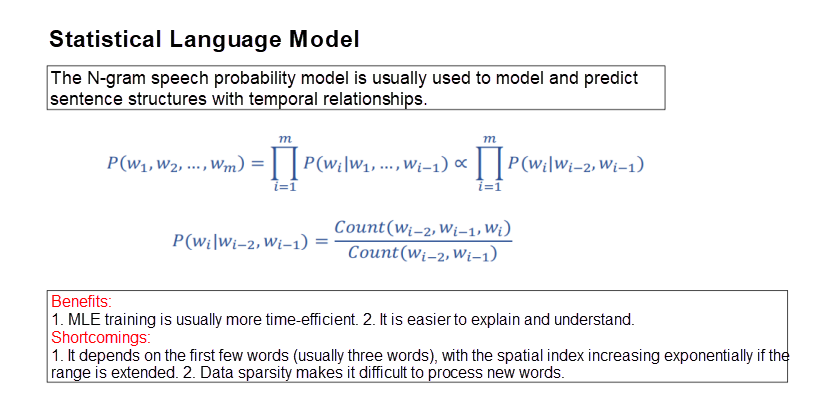

Statistical language models are built on the N-gram probability model, which predicts each word in a sequence from the N-1 words that precede it. The model is trained using maximum likelihood estimation (MLE), an approach that is efficient and easy to explain and understand. The downside is that the model can only use a short, fixed window of preceding words as context. If the window is extended, the parameter space grows exponentially with N, resulting in data sparsity, and the model has difficulty handling new words that never appeared in the training data.

Figure 5 - Statistical Language Model
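For a bigram model (N = 2), the MLE estimate is P(w_i | w_{i-1}) = count(w_{i-1} w_i) / count(w_{i-1}), and a sentence's probability is the product of these conditional probabilities. With a vocabulary of size |V|, an N-gram model has on the order of |V|^N parameters, which is why longer windows become sparse. The minimal sketch below assumes whitespace tokens and `<s>`/`</s>` sentence-boundary markers, and it deliberately applies no smoothing, so unseen bigrams and unseen words get probability zero.

```python
from collections import Counter

BOS, EOS = "<s>", "</s>"


def train_bigram(sentences):
    """Collect context and bigram counts for MLE estimation."""
    context_counts, bigram_counts = Counter(), Counter()
    for sentence in sentences:
        tokens = [BOS] + sentence.split() + [EOS]
        context_counts.update(tokens[:-1])  # every token that has a successor
        bigram_counts.update(zip(tokens[:-1], tokens[1:]))
    return context_counts, bigram_counts


def bigram_prob(model, prev, word):
    """MLE estimate P(word | prev) = count(prev, word) / count(prev); unsmoothed."""
    context_counts, bigram_counts = model
    if context_counts[prev] == 0:
        return 0.0
    return bigram_counts[(prev, word)] / context_counts[prev]


def sentence_prob(model, sentence):
    """Product of bigram probabilities over the sentence, including boundary markers."""
    tokens = [BOS] + sentence.split() + [EOS]
    p = 1.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        p *= bigram_prob(model, prev, word)
    return p


model = train_bigram(["the cat sat", "the dog sat", "the cat ran"])
print(bigram_prob(model, "the", "cat"))     # 2/3
print(sentence_prob(model, "the cat sat"))  # 1 * 2/3 * 1/2 * 1
print(sentence_prob(model, "the dog ran"))  # 0.0: the bigram "dog ran" was never seen
```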

To solve the problems inherent in statistical language models, the academic community proposed neural language models and representation learning. In this approach, each semantic unit (such as a word) is assigned a vector (such as a word vector) in a continuous space, and this distributed representation is learned by a neural network. Based on these vector representations, natural language applications such as computation and classification can be performed. A typical example is the Word2vec model, in which each word is represented by a multi-dimensional vector. Word2vec either uses the vectors of the surrounding words to predict the center word (the CBOW variant) or uses the center word to predict its surrounding words (the skip-gram variant). Because the model learns vector representations with a single hidden layer, it has inherent disadvantages. For example, the representation of each word is fixed and does not change with the context. The model is simple but has limited representational power, and it is only loosely coupled with downstream tasks, particularly tasks that have supervised corpora.
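The following pure-Python sketch trains a tiny skip-gram model with a full softmax. The original Word2vec work uses approximations such as negative sampling or hierarchical softmax for large vocabularies; the corpus, vector size, window, and learning rate here are arbitrary assumptions. Note that the result is a single lookup table: each word gets one vector no matter which sentence it appears in, which is the fixed-representation limitation described above.

```python
import math
import random


def train_skipgram(corpus, dim=8, window=1, epochs=200, lr=0.05, seed=0):
    """Train skip-gram embeddings with a full softmax; returns (embeddings, per-epoch losses)."""
    rng = random.Random(seed)
    vocab = sorted({w for s in corpus for w in s.split()})
    index = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)
    # W_in holds the center-word vectors kept as embeddings; W_out scores context words.
    W_in = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(V)]
    W_out = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(V)]

    # (center, context) training pairs from a symmetric window.
    pairs = []
    for s in corpus:
        toks = s.split()
        for i, center in enumerate(toks):
            for j in range(max(0, i - window), min(len(toks), i + window + 1)):
                if j != i:
                    pairs.append((index[center], index[toks[j]]))

    losses = []
    for _ in range(epochs):
        total = 0.0
        for c, o in pairs:
            h = W_in[c]
            scores = [sum(h[k] * W_out[v][k] for k in range(dim)) for v in range(V)]
            m = max(scores)
            exps = [math.exp(x - m) for x in scores]  # numerically stable softmax
            z = sum(exps)
            probs = [e / z for e in exps]
            total -= math.log(probs[o])  # cross-entropy of the true context word
            # d(loss)/d(score_v) = probs[v] - 1[v == o]
            grad_h = [0.0] * dim
            for v in range(V):
                g = probs[v] - (1.0 if v == o else 0.0)
                for k in range(dim):
                    grad_h[k] += g * W_out[v][k]  # uses W_out before its update
                    W_out[v][k] -= lr * g * h[k]
            for k in range(dim):
                h[k] -= lr * grad_h[k]  # h aliases W_in[c], so this updates the embedding
        losses.append(total / len(pairs))
    return {w: W_in[index[w]] for w in vocab}, losses


corpus = ["the king rules the land", "the queen rules the land",
          "a dog chases a cat", "a cat chases a mouse"]
embeddings, losses = train_skipgram(corpus)
print(round(losses[0], 3), round(losses[-1], 3))
```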

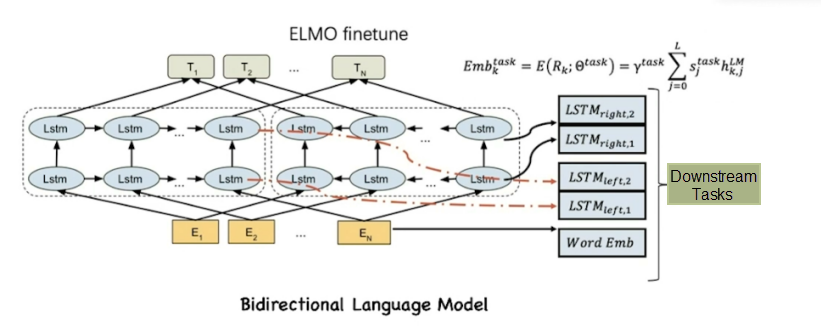

To address these limitations of Word2vec, researchers proposed deep language models, which use deep neural networks to learn language representations that adapt to the context. One well-known deep language model is ELMo, which uses bidirectional LSTM networks to model the context on the left and right of a word. ELMo has proven to be a very effective model. Figure 6 shows its structure.

Figure 6 - ELMo Model

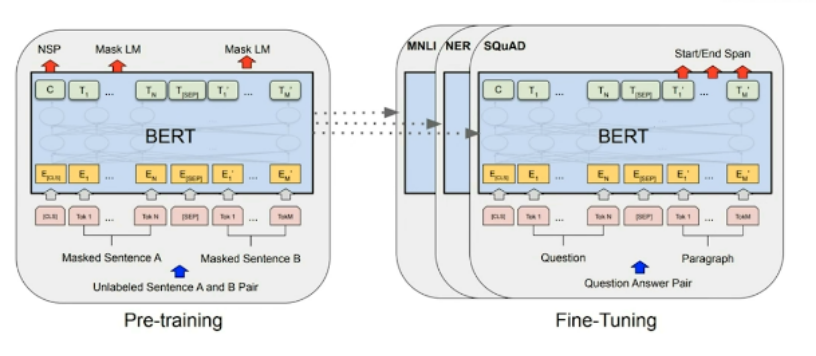
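In the original ELMo work, a downstream task does not use only the top LSTM layer. For each token, it combines all layers of the pretrained bidirectional language model (biLM) as gamma * sum_j s_j * h_j, where the s_j are softmax-normalized weights and gamma is a scalar, all learned for the task. The helper below sketches only that combination step; it assumes the per-layer vectors have already been computed by a pretrained biLM, which is not shown.

```python
import math


def scalar_mix(layer_vectors, weights, gamma=1.0):
    """ELMo-style combination of one token's layer vectors: gamma * sum_j softmax(w)_j * h_j.

    layer_vectors: equal-length vectors, one per biLM layer (token layer plus each
    LSTM layer), assumed to come from a pretrained model. weights and gamma are the
    task-specific scalars that would be learned during downstream training.
    """
    if len(layer_vectors) != len(weights):
        raise ValueError("need exactly one weight per layer")
    m = max(weights)
    exps = [math.exp(w - m) for w in weights]  # numerically stable softmax
    z = sum(exps)
    s = [e / z for e in exps]
    dim = len(layer_vectors[0])
    return [gamma * sum(s_j * h[k] for s_j, h in zip(s, layer_vectors)) for k in range(dim)]


layers = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # toy vectors: token layer + 2 LSTM layers
print(scalar_mix(layers, [0.0, 0.0, 0.0]))        # equal weights: the layer average
print(scalar_mix(layers, [-10.0, -10.0, 10.0]))   # almost all weight on the top layer
```

Because the biLM itself stays fixed and only these few scalars are tuned, ELMo is typically used as a feature extractor for a separate task model, which is one reason it is less tightly coupled to downstream tasks than BERT.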

Another popular model is BERT, which Google proposed in 2018. Using BERT involves two phases: pre-training and fine-tuning. In the pre-training phase, BERT is trained without manual labels on the relationships between words and sentences, learning a contextual semantic vector for each word in each sentence. The fine-tuning phase combines the model with downstream tasks: the supervised objectives of a downstream task are used as the optimization goals, and back-propagation through the BERT model updates its semantic vector representations. In both phases, BERT uses a deep Transformer architecture.

Figure 7 - BERT Model
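BERT's unsupervised pre-training relies mainly on a masked language modeling objective, alongside next sentence prediction: about 15% of the input tokens are selected, and of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% left unchanged, and the model must predict the original tokens. The sketch below shows only this input-corruption step. It simplifies by selecting each token independently with probability `mask_prob` and by working on whole words rather than WordPiece subwords; the vocabulary is hypothetical.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """BERT-style input corruption for masked language modeling.

    Returns (inputs, labels): labels[i] is the original token at each selected
    position (the prediction target) and None elsewhere.
    """
    rng = random.Random(seed)
    inputs, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue                         # not selected: no prediction target
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK                 # 80%: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)    # 10%: replace with a random token
        # remaining 10%: keep the original token
    return inputs, labels


vocab = ["the", "cat", "sat", "on", "mat", "dog"]  # hypothetical vocabulary
tokens = "the cat sat on the mat".split()
inputs, labels = mask_tokens(tokens, vocab, seed=1)
print(inputs)
print(labels)
```

Keeping some selected tokens unchanged or randomized means the model cannot assume that only `[MASK]` positions matter, which helps because `[MASK]` never appears during fine-tuning.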

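A simple comparison between BERT and ELMo reveals that both use deep neural networks to learn context-adaptive language representations and improve model performance by combining them with downstream tasks. However, the two models also differ: ELMo is built on LSTM networks, whereas BERT uses the Transformer architecture, and while ELMo's representations are usually fed into a separate task model as features, BERT is fine-tuned end to end together with the downstream task.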

Taking the characteristics of both models into consideration, if the downstream task has a relatively large supervised corpus, BERT generally yields better results than ELMo because it is more tightly coupled with the downstream task and is optimized jointly with it.

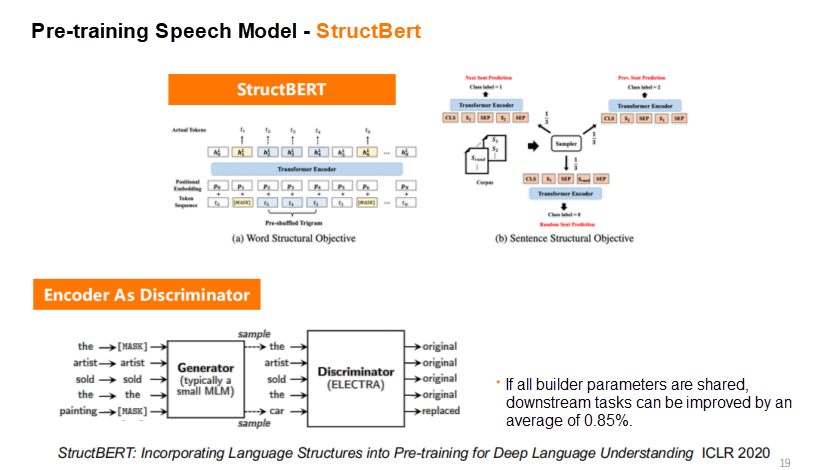

Since its debut, BERT has built a large user base and been used in a wide range of applications, and many organizations have developed improved versions of it. The language laboratory has also done a lot of work in this area and, in particular, proposed the StructBERT model, which builds on BERT and makes deeper use of the structural relationships between words and between sentences. For example, StructBERT uses the positional relationships between words to model how words relate to one another. By exploiting these word-level and sentence-level structures, StructBERT trains word vector representations more effectively. The model also uses a generative model and a discriminator model to further improve the word vector representations. At the time of writing, StructBERT ranked first on the General Language Understanding Evaluation (GLUE) benchmark.

Figure 8 - StructBERT Model
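As we understand the public StructBERT paper, one way it exploits word order is a word structural objective: the tokens inside a proportion of trigrams are shuffled, and the model must reconstruct the original order. The sketch below only builds such training examples and is not the lab's implementation. For simplicity it assumes non-overlapping trigrams aligned to multiples of three, and the `proportion` values are illustrative rather than documented hyperparameters.

```python
import random


def shuffle_trigrams(tokens, proportion=0.05, seed=None):
    """Build a word-structural training example by shuffling tokens inside trigrams.

    Simplification: only non-overlapping trigrams starting at multiples of 3 are
    considered. Returns (corrupted, targets), where targets maps every position in a
    shuffled trigram to the original token the model should reconstruct.
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    targets = {}
    n_spans = len(tokens) // 3
    k = min(n_spans, max(1, round(n_spans * proportion))) if n_spans else 0
    for span in rng.sample(range(n_spans), k):
        start = span * 3
        window = corrupted[start:start + 3]
        rng.shuffle(window)  # may occasionally reproduce the original order
        corrupted[start:start + 3] = window
        for i in range(start, start + 3):
            targets[i] = tokens[i]
    return corrupted, targets


tokens = "the quick brown fox jumps over the lazy dog".split()
corrupted, targets = shuffle_trigrams(tokens, proportion=0.34, seed=7)
print(corrupted)
print(targets)
```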

Focusing on the StructBERT model, Alibaba has made some progress in developing various natural language technologies. Here are some examples.

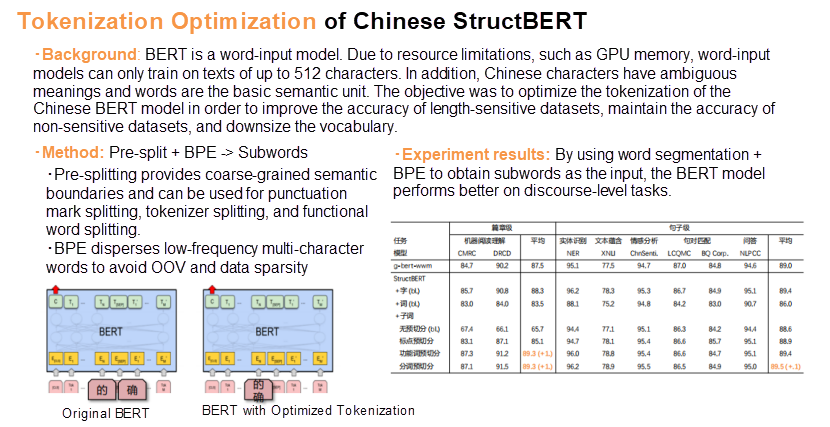

1. Tokenization Optimization of the Chinese StructBERT Model

Figure 9 - Tokenization Optimization of Chinese StructBERT

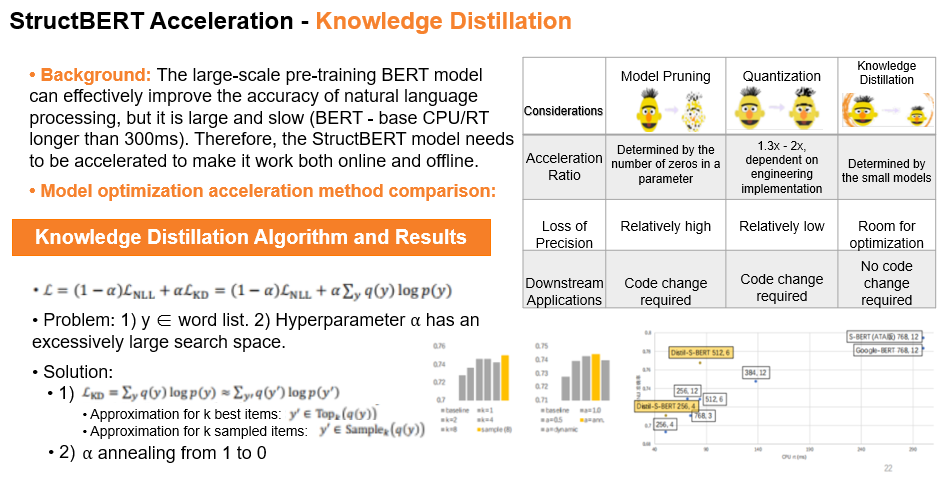

2. StructBERT Acceleration - Knowledge Distillation

Figure 10 - StructBERT Acceleration - Knowledge Distillation

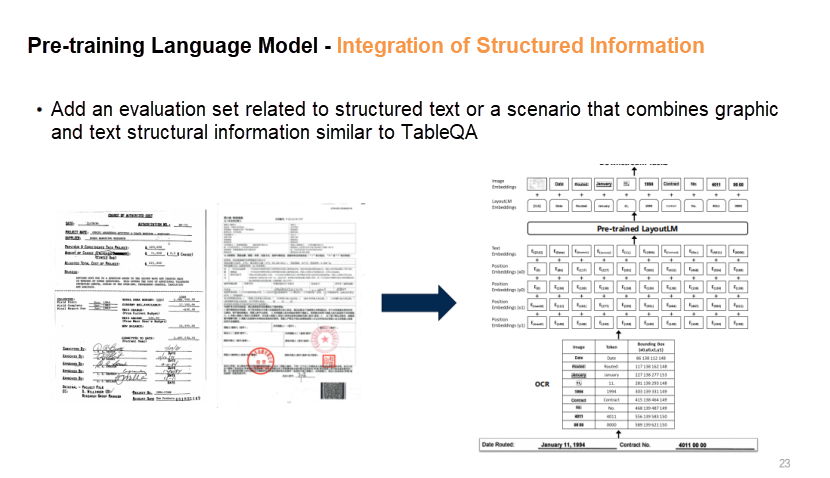
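Knowledge distillation trains a small student model to mimic a large teacher model. The standard formulation from Hinton et al. mixes a KL-divergence term between the temperature-softened teacher and student distributions, scaled by T squared, with ordinary cross-entropy on the hard label. The sketch computes that loss for a single example; the temperature, the mixing weight `alpha`, and the logits are assumed values, and the exact recipe used to accelerate StructBERT is not described in this article.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits / temperature, computed stably."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    z = sum(exps)
    return [e / z for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=2.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * cross-entropy(label, student)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard_loss = -math.log(softmax(student_logits)[label])
    # T^2 keeps the soft-target gradients on a comparable scale as T changes (Hinton et al.).
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard_loss


teacher = [4.0, 1.0, -2.0]  # hypothetical teacher logits for 3 classes
student = [2.5, 1.5, -1.0]  # hypothetical student logits
print(distillation_loss(student, teacher, label=0))
```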

3. Pre-Training Language Model - Integration of Structured Information

The traditional BERT model uses text information. However, many scenarios involve more information, such as structured information. For example, table information often includes the location information of words. If an OCR tool is used to extract information, we can also obtain the location information of words. If this information is used together with text information to train the BERT model, it is easier to perform the downstream tasks.

Figure 11 - Pre-Trained Language Model - Integration of Structured Information

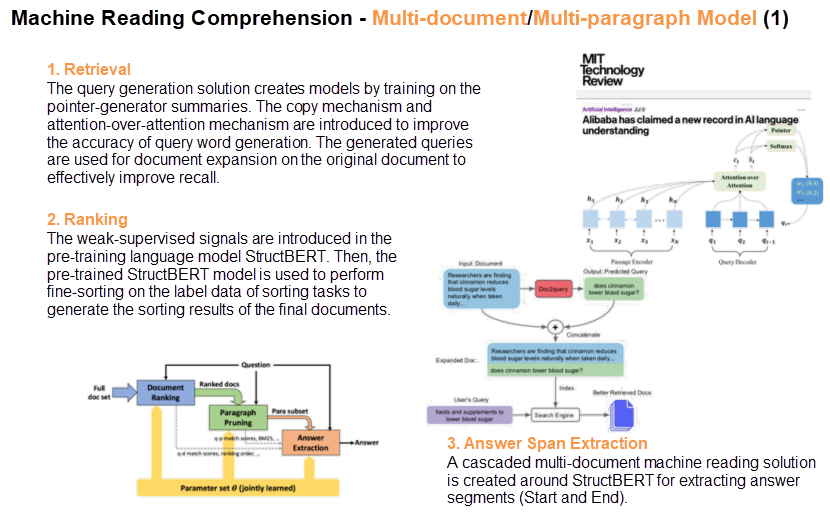

4. Machine Reading Comprehension - Multi-Document/Multi-Paragraph Model

The StructBERT model, combined with a multi-document/multi-paragraph model, can achieve high performance in machine reading comprehension tasks such as retrieval, ranking, and question answering.

Figure 12 - StructBERT used with Multi-Document/Multi-Paragraph Model

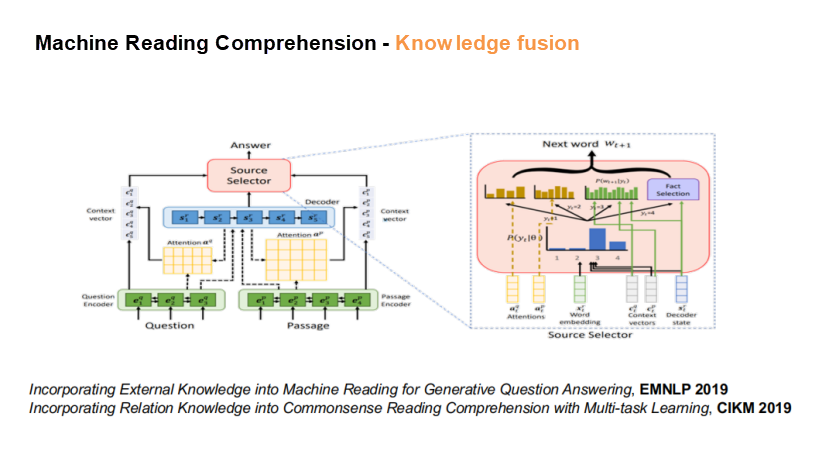

5. Machine Reading Comprehension - Knowledge Integration

StructBERT can also integrate knowledge, such as common-sense knowledge, into the language model to facilitate tasks such as classification and question answering.

Figure 13 - Machine Reading Comprehension - Knowledge Integration

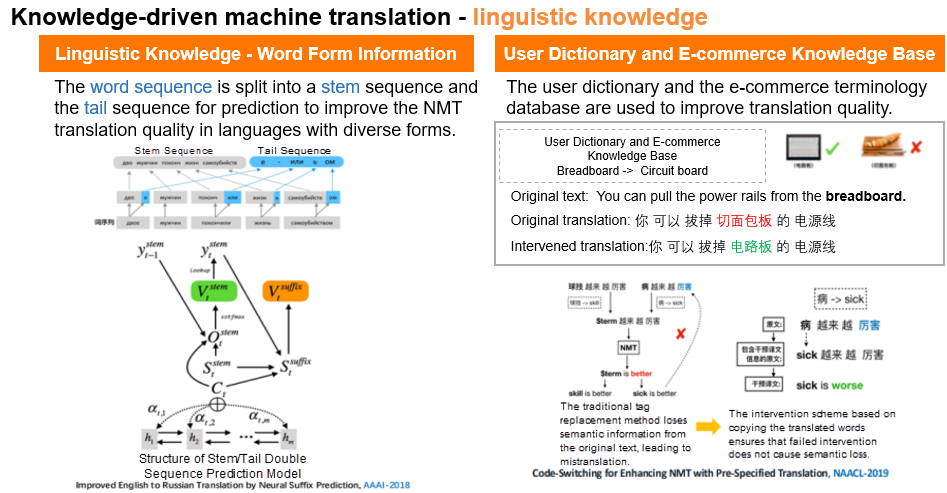

6. Knowledge-Driven Machine Translation - Linguistic Knowledge

Languages usually have a rich variety of forms of expression, and a language model equipped with linguistic knowledge performs better in translation scenarios. For example, the language model can learn language-specific forms of expression, such as compounds and suffixes, to improve translation results.

Figure 14 - Knowledge-Driven Machine Translation - Linguistic Knowledge

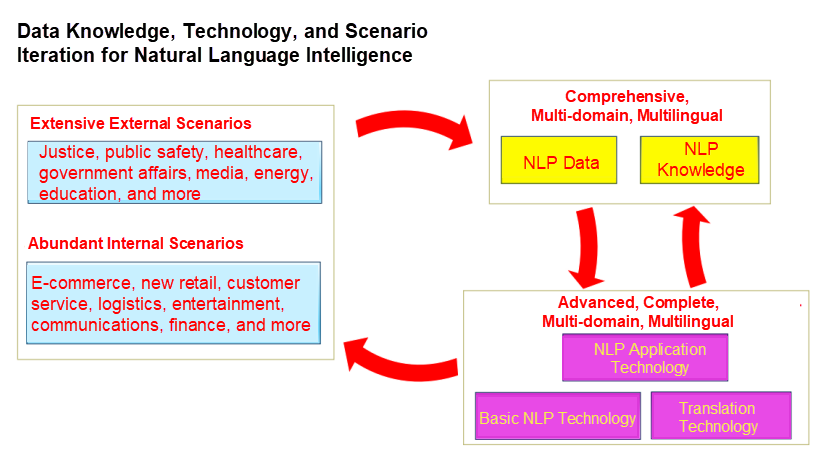
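The article does not say how linguistic knowledge is injected, but a common, generic way for neural machine translation systems to cope with compounds and suffixes is subword segmentation. The sketch below learns byte-pair-encoding (BPE) merges in the style of Sennrich et al. and replays them to segment a word. It illustrates that general technique, not DAMO Academy's method, and the word list is hypothetical.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of pair in symbols with the merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def to_symbols(word):
    """Split a non-empty word into characters, marking the end of the word with </w>."""
    return tuple(word[:-1]) + (word[-1] + "</w>",)


def learn_bpe(words, num_merges):
    """Greedily learn the most frequent adjacent-symbol merges from a word list."""
    vocab = Counter(to_symbols(w) for w in words)
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the pair seen first
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def apply_bpe(word, merges):
    """Segment a word by replaying the learned merges in order."""
    symbols = to_symbols(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


words = ["low", "lower", "lowest", "newer", "wider"]  # hypothetical training words
merges = learn_bpe(words, num_merges=3)
print(merges)
print(apply_bpe("slower", merges))
```

With enough merges, frequent stems and suffixes tend to become single units, so a word absent from the training list can still be split into known pieces instead of being treated as unknown.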

The atomic capabilities of NLP do not by themselves constitute a complete scenario; their potential value is realized only when they are combined in business scenarios. An enterprise usually has many internal scenarios, such as e-commerce and customer service, in addition to a wide range of external, community-facing scenarios, such as those involving judicial authorities, public security, and education. Models trained on these scenarios accumulate comprehensive, multi-domain, multilingual NLP data and knowledge that can be used to build NLP application technologies for similar scenarios. Only such a closed loop can realize the true value of natural language technology.

Figure 15 - Closed Loop between Natural Language Intelligence and Business Scenarios

The next section briefly introduces how DAMO Academy's language laboratory uses natural language technology in certain business scenarios.

Alibaba has a wide range of cross-border e-commerce scenarios that require a lot of translation work. In e-commerce translation scenarios, we need to establish a complete multilingual translation technology chain, from the translation of product titles and descriptions to the translation of payment instructions and communication with customers. All of these things require translation technology support to ensure the operation of Alibaba's overall cross-border e-commerce business.

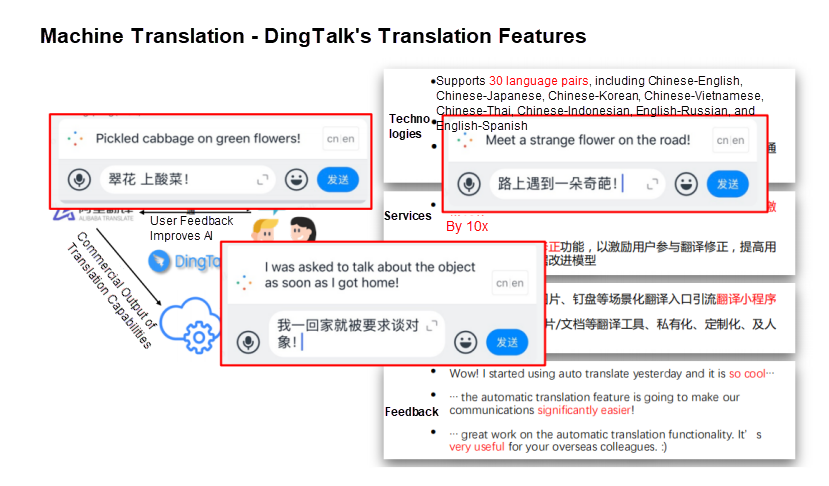

In recent years, DingTalk has developed rapidly, and its entry into the international market was supported by DAMO Academy's translation technology. So far, DingTalk's translation feature supports 30 languages and is used in a large number of business scenarios. However, some problems have emerged as a result of its wide application. For example, the translation of some colloquial text is inaccurate, and it is difficult to identify the context accurately. DAMO Academy is constantly optimizing the underlying algorithms to provide better services to users.

Figure 16 - Machine Translation in Social Scenarios: DingTalk's Translation Feature

With encouraging progress in NLP, Alibaba DAMO Academy's language laboratory has been working to integrate this technology into many business scenarios to create a wide range of cloud products and solutions suitable for specific scenarios.

1. Address information management system

The address information management system performs natural language processing, such as error correction, normalization, and hierarchical classification, for non-standard address information registered in various business systems. It systematically generates, matches, manages, and uses standardized addresses to better support logistics and business scenarios.
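The processing steps named above (error correction, normalization, and hierarchical classification) can be sketched on romanized addresses as follows. Everything here is a hypothetical stand-in: the abbreviation table, the two-level city/district gazetteer, and the use of Python's `difflib.get_close_matches` for spelling correction. A production system would rely on trained models and complete administrative-division data.

```python
import difflib
import re

# Hypothetical resources; a real system would use full administrative-division data.
ABBREVIATIONS = {"rd": "road", "st": "street", "dist": "district", "ave": "avenue"}
GAZETTEER = {  # city -> known districts
    "hangzhou": ["xihu", "shangcheng", "binjiang"],
    "shanghai": ["pudong", "huangpu", "xuhui"],
}


def normalize_address(raw, cutoff=0.8):
    """Normalize a free-form address (largest unit first) into city / district / remainder."""
    tokens = re.findall(r"[a-z0-9]+", raw.lower())
    tokens = [ABBREVIATIONS.get(t, t) for t in tokens]  # normalization
    result = {"city": None, "district": None, "rest": []}
    for t in tokens:
        if result["city"] is None:
            # Error correction: snap near-misses to a known city name.
            city = difflib.get_close_matches(t, list(GAZETTEER), n=1, cutoff=cutoff)
            if city:
                result["city"] = city[0]
                continue
        elif result["district"] is None:
            # Hierarchy: only districts of the recognized city are candidates.
            district = difflib.get_close_matches(t, GAZETTEER[result["city"]], n=1, cutoff=cutoff)
            if district:
                result["district"] = district[0]
                continue
        result["rest"].append(t)
    return result


print(normalize_address("Hangzou, Xihu Dist, 969 Wenyi Rd"))
```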

2. Event analysis graphs

The event analysis graph solution applies NLP technology to massive volumes of text and converts it into structured data and graphs for scenarios such as data concatenation, retrieval, and reasoning.

3. Mass communication empowered by cloud solutions

In the communications industry, Alibaba Cloud's communication solution uses AI to empower cloud communication and help build differentiated competitive advantages for our products. For example, this solution applies its atomic capabilities to the content of short messages to improve cloud communication. In the future, we will extend this solution from mere text information to voice information, video information, and multilingual information in international scenarios. This solution has proven very fruitful, enhancing business value when tested on Alibaba Cloud's services.

4. Intelligent justice

As the number of court cases continues to surge, there are not enough judges to cope. In this context, the introduction of NLP technology can greatly improve the efficiency of the judicial system.

5. Intelligent contract management

Similar to the judicial field, intelligent contract management is another scenario where NLP technology can be applied. Electronic signatures and contract management have become a very important trend, but the vast majority of contracts are still manually reviewed by lawyers. Empowered by NLP intelligence, the intelligent contract management solution can facilitate the review and management of contracts, comprehensively improving the overall efficiency of contract services.

6. Intelligent healthcare

NLP technology can also be applied to intelligent healthcare. At present, it is mainly applied to the quality inspection of medical records, which helps increase efficiency before and during patient consultations.

7. NLP boosts epidemic prevention and control

During the arduous fight against the coronavirus (COVID-19) pandemic, the language laboratory contributed fundamental work to support epidemic prevention and control. First, its address solution uses NLP technology to extract addresses from massive volumes of information. This solution supported efforts to contain the outbreak, such as epidemic prediction and prevention. Typical scenarios include analysis of inbound travel in pandemic-stricken areas, screening of related personnel, and outbound calling. Second, the medical machine translation solution made significant contributions to the battle against the pandemic. For example, the medical machine translation engine supports researchers by translating medical literature and terminology from foreign languages, lowering the language barriers that complicate COVID-19 research. Finally, amid the nationwide lockdown at the height of the outbreak in China, NLP technology proved very effective in facilitating remote trials. It greatly improved efficiency for the Shangcheng District and Xiacheng District People's Courts in Hangzhou, which held remote trials during the pandemic; in most cases, official records were automatically generated during the court hearings.
