By Xincheng and Chenguang

What is AI? You may think of a neural network built from stacked layers of neurons. What is the art of painting? Is it Da Vinci's Mona Lisa, Van Gogh's The Starry Night and Sunflowers, or Johannes Vermeer's Girl with a Pearl Earring? What happens when AI meets the art of painting?

In early 2021, OpenAI released DALL-E, a model that generates images from text. Its powerful cross-modal image generation made it popular with natural language and computer vision enthusiasts. Within just one year, multi-modal image generation technologies sprang up, and many applications emerged that use them for AI artistic creation, such as the recently popular Disco Diffusion. These applications are now reaching art creators and the general public, becoming a kind of "magic pen".

This article introduces multi-modal image generation technology and its landmark work, and explores how to use multi-modal image generation for AI painting.

AI Paintings by Disco Diffusion

Multi-modal image generation uses text, audio, and other modalities as guiding conditions to generate realistic images with natural textures. Compared with traditional single-modal generation, which generates images from noise alone, multi-modal image generation has always been challenging. The problems to be solved mainly include:

Over the past two years, Transformers have been applied to natural language processing (e.g., GPT), computer vision (e.g., ViT), multi-modal pre-training (e.g., CLIP), and other fields. Meanwhile, image generation techniques represented by VAEs and GANs have been overtaken by a rising star, the diffusion model. As a result, multi-modal image generation is developing rapidly.

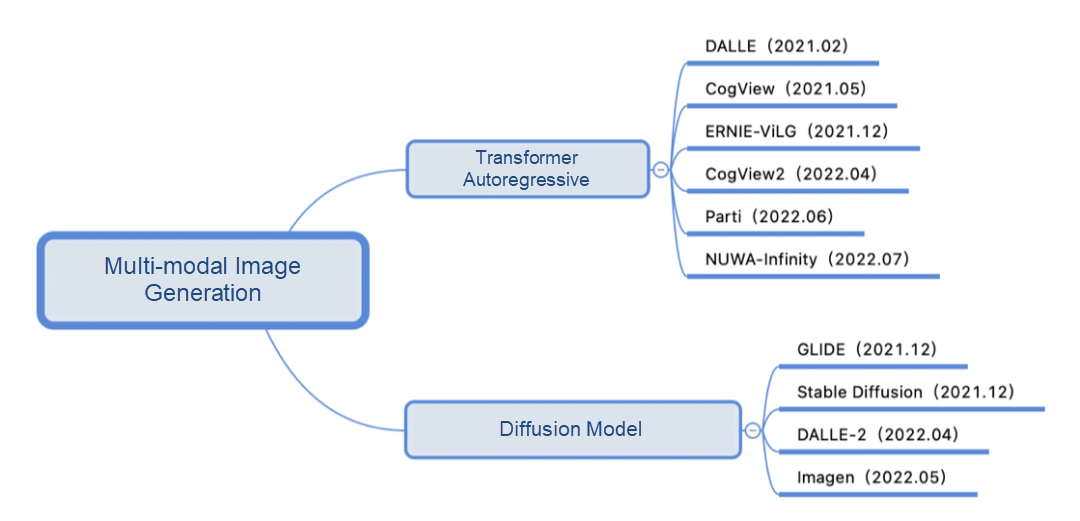

Depending on whether they are trained with Transformer autoregression or with diffusion models, the key work in multi-modal image generation over the past two years can be classified as follows:

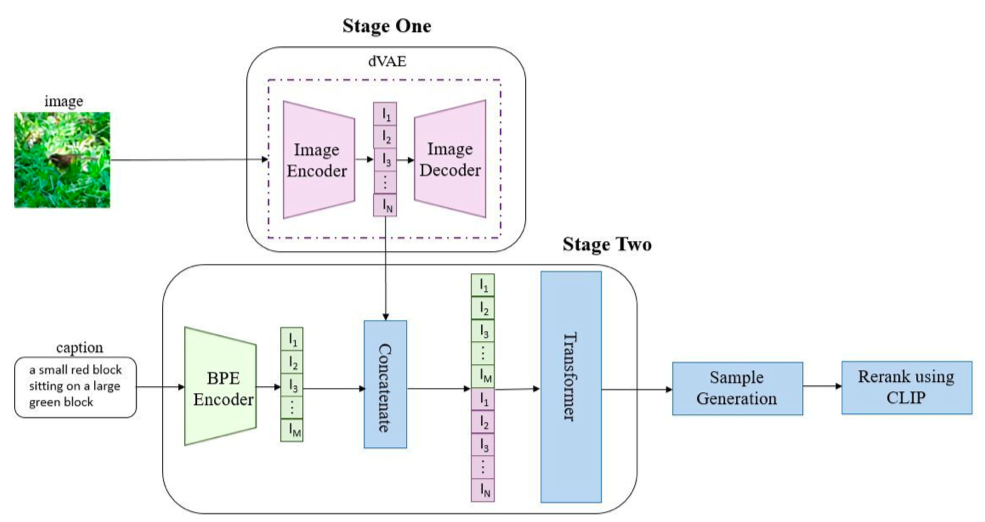

Transformer autoregressive methods typically convert text and images into token sequences, use a generative Transformer to predict the image sequence from the text sequence (and, optionally, an image sequence), and then decode the image sequence with an image generation model (VAE, GAN, etc.) to obtain the final image. Let's take DALL-E (OpenAI) [1] as an example:

The image and text are converted into token sequences by their respective encoders, concatenated, and fed into a GPT-3-style Transformer for autoregressive sequence generation. During inference, a pre-trained CLIP model computes the similarity between the text and each generated image, and the candidates are ranked to select the final output. Like DALL-E, Tsinghua's CogView series [2,3] and Baidu's ERNIE-ViLG [4] use a VQ-VAE + Transformer architecture, while Google's Parti [5] replaces the image codec with ViT-VQGAN. Microsoft's NUWA-Infinity [6] uses autoregressive methods to achieve infinite visual generation.
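The inference flow above can be sketched in a few lines: sample image tokens autoregressively after the text prefix, then rerank the candidates by a CLIP-style similarity. This is a toy illustration, not DALL-E's code. The tiny vocabulary, `toy_next_token_logits` (standing in for the Transformer), and `toy_embed_image` (standing in for decoding plus CLIP's image encoder) are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence

IMAGE_VOCAB = 8  # hypothetical tiny image codebook; real codebooks are far larger


def sample_image_tokens(
    text_tokens: Sequence[int],
    next_token_logits: Callable[[List[int]], List[float]],
    num_image_tokens: int,
    rng: random.Random,
) -> List[int]:
    """Sample image tokens one at a time, each conditioned on all previous tokens."""
    sequence = list(text_tokens)  # text tokens form the prefix of a single stream
    image_tokens: List[int] = []
    for _ in range(num_image_tokens):
        logits = next_token_logits(sequence)
        top = max(logits)  # subtract the max before exp for numerical stability
        weights = [math.exp(v - top) for v in logits]
        token = rng.choices(range(len(logits)), weights=weights, k=1)[0]
        image_tokens.append(token)
        sequence.append(token)
    return image_tokens


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity, returning 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rerank(candidates, text_embedding, embed_image):
    """Order candidates by CLIP-style similarity to the text, best first."""
    return sorted(candidates, key=lambda c: cosine(embed_image(c), text_embedding), reverse=True)


# --- Hypothetical toy components, for illustration only ---
def toy_next_token_logits(sequence: List[int]) -> List[float]:
    favored = len(sequence) % IMAGE_VOCAB  # pretend the model prefers this token
    return [2.0 if t == favored else 0.0 for t in range(IMAGE_VOCAB)]


def toy_embed_image(tokens: Sequence[int]) -> List[float]:
    histogram = [0.0] * IMAGE_VOCAB  # stand-in for a CLIP image embedding
    for t in tokens:
        histogram[t] += 1.0
    return histogram


rng = random.Random(0)
text_tokens = [101, 102, 103]  # hypothetical text token ids
candidates = [sample_image_tokens(text_tokens, toy_next_token_logits, 16, rng) for _ in range(4)]
text_embedding = [1.0] + [0.0] * (IMAGE_VOCAB - 1)  # hypothetical text embedding
best = rerank(candidates, text_embedding, toy_embed_image)[0]
```

In the real system, each candidate's token grid is first decoded into pixels before CLIP scores it; the sketch skips decoding and scores the tokens directly.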

The diffusion model is an image generation technology that has grown quickly over the last year and has been called the "GAN terminator". As shown in the figure, the diffusion model has two stages:

(1) Adding Noise: Random noise is gradually added to the image along a diffusion Markov chain.

(2) Denoising: The model learns the reverse diffusion process to restore the image. Common variants include the Denoising Diffusion Probabilistic Model (DDPM).

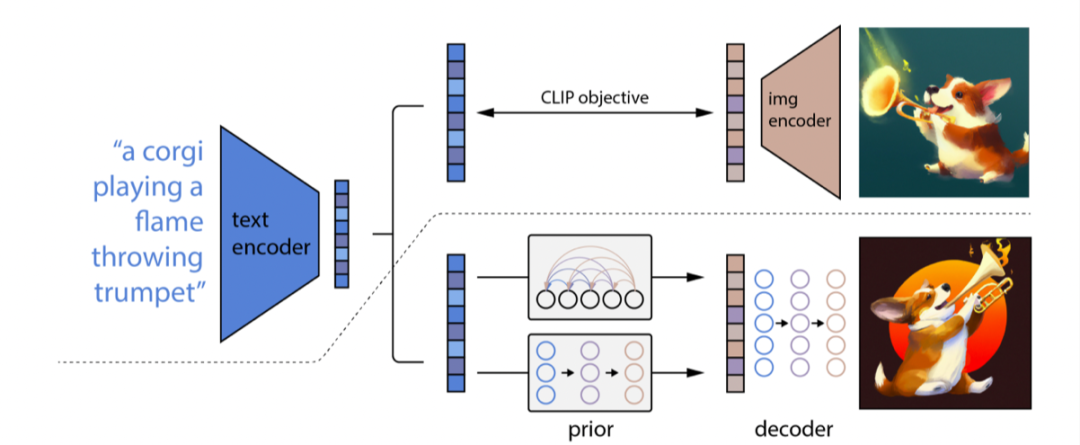
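Both stages fit in a few lines. The sketch below follows the standard DDPM formulation with its default linear schedule (β from 1e-4 to 0.02 over T = 1000 steps). Images are flattened into plain Python lists, `eps_model` is a hypothetical stand-in for the trained noise-prediction network (a U-Net in practice), and the function names are illustrative.

```python
import math
import random
from typing import Callable, List

Vector = List[float]


def linear_beta_schedule(T: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Noise variance per step, increasing linearly (the DDPM paper's default schedule)."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s): how much of the original image survives."""
    alpha_bar, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bar.append(running)
    return alpha_bar


def add_noise(x0: Vector, t: int, alpha_bar: List[float], noise: Vector) -> Vector:
    """Forward process in closed form: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    a = alpha_bar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, noise)]


def denoise_step(
    x_t: Vector,
    t: int,
    betas: List[float],
    alpha_bar: List[float],
    eps_model: Callable[[Vector, int], Vector],
    rng: random.Random,
) -> Vector:
    """One DDPM reverse step: remove the predicted noise, then re-inject a little fresh noise."""
    beta = betas[t]
    eps = eps_model(x_t, t)
    coef = beta / math.sqrt(1.0 - alpha_bar[t])
    mean = [(x - coef * e) / math.sqrt(1.0 - beta) for x, e in zip(x_t, eps)]
    if t == 0:
        return mean  # the last step adds no noise
    sigma = math.sqrt(beta)  # sigma_t^2 = beta_t, one of the variance choices in DDPM
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mean]


rng = random.Random(0)
betas = linear_beta_schedule()
alpha_bar = cumulative_alphas(betas)
x0 = [0.5, -0.25, 1.0]  # a tiny flattened "image"
noise = [rng.gauss(0.0, 1.0) for _ in x0]
x_final = add_noise(x0, len(betas) - 1, alpha_bar, noise)  # almost pure noise


def oracle(x_t: Vector, t: int) -> Vector:
    return noise  # a perfect noise predictor, for checking the algebra only


# With the oracle, the last reverse step recovers x0 exactly (up to rounding).
x1 = add_noise(x0, 0, alpha_bar, noise)
recovered = denoise_step(x1, 0, betas, alpha_bar, oracle, rng)
```

The oracle check only validates the algebra: a trained network merely approximates the noise, which is why sampling takes many small steps.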

Diffusion-based multi-modal image generation mainly uses a conditionally guided diffusion model to learn the mapping from text features to image features, then decodes the image features into the final image. Take DALL-E-2 (OpenAI) [7] as an example. Although it is the sequel to DALL-E, it takes a different technical route; its principle is closer to GLIDE [8]. The overall architecture of DALL-E-2 is shown in the following figure.

DALL-E-2 encodes the text with CLIP, uses a diffusion model as a prior to map text features to image features, and finally learns to invert the CLIP image encoder, decoding the image features into the final image. Compared with DALL-E-2, Google's Imagen [9] uses a pre-trained T5-XXL instead of CLIP for text encoding and then uses super-resolution diffusion models (with a U-Net architecture) to upscale the output to 1024 × 1024 HD images.
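In GLIDE, DALL-E-2, and Imagen, the conditional guidance mentioned above is commonly implemented as classifier-free guidance: one network predicts the noise both with and without the text condition, and the sampler extrapolates from the unconditional prediction toward the conditional one. A minimal sketch, where `toy_eps_model` is a hypothetical stand-in for the real denoiser and `None` plays the role of the empty caption:

```python
from typing import Callable, List, Optional

Vector = List[float]
NoiseModel = Callable[[Vector, int, Optional[str]], Vector]


def guided_noise(eps_model: NoiseModel, x_t: Vector, t: int, prompt: str, guidance_scale: float) -> Vector:
    """Classifier-free guidance: eps_uncond + scale * (eps_cond - eps_uncond)."""
    eps_uncond = eps_model(x_t, t, None)  # prediction without the text condition
    eps_cond = eps_model(x_t, t, prompt)  # prediction conditioned on the text
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Hypothetical toy noise model, for illustration only.
def toy_eps_model(x_t: Vector, t: int, prompt: Optional[str]) -> Vector:
    return [0.0, 1.0] if prompt is None else [1.0, 1.0]


x_t = [0.3, -0.7]
print(guided_noise(toy_eps_model, x_t, 10, "sea of flowers", 0.0))  # [0.0, 1.0], unconditional
print(guided_noise(toy_eps_model, x_t, 10, "sea of flowers", 1.0))  # [1.0, 1.0], plain conditional
print(guided_noise(toy_eps_model, x_t, 10, "sea of flowers", 3.0))  # [3.0, 1.0], pushed past it
```

A scale above 1 trades sample diversity for closer agreement with the text, which is why guidance strength is usually exposed as a tunable parameter.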

Transformer autoregression and CLIP's contrastive learning built a bridge between text and images, while diffusion models with conditional guidance laid the foundation for generating diverse, high-resolution images. However, evaluating image quality often involves subjective factors, so it is hard to say whether Transformer autoregression or diffusion models are better. Models such as the DALL-E series, Imagen, and Parti are trained on large-scale datasets, and their applications may raise ethical issues and social biases. For this reason, these models have not been open-sourced. Nevertheless, many enthusiasts are trying to use the technology, and many applications have been produced.

The development of multi-modal image generation technology opens up possibilities for AI artistic creation. Widely used AI creation applications and tools currently include CLIPDraw, VQGAN-CLIP, Disco Diffusion, DALL-E Mini, Midjourney (invitation required), DALL-E-2 (limited to approved internal testers), Dream By Wombo (app), Meta Make-A-Scene, TikTok's AI Green Screen feature, Stable Diffusion [10], and Baidu Yige. This article mainly uses Disco Diffusion, which is popular in the field of artistic creation, to create AI art.

Disco Diffusion [11] is an AI art creation application jointly maintained on GitHub by many technology enthusiasts, and it has gone through multiple versions. As the name suggests, it mainly relies on a diffusion model guided by CLIP. Disco Diffusion can generate artistic images or videos from specified text (and an optional basemap). For example, if you enter "Sea of Flowers", the model starts from a randomly generated noise image and refines it step by step through the diffusion process; after a certain number of steps, a beautiful image is rendered. Because sampling starts from random noise, each run of the program produces a different image, which is part of its fascination.
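The core of CLIP guidance can be sketched as gradient ascent on text-image similarity: at each diffusion step, the image is nudged in the direction that makes it more similar to the weighted text prompts. This is a simplification under stated assumptions. The `"text:weight"` prompt syntax mirrors the weighting convention used in Disco Diffusion prompts, the toy embedding table stands in for CLIP's text and image encoders, and all helper names are hypothetical. Disco Diffusion's actual code scores many random cutouts of the image with CLIP and adds further loss terms (such as saturation and range penalties), which this sketch omits.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def parse_prompt(prompt: str) -> Tuple[str, float]:
    """Split an optional trailing ':weight' from a prompt; the default weight is 1."""
    text, sep, weight = prompt.rpartition(":")
    if sep:
        try:
            return text.strip(), float(weight)
        except ValueError:
            pass  # the colon was part of the text, not a weight
    return prompt.strip(), 1.0


def cosine_and_grad(x: Vector, target: Vector) -> Tuple[float, Vector]:
    """Cosine similarity between x and target, and its gradient with respect to x."""
    nx = math.sqrt(sum(v * v for v in x))
    nt = math.sqrt(sum(v * v for v in target))
    cos = sum(a * b for a, b in zip(x, target)) / (nx * nt)
    grad = [b / (nx * nt) - cos * a / (nx * nx) for a, b in zip(x, target)]
    return cos, grad


def weighted_similarity(
    x: Vector, prompts: Sequence[str], encode_text: Callable[[str], Vector]
) -> Tuple[float, Vector]:
    """Weighted average of per-prompt similarities (weights normalized by |sum|), plus its gradient."""
    parsed = [parse_prompt(p) for p in prompts]
    total = abs(sum(w for _, w in parsed))
    if total == 0:
        raise ValueError("prompt weights must not sum to zero")
    score, grad = 0.0, [0.0] * len(x)
    for text, w in parsed:
        cos, g = cosine_and_grad(x, encode_text(text))
        score += w / total * cos
        grad = [acc + w / total * gi for acc, gi in zip(grad, g)]
    return score, grad


def clip_guidance_nudge(x: Vector, prompts: Sequence[str], encode_text, guidance_scale: float) -> Vector:
    """Move the image one small step uphill on the weighted text-image similarity."""
    _, grad = weighted_similarity(x, prompts, encode_text)
    return [v + guidance_scale * g for v, g in zip(x, grad)]


# Hypothetical toy "CLIP": texts map to fixed vectors, and an image is its own embedding.
TOY_TEXT_EMBEDDINGS = {"sea of flowers": [1.0, 0.0, 0.0], "yellow color scheme": [0.0, 1.0, 0.0]}


def toy_encode_text(text: str) -> Vector:
    return TOY_TEXT_EMBEDDINGS[text]


prompts = ["sea of flowers:2", "yellow color scheme:1"]
x = [0.2, 0.1, 1.0]  # current (partially denoised) toy image
before, _ = weighted_similarity(x, prompts, toy_encode_text)
x = clip_guidance_nudge(x, prompts, toy_encode_text, guidance_scale=0.1)
after, _ = weighted_similarity(x, prompts, toy_encode_text)
```

Because the starting noise is random, two runs with the same prompts follow different trajectories, which is where the run-to-run variety comes from.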

Currently, AI creation with Disco Diffusion (DD) has the following problems:

(1) Uneven Quality of Generated Images: Depending on the difficulty of the task, we roughly estimate the yield rate (the share of usable images) at 20%-30% for difficult descriptions and 60%-70% for easy descriptions. Most tasks have a yield rate of 30%-40%.

(2) Slow Generation Speed and Large Memory Consumption: For example, generating a 1280 × 768 image with 250 iteration steps takes about six minutes on a V100 GPU with 16 GB of video memory.

(3) Heavy Reliance on Expert Experience: Choosing a suitable set of descriptive words requires extensive trial and error with texts and weight settings, an understanding of painters' styles and the art community, and careful selection of text modifiers. Tuning parameters requires a deep understanding of concepts such as CLIP guidance, saturation, contrast, noise, the number of cutouts, inner and outer cuts, gradient size, and symmetry. Strong painting skills are also needed. With so many parameters, generating a good image takes considerable expertise.
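The first two problems compound each other: a low yield means several slow runs per usable image. Here is a back-of-the-envelope estimate using the yield range and the roughly six minutes per run quoted above, assuming that runs succeed independently (the helper names are ours):

```python
import math


def expected_runs(yield_rate: float, good_images: int = 1) -> float:
    """Average number of independent runs needed to collect `good_images` usable images."""
    if not 0 < yield_rate <= 1:
        raise ValueError("yield_rate must be in (0, 1]")
    return good_images / yield_rate


def runs_for_confidence(yield_rate: float, confidence: float) -> int:
    """Smallest n such that P(at least one usable image in n runs) >= confidence."""
    if not 0 < yield_rate <= 1 or not 0 < confidence < 1:
        raise ValueError("yield_rate must be in (0, 1] and confidence in (0, 1)")
    if yield_rate == 1:
        return 1
    # P(no usable image in n runs) = (1 - p)^n, which must be <= 1 - confidence
    return math.ceil(math.log(1 - confidence) / math.log(1 - yield_rate))


MINUTES_PER_RUN = 6  # ~250 steps for a 1280x768 image on a V100, as quoted above

for p in (0.30, 0.35, 0.40):
    n = runs_for_confidence(p, 0.9)
    print(
        f"yield {p:.0%}: {expected_runs(p):.1f} runs on average, "
        f"{n} runs (~{n * MINUTES_PER_RUN} min) for a 90% chance of a usable image"
    )
```

At a 35% yield, that is six runs, or roughly 36 minutes of V100 time, for a 90% chance of getting at least one usable image.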

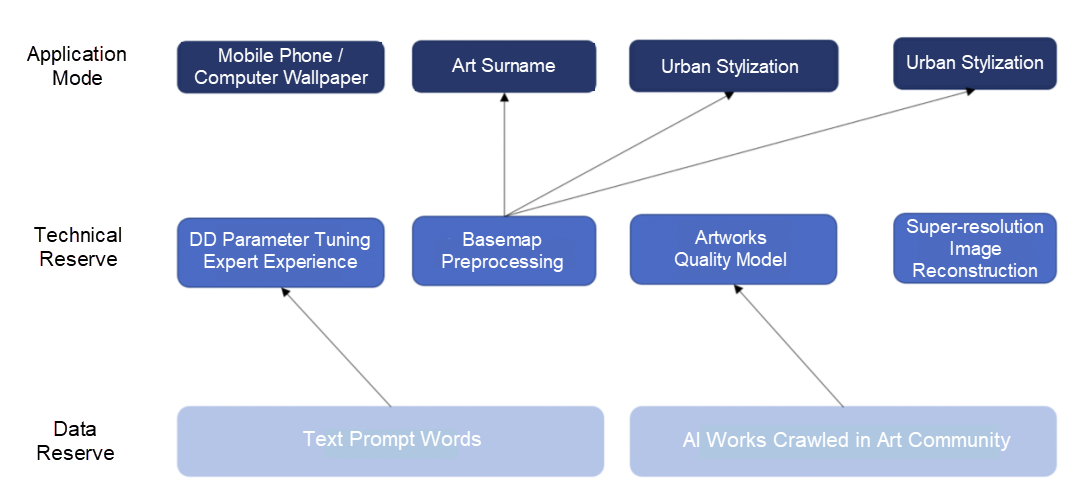

To address these problems, we have built up some data and technical reserves and envisioned some possible future applications, as shown in the following figure:

With these data and technical reserves, we have built multi-modal image generation applications (such as mobile phone and computer wallpapers, artistic surnames and first names, landmark city stylization, and digital collectibles). Below, we show some AI-generated artwork.

Paintings in different styles (anime/cyberpunk/pixel) are generated from text descriptions and a landmark city basemap:

(1) An anime-style building by Makoto Shinkai and Beeple, trending on artstation

(2) A cyberpunk-style building by Gregory Crewdson, trending on artstation

(3) A pixel-style building by Stefan Bogdanovi, trending on artstation.

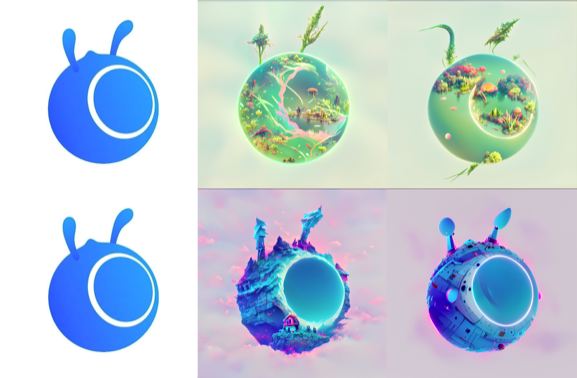
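Creating on a basemap works by starting the reverse diffusion from a noised copy of the basemap rather than from pure noise: the more early steps are skipped, the more of the basemap's layout survives, while the prompt restyles the details. Disco Diffusion exposes this idea through an initial image plus a number of skipped steps. The sketch below is our own illustration, not Disco Diffusion's code; it assumes the DDPM linear noise schedule, and the function names are hypothetical.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def alpha_bar_schedule(T: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Cumulative signal fraction alpha_bar_t under a linear DDPM beta schedule."""
    out, running = [], 1.0
    for t in range(T):
        beta = beta_start + (beta_end - beta_start) * t / (T - 1)
        running *= 1.0 - beta
        out.append(running)
    return out


def start_from_basemap(
    basemap: Vector, skip_steps: int, alpha_bar: List[float], rng: random.Random
) -> Tuple[int, Vector]:
    """Noise the basemap to the step where sampling begins instead of starting from pure noise."""
    T = len(alpha_bar)
    if not 0 <= skip_steps < T:
        raise ValueError("skip_steps must be in [0, T)")
    t_start = T - 1 - skip_steps  # skipping the noisiest steps means sampling starts later
    a = alpha_bar[t_start]
    x = [math.sqrt(a) * b + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for b in basemap]
    return t_start, x


alpha_bar = alpha_bar_schedule()
basemap = [0.8, 0.1, -0.4, 0.6]  # a tiny flattened stand-in for a landmark photo
rng = random.Random(42)
for skip in (0, 500, 800):
    t_start, x = start_from_basemap(basemap, skip, alpha_bar, rng)
    # sqrt(alpha_bar) is the weight the basemap keeps in the starting image
    print(f"skip {skip}: start at step {t_start}, basemap weight {math.sqrt(alpha_bar[t_start]):.2f}")
```

From this starting point, sampling continues with ordinary denoising steps from `t_start` down to 0.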

Creating on a basemap by entering a text description and a basemap:

Ant Logo Series (Ant Forest/Ant Hut/Ant Spaceship)

(1) A landscape with vegetation and a lake by RAHDS and Beeple, trending on artstation

(2) An enchanted cottage on the edge of a cliff with a foreboding ominous fantasy landscape by RAHDS and Beeple, trending on artstation

(3) A spacecraft by RAHDS and Beeple, trending on artstation

Ant Chicken Series (Chicken Transformers/Chicken SpongeBob SquarePants)

(1) Transformers with machine armor by Alex Milne, trending on artstation

(2) Spongebob by RAHDS and Beeple, trending on artstation

Generate mobile wallpapers by entering text descriptions

(1) The esoteric dreamscape matte painting vast landscape by Dan Luvisi, trending on artstation

(2) Scattered terraces, winter, snow by Makoto Shinkai, trending on artstation (4K wallpaper)

(3) A beautiful cloudpunk painting of Atlantis arising from the abyss heralded by steampunk whales and volumetric lighting by Pixar rococo style, artstation

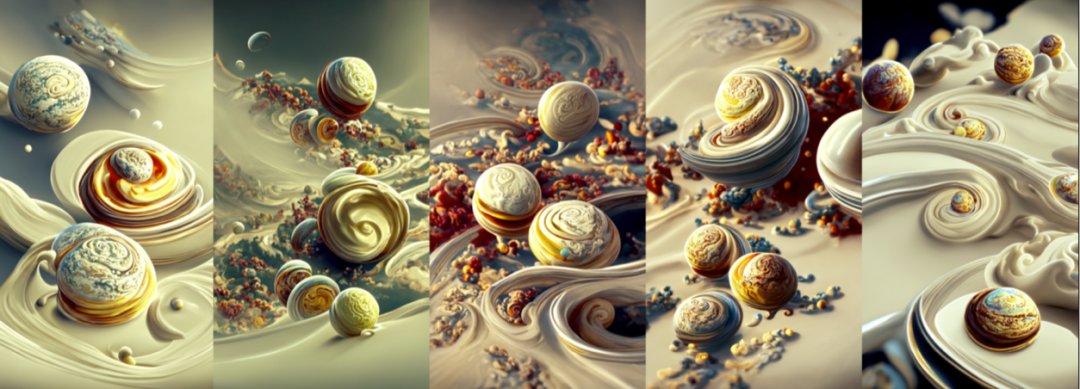

(4-8) A scenic view of the planets rotating through chantilly cream by Ernst Haeckel and Pixar, trending on artstation (4K wallpaper)

Generate computer wallpapers by entering text descriptions

(1) Fine, beautiful country fields, super wide angle, overlooking, morning by Makoto Shinkai

(2) A beautiful painting of a starry night, shining its light across a sunflower sea by James Gurney, trending on artstation

(3) Fairy tale steam country by Greg Rutkowski and Thomas Kinkade, trending on artstation.

(4) A beautiful render of a magical building in a dreamy landscape by Daniel Merriam, soft lighting, 4K HD wallpaper, trending on artstation and behance

Generate artistic surnames in different styles by entering text descriptions and a surname basemap

(1) Large-scale military factories, mech testing machines, semi-finished mechs, engineering vehicles, automation management, indicators, future, sci-fi, light effect, and high-definition picture

(2) A beautiful painting of a mushroom, tree, artstation, 4K HD wallpaper

(3) A beautiful painting of sunflowers, fog, unreal engine, shining its light across a tumultuous sea of blood by Greg Rutkowski and Thomas Kinkade, artstation, Andreas Rocha, and Greg Rutkowski

(4) A beautiful painting of the pavilion on the water presents a reflection by John Howe, Albert Bierstadt, Alena Aenami, and Dan Mumford – concept art wallpaper 4K, trending on artstation, concept art, cinematic, unreal engine, trending on behance

(5) A beautiful landscape of a lush jungle with exotic plants and trees by John Howe, Albert Bierstadt, Alena Aenami, and Dan Mumford – concept art wallpaper 4K, trending on artstation, concept art, cinematic, unreal engine, trending on behance

(6) Contra Force, Red fortress, spacecraft by Ernst Haeckel and Pixar, wallpaper HD 4K, trending on artstation

Stable Diffusion [10, 12] creates more efficiently and stably than Disco Diffusion [11], especially when depicting objects. AI paintings that the authors created from text with Stable Diffusion are shown in the accompanying figure.
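One likely reason for this efficiency gap is that Stable Diffusion is a latent diffusion model [10]: it runs the diffusion process in the compressed latent space of an autoencoder, whereas Disco Diffusion denoises pixels directly. A quick count, assuming the Stable Diffusion v1 setup of 4-channel latents downsampled 8× per side from RGB images (helper names are ours):

```python
def latent_shape(height: int, width: int, factor: int = 8, latent_channels: int = 4) -> tuple:
    """Spatial size shrinks by `factor` per side; channels go from RGB to `latent_channels`."""
    if height % factor or width % factor:
        raise ValueError("image size must be divisible by the downsampling factor")
    return height // factor, width // factor, latent_channels


def num_values(shape: tuple) -> int:
    """Number of scalar values in a (height, width, channels) tensor."""
    h, w, c = shape
    return h * w * c


for height, width in ((512, 512), (768, 1280)):
    pixels = num_values((height, width, 3))
    latents = num_values(latent_shape(height, width))
    print(f"{width}x{height}: {pixels:,} pixel values vs {latents:,} latent values ({pixels // latents}x fewer)")
```

Each denoising step therefore operates on far fewer values, and the autoencoder's decoder restores full resolution only once, at the end.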

This article introduced multi-modal image generation technology and its progress over the past two years, and tried using multi-modal image generation for a variety of AI artistic creations. Next, we will explore running multi-modal image generation on consumer-grade CPUs and combining it with business scenarios to empower intelligent AI creation. We will also try more related applications, such as movies, animation theme covers, games, and metaverse content creation.

Multi-modal image generation for artistic creation is only one application of AI-generated content (AIGC). Thanks to massive data and large pre-trained models, AIGC is being put into practice faster and is delivering more high-quality content.

[1] Ramesh A, Pavlov M, Goh G, et al. Zero-shot text-to-image generation[C]//International Conference on Machine Learning. PMLR, 2021: 8821-8831.

[2] Ding M, Yang Z, Hong W, et al. CogView: Mastering text-to-image generation via transformers[J]. Advances in Neural Information Processing Systems, 2021, 34: 19822-19835.

[3] Ding M, Zheng W, Hong W, et al. CogView2: Faster and Better Text-to-Image Generation via Hierarchical Transformers[J]. arXiv preprint arXiv:2204.14217, 2022.

[4] Zhang H, Yin W, Fang Y, et al. ERNIE-ViLG: Unified generative pre-training for bidirectional vision-language generation[J]. arXiv preprint arXiv:2112.15283, 2021.

[5] Yu J, Xu Y, Koh J Y, et al. Scaling Autoregressive Models for Content-Rich Text-to-Image Generation[J]. arXiv preprint arXiv:2206.10789, 2022.

[6] Wu C, Liang J, Hu X, et al. NUWA-Infinity: Autoregressive over Autoregressive Generation for Infinite Visual Synthesis[J]. arXiv preprint arXiv:2207.09814, 2022.

[7] Ramesh A, Dhariwal P, Nichol A, et al. Hierarchical text-conditional image generation with CLIP latents[J]. arXiv preprint arXiv:2204.06125, 2022.

[8] Nichol A, Dhariwal P, Ramesh A, et al. GLIDE: Towards photorealistic image generation and editing with text-guided diffusion models[J]. arXiv preprint arXiv:2112.10741, 2021.

[9] Saharia C, Chan W, Saxena S, et al. Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding[J]. arXiv preprint arXiv:2205.11487, 2022.

[10] Rombach R, Blattmann A, Lorenz D, et al. High-resolution image synthesis with latent diffusion models[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022: 10684-10695.

[11] GitHub: https://github.com/alembics/disco-diffusion

[12] GitHub: https://github.com/CompVis/stable-diffusion
