By Si Xiang

This document describes how to migrate data from Hadoop Hive to Alibaba Cloud MaxCompute.

Before migrating data from Hadoop Hive, ensure that your Hadoop cluster works properly. The following Hadoop environments are supported:

Hive script:

CREATE TABLE IF NOT EXISTS hive_sale(

create_time timestamp,

category STRING,

brand STRING,

buyer_id STRING,

trans_num BIGINT,

trans_amount DOUBLE,

click_cnt BIGINT

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' lines terminated by '\n';

insert into hive_sale values

('2019-04-14','外套','品牌A','lilei',3,500.6,7),

('2019-04-15','生鲜','品牌B','lilei',1,303,8),

('2019-04-16','外套','品牌C','hanmeimei',2,510,2),

('2019-04-17','卫浴','品牌A','hanmeimei',1,442.5,1),

('2019-04-18','生鲜','品牌D','hanmeimei',2,234,3),

('2019-04-19','外套','品牌B','jimmy',9,2000,7),

('2019-04-20','生鲜','品牌A','jimmy',5,45.1,5),

('2019-04-21','外套','品牌E','jimmy',5,100.2,4),

('2019-04-22','生鲜','品牌G','peiqi',10,5560,7),

('2019-04-23','卫浴','品牌F','peiqi',1,445.6,2),

('2019-04-24','外套','品牌A','ray',3,777,3),

('2019-04-25','卫浴','品牌G','ray',3,122,3),

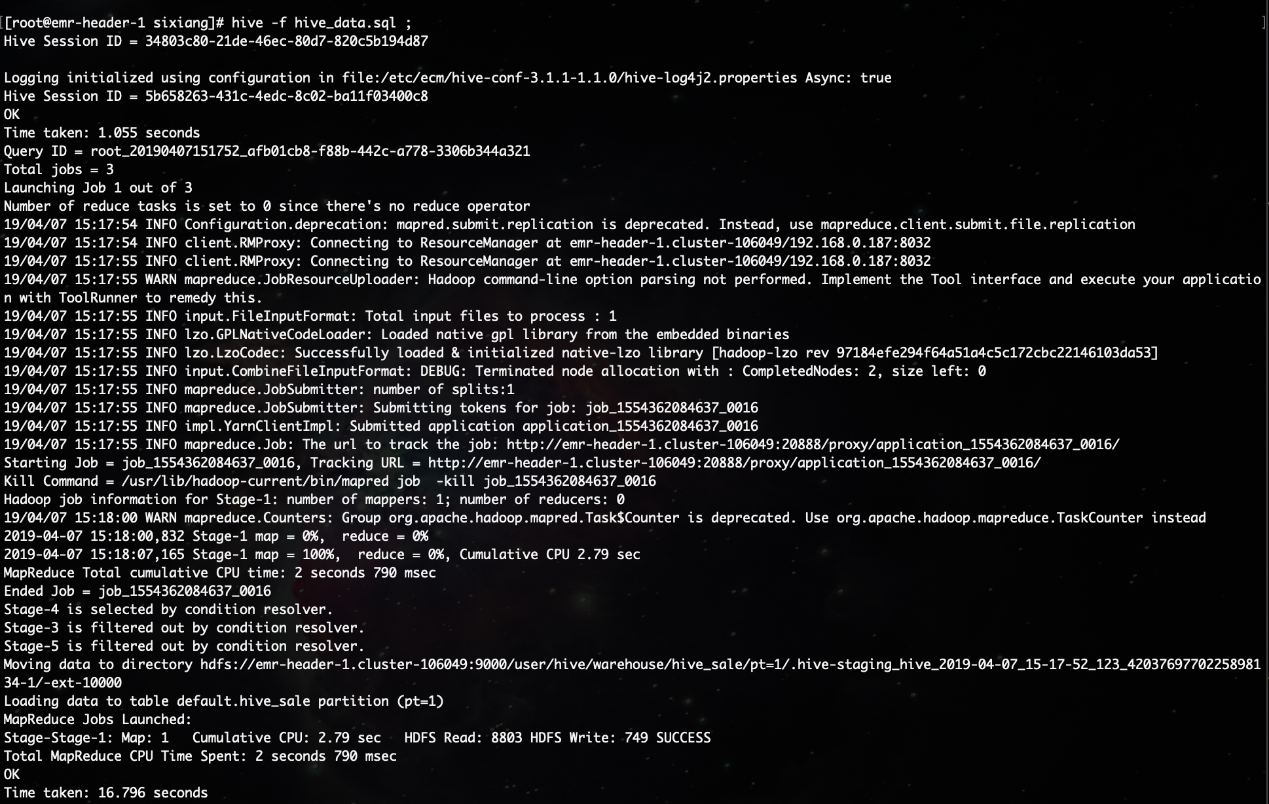

('2019-04-26','外套','品牌C','ray',1,62,7);Log on to the Hadoop cluster, create a Hive SQL script, and run Hive commands to initialize the script.

hive -f hive_data.sql

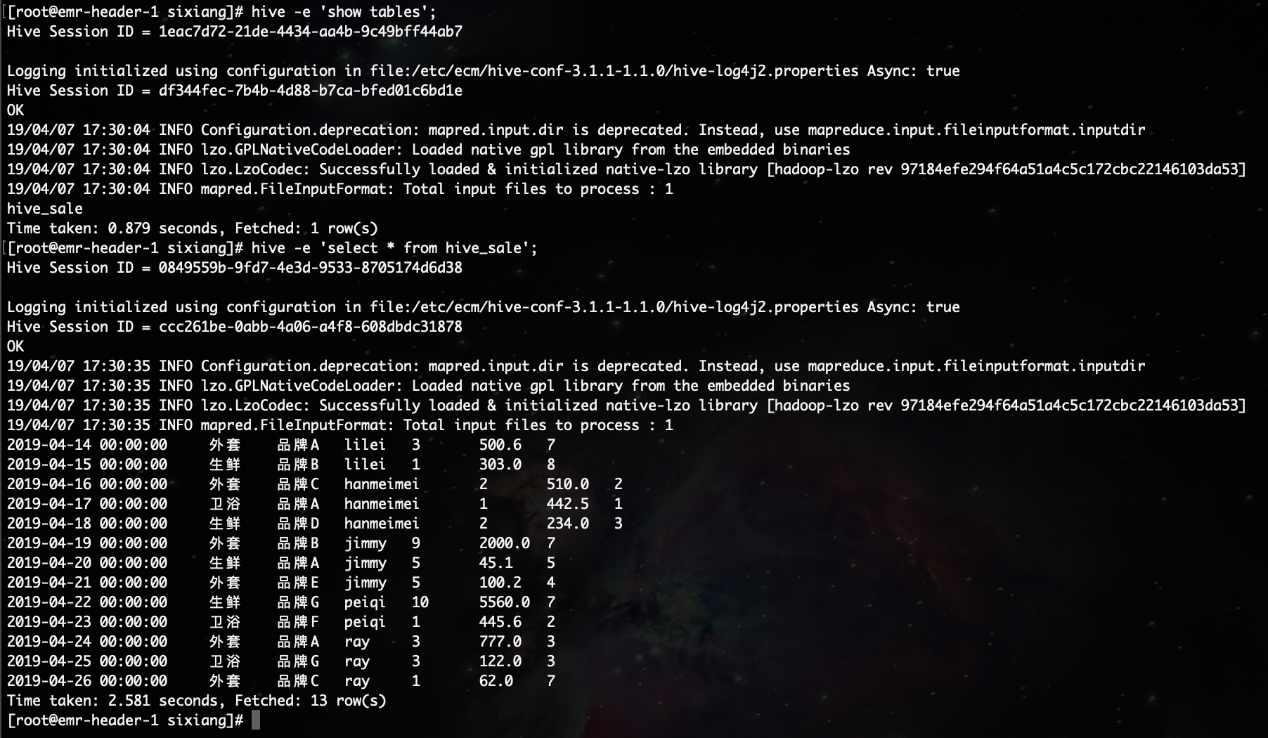

Query tables.

hive -e 'show tables';

hive -e 'select * from hive_sale';

Activate MaxCompute. For more information, see: https://www.alibabacloud.com/help/doc-detail/58226.htm

Install and configure a MaxCompute client. For more information, see: https://partners-intl.aliyun.com/help/doc-detail/27804.htm

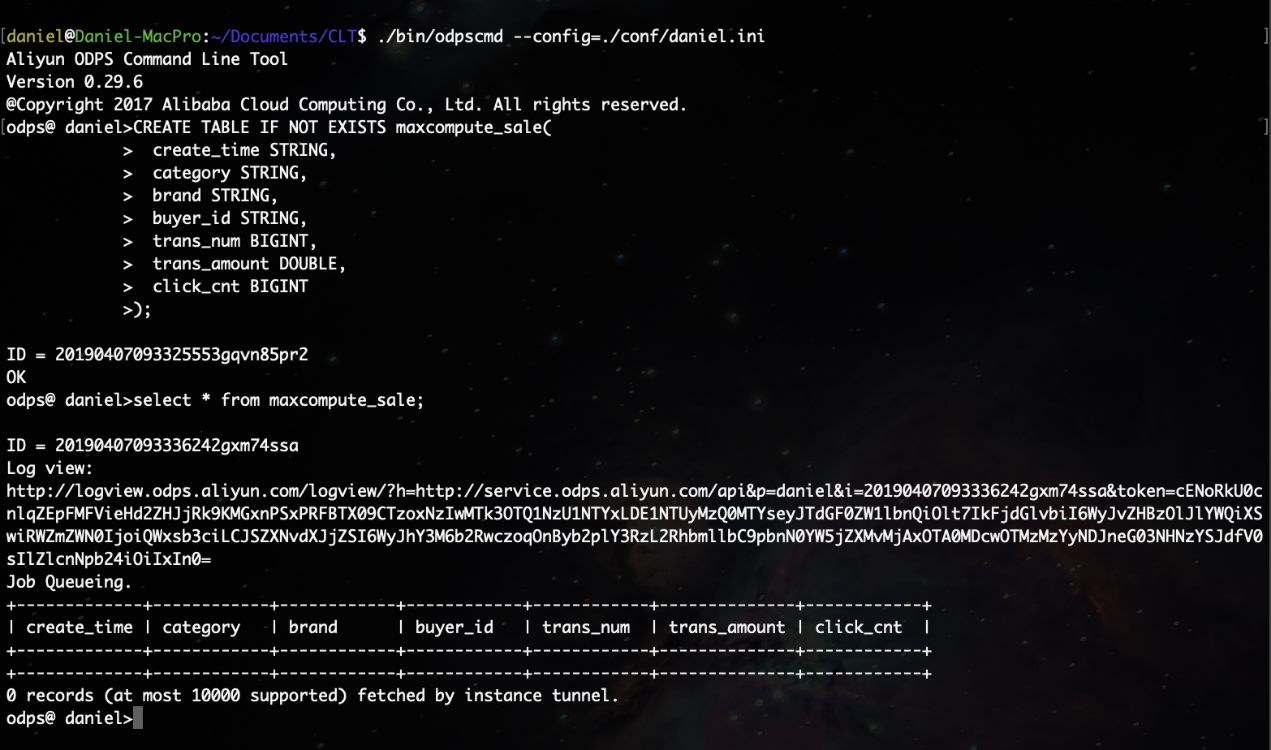

CREATE TABLE IF NOT EXISTS maxcompute_sale(

create_time STRING,

category STRING,

brand STRING,

buyer_id STRING,

trans_num BIGINT,

trans_amount DOUBLE,

click_cnt BIGINT

);

You need to consider data type mapping between Hive and MaxCompute when creating a table. For more information, see: https://www.alibabacloud.com/help/doc-detail/54081.htm

Create a table by using the odpscmd CLI tool. For how to install and configure odpscmd, see: https://www.alibabacloud.com/help/doc-detail/142260.htm

Note: MaxCompute 2.0 supports basic and complex data types. For more information, see: https://www.alibabacloud.com/help/doc-detail/27821.htm

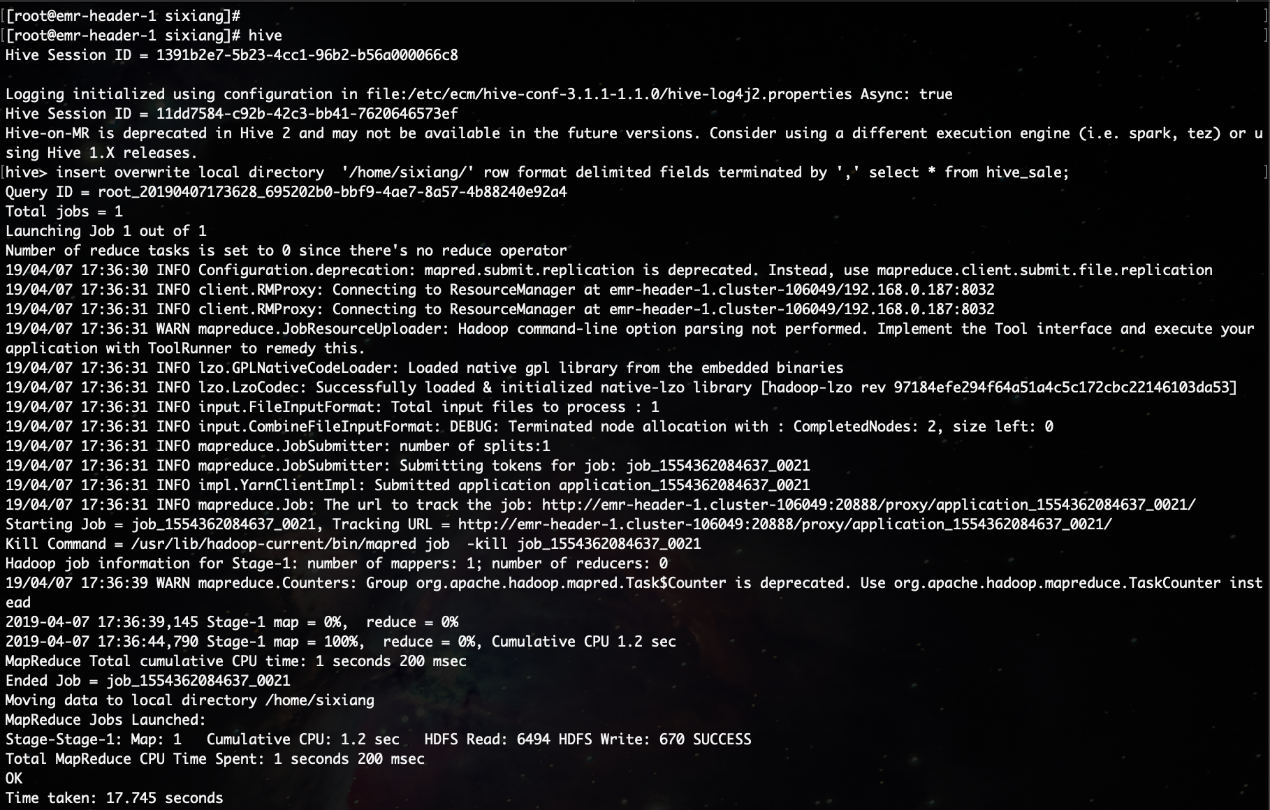

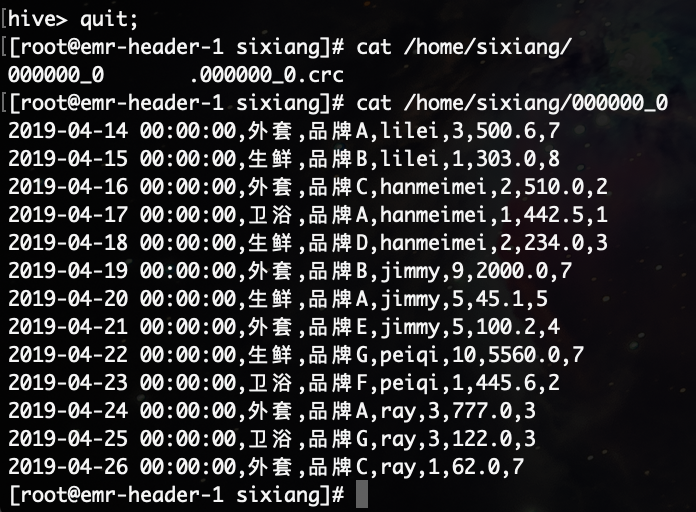

Log on to Hive and run the relevant SQL statements. The following example shows how to separate the data that is exported to the local device with commas (,) by line.

insert overwrite local directory '/home/sixiang/' row format delimited fields terminated by ',' select * from hive_sale;

List data files.

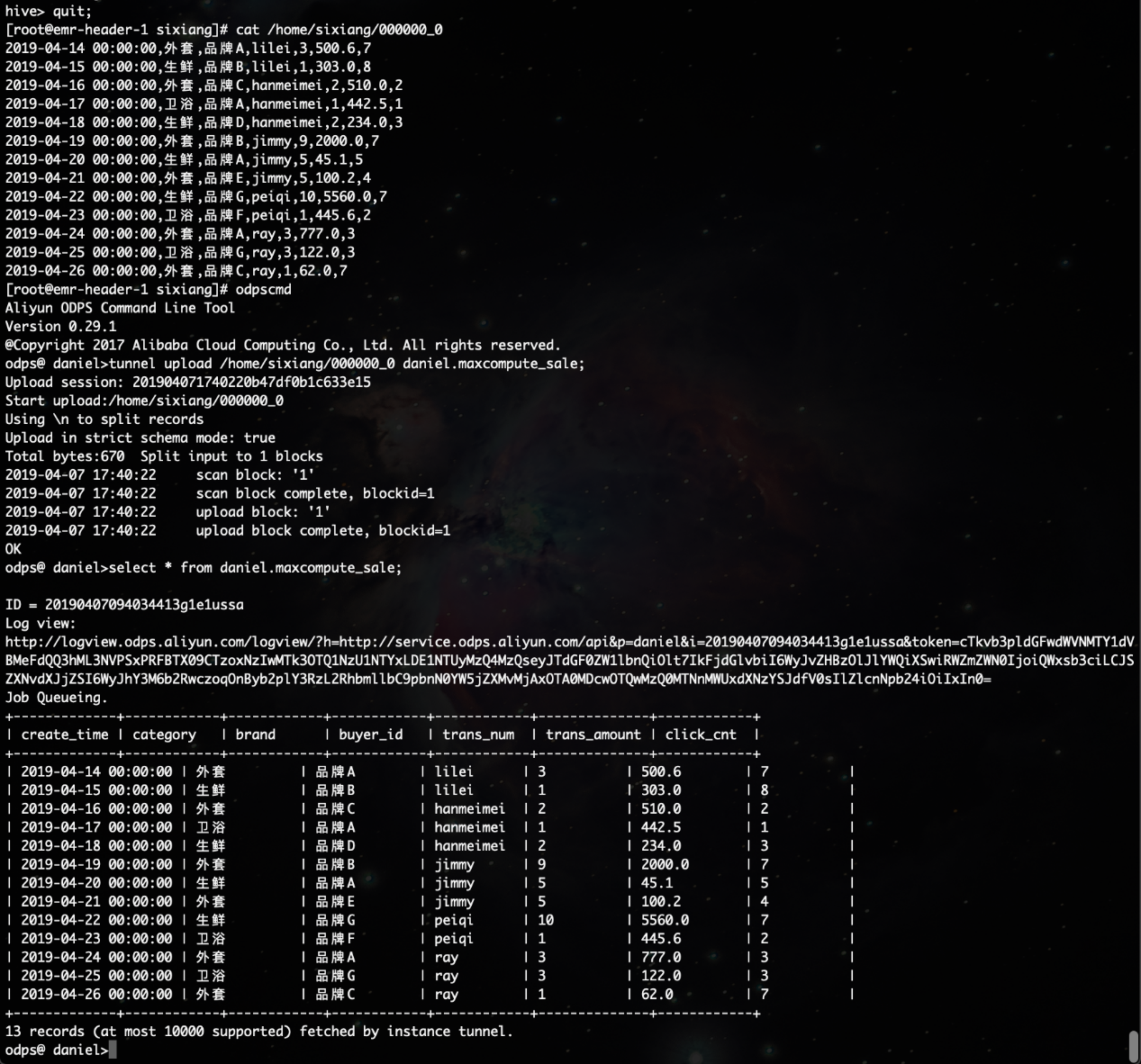

Go to the MaxCompute console and run the Tunnel upload command to upload data.

tunnel upload /home/sixiang/000000_0 daniel.maxcompute_sale;

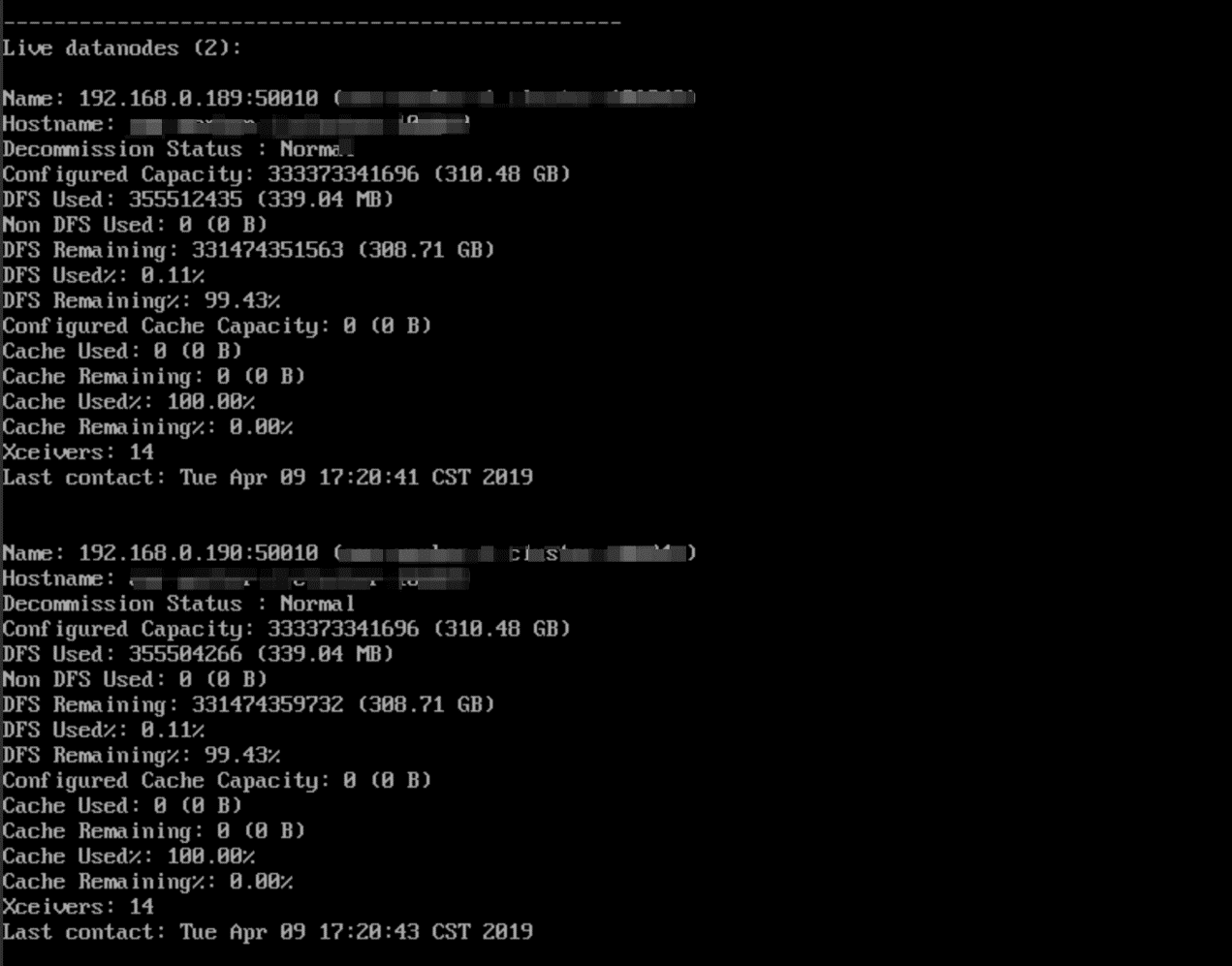

In most cases, the network between the MaxCompute project data node and the data node of the Hadoop cluster is unreachable. You can customize a resource group to run the synchronization task of DataWorks on the master node of the Hadoop cluster. In general, the network between the master node and data node of the Hadoop cluster is reachable.

Run the hadoop dfsadmin –report command to view the data node of the Hadoop cluster.

As shown in the preceding figure, the data node has only an internal network address and cannot communicate with the default resource group of DataWorks. Therefore, you need to customize a resource group and configure the master node to run the DataWorks synchronization task.

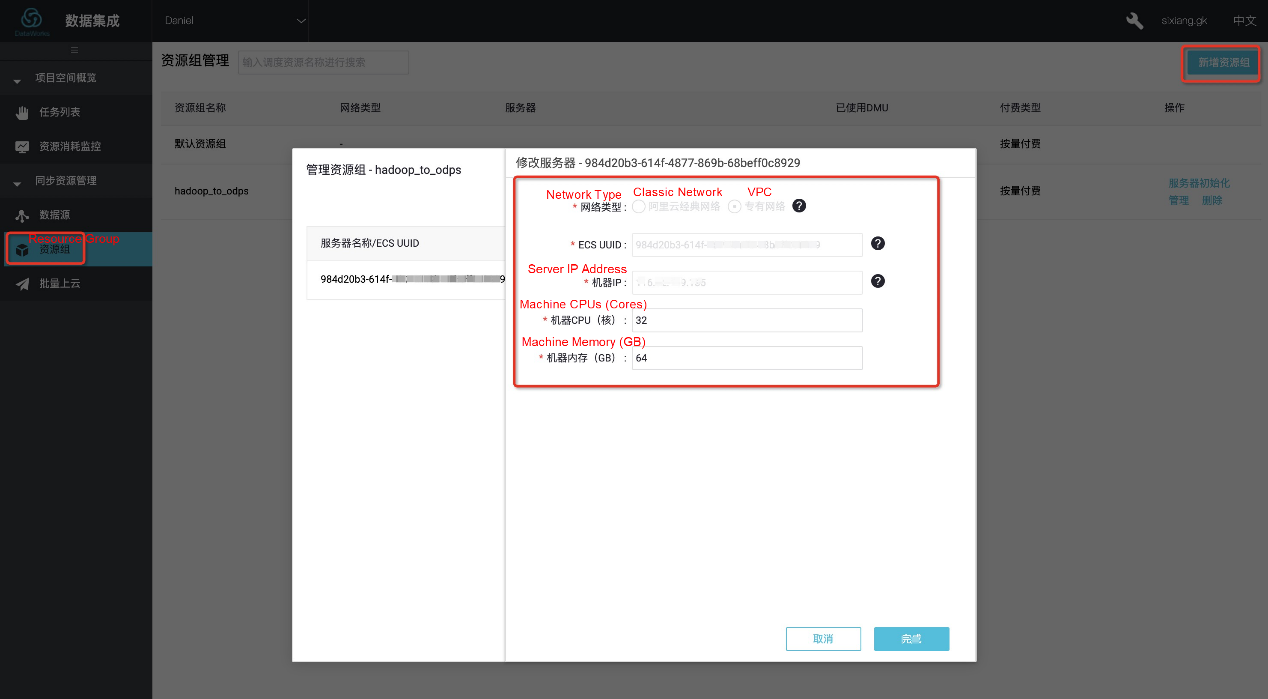

Go to the Data Integration page of DataWorks, choose Resource Group from the left-side navigation pane, and click Add Resource Group, as shown in the following figure.

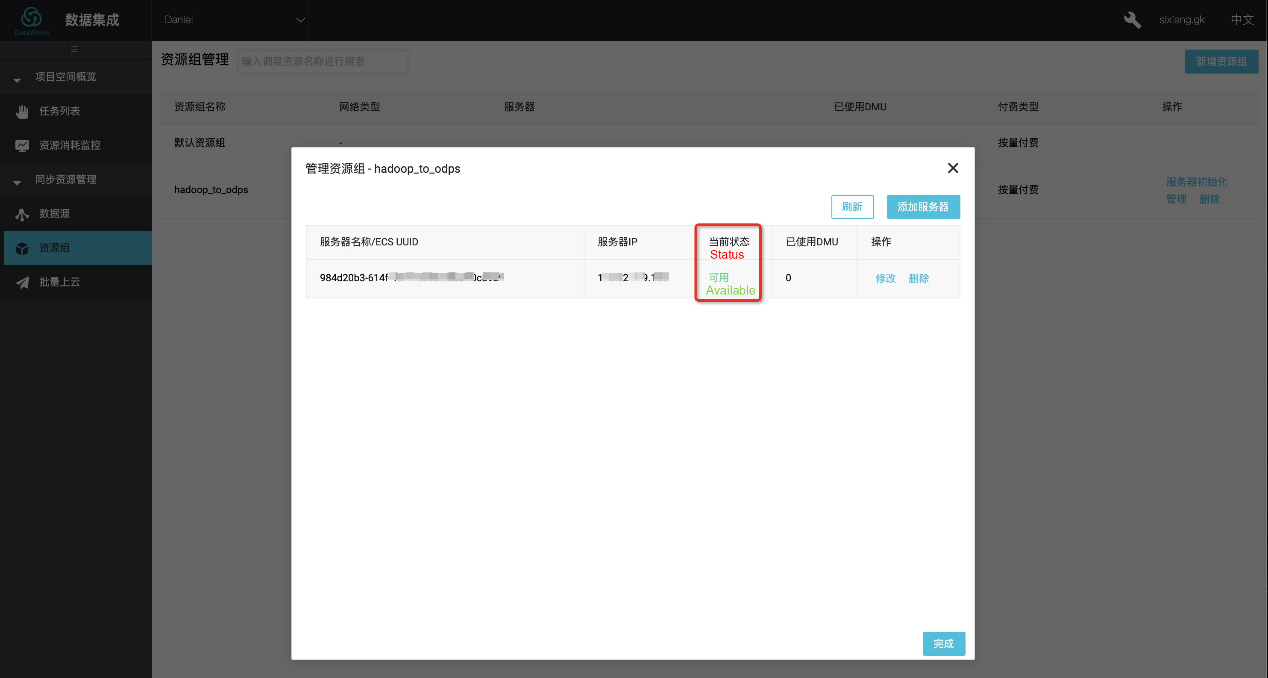

When adding a server, enter the UUID, IP address, and other information about the server. The IP address must be the Internet IP address of the master node. The internal network IP address may be unreachable.

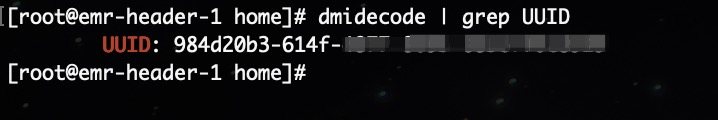

To obtain the UUID, run the dmidecode | grep UUID command on the master node CLI, as shown in the following figure.

Ensure that the network between the master node and DataWorks is reachable after the server is added. Install the agent of the custom resource group as instructed and check whether the agent status is Available. If yes, the custom resource group is added.

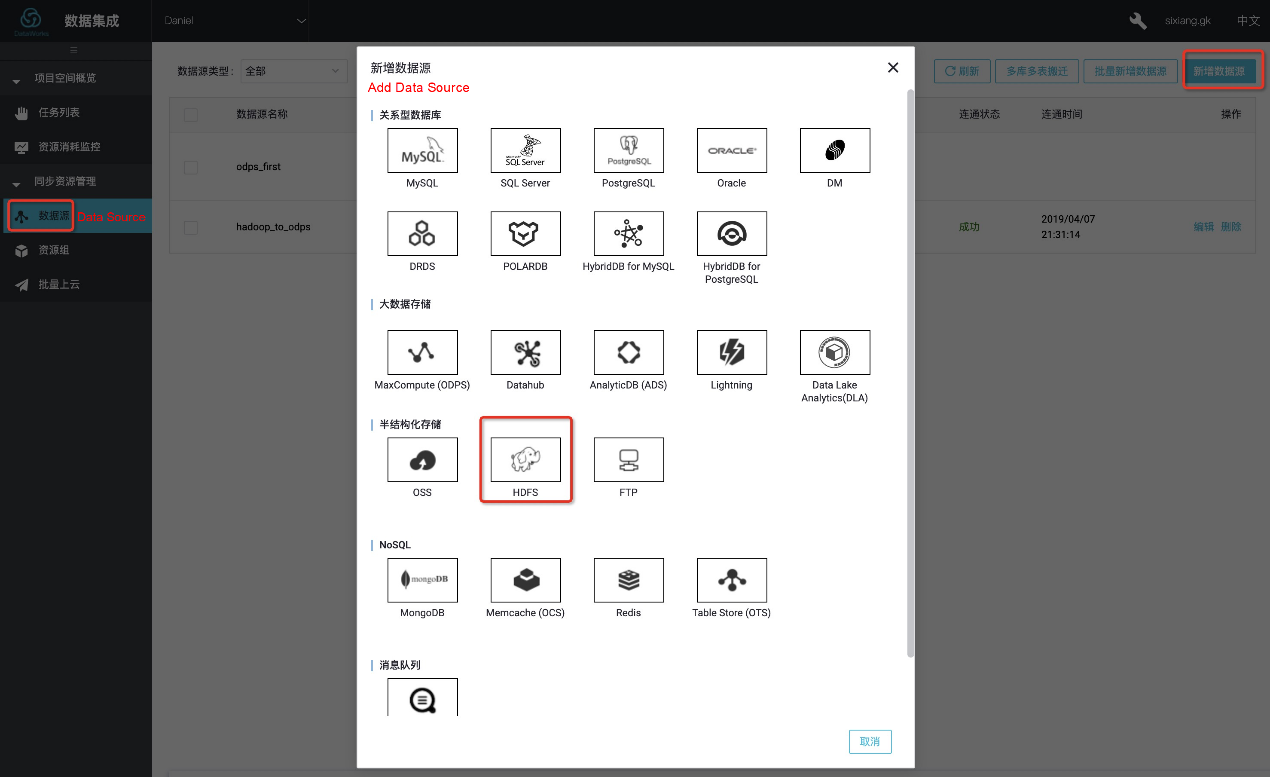

After you create a project in DataWorks, the data source is set to odps_first by default. Therefore, you only need to add a Hadoop cluster data source as follows: Go to the Data Integration page of DataWorks, choose Data Source from the left-side navigation pane, and click Add Data Source. In the Add Data Source dialog box that appears, select HDFS.

In the dialog box that appears, set Data Source Name and DefaultFS.

If the Hadoop cluster is an HA cluster, the address is hdfs://IP:8020. If the Hadoop cluster is a non-HA cluster, the address is hdfs://IP:9000. In this example, the Hadoop server is connected to DataWorks through the Internet. Therefore, enter the Internet IP address.

After the configuration is complete, click Test Connectivity. If a message appears, indicating that the connectivity test is successful, the data source is added.

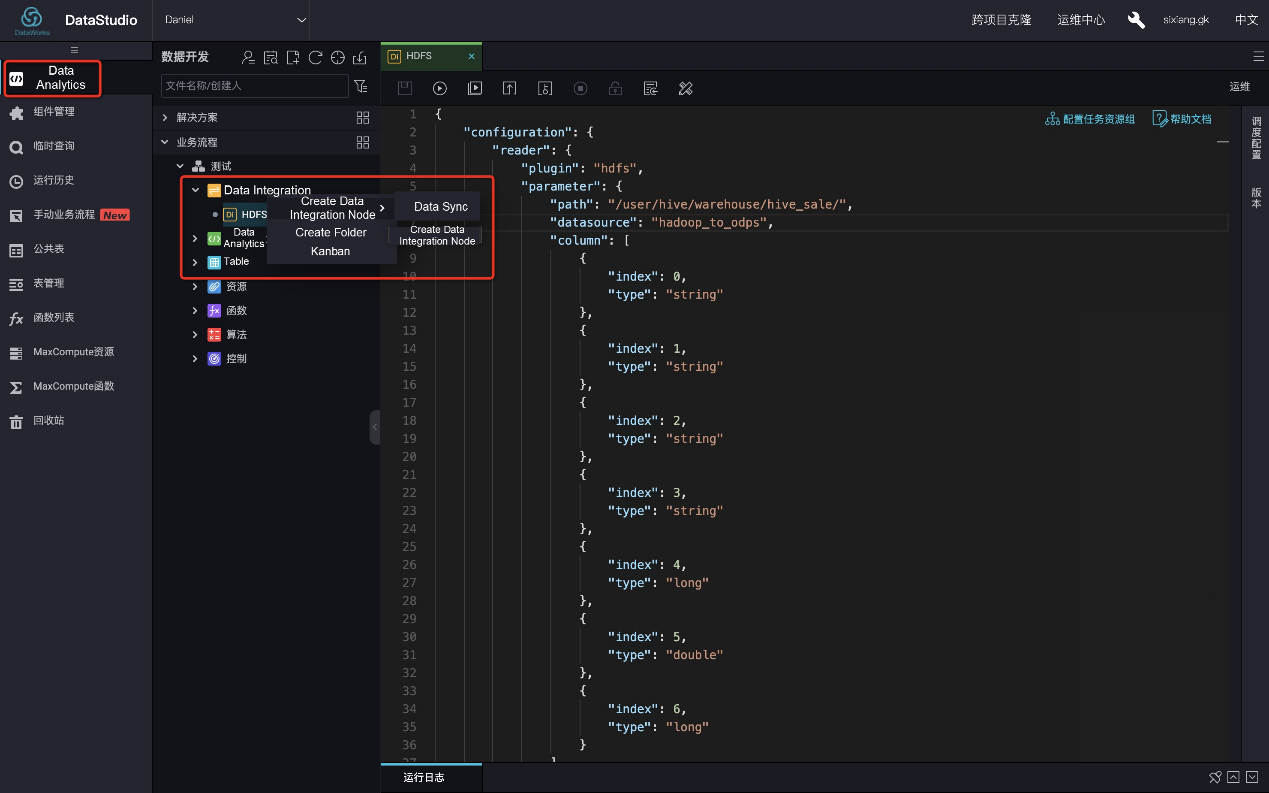

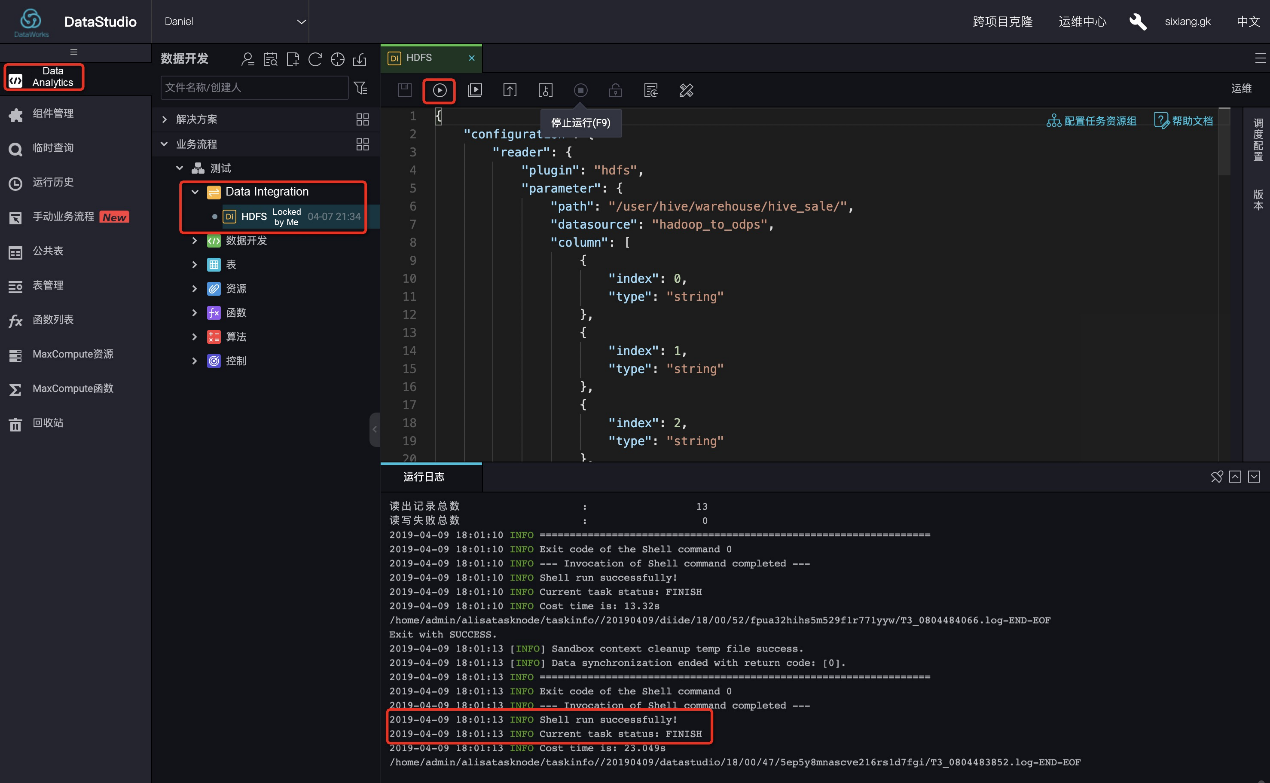

On the Data Analytics page of DataWorks, right-click the new Data Integration node and choose Create Data Integration Node > Data Sync from the context menu. In the Apply Template dialog box that appears, select a data source type, as shown in the following figure.

The script is as follows:

{

"configuration": {

"reader": {

"plugin": "hdfs",

"parameter": {

"path": "/user/hive/warehouse/hive_sale/",

"datasource": "hadoop_to_odps",

"column": [

{

"index": 0,

"type": "string"

},

{

"index": 1,

"type": "string"

},

{

"index": 2,

"type": "string"

},

{

"index": 3,

"type": "string"

},

{

"index": 4,

"type": "long"

},

{

"index": 5,

"type": "double"

},

{

"index": 6,

"type": "long"

}

],

"defaultFS": "hdfs://xxx.xxx.xxx.xxx:9000",

"fieldDelimiter": ",",

"encoding": "UTF-8",

"fileType": "text"

}

},

"writer": {

"plugin": "odps",

"parameter": {

"partition": "",

"truncate": false,

"datasource": "odps_first",

"column": [

"create_time",

"category",

"brand",

"buyer_id",

"trans_num",

"trans_amount",

"click_cnt"

],

"table": "maxcompute_sale"

}

},

"setting": {

"errorLimit": {

"record": "1000"

},

"speed": {

"throttle": false,

"concurrent": 1,

"mbps": "1",

"dmu": 1

}

}

},

"type": "job",

"version": "1.0"

}The path parameter indicates the location where the data is stored in the Hadoop cluster. To confirm the storage location, log on to the master node and

run the hdfs dfs –ls /user/hive/warehouse/hive_sale command.

After the configuration is complete, click Run. If a message appears, indicating that the task runs successfully, the synchronization task has been completed.

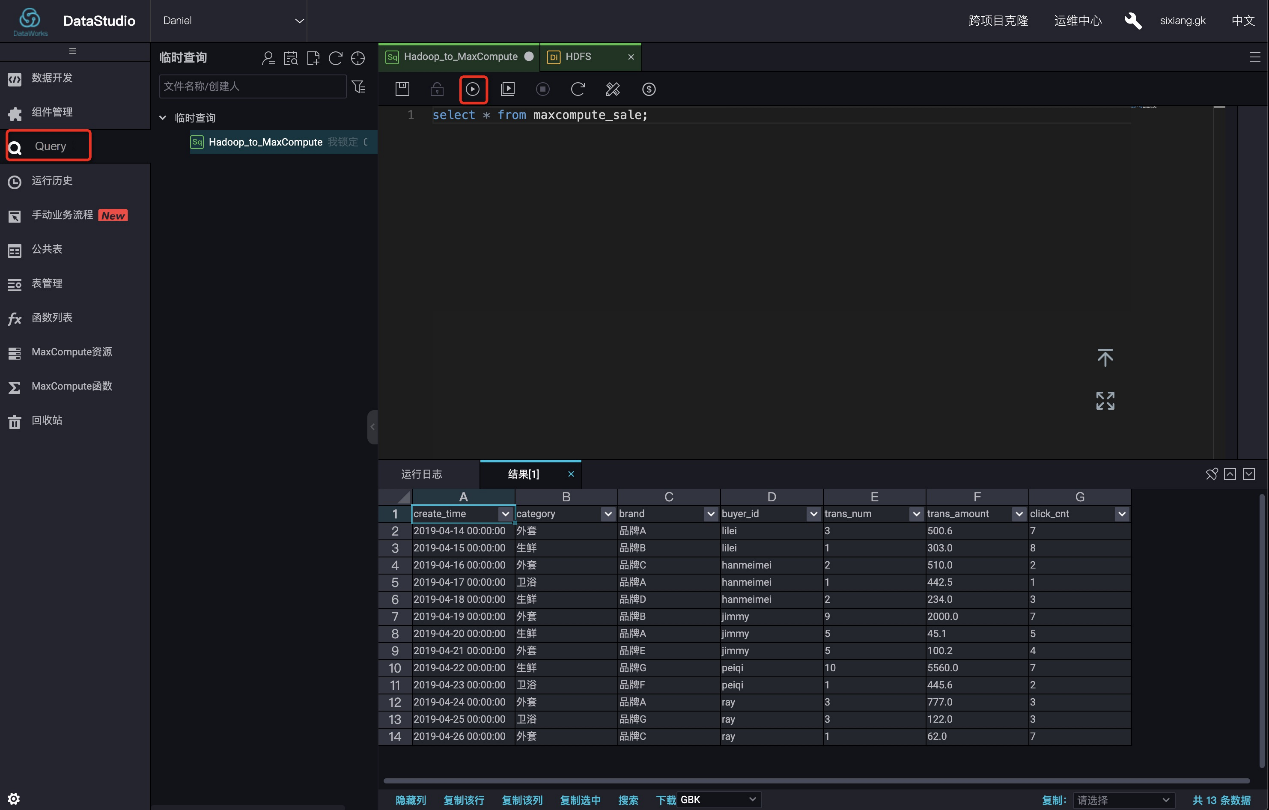

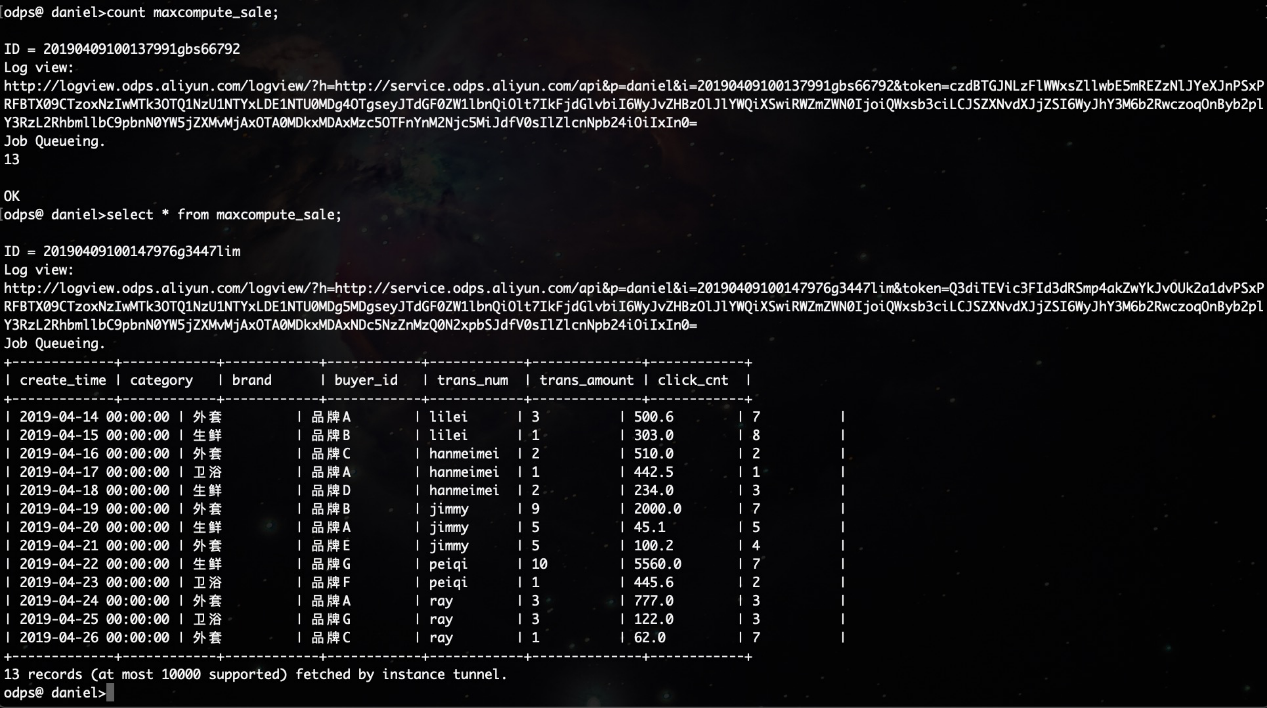

On the Data Analytics or Query page of DataWorks, run select * FROM hive_sale to verify the results, as shown in the following figure.

You can also perform an SQL table query by using odpscmd.

MaxCompute Wins Science and Technology Award of Zhejiang Province

137 posts | 21 followers

FollowAlibaba Clouder - July 20, 2020

Alibaba Cloud MaxCompute - December 7, 2018

Alibaba Cloud MaxCompute - April 26, 2020

Alibaba Clouder - March 31, 2021

Alibaba Cloud MaxCompute - September 30, 2022

JDP - June 17, 2022

137 posts | 21 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Cloud Migration Solution

Cloud Migration Solution

Secure and easy solutions for moving you workloads to the cloud

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn MoreMore Posts by Alibaba Cloud MaxCompute