Chai Wu, Senior Development Engineer at Alibaba, has many years of APM SaaS product R&D experience. He has used several industry-leading time series databases, including Druid, ClickHouse, and InfluxDB. With years of time series database experience, he is currently working on the development of core TSDB engines at Alibaba.

This article is based on his lecture, mainly covering the first three of the following topics:

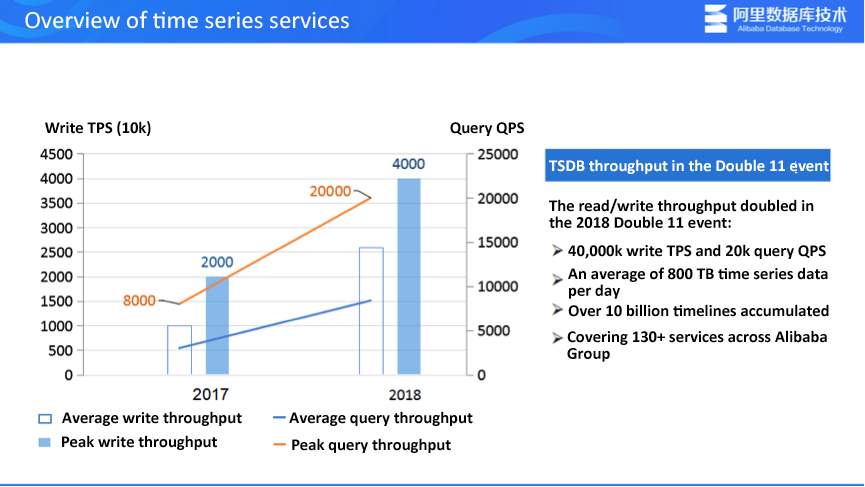

TSDB came into service at Alibaba in 2016 and has since supported three Double 11 promo events. By 2017, it was widely used within Alibaba. The following figure shows TSDB throughput during the big promo events in 2017 and 2018. Peak write throughput doubled from 20 million TPS in 2017 to 40 million TPS in 2018, and peak query throughput rose from 8,000 QPS to 20,000 QPS. These figures cover Alibaba's core business, which generates 800 TB of time series data per day on average. The data consists of tens of billions of time points and covers 130+ services in Alibaba Group.

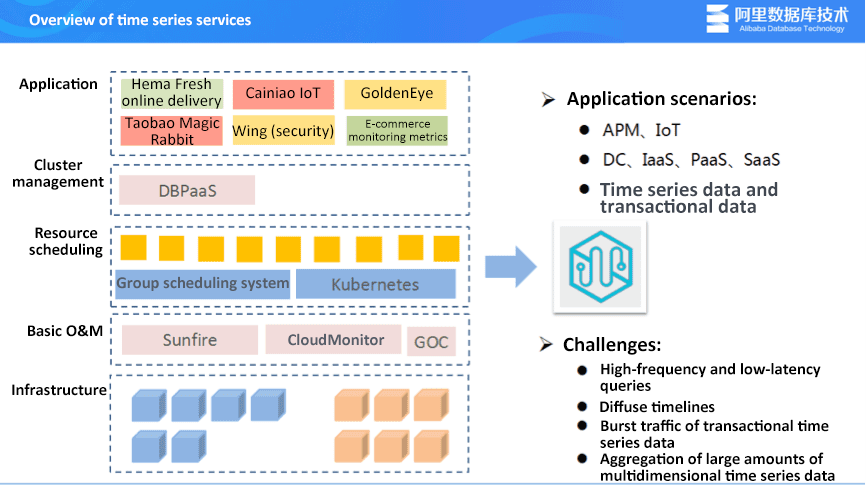

Time series services are involved in all main system layers, from infrastructure to upper-layer applications. The infrastructure layer mainly monitors physical machines and operating systems. The basic maintenance layer includes Sunfire, CloudMonitor, and GOC. Sunfire is a unified monitoring, logging, and alerting system at Alibaba. CloudMonitor is the unified monitoring system of Alibaba Cloud. GOC is a global emergency scheduling operations system that includes alerting data and fault rating data. The resource scheduling layer manages a large number of containers, using both the scheduling systems currently in service and the popular Kubernetes. The most prominent service in the cluster management layer is DBPaaS, which is responsible for monitoring and scheduling all Alibaba database instances and writes tens of millions of TPS. The upper-layer applications mainly include IoT scenarios (such as Hema Fresh and Cainiao IoT), APM scenarios (GoldenEye and e-commerce metrics monitoring), and core end-to-end monitoring in the security department. The covered scenarios range from the data center (DC) to upper-layer SaaS.

Time series databases currently face many challenges, starting with requirements on the business side. For example, the APM app Taobao Magic Rabbit interacts with end users, so it needs high-frequency, low-latency queries. In addition, most OLAP systems and distributed systems involve long-term, multidimensional aggregate analysis of large amounts of data. The rapid growth in the number of timelines, which is specific to time series databases, is another challenge: how do we aggregate a very large number of timelines? Finally, the traffic surge during Double 11 promo events puts heavy pressure on time series database capacity.

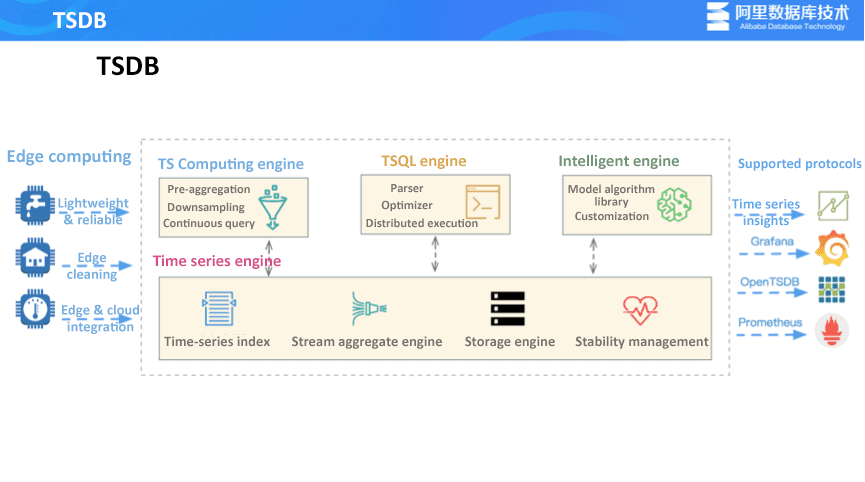

The TSDB used within Alibaba Group and the TSDB publicly available on Alibaba Cloud share the same architecture, but they have different capabilities. The following figure shows TSDB on the collection side, TSDB on the server, and the protocols and tools closest to users: visualization, Grafana display, and time series insights. Time series insights lets users import time series data for direct viewing and analysis. An edge computing solution is implemented on the collection side. Edge computing is mainly applied in IoT scenarios and in environments with limited or unstable resources. It is interconnected with the TSDB on the server, which can issue rules directly to the edge and have data cleaned and computed there, integrating edge and cloud.

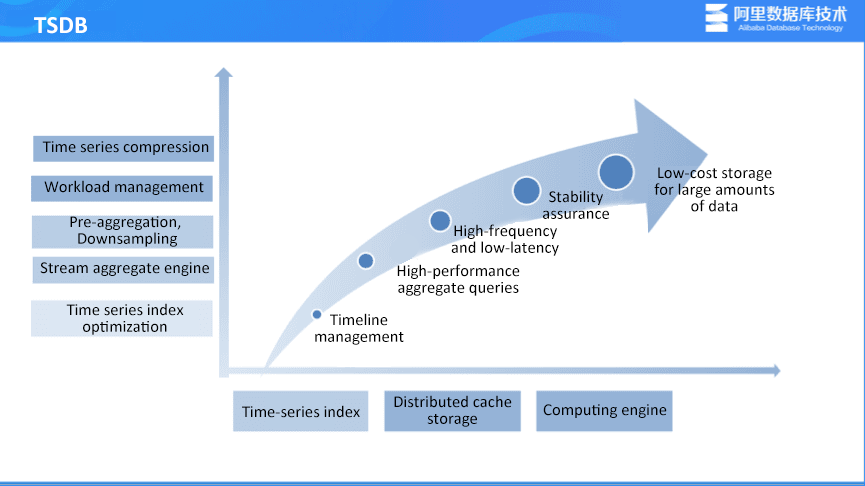

The architecture of the time series engine has many parts. At the bottom is the core time series engine of TSDB. The upper layer includes the computing engine, the TSQL engine, and the intelligent analysis engine. The core time series engine consists of several modules: time series indexing, stream data aggregation, the storage engine, and stability management. Time series indexing handles the querying and filtering of timelines. Stream data aggregation handles efficient aggregate analysis of large amounts of data. The storage engine provides solutions for storing large amounts of time series data. Stability management, a top priority at Alibaba, deals with keeping TSDB running stably over long periods, both in Alibaba Group and on the cloud. The computing engine is a time series stream computing engine independently developed by Alibaba, which provides pre-aggregation, downsampling, and continuous query. Continuous query is often used in alerting or complex event analysis scenarios. The TSQL engine is also developed by Alibaba and has a built-in distributed executor and optimizer. It extends time series analysis capabilities and lowers the barrier to entry for users. The intelligent analysis engine provides trained or generated model algorithm libraries and tailored industry solutions.

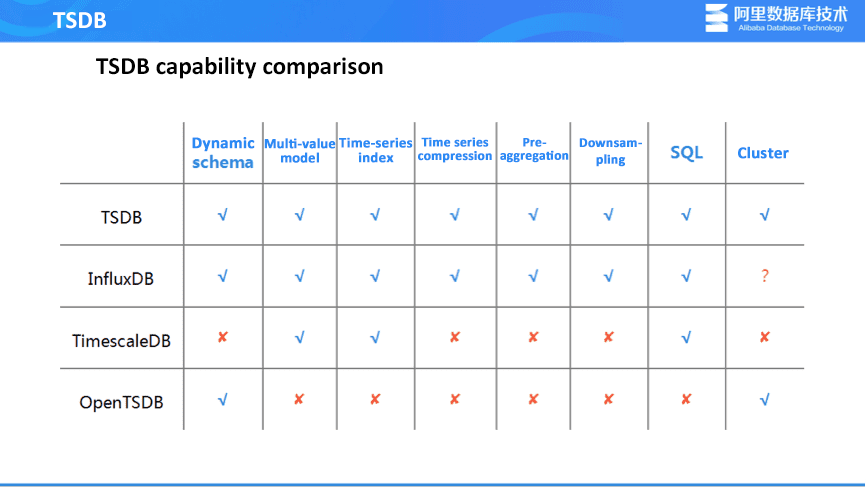

Support for dynamic schema. Almost all NoSQL databases support writing tags. However, TimescaleDB is based on the traditional relational database Postgres and therefore does not support dynamic schema.

Multi-value model. A multi-value model can significantly improve write speed in time series scenarios. For example, monitoring a wind farm usually involves two metrics: wind direction and wind speed. Writing the two metrics together is much better than writing one metric at a time, as a single-value model requires. In addition, a multi-value model is friendlier to upper-layer SQL models. For example, a single select query can analyze multiple values at once.

Time series indexing. OpenTSDB implements filtering on top of HBase and does not have time series indexes. By contrast, databases like TSDB and InfluxDB provide time series indexing to optimize query efficiency.

Pre-aggregation and downsampling. Since Facebook published Gorilla, time series compression has drawn a lot of attention. Compression can be tailored to the features of time series data. For example, if timestamps are consecutive and values are stable, time series compression using delta-of-delta or XOR encoding is much more efficient than general-purpose compression.

SQL capability. SQL interfaces can reduce the threshold for users to use the TSDB and allow users who are familiar with SQL to directly use time series models. SQL interfaces can also expand capabilities like time series analysis by utilizing the power of SQL.

Cluster capabilities. InfluxDB provides some high availability and cluster solutions. However, stable high availability solutions are provided in the paid version. Cluster capabilities in TimescaleDB are still being developed. OpenTSDB is based on the Hadoop ecosystem, so the scalability should not be a problem.

What support did TSDB provide for the Double 11 event in 2018? First, it provided a set of solutions that meet the requirements of different business sides: high-frequency, low-latency queries; high-performance aggregation; low-cost storage of large amounts of data; and management of a large number of timelines. In addition, at the functional level, the time series index was optimized and built on KV storage so that an unlimited number of timelines can be read and written. A distributed cache storage system, designed with Facebook Gorilla as the reference, supports high-frequency, low-latency queries. The last piece is the computing engine. Technically, we focus on time series index optimization, the stream aggregate engine, pre-aggregation and downsampling, workload management, and self-developed time series compression.

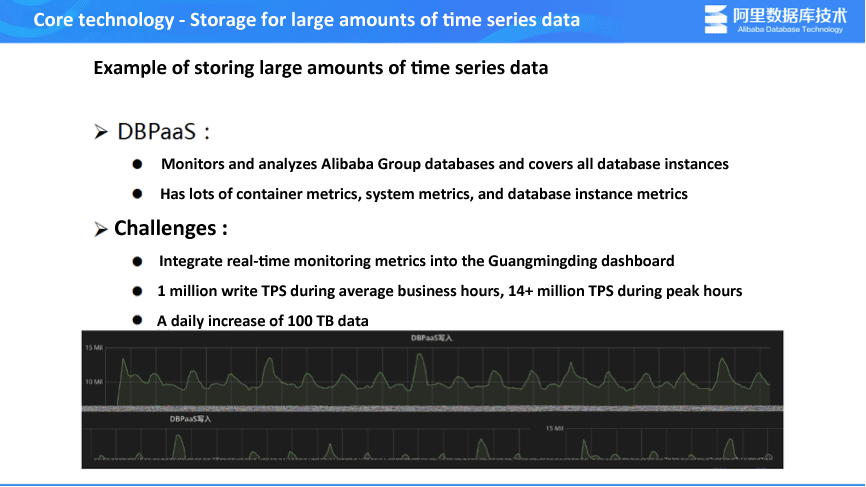

How can we implement low storage cost? The following figure shows the actual application scenario during the Double 11 event.

DBPaaS is used to monitor and analyze all database instances at Alibaba Group. This involves a variety of metrics for all underlying machines, containers, and operating systems as well as many metrics for upper-layer database instances. All these metrics are stored in one set of databases for unified query and analysis. DBPaaS also keeps the detailed database performance data from the Double 11 day of each year. DBPaaS brings great challenges. First, DBPaaS monitors all database instances, and these real-time metrics feed the dashboard in the Alibaba core operations center during each Double 11 event, so keeping the dashboard accurate, timely, and stable is a major challenge for TSDB. Another challenge is storing the data generated during Double 11 events, when the average write rate is 10 million TPS and the peak exceeds 14 million TPS. Hundreds of TB of data is generated each day, and storing that much data is a challenge in itself.

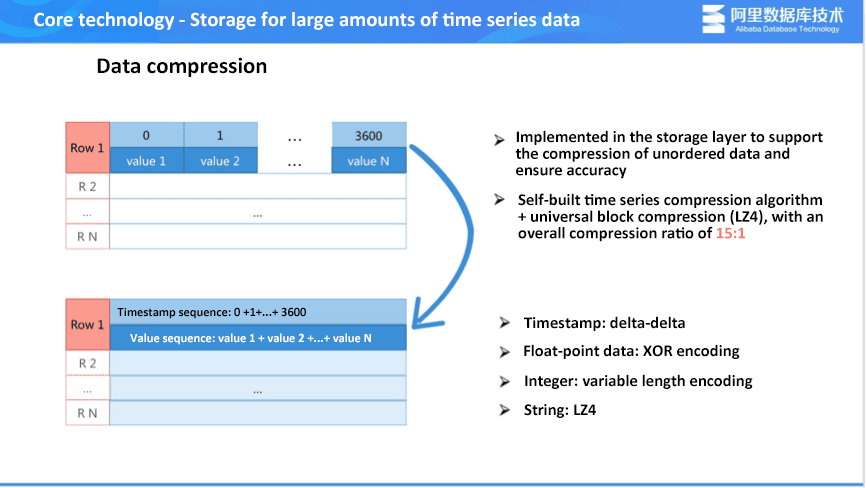

The left part of the following figure is a table, in which each row represents a time series. The table structure is the same as that of the OpenTSDB table. KV is usually designed like this due to the singularity of time series models.

Numbers from 0 to 3600 represent data of the past hour. Data is collected once per second, so each row has a total of 3,600 columns, each holding one value. In OpenTSDB, data is stored without being compressed. If data is collected once per millisecond, a row requires 3.6 million columns. The time window can be adjusted, but only within tight limits. After studying Facebook Gorilla, Alibaba introduced time series compression algorithms in the storage layer. All timestamps and all values in a row are merged into two large data blocks, and time series compression algorithms are applied to those blocks. General block compression is then applied in the underlying KV storage. The overall compression ratio can reach 15:1, a figure calculated from actual online business data. Specifically, delta-of-delta encoding is used for timestamps, XOR encoding for the float type, simple variable-length encoding for the integer type, and general-purpose compression for the string type. Note: Lossy compression algorithms are also supported, although they are not used at Alibaba due to some specific requirements.
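To make the two encodings concrete, here is a minimal Python sketch of delta-of-delta for timestamps and XOR for floats. It only shows the intermediate values that become small or zero; the bit-packing that Gorilla-style encoders apply afterwards is omitted, and the function names are illustrative rather than taken from TSDB.

```python
import struct


def encode_timestamps(timestamps):
    """Delta-of-delta encode a non-empty list of integer timestamps."""
    first = timestamps[0]
    deltas = [b - a for a, b in zip(timestamps, timestamps[1:])]
    first_delta = deltas[0] if deltas else 0
    # For regularly sampled data almost every delta-of-delta is 0.
    dods = [b - a for a, b in zip(deltas, deltas[1:])]
    return len(timestamps), first, first_delta, dods


def decode_timestamps(count, first, first_delta, dods):
    """Rebuild the original timestamps from the encoded form."""
    out = [first]
    if count < 2:
        return out
    delta = first_delta
    out.append(first + delta)
    for dod in dods:
        delta += dod
        out.append(out[-1] + delta)
    return out


def float_bits(value):
    """Reinterpret a float as its 64-bit IEEE 754 pattern."""
    return struct.unpack(">Q", struct.pack(">d", value))[0]


def xor_values(values):
    """XOR each value's bits with the previous value's bits."""
    bits = [float_bits(v) for v in values]
    # Repeated values XOR to 0, which an encoder can store in one bit.
    return [bits[0]] + [prev ^ cur for prev, cur in zip(bits, bits[1:])]


encoded = encode_timestamps([1000, 1001, 1002, 1003, 1005])
```

In this example `encoded[3]` is `[0, 0, 1]`: three small numbers stand in for the last three timestamps, which is why stable, regularly sampled series compress so well.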

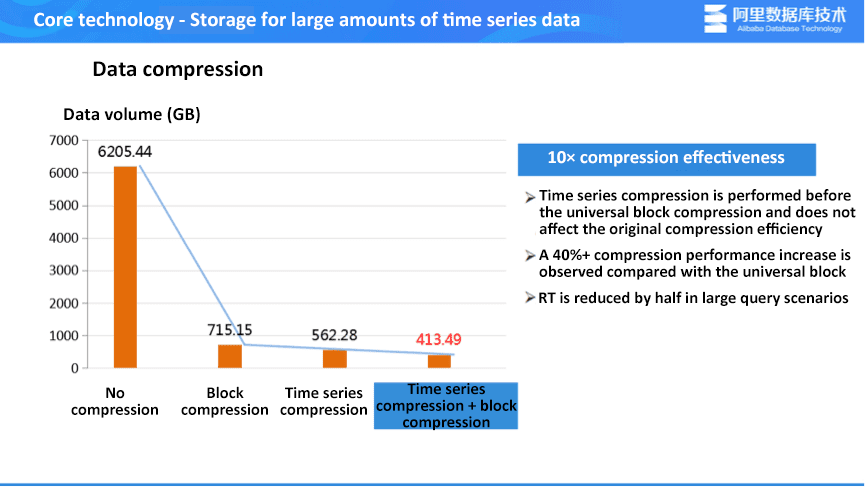

The following figure shows the data compression effectiveness in real business scenarios. A total of 6 TB of data is compressed to 715 GB by using the general block compression algorithm alone, which is roughly an 8× reduction in size.

With time series compression and block compression combined, the same data compresses to 413 GB, a compression ratio of around 15:1. Compared with block compression alone, the combination shrinks the stored data by roughly a further 40%, which matters greatly for storage cost. Another advantage is that block compression does not lose effectiveness: time series compression runs first, so the data handed to block compression is already very small. In large query scenarios, RT is reduced by half, because a scan over a long time range reads data that is already highly compressed and I/O efficiency is very high.
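The ratios can be checked from the three sizes quoted above. This short sketch assumes 1 TB = 1,024 GB; with decimal units the results shift only slightly.

```python
GB_PER_TB = 1024  # assumption: binary units

raw_gb = 6 * GB_PER_TB   # 6 TB of raw data
block_only_gb = 715      # after general block compression alone
combined_gb = 413        # after time series + block compression

block_ratio = raw_gb / block_only_gb            # about 8.6
combined_ratio = raw_gb / combined_gb           # about 14.9
extra_saving = 1 - combined_gb / block_only_gb  # about 0.42

print(f"block only: {block_ratio:.1f}:1")
print(f"combined:   {combined_ratio:.1f}:1")
print(f"extra saving over block only: {extra_saving:.0%}")
```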

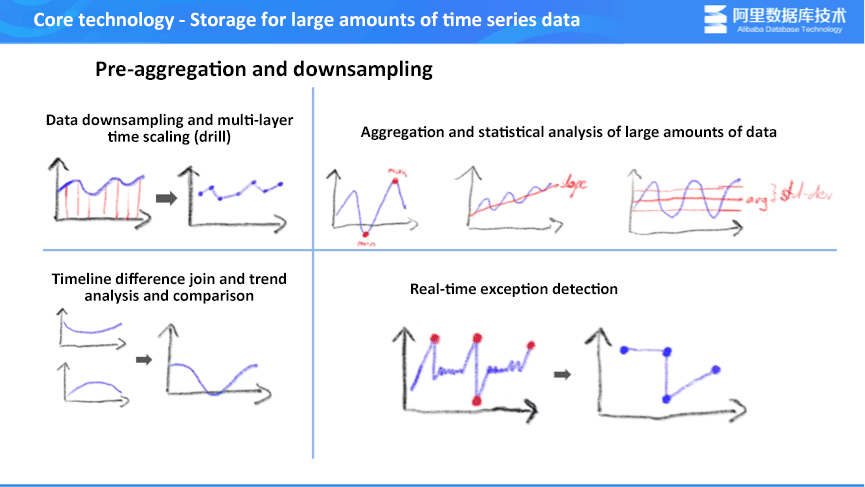

For example, we make our customer a report that features different time intervals and allows the customer to view detailed data by year, month, or week. This is a drill-down process. Directly using TSDB for aggregation right from detailed data will cause poor user experience and long response time.

Aggregation and statistical analysis of large amounts of data. For example, perform sum, min, and max operations or even P99/P95 statistics on the data of the last six months. These scenarios require a great deal of computation, and it is not feasible to aggregate directly from all the detailed data.

For example, count successful transactions, failed transactions, and total transactions, and calculate the success ratio. Because data collection is done systematically from the collection side to the display side, directly modifying collection sources on the collection side may reduce the flexibility. Therefore, we need an engine that supports the join operation of multiple timelines.

For example, provide accurate and real-time data in the maintenance dashboard and send customers exceptional data points to alert them.
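The success-ratio scenario above amounts to joining two timelines on their timestamps. A minimal Python sketch follows; the dict-based representation and the `success_ratio` name are assumptions for illustration, not the engine's actual interface.

```python
def success_ratio(success, failed):
    """Join two timelines (dict: timestamp -> count) on shared timestamps.

    Returns dict: timestamp -> success / (success + failed).
    Timestamps present in only one timeline are skipped (an inner join).
    """
    ratios = {}
    for ts in sorted(success.keys() & failed.keys()):
        total = success[ts] + failed[ts]
        # Avoid dividing by zero when no transactions happened.
        ratios[ts] = success[ts] / total if total else None
    return ratios


success = {0: 90, 60: 50, 120: 10}
failed = {0: 10, 60: 50}
ratios = success_ratio(success, failed)  # {0: 0.9, 60: 0.5}
```

Doing the join in the engine keeps the collection side unchanged: the two counters are still collected separately, and the derived ratio is computed where the data is queried.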

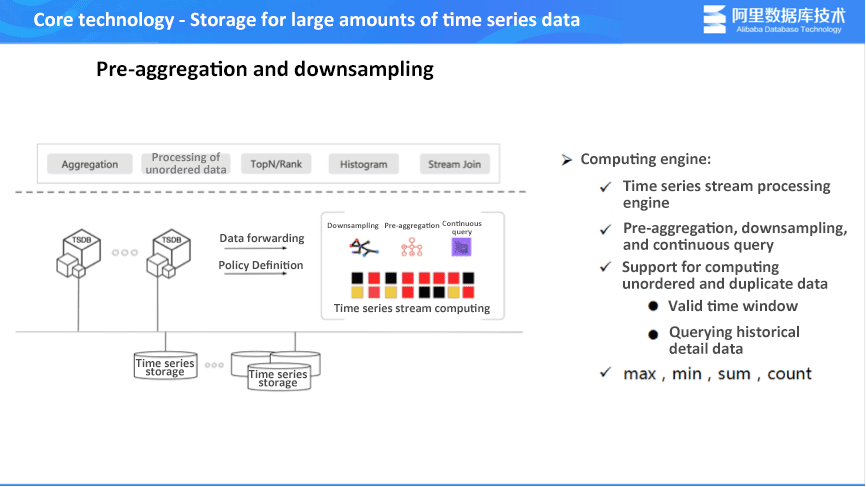

The solution shown in the following figure includes both the computing engine and the storage engine. Alibaba has developed its own time series stream computing engine. TSDB needs to serve public cloud and private cloud customers on Alibaba Cloud as well as internal customers of Alibaba Group, and resource deployment has different limits in each of these scenarios. Considering this, we designed a semantic framework for the time series stream computing engine. Flink, Spark, and in-JVM memory are supported as the underlying runtime, so both large-scale and small-scale computations can be implemented.

Based on this, the time series engines provide the capability of continuous query. We can directly query and analyze time series data in data streams for alerting and analysis of complex events. Time series stream computing engines are interconnected with TSDB. After a user writes data into TSDB, partial data will be forwarded to the stream computing engines. Users can interact with TSDB and make proper decisions to determine which data needs downsampling and which data needs pre-aggregation.

In addition, users can set alerting rules and distribute them to TSDB. TSDB sends the rules to the time series stream computing engine, where they run as continuous queries to implement alerts. TSDB writes detailed data, and the stream computing engine writes summary data (namely, the data after downsampling and pre-aggregation). When querying TSDB, users can query the pre-aggregated data. The upper layer supports many aggregation operators, such as min, max, sum, count, P99, P95, and median. Joining multiple timelines and writing unordered or duplicate data are also supported.
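As a rough illustration of what downsampling with pre-aggregation produces, the sketch below groups raw points into fixed windows and stores a few aggregates per window. The five-minute default and the function name are assumptions; the real engine runs this as a stream computation rather than over an in-memory list.

```python
from collections import defaultdict


def downsample(points, interval_s=300):
    """Group (timestamp, value) points into fixed windows and pre-aggregate.

    Points may arrive out of order; each one is placed by its own timestamp.
    """
    buckets = defaultdict(list)
    for ts, value in points:
        buckets[ts - ts % interval_s].append(value)
    return {
        start: {
            "min": min(values),
            "max": max(values),
            "sum": sum(values),
            "count": len(values),
        }
        for start, values in sorted(buckets.items())
    }


points = [(299, 2.0), (0, 1.0), (300, 10.0), (120, 3.0)]
summary = downsample(points)
```

Storing `sum` and `count` rather than a precomputed average lets later queries merge adjacent windows into coarser ones without going back to the detailed data.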

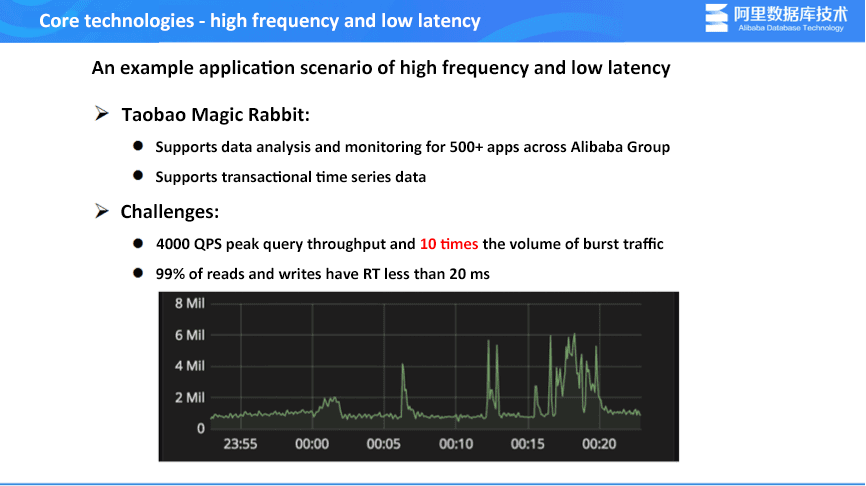

As a Mobile APM application, Taobao Magic Rabbit supports data analysis and monitoring for 500+ mobile apps in Alibaba. Mobile APM is characterized by the relevancy between read/write throughput and user interaction. Mobile APM data is transactional time series data. For example, when a promo event is announced during the Double 11 promo, lots of users accessing a specific feature at the same time will generate huge amounts of burst traffic.

During Double 11 events, the query peak monitored by Taobao Magic Rabbit reaches 4,000 QPS. The following figure shows write traffic rising from an average of 600,000 TPS to a peak of 6,000,000 TPS (a tenfold increase). In addition, real-time policies and decisions that the business side makes based on TSDB directly affect the user experience of mobile applications. Therefore, the business side requires the RT in TSDB to be less than 20 ms. In these scenarios, traditional TSDBs like OpenTSDB have overly long I/O paths when querying HBase, and a lot of jitter and latency spikes occur during queries. In this situation, it is very difficult for OLAP systems to keep the RT of 99% of read and write operations below 20 ms.

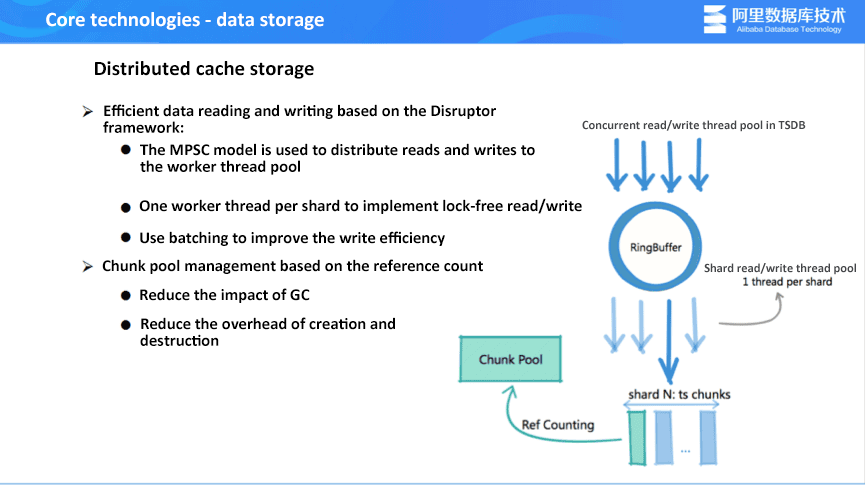

To support high-frequency and low-latency queries, we designed a Java-based distributed cache solution after studying Facebook Gorilla. Clusters implement sharding and capacity adjustment based on ZooKeeper to support dynamic scaling. Throughout the Double 11 event, this solution has supported 10+ million TPS (a tenfold traffic increase). The key to this solution is the design of the TSMem node. TSMem needs to solve two challenges: the first is how to achieve high throughput; the second is how to keep the RT of 99% of queries below 20 ms to achieve low latency.

TSMem stages users' concurrent read/write requests in a RingBuffer based on the Disruptor framework. TSMem adopts the "multiple producers and one consumer" mode. After the consumer takes a user request off the buffer, the request is assigned to the corresponding worker thread. Each thread in the worker thread pool corresponds to one time series cache shard, so this is effectively memory sharding built on Disruptor. Because one worker thread owns one shard, there is no contention for resources such as locks.

Also note that read requests and write requests for a shard travel the same path and are processed by the same worker thread, which enables lock-free reads and writes. With the batching feature of RingBuffer, single writes or small write batches can be accumulated into one large batch, and the worker thread can then apply it in one batch operation, which significantly improves throughput. Another issue is how to keep memory management efficient and RT free of spikes. First, we need to reduce the influence of JVM GC: all time series data is placed in time series blocks, and the blocks are managed in a chunk pool with reference counting. This avoids creating too many temporary objects, so GC behavior stays relatively stable. It also reduces the number of data blocks that must be created and the associated overhead, and it avoids the latency jitter caused by an unexpected request for a large new time series block in extreme conditions.
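The "one worker thread per shard" idea can be sketched in a few lines of Python. This is only an illustration under loud assumptions: a `queue.Queue` per shard stands in for the Disruptor RingBuffer, producers route requests directly instead of going through a single dispatching consumer, and the class and method names are invented for the example. TSMem itself is Java-based.

```python
import queue
import threading
import zlib


class ShardedCache:
    """Each shard is owned by exactly one worker thread, so the shard's
    data structure needs no lock of its own."""

    def __init__(self, num_shards=4):
        self.queues = [queue.Queue() for _ in range(num_shards)]
        self.shards = [{} for _ in range(num_shards)]
        for i in range(num_shards):
            threading.Thread(target=self._run, args=(i,), daemon=True).start()

    def _shard_of(self, series_key):
        # Stable hash so one series always lands on the same shard.
        return zlib.crc32(series_key.encode()) % len(self.queues)

    def write(self, series_key, ts, value):
        self.queues[self._shard_of(series_key)].put(("write", series_key, ts, value))

    def read(self, series_key):
        # Reads travel through the same queue as writes, so they are
        # ordered after every write that was enqueued before them.
        reply = queue.Queue(maxsize=1)
        self.queues[self._shard_of(series_key)].put(("read", series_key, reply))
        return reply.get()

    def _run(self, index):
        requests, shard = self.queues[index], self.shards[index]
        while True:
            batch = [requests.get()]  # block until there is work
            while True:               # then drain whatever else is queued
                try:
                    batch.append(requests.get_nowait())
                except queue.Empty:
                    break
            for op in batch:          # apply the whole batch in one pass
                if op[0] == "write":
                    _, key, ts, value = op
                    shard.setdefault(key, []).append((ts, value))
                else:
                    _, key, reply = op
                    reply.put(list(shard.get(key, [])))


cache = ShardedCache()
cache.write("cpu.host1", 1, 0.5)
cache.write("cpu.host1", 2, 0.7)
latest = cache.read("cpu.host1")
```

The drain loop plays the role of RingBuffer batching: many small writes that arrive close together are applied by the worker in one pass.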

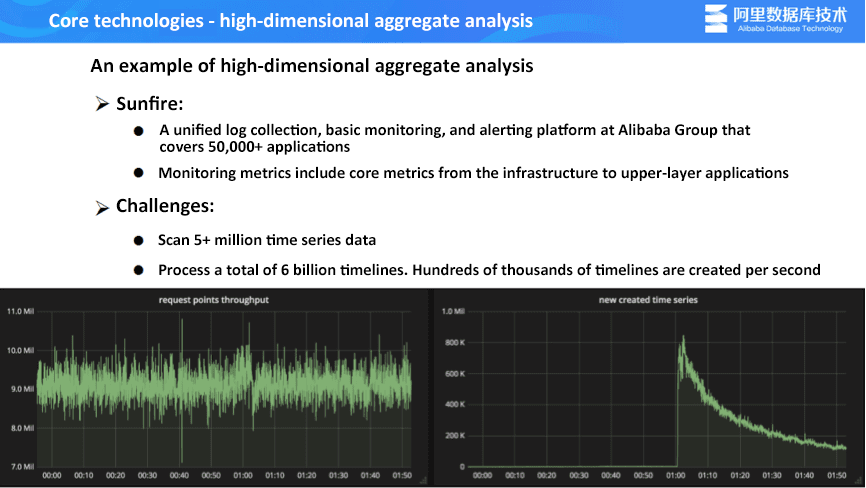

Sunfire is a unified log collection, basic monitoring, and alerting platform provided by Alibaba. The entire platform covers 50,000+ applications across Alibaba Group. Monitoring metrics span from the infrastructure to the upper-layer applications, including IaaS, PaaS, SaaS, and wireless applications. In these scenarios, the main challenge is that large amounts of time series data need to be scanned per second due to the vast coverage of applications, the business complexity, and the large data volume. For example, a service may be scaled out in advance of the Double 11 promo.

Adding a batch of new machines is equivalent to creating a batch of new time series, and hundreds of thousands of timelines can be created per second. The following figure shows the entire consumption process after a new batch of machines was added in one day. The cumulative number of timelines during the Double 11 promo is 6 billion and continues to grow. How can we meet the requirement of scanning 5 million time points per second?

If we perform aggregate operations in the memory of OpenTSDB and respond directly to user queries, we have to load all time points into memory, which causes great instability. Another problem is the speed of creating timelines. Because OpenTSDB is based on UID tables, atomic operations on the dictionary table become a performance bottleneck (roughly 200,000 TPS), which hinders business development.

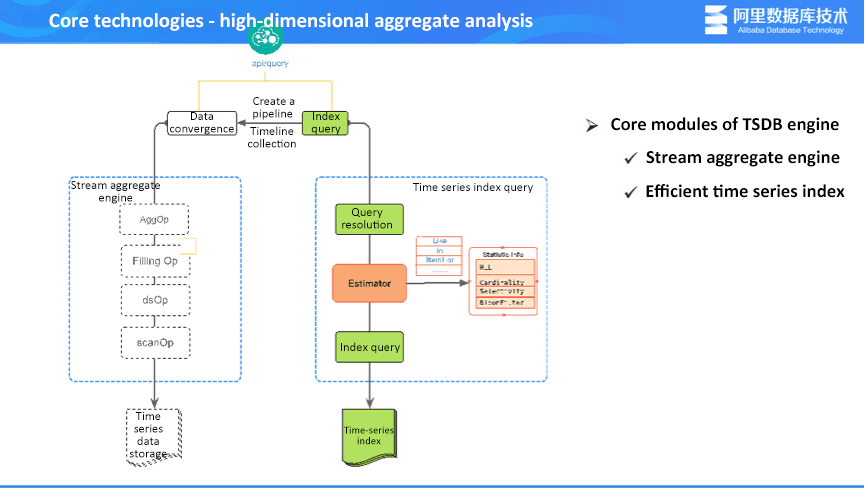

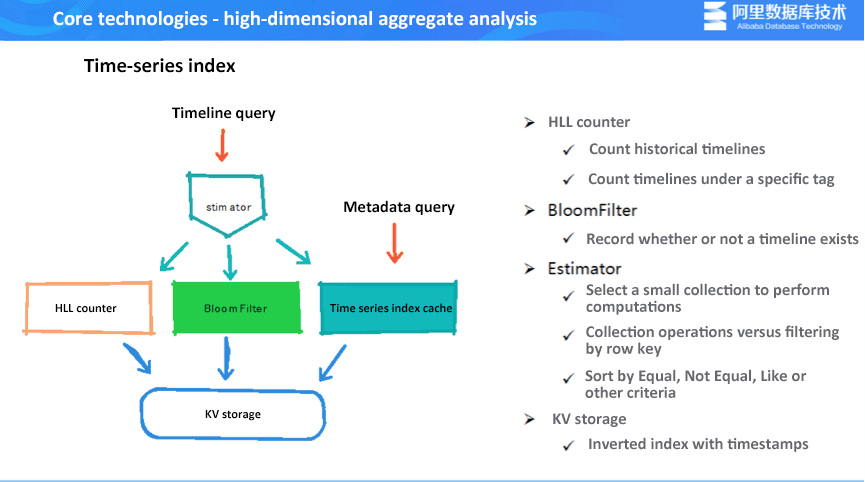

High-dimensional aggregate analysis involves two internal modules of TSDB: the stream aggregate engine and the time series index. The time series index consists of two parts: the index and the index estimator. The following figure shows the whole query process for time series indexes. First, the query is parsed. Then the estimator is used to optimize the query. The actual lookup is performed by the time series index, which returns the matching timelines. The stream aggregate engine then extracts from the underlying time series storage all the data of those timelines that falls in the queried time range. Finally, the data is downsampled and aggregated before the result is returned to users.

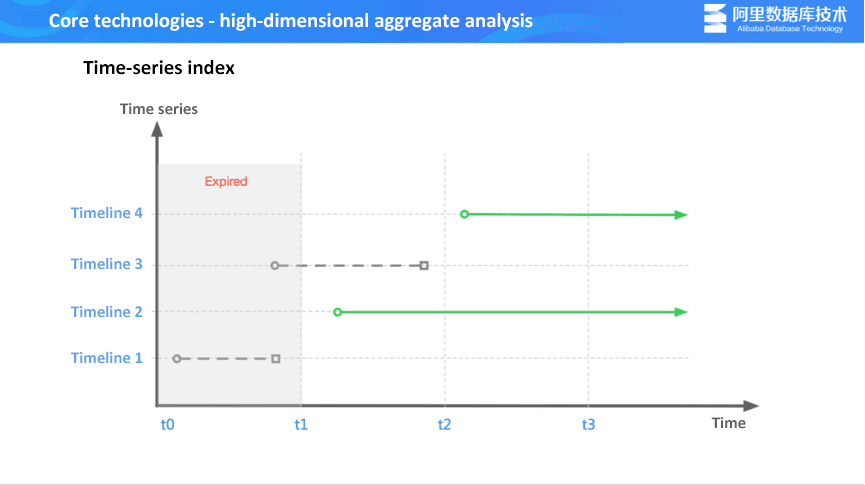

The following figure shows the lifecycle of timelines. In different phases, some timelines may expire, and some new timelines may be created. When users query time series data between t2 and t3, they do not want to query timelines that expired between t0 and t2. Each extra timeline means that the stream aggregate engine has to perform one more I/O operation when it extracts data points from the bottom-layer storage. InfluxDB versions earlier than 1.3 keep the timeline index entirely in memory, and that index does not filter by time range: when users query data between t2 and t3, all timelines are returned. The index in InfluxDB 1.3 and later is file-based and can directly return only the two relevant timelines, leading to a significant performance improvement.
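The effect of time-range filtering on timelines can be shown with a small Python sketch. The `Timeline` record and its `first_seen`/`last_seen` fields are hypothetical; they simply model a timeline's lifecycle as an interval.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Timeline:
    key: str
    first_seen: int  # time of the first data point
    last_seen: int   # time of the last data point


def active_between(timelines, start, end):
    """Keep only timelines whose lifetime overlaps [start, end]."""
    return [t for t in timelines if t.first_seen <= end and t.last_seen >= start]


timelines = [
    Timeline("expired-early", 0, 15),
    Timeline("long-lived", 10, 35),
    Timeline("created-late", 25, 40),
]
# Query the range 20..30: the expired timeline is skipped entirely.
hits = [t.key for t in active_between(timelines, 20, 30)]
```

Every timeline dropped here saves the aggregate engine one read against the storage layer.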

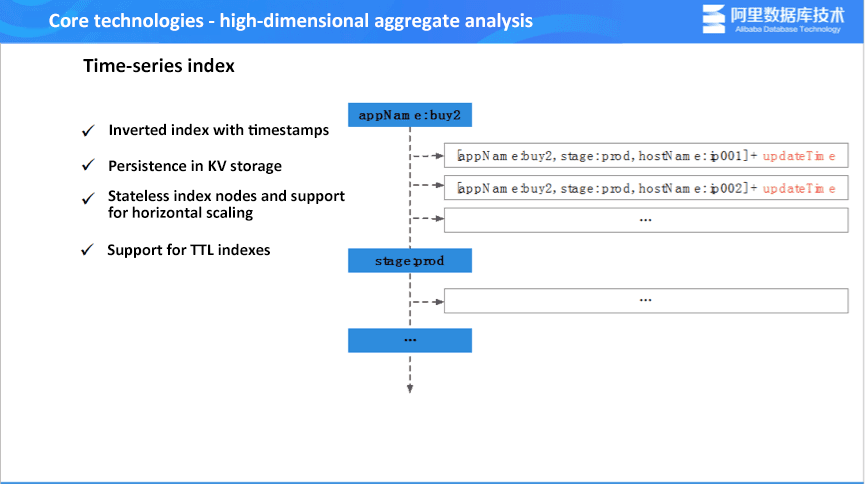

Like InfluxDB, our TSDB implements time series indexing, in our case on top of KV storage (essentially an inverted index). One feature of this index is that the inverted index also covers timestamps. As a result, an index query can first filter target timelines by tags and values and then filter again by the time dimension, which improves filtering effectiveness. In addition, because the inverted indexes are persisted in the KV storage, index nodes can run in a stateless manner, and horizontal scaling and timeline TTL are supported.
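A minimal sketch of an inverted index that also carries a time dimension might look like the following. The hourly bucketing and the `(bucket, tag key, tag value)` key layout are assumptions chosen for illustration; the source does not describe the actual key format stored in KV.

```python
from collections import defaultdict


class TimeBucketedIndex:
    """Inverted index keyed by (time bucket, tag key, tag value)."""

    def __init__(self, bucket_s=3600):
        self.bucket_s = bucket_s
        self.postings = defaultdict(set)  # key -> set of timeline ids

    def add(self, timeline_id, tags, ts):
        bucket = ts // self.bucket_s
        for tag_key, tag_value in tags.items():
            self.postings[(bucket, tag_key, tag_value)].add(timeline_id)

    def query(self, filters, start, end):
        """Timelines matching every tag filter in any bucket of [start, end]."""
        result = set()
        for bucket in range(start // self.bucket_s, end // self.bucket_s + 1):
            sets = [
                self.postings.get((bucket, k, v), set())
                for k, v in filters.items()
            ]
            if sets:
                result |= set.intersection(*sets)
        return result


index = TimeBucketedIndex()
index.add("t1", {"dc": "hz", "host": "a"}, ts=100)
index.add("t2", {"dc": "hz", "host": "b"}, ts=100)
index.add("t3", {"dc": "hz", "host": "a"}, ts=7300)
first_hour = index.query({"dc": "hz", "host": "a"}, 0, 3599)
```

Because every entry is an independent key, a timeline's index entries can expire bucket by bucket, which is one way timeline TTL could fall out of such a layout.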

The following figure shows the estimator for the time series index. The estimator's job is to make index lookups more efficient when a user performs queries. Data in the estimator comes from three sources: HyperLogLog counters, which estimate, for example, how many timelines a tag in an inverted index or the entire inverted index has; a BloomFilter, which records whether or not a specific timeline exists; and the in-memory time series index cache. During the query process, the estimator first operates on the relatively small collections in the inverted index and sorts operations and query conditions by priority.

If a user combines an equality query with fuzzy matching, we first perform the equality query, which is more precise, and compare collection sizes at the same time. For example, if a user provides two conditions, one on the data center dimension and one on the IP dimension, the estimator first queries by the IP dimension, because a specific IP value matches far fewer timelines than a data center value and therefore filters more effectively. Although BloomFilter and HLL provide only approximate statistics, they can still significantly improve query efficiency when the collections corresponding to the two query criteria differ by orders of magnitude. In addition, if the BloomFilter indicates that no matching timeline exists, the entire query stops immediately.
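The ordering logic can be sketched as follows. To keep the example short and runnable, exact set sizes stand in for HyperLogLog estimates and a plain emptiness check stands in for the BloomFilter; both substitutions are assumptions, as is the premise that a specific IP value matches fewer timelines than a data center value.

```python
def plan_and_run(postings, conditions):
    """Order equality conditions by estimated cardinality, then intersect.

    postings: dict (tag_key, tag_value) -> set of timeline ids
    conditions: list of (tag_key, tag_value) pairs
    Returns (evaluation_order, matching_timeline_ids).
    """
    # Stand-in for HyperLogLog: exact sizes instead of estimates.
    estimated = {c: len(postings.get(c, ())) for c in conditions}

    # Stand-in for the BloomFilter short circuit: if any condition
    # matches nothing, stop before touching the index at all.
    if any(size == 0 for size in estimated.values()):
        return [], set()

    # Smallest collection first, so later intersections stay cheap.
    order = sorted(conditions, key=lambda c: estimated[c])
    result = set(postings[order[0]])
    for condition in order[1:]:
        result &= postings[condition]
        if not result:
            break
    return order, result


postings = {
    ("dc", "hz"): {1, 2, 3, 4},
    ("ip", "10.0.0.1"): {2},
}
order, matches = plan_and_run(postings, [("dc", "hz"), ("ip", "10.0.0.1")])
```

Here the IP condition is evaluated first even though the user listed it second, and `matches` is `{2}`.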

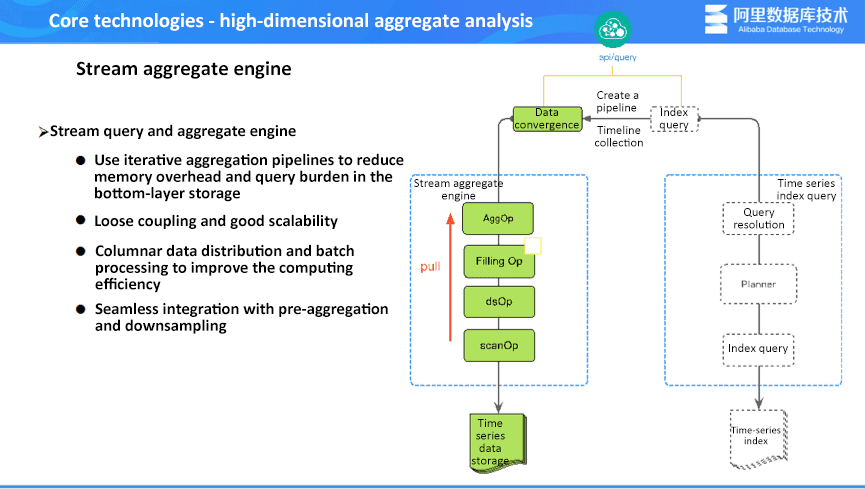

The pre-aggregation and downsampling described earlier are performed before data is written into TSDB. The stream aggregate engine, by contrast, is the aggregation and computing engine that runs inside the TSDB process when users query data. It is designed as a pipeline made up of distinct steps. It provides 10+ core aggregation operators, 20+ filling policies, and 10+ interpolation algorithms. Complex user queries are converted into combinations of simple operators, and the conversion preserves the accuracy of the overall query results.

As mentioned before, timelines queried by the time series index will be sent to the stream aggregate engine, and specific time series data will be extracted from the time series data storage. Operations like downsampling, completions, and aggregates will be performed based on users' query criteria. The whole pipeline does not only include operators listed previously. Operators like topN, limit, and interpolation are also included. The design of loose coupling enables good scalability.

In addition, the whole pipeline extracts data in small batches from the database and sends the extracted data to operators in the stream engine. Essentially, this is a volcano model. The small data batches are held in memory in a columnar layout, so each operator performs well. Additionally, the in-memory stream aggregate engine can seamlessly reuse the pre-aggregation and downsampling results that were written into TSDB. Assume that data at a granularity of five minutes is calculated in advance and written into TSDB by downsampling. If a user happens to run a query downsampled at five-minute granularity, the stream aggregate engine does not need to fetch detailed data from the time series database and compute on it. Instead, it can read the five-minute data directly and continue the calculation from there. TSDB and the computing engine are interconnected.
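A pull-based pipeline of this kind maps naturally onto Python generators. The sketch below is an illustration only: the operator names, the batch size, and the assumption that points arrive in time order are mine, and it uses row tuples rather than the columnar batches the engine holds in memory.

```python
def scan(points, batch_size=2):
    """Source operator: yield stored points in small batches."""
    for i in range(0, len(points), batch_size):
        yield points[i:i + batch_size]


def downsample_avg(batches, interval_s):
    """Streaming operator: emit (window_start, average) as each window closes.

    Assumes points arrive in time order, so only one window is kept in memory.
    """
    current, total, count = None, 0.0, 0
    for batch in batches:
        for ts, value in batch:
            window = ts - ts % interval_s
            if current is not None and window != current:
                yield current, total / count
                total, count = 0.0, 0
            current = window
            total += value
            count += 1
    if count:
        yield current, total / count


def top_n(rows, n):
    """Terminal operator: the n rows with the largest values."""
    return sorted(rows, key=lambda row: row[1], reverse=True)[:n]


points = [(0, 1.0), (60, 3.0), (300, 10.0), (360, 20.0), (600, 5.0)]
averages = list(downsample_avg(scan(points), 300))
busiest = top_n(downsample_avg(scan(points), 300), 1)
```

Each operator pulls from the one before it, so new operators can be added without changing existing ones, and memory use is bounded by the batch size rather than by the size of the scan.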

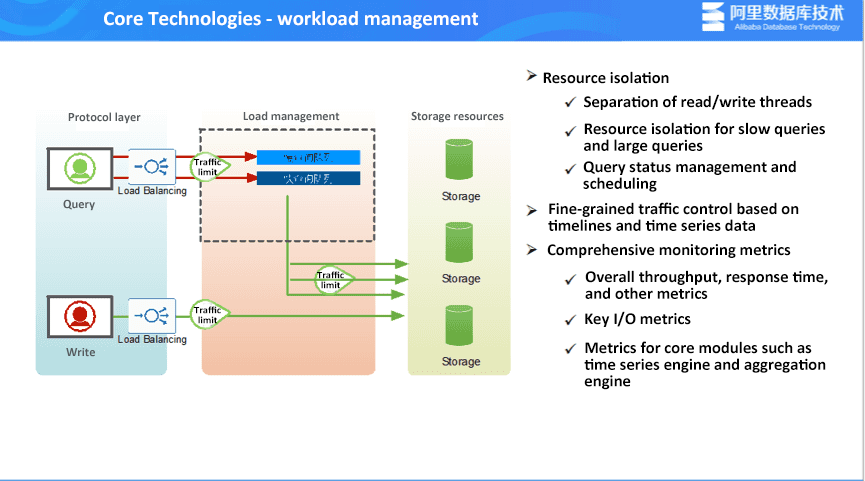

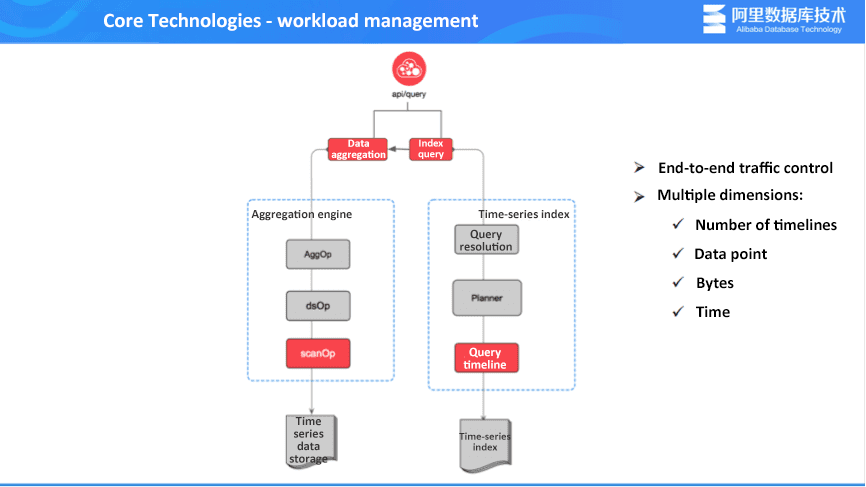

Because TSDB is designed to provide internal support for Alibaba Group and to serve both private and public cloud customers, stability is very important. TSDB ensures stability and security by using the following three methods. The first is resource isolation. The second is fine-grained traffic control based on timelines and time series data. The last is comprehensive metric monitoring.

Resource isolation. Separating the read and write threads ensures that writes are not affected when the query path encounters failures and that queries are not affected when the write path encounters failures. Resource isolation also applies to slow queries and large queries. A fingerprint is calculated from a user's query criteria. The fingerprint and query history records are used to determine whether a query is a slow or large query. If it is, TSDB puts the query directly into a separate isolation queue, which has limited resources. If the query is a normal query, TSDB puts it in a queue that has more resources, which speeds up queries overall to a certain extent.
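The fingerprint-and-history routing can be sketched as below. Everything specific here is an assumption made for the example: the SHA-256 fingerprint over a canonical JSON form, the 1,000 ms threshold, the use of the mean of past run times, and the `QueryRouter` name.

```python
import hashlib
import json
from collections import defaultdict

SLOW_MS = 1000  # hypothetical threshold for treating a query as slow


def fingerprint(query):
    """Hash a canonical form of the query so equal criteria collide."""
    canonical = json.dumps(query, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()[:16]


class QueryRouter:
    def __init__(self):
        self.history = defaultdict(list)  # fingerprint -> past run times (ms)

    def record(self, query, elapsed_ms):
        self.history[fingerprint(query)].append(elapsed_ms)

    def route(self, query):
        """Return the name of the queue this query should run in."""
        past = self.history.get(fingerprint(query))
        if past and sum(past) / len(past) >= SLOW_MS:
            return "isolated"  # limited resources, cannot starve others
        return "normal"


router = QueryRouter()
heavy = {"metric": "cpu", "tags": {"dc": "hz"}, "range": "180d"}
light = {"metric": "cpu", "tags": {"dc": "hz"}, "range": "5m"}
router.record(heavy, 4200)
router.record(light, 12)
```

After these two observations, `router.route(heavy)` returns `"isolated"` while `router.route(light)` returns `"normal"`. A query with no history is treated as normal here; a stricter policy is equally plausible.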

Comprehensive metric monitoring. Monitored metrics include the overall TSDB throughput, response time, and key I/O metrics as well as core metrics in core modules such as the time series index module and the stream aggregate module. By monitoring metrics, we can clearly know what happens inside TSDB and quickly locate problems.

Fine-grained traffic control based on timelines and time series data. The purpose of end-to-end traffic control is to protect the engine from degrading or crashing when burst traffic occurs or the user workload suddenly increases. First, TSDB controls resources for I/O threads at the read/write entry. TSDB also controls traffic at the entries of the two core modules and at the I/O exit. In addition, many traffic control dimensions are provided. For example, you can apply traffic control to the number of timelines. Too many timelines may indicate that the business time series model has design defects, which calls for operations like pre-aggregation to reduce the number of timelines that a query covers. Other dimensions include the number of time series data points covered in a query, throughput (I/O), and query time consumption.
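Two of these dimensions, timelines per query and data points per query, can be illustrated with a simple admission check. The limit values and names below are hypothetical and are not TSDB defaults.

```python
from dataclasses import dataclass


@dataclass
class QueryLimits:
    max_timelines: int = 100_000    # hypothetical limit
    max_points: int = 50_000_000    # hypothetical limit


def admit(limits, timelines, points_per_timeline):
    """Decide whether a query may run, and say why if it may not."""
    if timelines > limits.max_timelines:
        return False, "too many timelines: consider pre-aggregation"
    if timelines * points_per_timeline > limits.max_points:
        return False, "too many data points: narrow the range or downsample"
    return True, "ok"


limits = QueryLimits()
small = admit(limits, timelines=200, points_per_timeline=3_600)
wide = admit(limits, timelines=2_000_000, points_per_timeline=60)
deep = admit(limits, timelines=50_000, points_per_timeline=86_400)
```

Rejecting a query before it reaches storage is what keeps one oversized request from consuming the I/O budget shared by everyone else.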

The scenarios above are ones that TSDB addressed in 2018 or is currently working on. Now, let's look at future TSDB development trends and the features expected of TSDB. First, it is necessary to implement heterogeneous storage of cold and hot data, which aims to reduce the cost for users. Because more and more importance is attached to the value of data, keeping users' detailed data for a long time is the preferred option. This requires a solution for long-term storage of detailed data, for example, connecting to Alibaba Cloud OSS.

Second, we need to implement serverless read/write capability. Serverless reading and writing allows TSDB to provide universal analysis and computing, which covers short-term high-frequency and low-latency queries, long-term high-dimensional aggregate analysis of the kind done in OLAP systems, and analysis of historical detailed data or data from a past period of time. Serverless determines how historical or cold data is analyzed, and it can reduce users' costs by lowering the cost of computing, querying, and writing.

Third, we need to embrace the time series ecosystem. For example, we can learn from the system design of Prometheus or other commercial time series databases and monitoring solutions. We need to embrace the open source community and provide highly available and stable solutions or alternatives to make use of TSDB advantages like stream computing, time series index, computing engines or SQL engines. We want to provide our customers with better solutions.

Finally, we will further promote smart time series analysis and provide more stable and reliable models to solve specific issues for our customers.
