By Beixia, Alibaba Cloud Senior Technical Expert and Leader of Alibaba Cloud PolarDB for PostgreSQL Cloud-Native Database HTAP

Based on the architecture of separation of storage and computing in PolarDB, we have developed a shared storage-based MPP architecture. It has the capability of HTAP and supports two sets of execution engines for a set of TP data:

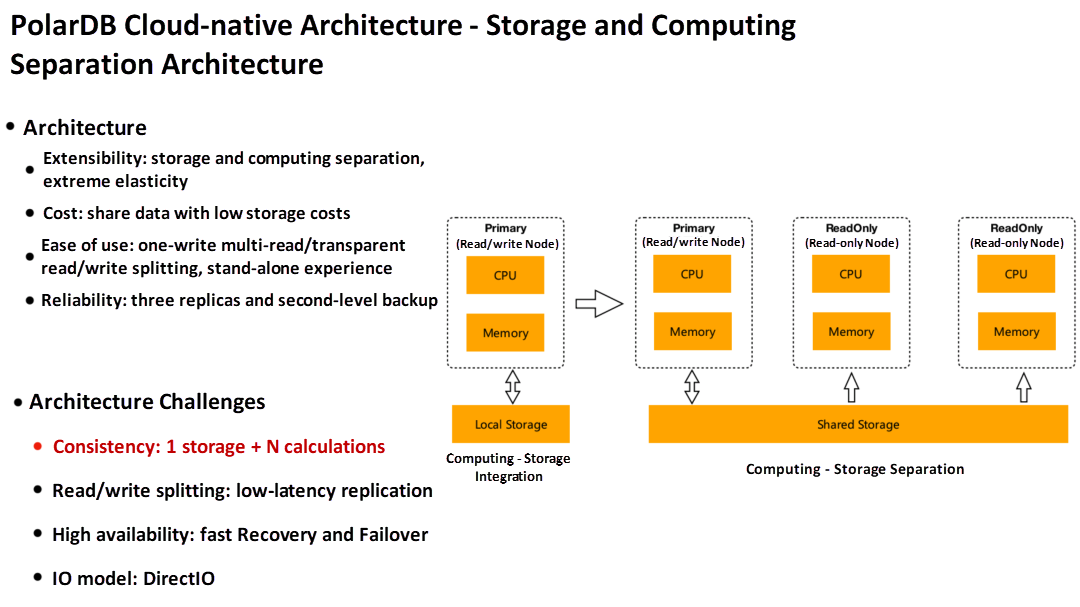

First, let's take a look at the architecture of PolarDB. As shown in the figure above, the left side is the integration of computing and storage. The storage of traditional databases is local. The right side is the PolarDB storage and computing separation architecture. The underlying layer is the shared storage, which can be attached to any number of compute nodes. Compute nodes are stateless and can be extended well. In addition, costs can be reduced. For example, users can expand to 16 nodes, but the underlying storage is still one copy (3 copies).

Distributed storage is a relatively mature storage solution. It has high availability and second-level backup of its storage, such as Ceph and PolarStorage, which are relatively mature storage solutions. Can PolarDB be considered PolarDB if a single PostgreSQL database runs directly on a shared storage device? The answer is that you can't do this directly. The fundamental reason is that there is storage under this architecture, but there are numerous compute nodes, and coordination is needed between compute nodes.

The first problem that needs to be solved in the architecture of separation of storage and computing is consistency, 1 storage + N computing. Second, read/write splitting makes low-latent replication on this architecture. Third, high availability refers to how to solve the problem of rapid recovery. Fourth, the IO model has changed. The distributed file system does not implement a cache, and the saved memory can be directly used by the BufferPool of the database.

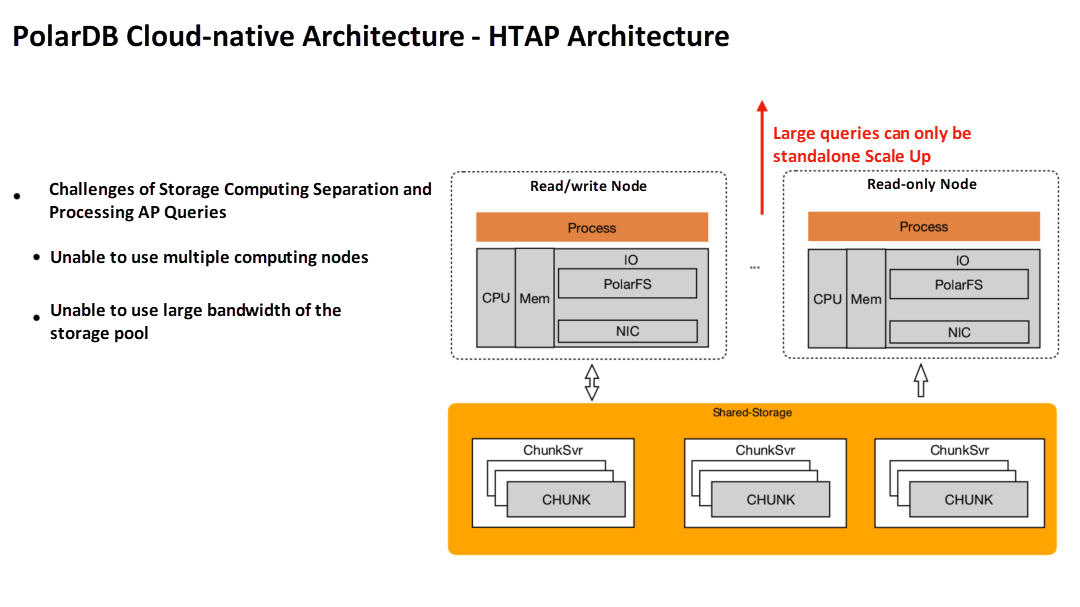

Under this architecture, if users need to run some analytical queries, here is an example. A telecom billing system handles user recharge and settlement of various points during the day. Requests like this will have UserID, and the modified page can be accurately located through the index. In the evening, some batch analyses are done, such as reconciliation. Statistics of the overall sales at the provinces and cities levels in different dimensions are done. The storage-computing separation architecture uses SQL read/write splitting to process large queries and dynamically loads SQL to nodes with lower loads.

When this node processes complex SQL, the PG database has the ability of a single machine in parallel. The parallel processing of complex SQL by a single machine is significantly improved compared with the serial of a single machine, but the memory and CPU still have certain limitations in a single machine in parallel. Processing complex SQL can only be accelerated by scaling up in this architecture. In other words, if SQL processing is found relatively slow, the CPU and the memory can only be increased, and a machine with a higher configuration to be a read-only node is needed. Moreover, a single node to process a complex SQL statement cannot take advantage of the large bandwidth of the entire storage pool.

Since the underlying layer of distributed storage has multiple disks, each disk can read and write. If compute nodes become a bottleneck, the capabilities of each disk in the underlying shared storage pool cannot be used. Another problem is that when only one node is used to process complex SQL, other nodes may be idle because the concurrency of AP is usually very low. It is possible that only a few nodes are running some fixed report SQL, while other nodes are in an idle state, and their CPU, memory, and network cannot be used.

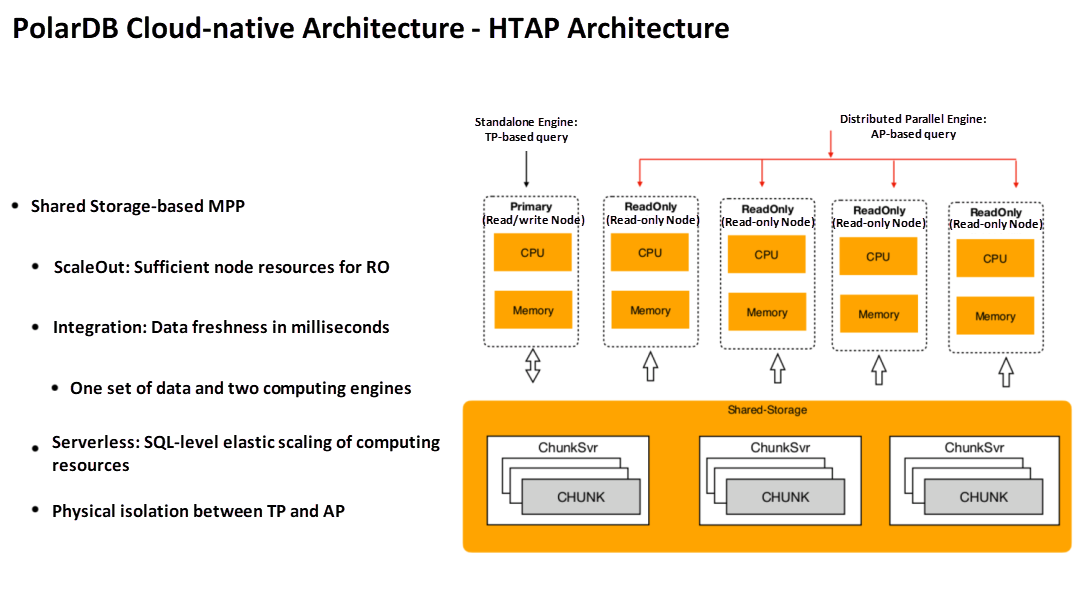

The solution of PolarDB is to connect multiple read-only nodes to implement a shared storage-based distributed parallel execution engine. The entire system can be used flexibly. For example, if some nodes are used to run TP query, the code path goes to standalone machine query. The advantage of a single-machine query is that it is faster to process point query writes. It does not involve distributed transactions, so a standalone can process them quickly. When you need to perform computing on complex SQL statements, you can use multiple read-only nodes to execute one SQL statement in parallel, which is the distributed parallel execution engine MPP scheme.

The MPP of Apsara PolarDB is essentially different from traditional databases (such as Greenplum). For example, Apsara PolarDB can quickly increase the number of read-only nodes if it finds that the computing power of the sub-nodes is insufficient at a certain time. In this case, the entire underlying shared storage data does not need to be redistributed. If you have used Greenplum traditional share nothing MPP, you will know that scale-out or contracting is a large O&M action.

PolarDB separates storage and computing. Compute nodes are stateless. You can quickly add nodes to make computing capabilities more powerful. Another advantage is that TP and AP can be physically isolated, ensuring that users will not affect AP, and AP will not affect TP when performing TP.

This solution has one set of data. For example, some traditional solutions support two sets. For example, the TP data is exported to another AP system. One copy of the data needs to be copied, and the delay in synchronizing process data is relatively large. Moreover, it is a waste of resources, such as running TP during the day and AP at night. In fact, only one of the two clusters is at work. PolarDB provides an integrated solution that supports two computing engines on the shared storage with one set of data. One is a standalone engine, and the other is a distributed parallel execution engine. The shared storage features and latency between read and write nodes can be achieved in milliseconds. Compared with the traditional system that leads TP data to AP, the data freshness can achieve a millisecond delay.

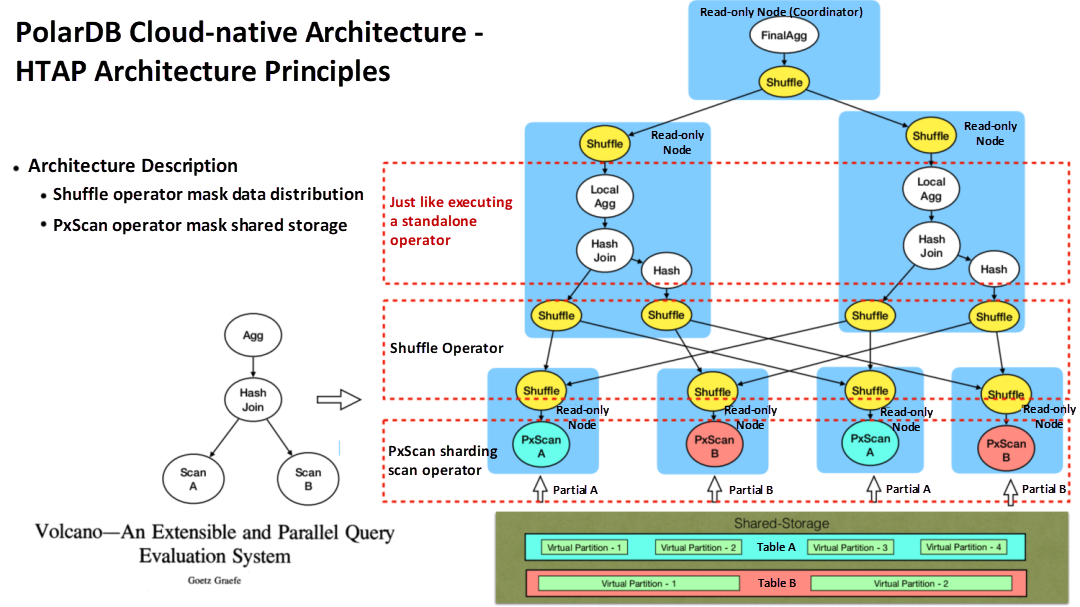

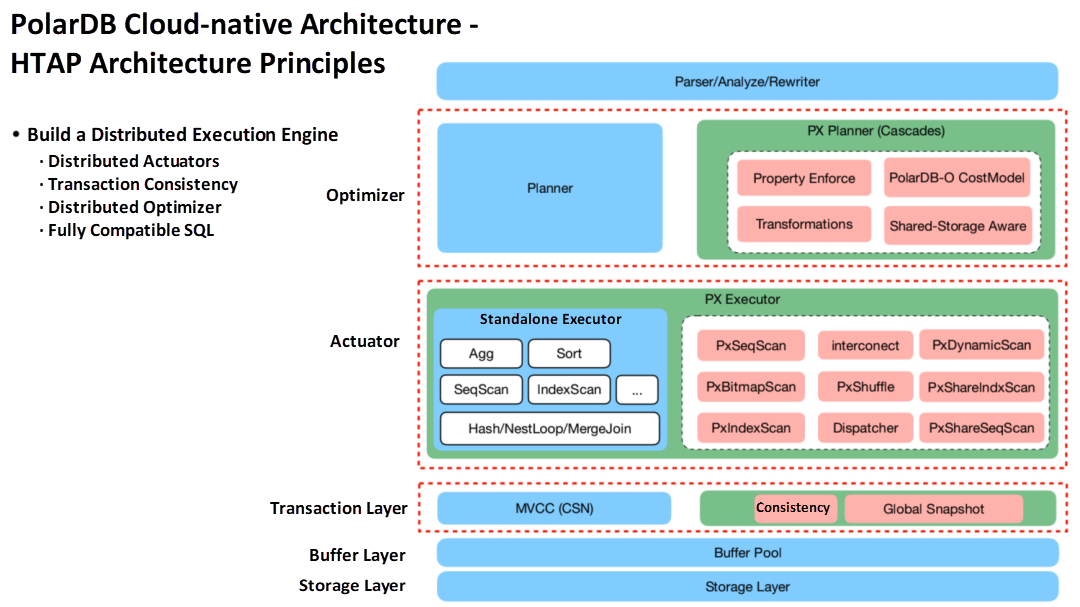

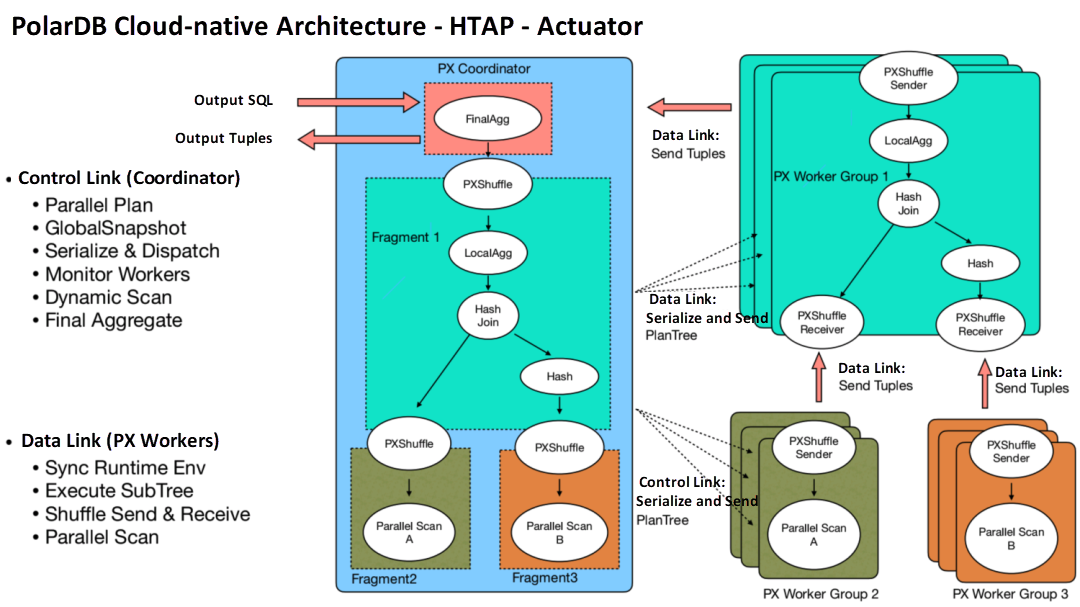

How can we implement a parallel database? The core idea is to introduce the Shuffle operator into the plan tree, through which the underlying data distribution characteristics can be shielded. It is exactly the working principle of MPP.

What will happen to the PolarDB-based shared storage? Since the underlying data is in a shared state, the plan tree joins B through A and performs connt(*) on the result. If Greenplum is directly used in parallel mode and a traditional MPP is directly implemented in PolarDB, two nodes execute AB join at the same time. Since A and B are shared for the two nodes, all data can be seen. The two nodes join A and B (respectively) and then perform statistical counting. The final counting is twice the real value. At the same time, the amount of data processed by A and B has not decreased, and the whole process has no acceleration effect.

Therefore, it is necessary to solve the problem of how to dynamically split any table. You need to parallelize parallel operators to parallelize all Scan operators and index Scan operators in the original PG database. Parallelization means that any table can be logically split according to some fixed strategies. After splitting, it is impossible to perceive that the bottom layer is shared storage for the upper operator of the entire plan number. Similar to using Shuffle operators to mask data distribution features, PolarDB uses a series of PXScan parallelization table scanning operators to mask the sharing features of underlying data. This is the principle of the HTAP architecture.

From the perspective of database modules, what needs to be done to implement MPP based on shared storage?

HTAP actuator is the general MPP practice, which is divided into control links and data links as a whole. There are two types of roles, PX Coordinator and PX Worker. The PX Coordinator executes the part of the optimizer, generates a distributed number of plans, and distributes the plans to slices. It may be distributed to other RO nodes in the PolarDB cluster. These nodes have many sub-plans. They can be sent to the PX Coordinator and eventually returned to customers through data links.

What are the advantages of MPP based on shared storage?

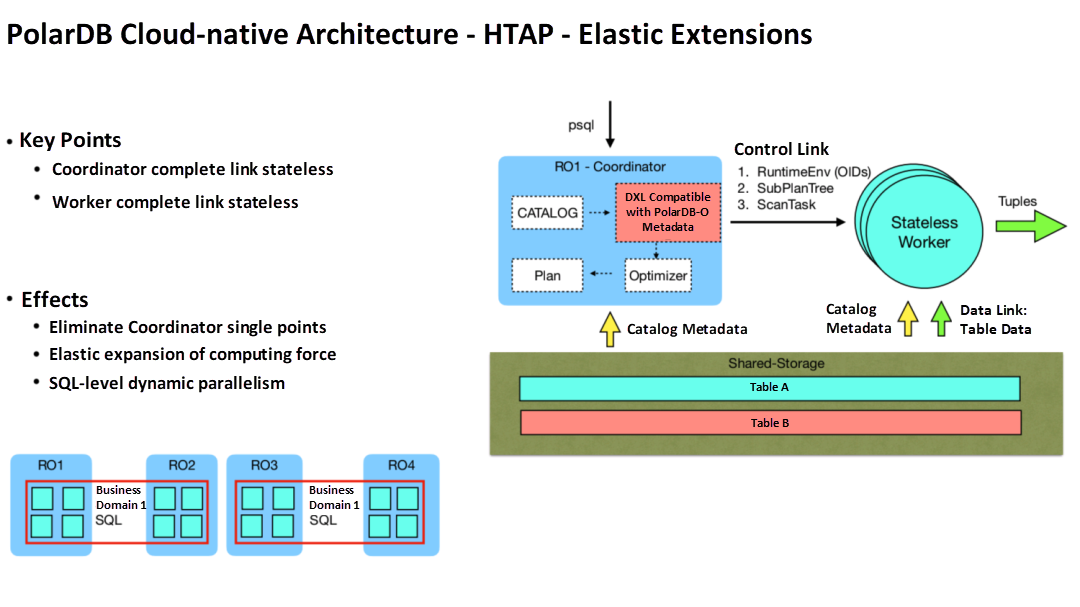

First, PolarDB has better elasticity than traditional MPP based on share nothing. In the right part of the figure, the status of the entire MPP execution path (such as the status of metadata and the status of each worker runtime) is stored in the shared storage. Change each worker of distributed computing to Stateless. On the one hand, its status is read from the shared storage. On the other hand, it is sent over from the coordinator to the network. This enables stateless and distributed execution. For PolarDB, data is stored in the shared storage, and the original data is stored in the shared storage table. Run time information (such as worker connected to RO1 by a SQL) needs eight workers to work, which are distributed to RO2 and RO3. Four workers do not know any information when they start. RO1 sends the relevant information of this SQL to eight workers through the network, and these eight workers can be executed. This is the idea of making a fully flexible MPP distributed engine. In this case, the Coordinator node becomes stateless. RO1 can be regarded as a centralized coordination node (or RO2 as a coordination node), which eliminates the single-point problem under the traditional Greenplum architecture.

Second, the computing power expands elastically. There are four nodes in the figure above, and its business involves some SQL. These SQL statements are complex queries that can be checked in RO1 and RO2. Another business domain can split its business into two parts, one of which can run to RO3 and RO4, which can be dynamically adjusted.

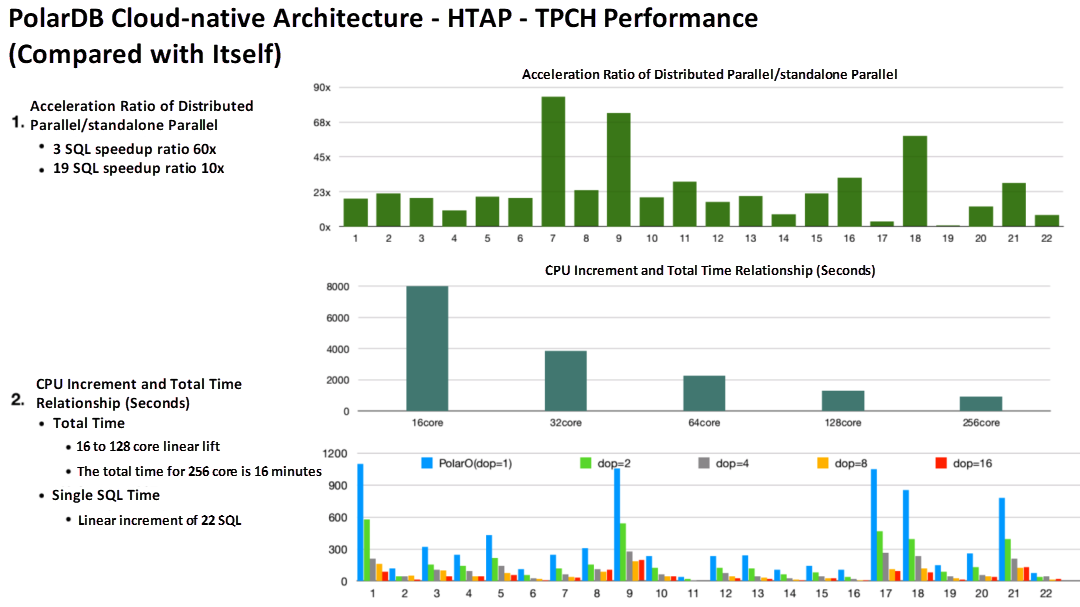

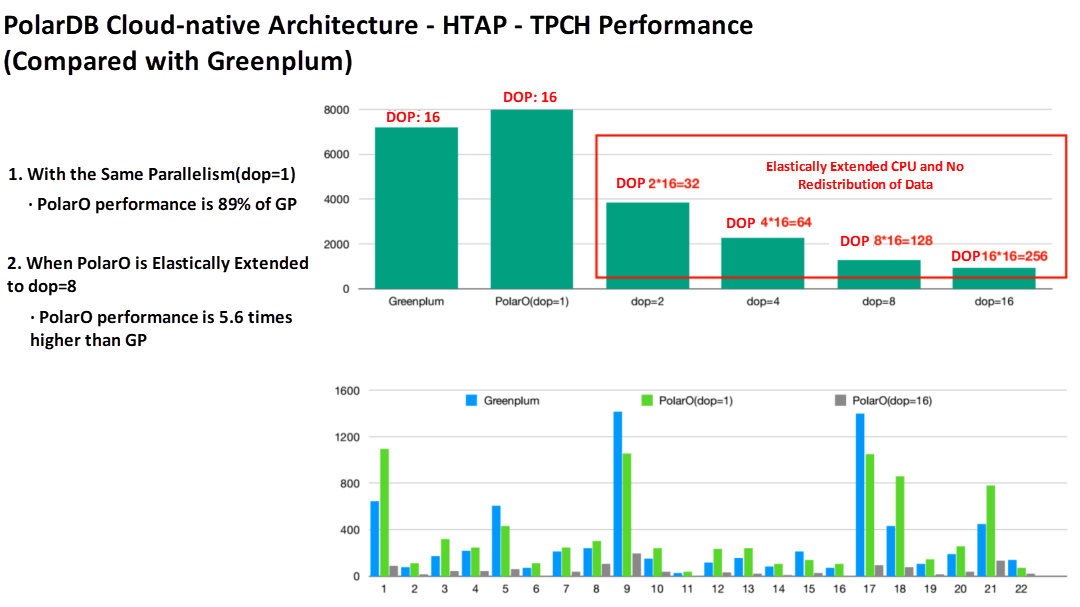

The preceding figure shows the comparison between the distributed parallel performance of PolarDB and the performance of standalone machine parallel. The first figure shows the 22 SQL acceleration ratios of TPCH. Here, the acceleration ratios of three SQL statements are more than 60 times higher, and most SQL statements are more than ten times higher. The second test will share the data of 1TB TPCH on the storage and 16 compute nodes and see how the performance is by increasing the CPU. In the second test chart, from 16 core to 256 core, it is mostly a linear improvement, but it reaches the bottleneck by 256 core. This is because the storage bandwidth is limited. If the bandwidth is increased, the overall performance will be improved. The bottom figure shows the performance of 22 SQL statements from 16 core to 256 core. The performance is linearly improved from 16 core to 128 core.

There is also a comparison between PolarDB and Greenplum. The test environment is the same hardware, 16 compute nodes and 1TB TPCH. As shown in the preceding figure, Greenplum has 16 cores and 16 CPUs for SQL processing. When the same degree of parallelism is used, the performance of PolarO is 89% of Greenplum. Why does Polar fail to achieve the performance of Greenplum when it is single-core? This is because data does not have data characteristics in shared storage. When Greenplum creates a table, the data is hash partitioned by default. When the two tables are joined, the join Key and the distribution Key are the same, and there is no need to shuffle the data. Polar has only one table, which has no data characteristics and is a randomly distributed data format. In this case, when any two tables are de-joined, a shuffle is required. Due to network factors, the performance of Polar single-core can only meet the 89% of Greenplum. We will optimize it through the PG partition table to solve this problem.

Although the underlying data of PolarDB is shared, a partition table can still be created in hash mode. At this time, the HTAP MPB mode of PolarDB can be aligned with Greenplum. After this feature is implemented, the single-core performance of PolarDB is the same as Greenplum. Four more tests are conducted in the red box part of the figure. PolarDB supports the elastic expansion of computing power. Data does not need to be redistributed in this case. This is the advantage of the random distribution of data. When using distributed execution engine, the priority is not the extreme performance, but the extensibility of the system. When your computing power is insufficient, you can quickly add nodes to accelerate computing.

For traditional MPP databases (such as Greenplum), its nodes are fixed, while Polar is stateless. The number of CPUs can be adjusted at any time. In this group of tests, only one GUC parameter needs to be adjusted to change Polar from 16 core to 256 core, and the calculation force is linearly expanded.

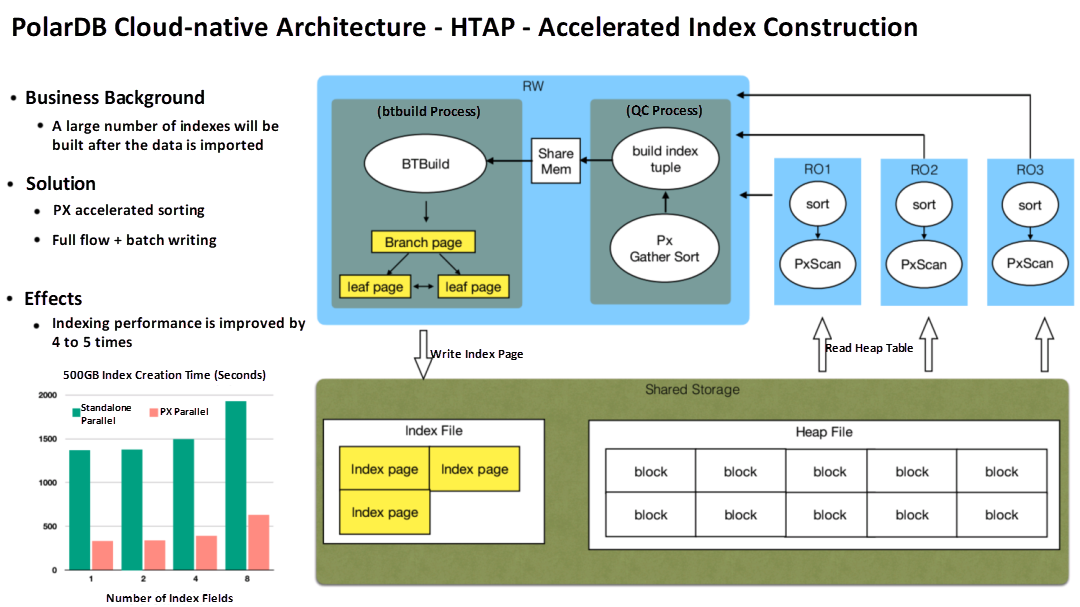

What else can you do after PolarDB supports MPP? After a large amount of data is imported into the newly launched business, some indexes need to be made. The principle is to sort the data first, organize it into an index page in memory, and write these pages directly to the disk. If PolarDB supports parallelism, the gameplay is different. As shown in the figure above, nodes RO1, RO2, and RO3 can scan data in parallel to the shared storage and then sort data locally in parallel. After the sequence is completed, the data is transmitted to the RW node through the network. The RW node merges and sorts the sorted data into an index page in the memory and gives it to the btbuild process. In the memory, the index page is used to update the pointing relationship between the index pages to build the instruction relationship of the index tree and then start writing the disk.

This solution uses the computing capabilities of multiple nodes and RO capabilities to accelerate the sorting phase. At the same time, it is passed to a QC node of MPP through the network, which is the central node. Then, this node is sent to the btbuild process through shared memory. After testing, 500G of data is used to create indexes, and the performance can be improved about five times.

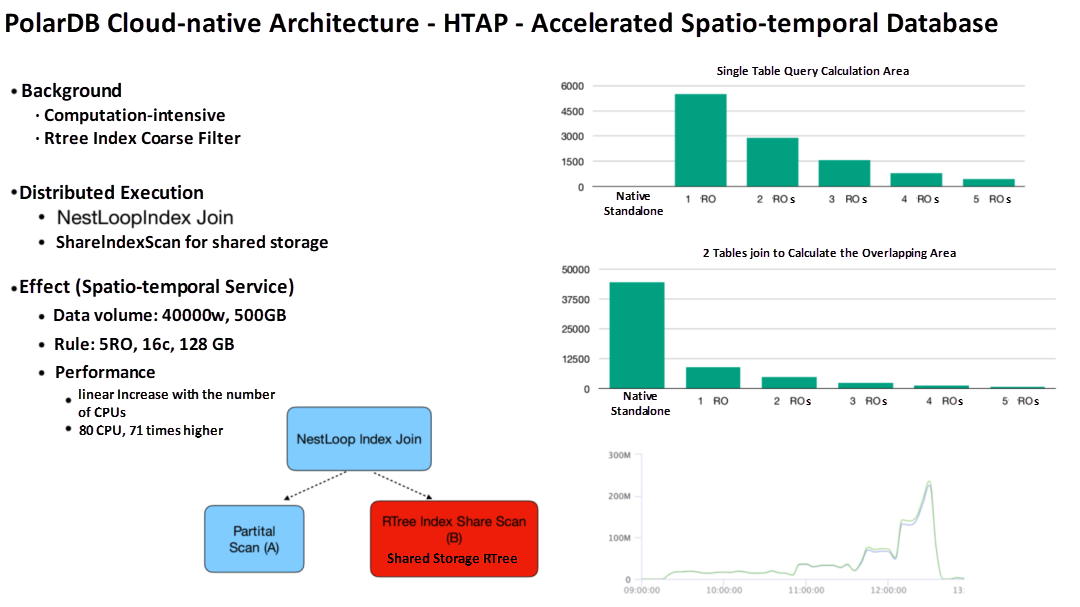

The spatio-temporal database is a computationally intensive coarse filter that uses the RTree index. It can locate an area through the RTree. In this area, further filtering can be done accurately. Only NestLoopIndex Join can be used during the shared storage index scan and Rtree scan process. There is no way to do hash join because the two-dimensional space of Rtree cannot be split completely. For spatio-temporal services, NestLoopIndex joins to obtain data from one table and scans it on the RTree in another table. This cannot be done on Greenplum because its index tree is split. However, the index tree of RTree is in the shared state in PolarDB, so no matter whether the worker is on node 1 or node 2, the index tree is complete in the shared storage concept. At this time, the two workers can directly use appearance for coordinated segmentation. Since it is computationally intensive, its acceleration effect will be better. After testing, the overall improvement can reach 71 times in an environment of 80 CPU.

This article is an introduction to the HTAP architecture. More implementation details will be shared in the future, such as optimizer, actuator, distributed consistency, etc. Stay tuned!

MySQL Deep Dive - Implementation and Acquisition Mechanism of Metadata Locking

[Into RDS] RDS Database Product Introduction and Business Scenario Selection

ApsaraDB - January 6, 2023

ApsaraDB - September 19, 2022

ApsaraDB - August 7, 2023

ApsaraDB - September 19, 2022

ApsaraDB - November 26, 2024

ApsaraDB - September 19, 2022

AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn MoreMore Posts by ApsaraDB