By Boge

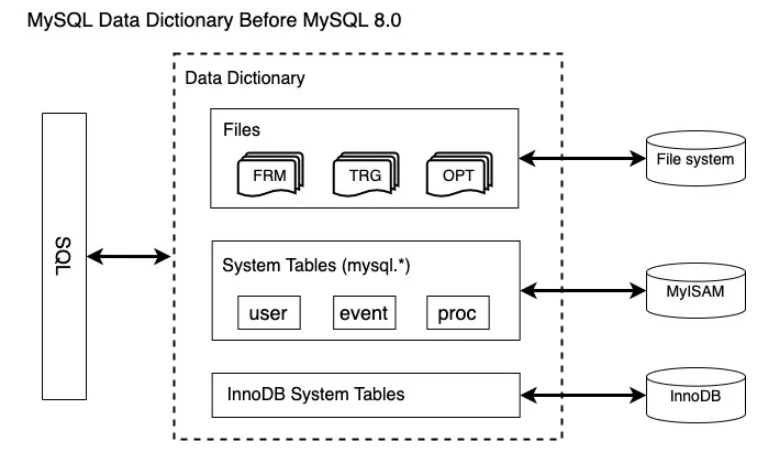

Before MySQL 8.0 was developed, the server layer and the storage engine (such as InnoDB) retained a copy of metadata (such as the schema name and table definition), which causes duplication and redundancy in information storage and a phenomenon where two copies of metadata are not synchronized. Different storage engines (such as InnoDB and MyISAM) store metadata at different locations and in different formats (.FRM, .PAR, .OPT, .TRN and .TRG). Therefore, the metadata cannot be managed in a centralized manner. In addition, the metadata stored in tables and files that do not support transactions will make DDL changes not atomic. Crash recovery will also become a problem.

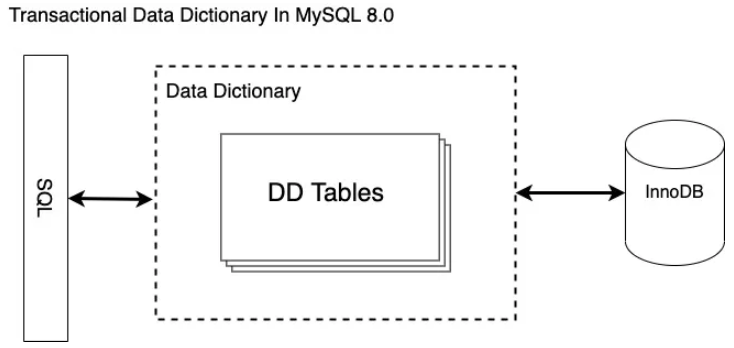

MySQL 8.0 introduced the data dictionary to solve these problems. MySQL 8.0 facilitates centralized management of the metadata of the server layer and different engines. Metadata is stored in the tables of the InnoDB engine, which naturally supports atomicity. Moreover, the server layer and the engine layer share a copy of metadata, so synchronization is no longer a problem.

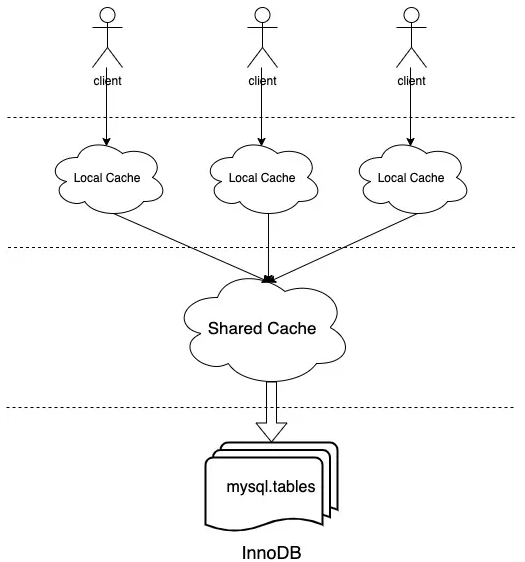

The data dictionary provides a client API for the server layer and the engine layer. API operations include the basic operations on metadata, such as acquire (), drop(), store(), and update(). The bottom layer allows read/write operations on the data dictionary tables stored in the InnoDB engine, including opening tables, constructing the primary key, and looking for the primary key. Two levels of cache are deployed to accelerate memory access to metadata objects from the client to the underlying storage. Both levels work based on hash maps. A level-1 cache is local and exclusive to each client (one client corresponding to one thread). A level-2 cache is a shared cache that can be accessed by all threads. This article focuses on the data structure and implementation architecture of the data dictionary and explains the implementation process of DDL that supports atomicity in the data dictionary.

The data structure in the data dictionary (DD for short) is organized in the polymorphic form of interface/implementation. The interface is implemented through an abstract class. The name of the implementation class is the name of the interface with an _impl suffix. For example, the name of the implementation class for the Table interface representing a table is Table_impl. Here is an example of Table_impl to describe the representation of the metadata object of a table in the DD cache.

class Table_impl : public Abstract_table_impl, virtual public Table {

// Fields.

Object_id m_se_private_id;

String_type m_engine;

String_type m_comment;

// - Partitioning related fields.

enum_partition_type m_partition_type;

String_type m_partition_expression;

String_type m_partition_expression_utf8;

enum_default_partitioning m_default_partitioning;

// References to tightly-coupled objects.

Index_collection m_indexes;

Foreign_key_collection m_foreign_keys;

Foreign_key_parent_collection m_foreign_key_parents;

Partition_collection m_partitions;

Partition_leaf_vector m_leaf_partitions;

Trigger_collection m_triggers;

Check_constraint_collection m_check_constraints;

};The Table_impl class contains a table-related metadata attribute definition, such as the engine type, comment, partition type, and partition expression.

Table_impl is also the memory structure most frequently accessed by the client in code implementations. If developers want to add new attributes, they can add and initialize them directly in this class. However, this will not persist the attributes in the storage engine automatically. In addition to the simple attributes mentioned above, some complex table-related attributes, such as column information, index information, and partition information, are stored in other DD tables and integrated into Table_impl objects in the memory cache.

Collection m_columns inherited from Abstract_table_impl represent the collection of all columns in the table. Each object named column_impl in the collection represents the metadata of the column, including its numeric type, whether it is NULL and auto-increment, and its default value. It also contains pointers to Abstract_table_impl, associating the column with its corresponding table.

class Column_impl : public Entity_object_impl, public Column {

// Fields.

enum_column_types m_type;

bool m_is_nullable;

bool m_is_zerofill;

bool m_is_unsigned;

bool m_is_auto_increment;

bool m_is_virtual;

bool m_default_value_null;

String_type m_default_value;

// References to tightly-coupled objects.

Abstract_table_impl *m_table;

};Table_impl also contains Collection m_partitions, which is the collection of partition metadata. Collection m_partitions stores the IDs, engines, options, range values, parent partitions, and child partitions of each partition.

class Partition_impl : public Entity_object_impl, public Partition {

// Fields.

Object_id m_parent_partition_id;

uint m_number;

Object_id m_se_private_id;

String_type m_description_utf8;

String_type m_engine;

String_type m_comment;

Properties_impl m_options;

Properties_impl m_se_private_data;

// References to tightly-coupled objects.

Table_impl *m_table;

const Partition *m_parent;

Partition_values m_values;

Partition_indexes m_indexes;

Table::Partition_collection m_sub_partitions;

};We can obtain all the metadata associated with the table using the Table_impl class of a table.

Metadata in the DD cache is read and stored in DD tables. Each table stores the basic attributes of a type of metadata, such as tables, columns, and indexes. They are interconnected by primary keys and foreign keys to constitute the whole metadata of Table_impl. DD tables are stored in the tablespace of MySQL. They are hidden from users in the release version and can only be viewed through some views of INFORMATION SCHEMA. In the debug version, you can view DD tables using SET debug='+d,skip_dd_table_access_check'. Example:

root@localhost:test 8.0.18-debug> SHOW CREATE TABLE mysql.tables\G

*************************< strong> 1. row < /strong>*************************

Table: tables

Create Table: CREATE TABLE `tables` (

`id` bigint(20) unsigned NOT NULL AUTO_INCREMENT,

`schema_id` bigint(20) unsigned NOT NULL,

`name` varchar(64) CHARACTER SET utf8 COLLATE utf8_bin NOT NULL,

`type` enum('BASE TABLE','VIEW','SYSTEM VIEW') COLLATE utf8_bin NOT NULL,

`engine` varchar(64) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`mysql_version_id` int(10) unsigned NOT NULL,

`row_format` enum('Fixed','Dynamic','Compressed','Redundant','Compact','Paged') COLLATE utf8_bin DEFAULT NULL,

`collation_id` bigint(20) unsigned DEFAULT NULL,

`comment` varchar(2048) COLLATE utf8_bin NOT NULL,

`hidden` enum('Visible','System','SE','DDL') COLLATE utf8_bin NOT NULL,

`options` mediumtext COLLATE utf8_bin,

`se_private_data` mediumtext COLLATE utf8_bin,

`se_private_id` bigint(20) unsigned DEFAULT NULL,

`tablespace_id` bigint(20) unsigned DEFAULT NULL,

`partition_type` enum('HASH','KEY_51','KEY_55','LINEAR_HASH','LINEAR_KEY_51','LINEAR_KEY_55','RANGE','LIST','RANGE_COLUMNS','LIST_COLUMNS','AUTO','AUTO_LINEAR') COLLATE utf8_bin DEFAULT NULL,

`partition_expression` varchar(2048) COLLATE utf8_bin DEFAULT NULL,

`partition_expression_utf8` varchar(2048) COLLATE utf8_bin DEFAULT NULL,

`default_partitioning` enum('NO','YES','NUMBER') COLLATE utf8_bin DEFAULT NULL,

`subpartition_type` enum('HASH','KEY_51','KEY_55','LINEAR_HASH','LINEAR_KEY_51','LINEAR_KEY_55') COLLATE utf8_bin DEFAULT NULL,

`subpartition_expression` varchar(2048) COLLATE utf8_bin DEFAULT NULL,

`subpartition_expression_utf8` varchar(2048) COLLATE utf8_bin DEFAULT NULL,

`default_subpartitioning` enum('NO','YES','NUMBER') COLLATE utf8_bin DEFAULT NULL,

`created` timestamp NOT NULL,

`last_altered` timestamp NOT NULL,

`view_definition` longblob,

`view_definition_utf8` longtext COLLATE utf8_bin,

`view_check_option` enum('NONE','LOCAL','CASCADED') COLLATE utf8_bin DEFAULT NULL,

`view_is_updatable` enum('NO','YES') COLLATE utf8_bin DEFAULT NULL,

`view_algorithm` enum('UNDEFINED','TEMPTABLE','MERGE') COLLATE utf8_bin DEFAULT NULL,

`view_security_type` enum('DEFAULT','INVOKER','DEFINER') COLLATE utf8_bin DEFAULT NULL,

`view_definer` varchar(288) COLLATE utf8_bin DEFAULT NULL,

`view_client_collation_id` bigint(20) unsigned DEFAULT NULL,

`view_connection_collation_id` bigint(20) unsigned DEFAULT NULL,

`view_column_names` longtext COLLATE utf8_bin,

`last_checked_for_upgrade_version_id` int(10) unsigned NOT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `schema_id` (`schema_id`,`name`),

UNIQUE KEY `engine` (`engine`,`se_private_id`),

KEY `engine_2` (`engine`),

KEY `collation_id` (`collation_id`),

KEY `tablespace_id` (`tablespace_id`),

KEY `type` (`type`),

KEY `view_client_collation_id` (`view_client_collation_id`),

KEY `view_connection_collation_id` (`view_connection_collation_id`),

CONSTRAINT `tables_ibfk_1` FOREIGN KEY (`schema_id`) REFERENCES `schemata` (`id`),

CONSTRAINT `tables_ibfk_2` FOREIGN KEY (`collation_id`) REFERENCES `collations` (`id`),

CONSTRAINT `tables_ibfk_3` FOREIGN KEY (`tablespace_id`) REFERENCES `tablespaces` (`id`),

CONSTRAINT `tables_ibfk_4` FOREIGN KEY (`view_client_collation_id`) REFERENCES `collations` (`id`),

CONSTRAINT `tables_ibfk_5` FOREIGN KEY (`view_connection_collation_id`) REFERENCES `collations` (`id`)

) /*!50100 TABLESPACE `mysql` */ ENGINE=InnoDB AUTO_INCREMENT=549 DEFAULT CHARSET=utf8 COLLATE=utf8_bin STATS_PERSISTENT=0 ROW_FORMAT=DYNAMIC

1 row in set (0.00 sec)We can obtain the metadata fields stored in the storage engine by analyzing the table definition of mysql.tables. DD tables include tables, schemata, columns, column_type_elements, indexes, index_column_usage, foreign_keys, foreign_key_column_usage, table_partitions, table_partition_values, index_partitions, triggers, check_constraints, view_table_usage, and view_routine_usage.

Storage_adapter is a processing class that accesses the persistent storage engine, including methods such as get(), drop(), and store(). When the metadata of a table is obtained for the first time, the storage_adapter::get() method is called. The following code provides an example of the process:

Storage_adapter::get()

// According to the type of the object to be accessed, add the dependent DD tables to the open table list.

|--Open_dictionary_tables_ctx::register_tables< T>()

|--Table_impl::register_tables()

|--Open_dictionary_tables_ctx::open_tables() // Call the server layer interface to open all tables.

|--Raw_table::find_record() // Call the handler interface to search for records based on the input key, such as the table name.

|--handler::ha_index_read_idx_map() // index read

// Parse the corresponding attributes from the record and call the field[field_no]->val_xx() function.

|--Table_impl::restore_attributes()

// Call the restore_children() function to read the complete metadata definition from other DD tables associated with the object according to the primary keys and foreign keys.

|--Table_impl::restore_children()

|--Return the complete DD cache object.When obtaining the correspondence between columns and attributes, all columns in the DD table of this type are included in sequence according to the enumeration-type subscript of the Tables object, which corresponds to those table definitions. Therefore, if we need to add columns to the DD table, we also need to add the corresponding columns to the following enumeration-type definition and add reading and storing operations to the new columns in the Table_impl::restore_attributes() and Table_impl::store_attributes() functions.

class Tables : public Entity_object_table_impl {

enum enum_fields {

FIELD_ID,

FIELD_SCHEMA_ID,

FIELD_NAME,

FIELD_TYPE,

FIELD_ENGINE,

FIELD_MYSQL_VERSION_ID,

FIELD_ROW_FORMAT,

FIELD_COLLATION_ID,

FIELD_COMMENT,

FIELD_HIDDEN,

FIELD_OPTIONS,

FIELD_SE_PRIVATE_DATA,

FIELD_SE_PRIVATE_ID,

FIELD_TABLESPACE_ID,

FIELD_PARTITION_TYPE,

FIELD_PARTITION_EXPRESSION,

FIELD_PARTITION_EXPRESSION_UTF8,

FIELD_DEFAULT_PARTITIONING,

FIELD_SUBPARTITION_TYPE,

FIELD_SUBPARTITION_EXPRESSION,

FIELD_SUBPARTITION_EXPRESSION_UTF8,

FIELD_DEFAULT_SUBPARTITIONING,

FIELD_CREATED,

FIELD_LAST_ALTERED,

FIELD_VIEW_DEFINITION,

FIELD_VIEW_DEFINITION_UTF8,

FIELD_VIEW_CHECK_OPTION,

FIELD_VIEW_IS_UPDATABLE,

FIELD_VIEW_ALGORITHM,

FIELD_VIEW_SECURITY_TYPE,

FIELD_VIEW_DEFINER,

FIELD_VIEW_CLIENT_COLLATION_ID,

FIELD_VIEW_CONNECTION_COLLATION_ID,

FIELD_VIEW_COLUMN_NAMES,

FIELD_LAST_CHECKED_FOR_UPGRADE_VERSION_ID,

NUMBER_OF_FIELDS // Always keep this entry at the end of the enum

};

};The data dictionary implements a two-level caching architecture to avoid reading data from multiple tables in the persistent storage every time we access metadata objects and reuse the metadata memory objects generated. The first level is exclusive to a local client, and the core data structure is Local_multi_map, which is used to accelerate repeated access to the same object in the current thread. It also manages objects in committed, uncommitted, and dropped states when the current thread is involved in modifications (DDL) to DD objects. The second level is a common, shared cache among multiple threads. The core data structure is Shared_multi_map. It contains objects that all threads can access. Therefore, Shared_multi_map must be configured with concurrency control.

The underlying implementations of the two levels are highly uniform, both based on hash maps with the implementation std::map. Local_multi_map and Shared_multi_map are derived from Multi_map_base.

template <typename T>

class Multi_map_base {

private:

Element_map<const T *, Cache_element<T>> m_rev_map; // Reverse element map.

Element_map<typename T::Id_key, Cache_element<T>>

m_id_map; // Id map instance.

Element_map<typename T::Name_key, Cache_element<T>>

m_name_map; // Name map instance.

Element_map<typename T::Aux_key, Cache_element<T>>

m_aux_map; // Aux map instance.

};

template <typename K, typename E>

class Element_map {

public:

typedef std::map<K, E *, std::less<K>,

Malloc_allocator<std::pair<const K, E *>>>

Element_map_type; // Real map type.

private:

Element_map_type m_map; // The real map instance.

std::set<K, std::less<K>,

Malloc_allocator<K>>

m_missed; // Cache misses being handled.

};It is called Multi_map_base because it contains multiple hash maps. It is suitable for users to obtain cache objects according to different types of keys, such as id, name, and DD cache. Element_map is an encapsulation of std::map. Key is one of the types mentioned above. Value is an encapsulated Cache_element of DD cache object pointer, which encapsulates the object and reference count. The Multi_map_base object implements a variety of m_map() template functions and can easily select the corresponding hash map according to the key type. The difference between Shared_multi_map and Local_multi_map is that Shared_multi_map also introduces a set of latches and condition variables for thread synchronization and cache miss processing in concurrent accesses. Shared_multi_map also provides the capabilities of memory management and reuse for the Cache_element object.

The first-level cache is located inside each Dictionary_client (each client corresponds to a thread THD), composed of Object_registry in different states (committed, uncommitted, and dropped). Each Object_registry consists of different metadata types of the Local_multi_map. It is used to manage object caches of different types, such as tables, schemas, character sets, statistics, and events.

class Dictionary_client {

Object_registry m_registry_committed; // Registry of committed objects.

Object_registry m_registry_uncommitted; // Registry of uncommitted objects.

Object_registry m_registry_dropped; // Registry of dropped objects.

THD *m_thd; // Thread context, needed for cache misses.

Auto_releaser m_default_releaser; // Default auto releaser.

Auto_releaser *m_current_releaser; // Current auto releaser.};

class Object_registry { std::unique_ptr<Local_multi_map<Abstract_table>> m_abstract_table_map;

std::unique_ptr<Local_multi_map<Charset>> m_charset_map;

std::unique_ptr<Local_multi_map<Collation>> m_collation_map;

std::unique_ptr<Local_multi_map<Column_statistics>> m_column_statistics_map;

std::unique_ptr<Local_multi_map<Event>> m_event_map;

std::unique_ptr<Local_multi_map<Resource_group>> m_resource_group_map;

std::unique_ptr<Local_multi_map<Routine>> m_routine_map;

std::unique_ptr<Local_multi_map<Schema>> m_schema_map;

std::unique_ptr<Local_multi_map<Spatial_reference_system>>

m_spatial_reference_system_map;

std::unique_ptr<Local_multi_map<Tablespace>> m_tablespace_map;

};

template <typename T>

class Local_multi_map : public Multi_map_base<T> {};When the registry is in the committed state, it indicates that the user is accessing an object that already exists in the database. In this case, the registry is the location where the DD cache object is stored in the local cache. The uncommitted and dropped states are used to differentiate execution states when a DDL statement is executed. During the execution, DD objects corresponding to the old table to be dropped will be stored in the dropped registry. DD objects corresponding to the new table definition will be stored in the uncommitted registry.

The shared cache is unique to the Server and is implemented by the singleton Shared_dictionary_cache. Similar to the Object_registry in the local cache, Shared_dictionary_cache also needs to include caches for various types of objects. Multi_map_base selects template functions corresponding to hash maps based on different key types. Similarly, Object_registry and Shared_dictionary_cache select m_map() functions based on different object types. This simplifies function calling to a large extent.

class Shared_dictionary_cache {

Shared_multi_map<Abstract_table> m_abstract_table_map;

Shared_multi_map<Charset> m_charset_map;

Shared_multi_map<Collation> m_collation_map;

Shared_multi_map<Column_statistics> m_column_stat_map;

Shared_multi_map<Event> m_event_map;

Shared_multi_map<Resource_group> m_resource_group_map;

Shared_multi_map<Routine> m_routine_map; Shared_multi_map<Schema> m_schema_map;

Shared_multi_map<Spatial_reference_system> m_spatial_reference_system_map;

Shared_multi_map<Tablespace> m_tablespace_map;

};

template <typename T>

class Shared_multi_map : public Multi_map_base<T> {

private:

static const size_t initial_capacity = 256;

mysql_mutex_t m_lock; // Single mutex to lock the map.

mysql_cond_t m_miss_handled; // Broadcast a miss being handled.

Free_list<Cache_element<T>> m_free_list; // Free list.

std::vector<Cache_element<T> *>

m_element_pool; // Pool of allocated elements.

size_t m_capacity; // Total capacity, i.e., if the

// number of elements exceeds this

// limit, shrink the free list.

}It is different from the local caches that can access hash maps without locks. Shared caches need to be locked when acquiring or releasing DD cache objects to adjust the reference count and prevent it from being destroyed during the access.

The user calls the function to obtain the metadata object through the client, inputs the name string of metadata, constructs a corresponding name key, and uses the key to obtain the metadata object from the cache. The overall process involves the following items in sequence: level-1 local cache, level-2 shared cache, and storage engine.

// Get a dictionary object.

template < typename K, typename T>

bool Dictionary_client::acquire(const K &key, const T **object,

bool *local_committed,

bool *local_uncommitted) {

// Lookup in registry of uncommitted objects

T *uncommitted_object = nullptr;

bool dropped = false;

acquire_uncommitted(key, &uncommitted_object, &dropped);

...

// Lookup in the registry of committed objects.

Cache_element< T> *element = NULL;

m_registry_committed.get(key, &element);

...

// Get the object from the shared cache.

if (Shared_dictionary_cache::instance()->get(m_thd, key, &element)) {

DBUG_ASSERT(m_thd->is_system_thread() || m_thd->killed ||

m_thd->is_error());

return true;

}

}In the level-1 local cache, the system obtains the object from the uncommitted and dropped registries first because both contain the latest modifications. The system also checks whether the object to be obtained is dropped in this process. After that, the system obtains the object from the committed registry. If the metadata object is obtained, it is returned. Otherwise, the system will try to obtain the object from the level-2 shared cache.

The Shared_multi_map::get() method is called to obtain the object from the shared cache. It is a direct hash map lookup after the locking. If the object exists in the shared cache, the system increments the reference count and returns it. If the object does not exist in the shared cache, the system enters the process of cache miss. The system calls the storage engine method Storage_adapter::get() to obtain the object from the DD tables and adds the obtained object to the committed registries of the shared cache and the local cache in turn.

// Get a wrapper element from the map handling the given key type.

template <typename T>

template <typename K>

bool Shared_multi_map<T>::get(const K &key, Cache_element<T> **element) {

Autolocker lock(this);

*element = use_if_present(key);

if (*element) return false;

// Is the element already missed?

if (m_map<K>()->is_missed(key)) {

while (m_map<K>()->is_missed(key))

mysql_cond_wait(&m_miss_handled, &m_lock);

*element = use_if_present(key);

// Here, we return only if element is non-null. An absent element

// does not mean that the object does not exist, it might have been

// evicted after the thread handling the first cache miss added

// it to the cache, before this waiting thread was alerted. Thus,

// we need to handle this situation as a cache miss if the element

// is absent.

if (*element) return false;

}

// Mark the key as being missed.

m_map<K>()->set_missed(key);

return true;

}Due to open-table accesses to DD tables, building the DD cache object is time-consuming, and Shared_multi_map may not be locked. Therefore, concurrency control is required for concurrent accesses from clients. The first client to access will add the key of cache miss to the m_missed collection in Shared_multi_map. The collection contains all the object keys currently reading metadata. Other clients that access the same object find the object key in the m_missed collection and wait.

After the first client obtains the complete DD cache object, the object is added to the shared cache, and the corresponding key is removed from the m_missed collection. Then, the system notifies the waiting threads to obtain the object from the shared cache by broadcasting.

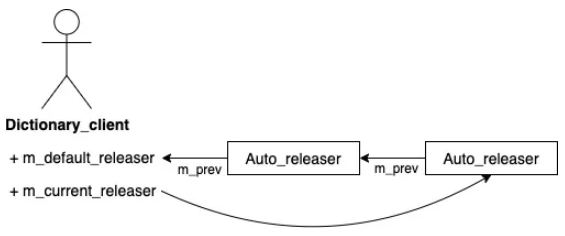

Auto_releaser is an RAII class. The encapsulation is done before the client accesses the DD cache. This ensures that all the obtained DD cache objects are not released in the local cache within the scope of the Auto_releaser object. Auto_releaser contains the registry that needs to be released. It collects the DD cache objects obtained by the current client from the shared cache through the auto_release() function. It automatically releases the object when destructing beyond its scope, removes the object from the committed registry in the local cache, and decrements the reference count in the shared cache.

There may be an Auto_releaser at each layer when nested functions are called. In this case, they are connected by a linked list pointer. When the function returns, objects at this layer are released as required, and the DD cache objects that need to be returned to the upper layer are handed over to Auto_releaser in the upper layer. Transfer_release() can be used to transfer the objects that need to be released between Auto_releaser objects at different levels. It can flexibly specify the level where DD cache objects are no longer needed.

During the execution of MySQL inplace DDL, the system obtains the DD cache object of the current table definition and constructs a new DD object based on the actual DDL operation. Then, the system calls the client interfaces in turn to delete the current table definition and store the new table definition.

{

if (thd->dd_client()->drop(table_def)) goto cleanup2;

table_def = nullptr;

DEBUG_SYNC_C("alter_table_after_dd_client_drop");

// Reset check constraint's mode.

reset_check_constraints_alter_mode(altered_table_def);

if ((db_type->flags & HTON_SUPPORTS_ATOMIC_DDL)) {

/*

For engines supporting atomic DDL we have delayed storing new

table definition in the data-dictionary so far in order to avoid

conflicts between old and new definitions on foreign key names.

Since the old table definition is gone we can safely store new

definition now.

*/

if (thd->dd_client()->store(altered_table_def)) goto cleanup2;

}

}

...

/*

If the SE failed to commit the transaction, we must rollback the

modified dictionary objects to make sure the DD cache, the DD

tables and the state in the SE stay in sync.

*/

if (res)

thd->dd_client()->rollback_modified_objects();

else

thd->dd_client()->commit_modified_objects();In the drop() process, data corresponding to the DD cache object of the current table definition is removed from the storage engine. Then, it is removed from the shared cache. (The reference count of the current object should be 1, which means it is only used by the current thread.) Then, it is added to the dropped local cache.

In the store() process, the new table definition is written to the storage engine, and the corresponding DD cache object is added to the uncommitted cache.

After a transaction is committed or rolled back, the client clears the dropped and uncommitted registries in the local cache. Since the InnoDB engine supports transactions, data at the persistent storage level is committed or rolled back through the interface of the storage engine. No additional operations are required for the client.

In this process, the metadata lock (MDL) prevents other threads from accessing the DD object being modified. Therefore, operations on Shared_dictionary_cache are secure. When the DDL operation ends (committed or rolled back) and the EXCLUSIVE lock is released, new threads can reload the new table definition from the storage engine.

The MySQL data dictionary solves the architecture problems described in the background part. It secures access to metadata and lowers the cost of storage and management. The architecture implementation is sophisticated, where code can be reused maximally through a large number of template class implementations. The implementation of multi-layer caching can also improve the efficiency of accessing significantly. The server layer and the storage layer can easily access metadata anywhere through concise interfaces in the client.

[1] MySQL8.0DataDictionary:BackgroundandMotivation

http://mysqlserverteam.com/mysql-8-0-data-dictionary-background-and-motivation/

[2] MySQL 8.0: Data Dictionary Architecture and Design

http://mysqlserverteam.com/mysql-8-0-data-dictionary-architecture-and-design/

[3] Source code mysql / mysql-server 8.0.18

https://github.com/mysql/mysql-server/tree/mysql-8.0.18

A Deep-Dive into MySQL: Analysis of Memory Management in Performance Schema

A Deep-Dive into MySQL: Analysis of Memory Management in Performance Schema

ApsaraDB - February 22, 2022

ApsaraDB - October 21, 2020

Alibaba Clouder - July 5, 2019

Alibaba Cloud Community - February 8, 2023

ApsaraDB - March 19, 2020

Alibaba Clouder - August 10, 2020

Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Time Series Database (TSDB)

Time Series Database (TSDB)

TSDB is a stable, reliable, and cost-effective online high-performance time series database service.

Learn More Database Security Solutions

Database Security Solutions

Protect, backup, and restore your data assets on the cloud with Alibaba Cloud database services.

Learn MoreMore Posts by ApsaraDB