Cloud computing has provided more computing capability, and more creative power, to propel the Internet era. Relational databases are something few applications can do without. Cloud databases that are ready to use out of the box and feature high performance to cost ratios have found favor among developers all over the world.

Early versions of MySQL were optimized for the systems and hardware of their time and did not anticipate the hardware that is becoming common today, so they leave a lot to be desired under high concurrency. Furthermore, unlike other relational databases, MySQL writes two logs for the sake of compatibility (a transaction log and a replication log), which lowers its performance compared with commercial databases. These complaints all come from real customer cases. Put simply, the underlying architecture of traditional cloud databases gives rise to the following problems:

1. Read/write instances and read-only instances each keep their own independent copy of the data, so a customer who purchases a new read-only instance pays not only for compute but also for the corresponding storage resources.

2. Traditional backup techniques also copy the data and upload it to cheap storage, so the speed of the operation is bottlenecked by the speed of the network.

3. Because read/write instances and read-only instances each have their own copy of the data, creating a new read-only instance means re-copying all of the data. Given the limited network throughput, the operation is inevitably slow.

4. Early versions of MySQL were optimized for the systems and hardware of their time, not for the hardware that is becoming common today, so they leave a lot to be desired under high concurrency. Furthermore, for the sake of compatibility, MySQL uses two logs (a transaction log and a replication log), which hurts its performance compared with commercial databases.

5. Because of the limits of physical disks and backup strategies, the database cannot grow too large without making O&M a disaster.

6. Read/write instances and read-only instances synchronize through incremental logical data, so every SQL statement executed on a read/write instance must be re-executed on the read-only instances (including steps such as SQL parsing and SQL optimization). At the same time, replication concurrency is limited to table granularity, which affects all kinds of switchover tasks.

As the database grows, so do these “small” annoyances, which can plague DBAs and CTOs. Today, these problems that have tripped us up for years are all solved in Alibaba Cloud’s new PolarDB. Note that each issue is solved at its root, not with a hacked-together workaround.

PolarDB is a next-generation relational database built on the cloud computing framework. Currently, PolarDB supports only MySQL; PostgreSQL support is under development. The most notable features are as follows:

With these features, PolarDB satisfies both the elastic expandability needs of public cloud computing environments and the high availability needs of the database server for users on the Internet. The expansion time of read-only instances is no longer related to data size and the service can now continue even in the time between a server crash and restart.

PolarDB also features a complete Docker-based management system that handles instance creation, deletion, and account creation tasks issued by the user. It also includes a complete, detailed monitoring system and reliable high-availability switching. The management system also maintains a set of metadata databases that record the location of each data block. It provides this information to PolarSwitch, which uses it to forward requests to the appropriate destination. Overall, the PolarDB project uses several new technologies to provide users with high performance (6x the performance of MySQL), large capacity (up to 100 TB), and low cost (about 1/10 the cost of other commercial databases).

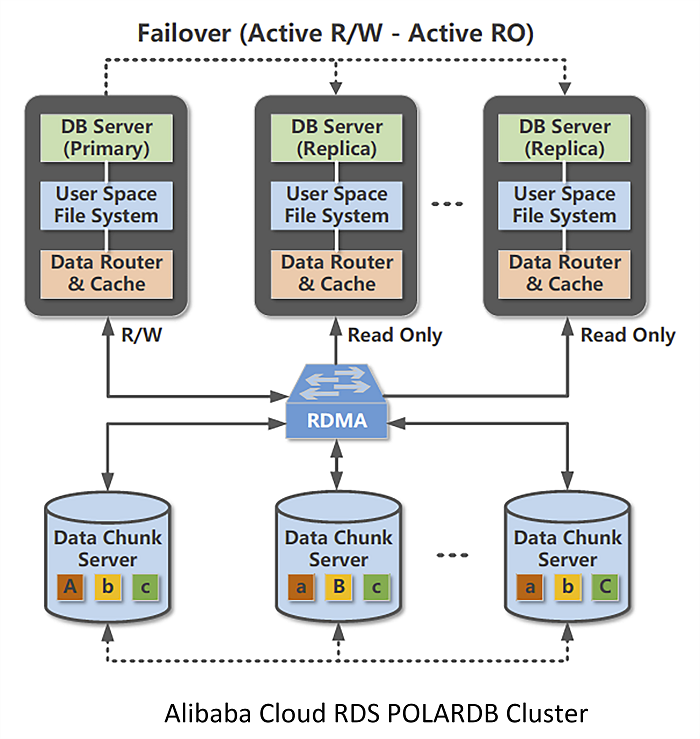

Figure 1 Architecture of the PolarDB beta release

In Figure 1, the top three boxes represent three computing nodes, while the bottom three blue boxes represent three storage nodes. There are three important components to consider on a computing node.

Figure 2 Framework of the PolarDB database kernel

The database process (Polar Database, referred to as PolarDB). The PolarDB database kernel distinguishes three instance roles: Primary, StandBy, and Replica. StandBy and Replica nodes are called Slave nodes, and the Primary node is called the Master node.

| Role Type | Read Permission | Write Permission | Shares the same copy of data and log files |
|---|---|---|---|
| Primary | Yes | Yes | Yes |
| StandBy | Yes | No | No (has an independent copy of the data and log files, as shown in Figure 2) |
| Replica | Yes | No | Yes |

The StandBy nodes are used primarily in data centers for disaster tolerance and creating cross-zone read-only instances. They are not currently implemented in the beta release.

A role that has read permission but no write permission cannot change data from user threads. However, even though user threads are read-only, backend threads can still update the data, for example by applying incremental changes from the Primary node through physical replication. Since read-only instances do not need an independent copy of the data, creating a new read-only instance is both fast and cheap: the user pays only for the corresponding computing node.
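The role table above can be captured in a few lines. The following is a minimal sketch, not PolarDB code: the names `Role`, `RoleCaps`, `extra_storage_needed`, and `user_statement_allowed` are hypothetical and only restate the permissions listed in the table.

```python
from dataclasses import dataclass
from enum import Enum


@dataclass(frozen=True)
class RoleCaps:
    can_read: bool
    can_write: bool
    shares_storage: bool  # shares data and log files with the Primary


class Role(Enum):
    PRIMARY = RoleCaps(can_read=True, can_write=True, shares_storage=True)
    STANDBY = RoleCaps(can_read=True, can_write=False, shares_storage=False)
    REPLICA = RoleCaps(can_read=True, can_write=False, shares_storage=True)


def extra_storage_needed(role: Role) -> bool:
    """A new instance needs its own storage only if it keeps an independent copy."""
    return not role.value.shares_storage


def user_statement_allowed(role: Role, is_write: bool) -> bool:
    """Check a user-thread statement against the role's permissions."""
    caps = role.value
    return caps.can_write if is_write else caps.can_read
```

Under this model, adding a Replica requires no extra storage, which is why the article describes new read-only instances as cheap.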

This refers to the Polar File System, also called PolarFS.

Multiple hosts need to access the same data on block storage, but traditional file systems do not support multiple mount points. In addition, because traditional file systems are embedded in the operating system kernel, every read/write operation must trap into kernel mode and return to user mode on completion, which drags down performance.

PolarFS is a proprietary user-space file system. It supports:

Also, because PolarFS runs entirely in user mode, it avoids the overhead of switching between user mode and kernel mode.

This refers to the user client for the block storage system (Polar Store Client, also called PolarSwitch).

When PolarFS receives a read/write request, it sends the data to PolarSwitch through shared memory. PolarSwitch is a background daemon running on the computing node host that receives all block storage read/write requests from the instances and tools on that host. PolarSwitch performs simple aggregation and statistics on the requests and then distributes them to the daemon on the corresponding storage node. From this we can see that PolarSwitch is a resource-heavy process, so if it is not handled well, it could severely impact the database instances on the computing node. Therefore, our management system uses CPU binding, memory pre-allocation, and resource isolation, and deploys efficient, reliable monitoring to ensure stability.
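The aggregate-then-distribute step can be pictured as grouping requests by destination. This is a hedged sketch only: `route_requests`, the `(offset, length)` request shape, and the `chunk_locations` map (standing in for the location metadata kept by the management system) are all assumptions, and requests that span two chunks are not handled.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Request = Tuple[int, int]  # (byte offset in the volume, length), hypothetical shape


def route_requests(
    requests: Iterable[Request],
    chunk_size: int,
    chunk_locations: Dict[int, str],
) -> Dict[str, List[Request]]:
    """Group requests by the storage node that holds the target chunk."""
    batches: Dict[str, List[Request]] = defaultdict(list)
    for offset, length in requests:
        chunk_index = offset // chunk_size      # which chunk the offset falls in
        node = chunk_locations[chunk_index]     # metadata lookup: chunk -> node
        batches[node].append((offset, length))  # one batch per destination node
    return dict(batches)
```

Sending one batch per storage node rather than one message per request is the kind of simple aggregation the paragraph describes.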

This refers to the server for the block storage system (Polar Store Server, also called ChunkServer). The three components above all run on computing nodes, while this one runs on storage nodes. It is primarily responsible for performing data block reads. A data block is currently 10 GB in size and has three copies, located on three different storage nodes. Two of the three copies must be written successfully before the operation is reported as successful to the user client.
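The two-of-three rule is a majority quorum. Below is a minimal sketch under stated assumptions: `quorum_write` and the writer callables are hypothetical, and the replicas are contacted one after another for clarity, whereas a real storage layer would presumably write in parallel.

```python
from typing import Callable, Sequence

REPLICAS_PER_CHUNK = 3  # from the article: three copies of each data block
WRITE_QUORUM = 2        # from the article: two copies must succeed


def quorum_write(replica_writers: Sequence[Callable[[bytes], bool]], payload: bytes) -> bool:
    """Return True once at least WRITE_QUORUM replicas acknowledge the write."""
    if len(replica_writers) != REPLICAS_PER_CHUNK:
        raise ValueError("expected exactly three replicas")
    acks = 0
    for write in replica_writers:
        try:
            if write(payload):
                acks += 1
        except OSError:
            pass  # treat an I/O failure as a missing acknowledgement
    return acks >= WRITE_QUORUM
```

With this rule a single slow or failed storage node does not block the write, while any two successful copies still overlap with any later majority.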

It supports high availability at the data block level, meaning that if a data block becomes unavailable, it can be recovered within seconds without the upper layers being aware of it.

Furthermore, PolarStore uses a technique similar to Copy On Write to create snapshots within seconds. This means that all of the data in a database can be backed up quickly regardless of how large the underlying data is, allowing PolarDB to support disk capacities as large as 100 TB.
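To see why a snapshot need not copy the data itself, consider this simplified sketch. `CowVolume` is a hypothetical in-memory model, not PolarStore's design: a snapshot copies only the block map, and later writes replace map entries instead of modifying the blocks that a snapshot still references.

```python
from typing import Dict, Optional


class CowVolume:
    """Toy volume whose snapshots share unmodified blocks with the live data."""

    def __init__(self) -> None:
        self._blocks: Dict[int, bytes] = {}               # live map: block number -> contents
        self._snapshots: Dict[str, Dict[int, bytes]] = {}

    def write(self, block_no: int, data: bytes) -> None:
        # bytes objects are immutable, so replacing the entry leaves snapshot views intact
        self._blocks[block_no] = data

    def snapshot(self, name: str) -> None:
        # copies references in the block map only, never the block contents
        self._snapshots[name] = dict(self._blocks)

    def read(self, block_no: int, snapshot: Optional[str] = None) -> Optional[bytes]:
        source = self._blocks if snapshot is None else self._snapshots[snapshot]
        return source.get(block_no)
```

The cost of `snapshot` here depends on the number of map entries rather than on the volume of data, which is the property that makes fast backups of very large databases plausible.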

Computing and storage nodes are connected via a 25 Gigabit RDMA network connection, ensuring that data transmission flow will not be bottlenecked by network speeds.

We will analyze how PolarDB improves on the MySQL kernel in terms of performance optimizations and describe the roadmap of PolarDB to help you understand the internal operating mechanisms of the PolarDB database.

Naively, if read/write instances and read-only instances share the same underlying data and logs, you would only need to point the data directory in the read-only instance's configuration file at the read/write instance's data directory, and it should work right away. However, this approach would raise a number of issues:

1. If a read/write instance has changed a particular piece of data, the Buffer Pool caching mechanism means the data page may not yet have been flushed to disk, so the read-only instance cannot see the change. If we had to flush to disk on every change, how would the database be any different from a plain file?

2. Let us look at a transaction in more detail. Suppose a read/write transaction changes 3 data pages, 2 of which have been written to disk and 1 of which has not. If a read-only instance now runs a query that needs these data pages, it will see inconsistent data. To put it another way, the MVCC mechanism on the read-only node would be corrupted.

3. Consider DDL. If a table is deleted on a read/write instance, what happens when a read-only instance still needs to query the table? Where would the read-only instance go to query the data? The IBD file has already been destroyed by the read/write instance, so the poor read-only instance has no choice but to core dump.

4. If the read/write instance is in the middle of writing a data page and the read-only instance needs to read the same data page, the read-only instance may well read a page that is only half written. When the checksum then inevitably fails, the database will simply crash.

Therefore, on careful thought, having multiple database instances share the same copy of the data is no simple task. To solve the issues above, the read-only instance must update its data in step with the read/write instance, and a complete dirty page flush mechanism is also required. Modern relational databases already have a transaction log, the Redolog, which records changes to data pages. In MySQL, these files are named ib_logfileXX. Borrowing from the Binlog replication framework, PolarDB uses this transaction log to build a data replication method in which the read-only instance only needs to apply, in order, the log produced by the read/write instance. StandBy and Replica nodes both need to update the data structures in memory, but a StandBy node also has to write the incremental changes to its own data files and logs because it maintains an independent copy of the data and logs. A Replica does not need to do this. Because the MySQL Redolog, unlike Binlog, records changes to physical data pages (although it has logical parts too), we call this kind of replication physical replication.
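As a rough illustration of applying a physical log sequentially, consider the sketch below. The record layout (`RedoRecord` with an LSN, a page id, an offset, and raw bytes) is an assumption made for this example and is not the InnoDB or PolarDB redo format. Skipping pages that are not cached is likewise an assumption of the sketch.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

PageId = Tuple[int, int]  # (tablespace id, page number), hypothetical identifier


@dataclass(frozen=True)
class RedoRecord:
    lsn: int       # log sequence number, increasing in log order
    page: PageId   # page the change belongs to
    offset: int    # byte offset inside the page
    data: bytes    # new bytes to place at that offset


def apply_redo(
    pages: Dict[PageId, bytearray],
    page_lsn: Dict[PageId, int],
    records: Iterable[RedoRecord],
) -> int:
    """Apply records in LSN order to in-memory pages; return how many were applied."""
    applied = 0
    for rec in sorted(records, key=lambda r: r.lsn):
        if rec.page not in pages:
            continue  # page is not cached on this node, nothing to update in memory
        if rec.lsn <= page_lsn.get(rec.page, 0):
            continue  # change is already reflected in the page
        page = pages[rec.page]
        page[rec.offset:rec.offset + len(rec.data)] = rec.data
        page_lsn[rec.page] = rec.lsn
        applied += 1
    return applied
```

No SQL is parsed or optimized at any point: each record names a page and an offset, so applying it is a direct byte update.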

Since the Redolog does not record the SQL executed by the user but only the final result, that is, the changes made to data pages by executing the SQL, replication based on it requires no SQL parsing or SQL optimization. MySQL locates the data page in the corresponding file directly, seeks to the specified offset, and applies the update. Performance can therefore be pushed to the extreme, since the concurrency granularity changes from the table level to the data page level. Of course, this brings a host of new problems, some of which are described below.

MVCC in physical replications. MVCC in MySQL relies on Undo to retrieve multiple versions of data. If the Primary node needs to delete an Undo data page while a Replica node is still reading it, there will be problems. The StandBy node suffers from a similar issue, so we provide two methods for our users. In the first, all Slave nodes regularly report to the Primary node the largest Undo data page that they can currently allow to be deleted, and the Primary node plans its purging accordingly. In the second, after the Primary node has deleted an Undo data page and the Slave has received the log, the Slave determines whether the data page is still in use. If it is, the operation is put on hold, and an error is reported to the user if the operation times out. Furthermore, so that the Slave can perceive the beginning and end of a transaction as well as its timestamp, we have added a number of logical log types to the log.
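The first method amounts to taking a minimum over what the nodes report. A minimal sketch, assuming each node reports a single integer limit (everything at or below it may be purged); `purge_limit` and the integer encoding are hypothetical.

```python
from typing import Mapping


def purge_limit(primary_limit: int, reported_limits: Mapping[str, int]) -> int:
    """Largest Undo position the Primary may purge without breaking any Slave reader.

    primary_limit: what the Primary could purge based on its own readers.
    reported_limits: latest limit reported by each Slave, keyed by node name.
    """
    # The slowest reader anywhere in the cluster decides how far purging may go.
    return min([primary_limit, *reported_limits.values()])
```

For example, if the Primary could purge up to 100 but one Replica reports 80, purging stops at 80 until that Replica advances.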

DDL in physical replications. A Replica node may still have requests that need to be performed with a table that’s already been deleted by the Primary node. To deal with this, we have determined that if the Primary performs an operation that changes table structure, then before the operation returns success, we must first notify all Replica nodes (with a timeout limit) so that they know that the table has already been deleted and further requests will fail. In practice, we can use MDL locking to control this process. Of course, this kind of forced synchronization operation can significantly impact performance, so we will continue to optimize the DDL.

Data replication in physical replications. Aside from replicating data at the engine layer, PolarDB also has to replicate the table structure files of the MySQL Server layer, for example frm and opt files. It also has to deal with cache consistency at the Server layer, including permission information and table-level statistics.

Concurrent application of logs in physical replications. Since physical replication logs allow concurrency at the data page level, PolarDB needs to take full advantage of this while ensuring that logs are applied and transactions are rolled back correctly once the database is running. This portion of the code logic is similar to the logic MySQL uses for crash recovery. PolarDB has reused and adapted that code, adding a number of performance optimizations to ensure that physical replication is both fast and stable.
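One common way to apply a log concurrently while keeping per-page order is to send all records for the same page to the same worker. The sketch below assumes that approach and is not taken from PolarDB: `partition_by_page`, `apply_parallel`, and the `(lsn, page_id, payload)` tuple shape are hypothetical, and page ids are plain integers.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, Tuple

Record = Tuple[int, int, bytes]  # (lsn, page_id, payload), hypothetical shape


def partition_by_page(records: Sequence[Record], workers: int) -> List[List[Record]]:
    """Split records into per-worker queues; one page always maps to one worker."""
    buckets: List[List[Record]] = [[] for _ in range(workers)]
    for rec in records:  # records arrive in log order, so each bucket stays ordered
        buckets[rec[1] % workers].append(rec)
    return buckets


def apply_parallel(records: Sequence[Record], workers: int,
                   apply_one: Callable[[Record], None]) -> None:
    """Apply records with several threads while preserving per-page log order."""
    def run(bucket: List[Record]) -> None:
        for rec in bucket:
            apply_one(rec)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(run, partition_by_page(records, workers)))  # surfaces worker errors
```

Changes to different pages may be applied in any relative order, which is exactly the freedom that page-level granularity provides over table-level replication.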

Change Buffer problems in physical replications. In essence, the Change Buffer is a special cache mechanism used to reduce the IO overhead of secondary indexes. When the corresponding secondary index page has not been read into memory, the operation is temporarily cached. When the data page is subsequently read into memory, the cached operation is applied. This feature comes with its own problems. For example, the Primary node, because it has not yet read the data page into memory, might still have the operation cached in the Change Buffer, while the StandBy node might already have loaded the data page into memory because of a different query, triggering a Change Buffer merge. This causes inconsistency, so to solve the problem we introduce the concept of a shadow page. The shadow page saves the unchanged data page, merges the Change Buffer records with the original data page, and disables redo logging for the Mtr. This prevents the page from being added to the flush list. Furthermore, we need to add a new log type to ensure that user threads do not see the intermediate state of a Change Buffer merge.

Dirty page control in physical replications. A Primary node cannot flush dirty pages without any control, because this would cause the Replica node to read inconsistent data pages and data pages containing uncommitted transactions. Our strategy is for the Replica to cache all data changes that have not yet been written to disk (that is, the Redolog). Only after the Primary node has flushed the dirty pages to disk can the log cached on the Replica node be released. Once this strategy is applied, the memory usage of the Replica and the speed at which the Primary flushes dirty pages become linked, so we have to adjust the dirty page flushing algorithm on the Primary node. We also have to account for frequently updated data pages slowing down dirty page flushing on the Primary node.
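The coupling between Primary flushing and Replica memory can be sketched as a queue that is trimmed whenever the Primary reports progress. `ReplicaRedoCache` and the notion of a single "flushed LSN" reported by the Primary are assumptions made for illustration.

```python
from collections import deque
from typing import Deque, Tuple


class ReplicaRedoCache:
    """Holds redo records on a Replica until the Primary has flushed past them."""

    def __init__(self) -> None:
        self._pending: Deque[Tuple[int, bytes]] = deque()  # (lsn, record), increasing LSN

    def append(self, lsn: int, record: bytes) -> None:
        if self._pending and lsn <= self._pending[-1][0]:
            raise ValueError("LSNs must arrive in increasing order")
        self._pending.append((lsn, record))

    def release_up_to(self, primary_flushed_lsn: int) -> int:
        """Drop records the Primary has made durable in the data files; return the count."""
        released = 0
        while self._pending and self._pending[0][0] <= primary_flushed_lsn:
            self._pending.popleft()
            released += 1
        return released

    def __len__(self) -> int:
        return len(self._pending)
```

If the Primary flushes slowly, `len(cache)` keeps growing, which is the link between Replica memory usage and Primary flush speed that the paragraph points out.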

We need to consider Query Cache and discard/import table space problems in physical replications.

From the perspective of compatibility, the PolarDB storage engine is currently compatible only with InnoDB tables, not with MyISAM or TokuDB. Since many system tables use MyISAM, they need to be converted.

Physical replications can bring huge performance increases, but logical logs are still useful due to their high compatibility, so PolarDB retains Binlog logic to make things more convenient for the user.

Traditional MySQL databases do not have the concept of instance roles, so we can only query the read_only variable to determine the current role of an instance. PolarDB introduces three roles to satisfy the needs of different use cases. The Primary node indicates its role at the time of initialization, while StandBy and Replica nodes are indicated in subsequent settings files.

Failover.

There are currently three supported kinds of switch: Primary can be demoted to StandBy, StandBy can be promoted to Primary, and Replica can be promoted to Primary. Each switch is logged in a system table to facilitate later queries. Primary and StandBy nodes each have their own data files, so data inconsistencies are not uncommon in asynchronous replication mode. We provide a mechanism to ensure that the data in a new StandBy node is consistent with the data in the Primary node. The idea is quite simple: when a new StandBy node is restarted, it queries the new Primary and rolls back the extra data. Here we do not use SQL FlashBack based on Binlog. Instead, we use data page FlashBack based on Redolog for higher efficiency and accuracy. Because Primary and Replica nodes share the same copy of the data, inconsistency between them is not a concern, so after a switch the new Primary node only needs to finish applying the logs that it had not yet applied. Since this is a parallel operation, it can be completed very quickly.

Here we briefly introduce the failover logic of the beta version. First, the management system detects that the Primary is unavailable (or initiates an O&M operation). It then connects to the Primary (if possible) and kills all user connections, sets the read_only flag, and configures PolarStore so that this IP is read-only (Primary and Replica nodes must be on different computing nodes). Next, the system connects to the Replica that is about to be promoted, configures PolarStore to grant read/write access to this IP, and MySQL re-mounts PolarFS in read/write mode. Finally, the statement that promotes the Replica to Primary is executed. Under normal circumstances the whole process takes little time, about 30 seconds per switch, unless the replication latency between the Primary and Replica nodes is too high. In that case the switch takes longer, but it is still faster than Binlog-based replication by at least two orders of magnitude. We will continue optimizing this feature to improve performance further.

Traditional Binlog replication offers only two modes, asynchronous and semi-synchronous. In PolarDB, we have added a strong synchronous mode, which requires Slave nodes to finish applying the data before success is returned. This is good news for users who are particularly sensitive to replication lag, because it ensures that the data queried on read-only instances is consistent with the data queried on read/write instances. PolarDB also supports delayed replication, which can replicate up to a specific transaction or point in time. These two features are mainly provided for StandBy. To further reduce replication latency, if a Replica node notices that its own latency has exceeded a certain threshold, it automatically activates Boost mode (which affects reads on the instance) to accelerate replication. If a Primary node notices that the latency of a Replica is too high, then for safety it temporarily removes the Replica node from the replication topology. At that point, all we have to do is restart the Replica (since the Replica does not have to perform crash recovery, restarting is quite fast). PolarDB also supports replication filtering, which allows us to replicate only certain databases or specific tables. Since data we are not interested in does not have to be replicated, this can further reduce replication latency. Many of the features mentioned here are unavailable in the beta release but will be made available in the near future.

As with Binlog management, PolarDB provides tools and mechanisms for managing Redolog. First, unlike the circular Redolog of traditional MySQL but similar to Binlog, Redolog files in PolarDB are managed with increasing file serial numbers, and corresponding delete commands are provided for system management. PolarDB gives customers the ability to restore to any point in time based on PolarStore's full backups and incremental Redolog. PolarDB also has a tool designed specifically to parse Redolog, called mysqlredolog. Like the Binlog parsing tool mysqlbinlog, this tool is extremely useful for system diagnostics. PolarDB has added a number of its own log types to Redolog, along with a version control mechanism for compatibility. In traditional MySQL, only data files support O_DIRECT. To accommodate newer file systems, PolarDB also supports O_DIRECT writes for Redolog.
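Point-in-time restore from full backups plus serially numbered log files can be planned roughly as follows. This is a sketch under assumptions: `plan_restore` is hypothetical, backups are identified only by their timestamps, and each Redolog file is assumed to cover the interval from its own start time to the start time of the next file.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple


def plan_restore(
    backup_times: Sequence[float],             # sorted timestamps of full backups
    redo_files: Sequence[Tuple[int, float]],   # (serial number, start time), sorted by serial
    target: float,
) -> Tuple[float, List[int]]:
    """Pick the latest full backup at or before target and the log files to replay."""
    i = bisect_right(backup_times, target) - 1
    if i < 0:
        raise ValueError("no full backup at or before the target time")
    base = backup_times[i]
    needed: List[int] = []
    for idx, (serial, start) in enumerate(redo_files):
        # a file ends where the next one starts; the newest file is still open
        end = redo_files[idx + 1][1] if idx + 1 < len(redo_files) else float("inf")
        if start <= target and end > base:
            needed.append(serial)
    return base, needed
```

Replay would then start from the chosen backup, apply the listed files in serial order, and stop at the target time inside the last file.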

Furthermore, PolarDB has also developed Undo logs in depth, currently supporting Online Undo Truncate. Never again do we have to worry that our ibdata file is too large.

With so many new features, it’s only natural that PolarDB has also added a number of new commands. For example, we can use SHOW POLAR STATUS to check on the status of PolarDB, or SHOW POLAR REPLICAS to check up on all connected Replica nodes. START POLAR SLAVE is used to restart a Replica, while SHOW POLAR LOGS is used to check the produced Redolog file. PolarDB also adds a number of new tables in information_schema. If you’re interested, you can read more to see what exactly is saved here.

The current database kernel supports migration from RDS 5.6 to PolarDB (this will be made available to users in a few months). The process looks like this: first, we use xtrabackup on RDS 5.6 to create a full data backup, and then we use xtrabackup to restore it on the computing node. Once this is complete, we use the O&M tools in PolarFS to move all of the data files to PolarStore, at which point we can start PolarDB with certain parameters. PolarDB then reformats the RDS 5.6 log files into PolarDB Redolog, after which Binlog replication can be used to catch up on incremental data. Once we have caught up to the point in time on the RDS 5.6 read/write database specified by the user, we set RDS 5.6 to read-only and restart PolarDB. Next we turn off Binlog replication, and finally we switch the VIP to PolarDB, at which point the migration is complete.

Aside from the Redolog parsing tool mentioned above, we have also made significant changes to the source code. Because of that, we have adapted the native test case framework that ships with MySQL to ensure that all of the test cases still pass wherever the data and logs are stored (local FS/disk or PolarFS/PolarStore).

Besides a large number of new features, PolarDB also features significant performance optimizations, some of which we will go over below:

1. PolarDB has implemented a number of latch optimizations for high concurrency scenarios, breaking latches up into several finer-grained latches and changing some latches to be acquired incrementally so as to avoid lock contention. Examples include the Undo segment mutex and the log system mutex. PolarDB has also changed some hotspot data structures into lock-free structures, such as the MDL lock on the Server layer.

2. PolarDB has implemented optimizations for SQL statements in which the user specifies a primary key. This saves some of the optimizer's work for higher performance.

3. Physical replication is crucial in PolarDB, so we have not only used data page parallelism to increase performance but have also optimized the steps that replication depends on. For example, we have added a length field to MTR logs to reduce the CPU overhead of log parsing. This simple optimization reduces log parsing time by 60%. We also reuse the in-memory data structure of the Dummy Index to reduce Malloc/Free overhead and further improve performance.

4. Sequential write performance of the Redolog heavily affects the overall performance of the database. To reduce the impact of Redolog file switching on performance, we pre-allocate log files in the background using a method similar to Fallocate. Furthermore, modern SSDs are often 4 KB aligned, but MySQL still flushes to disk according to the 512-byte alignment of older hard drives, so modern drives often end up performing many unnecessary operations. We have made several optimizations on this front to take better advantage of SSDs.

5. In PolarDB, Replica nodes currently apply logs in batches. Therefore, a read request on a Replica does not need to create a new ReadView before a new batch of logs is applied and can instead reuse the previously cached one. This optimization also improves read performance on Replica nodes.

6. There are also optimizations for temporary tables in PolarDB. For example, the Change Buffer can be turned off for temporary tables, which reduces the number of operations needed to make changes. As another example, we can avoid index locking when applying logs.

PolarDB has adopted almost all of the optimizations in AliSQL, like optimizations to the core log function log_write_up_to, LOCK_grant locking optimization, jemalloc memory allocation use, log Group Commit optimization, Double RedoLog Buffer optimization, Buffer Pool Hazard Pointer optimization, concurrency tickets adaptation optimization, and read-only task optimizations. For details, head to our GitHub at AliSQL.

7. We have created a set of algorithms that apply logs only when necessary, so that only data pages in the Buffer Pool need to have logs applied. Furthermore, logs are applied not only on backend threads but also on user threads. Together, these two points greatly reduce slowness and latency when applying logs. Because we use our own PolarFS file system, dirty page writing and throughput differ considerably from the Ext4 file system. We have also optimized the page cleaner and the double write buffer (which can still be enabled even though PolarFS supports atomic writes), for example by increasing the number of dblwr buffers and adjusting the algorithm and priority of the page cleaner thread.
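The length-field optimization from item 3 can be illustrated with a generic length-prefixed record format. The layout used here (a 1-byte type followed by a 4-byte little-endian body length) is purely an assumption for this sketch and is not the MTR log format. The point is only that a reader can skip a record body without parsing it.

```python
import struct
from typing import Iterable, List, Tuple

HEADER = struct.Struct("<BI")  # hypothetical header: 1-byte record type, 4-byte body length


def encode(records: Iterable[Tuple[int, bytes]]) -> bytes:
    """Serialize (type, body) pairs, prefixing each body with its length."""
    out = bytearray()
    for rtype, body in records:
        out += HEADER.pack(rtype, len(body)) + body
    return bytes(out)


def scan(buf: bytes, wanted_type: int) -> List[bytes]:
    """Collect bodies of one record type, jumping over all other bodies unparsed."""
    pos, found = 0, []
    while pos < len(buf):
        rtype, length = HEADER.unpack_from(buf, pos)
        pos += HEADER.size
        if rtype == wanted_type:
            found.append(buf[pos:pos + length])
        pos += length  # the length field tells us where the next record starts
    return found
```

Without a length field, finding the next record boundary requires decoding every record body, which is where the CPU cost of log parsing comes from.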

PolarDB is currently in beta phase, but we will be adding even more exciting features and performance optimizations in the future, for example:

1. Currently, PolarDB's high availability switching and instance availability detection both rely on a third-party management system. Furthermore, because Primary and Replica nodes share data and logs, if communication between the two is interrupted, the Replica node cannot sense the state of the data files and will be unable to provide service. To better solve these problems, PolarDB will implement a self-governed cluster mechanism similar to the Raft protocol to detect availability and perform switching automatically. For a specific implementation, see the three-node self-governed cluster approach in the financial edition of RDS.

2. We can introduce features similar to Materialized View on Replica nodes. This does not involve the concept of database views, but rather the ReadView in PolarDB.

3. As described above, DDL is currently force-synchronized, so we have to optimize it further. For example, we can introduce multiple file versions to solve the issue of Replica reads. Furthermore, in many situations Online DDL still needs to copy entire data files, so we may be able to use the same concepts as PolarStore COW to spread the IO pressure of this copy operation across subsequent DMLs.

4. Many Alibaba Cloud users need to migrate services to the cloud, and since physical migration can only be used with MySQL databases, logical SQL import is the most common method. Therefore we need to improve performance as much as possible when importing large amounts of data. One simple optimization is to order the data by primary key. We record the location of the last insertion in the BTree, so further insertions do not have to start scanning from the Root node. When importing data, we can also temporarily turn off Redolog writing, similar to the Nologging mechanism in Oracle, which helps to improve performance.

5. PolarDB can also optimize the storage engine locking mechanism, using more lock-free structures to reduce lock conflicts. We are also considering using the new Futex to replace the under-performing Spinlock. Of course, these changes need to be tested carefully before we can bring them online.

6. Since PolarFS/PolarStore can support 16 KB atomic writes at the data page level, we do not need mechanisms like the double write buffer, and we can further optimize writes of data pages. For example, there are mechanisms we can use to release the read lock on a data page while it is being flushed to disk. The IO priority functionality that PolarFS provides to PolarDB can be used to ensure that logs are written to disk with the highest priority. These changes offer huge performance increases, but just as with locking mechanisms, they must be tested properly before implementation.

7. Furthermore, we plan to port the latest data dictionary structure from MySQL 8.0, since MySQL needs these changes in order to support transactional DDL.

In summary, PolarDB is a database produced by the Alibaba Cloud database team specifically for the new age of cloud computing. It features a number of excellent optimizations and represents a key milestone in the evolution of products for the Cloud Computing 2.0 era as well as an important impetus for the open source database ecosystem. The public beta will be released in September, and we are looking forward to your feedback!

