ApsaraDB for POLARDB is a next-generation cloud-native database of Alibaba Cloud. It is fully compatible with open-source databases such as MySQL and PostgreSQL and highly compatible with Oracle syntax. You can migrate from Alibaba Cloud Relational Database Service (RDS) to ApsaraDB for POLARDB in one click, without modifying the application code. This switch gives you greater capacity, higher performance, lower costs, and higher elasticity.

Currently, ApsaraDB for POLARDB is Alibaba Cloud's fastest-growing database product. It is widely used in Internet finance, government convenience services, new retail, education, gaming, social networking, live streaming, and other industries.

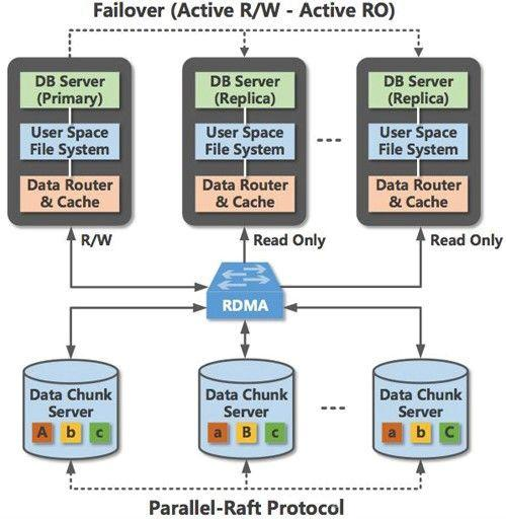

As a next-generation cloud-native database with computing-storage separation, ApsaraDB for POLARDB supports compute nodes that implement SQL parsing and optimization, parallel queries, and lockless high-performance transaction processing. The compute nodes synchronize their memory state with each other through a high-throughput physical replication protocol.

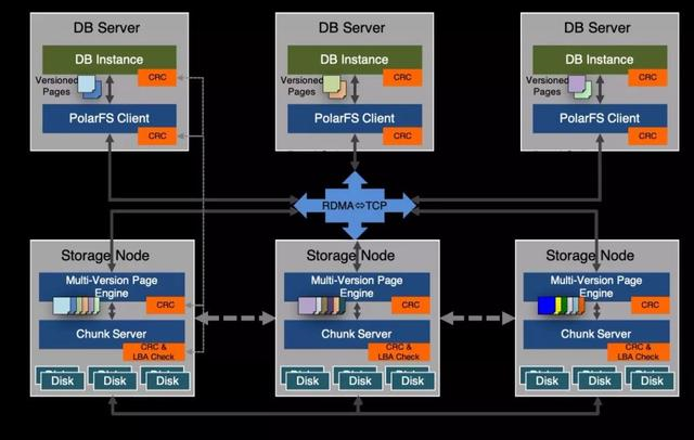

ApsaraDB for POLARDB is built on the distributed file system PolarFS, which ensures strong consistency among multiple data replicas through the Parallel Raft algorithm. At the storage layer, multiversion concurrency control (MVCC) is implemented for the storage engine to provide snapshot isolation across the compute nodes of a cluster.

Through an intelligent interconnection protocol based on database semantics, operators such as filter and projection are pushed down from the compute layer to the storage layer for execution. Compute nodes and storage nodes are interconnected through a 25 Gbit/s high-speed remote direct memory access (RDMA) network and communicate through a user-space network protocol stack that bypasses the kernel. This keeps the latency of transactions and query statements low and reduces the latency of state synchronization between compute nodes.
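To illustrate why pushing filter and projection down to the storage layer helps, the following minimal sketch counts the values that cross the network with and without pushdown. The `StorageNode` class, its methods, and the sample rows are hypothetical and are not part of the actual POLARDB protocol.

```python
from typing import Callable, Dict, List, Sequence

Row = Dict[str, object]


class StorageNode:
    """Hypothetical storage node that holds the rows of one table."""

    def __init__(self, rows: List[Row]) -> None:
        self.rows = rows

    def scan(self) -> List[Row]:
        # No pushdown: every column of every row is shipped to the compute node.
        return [dict(r) for r in self.rows]

    def scan_pushdown(self, predicate: Callable[[Row], bool],
                      columns: Sequence[str]) -> List[Row]:
        # Pushdown: the filter and the projection run next to the data.
        return [{c: r[c] for c in columns} for r in self.rows if predicate(r)]


def values_shipped(rows: List[Row]) -> int:
    """Count column values in a result set as a stand-in for network traffic."""
    return sum(len(r) for r in rows)


node = StorageNode([
    {"id": i, "city": "Hangzhou" if i % 4 == 0 else "Beijing", "amount": i * 10}
    for i in range(100)
])

# Compute-side evaluation: ship everything, then filter and project locally.
full = node.scan()
local = [{"id": r["id"], "amount": r["amount"]}
         for r in full if r["city"] == "Hangzhou"]

# Storage-side evaluation: ship only matching rows and requested columns.
pushed = node.scan_pushdown(lambda r: r["city"] == "Hangzhou", ["id", "amount"])
```

Both paths produce the same result set, but in this toy data the pushdown path ships 50 values instead of 300.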

Based on the advanced architecture featuring separated computing and storage, ApsaraDB for POLARDB can elastically scale out transactions from 1 compute node (with 2 CPU cores) to 16 compute nodes (with up to 1,000 CPU cores). The storage capacity of a single instance can be elastically expanded from 10 GB (measured by usage) to 100 TB.

ApsaraDB for POLARDB supports real-time scale-out by separating compute nodes and storage nodes. In normal cases, multiple database replicas are created to solve the bottleneck created by the limited computing capability of a single database instance and provide database scale-out capabilities.

This requires storing multiple copies of full data and results in excessive network overhead due to frequent log synchronization. In traditional database clusters, adding replicas requires synchronizing all incremental data. This increases synchronization latency.

ApsaraDB for POLARDB stores database files and log files such as redo logs in a shared storage device, allowing the primary instance and all replicas to share the same copy of full data and incremental log data. Different nodes can read consistent data through multiversion concurrency control (MVCC) by synchronizing the metadata in the memory. This solves problems related to data synchronization between the primary instance and replicas, reduces the cross-node network overhead, and lowers the latency of inter-replica synchronization.

ApsaraDB for POLARDB has made many kernel optimizations to improve transaction performance. Lockless and parallel algorithms are used to remove performance bottlenecks, reduce and even eliminate inter-lock conflicts, and improve system scalability.

We optimized frequently accessed data such as inventory data in ApsaraDB for POLARDB based on our experience with large-scale and high-concurrency scenarios, such as the Double 11 Shopping Festival. For simple and repeated queries, ApsaraDB for POLARDB can return results directly from the storage engine. This reduces the overhead of the optimizer and executor.

Physical replication is also optimized for improved efficiency. For example, metadata is added to redo logs to reduce the CPU overhead of log parsing, which reduces the log parsing duration by 60%. The overhead of the memory clerk is reduced by reusing some data structures.

ApsaraDB for POLARDB also optimizes how redo logs are applied. For instance, logs are applied only to data pages that are in the buffer pool. The page cleaner and double write buffer are optimized to reduce costs. These optimizations allow ApsaraDB for POLARDB to far surpass MySQL in terms of performance. ApsaraDB for POLARDB performs up to 600% better than MySQL in benchmark evaluations that use a large number of concurrent writes, such as sysbench oltp_insert.

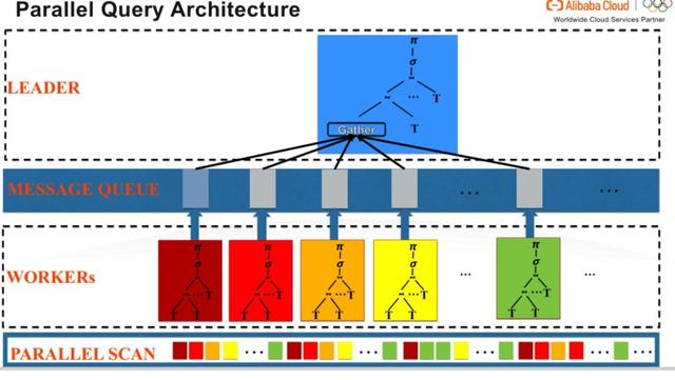

To improve the performance of complex queries such as subqueries and join queries (for example, in the TPC-H benchmark evaluation), ApsaraDB for POLARDB provides a query processor that supports parallel queries, in which a query is executed on multiple or all available CPU cores. The parallel query function divides a query task (currently, only SELECT statements are supported) into multiple subtasks for parallel processing based on the leader-worker concurrency model.

The leader thread creates a parallel query plan and coordinates components to perform the parallel query. The parallel query plan includes sub-actions such as parallel scan, parallel table joining, parallel sorting, parallel grouping, and parallel aggregation.

The leader and worker threads communicate with each other through the message queue. The worker thread sends data to the leader thread through the message queue. The leader thread sends control information to the worker thread also through the message queue.

The worker thread is responsible for executing tasks. The leader thread parses the query statement to create a parallel query plan and starts multiple worker threads for parallel task processing. To improve query efficiency, the worker threads directly copy plan shards from the leader thread to execute the query, without further optimization. This requires copying all nodes of the plan tree.

The worker threads return the intermediate result set to the leader thread after scanning, aggregation, sorting, and other operations. The leader thread collects all data sets from the worker threads, performs secondary operations such as merge sort and secondary GROUP BY, and returns the final results to the client.
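The leader-worker flow described above can be sketched in a few lines. This is only an illustration under simplifying assumptions: the `worker` and `leader` functions and the `(key, value)` row shape are hypothetical, Python threads stand in for database worker threads, and the control messages that the leader sends to workers are omitted.

```python
import queue
import threading
from collections import Counter
from typing import Dict, List, Tuple

Row = Tuple[str, int]  # (group key, value)


def worker(partition: List[Row], out: "queue.Queue") -> None:
    """Scan one partition and aggregate it locally."""
    partial: Counter = Counter()
    for key, value in partition:
        partial[key] += value
    # Send the intermediate result set to the leader through the message queue.
    out.put(dict(partial))


def leader(partitions: List[List[Row]]) -> Dict[str, int]:
    """Start one worker per partition, then merge the partial results."""
    results: "queue.Queue" = queue.Queue()
    threads = [threading.Thread(target=worker, args=(p, results))
               for p in partitions]
    for t in threads:
        t.start()
    # Secondary GROUP BY: combine exactly one partial result per worker.
    merged: Counter = Counter()
    for _ in threads:
        merged.update(results.get())
    for t in threads:
        t.join()
    # Final ordering of the combined result by group key.
    return dict(sorted(merged.items()))


rows = [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5), ("a", 6)]
partitions = [rows[0:2], rows[2:4], rows[4:6]]
result = leader(partitions)  # equivalent to SELECT key, SUM(value) ... GROUP BY key
```

The workers only produce partial sums. The final answer is correct because SUM can be re-aggregated by the leader, which is the role of the secondary GROUP BY step.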

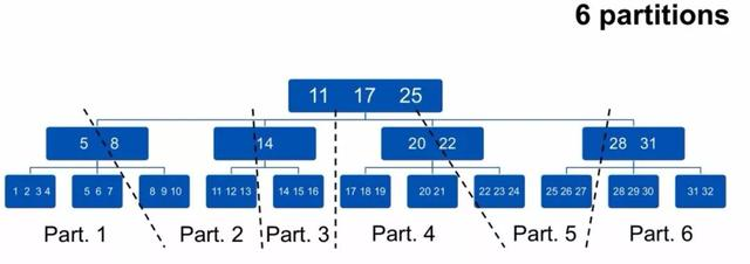

The parallel scan layer balances workloads based on the data structure features of the storage engine. The workload can be balanced among all worker threads by dividing scanned data into multiple partitions. If the storage engine is structured based on a B+ tree, partition division starts from the root. If the root cannot be divided into sufficient partitions to satisfy the specified degree of parallelism (DOP), division proceeds to the next layer.

If six partitions are required but the root node can only be divided into four partitions, the layer following the root node is divided. During a parallel query, the B+ tree is divided into as many partitions as possible so that scan segments are evenly allocated to multiple worker threads. When a worker thread finishes scanning a partition quickly due to a strong filter condition, it automatically attaches to the next partition for continuous scanning. The automatic attach method helps balance the loads among all threads.
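The level-by-level division can be sketched as follows. The `Node` class and the `split_into_partitions` function are hypothetical simplifications: a real B+ tree also stores separator keys in inner nodes, and workers would pull partitions from a shared queue to get the automatic attach behavior.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    keys: List[int] = field(default_factory=list)        # used only in leaves here
    children: List["Node"] = field(default_factory=list)

    @property
    def is_leaf(self) -> bool:
        return not self.children


def split_into_partitions(root: Node, dop: int) -> List[Node]:
    """Descend one level at a time until there are at least `dop` subtrees."""
    level = [root]
    while len(level) < dop and not all(n.is_leaf for n in level):
        next_level: List[Node] = []
        for n in level:
            next_level.extend([n] if n.is_leaf else n.children)
        level = next_level
    return level


def scan(node: Node) -> List[int]:
    """Return all keys under a subtree in key order."""
    if node.is_leaf:
        return list(node.keys)
    return [k for child in node.children for k in scan(child)]


# A root with four children, each of which has two leaves.
leaves = [Node(keys=[i * 10, i * 10 + 1]) for i in range(8)]
inner = [Node(children=leaves[i:i + 2]) for i in range(0, 8, 2)]
root = Node(children=inner)

# Six partitions are requested, the root offers only four, so the next
# level is divided and eight partitions are produced.
partitions = split_into_partitions(root, dop=6)
```

Producing more partitions than the requested degree of parallelism is intentional in this sketch: smaller partitions give idle workers something to pick up when another worker finishes early.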

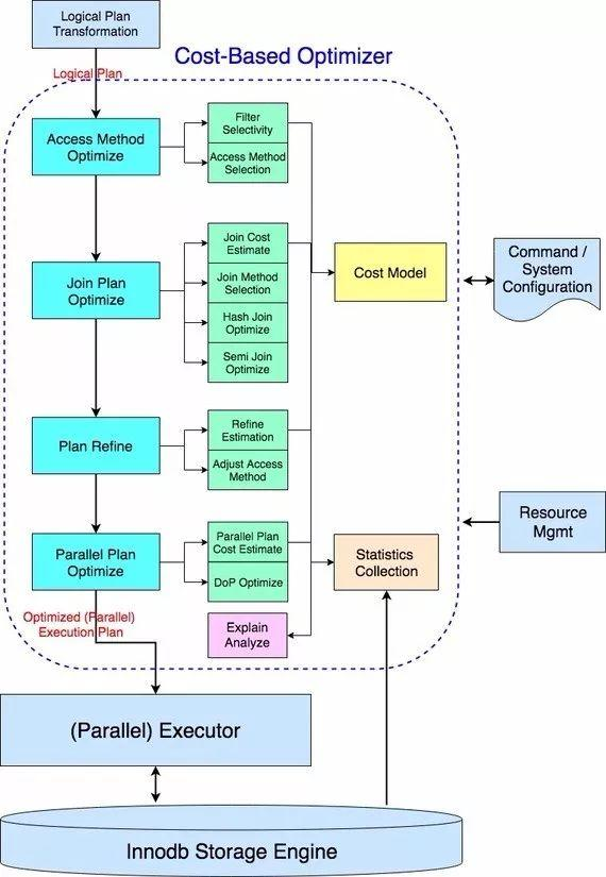

Because workloads on the cloud are diverse, a poorly chosen parallel query plan can result in slow queries. ApsaraDB for POLARDB provides a next-generation cost-based optimizer to solve the problem of slow queries. ApsaraDB for POLARDB implements Compressed Histogram to automatically detect and accurately describe frequently accessed data. The data access frequency and value space are included in selectivity calculation to solve the common problem of data skew.
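The article does not describe the internal layout of Compressed Histogram. As a rough illustration of the general idea, the sketch below assumes a common design in which the most frequent values keep exact counts and the remaining values are summarized in equi-height buckets. The function names, the bucket layout, and the sample data are all assumptions.

```python
from collections import Counter
from typing import Dict, List, Tuple

Bucket = Tuple[int, int, int, int]  # (low, high, row count, distinct values)
Histogram = Tuple[Dict[int, int], List[Bucket]]


def build_compressed_histogram(values: List[int], n_buckets: int,
                               top_k: int) -> Histogram:
    """Keep exact counts for the top_k values, bucket everything else."""
    counts = Counter(values)
    frequent: Dict[int, int] = dict(counts.most_common(top_k))
    rest = sorted(v for v in values if v not in frequent)
    buckets: List[Bucket] = []
    if rest:
        size = -(-len(rest) // n_buckets)  # ceiling division
        for i in range(0, len(rest), size):
            chunk = rest[i:i + size]
            buckets.append((chunk[0], chunk[-1], len(chunk), len(set(chunk))))
    return frequent, buckets


def equality_selectivity(hist: Histogram, value: int, total: int) -> float:
    """Estimate the fraction of rows where column = value."""
    frequent, buckets = hist
    if value in frequent:
        return frequent[value] / total           # exact for hot values
    for low, high, count, distinct in buckets:
        if low <= value <= high:
            return (count / distinct) / total    # uniform inside the bucket
    return 0.0


# Skewed column: one hot value (7) plus 100 values that appear once each.
values = [7] * 900 + list(range(100, 200))
hist = build_compressed_histogram(values, n_buckets=4, top_k=1)
hot = equality_selectivity(hist, 7, len(values))
cold = equality_selectivity(hist, 150, len(values))
```

An estimator that assumes every distinct value is equally likely would give both predicates a selectivity of about 1/101. Tracking the hot value separately yields 0.9 for `7` and 0.001 for `150`, which is the kind of skew handling the text refers to.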

ApsaraDB for POLARDB uses Compressed Histogram to estimate a wide range of costs, including the size of table joining results, which is critical for optimizing the join cost and join order. In MySQL, such estimations are rough and made by an empirical formula, resulting in a significant error when you estimate rows_per_key with indexes or estimate default parameter values without indexes. The error increases in processes where tables are joined, resulting in the inefficient execution of the parallel query plan.

ApsaraDB for POLARDB uses Compressed Histogram to merge overlapping parts for calculation and adapt the estimation algorithm based on the histogram type. This improves estimation accuracy and helps the optimizer choose the optimal join order. In a test on randomly created data with a normal distribution, the query speed was increased by 240% to 1200% after the optimization of multi-table union queries. In a TPC-H test, the join order of multiple queries was changed, and performance was improved by 77% to 332%.

ApsaraDB for POLARDB also uses Compressed Histogram to optimize the logic of record_in_range. MySQL uses index dive to estimate the number of records in a range for an indexed filter condition. This operation accounts for a high proportion of CPU usage when short queries are processed in online transaction processing (OLTP). After index dive is replaced by histogram estimation, the response time for most queries in the core e-commerce business of Taobao was reduced by half.
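Estimating a range from a histogram needs only arithmetic on bucket boundaries, which is why it is cheaper than descending the index for every range. The following sketch shows one simple way to do it, assuming equi-height buckets and a uniform distribution inside each bucket. The `estimate_rows_in_range` function and the bucket layout are hypothetical and do not represent the POLARDB implementation.

```python
from typing import List, Tuple

Bucket = Tuple[float, float, int]  # (low, high, row count), with low < high


def estimate_rows_in_range(buckets: List[Bucket], lo: float, hi: float) -> float:
    """Estimate the number of rows with lo <= value <= hi."""
    total = 0.0
    for low, high, count in buckets:
        # Length of the part of this bucket that the range covers.
        overlap = min(hi, high) - max(lo, low)
        if overlap > 0:
            total += count * overlap / (high - low)
    return total


# Three buckets of 1,000 rows each. The last bucket is wide, so its rows
# are spread thinly over the value space.
buckets = [(0, 10, 1000), (10, 20, 1000), (20, 100, 1000)]
estimate = estimate_rows_in_range(buckets, 5, 30)
```

For the range 5 to 30, the estimate is half of the first bucket (500 rows), all of the second (1,000 rows), and one-eighth of the third (125 rows), for a total of 1,625 rows.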

ApsaraDB for POLARDB uses the PolarFS distributed file system developed by Alibaba Cloud as its storage layer. PolarFS is a distributed storage system that offers low latency and high performance. It uses a full-user space I/O stack and is specially designed for database applications, marking the first of its kind in China. For more information, refer to the paper "PolarFS: An Ultra-low Latency and Failure Resilient Distributed File System for Shared Storage Cloud Database" presented at the VLDB 2018 conference. PolarFS is on an equal footing with local SSD architectures in terms of low latency and high I/O performance. In addition, PolarFS provides the distributed cluster feature to easily scale up the storage capacity and performance.

As a storage infrastructure that is closely integrated with ApsaraDB for POLARDB, PolarFS features excellent performance and scalability. It also provides a range of database-oriented storage technologies for high reliability and availability. These technologies have been accumulated in the course of addressing the challenging business requirements of ApsaraDB for POLARDB customers and conducting extensive R&D and O&M activities on the public cloud.

PolarFS can store the multi-version pages that are dynamically created by the B+ tree of the ApsaraDB for POLARDB storage engine. This allows ApsaraDB for POLARDB to distribute queries among multiple compute nodes and maintain global snapshot isolation semantics.

To reduce read/write conflicts, modern databases use MVCC to provide different transaction isolation levels such as read committed (RC), snapshot isolation (SI), and serializable snapshot isolation (SSI). Under MVCC, the B+ tree dynamically maintains a series of versions on each page. Multiple transactions in parallel execution can separately access different versions on a page.

Data is replicated and synchronized with a certain latency across nodes in an ApsaraDB for POLARDB cluster. Therefore, each compute node may correspond to different versions on the pages of the B+ tree. The multi-version storage feature provides the appropriate version of each node. In ApsaraDB for POLARDB, when a compute node writes a page to PolarFS, it needs to provide the version (log sequence number [LSN]) of the page. PolarFS stores the page and its version metadata. When reading pages, a compute node provides version information to retrieve the appropriate (historical) version from storage.

The database layer of ApsaraDB for POLARDB periodically sends PolarFS the lower bound of the versions that are still in use by the compute nodes of a cluster. PolarFS clears historical versions that are no longer needed based on the received version information.
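The write, read, and cleanup steps can be pictured with a small in-memory model. The `VersionedPageStore` class and its methods are hypothetical and only illustrate the semantics described above: a read returns the newest page version that is not newer than the reader's LSN, and cleanup keeps every version that the slowest node may still need.

```python
import bisect
from typing import Dict, List, Optional, Tuple


class VersionedPageStore:
    """Hypothetical store that keeps several LSN-tagged versions per page."""

    def __init__(self) -> None:
        # page_id -> list of (lsn, data), kept sorted by lsn
        self._pages: Dict[int, List[Tuple[int, bytes]]] = {}

    def write(self, page_id: int, lsn: int, data: bytes) -> None:
        versions = self._pages.setdefault(page_id, [])
        versions.append((lsn, data))
        versions.sort(key=lambda v: v[0])

    def read(self, page_id: int, read_lsn: int) -> Optional[bytes]:
        """Return the newest version whose LSN is <= read_lsn, if any."""
        versions = self._pages.get(page_id, [])
        idx = bisect.bisect_right([lsn for lsn, _ in versions], read_lsn)
        return versions[idx - 1][1] if idx else None

    def purge(self, min_lsn: int) -> None:
        """Drop versions that no node reading at >= min_lsn can still see."""
        for page_id, versions in list(self._pages.items()):
            idx = bisect.bisect_right([lsn for lsn, _ in versions], min_lsn)
            # versions[idx - 1] is still visible at min_lsn, so keep it.
            self._pages[page_id] = versions[max(idx - 1, 0):]

    def version_count(self, page_id: int) -> int:
        return len(self._pages.get(page_id, []))


store = VersionedPageStore()
store.write(1, 100, b"v100")
store.write(1, 200, b"v200")
store.write(1, 300, b"v300")

replica_view = store.read(1, 250)   # a replica that has replayed up to LSN 250
primary_view = store.read(1, 310)   # the primary is ahead of the replica

# The lower bound across both nodes is 250, so only the LSN 100 version goes.
store.purge(min(250, 310))
```

After the purge, the replica at LSN 250 still reads the LSN 200 version, while the version written at LSN 100 has been reclaimed.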

Data reliability is assured in the design of ApsaraDB for POLARDB. In a real distributed system, data reliability may be affected by data errors that result from bugs in hardware, firmware, and software, and from faults in disks, networks, or memory. Reliability problems of storage result from silent errors, such as lost writes, misdirected writes, and block corruption. Reliability problems of networks and memory result from bit flips and software bugs.

ApsaraDB for POLARDB and PolarFS provide end-to-end data verification to ensure data reliability when exceptions occur, such as hardware and software faults and misoperations.

When a compute node writes data, ApsaraDB for POLARDB checks the correctness of data during the time from when the data leaves the storage engine of the compute node to when the data is stored on the disk of a storage node in PolarFS. This prevents the writing of abnormal data.

When data is read, PolarFS and the storage engine of ApsaraDB for POLARDB verify the checksum of the data to identify silent errors in disks. This prevents silent errors from spreading.

When service traffic is low, data is continuously scanned in the background to check whether the checksum of single-replica data is correct and whether data is consistent across all replicas. Appropriate verification is essential for data migration. When performing data migration in any form, ApsaraDB for POLARDB verifies the checksum of data replicas and data consistency across replicas. Data is migrated to the destination only when the checksum is correct and data is consistent. This prevents data corruption and the spread of data errors from a single replica during migration.
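The write-path and read-path checks can be illustrated with a small sketch. The article does not name the checksum algorithm, so CRC32 from Python's `zlib` module is used here purely as an assumption, and the `seal`, `verify`, and `Disk` names are hypothetical.

```python
import zlib
from typing import Dict, Tuple


class ChecksumError(Exception):
    """Raised when stored or transmitted data does not match its checksum."""


def seal(payload: bytes) -> Tuple[bytes, int]:
    """Attach a checksum when the data leaves the storage engine."""
    return payload, zlib.crc32(payload)


def verify(payload: bytes, checksum: int) -> bytes:
    if zlib.crc32(payload) != checksum:
        raise ChecksumError("checksum mismatch")
    return payload


class Disk:
    """Hypothetical storage node disk that verifies data on write and read."""

    def __init__(self) -> None:
        self.blocks: Dict[int, Tuple[bytes, int]] = {}

    def write(self, block_id: int, payload: bytes, checksum: int) -> None:
        # Write path: reject data that was damaged before reaching the disk.
        verify(payload, checksum)
        self.blocks[block_id] = (payload, checksum)

    def read(self, block_id: int) -> bytes:
        # Read path: detect silent corruption that happened at rest.
        payload, checksum = self.blocks[block_id]
        return verify(payload, checksum)


disk = Disk()
payload, crc = seal(b"row data")
disk.write(1, payload, crc)
clean = disk.read(1)

# Simulate a silent error by changing one stored byte behind the disk's back.
disk.blocks[1] = (b"row dbta", crc)
try:
    disk.read(1)
    detected = False
except ChecksumError:
    detected = True
```

The background scan mentioned above would apply the same `verify` step to every stored block and additionally compare the replicas with each other.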

PolarFS can quickly back up and restore physical snapshots in ApsaraDB for POLARDB. Snapshots are a popular backup solution based on storage systems. In essence, the snapshot solution uses a Redirect-On-Write mechanism that records the metadata changes in a block device to perform replication on the volume when data is written. It then sends the content of the write operation to the volume used by the new replica. This allows you to restore the database to any snapshot time point.

The snapshot solution uses a postprocessing mechanism based on the time and write load model. Data is not backed up when you create a snapshot. The loads of backup data are evenly distributed across the time window during which data writing actually occurs after the snapshot is created. This enables fast response to backup and restoration actions.
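A redirect-on-write snapshot can be modeled as a copy of the block mapping only. The `RowVolume` class below is a hypothetical, in-memory simplification that shows why creating a snapshot is fast and why the cost of preserving old data is paid later, when writes actually occur.

```python
from typing import Dict, List


class RowVolume:
    """Hypothetical volume that uses redirect-on-write snapshots."""

    def __init__(self) -> None:
        self._blocks: List[bytes] = []                   # append-only physical blocks
        self._map: Dict[int, int] = {}                   # logical block -> physical index
        self._snapshots: Dict[str, Dict[int, int]] = {}  # snapshot name -> saved map

    def write(self, logical: int, data: bytes) -> None:
        # Never overwrite in place: redirect the write to a new physical block.
        self._blocks.append(data)
        self._map[logical] = len(self._blocks) - 1

    def read(self, logical: int) -> bytes:
        return self._blocks[self._map[logical]]

    def snapshot(self, name: str) -> None:
        # Only metadata is copied. No data block is duplicated at this point.
        self._snapshots[name] = dict(self._map)

    def restore(self, name: str) -> None:
        self._map = dict(self._snapshots[name])

    def physical_blocks(self) -> int:
        return len(self._blocks)


vol = RowVolume()
vol.write(0, b"a")
vol.write(1, b"b")

vol.snapshot("s1")
blocks_after_snapshot = vol.physical_blocks()  # unchanged by the snapshot

vol.write(0, b"A")          # redirected, the old block stays for the snapshot
current = vol.read(0)

vol.restore("s1")
restored = vol.read(0)
```

In this sketch the snapshot costs one dictionary copy, and the old content of block 0 survives only because the later write was redirected instead of applied in place.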

ApsaraDB for POLARDB can restore user data to any point in time through the snapshot mechanism provided by the underlying storage system and the incremental backup of redo logs. This is more efficient than the restoration method based on full data and the incremental data of logical logs.
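Point-in-time restoration from a snapshot plus redo logs amounts to loading the snapshot and replaying the log records up to the target. The sketch below uses an LSN as the target and a key-value dictionary as the database state. Both choices, and the `restore_to_lsn` function itself, are simplifying assumptions.

```python
from typing import Dict, List, Tuple

RedoRecord = Tuple[int, str, str]  # (lsn, key, new value)


def restore_to_lsn(snapshot: Dict[str, str], snapshot_lsn: int,
                   redo_log: List[RedoRecord],
                   target_lsn: int) -> Dict[str, str]:
    """Rebuild the state as of target_lsn from a snapshot and redo records."""
    if target_lsn < snapshot_lsn:
        raise ValueError("target is older than the snapshot")
    state = dict(snapshot)
    for lsn, key, value in sorted(redo_log):
        # Replay only the changes made after the snapshot, up to the target.
        if snapshot_lsn < lsn <= target_lsn:
            state[key] = value
    return state


snapshot = {"stock": "10", "price": "5"}          # taken at LSN 100
redo_log = [(90, "stock", "12"), (110, "stock", "9"),
            (120, "price", "6"), (130, "stock", "8")]

state_at_120 = restore_to_lsn(snapshot, 100, redo_log, 120)
```

Only the records between the snapshot and the target are replayed, so the work is proportional to the amount of change in that window rather than to the size of the database.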

ApsaraDB for POLARDB is fully compatible with MySQL and PostgreSQL, which are currently the most popular open-source database ecosystems. ApsaraDB for POLARDB is also highly compatible with Oracle syntax, enabling traditional enterprises to migrate to the cloud at less than one-tenth of the cost required by commercial databases.

In ApsaraDB for POLARDB, Data Management (DMS) is used instead of Oracle Enterprise Manager (OEM) as a GUI management tool, and POLARDB Plus is used instead of SQL Plus as a command-line tool. Database administrators (DBAs) can operate ApsaraDB for POLARDB in the same way as they operate Oracle. In the client SDK, OCI and O-JDBC Driver can be replaced by libpq and JDBC Driver, respectively, simply by replacing the SO and JAR packages and without having to modify the main code.

ApsaraDB for POLARDB supports the common SQL syntax of Oracle in the data manipulation language (DML) format, all advanced syntaxes such as CONNECT BY, PIVOT, and LISTAGG, and built-in function libraries used by stored procedures.

For advanced functions such as security management and Automatic Workload Repository (AWR), ApsaraDB for POLARDB provides the same format and operation syntax as in Oracle. ApsaraDB for POLARDB is fully compatible with or provides equivalent alternatives to the operation methods, use habits, ecosystem tools, SQL syntax, and formats in Oracle. With the Advanced Database & Application Migration (ADAM) migration evaluation tool, you can migrate applications from Oracle to ApsaraDB for POLARDB with little or no modifications.

ApsaraDB for POLARDB will release a large number of new technologies and enterprise-level features in the second half of 2019. These will improve the availability and performance of ApsaraDB for POLARDB.

ApsaraDB for POLARDB will support a warm buffer pool that is decoupled from compute nodes. This can minimize the service impact when compute nodes are restarted. The warm buffer pool can also mitigate the service impact in the cases of model replacement and specification upgrade in serverless mode. The warm buffer pool uses an independent memory, and therefore it can be dynamically scaled in or out.

ApsaraDB for POLARDB will support parallel DDL, which can minimize table-level DDL latency. Parallel DDL optimizes parallel execution and makes DDL operations such as index creation nearly 10 times faster. ApsaraDB for POLARDB has extensively optimized DDL replication to enable faster cross-region batch replication with less resource consumption.

ApsaraDB for POLARDB supports cross-region and long-distance physical replication, helping you deploy a global database. The physical replication function can replicate data to all data centers in the world in real time, allowing users in all regions to enjoy faster responses from local data centers.

ApsaraDB for POLARDB supports a storage capacity of 100 TB. The levels of single-table indexing increase with the growth in table size. This slows down data retrieval. The physical lock of a single table may become a bottleneck for parallel DML execution.

These problems can be solved through appropriate partitioning. A common practice is to reduce the burden on a single table by using the sharding function provided by external middleware for databases.

As ApsaraDB for POLARDB provides more features such as parallel queries, you can create partition tables to implement sharding more effectively in databases.

Effective partitioning provides support for larger tables and eliminates the global physical lock conflicts of some database indexes. This improves the overall DML performance.

Partitioning allows improved separation of cold and hot data so that you can store cold and hot data in different media. This ensures data access performance and reduces data storage costs.

ApsaraDB for POLARDB provides better support for extremely large tables through a range of enhanced partition table functions, such as global index, foreign key constraint, and interval partition.
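One reason partitioning helps with very large tables is that a query on the partition key touches only the partitions that can contain matching rows. The following sketch shows range partitioning with simple pruning. The `RangePartitionedTable` class and its bounds are hypothetical and unrelated to the actual partition table syntax of ApsaraDB for POLARDB.

```python
import bisect
from typing import List, Tuple


class RangePartitionedTable:
    """Hypothetical table split by exclusive upper bounds on an integer key."""

    def __init__(self, upper_bounds: List[int]) -> None:
        # Partition i holds keys below upper_bounds[i] and at or above
        # the previous bound.
        self.bounds = sorted(upper_bounds)
        self.partitions: List[List[Tuple[int, str]]] = [[] for _ in self.bounds]

    def partition_for(self, key: int) -> int:
        idx = bisect.bisect_right(self.bounds, key)
        if idx == len(self.bounds):
            raise ValueError(f"no partition accepts key {key}")
        return idx

    def insert(self, key: int, row: str) -> None:
        self.partitions[self.partition_for(key)].append((key, row))

    def partitions_for_range(self, lo: int, hi: int) -> List[int]:
        """Return the partitions that may hold keys with lo <= key <= hi."""
        first = bisect.bisect_right(self.bounds, lo)
        last = min(bisect.bisect_right(self.bounds, hi), len(self.bounds) - 1)
        return list(range(first, last + 1))


table = RangePartitionedTable([100, 200, 300])
for key in (5, 150, 199, 250):
    table.insert(key, f"row-{key}")

pruned = table.partitions_for_range(120, 180)   # only the middle partition
```

Because each partition has its own index and locks in a real system, a DML statement that is pruned to one partition does not contend with statements that touch the others.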

ApsaraDB for POLARDB will release a row-level compression function. A common practice is to compress data pages by using a compression algorithm, such as LZ77 or Snappy. However, the entire data page must be compressed when changes are made to only one row of data, which results in high CPU overhead.

In some cases, data page compression may cause data bloat and multiple splits of index pages. ApsaraDB for POLARDB uses fine-grained row-level compression, where the compression method varies depending on the data type.

Data is compressed in both the external storage and memory. Row-level data is decompressed only when it is queried, and data pages are not decompressed. Because the data stays compressed, logs also record compressed data. This reduces the log size and mitigates the burden of transmitting data and logs over networks. Related indexes are also compressed in storage.

The reduction in the stored data volume offsets the additional overhead due to decompression and does not compromise performance.
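The difference in update cost between page-level and row-level compression can be seen in a small model. The two classes below are hypothetical and use `zlib` on whole rows only to keep the example short. The article states that the real method varies by data type, and compressing short rows with `zlib` is not representative of the achievable compression ratio.

```python
import zlib
from typing import List


class PageCompressedPage:
    """All rows are compressed together as one block."""

    def __init__(self, rows: List[bytes]) -> None:
        self.blob = zlib.compress(b"\n".join(rows))
        self.bytes_recompressed = 0

    def update(self, index: int, row: bytes) -> None:
        # Changing one row forces the whole page through the compressor.
        rows = zlib.decompress(self.blob).split(b"\n")
        rows[index] = row
        raw = b"\n".join(rows)
        self.blob = zlib.compress(raw)
        self.bytes_recompressed += len(raw)

    def get(self, index: int) -> bytes:
        return zlib.decompress(self.blob).split(b"\n")[index]


class RowCompressedPage:
    """Each row is compressed on its own."""

    def __init__(self, rows: List[bytes]) -> None:
        self.rows = [zlib.compress(r) for r in rows]
        self.bytes_recompressed = 0

    def update(self, index: int, row: bytes) -> None:
        # Only the modified row is compressed again.
        self.rows[index] = zlib.compress(row)
        self.bytes_recompressed += len(row)

    def get(self, index: int) -> bytes:
        # Only the requested row is decompressed.
        return zlib.decompress(self.rows[index])


rows = [f"user-{i},Hangzhou,active".encode() for i in range(100)]
by_page = PageCompressedPage(rows)
by_row = RowCompressedPage(rows)

new_row = b"user-3,Beijing,inactive"
by_page.update(3, new_row)
by_row.update(3, new_row)
```

After the single-row update, `by_row.bytes_recompressed` equals the length of the new row, whereas `by_page.bytes_recompressed` covers the entire page. This is the CPU overhead that row-level compression avoids.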

Analytic databases are typically separated from online transaction processing. At the end of a business day, the data from online transaction processing and the previously analyzed and processed data are imported to a data warehouse for analysis and report generation.

A hybrid transaction/analytical processing (HTAP) database provides all-in-one support for many enterprise-level applications, without the need for time-consuming and costly data migration. This allows you to create T+0 analysis reports at the end of transactions.

ApsaraDB for POLARDB provides in-memory column store tables to support HTAP workloads. Physical and logical logs are used to keep the column store synchronized with the row store data of ApsaraDB for POLARDB. Real-time big data analysis can be performed on column store data by using analytic operators. This way, you obtain the analysis results without having to use other systems.

The volume of stored data is increasing, but the access frequency is not normally distributed. There is a significant difference in access frequency between hot and cold data. X-Engine uses a storage architecture that stores data at different layers based on the data access frequency, which is the standard for dividing hot and cold data. A specific storage structure is designed for each data layer based on access characteristics so that data is written to an appropriate storage device.

Unlike engines based on a B+ tree, X-Engine uses the log-structured merge-tree (LSM tree) as its layered storage architecture. X-Engine reduces the cost of thread context switching through multiple transaction processing queues and pipeline processing. It also calculates the task quantity ratio in each phase to streamline the entire pipeline. This improves the transaction processing performance. Data reuse technology reduces the cost of data merging and minimizes performance jitter due to cache eviction. The compaction process is accelerated by using a field-programmable gate array (FPGA) to maximize the SQL processing capabilities.
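For readers who are unfamiliar with LSM trees, the following toy model shows the basic write, read, and compaction paths. It is a generic textbook sketch, not X-Engine: the `TinyLSM` class is hypothetical and leaves out the tiered storage devices, pipelining, data reuse, and FPGA-accelerated compaction described above.

```python
from typing import Dict, List, Optional


class TinyLSM:
    """Toy LSM tree: one in-memory memtable plus immutable sorted runs."""

    def __init__(self, memtable_limit: int = 2) -> None:
        self.memtable: Dict[str, str] = {}
        self.runs: List[Dict[str, str]] = []   # newest run first
        self.memtable_limit = memtable_limit

    def put(self, key: str, value: str) -> None:
        # Writes go to memory first, which keeps them cheap.
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self) -> None:
        # Freeze the memtable into a sorted, immutable run.
        if self.memtable:
            self.runs.insert(0, dict(sorted(self.memtable.items())))
            self.memtable = {}

    def get(self, key: str) -> Optional[str]:
        # Search from the newest data to the oldest.
        if key in self.memtable:
            return self.memtable[key]
        for run in self.runs:
            if key in run:
                return run[key]
        return None

    def compact(self) -> None:
        # Merge all runs into one. Newer values overwrite older ones.
        merged: Dict[str, str] = {}
        for run in reversed(self.runs):
            merged.update(run)
        self.runs = [dict(sorted(merged.items()))] if merged else []


db = TinyLSM(memtable_limit=2)
db.put("a", "1")
db.put("b", "2")     # memtable is full, so it is flushed to the first run
db.put("a", "3")     # newer version of "a" lives in the memtable
db.put("c", "4")     # second flush
runs_before = len(db.runs)
db.compact()
```

Recently written data sits in the memtable and the newest runs, which is what makes an LSM tree a natural fit for storing hot and cold data at different layers.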

X-Engine increases the transaction processing performance by 1000% compared with other storage engines with similar architectures, such as RocksDB.

For more information about X-Engine, refer to the paper "X-Engine: An Optimized Storage Engine for Large-scale E-Commerce Transaction Processing" presented at the SIGMOD 2019 conference.

Currently, ApsaraDB for POLARDB is used by Taobao, Tmall, Cainiao, and other Alibaba business lines, as well as in government affairs, retail, finance, telecommunications, manufacturing, and other fields. To date, 400,000 databases have been migrated to Alibaba Cloud.

Outside of Alibaba, ApsaraDB for POLARDB helps Beijing quickly and smoothly schedule more than 20,000 buses in its public transport system, making daily travel more convenient for 8 million commuters. ApsaraDB for POLARDB also helps ZhongAn Insurance process insurance policy data 25% more efficiently than its previous solution.
