This article analyzes how binlogs are generated and how the system processes them to produce the Global Binlog.

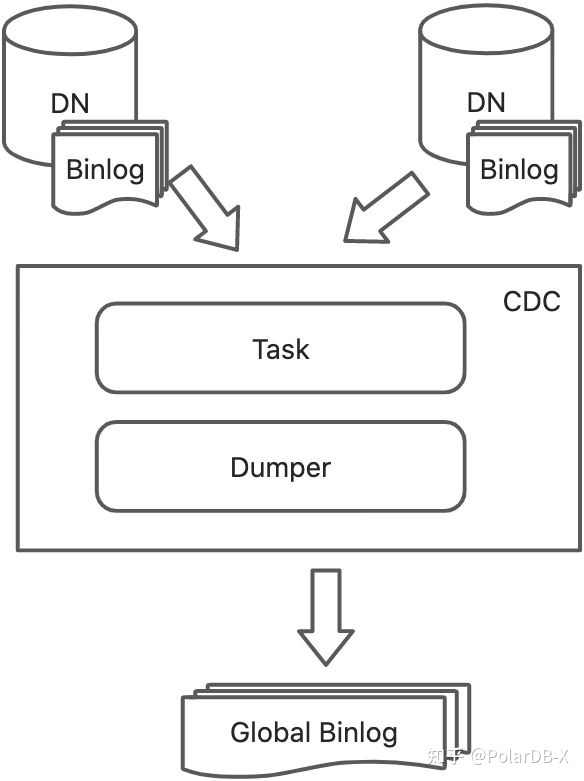

The life of a Global Binlog covers everything that happens between the generation of the original binlog and the final generation of the Global Binlog. This article describes the key processes along the way: pulling the binlog, reshaping and merging the data, and generating the Global Binlog. Two components are involved, Task and Dumper, which reshape, merge, and store the binlog. The following sections walk through the code these two components use to process binlogs.

When a user writes data to PolarDB-X, the data is ultimately written to the underlying data nodes (DNs), which generate the original binlogs. The Task component pulls the original binlogs, reshapes and merges them into the Global Binlog, and then sends the result to the downstream Dumper component. Let's look at the core code of Task to understand how the original binlog is processed end to end:

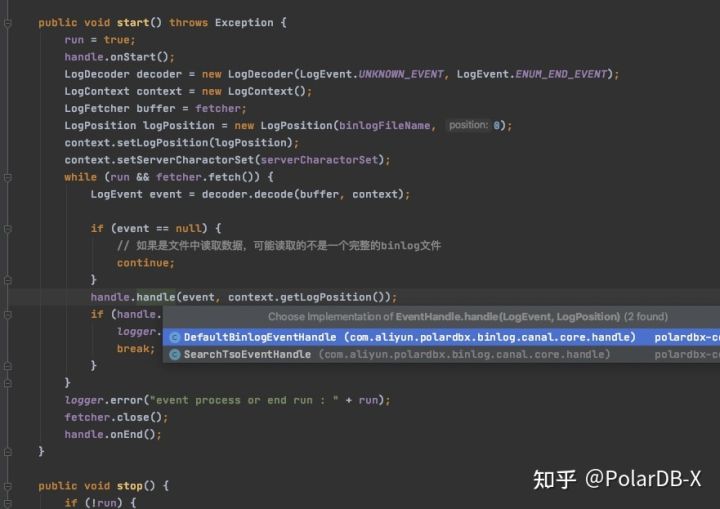

We can use com.aliyun.polardbx.binlog.canal.core.BinlogEventProcessor (GitHub address: https://github.com/ApsaraDB/galaxycdc ) as the entry point. This key entry class shows how binlogs are pulled and delivered to the downstream handler.

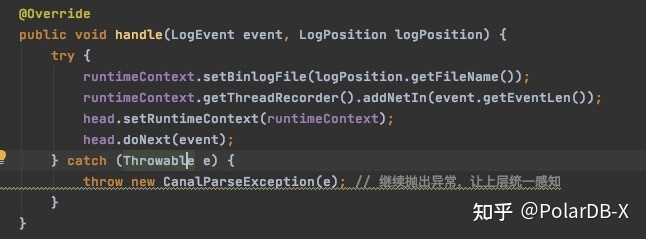

In the handler, binlogs pass through a series of filters. In com.aliyun.polardbx.binlog.canal.core.handle.DefaultBinlogEventHandle, we can see the following logic:

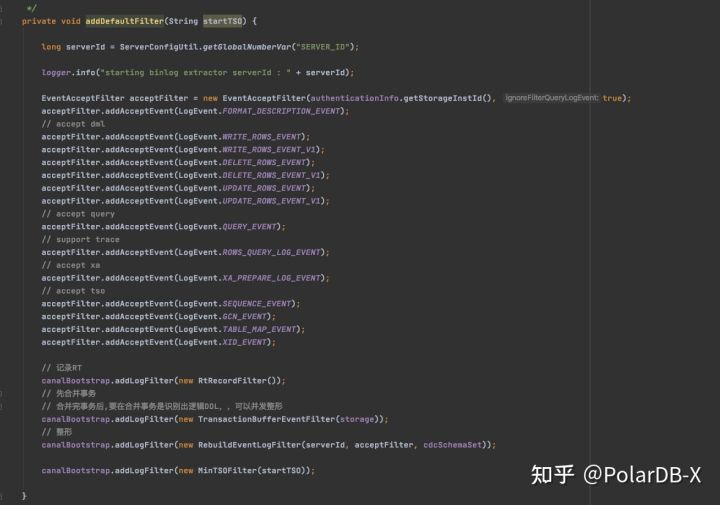

All filters are traversed in turn through the doNext method of the head object: binlog events are filtered and formatted, and the resulting logical events are output downstream. The head object is the head of a linked list of filters. See the following code for its initialization logic:

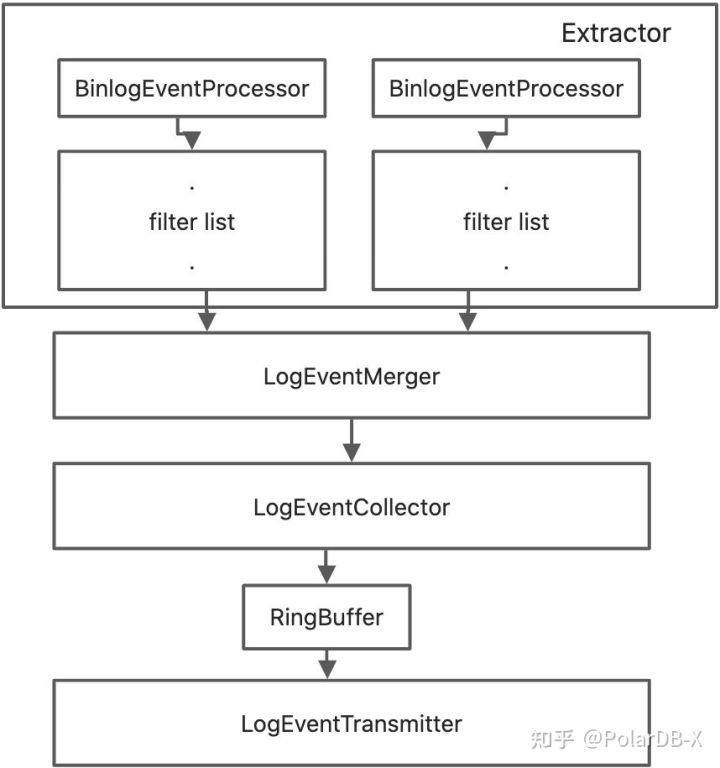

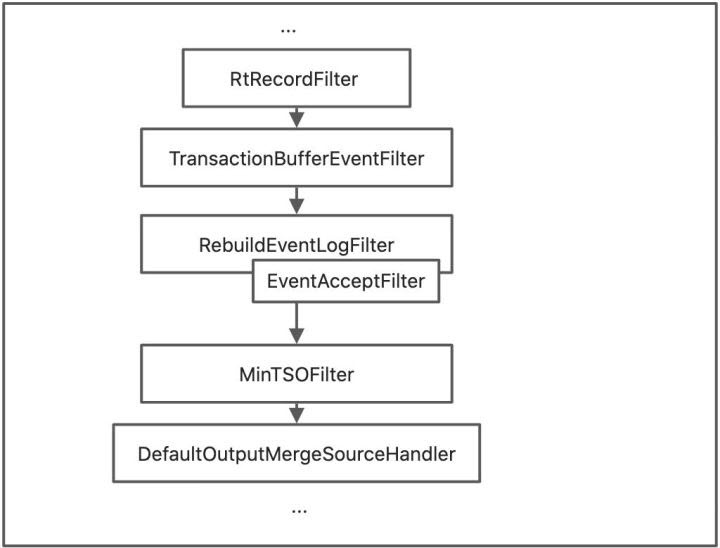

The filter chain in the Extractor is shown in the following figure:

A series of filters is added to the list when BinlogExtractor is initialized, and each binlog event passes through them one by one, in sequence.
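The head-plus-doNext structure is a chain of responsibility built on a linked list. The following is a minimal sketch of that pattern, not the PolarDB-X code: `EventFilter`, `FilterNode`, and the use of a plain `String` as the event type are hypothetical simplifications.

```java
import java.util.List;

// Hypothetical filter contract: a filter receives an event plus the rest of
// the chain and decides whether, and in what form, to pass the event on.
interface EventFilter {
    void handle(String event, FilterNode next);
}

// One node of the singly linked filter list; the head node is the entry point.
final class FilterNode {
    private final EventFilter filter;
    private final FilterNode next; // null for the terminal node

    FilterNode(EventFilter filter, FilterNode next) {
        this.filter = filter;
        this.next = next;
    }

    // Hands the event to this node's filter together with the remaining chain.
    void doNext(String event) {
        filter.handle(event, next);
    }

    // Links the filters in registration order, ending in a terminal sink,
    // and returns the head node.
    static FilterNode chain(List<EventFilter> filters, EventFilter sink) {
        FilterNode node = new FilterNode(sink, null);
        for (int i = filters.size() - 1; i >= 0; i--) {
            node = new FilterNode(filters.get(i), node);
        }
        return node;
    }
}
```

With this layout, adding a stage only means inserting one more node, and a filter that does not call `next.doNext(...)` simply drops the event.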

com.aliyun.polardbx.binlog.extractor.filter.RtRecordFilter: records the response time (RT) that the downstream logic takes to process the current event.

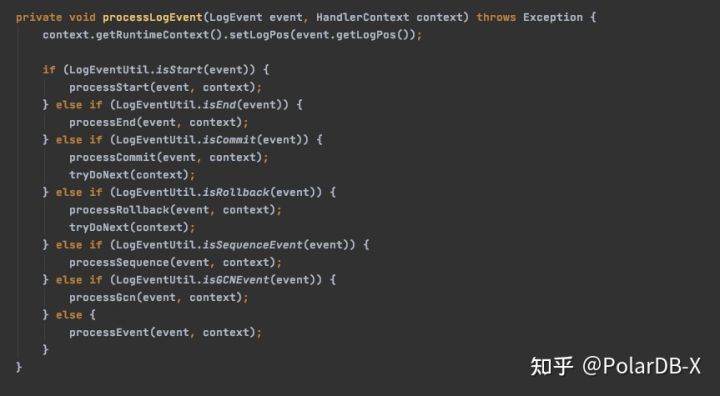
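Recording the RT of downstream logic amounts to timing the call into the rest of the chain. A minimal sketch, assuming a hypothetical `RtRecorder` that wraps downstream logic expressed as a `java.util.function.Consumer` and measures wall-clock time with `System.nanoTime()`; the real filter's bookkeeping may differ:

```java
import java.util.function.Consumer;

// Hypothetical RT-recording stage: it wraps the downstream logic and measures
// how long that logic takes to process each event.
final class RtRecorder<E> implements Consumer<E> {
    private final Consumer<E> downstream;
    private long lastNanos;
    private long totalNanos;
    private long count;

    RtRecorder(Consumer<E> downstream) {
        this.downstream = downstream;
    }

    @Override
    public void accept(E event) {
        long start = System.nanoTime();
        try {
            downstream.accept(event); // everything after this stage
        } finally {
            // Recorded even if downstream throws, so failed events are timed too.
            lastNanos = System.nanoTime() - start;
            totalNanos += lastNanos;
            count++;
        }
    }

    long lastRtNanos() { return lastNanos; }

    long count() { return count; }

    double averageRtNanos() { return count == 0 ? 0.0 : (double) totalNanos / count; }
}
```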

com.aliyun.polardbx.binlog.extractor.filter.TransactionBufferEventFilter: processes transaction-related events and marks tso events.

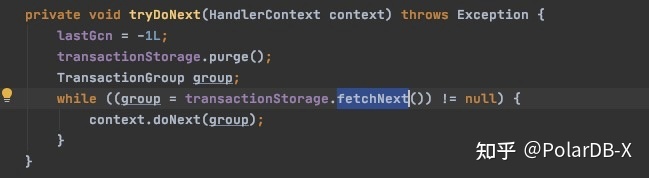

When a commit event is received, it attempts to execute tryDoNext:

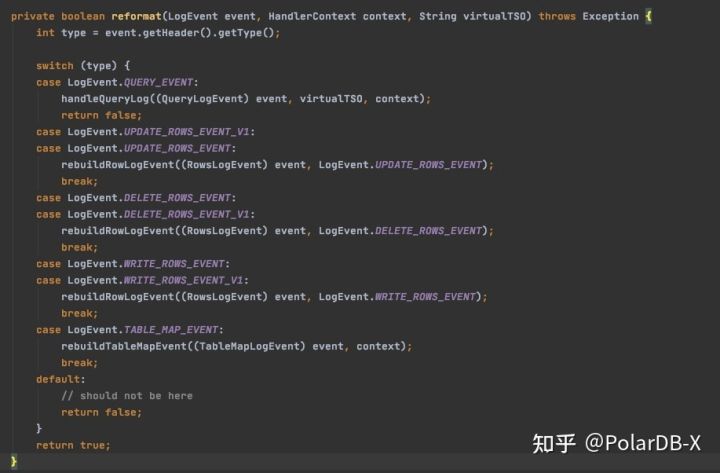
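The idea of buffering a transaction's events and only handing them on once the commit arrives can be sketched as follows. This is an illustration under stated assumptions, not TransactionBufferEventFilter itself: `TxEvent`, `TransactionBuffer`, and the per-transaction-id map are hypothetical, and `tryDoNext` here simply forwards the completed transaction.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical simplified event: transaction id, kind, and an opaque payload
// (for COMMIT the payload could carry the transaction's TSO).
final class TxEvent {
    enum Kind { BEGIN, ROW, COMMIT, ROLLBACK }

    final String txId;
    final Kind kind;
    final String payload;

    TxEvent(String txId, Kind kind, String payload) {
        this.txId = txId;
        this.kind = kind;
        this.payload = payload;
    }
}

// Buffers events per open transaction and forwards a transaction only when
// its commit arrives, so downstream stages always see complete transactions.
final class TransactionBuffer {
    private final Map<String, List<TxEvent>> open = new HashMap<>();
    private final Consumer<List<TxEvent>> downstream;

    TransactionBuffer(Consumer<List<TxEvent>> downstream) {
        this.downstream = downstream;
    }

    void onEvent(TxEvent e) {
        switch (e.kind) {
            case BEGIN:
                open.put(e.txId, new ArrayList<>(List.of(e)));
                break;
            case ROW:
                open.computeIfAbsent(e.txId, k -> new ArrayList<>()).add(e);
                break;
            case COMMIT:
                List<TxEvent> txn = open.remove(e.txId);
                if (txn == null) {
                    txn = new ArrayList<>();
                }
                txn.add(e);
                tryDoNext(txn);
                break;
            case ROLLBACK:
                open.remove(e.txId); // discard the whole transaction
                break;
        }
    }

    int openCount() { return open.size(); }

    private void tryDoNext(List<TxEvent> txn) {
        downstream.accept(txn);
    }
}
```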

The transaction push algorithm can be found in the com.aliyun.polardbx.binlog.extractor.filter.TransactionStorage class. com.aliyun.polardbx.binlog.extractor.filter.RebuildEventLogFilter is responsible for filtering and reshaping binlog events; see its handle method for details. The key code of the reformat method can be viewed below:

In Global Binlog, we only care about QueryEvent, RowEvent (Insert/Update/Delete), and TableMapEvent. Therefore, we only process the data of these events.
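Restricting processing to these event types is a simple type whitelist. A minimal sketch, assuming a hypothetical `BinlogEventType` enum that mirrors a few MySQL binlog event types (it is not the galaxycdc type model):

```java
import java.util.EnumSet;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical subset of MySQL binlog event types used for illustration.
enum BinlogEventType {
    FORMAT_DESCRIPTION, ROTATE, GTID, QUERY, TABLE_MAP,
    WRITE_ROWS, UPDATE_ROWS, DELETE_ROWS, XID
}

final class RelevantEventFilter {
    // QueryEvent, TableMapEvent, and the three row event kinds are kept.
    private static final Set<BinlogEventType> KEPT = EnumSet.of(
            BinlogEventType.QUERY, BinlogEventType.TABLE_MAP,
            BinlogEventType.WRITE_ROWS, BinlogEventType.UPDATE_ROWS,
            BinlogEventType.DELETE_ROWS);

    static boolean accept(BinlogEventType type) {
        return KEPT.contains(type);
    }

    // Keeps only the relevant events, preserving their original order.
    static List<BinlogEventType> keepRelevant(List<BinlogEventType> events) {
        return events.stream().filter(RelevantEventFilter::accept).collect(Collectors.toList());
    }
}
```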

com.aliyun.polardbx.binlog.extractor.filter.MinTSOFilter performs a preliminary filtering of transactions. The data is then pushed, through com.aliyun.polardbx.binlog.extractor.DefaultOutputMergeSourceHandler, to the downstream transaction-merging code in com.aliyun.polardbx.binlog.merge.MergeSource. The sourceId uniquely identifies the current data stream, and the queue stores the transaction index, txnKey.

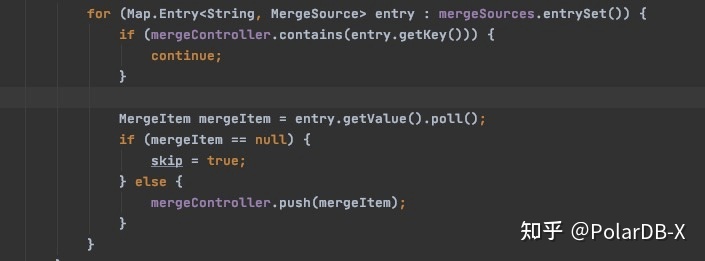
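A merge source as described here is essentially a stream identifier plus a queue of transaction keys shared between a producer (the extractor) and a consumer (the merger). A minimal sketch, assuming a hypothetical `TxnIndex` holding a TSO and a bounded `ArrayBlockingQueue`; the real MergeSource and txnKey types carry more information:

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical transaction index: the TSO used for global ordering plus the
// id of the DN stream it came from.
final class TxnIndex {
    final long tso;
    final String sourceId;

    TxnIndex(long tso, String sourceId) {
        this.tso = tso;
        this.sourceId = sourceId;
    }
}

// One source per DN stream: a bounded queue of transaction indexes that the
// extractor fills and the merger drains.
final class SimpleMergeSource {
    private final String sourceId;
    private final BlockingQueue<TxnIndex> queue;

    SimpleMergeSource(String sourceId, int capacity) {
        this.sourceId = sourceId;
        this.queue = new ArrayBlockingQueue<>(capacity);
    }

    String sourceId() { return sourceId; }

    // Blocks while the queue is full, applying back-pressure to the producer.
    void push(long tso) {
        try {
            queue.put(new TxnIndex(tso, sourceId));
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while enqueuing", e);
        }
    }

    // Returns the next index, or null if none is currently available.
    TxnIndex poll() { return queue.poll(); }
}
```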

com.aliyun.polardbx.binlog.merge.LogEventMerger traverses the queues of the MergeSources corresponding to all DNs and extracts their data.

com.aliyun.polardbx.binlog.merge.MergeController stores each sourceId together with its data, ensuring that each sourceId contributes only one piece of data at a time. When the downstream pulls data, the item at the top of the priority queue is popped and pushed to com.aliyun.polardbx.binlog.collect.LogEventCollector, which delivers it through a ring buffer to com.aliyun.polardbx.binlog.transmit.LogEventTransmitter and from there to the downstream Dumper component.
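Keeping at most one item per source in a priority queue and always popping the smallest is a k-way merge. The sketch below assumes each DN source is already ordered by TSO and uses hypothetical names (`TsoMerger`, `Item`); it is an offline illustration, not the MergeController implementation.

```java
import java.util.Comparator;
import java.util.Deque;
import java.util.HashSet;
import java.util.Map;
import java.util.PriorityQueue;
import java.util.Set;

// Hypothetical k-way merge by TSO: at most one pending item per source sits in
// the priority queue, and the globally smallest TSO is always emitted next.
final class TsoMerger {
    static final class Item {
        final String sourceId;
        final long tso;

        Item(String sourceId, long tso) {
            this.sourceId = sourceId;
            this.tso = tso;
        }
    }

    private final Map<String, Deque<Long>> sources;
    private final PriorityQueue<Item> heap = new PriorityQueue<>(
            Comparator.comparingLong((Item i) -> i.tso).thenComparing((Item i) -> i.sourceId));
    private final Set<String> pending = new HashSet<>(); // sources with an item in the heap

    TsoMerger(Map<String, Deque<Long>> sources) {
        this.sources = sources;
    }

    // A source is refilled only after its previous item has been popped.
    private void refill() {
        for (Map.Entry<String, Deque<Long>> e : sources.entrySet()) {
            if (!pending.contains(e.getKey()) && !e.getValue().isEmpty()) {
                heap.add(new Item(e.getKey(), e.getValue().poll()));
                pending.add(e.getKey());
            }
        }
    }

    // Returns the next item in global TSO order, or null when all sources are drained.
    Item next() {
        refill();
        Item head = heap.poll();
        if (head != null) {
            pending.remove(head.sourceId);
        }
        return head;
    }
}
```

In this offline sketch, a drained source is simply skipped. A streaming merger would have to wait until every live source has contributed an item (for example, through heartbeats) before popping, otherwise a late transaction with a smaller TSO could be emitted out of order.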

When the Global Binlog system starts, the Task component is started first and listens on its port. The Dumper component then attempts to connect to the Task. After the Dumper receives the Global Binlog pushed by the Task, it processes the binlog in detail and writes the results to disk. Let's analyze the core binlog-processing flow starting from the Dumper's entry point for consuming the data pushed by the Task: the start method of the com.aliyun.polardbx.binlog.dumper.dump.logfile.LogFileGenerator class, which starts gRPC to connect to the Task port.

The code implements the com.aliyun.polardbx.binlog.rpc.TxnMessageReceiver interface, which consumes all data pushed from upstream. The consume method processes the Global Binlog position and other related information, and the data is written to disk through com.aliyun.polardbx.binlog.dumper.dump.logfile.BinlogFile.

```java
private void consume(TxnMessage message, MessageType processType) throws IOException, InterruptedException {
    // ...
    switch (processType) {
        case BEGIN:
            // ... (start of a transaction)
            break;
        case DATA:
            // ... (events inside a transaction)
            break;
        case END:
            // ... (end of a transaction)
            break;
        case TAG:
            // TAG messages carry markers for interaction between system components;
            // each tag type is handled by its own branch.
            currentToken = message.getTxnTag().getTxnMergedToken();
            if (currentToken.getType() == TxnType.META_DDL) {
                // ...
            } else if (currentToken.getType() == TxnType.META_DDL_PRIVATE) {
                // ...
            } else if (currentToken.getType() == TxnType.META_SCALE) {
                // ...
            } else if (currentToken.getType() == TxnType.META_HEARTBEAT) {
                // ...
            } else if (currentToken.getType() == TxnType.META_CONFIG_ENV_CHANGE) {
                // ...
            }
            break;
        default:
            throw new PolardbxException("invalid message type for logfile generator: " + processType);
    }
}
```

In the consume method, transaction-related messages are processed one by one. Messages that carry tags for interaction between system components are handled by the branch that matches their tag type.
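The BEGIN/DATA/END/TAG dispatch can be read as a small state machine. The sketch below is a heavily simplified, hypothetical stand-in (`SimpleLogFileWriter`, `TagType`, an in-memory list in place of BinlogFile, and a character count in place of a byte position); it only shows how transaction boundaries and tags might be separated before data reaches the file.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simplification of a consume loop: BEGIN opens a transaction,
// DATA appends events, END flushes the whole transaction to the "file" and
// advances the position, and TAG messages are dispatched separately.
final class SimpleLogFileWriter {
    enum MessageType { BEGIN, DATA, END, TAG }

    // Hypothetical subset of tag kinds, named after branches in the code above.
    enum TagType { META_DDL, META_SCALE, META_HEARTBEAT }

    private final List<String> file = new ArrayList<>();      // stands in for a binlog file
    private final List<TagType> tagsSeen = new ArrayList<>();
    private List<String> current;                             // open transaction, or null
    private long position;                                    // simplified: characters written

    void consume(MessageType type, String payload, TagType tag) {
        switch (type) {
            case BEGIN:
                if (current != null) throw new IllegalStateException("BEGIN inside an open transaction");
                current = new ArrayList<>();
                break;
            case DATA:
                if (current == null) throw new IllegalStateException("DATA outside a transaction");
                current.add(payload);
                break;
            case END:
                if (current == null) throw new IllegalStateException("END without BEGIN");
                for (String event : current) {
                    file.add(event);
                    position += event.length();
                }
                current = null;
                break;
            case TAG:
                // Tags steer system-level behavior; this sketch only records them.
                tagsSeen.add(tag);
                break;
            default:
                throw new IllegalArgumentException("invalid message type: " + type);
        }
    }

    List<String> file() { return file; }

    List<TagType> tagsSeen() { return tagsSeen; }

    long position() { return position; }
}
```

Writing a transaction only at END keeps partially received transactions out of the file, which is one reasonable way to keep the recorded position aligned with transaction boundaries.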

This article has walked through the key processes involved in pulling, reshaping, and finally storing binlogs. The life of a Global Binlog is a transition from the original physical binlogs to a logical one.
