In the previous articles, we explained the three parts making up GalaxySQL and introduced the important modules and directories starting from the directory. Finally, we listed some key interfaces as the entry for debugging codes without explanation. This article describes the key code related to SQL parsing and execution in GalaxySQL (CN) codes from the perspective of SQL execution.

The life of SQL refers to the story that occurs in the CN codes from the time when the client creates a connection and sends the SQL to the time when the client receives the returned result. Similar to a person's life, observing the life of SQL from different perspectives can draw different conclusions, such as:

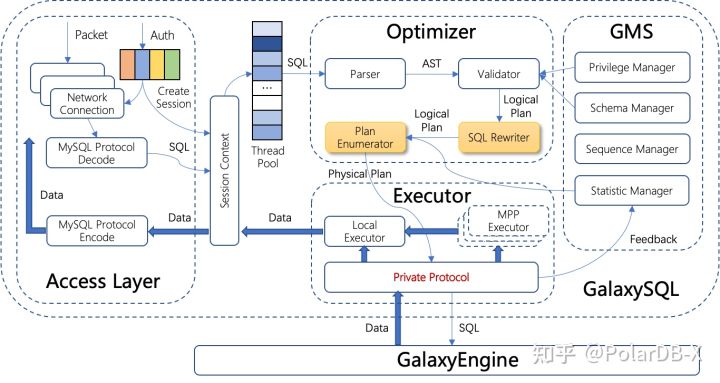

As shown in the preceding figure, CN can be divided into the protocol layer, optimizer, and executor. The life of SQL starts from the protocol layer, which is responsible for accepting user connection requests, establishing connected context, converting data packets sent by users into SQL statements, and handing them to the optimizer to generate physical execution plans. The physical execution plan contains the locally executed operator and the physical SQL sent to the DN. The executor sends the physical SQL to the DN, summarizes the results returned by the DN, and submits them to the locally executed operator for processing. Finally, the processing results are returned to the protocol layer, encapsulated into packets according to the MySQL protocol, and sent to the user.

Due to space constraints, this article only contains GalaxySQL (CN)-related content. GalaxyEngine (DN)-related content will be introduced in subsequent articles.

The following introduces the implementation details of the protocol layer, optimizer, and executor in the SQL parsing execution process.

The first task to be completed at the protocol layer is to monitor the network port and wait for users to establish a connection. This part of logic is described in the CN Startup Process. In the NIOAcceptor constructor, each CN process only starts one NIOAcceptor to monitor the network port.

After receiving the TCP connection request from the client, the protocol layer binds the TCP connection to one NIOProcessor in the NIOAcceptor#accept. Each NIOProcessor starts two threads to read/write TCP packets. The implementation of the read/write threads is encapsulated in the NIOReactor.

The NIOAcceptor#accept also binds the NIOProcessor to a FrontendConnection object. The FrontendConnection encapsulates information about the MySQL protocol processing logic and Session Context. There are two specific implementations: ServerConnection and ManagerConnection. ServerConnection is used to process SQL sent by the client. ManagerConnection is used to process some internal management commands.

The logic for parsing MySQL protocol packages in the ServerConnection is encapsulated in NIOHandler. There are two implementations, FrontendAuthorityAuthenticator and FrontendCommandHandler, which are used for authentication and command execution, respectively. The specific process is that NIORreactor calls AbstractConnection#read to complete SSL parsing and packet reorganization to obtain specific packets to be processed, calls FrontendConnection#handleData to obtain a new thread from the thread pool, and calls the FrontendConnection#handler#handle operation to complete authentication and packet parsing.

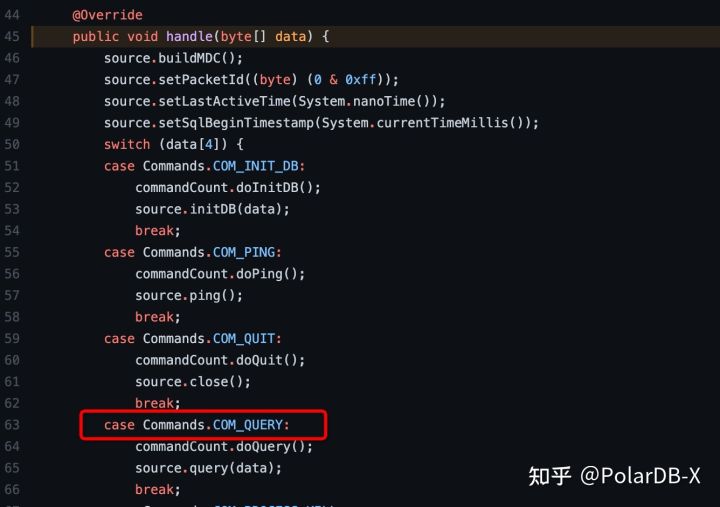

As shown in the preceding figure, the FrontendCommandHandler#handle calls different processing functions based on the Command type. The most commonly used Command is COM_QUERY. If all DML/DDL statements are not executed through the PreparedStatement, the Command is COM_QUERY. The corresponding processing function is FrontendConnection#query.

The FrontendConnection#query method continues to parse the data package to obtain the SQL text and passes it in the ServerQueryHandler#queryRaw to classify and execute according to the SQL type. If the client sends a MySQL Multi-Statement, multiple statements will be cut according to the syntax and sent for execution. There is a detail here that the ServerQueryHandler#executeStatement method will generate a unique traceId for each SQL statement. traceId will eventually appear in various log files, and HINTs of actual physical SQL issued (which can be obtained from the binlog of DN), which is convenient for troubleshooting.

The processing logic of common DML/DDL statements is encapsulated in the ServerConnection#innerExecute method. The processing can be divided into optimized execution and returned packages. TConnection#executeQuery encapsulates the logic of the optimization and execution, where the Planner#plan is the optimizer entry, and the PlanExecutor#execute is the executor entry.

The execution result is wrapped into a ResultCursor object. The result is read in ServerConnection#sendSelectResult and assembled into a COM_QUERY Response package to return to the client.

During execution, the ExecutionContext records the execution plan properties/SQL parameters/HINT and other context information as Session Context, which is passed between the three modules.

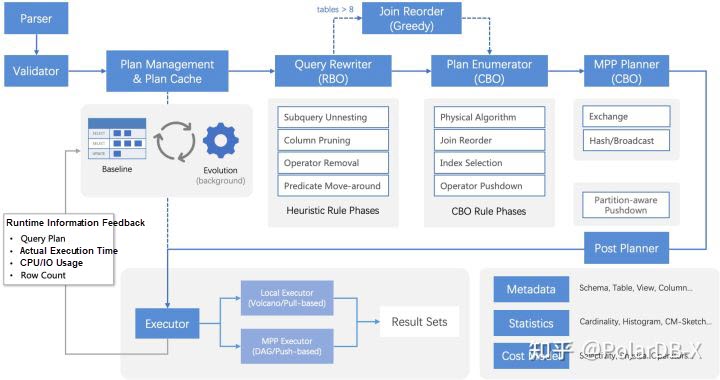

Like all databases, the main process of the optimizer module includes four basic steps: Parser/Validator/SQL Rewriter (RBO)/Plan Enumerator (CBO). On top of this, GalaxySQL adds three steps based on its features:

In the optimizer, SQL needs to be processed in seven steps: Parser → Plan Management → Validator → SQL Rewriter(RBO) → Plan Enumerator(CBO) → Mpp Planner → Post Planner. The entry point is Planner#plan. The following section describes the key interfaces in each step.

The implementation of Parser is based on the Parser component in Druid (Alibaba's open-source connection pool management software). It is a hand-written parser that converts SQL text into an abstract syntax tree (AST). The Parser contains MySqlLexer for lexical parsing and MySqlStatementParser for grammatical parsing. Similar to reading a sentence, when parsing SQL, we first need to divide the SQL text into multiple tokens, such as a simple query statement.

SELECT * FROM t1 WHERE id > 1;It will be divided into the following tokens:

SELECT (keyword), * (identifier), FROM (keyword), t1 (identifier),

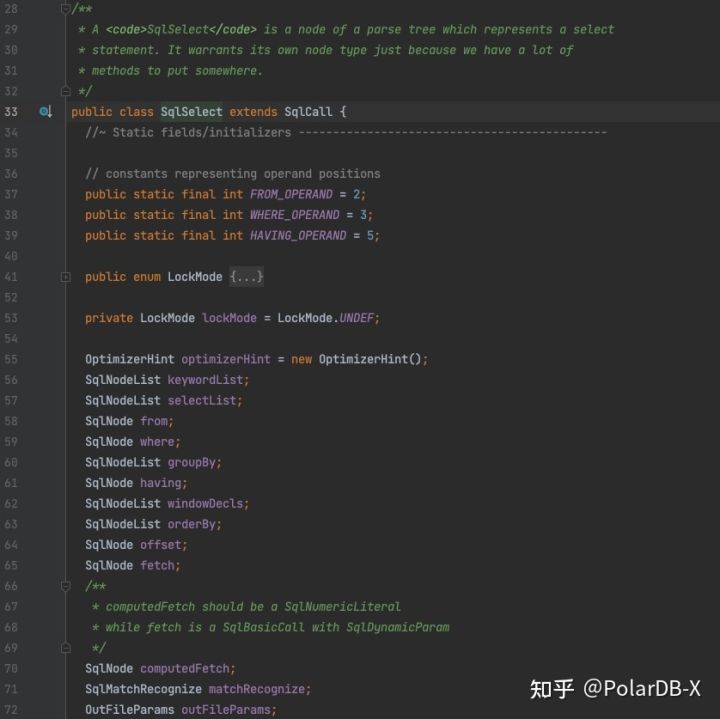

WHERE (keyword), id (identifier), > (gt), 1 (literal_int), ; (semicolon)This process of splitting words is lexical analysis. Next, syntax parsing reads all tokens to determine whether they meet the syntax of an SQL clause and generate AST. The object generated by the SELECT statement result parsing is SqlSelect, as shown in the following figure:

Anyone careful may have noticed that SqlSelect and the type SqlNode of its member variables are both in the polardbx-calcite package instead of the polardbx-parser package because GalaxySQL uses Apache Calcite as the optimizer framework. The whole framework is strongly bound to the AST data structure. Therefore, the SQL parsing process first obtains the AST object of Druid and then converts it to SqlNode through a visitor. The code is FastSqlToCalciteNodeVisitor, which contains some syntax changes and permission verification.

All SQL needs to be parameterized first to support Plan Cache better, which means using placeholders to replace constant parameters in SQL text and converting SQL text to parameterized SQL + parameter list. For example, SELECT * FROM t1 WHERE id > 1 will be converted to SELECT * FROM t1 WHERE id > ? and a parameter 1. The advantage is that SQL with different parameters of the same template can hit the same Plan Cache, which is more efficient. Of course, there are disadvantages. When the parameters are different, the cost of the entire query is different, and different plans may be required. This problem has been solved in Plan Management. The parameterized related codes are located in the DrdsParameterizeSqlVisitor and are still implemented as a visitor. The returned result is encapsulated in a SqlParameterized object.

In terms of data structure, Plan Cache can be considered a map with keys like SQL templates, parameter information, metadata version, and other information and values like execution plans. The purpose is to reduce the performance overhead caused by repeatedly optimizing the same SQL templates and eliminate performance fallback that may be caused by version upgrades in combination with execution plan evolution.

The logic for calling Plan Cache and Plan Management is encapsulated in Planner#doPlan. The implementation code of Plan Cache is in PlanCache#get, and the implementation code of Plan Management is in PlanManager#choosePlan.

The implementation of Plan Cache is similar to the Map.com putIfAbsent() in Java. If there is an already generated execution plan in the map, the execution plan is directly returned. If it does not exist, an execution plan is generated and saved in the map and then returned to the execution plan. In other words, after passing through Plan Management & Plan Cache, you will get an execution plan. The four steps of Validator/SQL Rewriter/Plan Enumerator/Mpp Planner are called inside Plan Cache. Post Planner cannot be cached because it depends on specific parameters and needs to be called after receiving the execution plan.

Early database implementations often performed query optimization directly on AST. However, since AST lacks the hierarchy of relational algebra operators, it is difficult to write optimization rules that are orthogonal to each other, which will cause all optimization logic to be stacked together and difficult to maintain. Modern database implementations usually convert AST into an operator tree composed of relational algebra operators as a logical plan during validating or binding, and GalaxySQL is no exception.

The transition from AST to a logical plan is divided into two steps. The entry is in Planner#getPlan. First, the semantic check is performed in SqlConverter#validate, including namespace check and type check. A special point is that SqlValidatorImpl#performUnconditionalRewrites contains some content to rewrite AST, which is mainly used to block different syntax structures with the same semantics. Then, SqlConverter#toRel contains a logical plan to convert the AST saved by the SqlNode object to the RelNode object. The conversion process is complicated, so additional explanations are not provided here.

SQL Rewriter is an RBO component of GalaxySQL. It uses fixed rule groups to optimize logical plans. In GalaxySQL, RBO optimization can be divided into two parts. One is to perform traditional relational algebra optimization (such as predicate derivation and LEFT JOIN to INNER JOIN), and the other is to complete partial computing push-down.

The code for calling RBO is encapsulated in the Planner#optimizeBySqlWriter. The RBO framework implements a large number of optimization rules based on HepPlanner. The rule grouping information is stored in the RuleToUse, and the sequence of rule group execution is recorded in the SQL_REWRITE_RULE_PHASE.

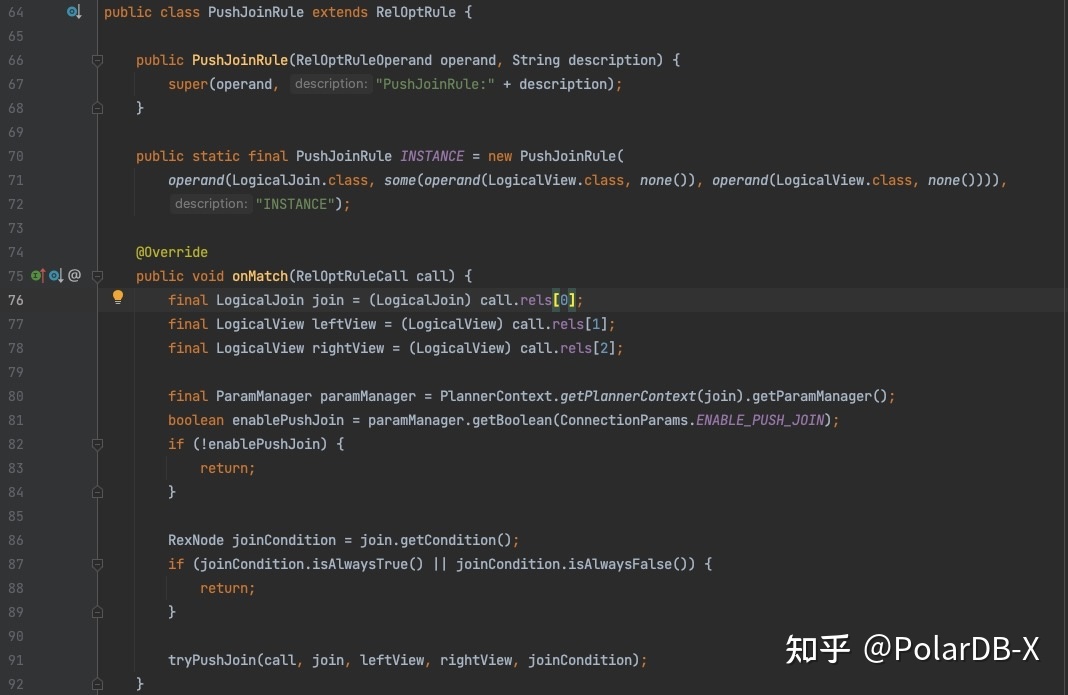

The RBO rule implements three fixed contents, including matching subtree structure, optimizing the logic, and returning the transformed plan. The preceding figure shows the rule used to push down the JOIN. Lines 70-72 in the constructor indicate that this rule matches the operator tree of two LogicalView attached to LogicalJoin. When the RBO framework matches this subtree, the onMatch interface of the rule is called. After the rule is optimized, the RelOptRuleCall.transformTo is called to return the transformed plan.

The RBO framework executes all the rules listed in the SQL_REWRITE_RULE_PHASE in an out-of-order manner. The rules are executed serially between groups. Each rule is matched repeatedly. After a certain round of matching, all the rules in a group are not hit, and the group execution is considered to end.

Plan Enumerator is a CBO component of GalaxySQL. It generates and enumerates physical execution plans and selects the most suitable physical execution plan based on the cost.

The code for calling CBO is encapsulated in the Planner#optimizeByPlanEnumerator. The CBO framework is based on the VolcanoPlanner framework. Unlike RBO, CBO rules do not need to be grouped in advance, and all the rules used are stored in the RuleToUse#CBO_BASE_RULE. Similar to RBO rules, the execution process of CBO rules also matches a subtree and then returns the transformed plan. The difference is that CBO does not replace the original subtree with the newly generated plan but saves the generated new execution plan in RelSubset and then selects the plan with the lowest cost from RelSubset. The code entry is VolcanoPlanner#findBestExp.

Mpp Planner is an optimization stage added by GalaxySQL for MPP execution plans. It generates exchange operators based on data distribution and reduces redundant data shuffle. The code entry is in Planner#optimizeByMppPlan. The code for generating the MppExchange operator is in the MppExpandConversionRule#enforce.

Post Planner is mainly used to push down the execution plan twice based on the shard situation after the actual parameters are brought in to prune the partitions. Assuming table r and table t are in a table group and the split fields are both id, consider the following SQL:

SELECT * FROM r JOIN t ON r.name = t.name WHERE r.id =0 AND t.id =1;What is seen in RBO/CBO is the parameterized plan, which is similar to SELECT * FROM r JOIN t O N r.name = t.name WHERE r.id = ?AND t.id =?;. It is impossible to determine whether the required data falls on the same partition group because there is no equality condition on the partition key. However, after the partition is pruned based on the parameters, it will be found that the data falls on shard 0. This is a single-shard Join statement, which can be directly issued.

PostPlanner is called in Planner. The implementation code is in PostPlanner#optimize.

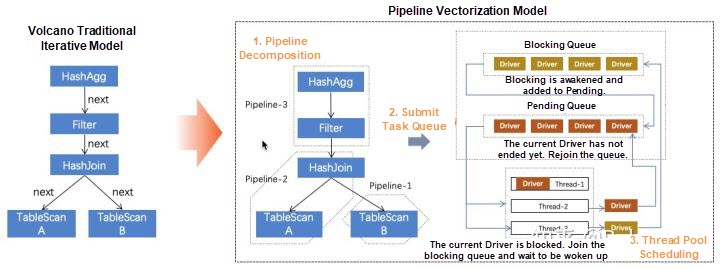

The PolarDB-X actuator supports two execution models, the traditional Volcano iterative model and the push model that supports Pipeline vectorization. The two models have their advantages and disadvantages. There are three optional execution procedures after the execution plan enters the executor: Cursor, Local, and Mpp. The cursor procedure is used to execute DML, DDL, and DAL statements. Local and Mpp procedures are used to execute DQL statements. The Cursor procedure only supports the Volcano iterative model, while the Local procedure supports both models. The Mpp procedure adds multi-machine task scheduling to the Local procedure.

The executor entry code is in the PlanExecutor#execByExecPlanNodeByOne. Then, in the ExecutorHelper#execute, the execution procedure is selected according to the execution mode determined by the optimizer. The following takes the Cursor procedure as an example to describe the overall process of planned execution.

In the Cursor procedure, the corresponding handler is found first according to the operator in the execution meter. The code location is CommandHandlerFactoryMyImp#getCommandHandler. The handler is responsible for converting the current operator into a specific implementation of a Cursor operation, and a nested call of the CommandHandlerFactoryMyImp#getCommandHandler operation is used to convert the entire execution plan into a Cursor tree. In the ResultSetUtil#resultSetToPacket, call the Cursor.next interface to obtain all execution results and return them to the user.

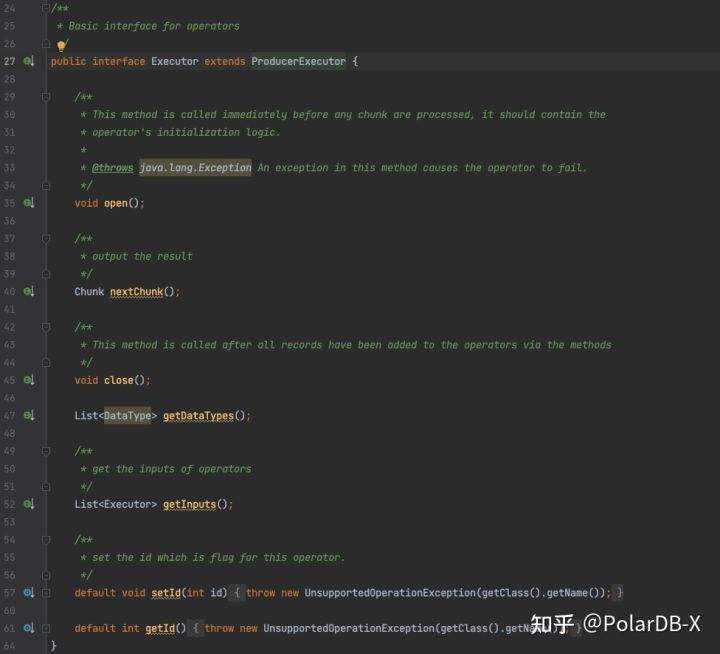

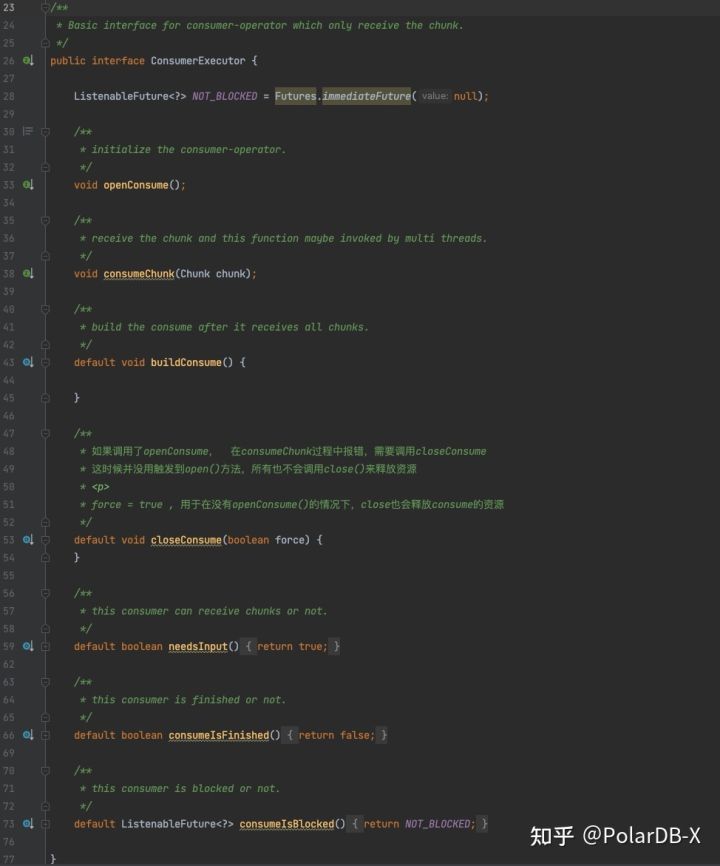

The execution entry of the Local procedure is SqlQueryLocalExecution#start. First, the execution plan is split into multiple pipelines in the LocalExecutionPlanner#plan. The specific logic of the split is LocalExecutionPlanner#visit. Then, a driver containing the specific execution logic is generated for each pipeline and put into the scheduling queue. The execution logic is encapsulated in the Executor or ConsumerExecutor, representing the iteration model and the push model, respectively. The execution result is encapsulated in the SmpResultCursor, which is the same as the Cursor. In the ResultSetUtil#resultSetToPacket, the Cursor.next interface is called to obtain all the execution results and return them to the user.

The execution entry of the Mpp procedure is SqlQueryExecution#start. First, in the PlanFragmenter#buildRootFragment, the execution plan is divided into multiple Fragments according to the Exchange operator inserted by MppPlanner. Then, in the SqlQueryScheduler#schedule, the StageScheduler is called to generate and distribute parallel computing tasks. The task information is encapsulated in the HttpRemoteTask object. The execution procedure after the parallel computing task being sent to the execution node is the same as the local procedure.

This article introduces the key codes of the protocol layer, optimizer, and executor from the perspective of SQL execution. The protocol layer is responsible for network connection management, package-to-SQL, and execution result-to-package conversion. The first section introduces the execution process of the protocol layer and gives the code entry for NIO-based network connection management, SSL protocol resolution, authentication, protocol resolution, package reorganization, etc. The optimizer is responsible for the conversion from SQL to the execution plan. The conversion process consists of seven stages Parser → Plan Management → Validator → SQL Rewriter(RBO) → Plan Enumerator(CBO) → Mpp Planner → Post Planner. The second section briefly introduces the work content of each stage and gives the code entry. The executor is responsible for obtaining the final execution result according to the execution plan, supporting the iterative model and the Pipeline vectorization model, and is divided into three execution procedures: Cursor, Local, and MPP. The third section describes the basic process and key entry of each procedure.

An Interpretation of PolarDB-X Source Codes (3): CDC Code Structure

An Interpretation of PolarDB-X Source Codes (5): Insert Process of DML

ApsaraDB - November 1, 2022

ApsaraDB - November 1, 2022

ApsaraDB - November 1, 2022

ApsaraDB - October 25, 2022

ApsaraDB - November 1, 2022

ApsaraDB - October 18, 2022

PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Managed Service for Prometheus

Managed Service for Prometheus

Multi-source metrics are aggregated to monitor the status of your business and services in real time.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn MoreMore Posts by ApsaraDB