After an SQL statement enters a PolarDB-X CN, it goes through the complete processing pipeline of the protocol layer, the optimizer, and the executor. It is first parsed, authenticated, and validated, and then converted into a relational algebra tree. The optimizer generates an execution plan through RBO and CBO, and the execution is finally completed on the DN. Unlike DML, a logical DDL statement involves reading and writing metadata and executing physical DDL statements, both of which directly affect the consistency of the system state.

The key goals of the PolarDB-X DDL implementation are online execution and crash safety. In other words, DDL must run concurrently with DML, and DDL itself must be atomic and durable. Following the idea of online schema change, PolarDB-X introduces dual-version metadata in the CN for complex logical DDL (such as adding and dropping global secondary indexes and migrating partition data). DDL holds MDL locks only while draining transactions that still use the old metadata version, which reduces how often business SQL is blocked. The DDL engine in the executor module unifies how logical DDL is defined and how it is scheduled and executed. Developers only need to wrap physical operations as Tasks with ordering dependencies and crash recovery methods and hand them to the DDL engine, which guarantees the corresponding execution and rollback logic.

This article mainly explains the implementation of PolarDB-X DDL on the compute node (CN). For simplicity, we skip the preprocessing of DDL in the protocol layer and optimizer, as well as its dispatch to a Handler in the executor. For this part, please refer to the following article on source code interpretation:

We will focus on the execution process of DDL in the executor. Before reading this article, let’s review some knowledge related to PolarDB-X online schema change, metadata locking, and DDL engine principles:

1. After parsing, a logical DDL statement enters the optimizer, where a simple type conversion generates a logicalPlan and an executionContext. The executor dispatches the logicalPlan to the corresponding DDLHandler based on its type. The common base class of all DDLHandlers is LogicalCommonDdlHandler, whose public execution entry is com.alibaba.polardbx.executor.handler.ddl.LogicalCommonDdlHandler#handle:

```java
public Cursor handle(RelNode logicalPlan, ExecutionContext executionContext) {
    BaseDdlOperation logicalDdlPlan = (BaseDdlOperation) logicalPlan;

    initDdlContext(logicalDdlPlan, executionContext);

    // Validate the plan first and then return immediately if needed.
    boolean returnImmediately = validatePlan(logicalDdlPlan, executionContext);

    // ...

    setPartitionDbIndexAndPhyTable(logicalDdlPlan);

    // Build a specific DDL job by subclasses that override buildDdlJob
    DdlJob ddlJob = returnImmediately
        ? new TransientDdlJob()
        // @override
        : buildDdlJob(logicalDdlPlan, executionContext);

    // Validate the DDL job before request.
    // @override
    validateJob(logicalDdlPlan, ddlJob, executionContext);

    // Handle the client DDL request on the worker side.
    handleDdlRequest(ddlJob, executionContext);

    // ...

    return buildResultCursor(logicalDdlPlan, executionContext);
}
```

2. The handle method first extracts common context information (such as the traceId, transaction ID, DDL type, and global configuration) from the executionContext. The buildDdlJob method then constructs the DDL job, and the validateJob method verifies its correctness. DDL Handlers such as LogicalAlterTableHandler override these two methods, so developers define and validate a DDL job according to the semantics of the DDL.

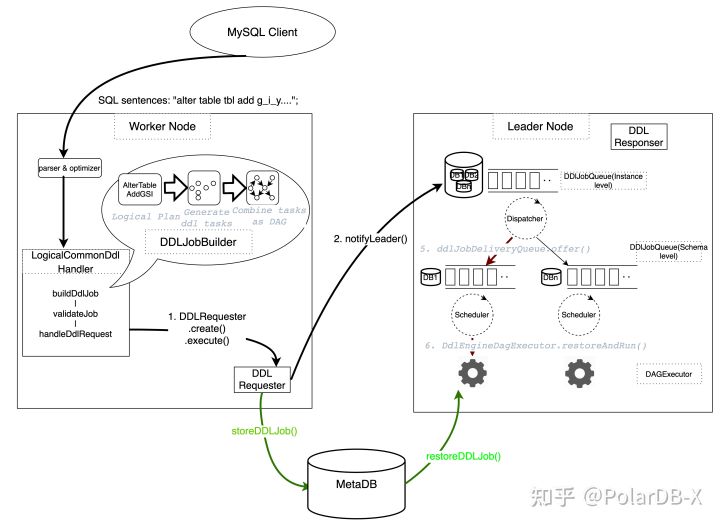

3. At this point, the Handler has converted the DDL from a logicalPlan into a DDL job. The com.alibaba.polardbx.executor.handler.ddl.LogicalCommonDdlHandler#handleDdlRequest method forwards the DDL request for this DDL job to the DDL engine on the Leader CN for scheduling and execution.

4. The DDL engine runs as a daemon only on the Leader CN and polls the DDLJobQueue. When the queue is not empty, it triggers the scheduling and execution of DDL jobs. The DDL engine maintains two levels of queues: an instance-level DDL job queue and database-level DDL job queues. This allows DDL statements in different logical databases to execute concurrently without interfering with each other.
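To make the two-level queue more concrete, here is a minimal Java sketch. It is not PolarDB-X code: `TwoLevelDdlQueueSketch`, `Job`, `submit`, `poll`, and `finish` are hypothetical names, and the sketch assumes for simplicity that each database-level queue dispatches one job at a time, while different databases are dispatched independently.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of an instance-level queue feeding per-database queues.
class TwoLevelDdlQueueSketch {

    // Minimal job descriptor: the logical database and the DDL text.
    static final class Job {
        final String schema;
        final String ddl;

        Job(String schema, String ddl) {
            this.schema = schema;
            this.ddl = ddl;
        }
    }

    // Level 1: every request forwarded to the leader lands here first.
    private final Deque<Job> instanceQueue = new ArrayDeque<>();
    // Level 2: one FIFO queue per logical database (insertion-ordered for determinism).
    private final Map<String, Deque<Job>> schemaQueues = new LinkedHashMap<>();
    // Databases that currently have a running job (simplifying assumption: one per database).
    private final Set<String> busySchemas = new HashSet<>();

    synchronized void submit(Job job) {
        instanceQueue.addLast(job);
    }

    // One polling round of the daemon: route new requests to their database queue,
    // then start the head job of every idle database.
    synchronized List<Job> poll() {
        while (!instanceQueue.isEmpty()) {
            Job job = instanceQueue.pollFirst();
            schemaQueues.computeIfAbsent(job.schema, s -> new ArrayDeque<>()).addLast(job);
        }
        List<Job> started = new ArrayList<>();
        for (Map.Entry<String, Deque<Job>> entry : schemaQueues.entrySet()) {
            String schema = entry.getKey();
            if (!busySchemas.contains(schema) && !entry.getValue().isEmpty()) {
                started.add(entry.getValue().pollFirst());
                busySchemas.add(schema);
            }
        }
        return started;
    }

    // Called when a job of this database completes, so the next one can start.
    synchronized void finish(String schema) {
        busySchemas.remove(schema);
    }
}
```

Because each logical database has its own queue in this sketch, a long-running job in db1 does not hold back a job submitted for db2.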

Note that when the DDL Requester pushes a DDL request to the DDL engine, the request travels from the Worker node to the Leader node. This inter-node communication relies on com.alibaba.polardbx.gms.sync.GmsSyncManagerHelper#sync. The initiating node wraps a syncAction in the sync call, and each receiver executes the corresponding action when it receives the sync. This mechanism is used both when the DDL engine loads DDL jobs and when metadata is synchronized between nodes during DDL job execution (covered in an upcoming article). In both scenarios, the syncAction triggers the receiver to pull the actual content (the DDL job or the metadata) from MetaDB. Using the database service as the communication intermediary ensures high availability and consistent delivery of the content.
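The following Java sketch illustrates the "persist first, then notify" pattern described above. `SyncSketch`, `SharedStore` (a stand-in for MetaDB), `SyncAction`, and `Node` are hypothetical, and notifications are delivered by direct method calls instead of RPC. The sketch only assumes what the text states: the action carries a key rather than the content, receivers pull the content from the shared store, and (as described later for metadata sync) the call succeeds only when every node, including the caller, completes.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sketch of "persist in MetaDB, then broadcast a small sync action".
class SyncSketch {

    // Stand-in for MetaDB: the durable place where the actual content lives.
    static final class SharedStore {
        private final Map<String, String> rows = new ConcurrentHashMap<>();

        void put(String key, String value) { rows.put(key, value); }

        String get(String key) { return rows.get(key); }
    }

    // The action only says what to reload; it does not carry the content itself.
    static final class SyncAction {
        final String key;

        SyncAction(String key) { this.key = key; }
    }

    // A CN node that handles a sync action by pulling the content from the store.
    static final class Node {
        final String name;
        final Map<String, String> localCache = new ConcurrentHashMap<>();

        Node(String name) { this.name = name; }

        boolean onSync(SyncAction action, SharedStore store) {
            String content = store.get(action.key);
            if (content == null) {
                return false; // nothing to load: report failure to the caller
            }
            localCache.put(action.key, content);
            return true;
        }
    }

    // The caller persists the content first, then notifies every node (itself included).
    // The call succeeds only if all nodes report success.
    static boolean sync(SharedStore store, List<Node> nodes, String key, String content) {
        store.put(key, content);
        SyncAction action = new SyncAction(key);
        boolean allOk = true;
        for (Node node : nodes) {
            allOk &= node.onSync(action, store);
        }
        return allOk;
    }
}
```

Keeping the payload in the shared store means the message itself stays small, and any node that reloads later reads the same durable content.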

The DDL job is the core concept of the DDL execution engine. It is a DDL transaction simulated at the DDL engine level: it tries to provide atomicity and durability for a logical DDL composed of a series of heterogeneous operations (such as metadata modification, inter-node communication, physical DDL, and DML), which keeps the system state stable. Modeled on common transaction implementations, transaction elements such as the undo log, locks, WAL, and version snapshots have counterparts in DDL jobs and the DDL engine.

The entry of the DDL engine (the handleDdlRequest method above) is the boundary of the DDL data flow and divides the DDL lifecycle into definition and execution. Next, taking the addition of a global secondary index as an example, we explain the key logic that builds DDL atomicity and the collaboration between DDL and DML when DDL jobs are defined. The scheduling, execution, and error handling of DDL in the DDL engine will be explained in a later article.

```sql
create database db1 mode = "auto";

use db1;

create table t1(x int, y int);

alter table t1 add global index `g_i_y` (`y`) COVERING (`x`) partition by hash(`y`);
```

As mentioned earlier, apart from the physical DDL and DML executed on the DN, the key operations in a DDL job are metadata modification and synchronization.

The metadata in PolarDB-X is managed uniformly by GMS and can be divided into two parts by physical location: persistent metadata stored in MetaDB (see the polardbx-gms/src/main/java/com/alibaba/polardbx/gms path), and in-memory metadata cached on each CN. The following excerpt of executeQuery shows how a DML statement interacts with the in-memory metadata:

```java
private ResultCursor executeQuery(ByteString sql, ExecutionContext executionContext,
                                  AtomicBoolean trxPolicyModified) {
    // Get all meta version before optimization
    final long[] metaVersions = MdlContext.snapshotMetaVersions();

    // Planner
    ExecutionPlan plan = Planner.getInstance().plan(sql, executionContext);

    // ...

    // for requireMDL transaction
    if (requireMdl && enableMdl) {
        if (!isClosed()) {
            // Acquire meta data lock for each statement modifies table data
            acquireTransactionalMdl(sql.toString(), plan, executionContext);
        }

        // ...

        // update Plan if metaVersion changed, which indicate meta updated
    }

    // ...

    ResultCursor resultCursor = executor.execute(plan, executionContext);

    // ...

    return resultCursor;
}
```

Note that a lock is maintained on the in-memory metadata for each logical table; this is the in-memory MDL lock. When a DML transaction starts, acquireTransactionalMdl tries to acquire the MDL lock on the latest version of each related logical table, and the lock is released after execution completes.
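The comments in executeQuery suggest an optimistic pattern: snapshot the metadata versions, plan, take the MDL, and re-plan if the version changed in between. The Java sketch below models that pattern for a single logical table, using one `ReentrantReadWriteLock` per metadata version as the in-memory MDL (DML takes the shared side). `DmlMdlSketch` and its methods are hypothetical and greatly simplify the real MdlContext and MdlManager machinery.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.locks.ReentrantReadWriteLock;
import java.util.function.LongFunction;

// Hypothetical sketch of the DML side of executeQuery for a single logical table:
// snapshot the metadata version, plan, take the shared MDL, and re-plan if the version moved.
class DmlMdlSketch {
    // Latest metadata version of the table (stand-in for the CN's schema manager).
    private volatile long metaVersion;
    // One lock per metadata version; DML uses the read (shared) side.
    private final ConcurrentHashMap<Long, ReentrantReadWriteLock> mdlByVersion = new ConcurrentHashMap<>();

    DmlMdlSketch(long initialVersion) {
        this.metaVersion = initialVersion;
    }

    long currentVersion() {
        return metaVersion;
    }

    // Called by a (simulated) DDL when it publishes new metadata.
    void publishVersion(long newVersion) {
        metaVersion = newVersion;
    }

    private ReentrantReadWriteLock lockFor(long version) {
        return mdlByVersion.computeIfAbsent(version, v -> new ReentrantReadWriteLock());
    }

    // Runs one DML statement; the planner builds a plan for a given metadata version.
    String execute(LongFunction<String> planner) {
        long snapshot = currentVersion();                // 1. snapshot before optimization
        String plan = planner.apply(snapshot);           // 2. plan against the snapshot

        long locked;
        ReentrantReadWriteLock mdl;
        while (true) {                                   // 3. shared MDL on the latest version
            locked = currentVersion();
            mdl = lockFor(locked);
            mdl.readLock().lock();
            if (locked == currentVersion()) {
                break;                                   // still the latest: safe to proceed
            }
            mdl.readLock().unlock();                     // version moved while locking: retry
        }
        try {
            if (locked != snapshot) {                    // 4. metadata changed: re-plan
                plan = planner.apply(locked);
            }
            return plan;                                 // executing the plan would happen here
        } finally {
            mdl.readLock().unlock();                     // released when the statement finishes
        }
    }
}
```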

When a DDL job is executed, both parts of the metadata are modified in a specific order. Synchronizing DDL with DML during this process is the key step in making DDL online.

The DDL Handler for adding a global secondary index is LogicalAlterTableHandler, which overrides the buildDdlJob method of LogicalCommonDdlHandler (com.alibaba.polardbx.executor.handler.ddl.LogicalAlterTableHandler#buildDdlJob). This method eventually delegates to the factory that builds the DDL job for creating a global secondary index (com.alibaba.polardbx.executor.ddl.job.factory.gsi.CreatePartitionGsiJobFactory).

```java
public class CreatePartitionGsiJobFactory extends CreateGsiJobFactory {

    @Override
    protected void excludeResources(Set<String> resources) {
        super.excludeResources(resources);
        // meta data lock in MetaDB
        resources.add(concatWithDot(schemaName, primaryTableName)); // db1.t1
        resources.add(concatWithDot(schemaName, indexTableName));   // db1.g_i_y
        // ...
    }

    @Override
    protected ExecutableDdlJob doCreate() {
        // ...
        if (needOnlineSchemaChange) {
            bringUpGsi = GsiTaskFactory.addGlobalIndexTasks(
                schemaName,
                primaryTableName,
                indexTableName,
                stayAtDeleteOnly,
                stayAtWriteOnly,
                stayAtBackFill
            );
        }
        // ...
        List<DdlTask> taskList = new ArrayList<>();
        // 1. validate
        taskList.add(validateTask);
        // 2. create gsi table
        // 2.1 insert tablePartition meta for gsi table
        taskList.add(createTableAddTablesPartitionInfoMetaTask);
        // 2.2 create gsi physical table
        CreateGsiPhyDdlTask createGsiPhyDdlTask =
            new CreateGsiPhyDdlTask(schemaName, primaryTableName, indexTableName, physicalPlanData);
        taskList.add(createGsiPhyDdlTask);
        // 2.3 insert tables meta for gsi table
        taskList.add(addTablesMetaTask);
        taskList.add(showTableMetaTask);
        // 3.
        // 3.1 insert indexes meta for primary table
        taskList.add(addIndexMetaTask);
        // 3.2 gsi status: CREATING -> DELETE_ONLY -> WRITE_ONLY -> WRITE_REORG -> PUBLIC
        taskList.addAll(bringUpGsi);
        // last tableSyncTask
        DdlTask tableSyncTask = new TableSyncTask(schemaName, indexTableName);
        taskList.add(tableSyncTask);

        final ExecutableDdlJob4CreatePartitionGsi result = new ExecutableDdlJob4CreatePartitionGsi();
        result.addSequentialTasks(taskList);
        // ...
        return result;
    }

    // ...
}
```

The excludeResources method declares the persistent metadata locks that the DDL job holds on the related objects. In this example, the metadata of both the primary table and the GSI table is modified, so the locked objects are db1.t1 and db1.g_i_y. Note that this lock differs from the in-memory MDL lock described earlier: it is persisted to the read_write_lock table in MetaDB when the DDL engine starts executing the DDL job, and it controls concurrency among DDL statements.
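A minimal sketch of how declared resources could be locked all-or-nothing is shown below. `DdlResourceLockSketch` is hypothetical: an in-memory map stands in for the read_write_lock table, only exclusive locks are modeled, and the real engine persists these locks in MetaDB rather than keeping them in memory.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch: an in-memory stand-in for MetaDB's read_write_lock table.
// Only exclusive (write) locks are modeled; shared locks are left out for brevity.
class DdlResourceLockSketch {
    // resource name (e.g. "db1.t1") -> id of the DDL job holding it
    private final Map<String, Long> owners = new HashMap<>();

    // All-or-nothing: the job gets every declared resource or none of them.
    synchronized boolean tryAcquire(long jobId, Set<String> resources) {
        for (String r : resources) {
            Long owner = owners.get(r);
            if (owner != null && owner != jobId) {
                return false; // conflicting DDL job: the caller must wait and retry
            }
        }
        for (String r : resources) {
            owners.put(r, jobId);
        }
        return true;
    }

    // Called when the job finishes or has been rolled back.
    synchronized void releaseAll(long jobId) {
        owners.values().removeIf(owner -> owner == jobId);
    }
}
```

With this scheme, the GSI job above (holding db1.t1 and db1.g_i_y) blocks another DDL job on db1.t1, while a DDL job on an unrelated table in db1 can still proceed.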

The doCreate method declares a series of Tasks that are executed in order. Their semantics are as follows:

1. Validation
2. Create the GSI table
3. Synchronize the metadata of the GSI table

In these Tasks, writes to persistent metadata go to the corresponding metadata tables in MetaDB, while metadata broadcasts update the metadata cached on each CN.

Next, we briefly introduce three typical Tasks. The methods of CreateTableAddTablesMetaTask are shown below:

```java
public class CreateTableAddTablesMetaTask extends BaseGmsTask {

    @Override
    public void executeImpl(Connection metaDbConnection, ExecutionContext executionContext) {
        PhyInfoSchemaContext phyInfoSchemaContext = TableMetaChanger.buildPhyInfoSchemaContext(schemaName,
            logicalTableName, dbIndex, phyTableName, sequenceBean, tablesExtRecord, partitioned, ifNotExists, sqlKind,
            executionContext);
        // Test hooks: inject a random exception or suspension when enabled by a hint
        FailPoint.injectRandomExceptionFromHint(executionContext);
        FailPoint.injectRandomSuspendFromHint(executionContext);
        TableMetaChanger.addTableMeta(metaDbConnection, phyInfoSchemaContext);
    }

    @Override
    public void rollbackImpl(Connection metaDbConnection, ExecutionContext executionContext) {
        TableMetaChanger.removeTableMeta(metaDbConnection, schemaName, logicalTableName, false, executionContext);
    }

    @Override
    protected void onRollbackSuccess(ExecutionContext executionContext) {
        TableMetaChanger.afterRemovingTableMeta(schemaName, logicalTableName);
    }
}
```

1. addTableMetaTask

This task adds the metadata of the GSI table to the tableMeta of the primary table. executeImpl calls the metadata read/write interface provided by MetaDB (com.alibaba.polardbx.executor.ddl.job.meta.TableMetaChanger#addTableMeta) to write the metadata of the GSI table. Besides the core logic in executeImpl, addTableMetaTask provides rollbackImpl, which clears the metadata of the GSI table. This is the **undo log** of the Task: when a DDL job is rolled back, this method is called to restore the original tableMeta information.
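The executeImpl/rollbackImpl pair works like an undo log. The Java sketch below shows the general idea under simplifying assumptions: `UndoLogSketch` and `Task` are hypothetical, tasks run sequentially, a failing task is assumed to leave no partial effect, and completed tasks are undone in reverse order. Crash recovery is not modeled here.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

// Hypothetical sketch: each task pairs a forward action with an undo action,
// similar in spirit to executeImpl/rollbackImpl in CreateTableAddTablesMetaTask.
class UndoLogSketch {
    interface Task {
        void execute();   // forward change, e.g. insert table metadata
        void rollback();  // undo of execute(), e.g. remove that metadata
    }

    // Runs tasks in order; on failure, undoes the completed tasks in reverse order.
    static boolean run(List<Task> tasks) {
        Deque<Task> undoLog = new ArrayDeque<>();
        for (Task task : tasks) {
            try {
                task.execute();
                undoLog.push(task);           // record only after the task succeeded
            } catch (RuntimeException e) {
                while (!undoLog.isEmpty()) {
                    undoLog.pop().rollback(); // most recent first
                }
                return false;
            }
        }
        return true;
    }
}
```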

2. TableSyncTask

As a special case of the inter-node communication mechanism described above, this task synchronizes tableMeta across CN nodes by notifying every CN in the cluster to reload tableMeta from MetaDB.

3. bringUpGsi

It is a list of tasks that defines the metadata state transitions and the data backfill process of online schema change.

In addition to the preceding tasks, the metadata read/write Tasks and the physical DDL and DML Tasks commonly used in logical DDL are predefined. Readers can find them in the polardbx-executor/src/main/java/com/alibaba/polardbx/executor/ddl/job/task/basic directory.

At the end of the doCreate method, addSequentialTasks adds the tasks to the created DDL job in a batch. As the simplest way to combine tasks, addSequentialTasks builds dependencies between tasks by treating the index order of the elements in taskList as the topological order. This reflects the fact that the Tasks in a DDL job are organized as a DAG, and the DDL engine schedules them according to the DAG's topological order. To declare more complex dependencies, methods such as addTask and addTaskRelationShip can declare tasks and their dependencies separately.
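The following Java sketch shows how a job made of tasks and dependency edges can be scheduled in topological order. `DdlJobDagSketch` is hypothetical; its `addTask`, `addTaskRelationship`, and `addSequentialTasks` only mirror the roles of the methods named above and are not the PolarDB-X API. Kahn's algorithm is used here to compute one valid execution order.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of a DDL job as a DAG of named tasks.
class DdlJobDagSketch {
    // task -> tasks that must run after it
    private final Map<String, Set<String>> successors = new LinkedHashMap<>();

    void addTask(String task) {
        successors.putIfAbsent(task, new LinkedHashSet<>());
    }

    void addTaskRelationship(String before, String after) {
        addTask(before);
        addTask(after);
        successors.get(before).add(after);
    }

    // Chains the tasks so that list order becomes the topological order.
    void addSequentialTasks(List<String> tasks) {
        for (int i = 0; i < tasks.size(); i++) {
            addTask(tasks.get(i));
            if (i > 0) {
                addTaskRelationship(tasks.get(i - 1), tasks.get(i));
            }
        }
    }

    // Kahn's algorithm: one order in which a scheduler could run the tasks.
    List<String> topologicalOrder() {
        Map<String, Integer> inDegree = new LinkedHashMap<>();
        successors.keySet().forEach(t -> inDegree.put(t, 0));
        successors.values().forEach(next -> next.forEach(t -> inDegree.merge(t, 1, Integer::sum)));

        Deque<String> ready = new ArrayDeque<>();
        inDegree.forEach((t, d) -> {
            if (d == 0) {
                ready.add(t);
            }
        });

        List<String> order = new ArrayList<>();
        while (!ready.isEmpty()) {
            String t = ready.poll();
            order.add(t);
            for (String next : successors.get(t)) {
                if (inDegree.merge(next, -1, Integer::sum) == 0) {
                    ready.add(next); // all predecessors done
                }
            }
        }
        if (order.size() != successors.size()) {
            throw new IllegalStateException("cycle detected in DDL job");
        }
        return order;
    }
}
```

With `addSequentialTasks`, the list order is returned unchanged; with explicit relationships, two tasks that depend only on a common predecessor may run in either order (or in parallel) after it.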

The bringUpGsi task list mentioned above is a complete implementation of the online schema change process and is defined in com.alibaba.polardbx.executor.ddl.job.task.factory.GsiTaskFactory#addGlobalIndexTasks. We use this method as an example to describe several key components of DDL and DML synchronization.

```java
public static List<DdlTask> addGlobalIndexTasks(String schemaName,
                                                String primaryTableName,
                                                String indexName,
                                                boolean stayAtDeleteOnly,
                                                boolean stayAtWriteOnly,
                                                boolean stayAtBackFill) {
    // ...
    DdlTask writeOnlyTask = new GsiUpdateIndexStatusTask(
        schemaName,
        primaryTableName,
        indexName,
        IndexStatus.DELETE_ONLY,
        IndexStatus.WRITE_ONLY
    ).onExceptionTryRecoveryThenRollback();
    // ...
    // Each status change is followed by a TableSyncTask so that all CNs load the new status
    taskList.add(deleteOnlyTask);
    taskList.add(new TableSyncTask(schemaName, primaryTableName));
    // ...
    taskList.add(writeOnlyTask);
    taskList.add(new TableSyncTask(schemaName, primaryTableName));
    // ...
    taskList.add(new LogicalTableBackFillTask(schemaName, primaryTableName, indexName));
    // ...
    taskList.add(writeReOrgTask);
    taskList.add(new TableSyncTask(schemaName, primaryTableName));
    taskList.add(publicTask);
    taskList.add(new TableSyncTask(schemaName, primaryTableName));
    return taskList;
}
```

The states stored in the index metadata are defined in com.alibaba.polardbx.gms.metadb.table.IndexStatus. While the DDL updates the index status, the optimizer assigns a corresponding gsiWriter in the RBO phase to each DML statement that modifies the primary table. Take a simple INSERT statement as an example:

```sql
insert into t1 values(1,2)
```

1. In the RBO phase, OptimizeLogicalInsertRule (polardbx-optimizer/src/main/java/com/alibaba/polardbx/optimizer/core/planner/rule/OptimizeLogicalInsertRule.java) attaches the writer of each GSI to the execution plan of the INSERT statement, based on the gsiMeta in the metadata of the primary table.

```java
// OptimizeLogicalInsertRule.java
private LogicalInsert handlePushdown(LogicalInsert origin, boolean deterministicPushdown, ExecutionContext ec) {
    // ... other writers
    final List<InsertWriter> gsiInsertWriters = new ArrayList<>();
    IntStream.range(0, gsiMetas.size()).forEach(i -> {
        final TableMeta gsiMeta = gsiMetas.get(i);
        final RelOptTable gsiTable = catalog.getTableForMember(ImmutableList.of(schema, gsiMeta.getTableName()));
        final List<Integer> gsiValuePermute = gsiColumnMappings.get(i);
        final boolean isGsiBroadcast = TableTopologyUtil.isBroadcast(gsiMeta);
        final boolean isGsiSingle = TableTopologyUtil.isSingle(gsiMeta);
        // Use a different write strategy for each table type (broadcast, single, or partitioned).
        gsiInsertWriters.add(WriterFactory
            .createInsertOrReplaceWriter(newInsert, gsiTable, sourceRowType, gsiValuePermute, gsiMeta, gsiKeywords,
                null, isReplace, isGsiBroadcast, isGsiSingle, isValueSource, ec));
    });
    // ...
}
```

2. In the executor's Handler, the writers are applied to generate the physical execution plan. Based on the indexStatus held by the gsiWriter, the getInput method decides whether the DML generates physical writes to the GSI. As a result, DML starts double-writing to the GSI only after the index status has been updated to WRITE_ONLY.

```java
// LogicalInsertWriter.java
protected int executeInsert(LogicalInsert logicalInsert, ExecutionContext executionContext,
                            HandlerParams handlerParams) {
    // ...
    final List<InsertWriter> gsiWriters = logicalInsert.getGsiInsertWriters();
    gsiWriters.stream()
        .map(gsiWriter -> gsiWriter.getInput(executionContext))
        .filter(w -> !w.isEmpty())
        .forEach(w -> {
            writableGsiCount.incrementAndGet();
            allPhyPlan.addAll(w);
        });
    // ...
}
```

```java
// IndexStatus.java
// ...
public static final EnumSet<IndexStatus> WRITABLE = EnumSet.of(WRITE_ONLY, WRITE_REORG, PUBLIC, DROP_WRITE_ONLY);

public boolean isWritable() {
    return WRITABLE.contains(this);
}
// ...
```
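To tie the two steps together, the Java sketch below shows how the index status could gate the GSI writes of an INSERT. The `WRITABLE` set and `isWritable()` mirror the IndexStatus excerpt above, but the enum lists only the states named in this article. `GsiWriteGateSketch`, `GsiWriter`, and `planInsert` are hypothetical simplifications of the writer and handler classes, and the generated SQL strings are placeholders for physical plans.

```java
import java.util.ArrayList;
import java.util.EnumSet;
import java.util.List;

// Hypothetical sketch: how the index status gates the GSI writes of an INSERT.
class GsiWriteGateSketch {

    // Only the states named in this article; WRITABLE and isWritable() mirror IndexStatus.java.
    enum IndexStatus {
        CREATING, DELETE_ONLY, WRITE_ONLY, WRITE_REORG, PUBLIC, DROP_WRITE_ONLY;

        static final EnumSet<IndexStatus> WRITABLE =
            EnumSet.of(WRITE_ONLY, WRITE_REORG, PUBLIC, DROP_WRITE_ONLY);

        boolean isWritable() {
            return WRITABLE.contains(this);
        }
    }

    // Creation path from the doCreate comment.
    static final List<IndexStatus> CREATE_PATH = List.of(
        IndexStatus.CREATING, IndexStatus.DELETE_ONLY, IndexStatus.WRITE_ONLY,
        IndexStatus.WRITE_REORG, IndexStatus.PUBLIC);

    // A GSI writer attached to one INSERT plan, carrying the index status it saw at planning time.
    static final class GsiWriter {
        final String indexName;
        final IndexStatus status;

        GsiWriter(String indexName, IndexStatus status) {
            this.indexName = indexName;
            this.status = status;
        }

        // Like getInput(): emit physical writes only when the index is writable.
        List<String> getInput(String row) {
            return status.isWritable()
                ? List.of("INSERT INTO " + indexName + " VALUES " + row)
                : List.of();
        }
    }

    // Physical writes for the primary table plus every writable GSI.
    static List<String> planInsert(String primaryTable, String row, List<GsiWriter> gsiWriters) {
        List<String> all = new ArrayList<>();
        all.add("INSERT INTO " + primaryTable + " VALUES " + row);
        gsiWriters.stream()
            .map(w -> w.getInput(row))
            .filter(w -> !w.isEmpty())
            .forEach(all::addAll);
        return all;
    }
}
```

Walking `CREATE_PATH`, the first status for which `isWritable()` is true is `WRITE_ONLY`, which matches the point at which the article says DML starts double writes.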

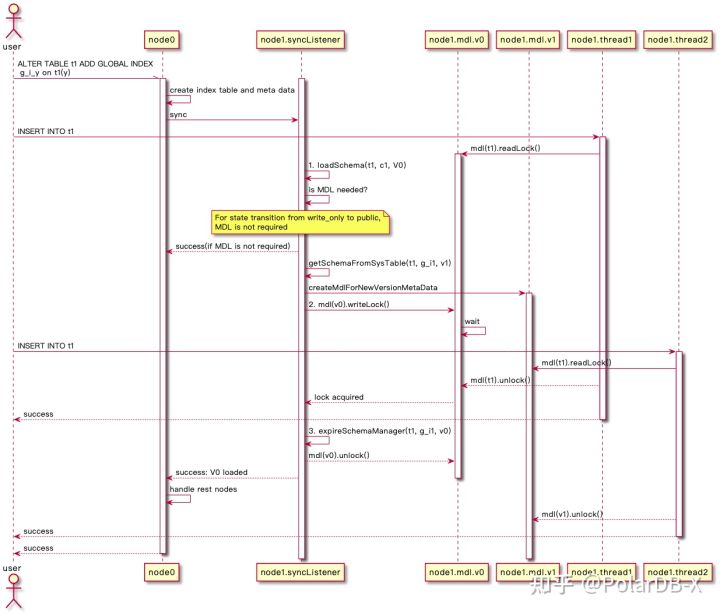

Synchronizing metadata between MetaDB and memory through sync involves the following steps:

The responders of the sync calls above are all nodes in the CN cluster (including the caller itself). When all nodes complete the metadata version switching, the sync call is successful. As such, the following points are guaranteed:

Steps 1, 2, and 3 are implemented in com.alibaba.polardbx.executor.gms.GmsTableMetaManager#tonewversion:

```java
public void tonewversion(String tableName, boolean preemptive, Long initWait, Long interval, TimeUnit timeUnit) {
    synchronized (OptimizerContext.getContext(schemaName)) {
        GmsTableMetaManager oldSchemaManager =
            (GmsTableMetaManager) OptimizerContext.getContext(schemaName).getLatestSchemaManager();
        TableMeta currentMeta = oldSchemaManager.getTableWithNull(tableName);

        long version = -1;
        // ... Query the metadata version in the current MetaDB and assign it to version.

        // 1. loadSchema
        SchemaManager newSchemaManager =
            new GmsTableMetaManager(oldSchemaManager, tableName, rule);
        newSchemaManager.init();
        OptimizerContext.getContext(schemaName).setSchemaManager(newSchemaManager);

        // 2. mdl(v0).writeLock
        final MdlContext context;
        if (preemptive) {
            context = MdlManager.addContext(schemaName, initWait, interval, timeUnit);
        } else {
            context = MdlManager.addContext(schemaName, false);
        }
        MdlTicket ticket = context.acquireLock(new MdlRequest(1L,
            MdlKey.getTableKeyWithLowerTableName(schemaName, currentMeta.getDigest()),
            MdlType.MDL_EXCLUSIVE,
            MdlDuration.MDL_TRANSACTION));

        // 3. expireSchemaManager(t1, g_i1, v0)
        oldSchemaManager.expire();

        // ... The PlanCache entries that use the old version of the metadata are invalidated.

        context.releaseLock(1L, ticket);
    }
}
```

By combining these elements when defining DDL jobs, PolarDB-X synchronizes DDL with DML, which allows DML to keep running online while the DDL executes.
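The three steps of tonewversion can be simulated with plain JDK locks. In the Java sketch below, `VersionSwitchSketch` is hypothetical: each metadata version has its own `ReentrantReadWriteLock`, DML holds the read side of the version it uses, and the version switch publishes the new version, waits for the write side of the old version (that is, for old-version DML to drain), and then marks the old version as expired. Preemptive mode (`initWait`, `interval`) is not reproduced; a plain timeout is used instead, and on timeout the sketch simply returns false and leaves any retry to the caller.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical sketch of the three steps in tonewversion for one logical table.
class VersionSwitchSketch {
    private volatile long currentVersion;
    // One MDL per metadata version: DML takes the read side, the version switch takes the write side.
    private final ConcurrentHashMap<Long, ReentrantReadWriteLock> mdl = new ConcurrentHashMap<>();
    // Versions whose metadata (and cached plans) must no longer be used.
    private final Set<Long> expired = ConcurrentHashMap.newKeySet();

    VersionSwitchSketch(long initialVersion) {
        currentVersion = initialVersion;
    }

    private ReentrantReadWriteLock lockFor(long version) {
        return mdl.computeIfAbsent(version, v -> new ReentrantReadWriteLock());
    }

    long currentVersion() {
        return currentVersion;
    }

    boolean isExpired(long version) {
        return expired.contains(version);
    }

    // DML: hold the shared MDL of the latest version until endDml is called.
    long beginDml() {
        while (true) {
            long v = currentVersion;
            lockFor(v).readLock().lock();
            if (v == currentVersion) {
                return v;
            }
            lockFor(v).readLock().unlock(); // a newer version was published meanwhile: retry
        }
    }

    void endDml(long version) {
        lockFor(version).readLock().unlock();
    }

    // DDL: returns false if old-version DML did not drain within the timeout.
    synchronized boolean toNewVersion(long newVersion, long timeout, TimeUnit unit)
            throws InterruptedException {
        long oldVersion = currentVersion;
        currentVersion = newVersion;                                  // 1. publish the new metadata
        ReentrantReadWriteLock.WriteLock exclusive = lockFor(oldVersion).writeLock();
        if (!exclusive.tryLock(timeout, unit)) {                      // 2. wait for old-version DML
            return false;
        }
        try {
            expired.add(oldVersion);                                  // 3. expire the old version
            return true;
        } finally {
            exclusive.unlock();
        }
    }
}
```

Because new statements see the new version as soon as step 1 completes, only transactions that started on the old version can delay the switch, which is why DDL holds the MDL only while draining old-version transactions.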

This article interprets the CN-side code related to DDL job definition in PolarDB-X. Using the addition of a global secondary index as an example, it walks through the overall process of DDL job definition and execution and focuses on the key logic behind the online and crash-safe features in DDL job definition. Please stay tuned for more information about how the DDL engine executes DDL jobs.

