GalaxyCDC is a core component of PolarDB-X, a cloud-native distributed database system. It is responsible for generating, distributing, and subscribing to global incremental logs. With GalaxyCDC, PolarDB-X provides incremental logs that are compatible with the MySQL Binlog format and protocol, so downstream tools built for MySQL Binlog can connect seamlessly. This article gives a systematic introduction to the code structure of GalaxyCDC and explains how to quickly build a development environment.

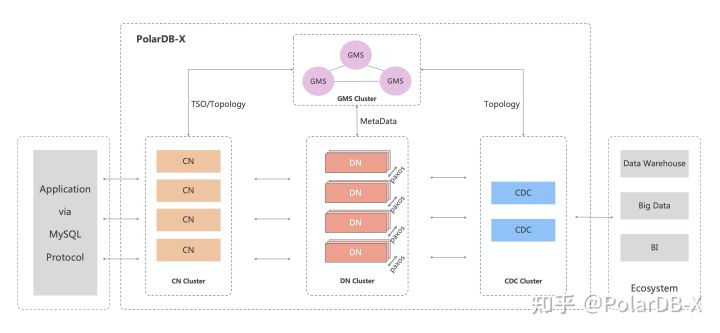

As shown in the preceding figure, PolarDB-X contains four core components: Compute Node (CN) is responsible for computing, Data Node (DN) for storage, Global Meta Service (GMS) for managing metadata and providing TSO services, and Change Data Capture (CDC) for generating change logs. As the export point for Binlog data, CDC is responsible for three aspects:

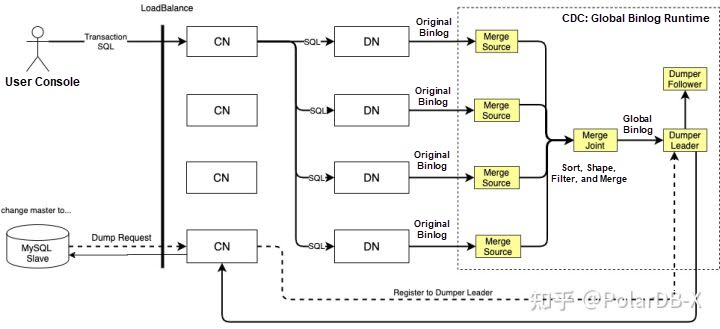

To make this more concrete, GalaxyCDC can be viewed as a streaming system: the InputSource is the original Binlog of each DN, the computing logic is a series of computing rules and processing algorithms (sorting, overshooting, shaping, merging, etc.), and the TargetSink is a global logical Binlog file that shields the internal details of the distributed database and provides replication capability compatible with the MySQL ecosystem. Its runtime state diagram is shown below:

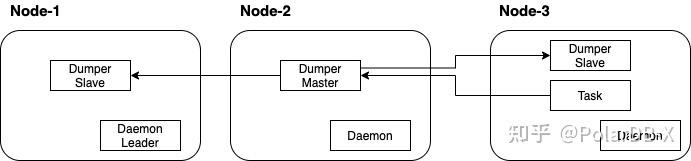

From the perspective of technical architecture, CDC consists of three core components: Daemon, Task, and Dumper, which are shown below:

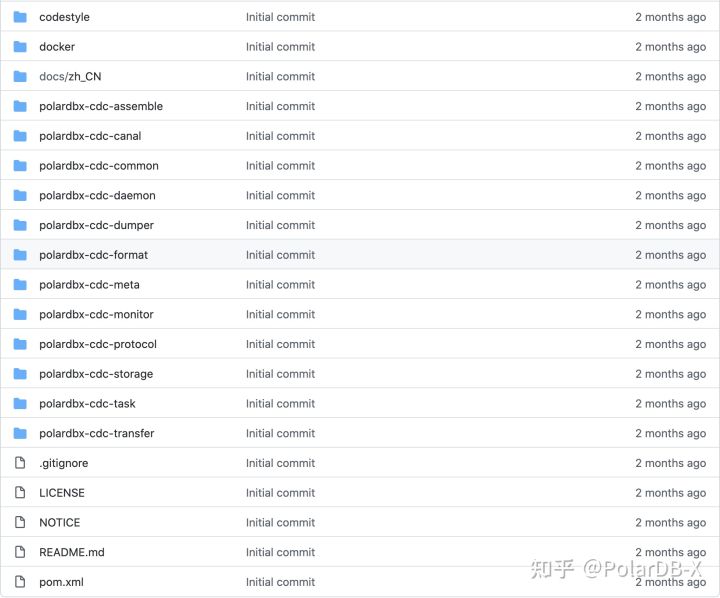

The GalaxyCDC project code is hosted on GitHub (repository address) and is released under the Apache License 2.0. GalaxyCDC is a multi-module Java project in which modules expose services to each other through interfaces. The module relationships are recorded in pom.xml, and you can run the mvn dependency:tree command to view all dependencies.

As shown in the figure above, the whole project contains 15 directory modules (CDC also has some functional modules in the GalaxySQL project. Please refer to [Annex 3] for details). Each module is introduced below.

It provides a built-in code style configuration file, mainly for IDEA. If you are interested in contributing source code, you can configure your IDE based on this style file.

It provides a series of Docker-related configuration files. You can quickly build a Docker image locally by running the build.sh in the directory.

It is the build module, which provides build and packaging configurations. Running mvn clean package -Dmaven.test.skip=true in the root directory of the GalaxyCDC project generates the polardbx-binlog.tar.gz package in the target directory of the assemble module.

The Binlog data parsing module is based on the source code of the open-source project Canal, with substantial customization. The core code is described below:

| Package (File) Name | Features |
| --- | --- |
| com.aliyun.polardbx.binlog.canal.binlog | Defines the data models corresponding to MySQL Binlog events and the core decoding implementation for Binlog binary data. |
| com.aliyun.polardbx.binlog.canal.core | Canal's runtime kernel module, including data parsing process management, DDL processing, permission management, and HA processing. |

The common library module provides basic tools, domain models, data access, and shared configurations. The core code is introduced below:

| Package (File) Name | Features |
| --- | --- |
| com.aliyun.polardbx.binlog.domain | Contains the domain model class definitions. The source code under the po package is generated automatically by the code generation tool and corresponds to the CDC metadata tables. |
| com.aliyun.polardbx.binlog.dao | Contains all data access layer class definitions, generated automatically by the code generation tool. |
| com.aliyun.polardbx.binlog.heartbeat | Contains the TsoHeartbeat component, which keeps Binlog streams moving forward. This is a necessary condition for generating virtual TSOs in hybrid transaction policy scenarios. |
| com.aliyun.polardbx.binlog.leader | Provides a LeaderElector implemented on top of the MySQL GET_LOCK function. |
| com.aliyun.polardbx.binlog.rpc | Defines the RPC interface for data interaction between Task and Dumper. |
| com.aliyun.polardbx.binlog.scheduler | Implements functions related to cluster scheduling and resource allocation. |
| com.aliyun.polardbx.binlog.task | Contains functions related to the periodic heartbeat of the Task and Dumper components. |
| generatorConfig.xml | The code generator configuration file. MyBatis Generator is used for automated code generation. |
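To make the GET_LOCK-based election idea more concrete, here is a minimal sketch over plain JDBC. It is not the project's LeaderElector: the class name, method names, and lock name handling are hypothetical. It relies only on documented MySQL behavior: GET_LOCK(name, timeout) returns 1 when the lock is obtained, 0 on timeout, and NULL on error, and the lock belongs to the session that obtained it.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;

// Hypothetical sketch, not the LeaderElector class from GalaxyCDC.
final class GetLockElectionSketch {

    // GET_LOCK yields 1 (acquired), 0 (timed out) or NULL (error); only 1 means "leader".
    static boolean isLeader(Integer getLockResult) {
        return getLockResult != null && getLockResult == 1;
    }

    // Tries to become leader by taking a named lock on the given connection.
    // The lock is tied to this connection's session, so the caller must keep
    // the connection open for as long as it wants to remain leader.
    static boolean tryBecomeLeader(Connection conn, String lockName, int timeoutSeconds)
            throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement("SELECT GET_LOCK(?, ?)")) {
            ps.setString(1, lockName);
            ps.setInt(2, timeoutSeconds);
            try (ResultSet rs = ps.executeQuery()) {
                if (!rs.next()) {
                    return false;
                }
                int value = rs.getInt(1);
                // getInt returns 0 for SQL NULL, so wasNull() identifies the error case.
                return isLeader(rs.wasNull() ? null : Integer.valueOf(value));
            }
        }
    }

    // Gives up leadership explicitly; ending the session releases the lock as well.
    static void resign(Connection conn, String lockName) throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement("SELECT RELEASE_LOCK(?)")) {
            ps.setString(1, lockName);
            ps.executeQuery().close();
        }
    }
}
```

The appeal of this approach is that no extra coordination service is needed: if the leader process dies, its database session ends, the lock is released, and another candidate calling tryBecomeLeader can take over.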

The daemon process module provides functions such as HA scheduling, TSO heartbeat, monitoring information collection, and OpenAPI access. The core code is introduced below:

| Package (File) Name | Features |
| --- | --- |
| com.aliyun.polardbx.binlog.daemon.cluster, com.aliyun.polardbx.binlog.daemon.schedule | Cluster control functions, such as heartbeat, runtime topology scheduling, and HA detection. |
| com.aliyun.polardbx.binlog.daemon.rest | Contains several REST-style interfaces, such as alarm event collection, metrics collection, and system parameter setting. |

The global Binlog dump module receives the Binlog data written by the Task module and persists it. It provides a data subscription service compatible with the MySQL dump protocol. The core code is described below:

| Package (File) Name | Features |
| --- | --- |
| com.aliyun.polardbx.binlog.dumper.dump.client | Data synchronization between the primary and secondary Dumpers, together with the corresponding client implementation. |
| com.aliyun.polardbx.binlog.dumper.dump.logfile | The logical Binlog file building module. Its two core classes, LogFileGenerator and LogFileCopier, are responsible for processing the logical Binlog files of the Dumper Master and the Dumper Slave, respectively. |

The data shaping module converts the format of the physical Binlog (adding columns, deleting columns, converting physical database and table names into logical database and table names, etc.) so that the converted data stays consistent with the logical database and table schemas.

The metadata management module maintains the historical versions of all logical and physical databases and tables of PolarDB-X along a timeline and can build a schema snapshot for any given point in time. This module provides basic support for DDL processing and Binlog shaping. It also maintains the SQL script definitions (src/main/resources/db/migration) of the CDC system databases and tables. CDC uses Flyway to manage the table structure.

The monitoring module is a built-in monitoring implementation used to manage and maintain monitoring events. At run time, monitoring information is sent to the daemon process through this module.

It is the module that defines the data transmission service protocol. CDC uses gRPC and protobuf to transmit data. This module defines the data protocol interfaces and data exchange formats. The core code is introduced below:

| Package (File) Name | Features |
| --- | --- |
| com.aliyun.polardbx.binlog.protocol | Contains the data exchange format definition between Task nodes and Dumper nodes. |
| com.aliyun.polardbx.rpc.cdc | Contains the data exchange format definition between Dumper nodes and CN nodes. |

The data storage module is an encapsulation and extension of RocksDB. When memory resources are insufficient (for example, with large transactions or big columns), it is used to move in-memory data to disk.
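The spill behavior described above can be pictured with a small sketch. It is an illustration under stated assumptions, not the project's storage code: it uses a temporary file instead of RocksDB, and the class name, the byte budget, and the method names are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical sketch of "move data to disk when memory runs short"; it uses a
// temp file instead of RocksDB and is not the project's storage implementation.
final class SpillBufferSketch implements AutoCloseable {
    private final int memoryLimitBytes;
    private final ByteArrayOutputStream memory = new ByteArrayOutputStream();
    private Path spillFile;          // stays null while everything fits in memory
    private OutputStream spillOut;

    SpillBufferSketch(int memoryLimitBytes) {
        this.memoryLimitBytes = memoryLimitBytes;
    }

    // Appends one chunk (for example, one row event of a large transaction).
    void append(byte[] chunk) throws IOException {
        if (spillOut == null && memory.size() + chunk.length > memoryLimitBytes) {
            // Crossing the budget: move what is buffered so far to disk.
            spillFile = Files.createTempFile("txn-spill-", ".bin");
            spillOut = Files.newOutputStream(spillFile);
            memory.writeTo(spillOut);
            memory.reset();
        }
        if (spillOut != null) {
            spillOut.write(chunk);
        } else {
            memory.write(chunk, 0, chunk.length);
        }
    }

    boolean isSpilled() {
        return spillFile != null;
    }

    // Returns all appended bytes in order, from memory or from the spill file.
    byte[] readAll() throws IOException {
        if (spillOut == null) {
            return memory.toByteArray();
        }
        spillOut.flush();
        return Files.readAllBytes(spillFile);
    }

    @Override
    public void close() throws IOException {
        if (spillOut != null) {
            spillOut.close();
            Files.deleteIfExists(spillFile);
        }
    }
}
```

Small transactions never touch the disk in this sketch. Only a transaction that outgrows its budget pays the I/O cost, which is the trade-off the module description suggests.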

It is the core task module, which can be considered the kernel of CDC. The core business logic (such as transaction sorting, shaping, merging, DDL processing, and metadata lifecycle maintenance) is implemented in this module. The core code is introduced below:

| Package (File) Name | Features |
| --- | --- |
| com.aliyun.polardbx.binlog.extractor | Implements first-level sorting: it parses the original physical Binlog of each DN and sorts it by TSO, and also handles data shaping, metadata history version maintenance, DDL processing, etc. |
| com.aliyun.polardbx.binlog.merge | Implements global sorting: it receives the ordered queues produced by first-level sorting, performs a multi-way merge, and outputs a globally ordered transaction queue. |
| com.aliyun.polardbx.binlog.collect | Implements transaction merging: it takes data from the globally ordered transaction queue and merges local transactions with the same transaction id into a complete global transaction. |
| com.aliyun.polardbx.binlog.transmit | Implements data transmission: the sorted and merged data is sent to the downstream Dumper component through this module. |
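The merge and collect stages in the table can be sketched as a multi-way merge over per-DN queues followed by grouping on the transaction id. The code below is a simplified illustration, not the project's implementation: the LocalTxn type, its fields, and the method names are hypothetical, and it assumes each input queue is already sorted by TSO.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;

// Hypothetical sketch; none of these types are GalaxyCDC classes.
final class TsoMergeSketch {

    // One local transaction as seen on a single DN.
    static final class LocalTxn {
        final long tso;       // timestamp used for ordering
        final String txnId;   // id shared by all branches of one global transaction
        final String source;  // which DN produced this branch

        LocalTxn(long tso, String txnId, String source) {
            this.tso = tso;
            this.txnId = txnId;
            this.source = source;
        }
    }

    // Read position inside one DN's TSO-sorted queue.
    private static final class Cursor {
        final Iterator<LocalTxn> rest;
        LocalTxn head;

        Cursor(Iterator<LocalTxn> it) {
            this.rest = it;
            this.head = it.next();
        }
    }

    // Multi-way merge: repeatedly emit the smallest head among all queues.
    static List<LocalTxn> mergeByTso(List<List<LocalTxn>> sortedQueues) {
        PriorityQueue<Cursor> heap =
            new PriorityQueue<>(Comparator.comparingLong((Cursor c) -> c.head.tso));
        for (List<LocalTxn> queue : sortedQueues) {
            if (!queue.isEmpty()) {
                heap.add(new Cursor(queue.iterator()));
            }
        }
        List<LocalTxn> ordered = new ArrayList<>();
        while (!heap.isEmpty()) {
            Cursor c = heap.poll();
            ordered.add(c.head);
            if (c.rest.hasNext()) {
                c.head = c.rest.next();
                heap.add(c);  // re-insert with its new head
            }
        }
        return ordered;
    }

    // Groups branches with the same transaction id, keeping first-seen order.
    static Map<String, List<String>> collectByTxnId(List<LocalTxn> ordered) {
        Map<String, List<String>> global = new LinkedHashMap<>();
        for (LocalTxn t : ordered) {
            global.computeIfAbsent(t.txnId, k -> new ArrayList<>()).add(t.source);
        }
        return global;
    }

    public static void main(String[] args) {
        List<LocalTxn> dn1 = Arrays.asList(new LocalTxn(100, "t1", "dn1"), new LocalTxn(300, "t3", "dn1"));
        List<LocalTxn> dn2 = Arrays.asList(new LocalTxn(100, "t1", "dn2"), new LocalTxn(200, "t2", "dn2"));
        System.out.println(collectByTxnId(mergeByTso(Arrays.asList(dn1, dn2))));
    }
}
```

A heap keeps the cost of each emitted transaction logarithmic in the number of DNs, which is why multi-way merging scales better than repeatedly scanning every queue head.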

It is a built-in transfer program. Running it verifies the correctness of the CDC global Binlog. How to use it is described in detail later.
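The verification query shown later in this article compares both sides with an XOR of per-row CRC32 values. The sketch below illustrates why that kind of checksum suits this comparison: it does not depend on row order, yet it changes when a single balance differs. It is a simplified illustration with hypothetical names, and it does not reproduce the exact value MySQL computes, because the row is serialized here as a plain "id,balance" string.

```java
import java.nio.charset.StandardCharsets;
import java.util.zip.CRC32;

// Hypothetical sketch of an order-independent table checksum.
final class XorChecksumSketch {

    // CRC32 over a simplified "id,balance" rendering of one row.
    static long rowCrc(int id, int balance) {
        CRC32 crc = new CRC32();
        crc.update((id + "," + balance).getBytes(StandardCharsets.UTF_8));
        return crc.getValue();
    }

    // XOR is commutative and associative, so the scan order does not matter.
    static long tableChecksum(int[][] rows) {
        long checksum = 0L;
        for (int[] row : rows) {
            checksum ^= rowCrc(row[0], row[1]);
        }
        return checksum;
    }

    public static void main(String[] args) {
        int[][] upstream = {{1, 100}, {2, 100}};
        int[][] downstream = {{2, 100}, {1, 100}};  // same rows, different order
        System.out.println(tableChecksum(upstream) == tableChecksum(downstream));
    }
}
```

Because the accounts table has a primary key, every row string is distinct, so two identical rows cannot cancel each other out in the XOR.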

The system metadata tables of CDC are stored in the GMS metadata database. The tables are introduced below:

It is a system parameter information table, which stores system-level parameter configurations.

It is a topology configuration table of system run time, which stores the configuration information of dumper and task, such as specifying which node to execute on, Rpc port value, and running memory.

It is a node information table, which stores information such as the resource configuration and runtime state of each node. Each node corresponds to one record in the table.

It is a dumper running state information table. Each dumper process corresponds to one record in the table.

It is a task running state information table. Each task process corresponds to one record in the table.

It is a logical database and table metadata information history table, which is used to record each logical DDL SQL and its corresponding database and table topology information.

It is a physical database and table metadata history table, which is used to record each physical DDL SQL.

It is a Binlog file information table. Each logical Binlog file corresponds to one record in the table.

It is a command information table, through which some interactions between CDC and CN are completed, such as triggering the full initialization of metadata.

It is the scheduling history table. Each time the cluster is rescheduled (such as a rebalance triggered by node downtime), a history record is written to the table.

It is the DN history table (binlog_storage_history). When PolarDB-X scales out or in horizontally, the number of DNs changes. Each change is reported to CDC through tagging, and CDC records the full change history along the timeline in this table. Each time the Dumper process starts, it queries the maximum TSO recorded in the logical Binlog files and sends it to the Task process. The Task then uses this TSO to look up, in the binlog_storage_history table, the list of DNs it should connect to.
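The TSO-based lookup described above can be modeled as a "latest change at or before this TSO" query. The sketch below is an assumption about the lookup semantics rather than the actual table access code: the class, its methods, and the DN names are hypothetical, and an in-memory TreeMap stands in for the binlog_storage_history table.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory stand-in for a timeline-bound history table.
final class StorageHistorySketch {
    // Key: TSO at which a topology change took effect. Value: DN list from then on.
    private final TreeMap<Long, List<String>> history = new TreeMap<>();

    void recordChange(long tso, List<String> dnList) {
        history.put(tso, dnList);
    }

    // Returns the DN list in effect at the given TSO, i.e. the most recent
    // change whose TSO is less than or equal to it (assumed semantics).
    List<String> dnListAt(long tso) {
        Map.Entry<Long, List<String>> entry = history.floorEntry(tso);
        return entry == null ? Collections.<String>emptyList() : entry.getValue();
    }

    public static void main(String[] args) {
        StorageHistorySketch sketch = new StorageHistorySketch();
        sketch.recordChange(100L, Arrays.asList("dn-0", "dn-1"));
        sketch.recordChange(500L, Arrays.asList("dn-0", "dn-1", "dn-2"));  // scale-out
        // A Task restarting from TSO 450 would still see the two-DN topology.
        System.out.println(sketch.dnListAt(450L));
    }
}
```

Binding the lookup to a TSO rather than to the current state lets a restarted Task resume with the topology that was valid at its restart point.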

It is a parameter change history table. Some parameters in CDC need to be bound to the timeline, and this table records their change history. Similar to binlog_storage_history, the system uses the TSO as the reference point and applies the parameters of the corresponding period.

It is the Flyway execution history table.

The following steps describe how to build a development environment from source code to facilitate secondary development or code debugging:

1. JDK 1.8 or later is required.

2. Prepare a PolarDB-X instance (start CN and DN, but do not start the CDC components). It can be deployed with pxd or by compiling and installing from source code.

3. Prepare the GalaxySQL source code and run the mvn install -D maven.test.skip=true -D env=release command to obtain the polardbx-parser package. CDC references this module.

4. Prepare the GalaxyCDC source code and adjust the configuration in dev.properties according to your environment. The configurations that may need to be adjusted include:

polardbx.instance.id

mem_size

metaDb_url

metaDb_username

metaDbPasswd

polarx_url

polarx_username

polarx_password

dnPasswordKey

5. Run mvn compile -D maven.test.skip=true -D env=dev in the GalaxyCDC root directory to compile the CDC source code.

6. Start the Daemon process by running com.aliyun.polardbx.binlog.daemon.DaemonBootStrap.

7. Start the Task process by running com.aliyun.polardbx.binlog.TaskBootStrap with the input parameter "taskName=Final".

8. Start the Dumper process by running com.aliyun.polardbx.binlog.dumper.DumperBootStrap with the input parameter "taskName=Dumper-1".

9. Execute some SQL through PolarDB-X Server and observe whether Binlog data is generated in the {HOME}/binlog directory:

create database transfer_test;

CREATE TABLE `transfer_test`.`accounts` (

`id` int(11) NOT NULL,

`balance` int(11) NOT NULL,

`gmt_created` datetime not null,

PRIMARY KEY (`id`)

) ENGINE = InnoDB DEFAULT CHARSET = utf8 dbpartition by hash(`id`) tbpartition by hash(`id`) tbpartitions 2;

INSERT INTO `transfer_test`.`accounts` (`id`,`balance`,`gmt_created`) VALUES (1,100,now());

INSERT INTO `transfer_test`.`accounts` (`id`,`balance`,`gmt_created`) VALUES (2,100,now());

INSERT INTO `transfer_test`.`accounts` (`id`,`balance`,`gmt_created`) VALUES (3,100,now());

INSERT INTO `transfer_test`.`accounts` (`id`,`balance`,`gmt_created`) VALUES (4,100,now());

INSERT INTO `transfer_test`.`accounts` (`id`,`balance`,`gmt_created`) VALUES (5,100,now());

INSERT INTO `transfer_test`.`accounts` (`id`,`balance`,`gmt_created`) VALUES (6,100,now());

INSERT INTO `transfer_test`.`accounts` (`id`,`balance`,`gmt_created`) VALUES (7,100,now());

INSERT INTO `transfer_test`.`accounts` (`id`,`balance`,`gmt_created`) VALUES (8,100,now());

INSERT INTO `transfer_test`.`accounts` (`id`,`balance`,`gmt_created`) VALUES (9,100,now());

INSERT INTO `transfer_test`.`accounts` (`id`,`balance`,`gmt_created`) VALUES (10,100,now());

10. Install MySQL on Docker (version 8.0 is recommended). An installation example is provided below:

docker run -itd --name mysql_3309 -p 3309:3306 -e MYSQL_ROOT_PASSWORD=root mysql

Log on to the docker instance: docker exec -it mysql_3309 bash.

Edit /etc/mysql/my.cnf.

a. Add the following configuration to disable GTID (the GalaxyCDC global Binlog currently does not support GTID).

gtid_mode=OFF

enforce_gtid_consistency=OFF

b. Change the server id to avoid duplication with the primary database.

server_id = 2

Restart the docker instance: docker restart mysql_3309.

11. Log on to the newly installed MySQL instance with a client, run the following commands, and then check whether the accounts table and other information have been synchronized to MySQL:

stop slave;

reset slave;

CHANGE MASTER TO

MASTER_HOST='xxx',

MASTER_USER='xxx',

MASTER_PASSWORD='xxx',

MASTER_PORT=xxx,

MASTER_LOG_FILE='binlog.000001',

MASTER_LOG_POS=4,

MASTER_CONNECT_RETRY=100;

start slave;

12. You can run the transfer program in the transfer project to test the transfer scenario. The entry class is com.aliyun.polardbx.binlog.transfer.Main. The behavior differs depending on whether TSO is enabled: with TSO enabled, strong consistency is guaranteed (consistent balances can be queried from the downstream MySQL at any time), whereas without TSO only eventual consistency is guaranteed. After stopping the test program, you can use the following SQL to verify whether the data on both sides is consistent.

`SELECT BIT_XOR(CAST(CRC32(CONCAT_WS(',', id, balance, CONCAT(ISNULL(id), ISNULL(balance)))) AS UNSIGNED)) AS checksum FROM accounts;`

The following are some core classes in the CDC stream processing flow, which form the skeleton of the CDC runtime. They are listed here to help you get started quickly.

com.aliyun.polardbx.binlog.extractor.BinlogExtractor

com.aliyun.polardbx.binlog.extractor.filter.RtRecordFilter

com.aliyun.polardbx.binlog.extractor.filter.TransactionBufferEventFilter

com.aliyun.polardbx.binlog.extractor.filter.RebuildEventLogFilter

com.aliyun.polardbx.binlog.extractor.filter.MinTSOFilter

com.aliyun.polardbx.binlog.extractor.DefaultOutputMergeSourceHandler

com.aliyun.polardbx.binlog.extractor.sort.Sorter

com.aliyun.polardbx.binlog.merge.LogEventMerger

com.aliyun.polardbx.binlog.collect.handle.TxnShuffleStageHandler

com.aliyun.polardbx.binlog.collect.handle.TxnSinkStageHandler

com.aliyun.polardbx.binlog.transmit.LogEventTransmitter

com.aliyun.polardbx.binlog.storage.LogEventStorage

com.aliyun.polardbx.binlog.dumper.dump.logfile.LogFileGenerator

com.aliyun.polardbx.binlog.dumper.dump.logfile.LogFileCopier

com.aliyun.polardbx.binlog.daemon.schedule.TopologyWatcher

This article introduces the code structure of GalaxyCDC, lists its metadata tables, walks through building a local development and debugging environment, and names the core functional components. We hope that, by practicing with this introduction and the information in the appendix, you will become more familiar with the GalaxyCDC code. In follow-up articles, we will focus on individual topics and provide a more detailed interpretation of the source code.
