Before reading this article, we recommend reading Life of SQL from the Interpretation Series of PolarDB-X Source Codes to learn about the SQL execution process and the components of GalaxySQL (CN). This article then walks through the steps that implement Insert to deepen your understanding of each component. The processing of Insert statements can be divided into parsing, verification, optimization, execution, and physical execution (on GalaxyEngine). This article traces a simple Insert statement through these stages by debugging. Table creation statement:

# sbtest, a simple table in PolarDB-X that is sharded by database and by table

CREATE TABLE `sbtest` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`k` int(11) NOT NULL DEFAULT '0',

`c` char(120) NOT NULL DEFAULT '',

`pad` char(60) NOT NULL DEFAULT '',

PRIMARY KEY (`id`)

)dbpartition by hash(`id`) tbpartition by hash(`id`) tbpartitions 2;

# Debug Statements

insert into sbtest(id) values(100);

After connecting to PolarDB-X, execute the Insert statement insert into sbtest(id) values(100);. PolarDB-X receives the statement as a string and starts to execute it in TConnection#executeSQL:

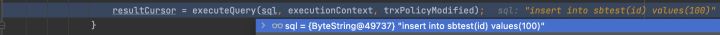

Before executing the SQL statement, PolarDB-X stores the parameters, configurations, and other context information of the statement in the ExecutionContext. This variable accompanies the SQL statement through parsing, verification, the optimizer, and the executor until the statement is sent to GalaxyEngine (DN). To execute the SQL statement, PolarDB-X first needs to obtain the execution plan. See TConnection#executeQuery:

ExecutionPlan plan = Planner.getInstance().plan(sql, executionContext);

PolarDB-X has a built-in PlanCache and first looks up the execution plan there, which avoids repeating parsing, verification, and optimization for the same SQL every time. The cache is not keyed on the raw SQL string. Instead, PolarDB-X generates a SqlParameterized, as shown in the following figures (Planner#plan), and caches the SQL template. The SQL variable in this class (INSERT INTO sbtest (id)\nVALUES (?)) applies to all similar statements: each ? stands for a value that can be filled in, and statements that differ only in these values are treated as the same type of SQL statement.

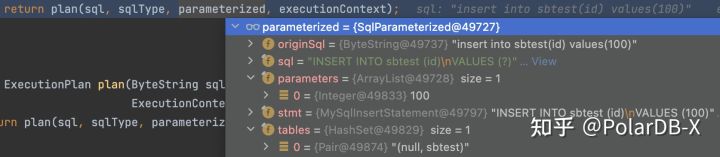

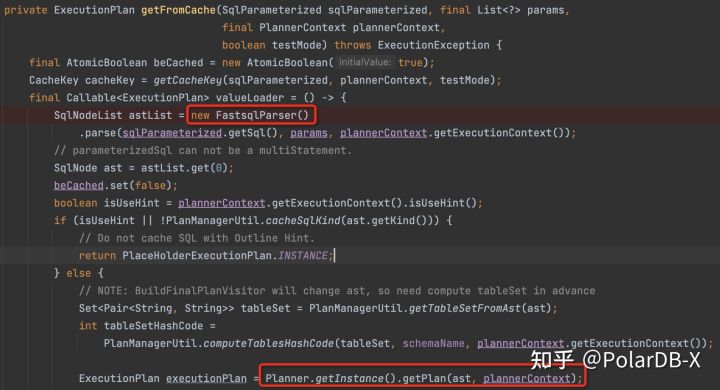
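The idea of caching by SQL template rather than by raw string can be illustrated with a minimal sketch. Everything here is hypothetical: PlanCacheSketch, its regex-based parameterize method, and the string stand-in for a plan are assumptions made for illustration, not the PolarDB-X PlanCache or SqlParameterized classes, which parameterize SQL with a real parser.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch, NOT the PolarDB-X PlanCache or SqlParameterized classes.
final class PlanCacheSketch {
    // Matches single-quoted strings (no escape handling) and standalone integers.
    private static final Pattern LITERAL = Pattern.compile("'[^']*'|\\b\\d+\\b");

    /** A SQL template plus the literal values extracted from the statement. */
    record Parameterized(String template, List<String> params) {}

    private final Map<String, String> cache = new ConcurrentHashMap<>();
    private final AtomicInteger planBuilds = new AtomicInteger();

    /** Replaces every literal with "?" and collects the literals in order. */
    static Parameterized parameterize(String sql) {
        List<String> params = new ArrayList<>();
        Matcher m = LITERAL.matcher(sql);
        StringBuilder template = new StringBuilder();
        while (m.find()) {
            params.add(m.group());
            m.appendReplacement(template, "?");
        }
        m.appendTail(template);
        return new Parameterized(template.toString(), params);
    }

    /** Looks up the plan by template; builds it only on a cache miss. */
    String getPlan(String sql) {
        Parameterized p = parameterize(sql);
        return cache.computeIfAbsent(p.template(), t -> {
            planBuilds.incrementAndGet();   // stands in for parse + validate + optimize
            return "PLAN[" + t + "]";       // a string stands in for the real plan
        });
    }

    int planBuilds() {
        return planBuilds.get();
    }

    public static void main(String[] args) {
        PlanCacheSketch planCache = new PlanCacheSketch();
        planCache.getPlan("insert into sbtest(id) values(100);");
        planCache.getPlan("insert into sbtest(id) values(200);");
        System.out.println("plans built: " + planCache.planBuilds());
    }
}
```

In this sketch, both statements map to the same template, so the plan is built once and the second call is served from the cache.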

If the plan is not found in PlanCache, a new execution plan needs to be generated. See PlanCache#getFromCache:

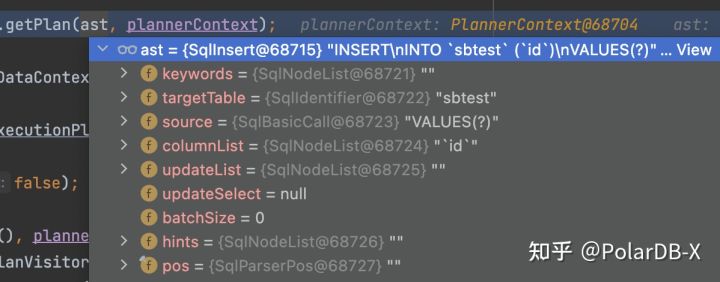

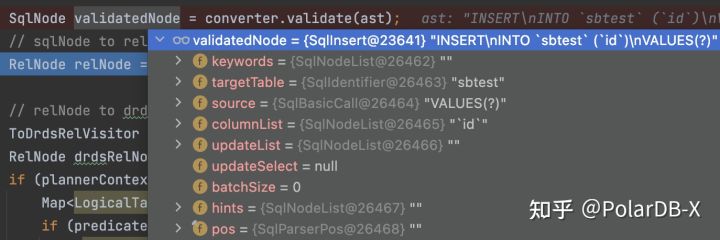

First, FastsqlParser parses the string into an abstract syntax tree, checking for syntax errors along the way, and generates a SqlNode. This SQL is an Insert statement, so it is parsed into the SqlInsert class, and the execution plan is then derived from the abstract syntax tree. The following are the specific SqlInsert contents:

Let's briefly explain several variables:

So far, the transformation of the string SQL statement to SqlNode has been completed, which means the parsing part has been completed.

The verification process checks whether the semantics of SqlNode are correct, such as whether the table exists, whether the column exists, and whether the type is correct. The specific entry is in the Planner#getPlan function:

SqlNode validatedNode = converter.validate(ast);

This verifies the validity of the SQL. PolarDB-X reuses the Apache Calcite framework, so the implementation of validate largely follows Calcite's overall structure, including its two concepts of Scope and Namespace. Building on this, the validation entry for the SqlInsert type is SqlValidatorImpl#validateInsert(SqlInsert insert):

...

final SqlValidatorNamespace targetNamespace = getNamespace(insert);

validateNamespace(targetNamespace, unknownType);

...

final SqlNode source = insert.getSource();

if (source instanceof SqlSelect) {

final SqlSelect sqlSelect = (SqlSelect) source;

validateSelect(sqlSelect, targetRowType);

} else {

final SqlValidatorScope scope = scopes.get(source);

validateQuery(source, scope, targetRowType);

}

...

In general, this process checks whether the insert into sbtest part of the statement is correct and whether SqlInsert.source is valid. For this SQL statement the source is Values, so the validator checks whether the Values are valid. For an INSERT... SELECT statement, the source is a SqlSelect, and the validator checks whether the Select statement is valid. If no error is reported, the semantics of the SQL statement are correct. After verification passes, the SQL statement is still a SqlInsert.

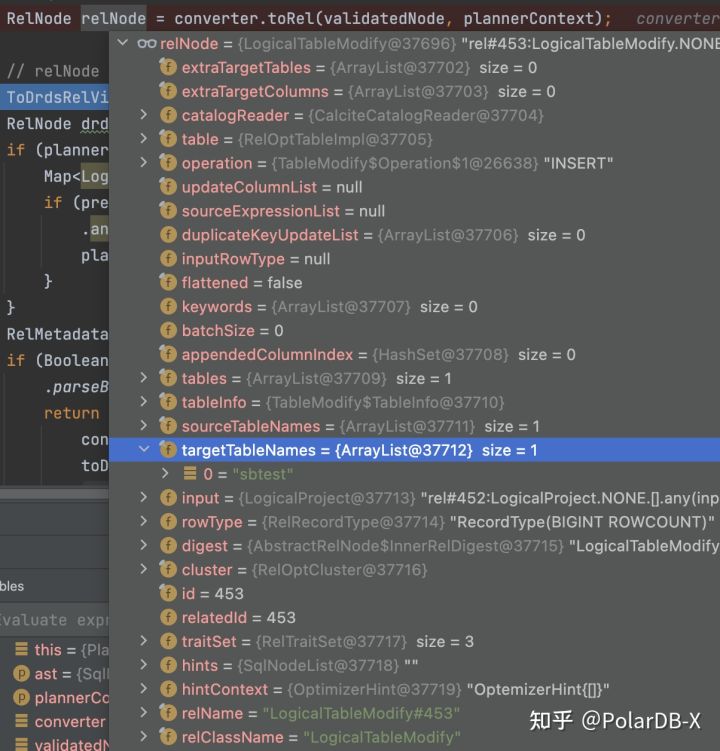
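To make the kind of semantic check described above concrete, here is a toy sketch. It is an assumption-laden illustration only: InsertValidationSketch, its hard-coded SCHEMA map, and validateInsert are hypothetical names and do not reflect Calcite's SqlValidatorImpl, which works with scopes, namespaces, and row types.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch, NOT Calcite's SqlValidatorImpl.
final class InsertValidationSketch {
    // Assumed in-memory catalog: table name -> column names (from the DDL above).
    static final Map<String, Set<String>> SCHEMA =
        Map.of("sbtest", Set.of("id", "k", "c", "pad"));

    /** Throws IllegalArgumentException if the insert is semantically invalid. */
    static void validateInsert(String table, List<String> columns,
                               List<? extends List<?>> rows) {
        // 1. The target table must exist.
        Set<String> knownColumns = SCHEMA.get(table);
        if (knownColumns == null) {
            throw new IllegalArgumentException("Unknown table: " + table);
        }
        // 2. Every target column must exist in that table.
        for (String column : columns) {
            if (!knownColumns.contains(column)) {
                throw new IllegalArgumentException("Unknown column: " + column);
            }
        }
        // 3. Every VALUES row must supply one value per target column.
        for (List<?> row : rows) {
            if (row.size() != columns.size()) {
                throw new IllegalArgumentException(
                    "Expected " + columns.size() + " values but got " + row.size());
            }
        }
    }

    public static void main(String[] args) {
        // insert into sbtest(id) values(100);
        validateInsert("sbtest", List.of("id"), List.of(List.of(100)));
        System.out.println("statement is semantically valid");
    }
}
```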

Before entering the optimizer, the SqlNode (SqlInsert) needs to be converted into a RelNode, that is, the SQL syntax tree is converted into relational expressions. The entry is Planner#getPlan:

RelNode relNode = converter.toRel(validatedNode, plannerContext);

The specific conversion process is in SqlConverter#toRel:

...

final SqlToRelConverter sqlToRelConverter = new TddlSqlToRelConverter(...);

RelRoot root = sqlToRelConverter.convertQuery(validatedNode, false, true);

...

The TddlSqlToRelConverter class is the PolarDB-X converter, which inherits from Calcite's SqlToRelConverter class. SqlInsert is converted in TddlSqlToRelConverter#convertInsert(SqlInsert call):

RelNode relNode = super.convertInsert(call);

if (relNode instanceof TableModify) {

...

}

As the code shows, SqlToRelConverter#convertInsert is called, and SqlInsert is converted into a LogicalTableModify. The following are the contents of this class:

Several variables can be noticed:

operation: operation type;

input: the input source. For this SQL statement, it is the Values.

PolarDB-X defines its own RelNode types, so the RelNode is converted into a PolarDB-X-defined one. The entry is Planner#getPlan:

ToDrdsRelVisitor toDrdsRelVisitor = new ToDrdsRelVisitor(validatedNode, plannerContext);

RelNode drdsRelNode = relNode.accept(toDrdsRelVisitor);

The conversion process is in ToDrdsRelVisitor#visit(RelNode other):

if ((other instanceof LogicalTableModify)) {

...

if (operation == TableModify.Operation.INSERT || ...) {

LogicalInsert logicalInsert = new LogicalInsert(modify);

...

}

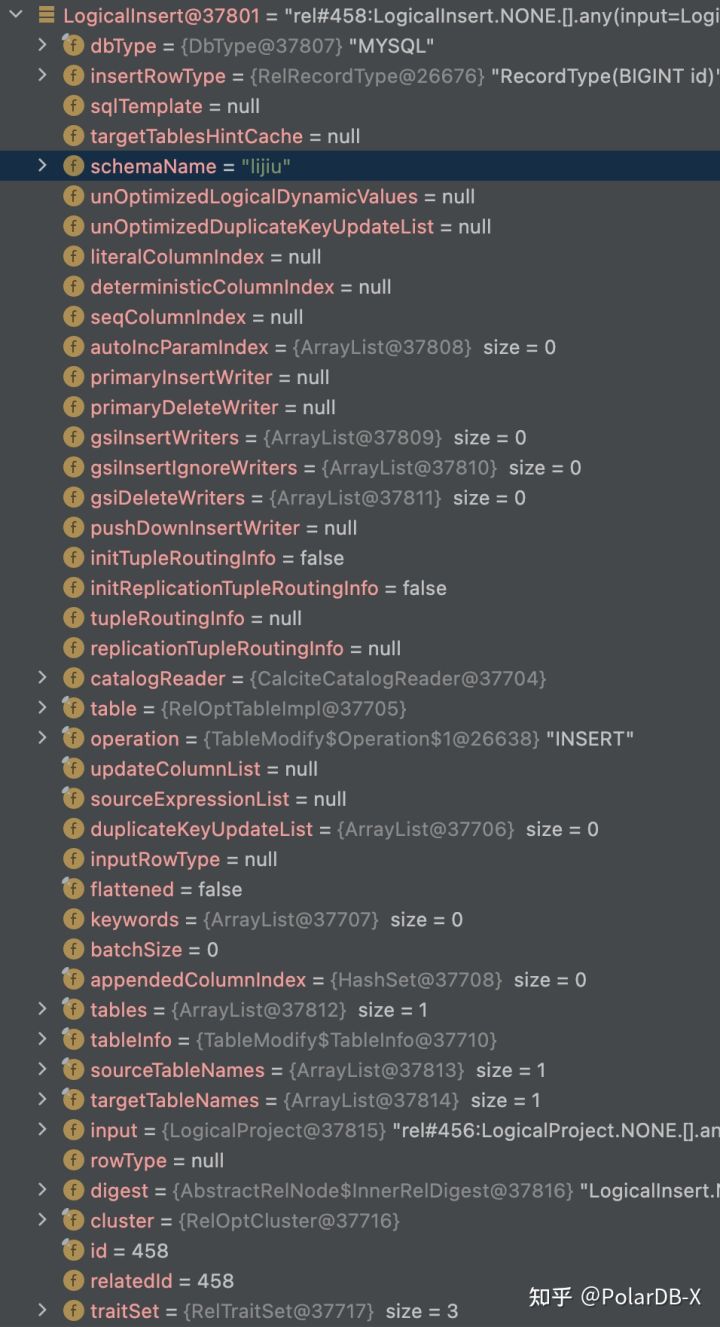

}The Insert type is converted to LogicalInsert, which is the internal RelNode of the PolarDB-X. The execution is based on this type. The following are the contents of LogicalInsert (some variables are not in the screenshot):

Most variables are the same as in LogicalTableModify, with GSI-related variables specific to PolarDB-X added. Next comes the optimizer stage. The optimizer's code is in Planner#sqlRewriteAndPlanEnumerate:

private RelNode sqlRewriteAndPlanEnumerate(RelNode input, PlannerContext plannerContext) {

CalcitePlanOptimizerTrace.getOptimizerTracer().get().addSnapshot("Start", input, plannerContext);

//RBO Optimization

RelNode logicalOutput = optimizeBySqlWriter(input, plannerContext);

CalcitePlanOptimizerTrace.getOptimizerTracer().get()

.addSnapshot("PlanEnumerate", logicalOutput, plannerContext);

//CBO Optimization

RelNode bestPlan = optimizeByPlanEnumerator(logicalOutput, plannerContext);

// finally we should clear the planner to release memory

bestPlan.getCluster().getPlanner().clear();

bestPlan.getCluster().invalidateMetadataQuery();

return bestPlan;

}

For Insert, optimization happens mainly in the RBO stage, which applies a set of defined rules; the CBO rules change Insert plans very little. The RBO rule to focus on is OptimizeLogicalInsertRule, which determines the execution plan of the SQL based on information from GMS (PolarDB-X Metadata Management). LogicalInsert may be converted into other RelNode types for execution, which makes it easier to distinguish different SQL execution methods. First, the SQL execution strategy is determined. There are three main types:

public enum ExecutionStrategy {

/**

* Foreach row, exists only one target partition.

* Pushdown origin statement, with function call not pushable (like sequence call) replaced by RexCallParam.

* Typical for single table and partitioned table without gsi.

*/

PUSHDOWN,

/**

* Foreach row, might exists more than one target partition.

* Pushdown origin statement, with nondeterministic function call replaced by RexCallParam.

* Typical for broadcast table.

*/

DETERMINISTIC_PUSHDOWN,

/**

* Foreach row, might exists more than one target partition, and data in different target partitions might be different.

* Select then execute, with all function call replaced by RexCallParam.

* Typical for table with gsi or table are doing scale out.

*/

LOGICAL;

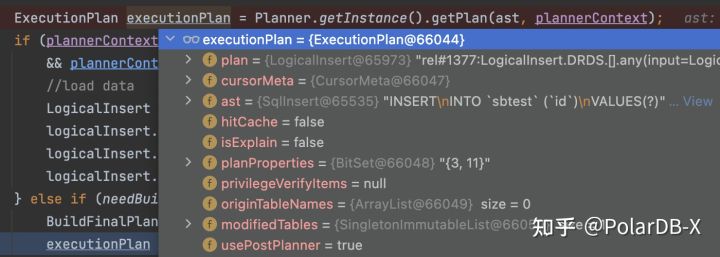

};Since this SQL is simple, the policy is PUSHDOWN, and the processing process is simple. Then, InsertWriter is generated. This class is responsible for generating SQL statements that are sent to DN and stored in LogicalInsert. The processing rules of OptimizeLogicalInsertRule are more detailed. Anyone interested can check the onMatch method personally. After the optimizer, it is still a RelNode of the LogicalInsert class. This means the optimizer has completed execution. An execution plan is generated in PlanCache#getFromCache, as shown in the following figure (not all variables are in the figure):

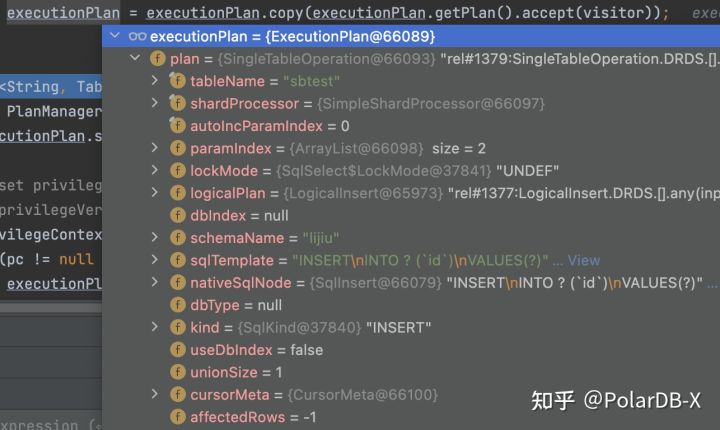
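The "Typical for" notes in the ExecutionStrategy comments suggest a simple decision order, sketched below. This is a deliberate simplification: StrategySketch and its three boolean inputs are hypothetical, and the actual decision in OptimizeLogicalInsertRule considers more conditions than are shown here.

```java
// Hypothetical sketch based only on the "Typical for" notes in the enum comments;
// NOT the decision logic of OptimizeLogicalInsertRule.
final class StrategySketch {
    enum Strategy { PUSHDOWN, DETERMINISTIC_PUSHDOWN, LOGICAL }

    static Strategy choose(boolean hasGsi, boolean scalingOut, boolean broadcast) {
        // Tables with a GSI, or tables being scaled out, may need different data
        // in different target partitions, so the statement is handled logically.
        if (hasGsi || scalingOut) {
            return Strategy.LOGICAL;
        }
        // A broadcast table writes the same row to several partitions, so
        // nondeterministic function calls must be evaluated before pushdown.
        if (broadcast) {
            return Strategy.DETERMINISTIC_PUSHDOWN;
        }
        // Single tables and partitioned tables without a GSI: one target per row.
        return Strategy.PUSHDOWN;
    }

    public static void main(String[] args) {
        // sbtest is a partitioned table without a GSI.
        System.out.println(choose(false, false, false));
    }
}
```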

ExecutionPlan.plan is the execution plan. PolarDB-X also rewrites the execution plan for a simple Insert. The code is in PlanCache#getFromCache:

BuildFinalPlanVisitor visitor = new BuildFinalPlanVisitor(executionPlan.getAst(), plannerContext);

executionPlan = executionPlan.copy(executionPlan.getPlan().accept(visitor));

For the statement insert into sbtest(id) values(100);, BuildFinalPlanVisitor#buildNewPlanForInsert(LogicalInsert logicalInsert, ExecutionContext ec) is executed. The Insert statement is relatively simple: it has only one value row, whose column is both the shard key and an auto_increment column. The target DN shard can be determined from this single shard key value, without any further work on the CN side, so the execution plan is simplified. BuildFinalPlanVisitor#buildSingleTableInsert converts the plan to a SingleTableOperation and saves the database and table sharding rules. The final execution plan is listed below:

The execution plan becomes a SingleTableOperation, and plan generation is complete.
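Why a single shard key value is enough to pick the target shard can be shown with a small routing sketch. The modulo scheme, the database count of 4, and the names ShardRoutingSketch, Target, and route are all assumptions for illustration; the sharding rules saved in SingleTableOperation may compute targets differently.

```java
// Hypothetical sketch; the modulo scheme and the database count are assumptions,
// NOT the sharding rule that PolarDB-X actually saves in SingleTableOperation.
final class ShardRoutingSketch {
    /** The physical database index and the global physical table index. */
    record Target(int dbIndex, int tableIndex) {}

    static Target route(long shardKey, int dbCount, int tablesPerDb) {
        int totalTables = dbCount * tablesPerDb;
        // floorMod keeps the index non-negative even for negative keys.
        int tableIndex = (int) Math.floorMod(shardKey, (long) totalTables);
        // Consecutive table indexes are assumed to live in the same database.
        int dbIndex = tableIndex / tablesPerDb;
        return new Target(dbIndex, tableIndex);
    }

    public static void main(String[] args) {
        // id = 100, an assumed 4 physical databases, tbpartitions 2
        System.out.println(route(100L, 4, 2));
    }
}
```

Because the function depends only on the shard key and fixed topology values, the CN can compute the target once and send the statement to that shard directly.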

After an SQL statement generates an execution plan, it is executed by the executor, whose entry is in TConnection#executeQuery:

ResultCursor resultCursor = executor.execute(plan, executionContext);

Then, ExecutorHelper#execute executes ExecutionPlan.plan, which is the SingleTableOperation from the previous step. Execution policies include CURSOR, TP_LOCAL, AP_LOCAL, and MPP; Insert statements basically use CURSOR. The plan is then processed by the Handler that corresponds to its type. See the CommandHandlerFactoryMyImp class for more information. For example, SingleTableOperation maps to MySingleTableModifyHandler and LogicalInsert maps to LogicalInsertHandler. The Handler generally returns a Cursor, and the Cursor invokes the actual execution. Calling Cursor.next returns the result, which for an Insert statement is the number of affected rows. This SQL creates a MyPhyTableModifyCursor, whose entry is MySingleTableModifyHandler#handleInner:

...

MyPhyTableModifyCursor modifyCursor = (MyPhyTableModifyCursor) repo.getCursorFactory().repoCursor(executionContext, logicalPlan);

...

affectRows = modifyCursor.batchUpdate();

...

Create a MyPhyTableModifyCursor based on ExecutionContext and SingleTableOperation and then execute it:

public int[] batchUpdate() {

try {

return handler.executeUpdate(this.plan);

} catch (SQLException e) {

throw GeneralUtil.nestedException(e);

}

}

The plan here is the SingleTableOperation. The handler is MyJdbcHandler, which handles the interaction between the CN and DN of PolarDB-X and can be regarded as the handler for executing the physical plan. It generates the real physical SQL according to the plan and sends it to the DN for execution. Since this SQL is simple, CN does not need to do much processing. Let's take another example of the Insert statement: insert into sbtest(k) values(101),(102);. After the optimizer, the execution plan of this statement is LogicalInsert, as shown in the following figure:

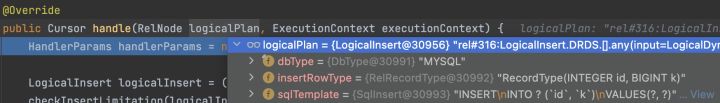

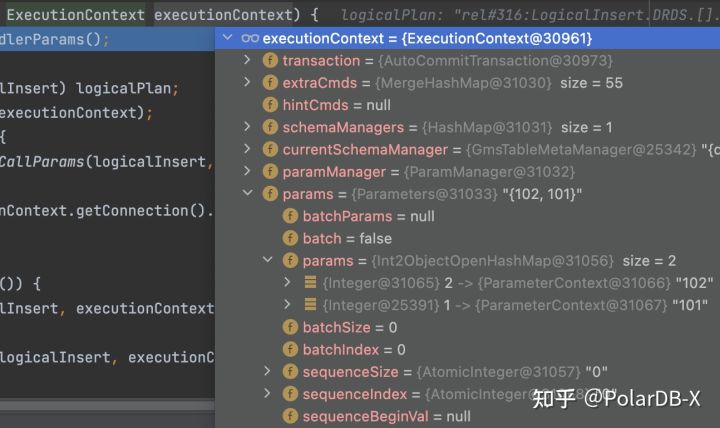

We can see that the sqlTemplate is INSERT\nINTO ?(id, k )\nVALUES(?, ?). The table name may need to be replaced by a physical table name, and a column id is added because that column is auto_increment. A global sequence table records the value of the column and ensures global uniqueness. The inserted values are retained in the ExecutionContext params, as shown in the following figure:

The value of the id column is obtained only when the physical execution plan is generated. The LogicalInsert plan is executed by the corresponding LogicalInsertHandler. Execution process:

public Cursor handle(RelNode logicalPlan, ExecutionContext executionContext){

...

LogicalInsert logicalInsert = (LogicalInsert) logicalPlan;

...

if (!logicalInsert.isSourceSelect()) {

affectRows = doExecute(logicalInsert, executionContext, handlerParams);

} else {

affectRows = selectForInsert(logicalInsert, executionContext, handlerParams);

}

...

}

Different execution methods are selected based on whether the source is a Select statement. The specific execution process is in LogicalInsertHandler#executeInsert:

...

// Generate a physical execution plan for the primary table.

final InsertWriter primaryWriter = logicalInsert.getPrimaryInsertWriter();

List<RelNode> inputs = primaryWriter.getInput(executionContext);

...

// If a GSI exists, it will generate a physical execution plan for the GSI table.

final List<InsertWriter> gsiWriters = logicalInsert.getGsiInsertWriters();

gsiWriters.stream().map(gsiWriter -> gsiWriter.getInput(executionContext))...;

...

// Execute all physical execution plans.

final int totalAffectRows = executePhysicalPlan(allPhyPlan, executionContext, schemaName, isBroadcast);

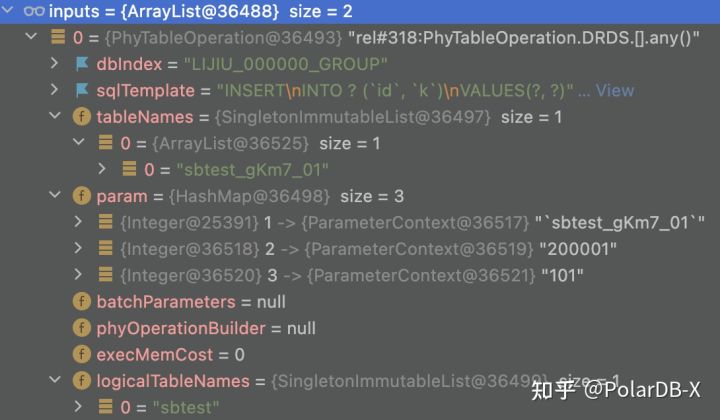

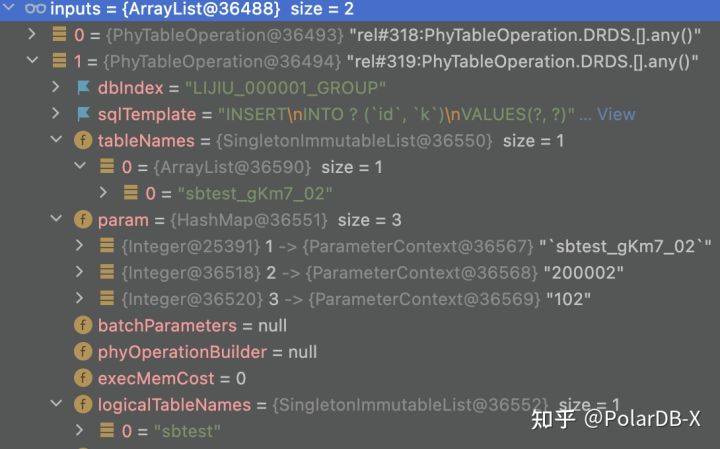

...When a physical execution plan is generated for the primary table, the value of the id is obtained first. Since the id is a shard key, the two values are located on different physical database shards based on the shard key. Two physical execution plans are generated:

Here, dbIndex is the physical database name and tableNames is the physical table name. Param holds the parameter values of sqlTemplate; filling them into the template yields a complete SQL statement. Then, all physical execution plans are executed to complete the SQL execution.
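The way one logical multi-row Insert turns into several physical plans can be sketched as follows. All names are hypothetical (PhysicalPlanSketch, the AtomicLong used as a sequence, the sbtest_N table naming, and the modulo routing), so this illustrates the grouping idea only and is not the InsertWriter implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical sketch, NOT the PolarDB-X InsertWriter.
final class PhysicalPlanSketch {
    /** One physical plan: a target table, the shared template, and its rows. */
    record PhysicalPlan(String tableName, String sqlTemplate, List<List<Object>> params) {}

    static final String SQL_TEMPLATE = "INSERT INTO ?(id, k) VALUES(?, ?)";

    static List<PhysicalPlan> build(List<Integer> kValues, AtomicLong sequence, int shardCount) {
        // TreeMap keeps the shards in ascending order for a stable result.
        Map<Integer, List<List<Object>>> rowsByShard = new TreeMap<>();
        for (Integer k : kValues) {
            // The id is assigned only now, while the physical plan is being built.
            long id = sequence.getAndIncrement();
            // Assumed routing: shard key modulo the number of shards.
            int shard = (int) Math.floorMod(id, (long) shardCount);
            rowsByShard.computeIfAbsent(shard, s -> new ArrayList<>())
                       .add(List.<Object>of(id, k));
        }
        List<PhysicalPlan> plans = new ArrayList<>();
        // Assumed naming: logical table name plus shard index.
        rowsByShard.forEach((shard, rows) ->
            plans.add(new PhysicalPlan("sbtest_" + shard, SQL_TEMPLATE, rows)));
        return plans;
    }

    public static void main(String[] args) {
        // insert into sbtest(k) values(101),(102); with an assumed sequence start of 1
        build(List.of(101, 102), new AtomicLong(1), 2).forEach(System.out::println);
    }
}
```

With two consecutive ids and two shards, the rows land on different shards, which mirrors the two physical execution plans described above.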

In PolarDB-X, the interaction between CN and DN is in MyJdbcHandler. Let's take SingleTableOperation as an example to see the specific interaction process:

public int[] executeUpdate(BaseQueryOperation phyTableModify) throws SQLException {

...

// Obtain the database name and parameters of the physical execution plan.

Pair<String, Map<Integer, ParameterContext>> dbIndexAndParam =

phyTableModify.getDbIndexAndParam(executionContext.getParams() == null ? null : executionContext.getParams()

.getCurrentParameter(), executionContext);

...

// Obtain the connection based on the database name.

connection = getPhyConnection(transaction, rw, groupName);

...

// Form a string SQL statement based on parameters.

String sql = buildSql(sqlAndParam.sql, executionContext);

...

// Create prepareStatement based on the connection.

ps = prepareStatement(sql, connection, executionContext, isInsert, false);

...

// Set parameters.

ParameterMethod.setParameters(ps, sqlAndParam.param);

...

// Execute.

affectRow = ((PreparedStatement) ps).executeUpdate();

...

}

The physical execution plan is sent to the DN for execution. After execution completes, affectRow is returned to the executor, and the result is returned to the user. At this point, the execution of a complete SQL statement is finished.

This article walked through how PolarDB-X processes Insert statements in parsing, verification, optimization, and execution by debugging simple Insert statements. Insert statements have many special usages that this article does not cover. Interested readers can consult the corresponding code.

