By Qwen Team

We are excited to open-source the "Powerful", "Diverse", and "Practical" Qwen2.5-Coder series, as part of our continued commitment to advancing open CodeLLMs.

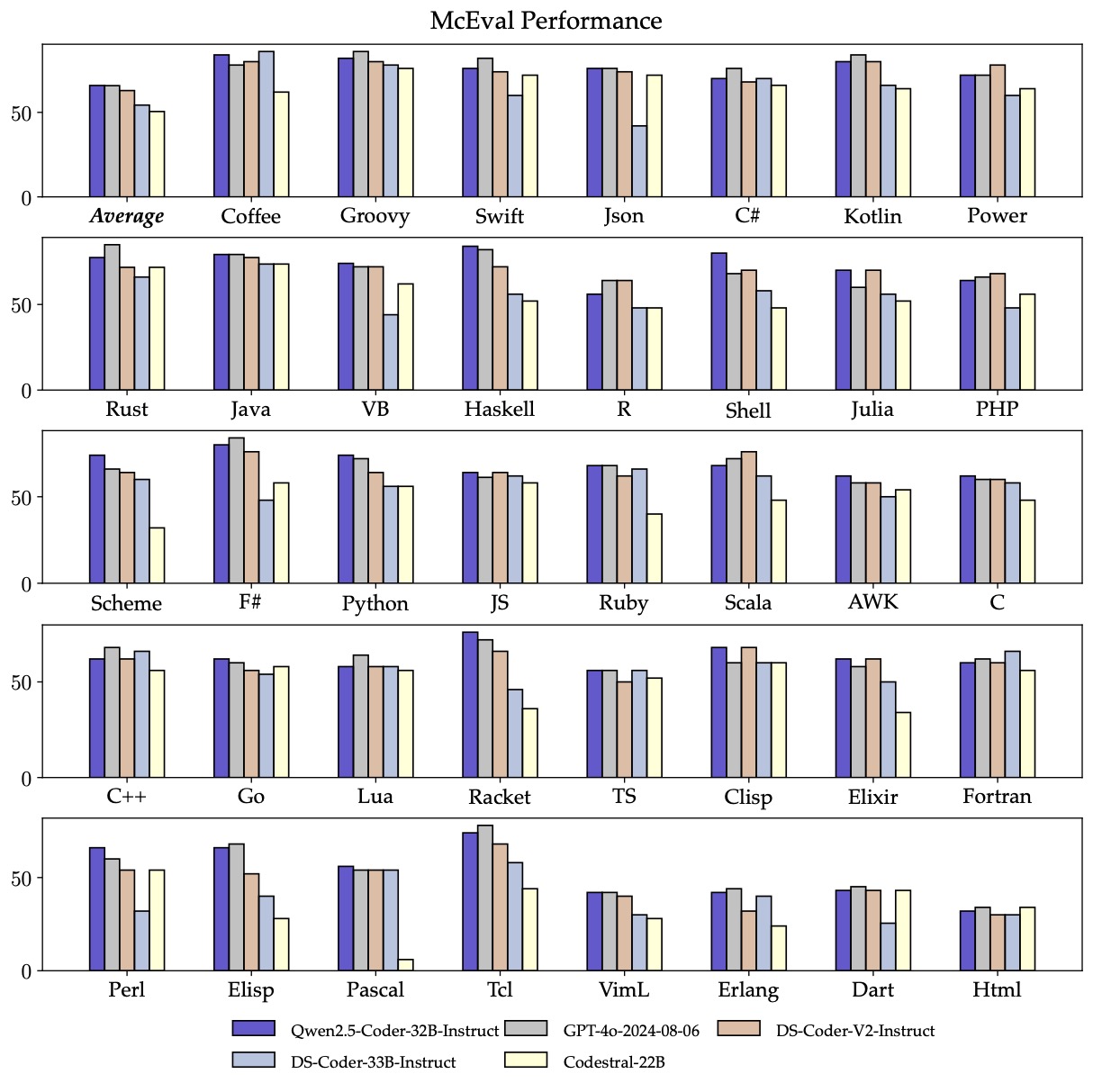

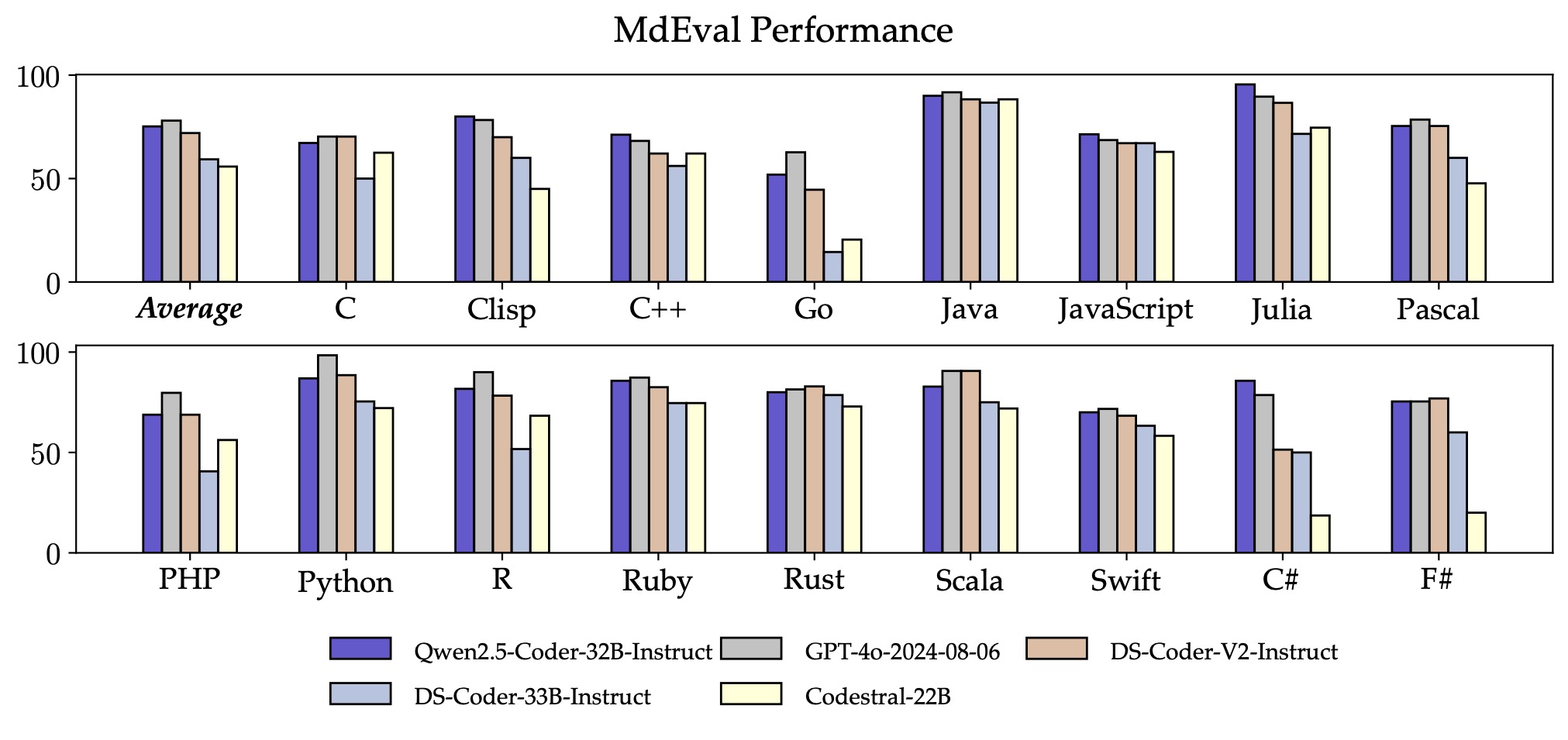

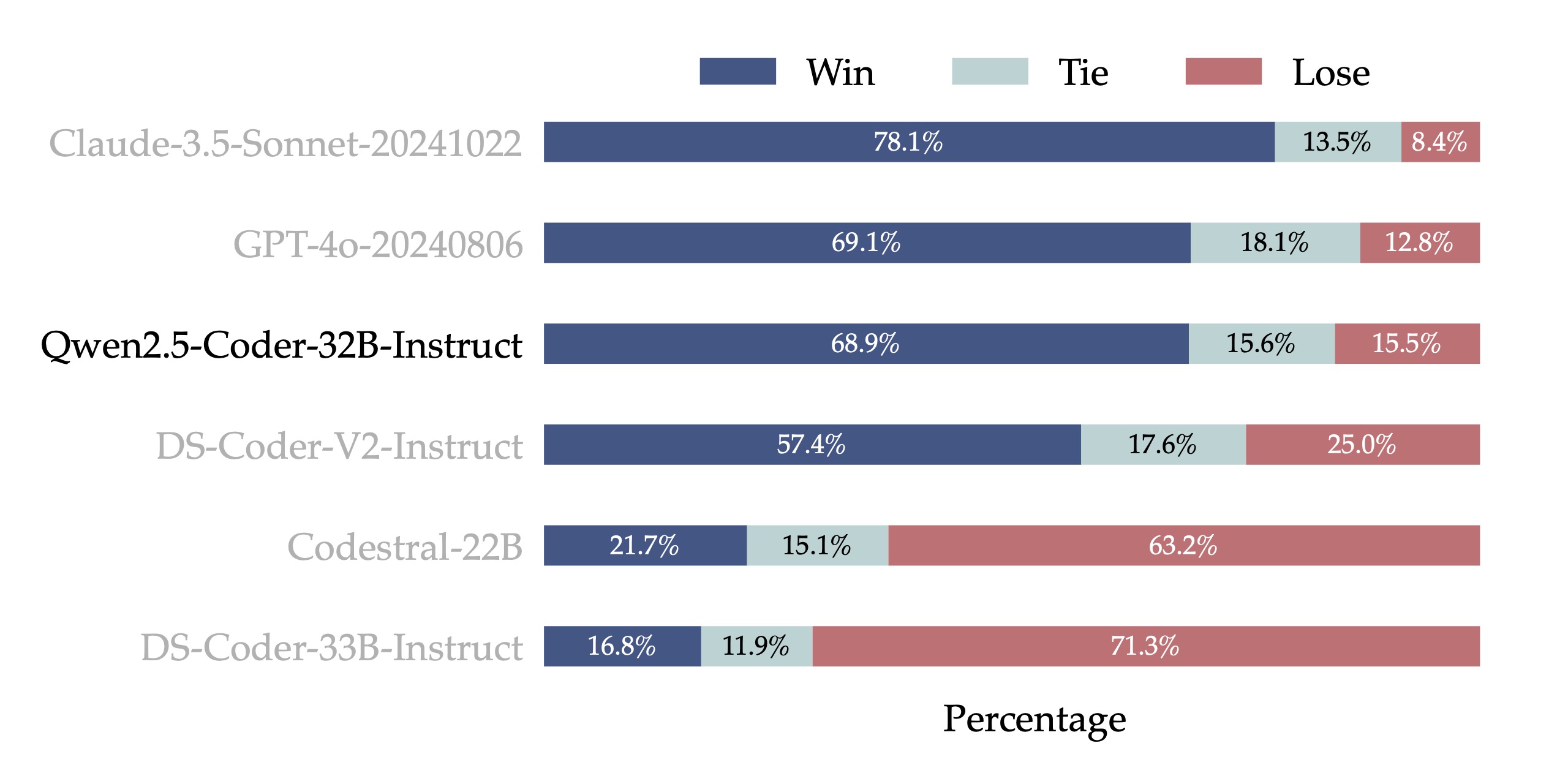

Additionally, the multi-language code repair capabilities of Qwen2.5-Coder-32B-Instruct remain impressive, helping users understand and modify code in languages they already know while significantly reducing the cost of learning unfamiliar ones. Like McEval, MdEval is a multi-language benchmark, in this case for code repair; Qwen2.5-Coder-32B-Instruct scored 75.2 on it, ranking first among all open-source models.

This release open-sources six model sizes (0.5B, 1.5B, 3B, 7B, 14B, and 32B), which not only meets the needs of developers working under different resource constraints but also gives the research community a good experimental platform. The following table provides detailed model information:

| Models | Params | Non-Emb Params | Layers | Heads (KV) | Tie Embedding | Context Length | License |
|---|---|---|---|---|---|---|---|
| Qwen2.5-Coder-0.5B | 0.49B | 0.36B | 24 | 14 / 2 | Yes | 32K | Apache 2.0 |
| Qwen2.5-Coder-1.5B | 1.54B | 1.31B | 28 | 12 / 2 | Yes | 32K | Apache 2.0 |
| Qwen2.5-Coder-3B | 3.09B | 2.77B | 36 | 16 / 2 | Yes | 32K | Qwen Research |
| Qwen2.5-Coder-7B | 7.61B | 6.53B | 28 | 28 / 4 | No | 128K | Apache 2.0 |
| Qwen2.5-Coder-14B | 14.7B | 13.1B | 48 | 40 / 8 | No | 128K | Apache 2.0 |
| Qwen2.5-Coder-32B | 32.5B | 31.0B | 64 | 40 / 8 | No | 128K | Apache 2.0 |
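To make the table easier to read, here is a small sketch that derives two quantities from it: the embedding parameter count (Params minus Non-Emb Params) and the number of query heads that share each key-value head. It assumes the "Heads (KV)" column lists query heads / key-value heads; the `ModelSpec` class and helper names are illustrative and not part of any Qwen library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    """One row of the model table (parameter counts in billions)."""
    name: str
    params_b: float
    non_emb_params_b: float
    q_heads: int   # assumed: first number in the "Heads (KV)" column
    kv_heads: int  # assumed: second number in the "Heads (KV)" column


# Values copied from the table above.
SPECS = [
    ModelSpec("Qwen2.5-Coder-0.5B", 0.49, 0.36, 14, 2),
    ModelSpec("Qwen2.5-Coder-1.5B", 1.54, 1.31, 12, 2),
    ModelSpec("Qwen2.5-Coder-3B", 3.09, 2.77, 16, 2),
    ModelSpec("Qwen2.5-Coder-7B", 7.61, 6.53, 28, 4),
    ModelSpec("Qwen2.5-Coder-14B", 14.7, 13.1, 40, 8),
    ModelSpec("Qwen2.5-Coder-32B", 32.5, 31.0, 40, 8),
]


def embedding_params_b(spec: ModelSpec) -> float:
    """Embedding parameters in billions: total minus non-embedding."""
    return round(spec.params_b - spec.non_emb_params_b, 2)


def queries_per_kv_head(spec: ModelSpec) -> int:
    """How many query heads share one key-value head."""
    return spec.q_heads // spec.kv_heads


if __name__ == "__main__":
    for spec in SPECS:
        print(
            f"{spec.name}: {embedding_params_b(spec)}B embedding params, "
            f"{queries_per_kv_head(spec)} query heads per KV head"
        )
```

The embedding share is proportionally largest for the smallest models, which is consistent with the three smallest sizes being the ones that tie their embeddings.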

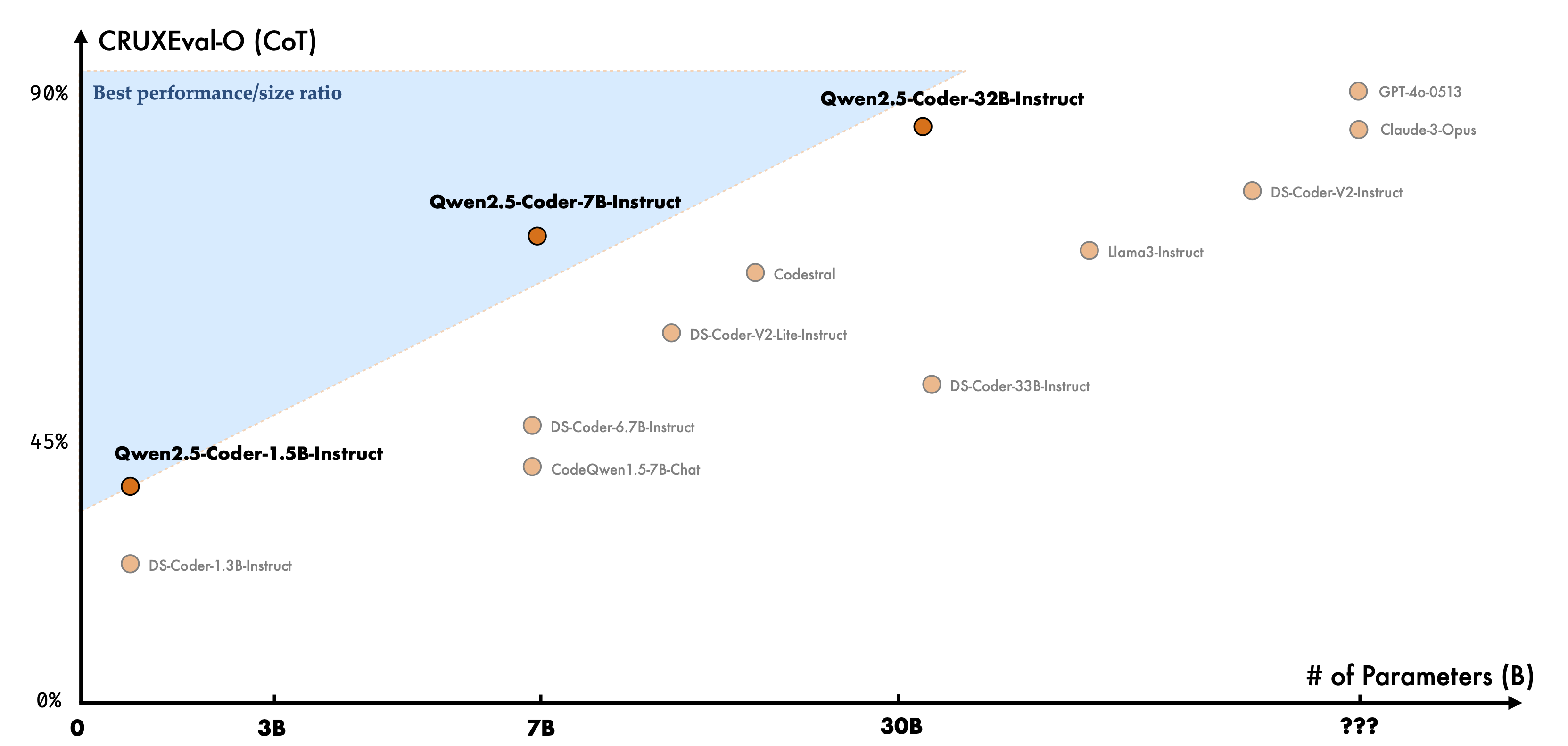

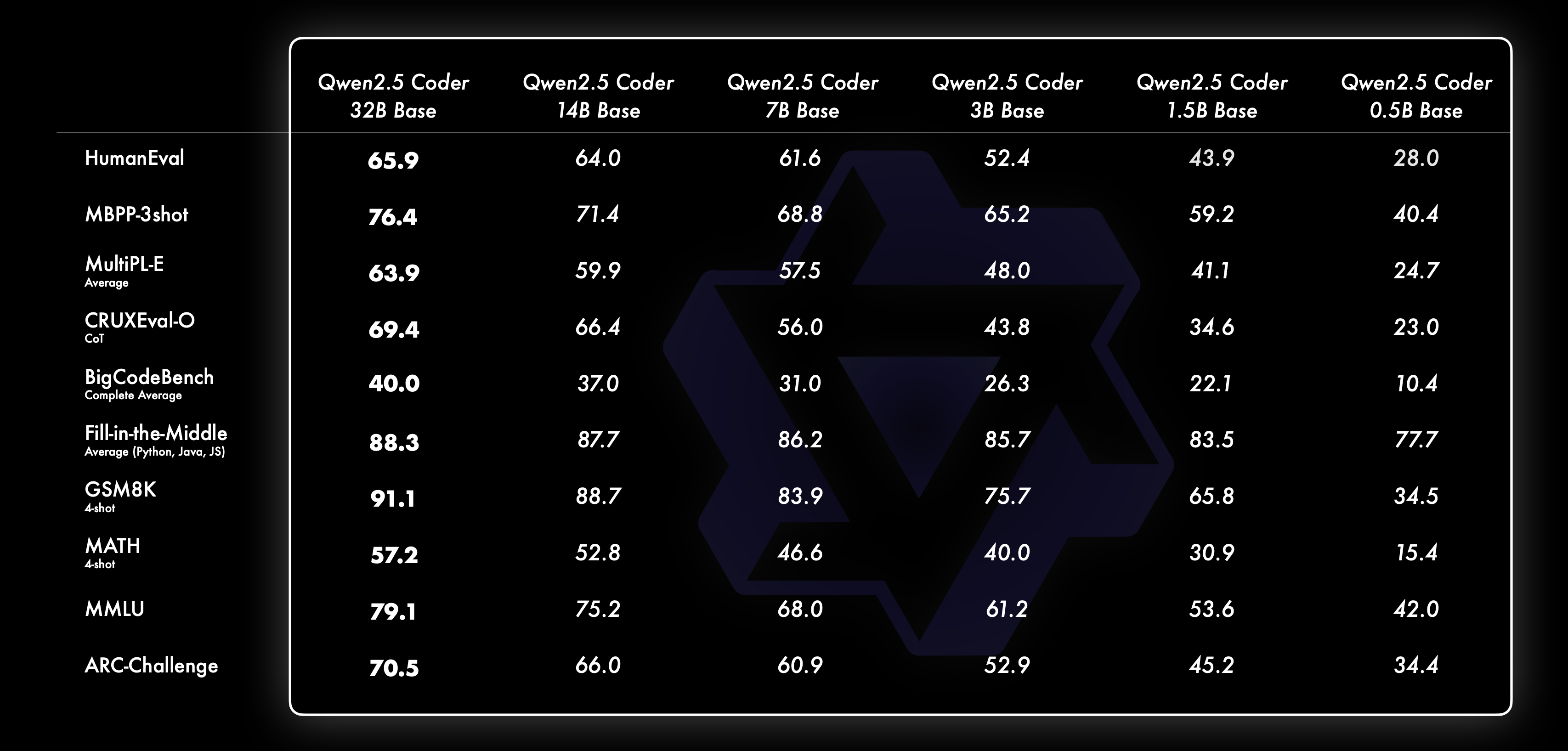

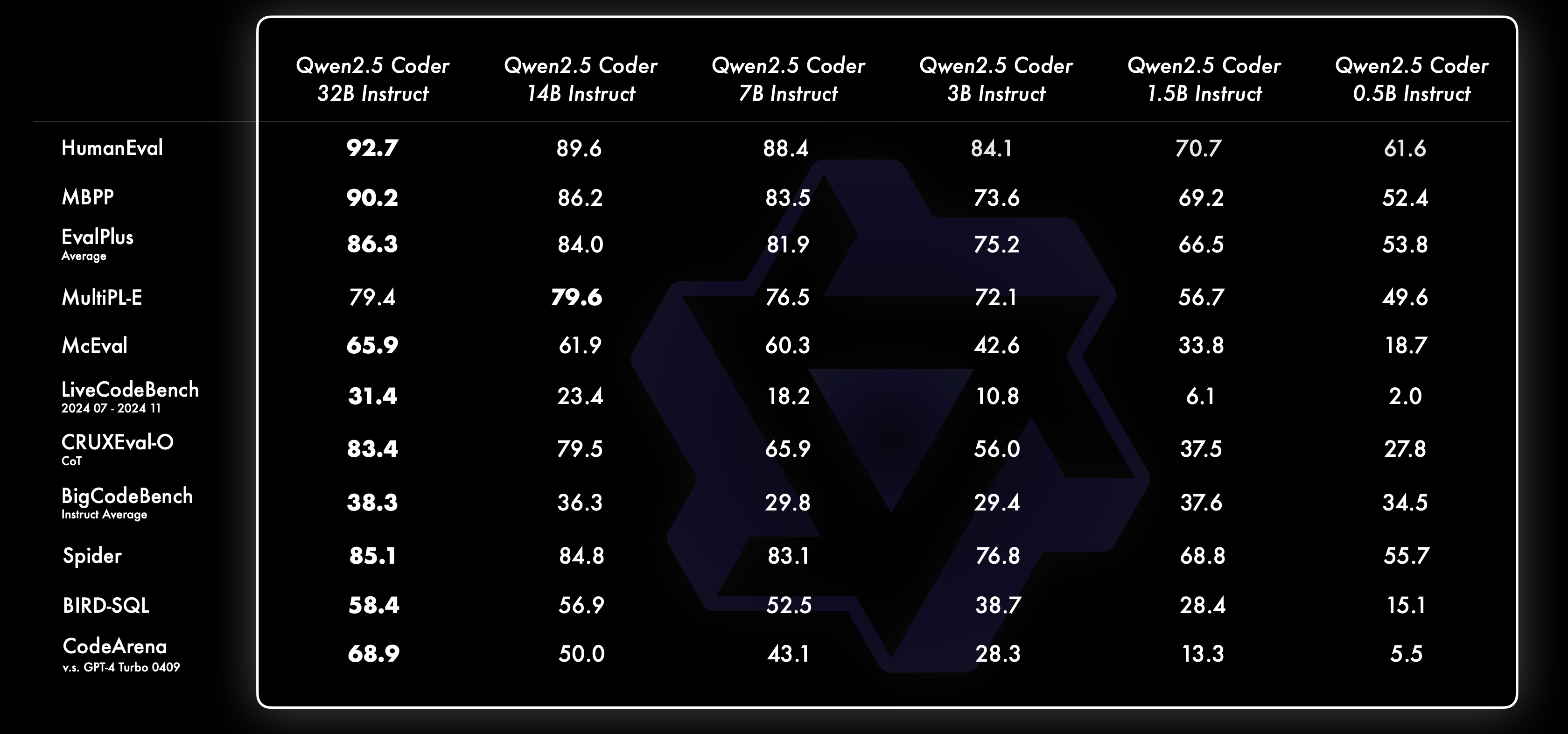

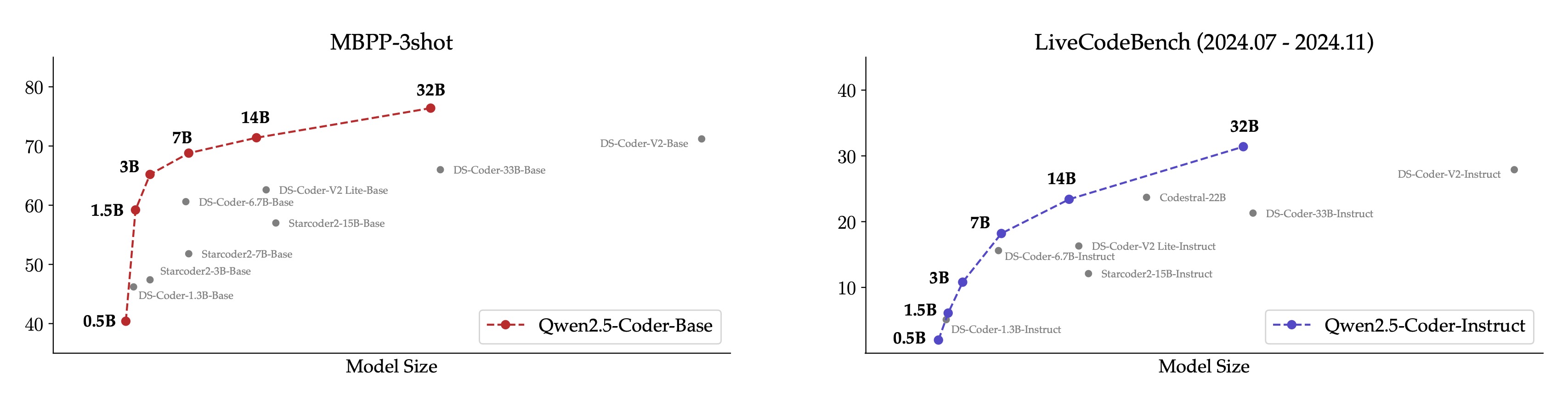

We have always believed in scaling laws. To verify that scaling is effective for Code LLMs, we evaluated Qwen2.5-Coder at every size across all datasets. For each size, we open-sourced both a Base and an Instruct model: the Instruct model is the official aligned model that can chat directly, and the Base model is a foundation for developers to fine-tune their own models.
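As a rough illustration of "chatting directly" with an Instruct model, the sketch below uses the Hugging Face `transformers` chat-template workflow. The model ID `Qwen/Qwen2.5-Coder-7B-Instruct`, the system prompt, and the helper names are assumptions made for this example, and `device_map="auto"` additionally requires the `accelerate` package; treat it as a starting point rather than the official usage.

```python
def build_messages(user_prompt, system_prompt="You are a helpful coding assistant."):
    """Build a chat-style message list (the system prompt is an assumed default)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def chat(user_prompt, model_id="Qwen/Qwen2.5-Coder-7B-Instruct", max_new_tokens=256):
    """Generate one reply from an Instruct checkpoint (model_id is an assumption)."""
    # Imported lazily so build_messages can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype="auto", device_map="auto"
    )

    # Render the messages with the model's own chat template.
    text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so only the newly generated reply is decoded.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    print(chat("Write a Python function that reverses a linked list."))
```

A Base checkpoint has no alignment for chat, so it would normally be used for plain completion or as the starting point for fine-tuning instead.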

Here are the performances of the Base models of different sizes:

Here are the performances of the Instruct models of different sizes:

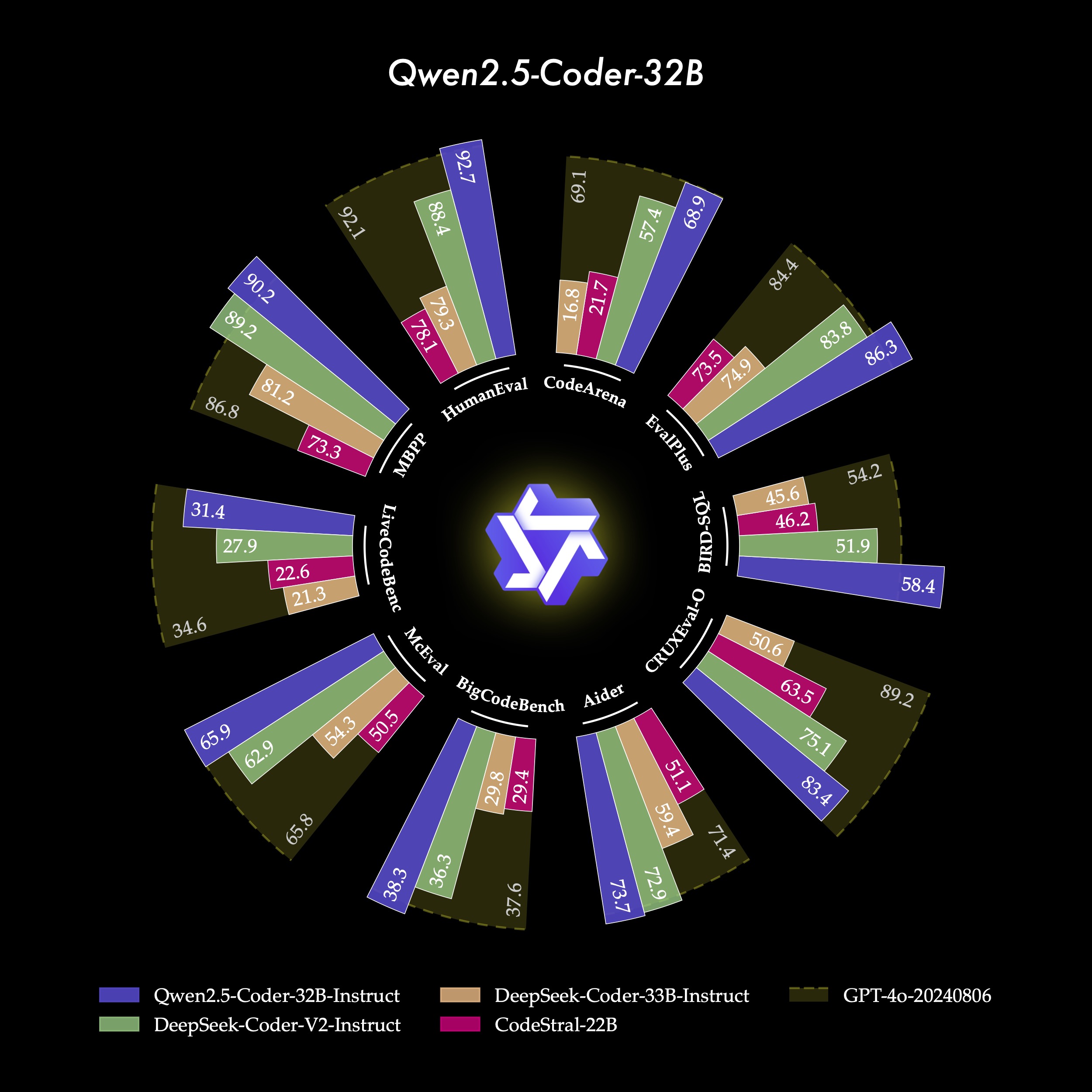

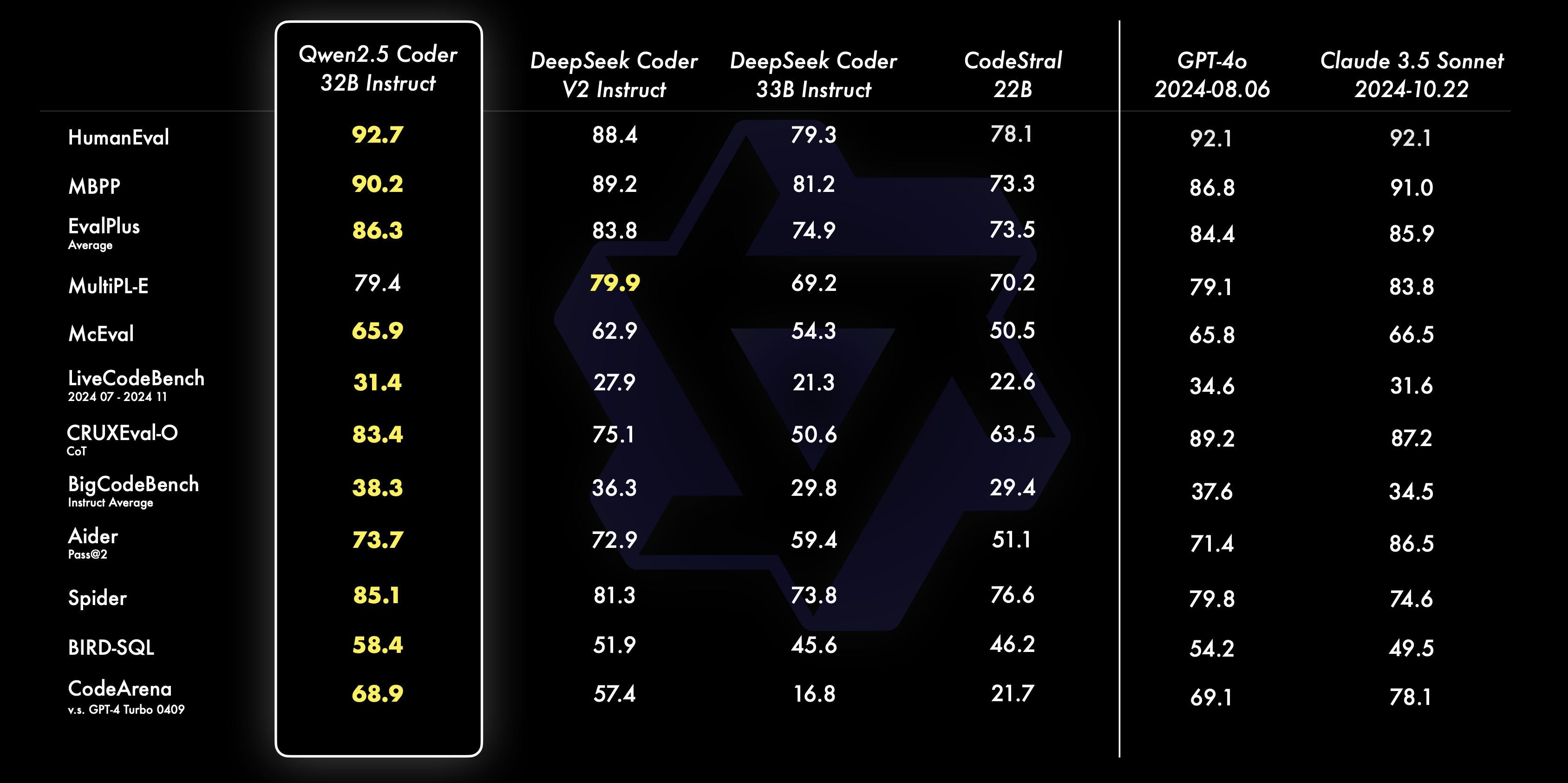

We present a comparison of different sizes of Qwen2.5-Coder with other open-source models on core datasets.

There is a positive correlation between model size and model performance, and Qwen2.5-Coder has achieved SOTA performance across all sizes, encouraging us to continue exploring larger sizes of Coder.

A practical Coder has always been our vision, and for this reason, we explored the actual performance of Qwen2.5-Coder in code assistants and Artifacts scenarios.

Code assistants have become widely used, but most currently rely on closed-source models. We hope that Qwen2.5-Coder can give developers a friendly and powerful open alternative. Here is an example of Qwen2.5-Coder in Cursor.

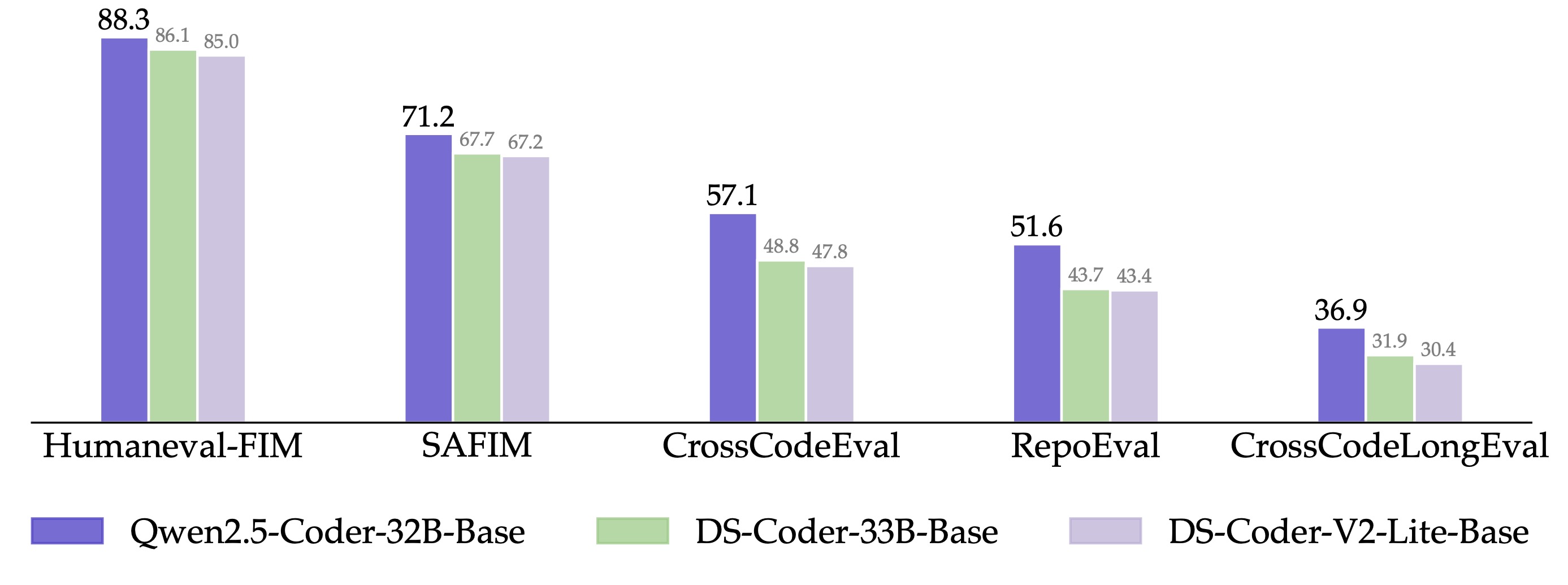

Additionally, as a pre-trained model, Qwen2.5-Coder-32B has demonstrated strong code completion capabilities, achieving SOTA performance on a total of 5 benchmarks: HumanEval-Infilling, CrossCodeEval, CrossCodeLongEval, RepoEval, and SAFIM. To keep the comparison fair, we capped the maximum sequence length at 8k and used the Fill-in-the-Middle mode for testing. On four of the evaluation sets (CrossCodeEval, CrossCodeLongEval, RepoEval, and HumanEval-Infilling), we measured whether the generated content exactly matched the ground-truth labels (Exact Match). On SAFIM, we used the single-attempt execution success rate (Pass@1).
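The sketch below illustrates the evaluation setup described above: building a Fill-in-the-Middle prompt and scoring with Exact Match and Pass@1. The special tokens `<|fim_prefix|>`, `<|fim_suffix|>`, and `<|fim_middle|>` are assumed from the model's published FIM format, and stripping surrounding whitespace before comparison is an assumption of this example; each benchmark may normalize differently.

```python
def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Arrange the code before and after the gap in prefix-suffix-middle order.

    The model is expected to generate the missing middle after the last token.
    The token strings are assumptions for this sketch.
    """
    return f"<|fim_prefix|>{prefix}<|fim_suffix|>{suffix}<|fim_middle|>"


def exact_match(prediction: str, reference: str) -> bool:
    """True when the prediction equals the reference label.

    Surrounding whitespace is ignored (an assumption of this sketch).
    """
    return prediction.strip() == reference.strip()


def exact_match_rate(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions that exactly match their reference."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    hits = sum(exact_match(p, r) for p, r in zip(predictions, references))
    return hits / len(references)


def pass_at_1(passed: list[bool]) -> float:
    """Pass@1 with one sample per problem: share of problems whose
    single generated completion passed execution."""
    return sum(passed) / len(passed)


if __name__ == "__main__":
    prompt = build_fim_prompt(
        prefix="def add(a, b):\n    return ",
        suffix="\n\nprint(add(1, 2))\n",
    )
    print(prompt)
    print(exact_match_rate(["a + b", "a - b"], ["a + b", "a + b"]))
    print(pass_at_1([True, False, True, True]))
```

Exact Match is strict: a completion that is functionally correct but written differently counts as a miss, which is why an execution-based metric such as Pass@1 is used where test cases are available.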

Artifacts are an important application of code generation, helping users create visual works. We chose Open WebUI to explore the potential of Qwen2.5-Coder in the Artifacts scenario, and here are some specific examples.

Example: Three-body Problem Simulation

Example: Lissajous Curve

Example: Drafting a resume

Example: Emoji dancing

We will soon launch the code mode on the official Tongyi website (https://tongyi.aliyun.com), with support for one-click generation of websites, mini-games, data charts, and other visual applications. We welcome everyone to try it!

Qwen2.5-Coder 0.5B / 1.5B / 7B / 14B / 32B are licensed under Apache 2.0, while 3B is under the Qwen Research license.

We believe that this release can truly help developers, and we look forward to exploring more interesting application scenarios with the community. Additionally, we are working on powerful reasoning models centered around code, and we believe we will meet everyone again soon!

@article{hui2024qwen2,
  title={Qwen2.5-Coder Technical Report},
  author={Hui, Binyuan and Yang, Jian and Cui, Zeyu and Yang, Jiaxi and Liu, Dayiheng and Zhang, Lei and Liu, Tianyu and Zhang, Jiajun and Yu, Bowen and Dang, Kai and others},
  journal={arXiv preprint arXiv:2409.12186},
  year={2024}
}

@article{yang2024qwen2,
  title={Qwen2 technical report},
  author={Yang, An and Yang, Baosong and Hui, Binyuan and Zheng, Bo and Yu, Bowen and Zhou, Chang and Li, Chengpeng and Li, Chengyuan and Liu, Dayiheng and Huang, Fei and others},
  journal={arXiv preprint arXiv:2407.10671},
  year={2024}
}

Qwen2.5-Coder open-source address:
