By Zhang Hongbo, Big Data Engineer of Soul

In the traditional offline data warehouse mode, extract-transform-load (ETL) is the first step before logs are input into the data warehouse. The Soul tracking logs contain a large amount of data and need to be dynamically partitioned for input. The dynamic partitions reach more than 1,200 every day with a varying data volume from tens of thousands to billions. The following figure shows the previous ETL process. In the process, the tracking logs are input to Kafka, collected to HDFS by Flume, and written into Hive after being processed by Spark ETL tasks performed for days. The task starts with small hours. The data processing takes about one hour, and the loading takes over an hour, so the overall execution time is two to three hours.

The architecture above faces the following problems:

The next day inbound ETL tasks are converted into real-time log input to solve the increasingly sharp problems of ETL performed through days, reduce resource costs, and advance data output. This ensures that the data can be used immediately, as it is persisted on the premise of data consistency.

Previously, the same data was maintained offline and in real-time separately under the Lambda architecture. However, there were still some difficulties in practice, such as unguaranteed transactions, the pressure on the clusters, and the loss of query performance caused by too many small files. Finally, the practice failed to meet the expectation.

Therefore, the data lake architecture, which has gradually been coming into the limelight recently, is chosen this time. The concept of data lake will be explained here since it can be considered a table format that regards metadata as big data. Currently, the mainstream data lakes are Delta Lake (including the open-source edition and the commercial edition), Hudi, and Iceberg. They all support features, such as ACID semantics, upsert, dynamic schema change, and time travel. Some advantages and disadvantages between these three data lakes are compared and summarized below:

Advantages:

Disadvantages:

Advantages:

Disadvantages:

Advantages:

Disadvantages:

During the research, Alibaba Cloud provided Delta with the E-MapReduce (EMR) version that was optimized with features and performance based on the open-source version. The feature optimizations include integration with Spark SQL and Spark Streaming SQL, MetaSync features to synchronize Delta metadata to Hive MetaStore automatically, and automatic compaction. Moreover, it also provides performance optimizations, covering the adaption to more search engines, such as Tez, Hive, and Presto, and query performance optimization with Z-order, data skipping, and merge.

During the tests, several bugs in EMR Delta have been reported. For example, the Delta table could not automatically create a Hive mapping table, the Tez engine could not read the Hive table of Delta type normally, and the Delta data read by Presto and Tez was inconsistent. All the bugs were solved quickly by Alibaba Cloud.

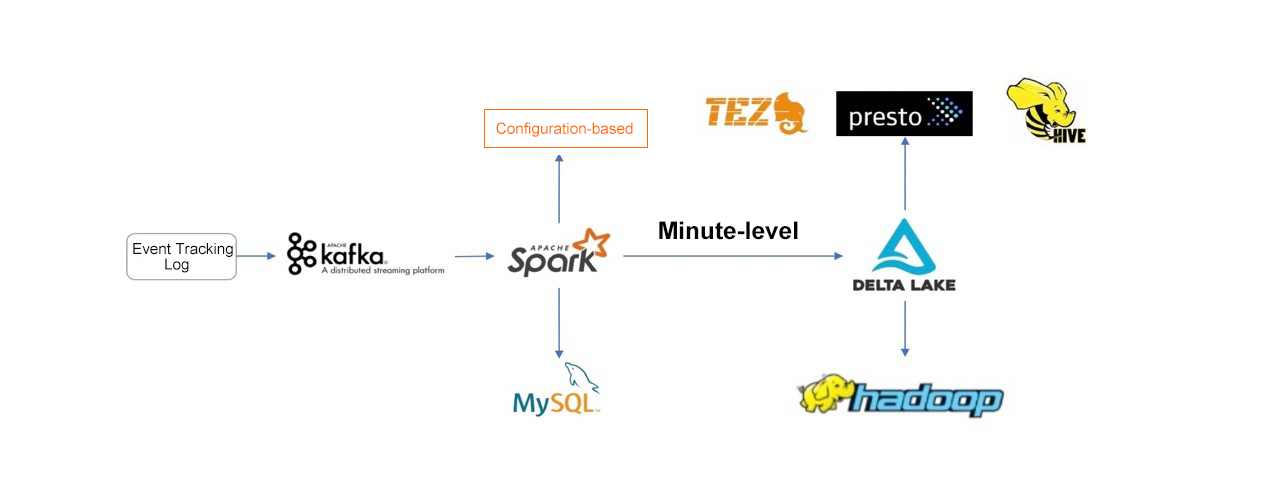

After Delta is introduced, the architecture of real-time log input looks like this:

The data is reported from event tracking to Kafka and written to HDFS as a Delta table by minute-level Spark tasks. Then, a mapping table of the Delta table is created automatically in Hive. By doing so, data query and analysis can be performed directly via query engines, such as Hive on MR, Tez, and Presto.

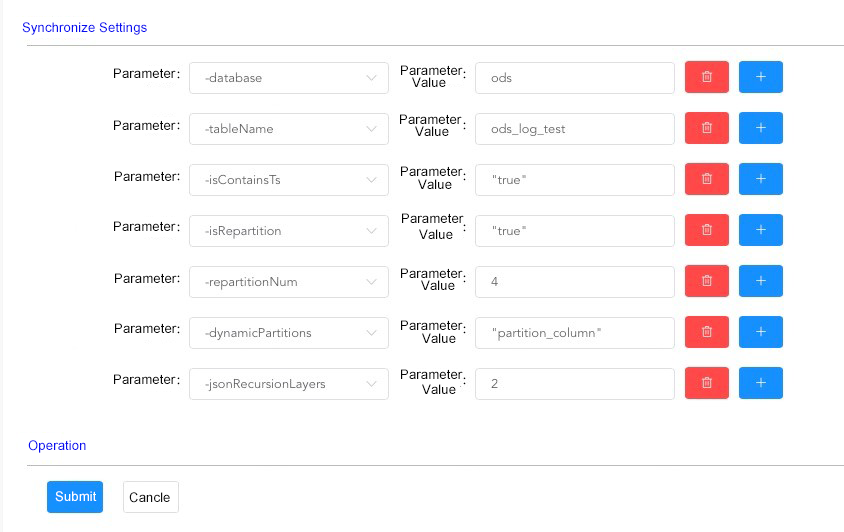

Based on Spark, general-purpose ETL tools have been encapsulated, and configuration-based access has been implemented. As such, users can access the overall process of source data into Hive without writing codes. In addition, a variety of useful features are implemented in the encapsulation layer to adapt more business scenarios:

1. The hidden partition similar to Iceberg is implemented. Users can use Spark SQL to change certain columns into a new column. The column can be used as a partition column or a newly-added column. For example, a new column can be generated from the date column using "substr(date,1,4) as year" to be a partition.

2. The regex check on dynamic partitions to avoid partitioning errors caused by dirty data is implemented. For example, Hive does not support Chinese partitions. Users can add a "\w+" regular expression to dynamic partitions so the dirty data of the unmatched partition fields will be filtered.

3. The event time field customization is implemented. Users can select any time field in the data as the event time for the corresponding partition to avoid data drift.

4. The customization of nested JSON parsing levels is implemented. Most log data is in JSON format, so nested JSON is unavoidable. Users can specify the levels of nested JSON to be parsed. Nested fields will be written into the table in single columns.

5. SQL-based dynamic partition with custom configuration is implemented. This scenario solves the performance problems of real-time tasks caused by the skew of the event tracking and optimizes resource usage.

Platform Construction: Now, the overall process of connecting logs to Hive has been embedded into the Soul data platform. Users can apply for log access through this platform. After approval, corresponding parameters can be configured to access the logs to Hive tables in real-time, which is easy to use and reduces operational costs.

EMR Delta implements the optimize and vacuum syntax to solve the problem of too many small files. EMR Delta executes the optimized syntax on the Delta table periodically to merge small files and the vacuum syntax to delete expired files to keep the files on HDFS in proper size and quantity. It is worth mentioning that EMR Delta also implements some auto-compaction policies, which can be configured to trigger compaction automatically. For example, when small files reach a certain quantity, start a minor compaction task in the streaming job to merge small files with little impact on real-time tasks.

Next, some problems encountered in the process of implementing Delta will be introduced.

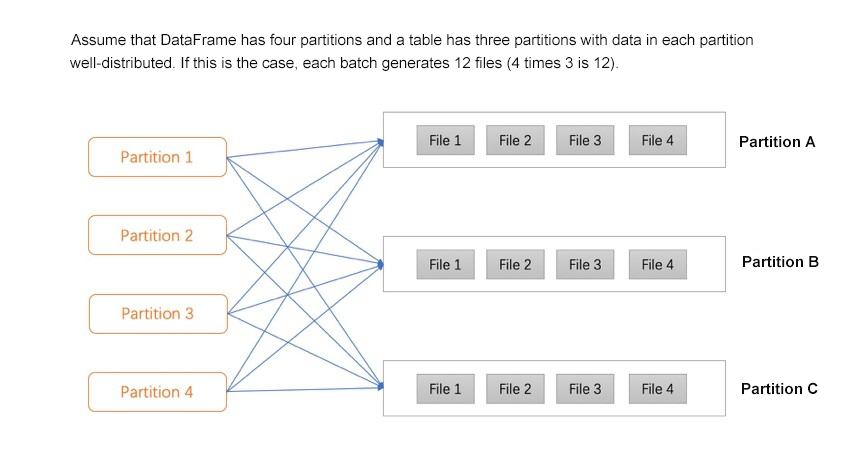

The event tracking in Soul is partitioned by the tracking type into a wide partition table. Different types of event tracking are distributed unevenly. For example, in the process of writing data to Delta through Spark with 5 minutes as a batch, the volume of most event tracking in 5 minutes is very small, with 10 MB and below. However, for a small number of event tracking, the data volume in 5 minutes can reach 1 GB or above. When implementing data partitioning, assume that DataFrame has M partitions and a table has N dynamic partitions. The data in each partition is well-distributed but disordered. If this is the case, N files are generated for each partition and correspond to N dynamic partitions. As such, M times N small files are generated for each batch.

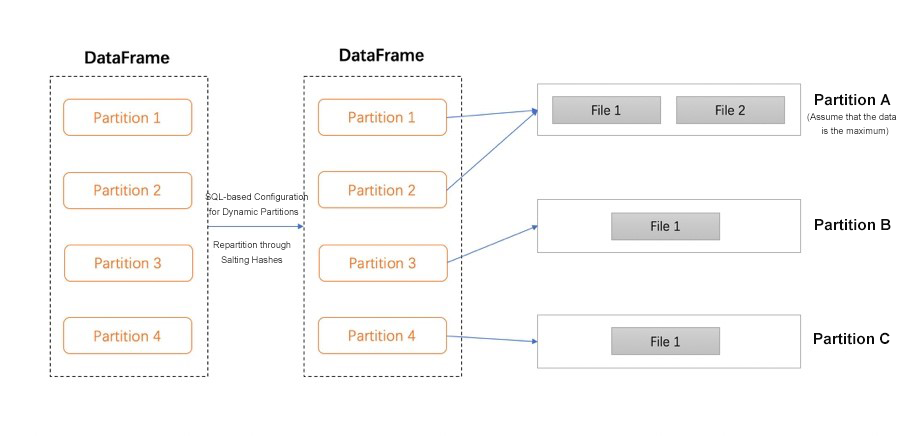

We recommend repartitioning DataFrame by the dynamic partition field before implementing the data partition to solve these problems. This ensures that each partition has data from different partitions, and each batch generates only N files, one file for each dynamic partition. Thus, the problem of small file expansion will be addressed. However, the data of several partitions with excessively large data volumes are distributed in one partition, which causes data skew in some partitions and the oversize files generated in each batch of these partitions.

Solutions: As shown in the following figure, users are provided with the features to customize the repartition column via SQL. In other words, users can use SQL to scatter the event tracking of having too much data to multiple partitions by salting hashes. No such operation is required for event tracking with normal data volume. This solution decreases the execution time of the slowest partition in each batch of the Spark task from 3 minutes to 40 seconds, thus avoiding problems of too small or too large files and resolving the performance problems caused by data skew.

The data lake supports dynamic schema changes. However, when building DataFrame before Spark writes data, the data schema must be obtained. If the schema cannot be dynamically changed at this time, new fields cannot be written into the Delta table, and the dynamic schema of the Delta table becomes useless. Different types cause different fields for event tracking. Therefore, the union of the fields of all data must be taken as the schema of the Delta table when writing data to the table, which requires users to perceive whether there are new fields or not when building DataFrame.

Solutions: An additional set of metadata is designed. When building DataFrame on Spark, determine whether new fields have been added based on this set of metadata primarily. If so, update the new fields to the metadata and build DataFrame with the metadata as a schema. As such, the schema change can be dynamically perceived at the application layer. In conjunction with the dynamic schema changes, the new fields are written automatically to the Delta table, and the changes are synchronized to the corresponding Hive table.

When using Spark Streaming, the consumer offset is submitted to Kafka after the data is processed, for which the commitAsync API in the spark-streaming-kafka-0-10 is called. It was misunderstood that the consumer offset of the current batch would be submitted after the data was processed. However, there is still duplicated data in the Delta table. The troubleshooting result shows that the offset is submitted at the start of the next batch rather than after the current batch data processing is completed. Then, the problem arises if a batch is 5 minutes long and the data processing is completed at 3 minutes. At this moment, the data is written into the Delta table successfully, but the offset submission succeeds after 5 minutes at the beginning of the second batch. If the task is restarted within the interval from 3 minutes to 5 minutes, the current batch of data will be consumed repeatedly, resulting in data duplication.

Solutions:

Since Delta separately maintains its own metadata, users need to parse the metadata to obtain data file information first when using external query engines for the query. As the volume of data in the Delta table grows, the volume of metadata increases accordingly, and the time consumed for this operation becomes longer.

Solutions: Alibaba Cloud has been constantly optimizing its query solutions to minimize the cost of parsing metadata using cache and other methods.

Currently, it is the log append scenario that is implemented based on Delta, and there is another classic business scenario for change data capture (CDC). Delta that supports update and delete commands can be applied in CDC. However, due to business considerations, Delta has not been used in the CDC scenario for the time being because the update and delete commands of the Delta table are executed in merge join, while the large data volume and frequent update of business tables together with the wide range of partitions involved in data updating may cause performance problems in the merge.

Alibaba Cloud is also continuously optimizing merge performance, such as join partition pruning and Bloom filter. The optimizations can reduce the number of files effectively during a join operation, especially for updating data that is partitioned centrally. In turn, this improves the performance significantly. In the future, we will attempt to apply Delta in the CDC scenario.

Alibaba Cloud JindoFS Handles Stress Testing Easily with More Than One Billion Files

Alibaba Big Data Practices on Cloud-Native – EMR Spark on ACK

62 posts | 7 followers

FollowAlibaba EMR - September 23, 2022

Alibaba EMR - August 22, 2022

Alibaba EMR - May 13, 2022

Alibaba EMR - June 2, 2021

Alibaba EMR - July 20, 2022

zhuoran - February 5, 2021

62 posts | 7 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn More Personalized Content Recommendation Solution

Personalized Content Recommendation Solution

Help media companies build a discovery service for their customers to find the most appropriate content.

Learn MoreMore Posts by Alibaba EMR