Delta Lake is an open-source storage framework that Databricks uses to build the lakehouse architecture. It works with query and compute engines such as Spark, Flink, Hive, PrestoDB, and Trino. As an open-format storage layer, it gives the lakehouse architecture reliable, secure, and high-performance storage while unifying batch processing and stream processing.

Key Features of Delta Lake:

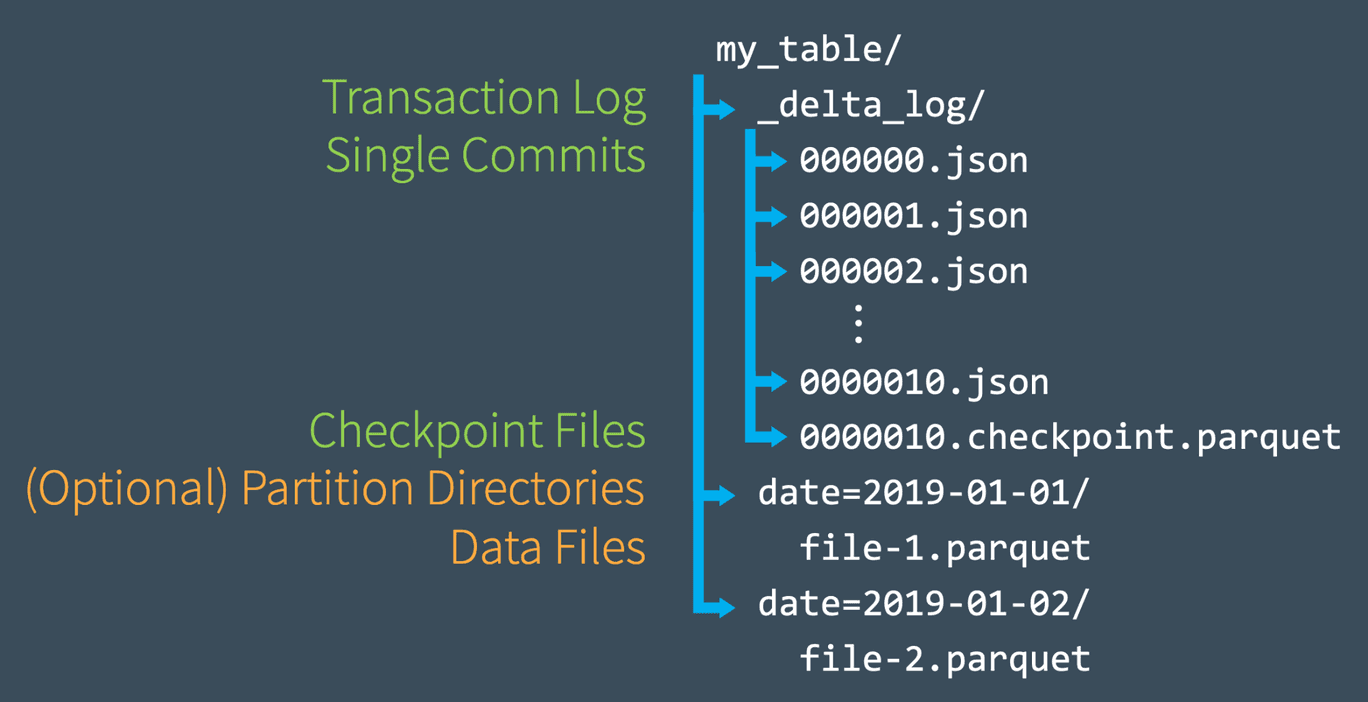

A big difference between lake tables and common Hive tables is that the metadata of lake tables is self-managed and stored in the file system. The following figure shows the file structure of a Delta Lake table:

The file structure of Delta Lake consists of two parts:

Delta Lake uses snapshots to manage multiple versions of a table and supports time-travel queries on historical versions. Whether you query the latest snapshot or a specific historical version, the metadata of the corresponding snapshot must be parsed first, which mainly involves:

To load a specific snapshot, Delta Lake looks for the most recent checkpoint file whose version is less than or equal to the requested version. It then parses the metadata from that checkpoint plus the log files committed after it, which speeds up loading because the earlier logs do not need to be replayed.
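The checkpoint lookup described above can be sketched in plain Python. This is a simplified illustration rather than Delta Lake's actual code: the function name `plan_snapshot_load` and the list-based inputs are hypothetical, and the example assumes a checkpoint exists at versions 10 and 20.

```python
from typing import List, Optional, Tuple


def plan_snapshot_load(
    target_version: int,
    checkpoint_versions: List[int],
    commit_versions: List[int],
) -> Tuple[Optional[int], List[int]]:
    """Return (checkpoint to start from, commit logs to replay on top of it)."""
    if target_version not in commit_versions:
        raise ValueError(f"version {target_version} does not exist")
    # Latest checkpoint at or before the requested version, if there is one.
    eligible = [v for v in checkpoint_versions if v <= target_version]
    checkpoint = max(eligible) if eligible else None
    # Replay only the commits after the checkpoint, up to the target version.
    start = -1 if checkpoint is None else checkpoint
    to_replay = sorted(v for v in commit_versions if start < v <= target_version)
    return checkpoint, to_replay


commits = list(range(26))   # hypothetical table with commits 0..25
checkpoints = [10, 20]      # assumed checkpoint versions

print(plan_snapshot_load(23, checkpoints, commits))  # (20, [21, 22, 23])
print(plan_snapshot_load(7, checkpoints, commits))   # (None, [0, 1, 2, 3, 4, 5, 6, 7])
```

Loading version 23 reads one checkpoint and three log files instead of 24 log files. Without an eligible checkpoint, every log from version 0 onward has to be replayed.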

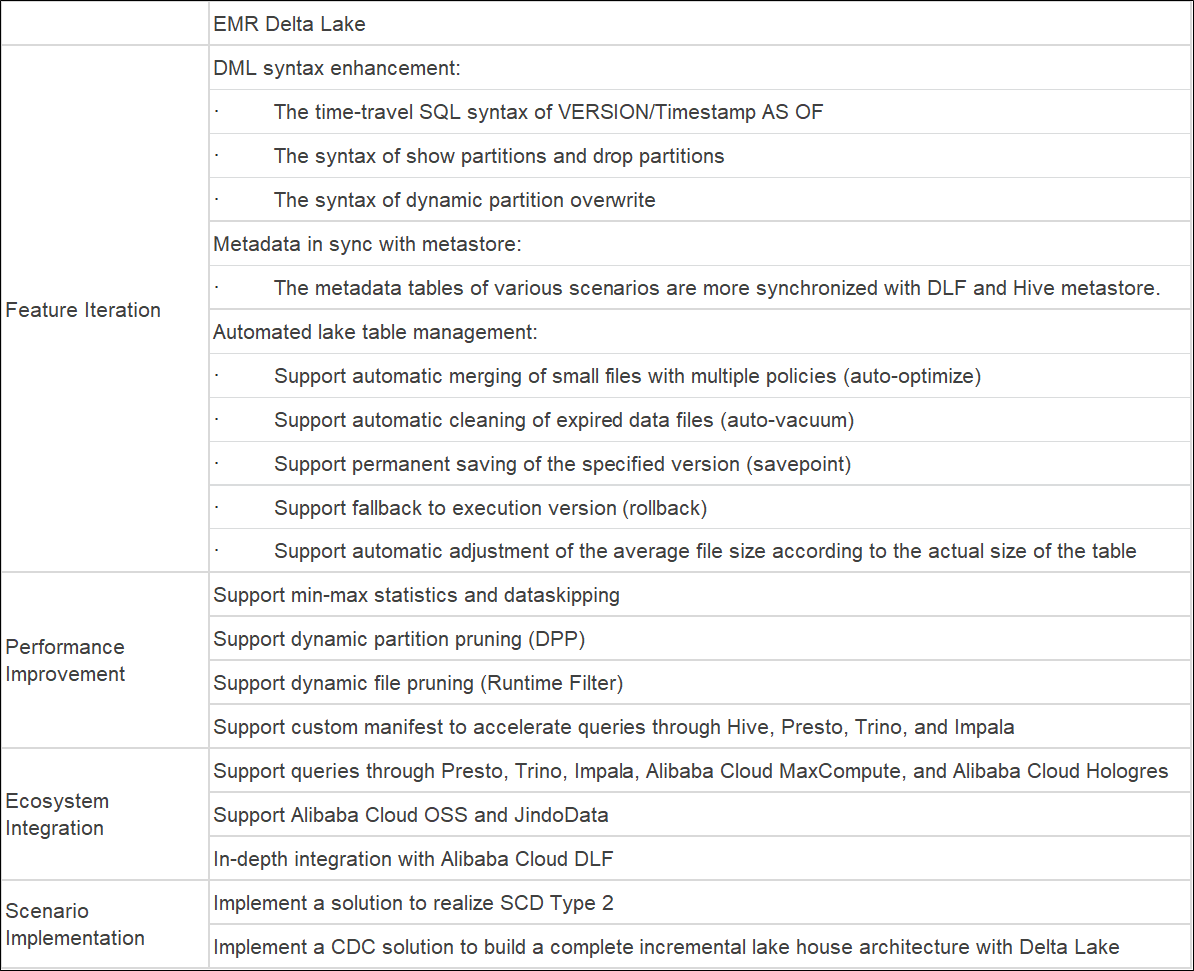

The Alibaba Cloud EMR team has followed the Delta Lake community since 2019 and has shipped Delta Lake in EMR's commercial products. Over this period, the team has continuously improved Delta Lake in terms of features, performance, ecosystem integration, usability, and real-world adoption, so that it fits into EMR products better and is easier to use.

The following table summarizes the main self-developed features of EMR Delta Lake compared with open-source Delta Lake (Community 1.1.0):

Note:

Delta Lake 1.x only supports Spark 3, and each release is bound to a specific Spark version. As a result, some new features and optimizations are unavailable on older versions and on Spark 2. EMR Delta Lake, however, keeps its features synchronized between Delta Lake 0.6 on Spark 2 and Delta Lake 1.x on Spark 3.

Data Lake Formation (DLF) is a fully managed service that helps users build a cloud data lake and lakehouse quickly. It provides unified metadata management, unified permission management, secure and convenient data ingestion, and one-click data exploration. It connects seamlessly to multiple compute engines, breaks down data silos, and helps surface business value.

EMR Delta Lake is deeply integrated with DLF. Delta Lake tables automatically synchronize their metadata to the DLF metastore after they are created or written to. Unlike in the open-source version, you therefore do not need to create Hive external tables that point to the Delta Lake tables. After synchronization, you can query the tables directly through Hive, Presto, Impala, and even Alibaba Cloud MaxCompute and Hologres, with no additional operations.

DLF also provides mature data ingestion capabilities. Through product configuration alone, you can synchronize data from MySQL, RDS, and Kafka into Delta Lake tables.

Within the DLF product, the Delta Lake format is a first-class citizen. In its next iteration, DLF will also provide easy-to-use visualization and lake table management capabilities to help you maintain lake tables.

Handling Slowly Changing Dimensions (SCD) is considered one of the key ETL tasks for tracking dimensional changes. In data warehouse scenarios, star schemas are usually used to relate fact tables to dimension tables. If some attributes in a dimension table change over time, how should current and historical values be stored and managed? They can be ignored, overwritten, or handled in other ways, such as permanently preserving every historical value. SCD defines several types according to these handling strategies. Among them, SCD Type 2 keeps all historical values by adding new records.
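As a minimal sketch of how SCD Type 2 preserves history by adding records, the following plain-Python example closes the current row and appends a new one whenever a value changes. The `DimRow` fields (`valid_from`, `valid_to`) and the helper names are illustrative assumptions, not part of any Delta Lake or EMR API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DimRow:
    key: str
    value: str
    valid_from: str            # ISO date, inclusive
    valid_to: Optional[str]    # ISO date, exclusive; None marks the current row


def apply_scd2(rows: List[DimRow], key: str, new_value: str, change_date: str) -> None:
    """Close the current row for `key` if its value changed, then append a new row."""
    current = next((r for r in rows if r.key == key and r.valid_to is None), None)
    if current is not None:
        if current.value == new_value:
            return  # value unchanged, history stays as is
        current.valid_to = change_date
    rows.append(DimRow(key, new_value, change_date, None))


def value_as_of(rows: List[DimRow], key: str, date: str) -> Optional[str]:
    """Return the value that was valid for `key` on `date` (ISO strings sort by date)."""
    for r in rows:
        if r.key == key and r.valid_from <= date and (r.valid_to is None or date < r.valid_to):
            return r.value
    return None


dim: List[DimRow] = []
apply_scd2(dim, "user_1", "Hangzhou", "2022-01-01")
apply_scd2(dim, "user_1", "Beijing", "2022-03-15")

print(value_as_of(dim, "user_1", "2022-02-01"))  # Hangzhou
print(value_as_of(dim, "user_1", "2022-04-01"))  # Beijing
```

Both rows remain in the table, so any past date can still be resolved to the value that was current at that time.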

In a real production environment, we may not care about every historical dimension value. Instead, we care about the latest value within a given period, for example at day or hour granularity: the value of a dimension on each day or in each hour. The scenario therefore becomes a slowly changing dimension based on a fixed granularity, or business snapshot (Based-Granularity Slowly Changing Dimension, G-SCD).

There are several options in the implementation of traditional data warehouses based on Hive tables. The article introduces the following two solutions:

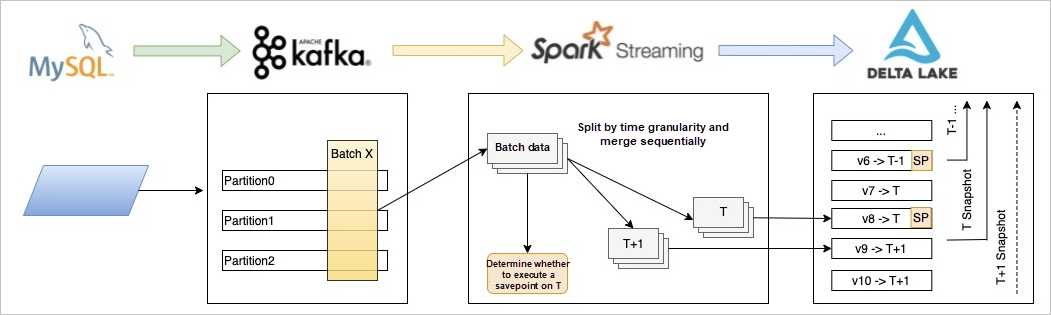

The SCD Type 2 scenario is implemented by enhancing Delta Lake and adapting Spark SQL and Spark Streaming to it. The following figure shows the architecture:

Similarly, upstream data is ingested from Kafka. On the streaming side, each batch is split according to the configured business snapshot granularity, and each part is committed separately and tagged with its business snapshot value. After receiving the data, Delta Lake records the mapping between the current snapshot and the business snapshot. When the next business snapshot arrives, Delta Lake creates a savepoint on the previous snapshot so that this version is retained permanently. At query time, you specify a business snapshot value, which identifies the corresponding snapshot, and the query is then served through time travel.
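The flow above can be modeled with a small in-memory toy. This is only a sketch of the idea, not EMR's implementation: `GScdTable`, `split_by_snapshot`, and the `day` column are hypothetical names, and commits are append-only here for brevity, whereas a real pipeline would typically merge.

```python
from typing import Dict, List, Optional, Set


class GScdTable:
    """Toy model of a table whose commits are tagged with a business snapshot."""

    def __init__(self) -> None:
        self.versions: List[List[dict]] = []            # versions[i] = table content at version i
        self.snapshot_to_version: Dict[str, int] = {}   # business snapshot -> latest version
        self.savepoints: Set[int] = set()               # versions retained permanently
        self.current_snapshot: Optional[str] = None

    def commit(self, business_snapshot: str, rows: List[dict]) -> int:
        # A new business snapshot arrived: pin the last version of the previous one.
        if self.current_snapshot is not None and business_snapshot != self.current_snapshot:
            self.savepoints.add(self.snapshot_to_version[self.current_snapshot])
        previous = self.versions[-1] if self.versions else []
        self.versions.append(previous + rows)
        version = len(self.versions) - 1
        self.snapshot_to_version[business_snapshot] = version
        self.current_snapshot = business_snapshot
        return version

    def read_as_of(self, business_snapshot: str) -> List[dict]:
        # Resolve the business snapshot to a version, then "time travel" to it.
        return self.versions[self.snapshot_to_version[business_snapshot]]


def split_by_snapshot(batch: List[dict], granularity_key: str) -> Dict[str, List[dict]]:
    """Group one streaming batch by business snapshot, preserving arrival order."""
    groups: Dict[str, List[dict]] = {}
    for row in batch:
        groups.setdefault(row[granularity_key], []).append(row)
    return groups


table = GScdTable()
batch = [
    {"day": "2022-05-01", "user": "a", "city": "Hangzhou"},
    {"day": "2022-05-01", "user": "b", "city": "Beijing"},
    {"day": "2022-05-02", "user": "a", "city": "Shanghai"},
]
for snapshot, rows in split_by_snapshot(batch, "day").items():
    table.commit(snapshot, rows)

print(len(table.read_as_of("2022-05-01")))  # 2
print(len(table.read_as_of("2022-05-02")))  # 3
print(table.savepoints)                     # {0}
```

The single batch spans two days, so it produces two commits. The version that closes 2022-05-01 is pinned as a savepoint as soon as data for 2022-05-02 arrives, which is what keeps the daily view queryable later.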

Advantages of G-SCD on Delta Lake:

In today's data warehouse architectures, data is usually layered into ODS, DWD, DWS, and ADS for easier management. If the raw data is stored in MySQL or RDS, we can consume its binlog to update ODS tables incrementally. But what about incremental updates from ODS to DWD, or from DWD to DWS? Hive tables cannot generate binlog-like data, so incremental updates cannot be implemented for each downstream step. Enabling lake tables to generate binlog-like data is therefore the key to building a real-time incremental data warehouse.

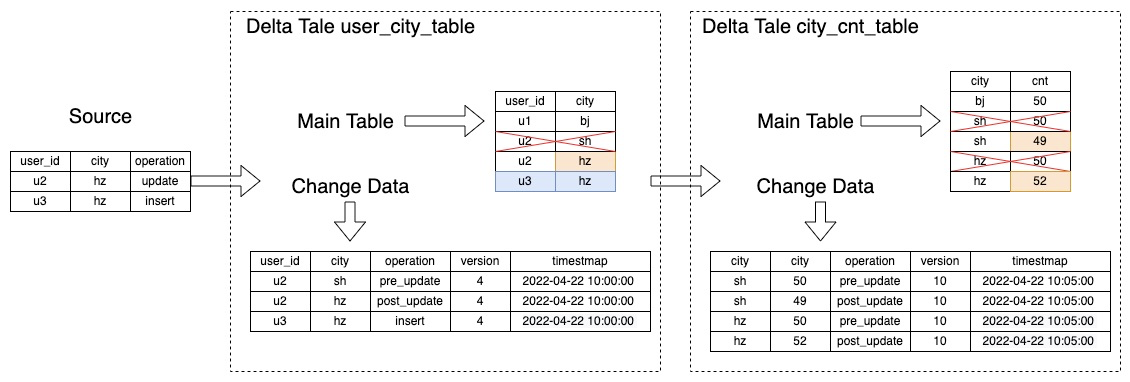

Alibaba Cloud EMR implements CDC capabilities on top of Delta Lake so that a Delta table can be used as a streaming source. When CDC is enabled, ChangeData is generated and persisted for every data operation so that downstream streaming jobs can read it. ChangeData can also be queried with Spark SQL syntax (as shown in the following figure):

At the ODS layer, the Delta table user_city_table receives source data, applies it through a merge operation, and persists the resulting change data. At the DWS layer, the city_cnt_table table, which is aggregated by city, reads the ChangeData of user_city_table and updates its cnt aggregation field accordingly.
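To make the ODS-to-DWS flow concrete, here is a minimal in-memory sketch. It assumes a simplified change record of the form `(change_type, user, city)`, where an update is emitted as a delete of the old row followed by an insert of the new one. These change types and the function names are illustrative assumptions, not the actual ChangeData schema of EMR Delta Lake.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Change = Tuple[str, str, str]  # (change_type, user, city); hypothetical record layout


def merge_with_changes(table: Dict[str, str], updates: Dict[str, str]) -> List[Change]:
    """Upsert `updates` into `table` and return the change records it produced."""
    changes: List[Change] = []
    for user, city in updates.items():
        old = table.get(user)
        if old == city:
            continue  # no change, so no change record
        if old is not None:
            changes.append(("delete", user, old))  # pre-image of the updated row
        changes.append(("insert", user, city))     # new row or post-image
        table[user] = city
    return changes


def apply_changes(city_cnt: Counter, changes: Iterable[Change]) -> None:
    """Incrementally maintain the per-city count from change records only."""
    for change_type, _user, city in changes:
        city_cnt[city] += 1 if change_type == "insert" else -1
        if city_cnt[city] == 0:
            del city_cnt[city]


user_city_table: Dict[str, str] = {}   # stands in for the ODS table
city_cnt_table: Counter = Counter()    # stands in for the DWS aggregate

first = merge_with_changes(user_city_table, {"u1": "Hangzhou", "u2": "Hangzhou", "u3": "Beijing"})
apply_changes(city_cnt_table, first)

second = merge_with_changes(user_city_table, {"u2": "Beijing", "u4": "Shanghai"})
apply_changes(city_cnt_table, second)

print(dict(city_cnt_table))  # {'Hangzhou': 1, 'Beijing': 2, 'Shanghai': 1}
```

The downstream aggregate never rescans `user_city_table`. It only consumes the change records of each merge, which is the property that makes layer-to-layer incremental updates possible.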

As the main lake format promoted by EMR, Delta Lake has been chosen by many users and deployed in their production environments across a variety of scenarios. Going forward, we will continue to invest in Delta Lake, deepen its integration with DLF, enrich lake table O&M and management capabilities, and lower the cost of ingesting data into the lake. We will also keep optimizing read and write performance, improve integration with Alibaba Cloud's big data ecosystem, and help users build an integrated lakehouse architecture.
