By Qingshan Lin (Longji)

The development of message middleware has spanned over 30 years, from the emergence of the first generation of open-source message queues to the explosive growth of PC Internet, mobile Internet, and now IoT, cloud computing, and cloud-native technologies.

As digital transformation deepens, customers often encounter cross-scenario applications when using message technology, such as processing IoT messages and microservice messages simultaneously, and performing application integration, data integration, and real-time analysis. This requires enterprises to maintain multiple message systems, resulting in higher resource costs and learning costs.

In 2022, RocketMQ 5.0 was officially released, featuring a more cloud-native architecture that covers more business scenarios compared to RocketMQ 4.0. To master the latest version of RocketMQ, you need a more systematic and in-depth understanding.

Today, Qingshan Lin, who is in charge of Alibaba Cloud's messaging product line and an Apache RocketMQ PMC Member, will provide an in-depth analysis of RocketMQ 5.0's core principles and share best practices in different scenarios.

This course is divided into three parts. In the first part, we explore the typical technical architecture of the IoT and the role of message queues in it. In the second part, we discuss how the requirements for messaging technology in IoT scenarios differ from those of server-side applications. In the third part, we learn how RocketMQ-MQTT, a sub-product of RocketMQ 5.0, addresses these IoT technical challenges.

Let's first understand the IoT scenario and the role of messages in it.

The IoT is undoubtedly one of the hottest technology trends in recent years. Many research institutions and industry reports have predicted the rapid development of the IoT. First, the scale of IoT devices is expected to reach over 20 billion units by 2025.

Second, the data scale of the IoT is growing at a rate of nearly 28%, with over 90% of real-time data expected to come from the IoT in the future. This means that future real-time stream data processing will involve a large amount of IoT data.

Finally, edge computing is becoming increasingly important. In the future, 75% of data will be processed outside traditional data centers or cloud environments. Due to the large scale of IoT data, transferring all data to the cloud for processing would be unsustainable. Therefore, it's essential to fully utilize edge resources for direct computation and then transmit high-value results to the cloud, reducing latency and enhancing user experience.

What do messages have to do with the rapid development of the IoT and the large data scale? Let's examine the role of messages in IoT scenarios:

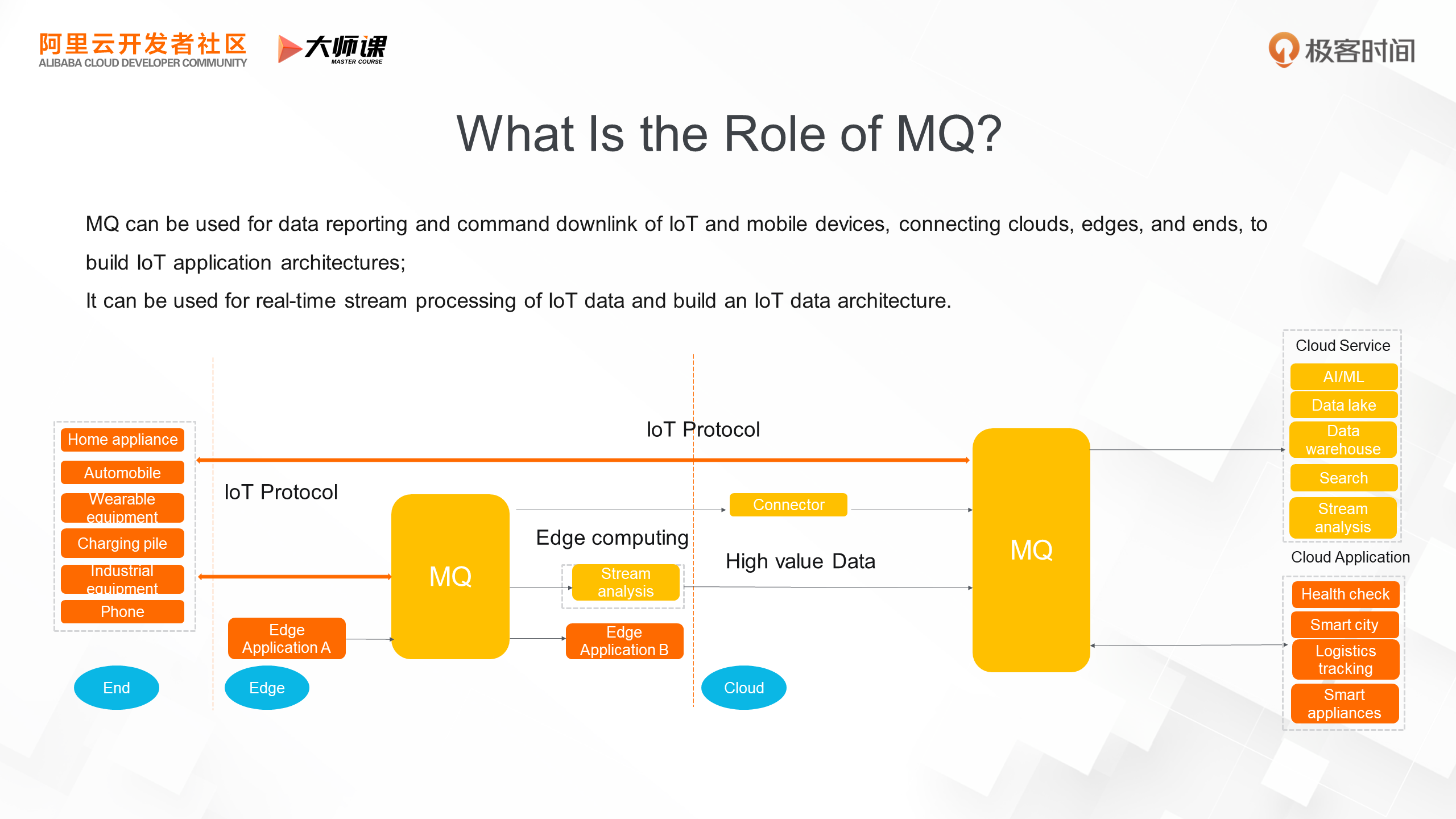

The first role is to connect. Messages enable communication between devices and between devices and cloud applications, supporting the application architecture of the IoT and connecting the cloud, the edge, and end devices.

The second role is data processing. IoT devices continuously generate data streams, and many scenarios require real-time stream processing. Based on the event stream storage and stream computing capabilities of MQ, a data architecture for IoT scenarios can be built.

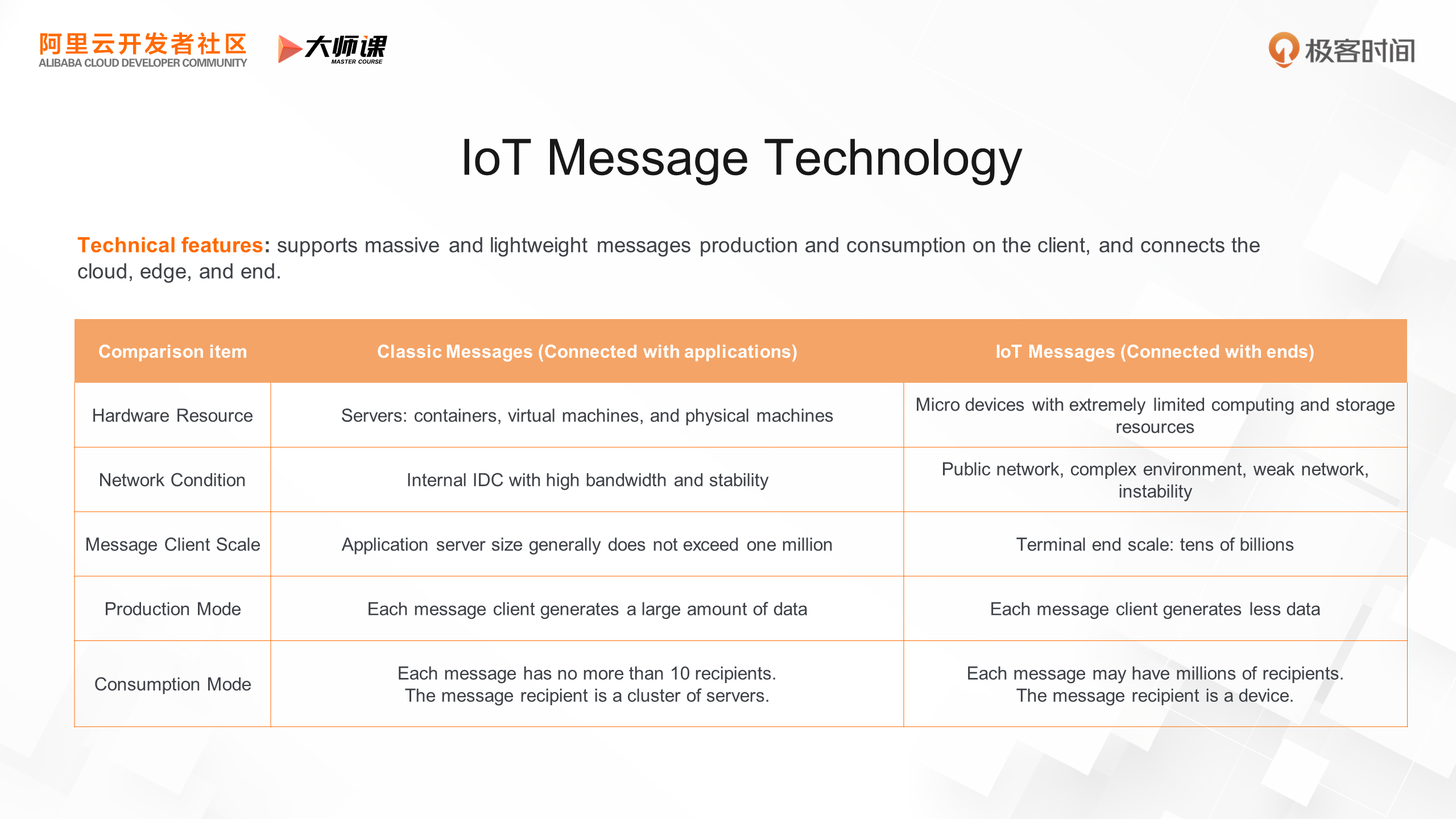

What do IoT scenarios demand from message technology? Let's start with the table, which compares IoT messaging technology with the classic messaging technology discussed in earlier courses.

Classic messaging provides the ability to publish and subscribe to server systems, while IoT messaging technology enables publishing and subscribing between IoT devices and between devices and servers. Let's look at the characteristics of each scenario.

In classic messaging scenarios, the message broker and the message clients are both server systems deployed in an IDC or public cloud environment. They run on well-provisioned machines such as containers, virtual machines, and physical machines, and are generally deployed in the same data center on an internal network, where bandwidth is high and network quality is stable. The number of clients generally corresponds to the number of application servers, typically hundreds or thousands; only ultra-large-scale Internet companies reach the million level. In terms of production, the send volume of each client generally corresponds to the TPS of its business, reaching hundreds of thousands of TPS. In terms of consumption, cluster consumption is generally adopted: an application cluster shares one consumer ID, and its instances share the messages of that consumer group. The subscription ratio of each message is generally low, normally not exceeding 10.

However, in IoT messaging scenarios, many conditions are different or even opposite. IoT message clients are miniature devices with limited computing and storage resources. The message server may be deployed in an edge environment on poorly provisioned hardware. Moreover, IoT devices generally connect over public networks, which are particularly complex, and the devices are often on the move, so connections are sometimes dropped or weak and network quality is poor. The number of message client instances corresponds to the number of IoT devices, which can reach hundreds of millions, far more than the number of servers of large Internet companies. The message TPS of each device is not high, but a single message may be received by millions of devices at the same time, so the subscription ratio is particularly high.

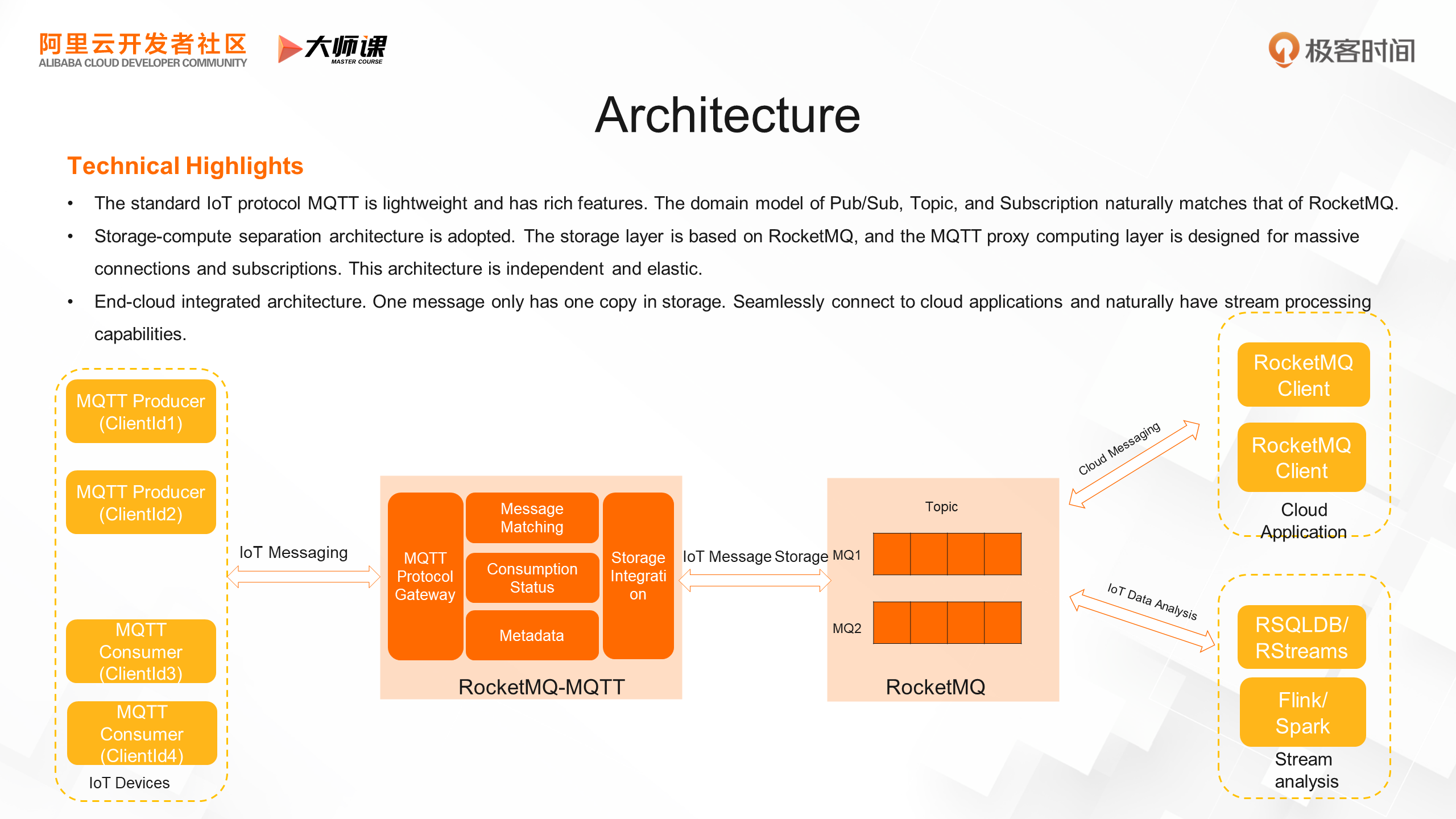

As we can see, the messaging technology required by the IoT is very different from classic messaging design. Next, let's take a look at what RocketMQ 5.0 has done to address the messaging scenarios of the IoT. In RocketMQ 5.0, we released a sub-product called RocketMQ-MQTT, which has three technical features:

First, it uses MQTT, the standard IoT protocol, which is designed for the weak network environments and low computing power of the IoT. The protocol is simple yet feature-rich, supporting multiple subscription modes and multiple QoS levels: at most once, at least once, and exactly once. Its domain model is built on concepts such as message, topic, publish, and subscribe, which map closely onto RocketMQ and lay the foundation for a cloud-integrated RocketMQ product form.

Second, it uses a storage-compute separation architecture. The RocketMQ broker serves as the storage layer, while the MQTT-related domain logic is implemented in the MQTT proxy layer, which is optimized for massive connections, subscription relationships, and real-time push. The proxy layer can scale independently according to the load of IoT services: if the number of connections increases, you only need to add proxy nodes.

Third, it adopts an end-cloud integrated architecture. Because the MQTT and RocketMQ domain models are close and RocketMQ serves as the storage layer, only one copy of each message is stored, and it can be consumed by both IoT devices and cloud applications. In addition, RocketMQ is itself a natural stream store, so a stream computing engine can seamlessly analyze IoT data in real time.

Next, we will learn more about the implementation of the IoT technology of RocketMQ from several key technical points.

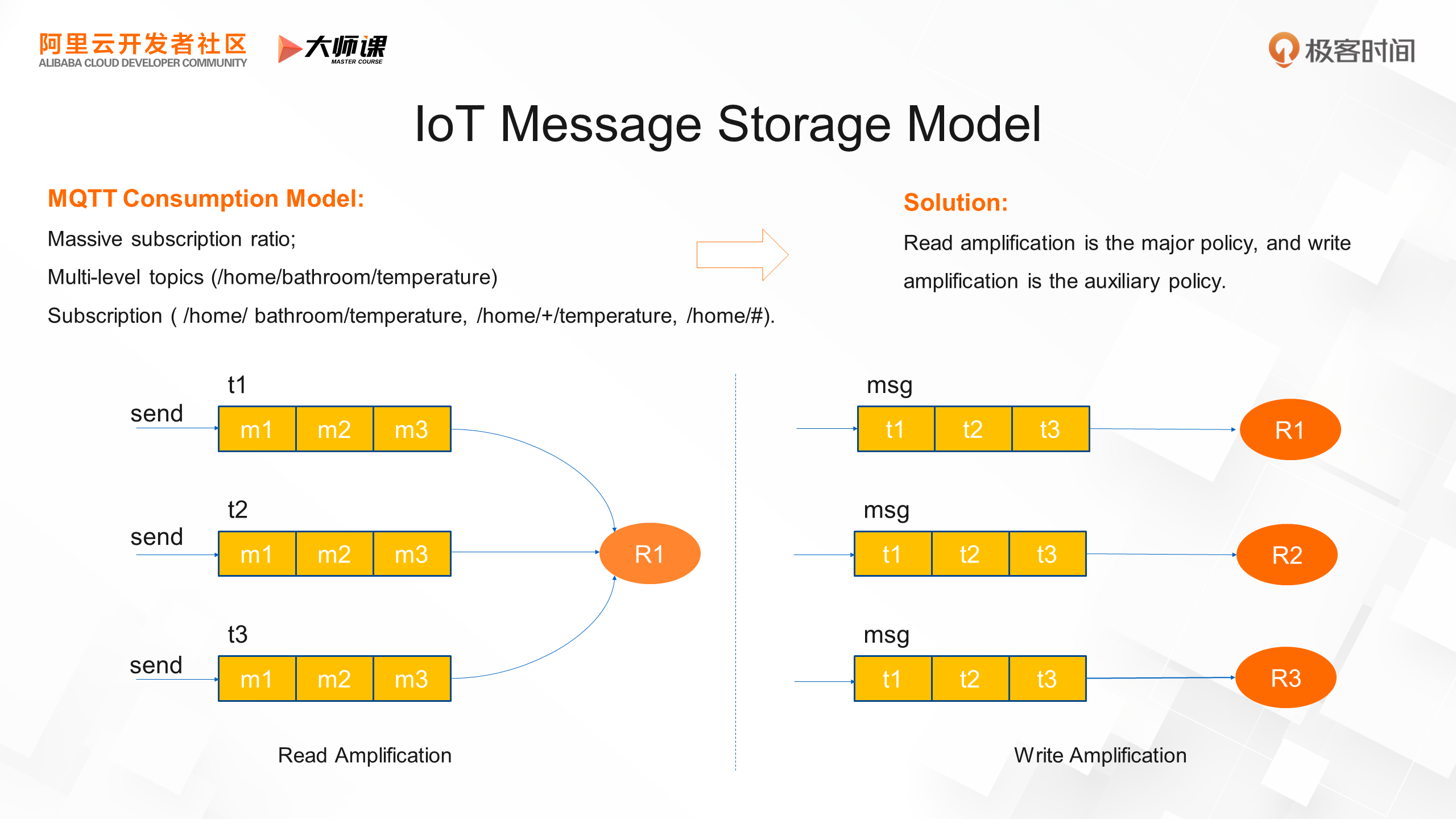

The first problem to be solved is the storage model of IoT messages. In the publish-subscribe business model, two storage models are commonly used. One is read amplification, where each message is written to only one common queue, and all consumers read this shared queue and maintain their own consumption offsets. The other model is write amplification, where each consumer has its own queue, and each message must be distributed to the target consumer's queue, which the consumer reads exclusively.

In an IoT scenario, where a message may be consumed by millions of devices, it is clear that choosing a read amplification model can significantly reduce storage costs and improve performance.
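The storage cost difference between the two models can be illustrated with a toy in-memory sketch. The class names `ReadAmplifiedStore` and `WriteAmplifiedStore` are hypothetical and chosen only for this illustration; they are not RocketMQ APIs.

```python
from collections import defaultdict


class ReadAmplifiedStore:
    """One shared queue; each consumer only keeps its own offset."""

    def __init__(self):
        self.queue = []                    # single shared copy of every message
        self.offsets = defaultdict(int)    # consumer -> next position to read

    def publish(self, message):
        self.queue.append(message)

    def poll(self, consumer):
        start = self.offsets[consumer]
        self.offsets[consumer] = len(self.queue)
        return self.queue[start:]

    def stored_entries(self):
        return len(self.queue)


class WriteAmplifiedStore:
    """One private queue per consumer; every message is copied to each queue."""

    def __init__(self, consumers):
        self.queues = {consumer: [] for consumer in consumers}

    def publish(self, message):
        for queue in self.queues.values():
            queue.append(message)

    def poll(self, consumer):
        messages, self.queues[consumer] = self.queues[consumer], []
        return messages

    def stored_entries(self):
        return sum(len(queue) for queue in self.queues.values())


devices = [f"device-{i}" for i in range(1000)]
shared = ReadAmplifiedStore()
fanout = WriteAmplifiedStore(devices)
for store in (shared, fanout):
    store.publish("firmware-update")

print(shared.stored_entries(), fanout.stored_entries())
```

With 1,000 subscribers, one published message occupies one entry in the shared queue but 1,000 entries in the per-consumer queues, and the gap grows linearly with the number of devices.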

However, read amplification alone cannot fully meet the requirements. The MQTT protocol has its own particularities: topics are multi-level, and subscriptions can be either exact or wildcard-based. For example, in a home scenario, we might define a multi-level topic such as home/bathroom/temperature. A consumer can subscribe directly to the full multi-level topic, subscribe to all temperature messages, or subscribe to everything under the first-level topic home.

Consumers who subscribe directly to a full multi-level topic can use read amplification and read the shared queue of that topic. Consumers who subscribe with a wildcard cannot locate a queue from the topic of the message. Therefore, when a message is stored, it is also written to a wildcard queue according to each matching wildcard subscription, so that the consumer can read messages from the wildcard queue it subscribes to.

This is the storage model used by RocketMQ: read amplification is the primary policy and write amplification is the auxiliary policy.
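The hybrid policy can be sketched as follows. The matcher follows the standard MQTT wildcard rules (`+` matches one level, `#` matches all remaining levels), ignoring special cases such as `$`-prefixed topics. `HybridQueueStore` is a hypothetical in-memory stand-in, and the filters `+/+/temperature` and `home/#` are assumed examples of the two wildcard subscriptions described above. For brevity the queues hold payloads directly; a real broker would hold index entries instead.

```python
from collections import defaultdict


def topic_matches(topic_filter, topic):
    """Return True if an MQTT topic filter matches a concrete topic name."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            return True                  # matches all remaining levels
        if i >= len(topic_levels):
            return False                 # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


class HybridQueueStore:
    def __init__(self):
        self.queues = defaultdict(list)  # queue name -> payloads
        self.wildcard_filters = set()    # wildcard subscriptions seen so far

    def subscribe(self, topic_filter):
        if "+" in topic_filter or "#" in topic_filter:
            self.wildcard_filters.add(topic_filter)
        return topic_filter              # name of the queue the consumer reads

    def publish(self, topic, payload):
        # Read amplification: one shared queue per exact topic.
        self.queues[topic].append(payload)
        # Write amplification: one extra write per matching wildcard queue.
        for topic_filter in self.wildcard_filters:
            if topic_matches(topic_filter, topic):
                self.queues[topic_filter].append(payload)


store = HybridQueueStore()
for topic_filter in ("home/bathroom/temperature", "+/+/temperature", "home/#"):
    store.subscribe(topic_filter)
store.publish("home/bathroom/temperature", "21.5")
store.publish("home/kitchen/humidity", "40")
```

The number of extra writes depends on the number of distinct wildcard filters, not on the number of devices, which is why write amplification stays an auxiliary cost in this design.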

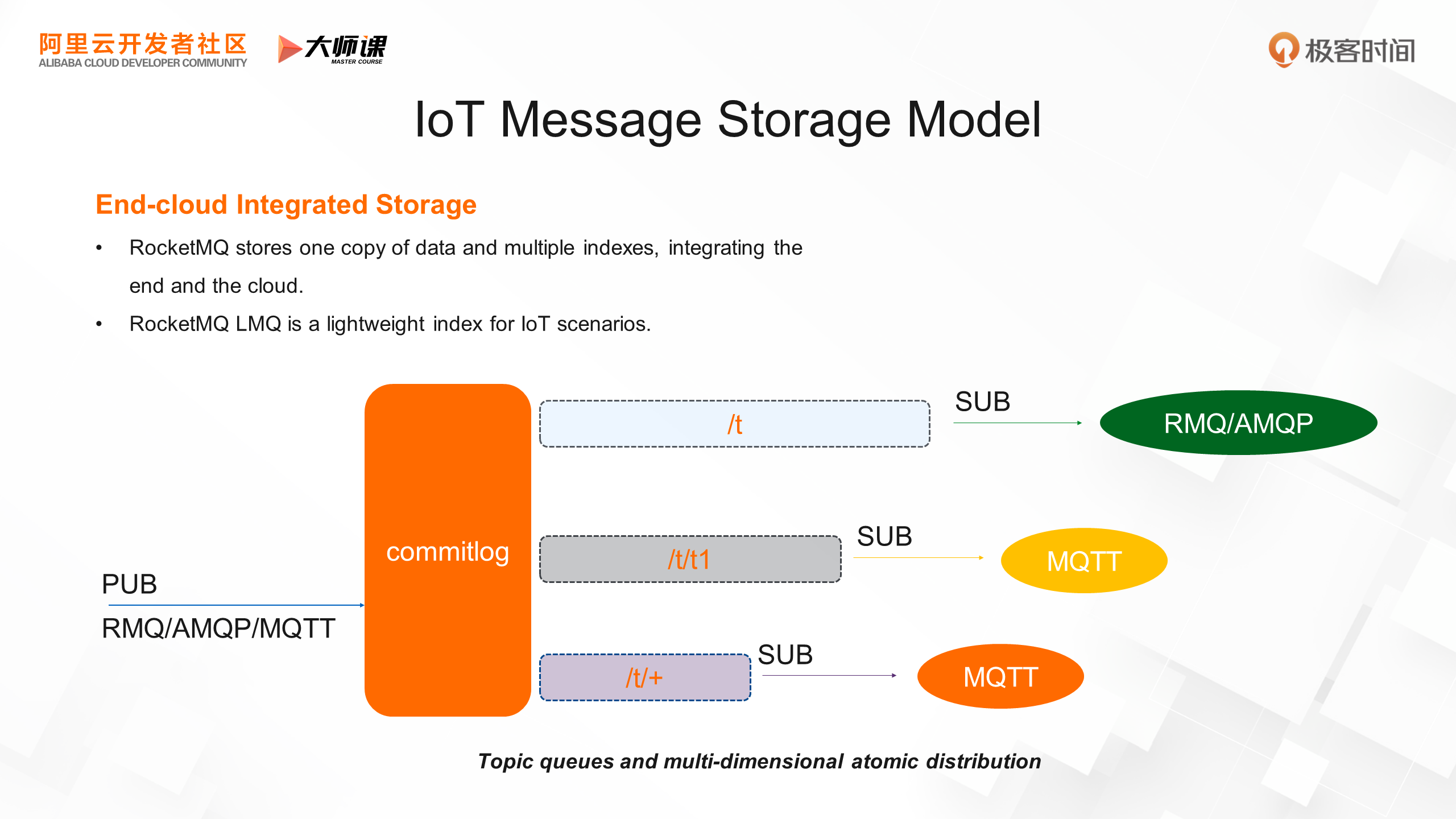

Based on the preceding analysis, we designed the RocketMQ end-cloud integrated storage model shown in the figure.

Messages can come from various access scenarios, such as MQ/AMQP on the server side and MQTT on the device side, but only one copy is written to the commit log. Queue indexes are then dispatched for each consumption scenario. For example, in the server scenario (MQ/AMQP), traditional servers consume messages from the level-1 topic queue, and in the device scenario, messages are consumed based on MQTT multi-level topics and wildcard subscriptions.
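A minimal sketch of "one stored copy, several queue indexes" might look like this. `CommitLogStore` is a hypothetical in-memory model, and it assumes that the level-1 topic is simply the first segment of the MQTT topic name; wildcard queues are left out to keep the example short.

```python
from collections import defaultdict


class CommitLogStore:
    def __init__(self):
        self.commit_log = []                    # the only copy of each message
        self.queue_index = defaultdict(list)    # queue name -> commit log positions

    def append(self, topic, body):
        position = len(self.commit_log)
        self.commit_log.append((topic, body))
        level1 = topic.split("/", 1)[0]
        # Dispatch index entries: the level-1 queue serves server-side consumers,
        # the full multi-level queue serves MQTT devices.
        for queue in {level1, topic}:
            self.queue_index[queue].append(position)
        return position

    def read(self, queue, offset=0):
        positions = self.queue_index[queue][offset:]
        return [self.commit_log[p][1] for p in positions]


store = CommitLogStore()
store.append("home/bathroom/temperature", "21.5")
store.append("home/kitchen/temperature", "19.0")

print(store.read("home"))                        # server-side view
print(store.read("home/kitchen/temperature"))    # device-side view
```

Both views resolve to positions in the same commit log, so adding a consumption scenario costs only small index entries rather than another copy of the message body.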

In this way, based on the same set of storage engines, we can support both server-side application integration and messaging in IoT scenarios, achieving end-cloud integration.

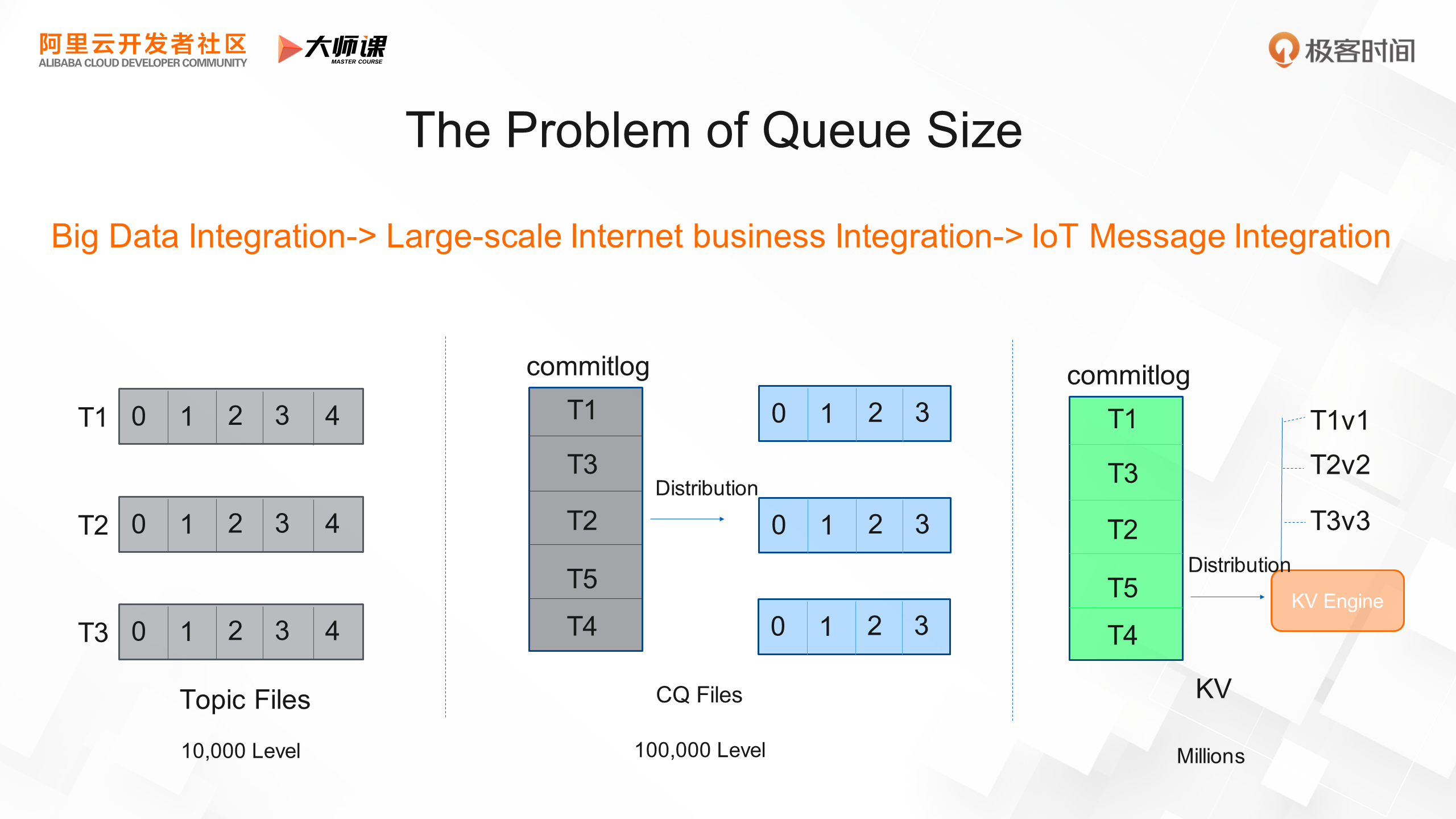

We all know that in a message queue like Kafka, each topic is stored as independent files. As the number of topics increases, the number of message files also increases, causing sequential writes to degenerate into random writes, which significantly reduces performance. RocketMQ improves on this design. It stores all message contents in a single commit log and uses CQ (ConsumeQueue) index files to represent the message queues of each topic. Since CQ index data is relatively small, the impact of additional files on I/O is much smaller, allowing the number of queues to reach 100,000. However, for terminal queues, 100,000 is still too few. We want to raise the number of queues to one million, so we introduced the RocksDB engine to dispatch CQ indexes.

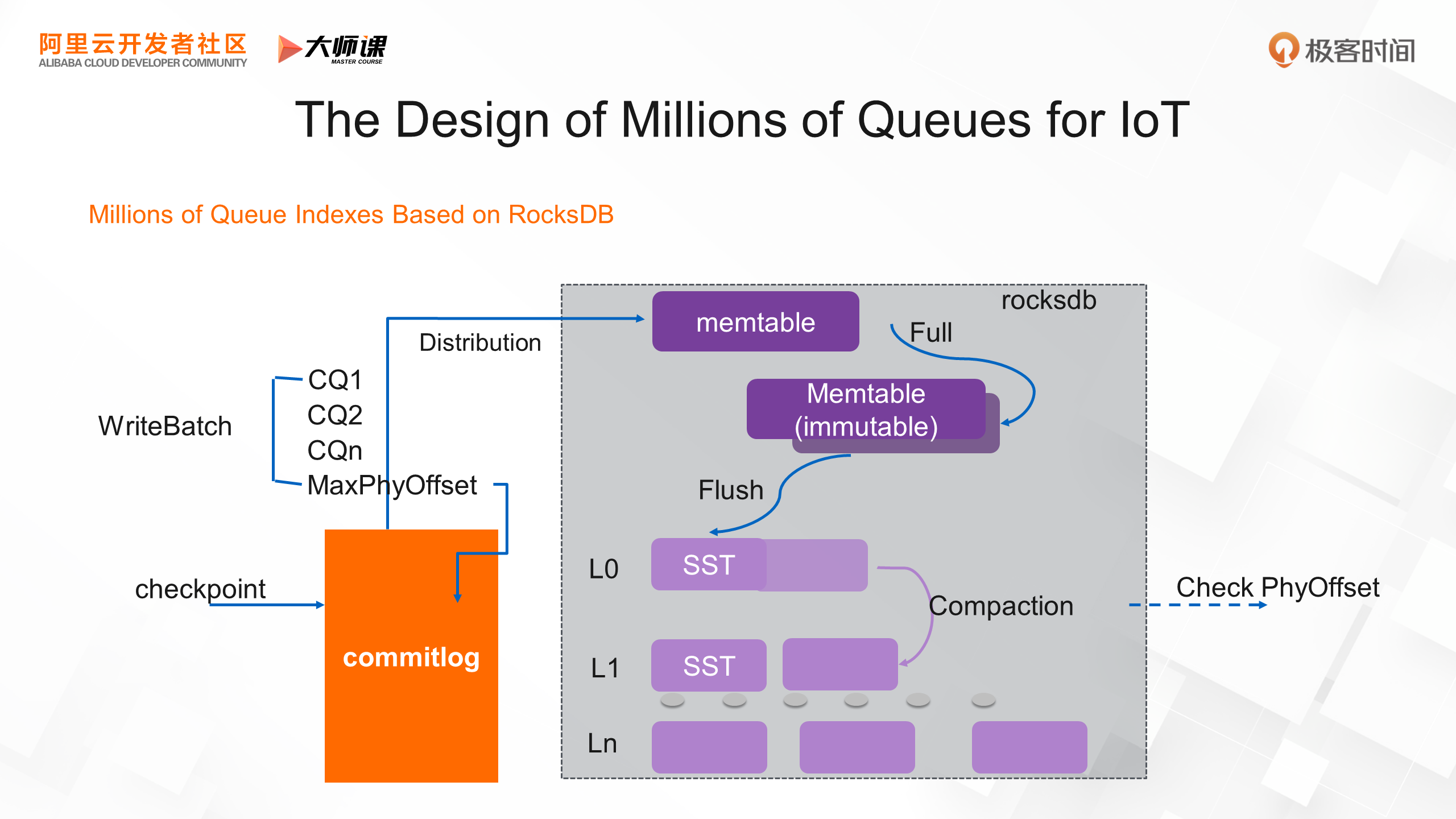

RocksDB is a widely used standalone KV storage engine with high-performance sequential writes. Since the commit log already stores the ordered message stream, we can disable the WAL in RocksDB and store the CQ index in RocksDB. Dispatch uses the atomic WriteBatch feature of RocksDB: the current MaxPhyOffset is written to RocksDB in the same batch as the index entries, and because RocksDB guarantees that the batch is stored atomically, MaxPhyOffset can serve as the checkpoint for recovery. Finally, we also provide a custom compaction implementation that checks PhyOffset to clean up dirty data that has already been deleted.
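The checkpoint idea can be sketched without a real storage engine. In the sketch below, `AtomicKV` is a hypothetical in-memory stand-in for a KV engine with atomic batches (it is not the RocksDB API), physical offsets are simplified to list positions in the commit log, and the key layout is an assumption made for illustration.

```python
MAX_PHY_OFFSET = "max_phy_offset"   # hypothetical checkpoint key


class AtomicKV:
    """Stand-in for a KV engine whose batches apply all-or-nothing."""

    def __init__(self):
        self.data = {}

    def write_batch(self, entries):
        staged = dict(self.data)
        staged.update(entries)
        self.data = staged           # swapped in one step: every entry or none


def dispatch(kv, phy_offset, queue):
    """Write the CQ index entry and the checkpoint in the same atomic batch."""
    queue_offset = kv.data.get(("size", queue), 0)
    kv.write_batch({
        ("cq", queue, queue_offset): phy_offset,   # index entry
        ("size", queue): queue_offset + 1,         # next logical offset
        MAX_PHY_OFFSET: phy_offset + 1,            # checkpoint moves with the index
    })


def recover(kv, commit_log):
    """Replay only the commit log entries that were never dispatched."""
    start = kv.data.get(MAX_PHY_OFFSET, 0)
    for phy_offset in range(start, len(commit_log)):
        queue, _body = commit_log[phy_offset]
        dispatch(kv, phy_offset, queue)
    return len(commit_log) - start


# Three messages were stored, but the process stopped after dispatching two.
commit_log = [("home", "a"), ("home", "b"), ("office", "c")]
kv = AtomicKV()
dispatch(kv, 0, "home")
dispatch(kv, 1, "home")
replayed = recover(kv, commit_log)
```

Because the index entry and the checkpoint land in the same batch, the checkpoint can never run ahead of the index, so recovery neither skips nor duplicates entries. That is what makes a separate write-ahead log for the index unnecessary in this sketch.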

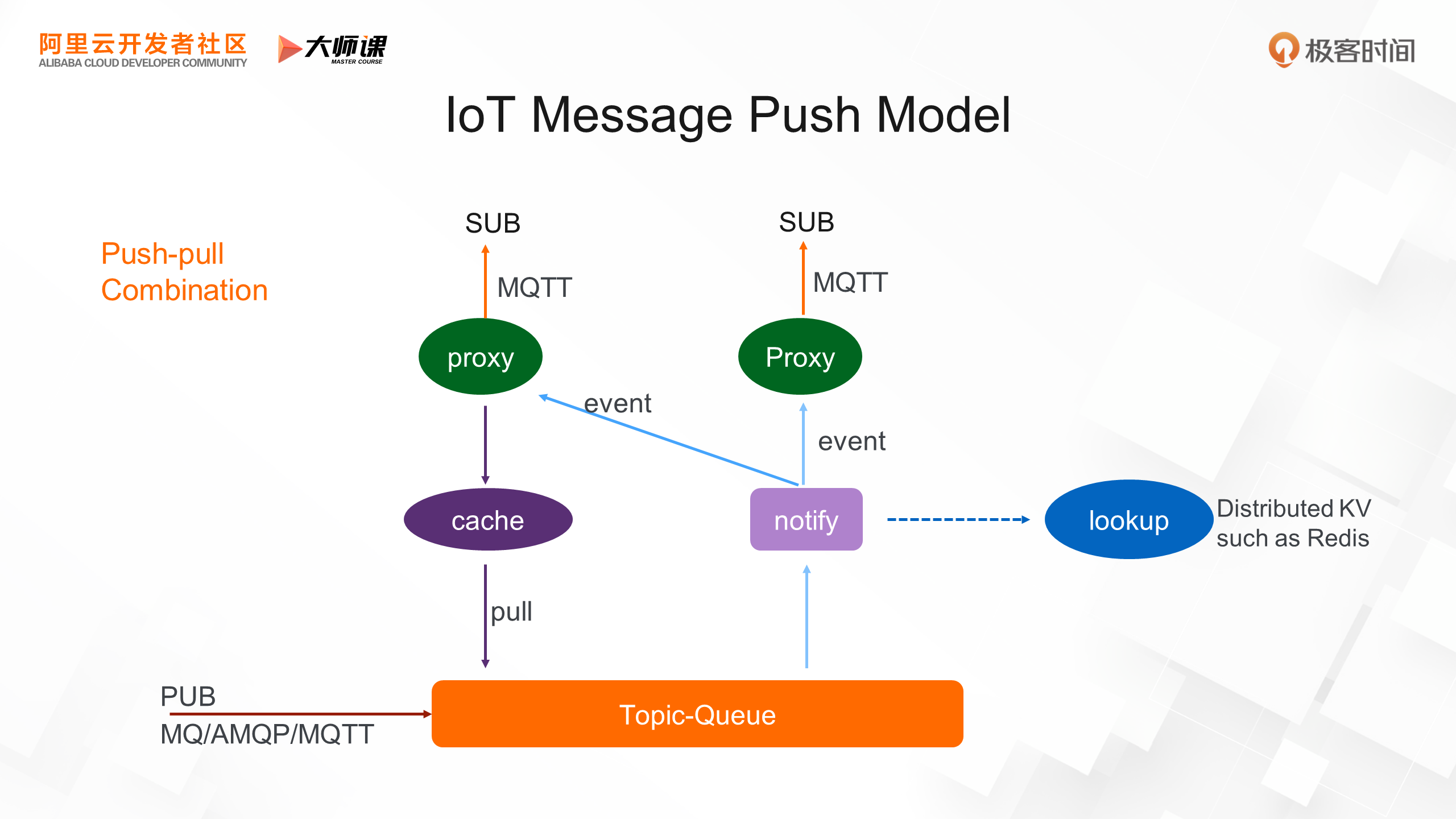

After introducing the underlying queue storage model, let's look at how matching lookup and reliable delivery are achieved. In the classic consumption mode of Message Queue for Apache RocketMQ, consumers initiate long-polling requests directly from the client to read the corresponding topic queue precisely. In MQTT scenarios, however, long polling cannot be used because of the large number of clients and subscription relationships, which makes consumption more complex. A combined push-pull model is used instead.

The figure shows the push-pull model. The terminal connects to a proxy node using the MQTT protocol. Messages can come from various scenarios (MQ/AMQP/MQTT). After a message is stored in the topic queue, a notify logic module detects the arrival of the new message in real time. Then, a message event (that is, the topic name of the message) is generated and pushed to the gateway node. The gateway node performs internal matching against the subscriptions of its connected terminals to find the terminals that match. Then, a pull request is triggered to read the message from the storage layer and push it to the terminal.
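The flow described above can be sketched with two small in-memory classes. `Storage` and `Gateway` are hypothetical names, the notify step is reduced to a direct method call, and only exact-topic subscriptions are matched to keep the example short.

```python
from collections import defaultdict


class Storage:
    def __init__(self):
        self.queues = defaultdict(list)   # topic -> stored payloads
        self.gateways = []                # nodes that receive message events

    def put(self, topic, payload):
        self.queues[topic].append(payload)
        for gateway in self.gateways:     # notify: push only the topic name
            gateway.on_event(topic)

    def pull(self, topic, offset):
        return self.queues[topic][offset:]


class Gateway:
    def __init__(self, storage):
        self.storage = storage
        self.subscriptions = defaultdict(set)   # topic -> connected terminals
        self.offsets = {}                       # (terminal, topic) -> next offset
        self.delivered = defaultdict(list)      # terminal -> pushed payloads
        storage.gateways.append(self)

    def subscribe(self, terminal, topic):
        self.subscriptions[topic].add(terminal)
        # Start from the current end of the queue: only new messages are pushed.
        self.offsets[(terminal, topic)] = len(self.storage.queues[topic])

    def on_event(self, topic):
        # Match locally, then pull from storage and push to each terminal.
        for terminal in self.subscriptions.get(topic, ()):
            key = (terminal, topic)
            messages = self.storage.pull(topic, self.offsets[key])
            self.offsets[key] += len(messages)
            self.delivered[terminal].extend(messages)


storage = Storage()
gateway_a, gateway_b = Gateway(storage), Gateway(storage)
gateway_a.subscribe("lamp-1", "home/livingroom/light")
gateway_b.subscribe("sensor-9", "home/bathroom/temperature")
storage.put("home/livingroom/light", "on")
storage.put("home/livingroom/light", "off")
```

The event carries no payload, so a gateway with no matching terminal drops it cheaply, while a matching gateway pulls by offset and can therefore re-read after a failed push.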

A crucial problem is how the notify module knows which gateway nodes have terminals interested in a given message. This is the key matching lookup problem. There are two common solutions: first, simply broadcast the events; second, store online subscription relationships centrally (the lookup module in the figure), perform the matching lookup there, and then push events only to the relevant nodes. Event broadcasting appears to have scalability issues, but in practice its performance is not bad, because the data pushed is very small: just the topic name. In addition, message events of the same topic can be merged into one event. Broadcasting is the approach we use by default in production. Storing online subscription relationships centrally, for example in RDS or Redis, is also common practice. However, keeping that data consistent in real time and performing the matching lookup add RT overhead to the entire real-time message path.
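Merging events of the same topic before broadcasting can be sketched in a few lines. `EventBroadcaster` is a hypothetical name, and each gateway is modeled as a plain list that acts as its inbox.

```python
class EventBroadcaster:
    def __init__(self, gateway_inboxes):
        self.gateway_inboxes = gateway_inboxes
        self.pending = []                 # one entry per stored message

    def on_message_stored(self, topic):
        self.pending.append(topic)

    def flush(self):
        # dict.fromkeys drops duplicates while keeping first-seen order,
        # so a burst on one topic becomes a single event.
        events = list(dict.fromkeys(self.pending))
        self.pending.clear()
        for inbox in self.gateway_inboxes:
            inbox.extend(events)          # broadcast to every gateway
        return events


inbox_a, inbox_b = [], []
broadcaster = EventBroadcaster([inbox_a, inbox_b])
for topic in ["home/a", "home/b", "home/a", "home/a"]:
    broadcaster.on_message_stored(topic)
merged = broadcaster.flush()
```

Four stored messages produce only two events per gateway here. Since the gateway pulls by offset after receiving an event, one event per topic is enough to deliver every pending message on that topic.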

The figure also includes a cache module that caches messages, so that in scenarios with a large broadcast ratio, each terminal does not have to read the same data from the storage layer.
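The effect of such a cache can be sketched as follows. `CachedReader` is a hypothetical name, and the sketch omits eviction, which a real cache would need in order to bound memory.

```python
class CachedReader:
    def __init__(self, read_from_storage):
        self.read_from_storage = read_from_storage
        self.cache = {}              # (topic, offset) -> message
        self.storage_reads = 0       # counts reads that reached the storage layer

    def read(self, topic, offset):
        key = (topic, offset)
        if key not in self.cache:
            self.storage_reads += 1
            self.cache[key] = self.read_from_storage(topic, offset)
        return self.cache[key]


log = {"alerts": ["storm warning"]}
reader = CachedReader(lambda topic, offset: log[topic][offset])

# 10,000 terminals subscribed to the same topic all need the same message.
results = [reader.read("alerts", 0) for _ in range(10_000)]
print(reader.storage_reads)
```

All 10,000 pushes are served by a single read from the storage layer, which is the point of caching in large broadcast scenarios.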

In this course, we explored the technical architecture of a typical IoT scenario, where the message queue plays two crucial roles: connecting IoT applications and completing their workflow, and connecting cloud-based data processing solutions through its stream storage capability. We then looked at the key technical challenges of the IoT scenario: limited resources, weak network environments, and tens of billions of device connections. Finally, based on these requirements, we learned how the IoT message technology of RocketMQ 5.0 achieves end-cloud integration.

In the next course, we will delve into the event-driven architecture of RocketMQ 5.0 in the context of the cloud era.
