By Jiao Xian

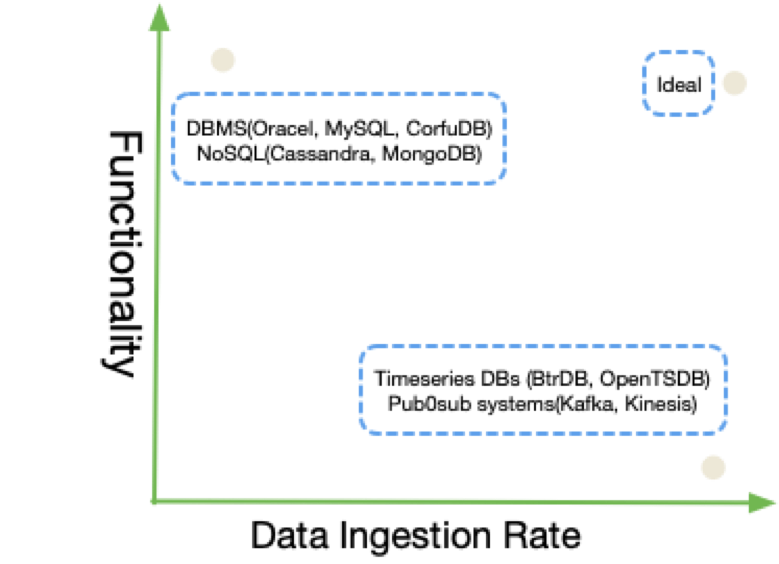

More and more applications collect tens of millions of data points per second in scenarios such as terminal IoT network monitoring, smart homes, and data centers. The collected data is used for online query display, monitoring, offline root cause analysis, and system optimization. These scenarios require high-speed writes, low-latency online queries, and low-overhead offline queries, and existing data structures can barely meet all three requirements. Some focus on high-speed writes and simple queries, while others focus on complex queries, such as ad hoc queries, offline queries, and materialized views. The latter increase maintenance overhead and reduce write performance.

Confluo is designed to solve these challenges.

Confluo can address several of these challenges at the same time because it makes trade-offs tailored to specific scenarios. A typical scenario is telemetry data.

Telemetry data has the following important features:

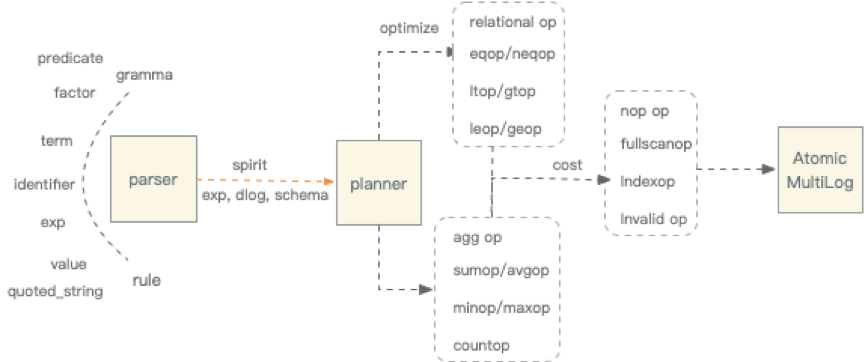

Based on these data features, Confluo implements an innovative data structure that supports high write throughput together with both online and offline queries.

Confluo is designed for real-time monitoring and data stream analysis scenarios, such as network monitoring and diagnosis frameworks, time series databases, and pub/sub messaging systems. The main features of Confluo include:

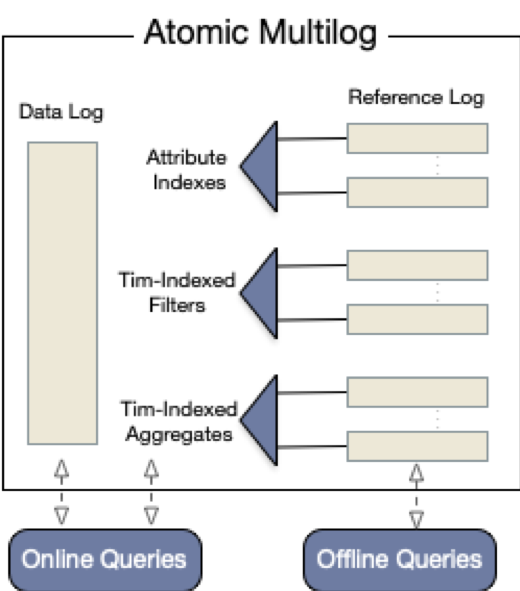

The basic storage abstraction in Confluo is an Atomic MultiLog (hereinafter referred to as "AM"), which relies on two critical technologies:

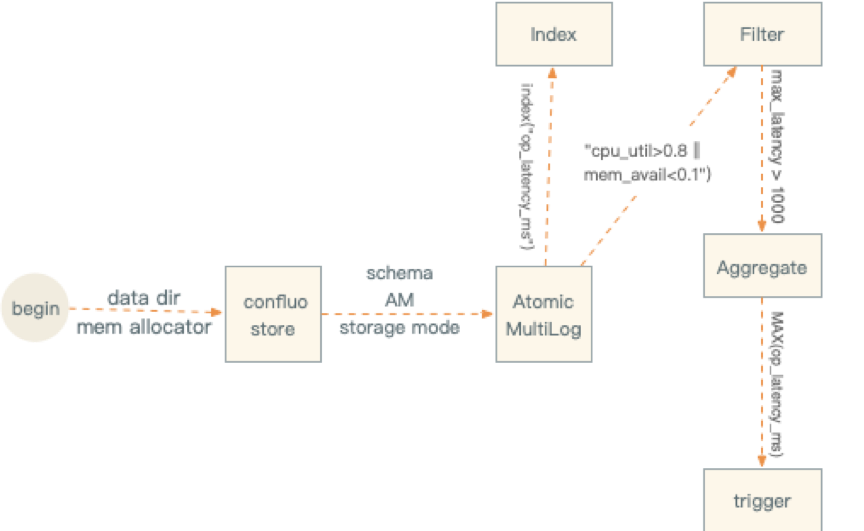

An AM is similar in interface to a database table. Applications create an AM with a pre-specified schema and write data streams that conform to the schema. Applications then create indexes, filters, aggregates, and triggers on the AM to enable various monitoring and diagnosis functionalities.

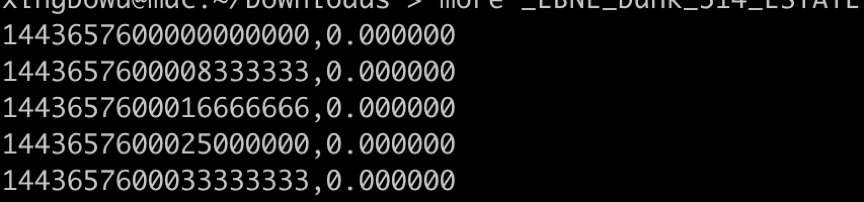

    {
      timestamp: ULONG,
      op_latency_ms: DOUBLE,
      cpu_util: DOUBLE,
      mem_avail: DOUBLE,
      log_msg: STRING(100)
    }

- Confluo also requires each record to have an 8-byte timestamp. If an application does not assign write timestamps, Confluo internally assigns one.
- The metric data is of double and string types.

Create an AM with a specified schema. Three storage modes are available for an AM: IN_MEMORY, DURABLE, and DURABLE_RELAXED.

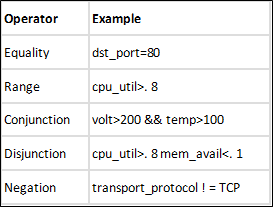
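As a rough illustration of what a pre-specified schema implies, the sketch below packs one record of the schema above into a fixed-width byte string with Python's standard `struct` module. The byte layout, the function names, and the nanosecond timestamp are assumptions made for illustration; they are not Confluo's actual storage format or API.

```python
import struct
import time

# Assumed layout: little-endian, no padding.
# Q = 8-byte unsigned timestamp, d = 8-byte double, 100s = 100-byte string.
RECORD = struct.Struct("<Qddd100s")


def pack_record(op_latency_ms, cpu_util, mem_avail, log_msg, timestamp=None):
    """Serialize one record; assign a timestamp if the caller gave none."""
    if timestamp is None:
        timestamp = time.time_ns()
    msg = log_msg.encode("utf-8")[:100]  # truncate to the declared width
    return RECORD.pack(timestamp, op_latency_ms, cpu_util, mem_avail, msg)


def unpack_record(buf):
    """Inverse of pack_record; strips the zero padding from the string field."""
    ts, latency, cpu, mem, msg = RECORD.unpack(buf)
    return ts, latency, cpu, mem, msg.rstrip(b"\x00").decode("utf-8", "ignore")


buf = pack_record(12.5, 0.42, 0.80, "request served", timestamp=1)
print(len(buf))            # 8 + 3 * 8 + 100 bytes
print(unpack_record(buf))
```

With a fixed record width, the position of any record follows directly from its offset in the log, which is one reason a schema declared up front suits an append-only design.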

Perform basic operations on the AM. Define indexes, filters, aggregates, and triggers for the AM.

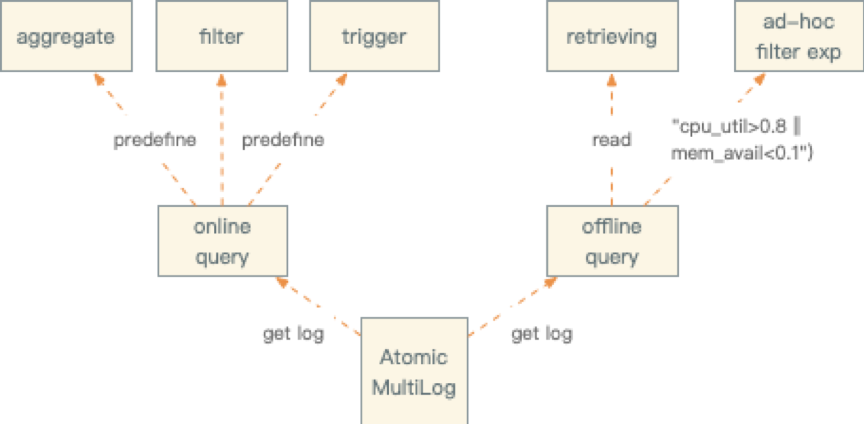

Queries in Confluo can either be online or offline, the difference being that online queries require pre-defined rules while offline queries do not.
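The distinction can be sketched with a toy log in plain Python. Pre-defined rules (a filter, an aggregate over that filter, and a trigger on the aggregate) are evaluated as each record is written, so an online query only reads what was already computed, while an offline query evaluates an arbitrary predicate at query time. The class, its method names, and the rule names are hypothetical and do not reproduce Confluo's API; evaluating the trigger on every append is a simplification.

```python
class ToyLog:
    """Toy model of write-time rules versus ad hoc queries."""

    def __init__(self):
        self.records = []
        self.filters = {}     # name -> (predicate, offsets of matching records)
        self.aggregates = {}  # name -> {"filter", "field", "max"}
        self.triggers = {}    # name -> (aggregate name, threshold)
        self.alerts = []      # (trigger name, offset that raised it)

    def add_filter(self, name, predicate):
        self.filters[name] = (predicate, [])

    def add_max_aggregate(self, name, filter_name, field):
        self.aggregates[name] = {"filter": filter_name, "field": field, "max": None}

    def add_trigger(self, name, aggregate_name, threshold):
        self.triggers[name] = (aggregate_name, threshold)

    def append(self, record):
        """Write path: store the record, then update every pre-defined rule."""
        offset = len(self.records)
        self.records.append(record)
        for fname, (predicate, offsets) in self.filters.items():
            if not predicate(record):
                continue
            offsets.append(offset)
            for agg in self.aggregates.values():
                if agg["filter"] == fname:
                    value = record[agg["field"]]
                    if agg["max"] is None or value > agg["max"]:
                        agg["max"] = value
        for tname, (aname, threshold) in self.triggers.items():
            current = self.aggregates[aname]["max"]
            if current is not None and current > threshold:
                self.alerts.append((tname, offset))
        return offset

    def online_query(self, filter_name):
        """Online: read the offsets a pre-defined filter already collected."""
        _, offsets = self.filters[filter_name]
        return [self.records[o] for o in offsets]

    def offline_query(self, predicate):
        """Offline: no pre-defined rule, so evaluate the predicate on demand."""
        return [r for r in self.records if predicate(r)]


log = ToyLog()
log.add_filter("low_resources", lambda r: r["cpu_util"] > 0.8 or r["mem_avail"] < 0.1)
log.add_max_aggregate("max_latency_ms", "low_resources", "op_latency_ms")
log.add_trigger("high_latency", "max_latency_ms", 1000.0)
log.append({"op_latency_ms": 12.0, "cpu_util": 0.3, "mem_avail": 0.7})
log.append({"op_latency_ms": 1500.0, "cpu_util": 0.9, "mem_avail": 0.5})
print(log.online_query("low_resources"))
print(log.offline_query(lambda r: r["op_latency_ms"] < 100))
print(log.alerts)
```

The online path pays its cost at write time and answers quickly; the offline path costs nothing at write time but has to do its work when the query arrives. A real system would typically use indexes rather than a full scan for the offline case.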

In addition to raw data, Confluo also needs to store objects such as indexes, pre-defined filters, and aggregates, which increases the storage overhead. This problem can be mitigated by archiving some data to cold devices. Currently, three data archiving methods are supported: periodic archival, forced archival, and allocator-triggered archival (based on memory).
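To make the three archival methods concrete, here is a minimal sketch in which a Python list stands in for memory and another for a cold device. The class, its parameters, and the policy of archiving the oldest records first are assumptions for illustration only; the article does not describe how Confluo selects what to archive.

```python
class ToyArchiver:
    """Toy model of periodic, forced, and allocator-triggered archival."""

    def __init__(self, memory_limit, period_s):
        self.hot = []                     # records held in memory, oldest first
        self.cold = []                    # stands in for a cold storage device
        self.memory_limit = memory_limit  # max number of records kept in memory
        self.period_s = period_s          # interval between periodic runs
        self.last_run = 0.0

    def _archive(self, count):
        """Move the `count` oldest in-memory records to cold storage."""
        count = max(0, min(count, len(self.hot)))
        self.cold.extend(self.hot[:count])
        del self.hot[:count]
        return count

    def force_archive(self):
        """Forced archival: the caller asks for everything to be moved now."""
        return self._archive(len(self.hot))

    def tick(self, now):
        """Periodic archival: runs only once the period has elapsed."""
        if now - self.last_run >= self.period_s:
            self.last_run = now
            return self._archive(len(self.hot))
        return 0

    def append(self, record):
        """Allocator-triggered archival: make room when memory is full."""
        if len(self.hot) >= self.memory_limit:
            self._archive(len(self.hot) - self.memory_limit + 1)
        self.hot.append(record)


archiver = ToyArchiver(memory_limit=2, period_s=60)
for name in ("a", "b", "c"):
    archiver.append(name)
print(archiver.hot, archiver.cold)
```

In this toy, the third append finds memory full and pushes the oldest record out before storing the new one, which is the memory-based case; `tick` and `force_archive` cover the other two.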

Atomic MultiLog

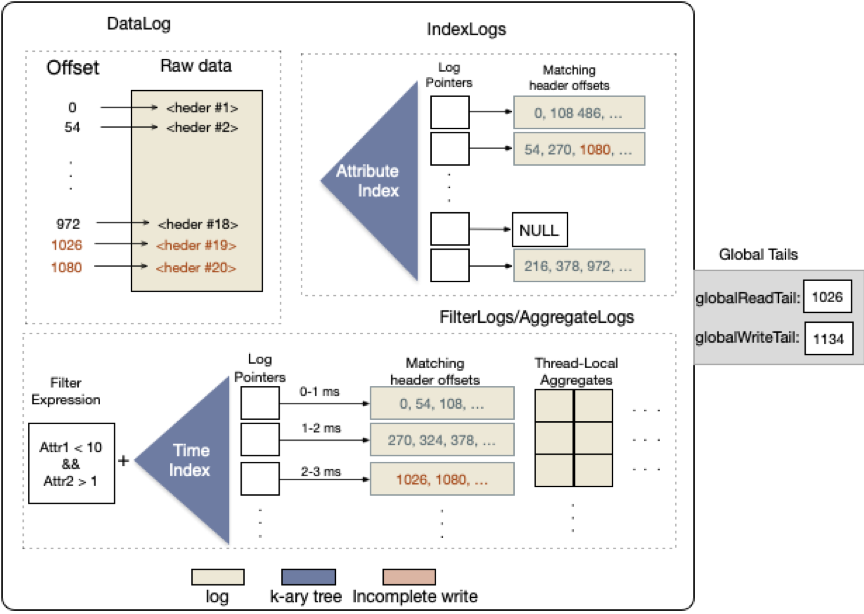

Atomic MultiLogs are the core of the entire system. An Atomic MultiLog mainly consists of DataLogs, IndexLogs, FilterLogs, and AggregateLogs, together with logs that describe how the entire collection of logs is updated as a single atomic operation.
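One way to picture "updated as a single atomic operation" is a pair of markers: a writer first reserves an offset, then fills the data log and the derived logs, and only then advances a marker that readers respect. The sketch below is a single-threaded toy of that idea. The class, the `write_tail` and `read_tail` names, and the bucket index are illustrative assumptions, and plain Python assignments stand in for the hardware atomic instructions a real implementation would need.

```python
class ToyAtomicMultiLog:
    """Toy model: a data log and an index log that become visible together."""

    def __init__(self):
        self.data_log = []    # raw records, addressed by offset
        self.index_log = {}   # cpu_util bucket -> offsets of matching records
        self.write_tail = 0   # next offset a writer may reserve
        self.read_tail = 0    # records below this offset are visible to readers

    def reserve(self):
        """Step 1: claim the next offset (a fetch-and-add in a real system)."""
        offset = self.write_tail
        self.write_tail += 1
        self.data_log.append(None)
        return offset

    def write(self, offset, record):
        """Step 2: fill the data log and every derived log for this offset."""
        self.data_log[offset] = record
        bucket = int(record["cpu_util"] * 10)
        self.index_log.setdefault(bucket, []).append(offset)

    def publish(self, offset):
        """Step 3: advance the read tail in offset order (a compare-and-swap
        in a real system). Returns False while an earlier write is pending."""
        if self.read_tail != offset:
            return False
        self.read_tail = offset + 1
        return True

    def lookup(self, bucket):
        """Readers follow only index entries that lie below the read tail."""
        offsets = self.index_log.get(bucket, [])
        return [self.data_log[o] for o in offsets if o < self.read_tail]


log = ToyAtomicMultiLog()
first, second = log.reserve(), log.reserve()
log.write(second, {"cpu_util": 0.95})
print(log.publish(second))   # an earlier offset is still unpublished
print(log.lookup(9))         # readers see nothing yet
log.write(first, {"cpu_util": 0.91})
print(log.publish(first))
print(log.lookup(9))
```

Because readers never look past the read tail, they cannot observe a record whose index entry exists but whose publication has not happened, even though the individual logs were updated one after another.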

Confluo is an open-source C++ project. Confluo is available in two modes:

The core innovation of Confluo, released by RISELab, lies in its data structure: the Atomic MultiLog. Confluo supports high-speed concurrent reads and writes. A single core can run 1,000 triggers and 10 filters. By combining the atomic operations available on new hardware with lock-free logs, Confluo supports real-time queries, offline queries, and fast writes at the same time.
