By Jiao Xian

This article describes how InfluxDB stores and indexes time series data. Because the clustered version of InfluxDB has been closed source since version 0.12, the content of this article refers to standalone InfluxDB unless otherwise specified.

In the past three years since the release of InfluxDB, the architecture of its storage engine has experienced several significant changes. This article will briefly describe the evolution process of the InfluxDB storage engine.

1. **LevelDB-based LSMTree scheme**

2. **BoltDB-based mmap COW B+tree scheme**

3. **WAL + TSM file-based scheme** (TSMFile was generally available in Ver.0.9.6; Ver.0.9.5 only provided the prototype)

4. **WAL + TSM file + TSI file-based scheme**

InfluxDB has tried different storage engines, including LevelDB and BoltDB. However, these engines could not fully meet InfluxDB's requirements, for the following reasons:

=> *With LSMTrees inside LevelDB, the cost of deleting data is too high*

=> *In LevelDB, lots of small files will be generated over time*

=> *LevelDB only supports cold backup*

=> *The B+tree write operation inside BoltDB is a throughput bottleneck*

=> *BoltDB does not support compression*

In addition, for consistency of the technology stack and simplicity of deployment (container-based deployment), the InfluxDB team wanted the storage engine, like the upper-layer TSDB engine, to be written in Go. This ruled out RocksDB as a potential choice.

To solve the preceding pain points, the InfluxDB team decided to write their own storage engine.

Before explaining the storage engine inside InfluxDB, let's first review the data model in InfluxDB.

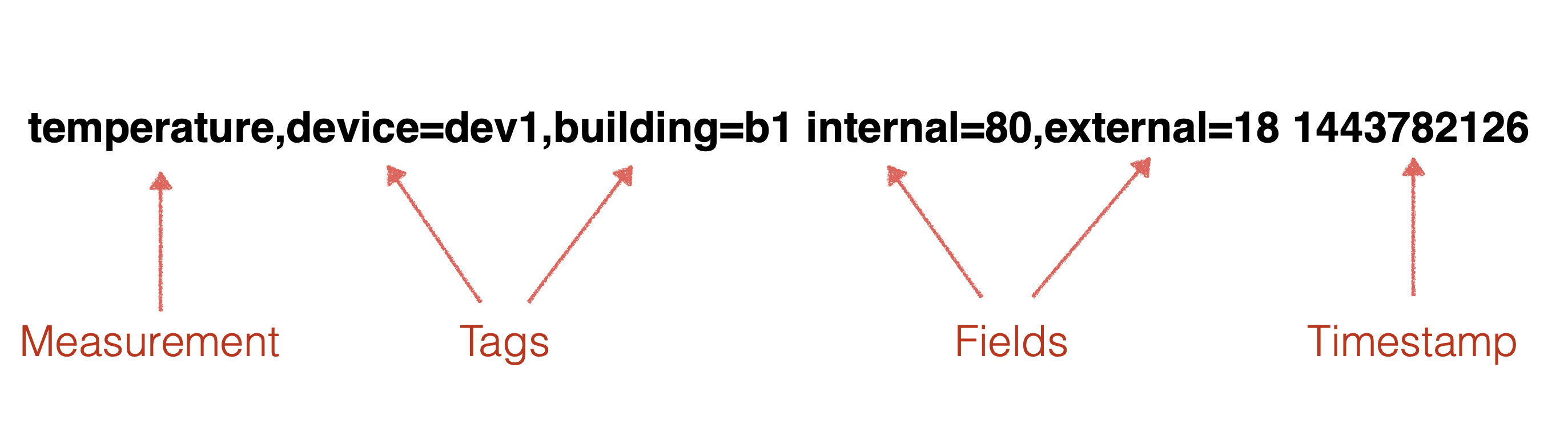

In InfluxDB, time series data supports multi-value models. The following shows a piece of typical data at a specific point in time.

Figure 1

Additionally, InfluxDB has the concept of a database (as in a traditional DBMS) above measurements. Logically, a database can contain multiple measurements. In the standalone implementation of InfluxDB, each database corresponds to a directory in the file system.

A SeriesKey in InfluxDB is similar to the timeline concept in common time series databases. In memory, a SeriesKey is represented as a byte array of the following form, with commas and spaces escaped (github.com/influxdata/influxdb/model#MakeKey()):

{measurement name},{tagK1}={tagV1},{tagK2}={tagV2},...

A SeriesKey cannot be longer than 65,535 bytes.
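To make the key layout concrete, here is a minimal Go sketch of how such a key could be assembled. It is not InfluxDB's `MakeKey()` implementation: `makeSeriesKey` and `escaper` are hypothetical names, and the sketch assumes that tags are sorted by key, that a comma separates the measurement name from the first tag, and that equals signs are escaped alongside commas and spaces.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// escaper backslash-escapes characters that would be ambiguous inside a key.
// Escaping '=' in addition to ',' and ' ' is an assumption of this sketch.
var escaper = strings.NewReplacer(",", `\,`, " ", `\ `, "=", `\=`)

// makeSeriesKey is a hypothetical helper that joins a measurement name and
// its tags into one key. Tags are sorted by key so that the same tag set
// always produces the same bytes.
func makeSeriesKey(measurement string, tags map[string]string) ([]byte, error) {
	keys := make([]string, 0, len(tags))
	for k := range tags {
		keys = append(keys, k)
	}
	sort.Strings(keys)

	var b strings.Builder
	b.WriteString(escaper.Replace(measurement))
	for _, k := range keys {
		b.WriteByte(',')
		b.WriteString(escaper.Replace(k))
		b.WriteByte('=')
		b.WriteString(escaper.Replace(tags[k]))
	}
	// Enforce the 65,535-byte limit mentioned in the text.
	if b.Len() > 65535 {
		return nil, fmt.Errorf("series key is %d bytes, limit is 65535", b.Len())
	}
	return []byte(b.String()), nil
}

func main() {
	key, err := makeSeriesKey("cpu", map[string]string{
		"region": "us-west",
		"host":   "server 01",
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(string(key))
}
```

Sorting the tags means two writes that list the same tags in a different order still map to the same timeline.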

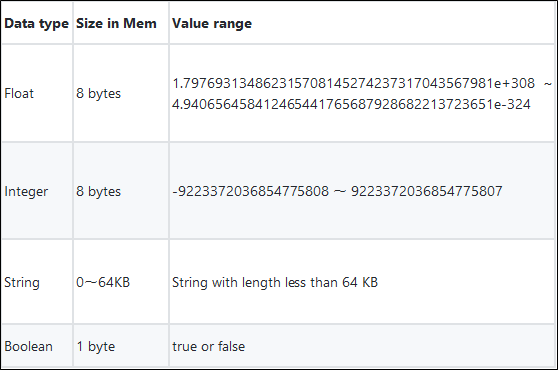

A field value in InfluxDB supports the following data types:

In InfluxDB, the data type of a field must remain unchanged within the following scope; otherwise, a type conflict error is reported when data is written.

The same SeriesKey + the same field + the same shard

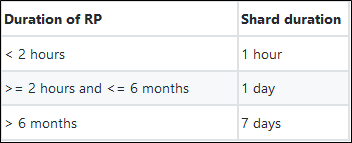

In InfluxDB, only one retention policy (RP) can be specified for a database. An RP describes how long the database keeps its time series data (the duration). The shard concept is derived from the duration: once the duration of a database is determined, the time series data within that duration is further partitioned by time, and each partition is stored as a shard.

The following table shows the relation between the shard duration and the duration of an RP.

By default, if no RP is explicitly specified for a newly created database, the duration of the RP is permanent and the shard duration is 7 days.
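As an illustration of time-based sharding, the following Go sketch maps a timestamp to the shard window that would contain it. The alignment rule (rounding down to a multiple of the shard duration since the Unix epoch) and the name `shardStart` are assumptions made for this example, not a description of InfluxDB's exact behavior.

```go
package main

import (
	"fmt"
	"time"
)

// shardStart returns the start (in Unix nanoseconds) of the shard window that
// contains ts, assuming windows are aligned to multiples of shardDuration
// counted from the Unix epoch. This alignment is an assumption of the sketch.
func shardStart(ts, shardDuration int64) int64 {
	rem := ts % shardDuration
	if rem < 0 {
		rem += shardDuration // keep windows aligned for timestamps before 1970
	}
	return ts - rem
}

func main() {
	week := int64(7 * 24 * time.Hour) // the default shard duration from the text
	ts := time.Date(2019, 4, 25, 10, 30, 0, 0, time.UTC).UnixNano()

	start := shardStart(ts, week)
	fmt.Println("shard covers",
		time.Unix(0, start).UTC().Format(time.RFC3339), "to",
		time.Unix(0, start+week).UTC().Format(time.RFC3339))
}
```

Every point whose timestamp falls in the same window lands in the same shard, so dropping expired data can be done by removing whole shards.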

Note: In the closed-source clustering version of InfluxDB, users can use RPs to specify that data is further sharded by SeriesKey after it has been sharded by time.

A storage engine inside a time series database should meet the performance requirements of the following three scenarios:

1. Write time series data in large batches.

2. Scan data in a specified timestamp range directly according to a timeline (SeriesKey in InfluxDB).

3. Query all the matching time series data in a specified timestamp range indirectly by using measurements and some tags.

Based on the considerations in Section 1.2, the InfluxDB team introduced its own solution: the WAL + TSM file + TSI file scheme, which is described in the following sections.

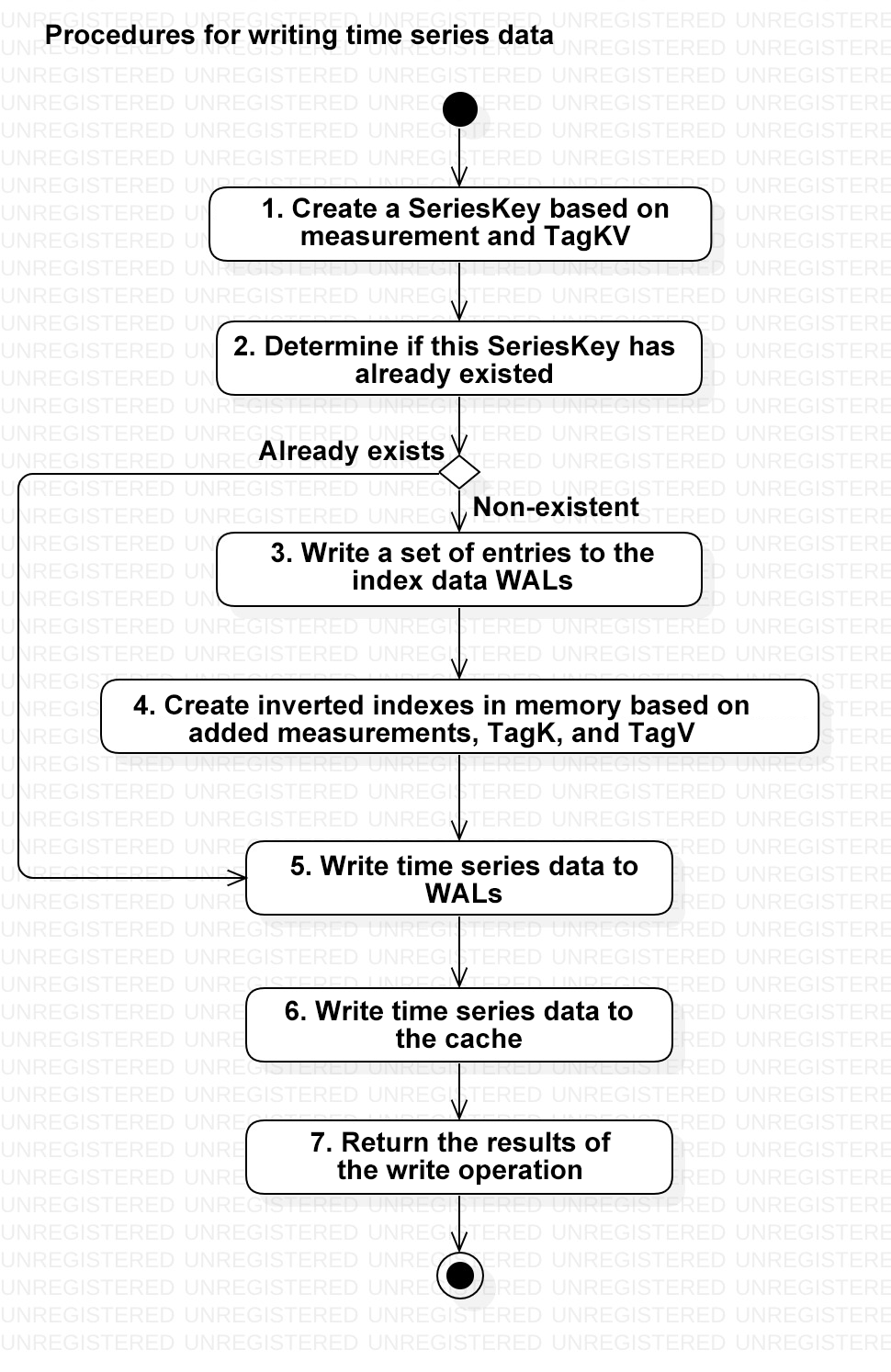

Like many other databases, InfluxDB first writes time series data to the WAL, then to an in-memory cache, and later flushes the cache to disk. This order ensures data integrity and availability. The following figure shows the main steps InfluxDB follows when writing time series data.

Figure 2

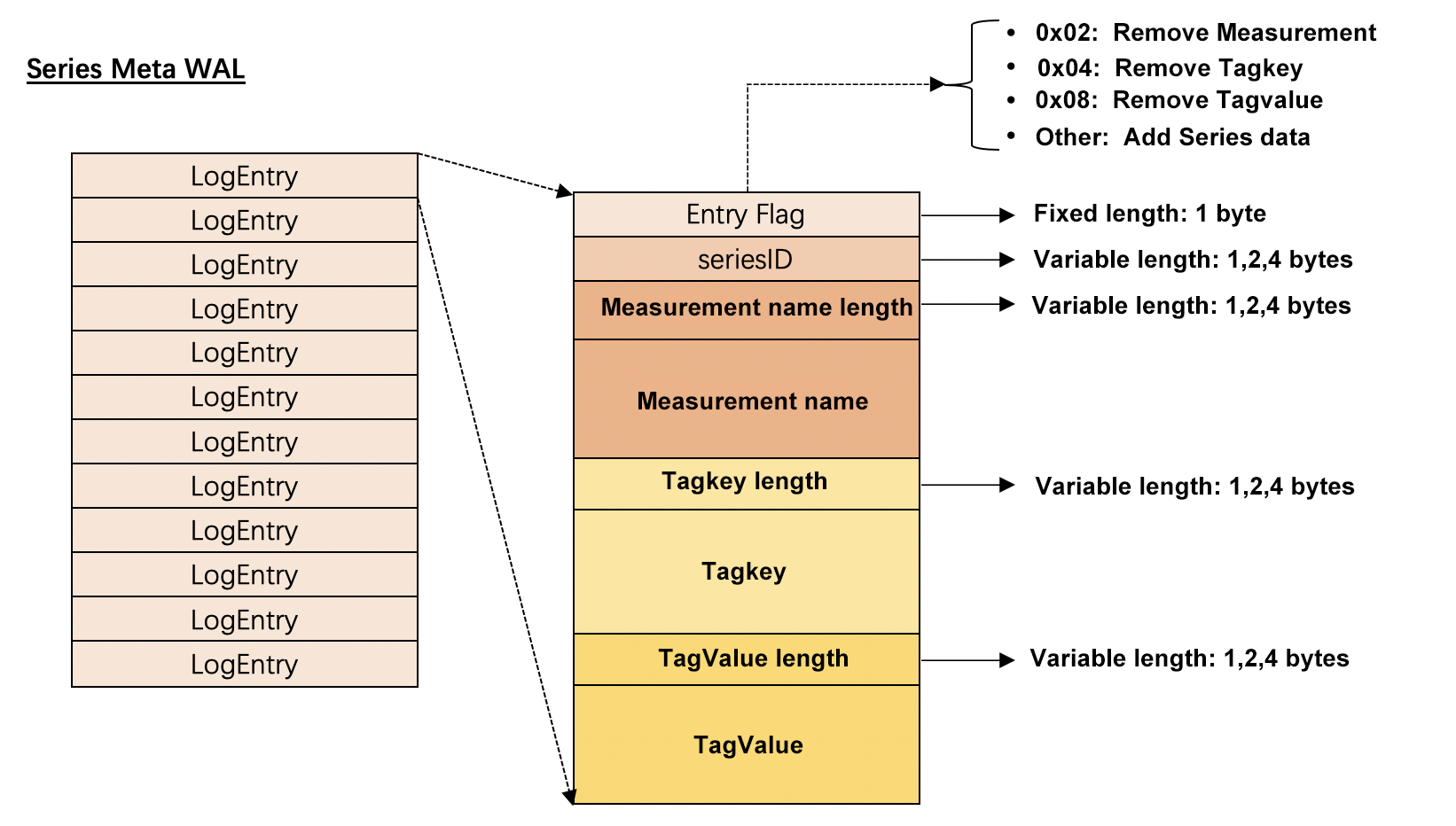
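The write order described above (WAL first, then cache, then flush) can be sketched in a few lines of Go. Everything here is a simplified stand-in: the `engine` type, the text-based WAL format, and the point-count flush threshold are assumptions chosen for illustration, and an in-memory buffer and slice take the place of real WAL and TSM files.

```go
package main

import (
	"bytes"
	"fmt"
)

// point is one value of one field on one timeline.
type point struct {
	key   string // SeriesKey + field name
	ts    int64
	value float64
}

// engine is a toy storage engine. The buffer stands in for the WAL file and
// the files slice stands in for flushed TSM files.
type engine struct {
	wal        bytes.Buffer
	cache      map[string][]point
	cached     int
	flushLimit int
	files      []map[string][]point
}

func newEngine(flushLimit int) *engine {
	return &engine{cache: make(map[string][]point), flushLimit: flushLimit}
}

// write appends the point to the WAL before touching the cache, so that the
// cache could be rebuilt from the WAL after a crash.
func (e *engine) write(p point) error {
	if _, err := fmt.Fprintf(&e.wal, "%s %d %g\n", p.key, p.ts, p.value); err != nil {
		return err
	}
	e.cache[p.key] = append(e.cache[p.key], p)
	e.cached++
	if e.cached >= e.flushLimit {
		e.flush()
	}
	return nil
}

// flush moves the cache contents to "disk" and discards the WAL data that the
// flushed snapshot now covers.
func (e *engine) flush() {
	e.files = append(e.files, e.cache)
	e.cache = make(map[string][]point)
	e.cached = 0
	e.wal.Reset()
}

func main() {
	e := newEngine(2)
	for i := int64(0); i < 3; i++ {
		if err := e.write(point{"cpu,host=a#usage", i, 0.5}); err != nil {
			panic(err)
		}
	}
	fmt.Println("flushed files:", len(e.files), "points still cached:", e.cached)
}
```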

In InfluxDB, SeriesKey data and time series data are separated and written into different WALs. The structure is shown as follows:

InfluxDB supports deleting measurements, TagKeys, and TagValues. New SeriesKeys are also added as more time series data is written. Each entry in the index data WAL therefore records which operation it represents. The structure of index data WALs is shown in the following figure.

Figure 3

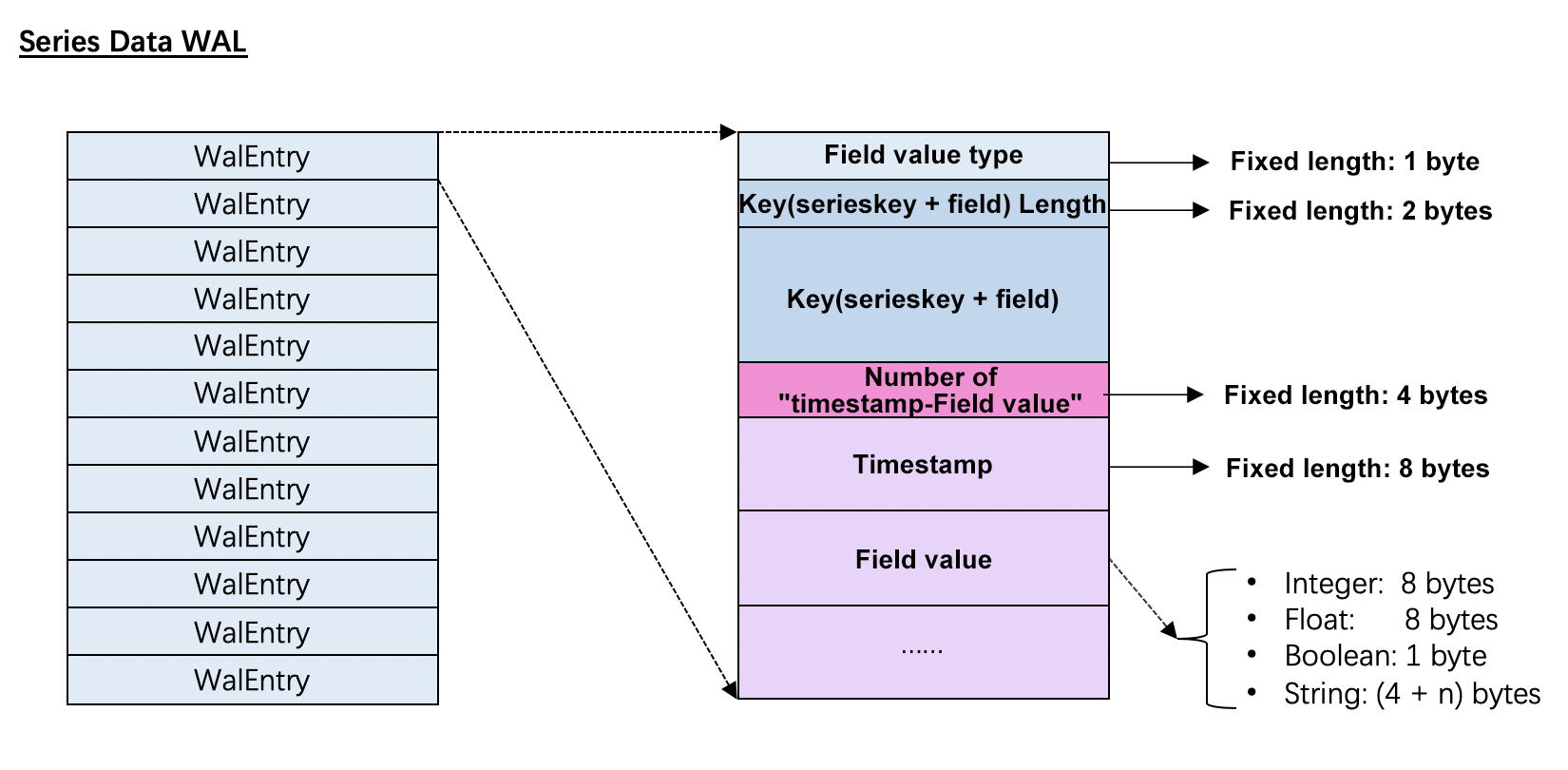
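The idea of an entry that carries its own operation type can be sketched as follows. The byte layout used here (one operation byte, a varint length, then the payload) and all names are assumptions made for illustration; the actual on-disk layout is the one shown in the figure.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// opType tags each index WAL entry with the operation it represents.
type opType byte

const (
	opAddSeries opType = iota + 1
	opDeleteMeasurement
	opDeleteTagKey
	opDeleteTagValue
)

// indexWALEntry is a hypothetical entry: an operation plus an opaque payload.
type indexWALEntry struct {
	op      opType
	payload []byte
}

// encode lays the entry out as: 1 byte op | uvarint payload length | payload.
func (e indexWALEntry) encode() []byte {
	head := 1 + binary.MaxVarintLen64
	buf := make([]byte, head, head+len(e.payload))
	buf[0] = byte(e.op)
	n := binary.PutUvarint(buf[1:], uint64(len(e.payload)))
	return append(buf[:1+n], e.payload...)
}

// decodeIndexWALEntry reads one entry from b and reports how many bytes it used.
func decodeIndexWALEntry(b []byte) (indexWALEntry, int, error) {
	if len(b) < 2 {
		return indexWALEntry{}, 0, errors.New("short entry")
	}
	size, n := binary.Uvarint(b[1:])
	if n <= 0 || uint64(len(b)-1-n) < size {
		return indexWALEntry{}, 0, errors.New("truncated entry")
	}
	end := 1 + n + int(size)
	return indexWALEntry{op: opType(b[0]), payload: b[1+n : end]}, end, nil
}

func main() {
	// Append two entries to a log, then replay them in order.
	var log []byte
	log = append(log, indexWALEntry{opAddSeries, []byte("cpu,host=a")}.encode()...)
	log = append(log, indexWALEntry{opDeleteTagKey, []byte("host")}.encode()...)

	for len(log) > 0 {
		e, n, err := decodeIndexWALEntry(log)
		if err != nil {
			panic(err)
		}
		fmt.Printf("op=%d payload=%s\n", e.op, e.payload)
		log = log[n:]
	}
}
```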

Because time series data in InfluxDB is only ever written, entries in the time series data WAL do not need to distinguish operation types; they simply record the written data.

Figure 4

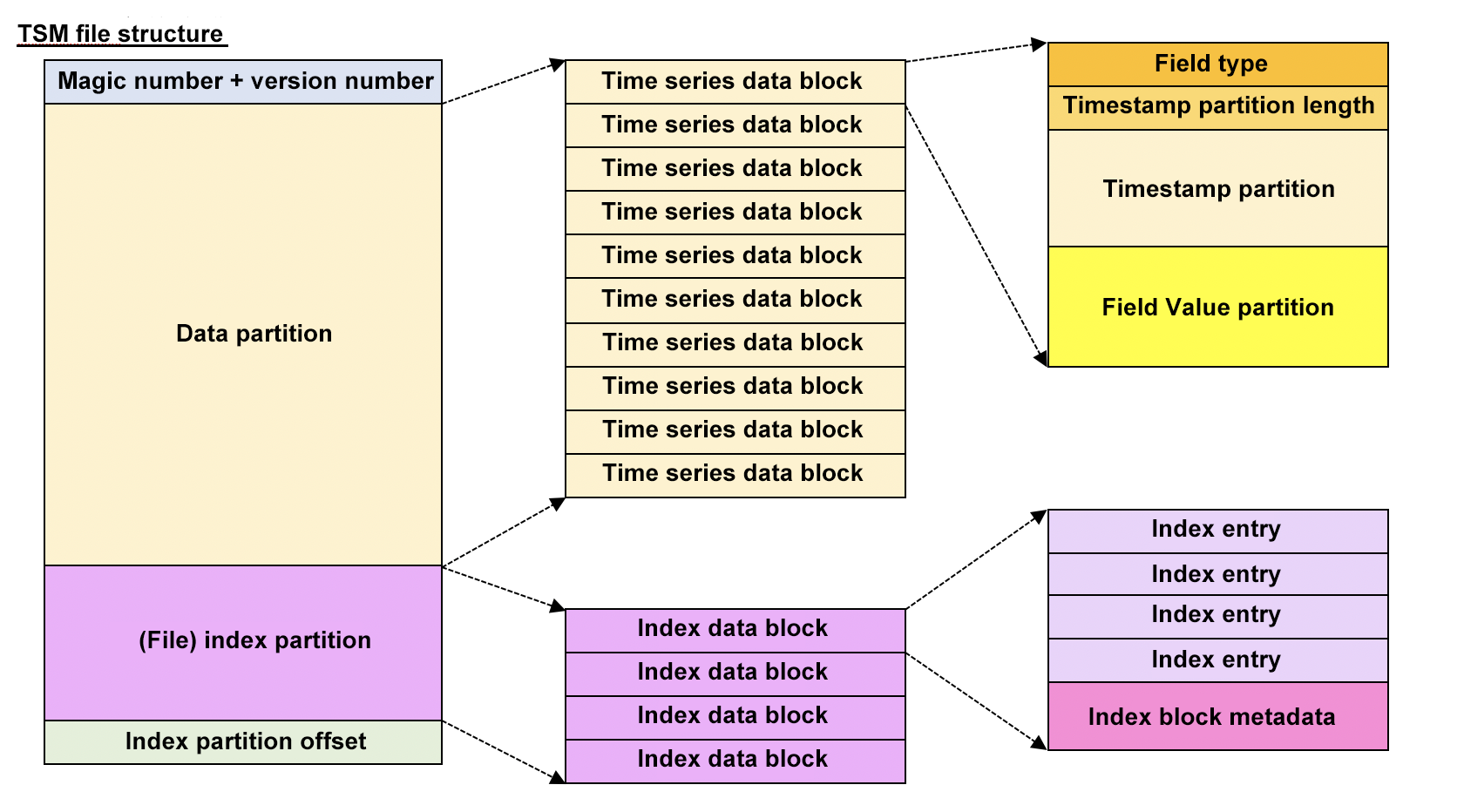

TSM files are the storage solution for time series data in InfluxDB. At the file system level, each TSM file corresponds to a shard.

The storage structure of TSM files is shown in the following figure.

Figure 5

In a TSM file, time series data (namely, timestamps and field values) is stored in the data partition, while SeriesKeys and field names are stored in the index partition. An in-file index built on SeriesKey + FieldKey, similar to a B+ tree, is used to quickly locate the data blocks where the time series data is stored.

Note: In the current version, the maximum length of a single TSM file is 2 GB. When the actual size exceeds this limit, a new TSM file will be created to save the data even if data is in the same shard. This article does not describe TSM file splitting for the same shard, which is more complicated.

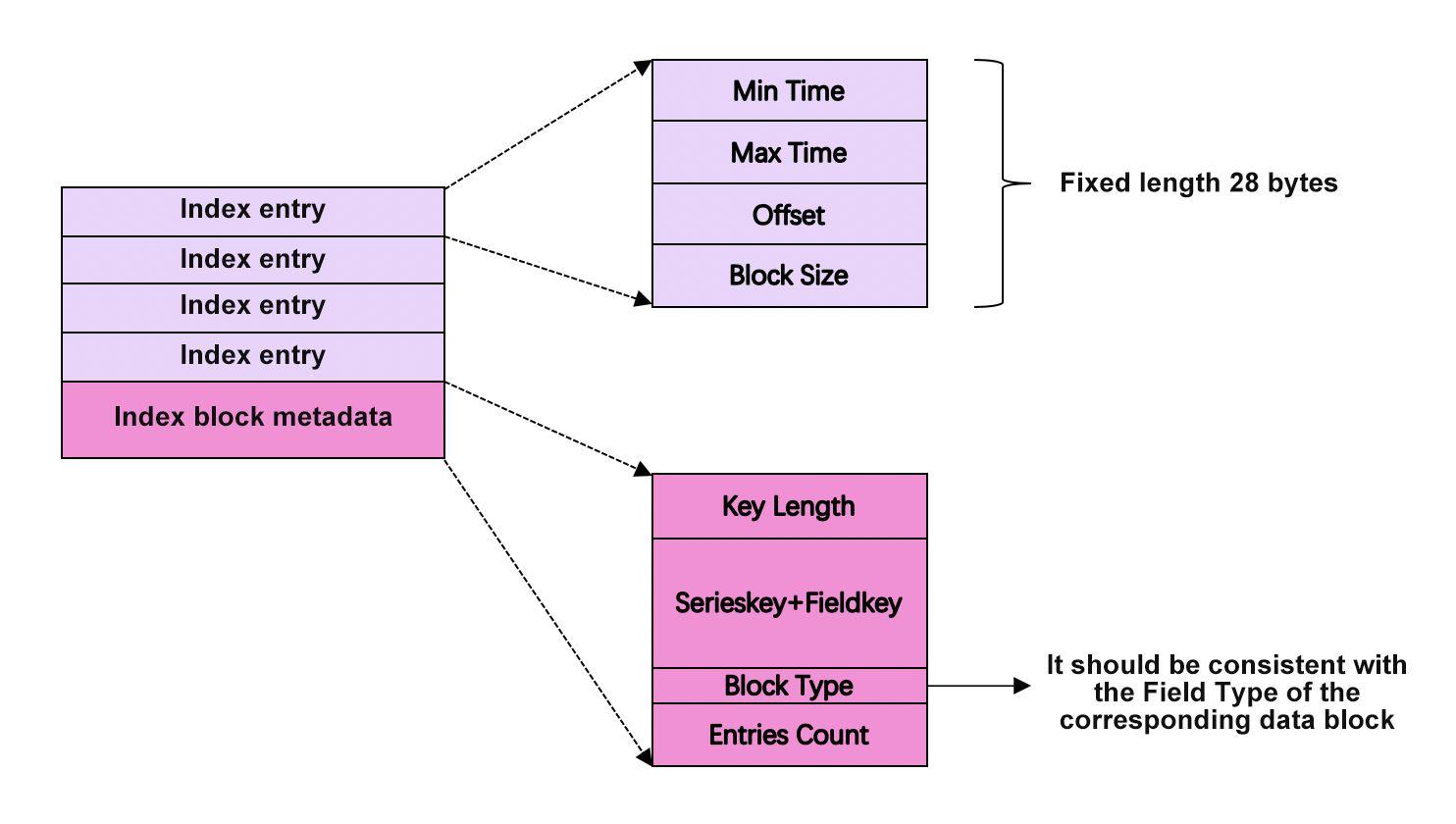

Structure of index blocks

The structure of an index block is as follows:

Figure 6

Here, an **index entry** is called directIndex in the InfluxDB source code. In a TSM file, index blocks are **sorted** by SeriesKey + FieldKey.

Knowing the structure of the index partition of a TSM file, we can easily understand how InfluxDB efficiently scans time series data in the TSM file:

1. The specified SeriesKey and Field Name are used to perform a binary search in the **index partition** to locate the **index data block** that holds the specified SeriesKey + FieldKey.

2. InfluxDB searches the **index data block** by using the specified timestamp range to locate **index entries** where the matching data is.

3. InfluxDB loads the corresponding **time series data blocks** of the matching **index entries** into the memory for further scans.

*Note: The three preceding steps are just a brief description of the query mechanism. Practical implementations may include many complicated scenarios, for example, the time range of a scan may span across index blocks.*
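The first two steps can be sketched in Go as a binary search over sorted index blocks, followed by a time-range filter over the entries of the matching block. The types below are simplified assumptions (including the `#` separator between SeriesKey and field name), and the sketch stops at returning the matching entries instead of loading data blocks.

```go
package main

import (
	"fmt"
	"sort"
)

// indexEntry points at one data block and records the time range it covers.
type indexEntry struct {
	minTime, maxTime int64
	offset           int64  // position of the data block in the file
	size             uint32 // length of the data block in bytes
}

// indexBlock holds all index entries of one SeriesKey + FieldKey.
type indexBlock struct {
	key     string
	entries []indexEntry // ordered by minTime
}

// tsmIndex is the index partition: index blocks sorted by key.
type tsmIndex []indexBlock

// lookup returns the index entries of key whose time range overlaps
// the closed interval [tmin, tmax].
func (idx tsmIndex) lookup(key string, tmin, tmax int64) []indexEntry {
	// Step 1: binary search for the index block of the key.
	i := sort.Search(len(idx), func(i int) bool { return idx[i].key >= key })
	if i == len(idx) || idx[i].key != key {
		return nil
	}
	// Step 2: keep only the entries that overlap the requested time range.
	var out []indexEntry
	for _, e := range idx[i].entries {
		if e.maxTime >= tmin && e.minTime <= tmax {
			out = append(out, e)
		}
	}
	return out
}

func main() {
	idx := tsmIndex{
		{key: "cpu,host=a#usage", entries: []indexEntry{{0, 99, 5, 40}, {100, 199, 45, 40}, {200, 299, 85, 40}}},
		{key: "cpu,host=b#usage", entries: []indexEntry{{0, 299, 125, 60}}},
	}
	for _, e := range idx.lookup("cpu,host=a#usage", 150, 250) {
		fmt.Printf("read %d bytes at offset %d\n", e.size, e.offset)
	}
}
```

Step 3 would then read `size` bytes at `offset` for each returned entry and decode the block.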

Figure 2 shows the structure of time series data: all the timestamp and field value pairs of the same SeriesKey + FieldKey are split into two partitions, Timestamps and Values. This layout allows timestamps and field values to be compressed with different algorithms, which reduces the size of time series data blocks.
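To show why storing timestamps separately helps compression, the Go sketch below applies plain delta encoding to a timestamp column. Delta encoding is used here only as a generic illustration and is not presented as InfluxDB's exact encoder; `deltaEncode` and `deltaDecode` are hypothetical names.

```go
package main

import "fmt"

// deltaEncode keeps the first timestamp as-is and replaces every following
// timestamp with its difference from the previous one.
func deltaEncode(ts []int64) []int64 {
	out := make([]int64, len(ts))
	var prev int64
	for i, t := range ts {
		out[i] = t - prev
		prev = t
	}
	return out
}

// deltaDecode reverses deltaEncode by accumulating the differences.
func deltaDecode(deltas []int64) []int64 {
	out := make([]int64, len(deltas))
	var prev int64
	for i, d := range deltas {
		prev += d
		out[i] = prev
	}
	return out
}

func main() {
	// Samples collected every 10 seconds.
	ts := []int64{1556188200, 1556188210, 1556188220, 1556188230}
	enc := deltaEncode(ts)
	fmt.Println("encoded:", enc)
	fmt.Println("decoded:", deltaDecode(enc))
}
```

For regularly sampled data, the encoded column is one large value followed by small, repeated deltas, which a later stage such as run-length encoding or bit packing can store compactly. Field values have different statistics, which is why a separate algorithm per column pays off.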

The compression algorithms used in InfluxDB are as follows:

When data is queried, after the time series data blocks are located by using the index in a TSM file, the blocks are loaded into memory, and the Timestamps and Field Values are decompressed for subsequent processing.

TSM files meet the requirements of Scenario 1 and Scenario 2 described at the beginning of Part 3 of this article. However, how does InfluxDB ensure query performance when a user specifies more complex query criteria instead of SeriesKeys? Generally, an inverted index is used to solve this problem.

An inverted index in InfluxDB relies on the following two data structures:

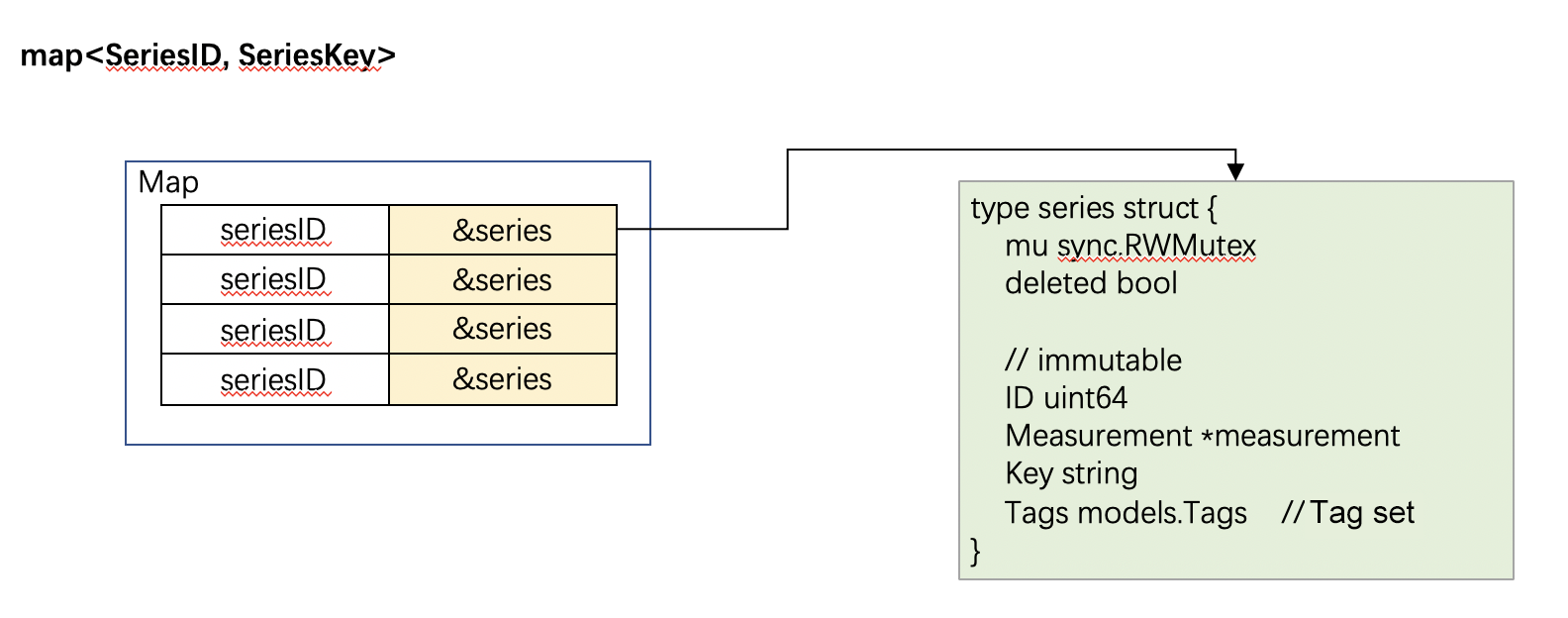

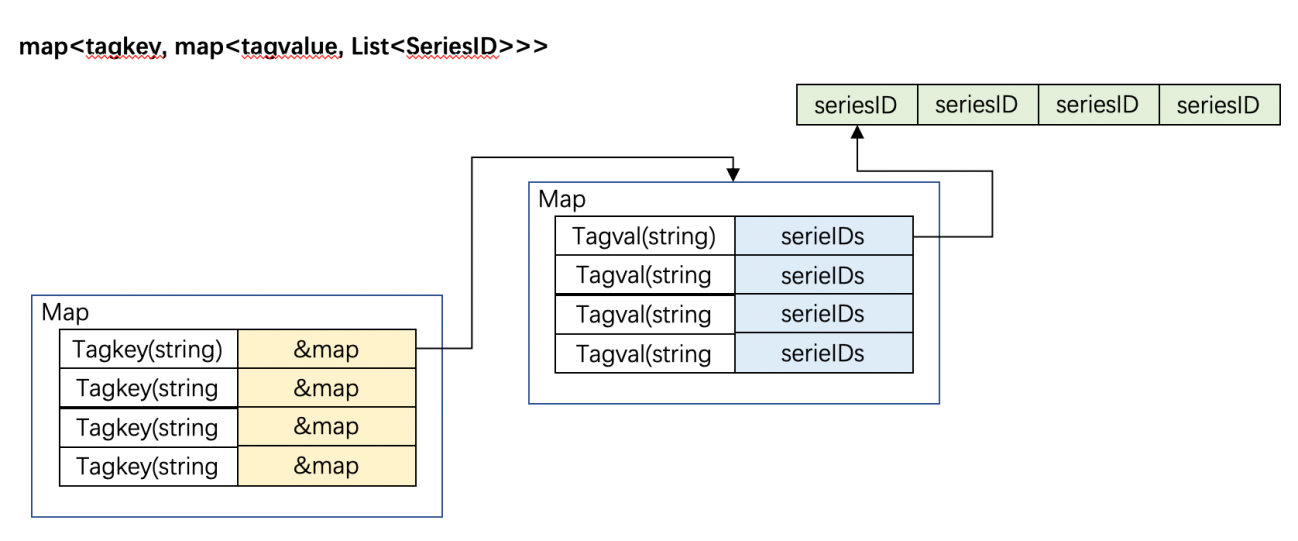

map<SeriesID, SeriesKey>

map<tagkey, map<tagvalue, List<SeriesID>>>

They are represented as follows in memory:

Figure 7

Figure 8
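The two maps can be expressed directly in Go. The sketch below builds them in memory and answers a query of the form "all series where tag A = x and tag B = y" by counting how many filters each SeriesID satisfies. The type and method names are hypothetical, and the intersection strategy is a simple one chosen for readability.

```go
package main

import (
	"fmt"
	"sort"
)

// SeriesID identifies one timeline.
type SeriesID uint64

// invertedIndex mirrors the two maps described in the text.
type invertedIndex struct {
	series map[SeriesID]string                // map<SeriesID, SeriesKey>
	tags   map[string]map[string][]SeriesID // map<tagkey, map<tagvalue, List<SeriesID>>>
}

func newInvertedIndex() *invertedIndex {
	return &invertedIndex{
		series: make(map[SeriesID]string),
		tags:   make(map[string]map[string][]SeriesID),
	}
}

// add registers a series and lists it under each of its tag key/value pairs.
func (ix *invertedIndex) add(id SeriesID, key string, tags map[string]string) {
	ix.series[id] = key
	for k, v := range tags {
		if ix.tags[k] == nil {
			ix.tags[k] = make(map[string][]SeriesID)
		}
		ix.tags[k][v] = append(ix.tags[k][v], id)
	}
}

// lookup returns the sorted SeriesKeys of all series that match every filter.
func (ix *invertedIndex) lookup(filters map[string]string) []string {
	hits := make(map[SeriesID]int) // number of filters each series satisfies
	for k, v := range filters {
		for _, id := range ix.tags[k][v] {
			hits[id]++
		}
	}
	var keys []string
	for id, n := range hits {
		if n == len(filters) {
			keys = append(keys, ix.series[id])
		}
	}
	sort.Strings(keys)
	return keys
}

func main() {
	ix := newInvertedIndex()
	ix.add(1, "cpu,host=a,region=us", map[string]string{"host": "a", "region": "us"})
	ix.add(2, "cpu,host=b,region=us", map[string]string{"host": "b", "region": "us"})
	ix.add(3, "cpu,host=a,region=eu", map[string]string{"host": "a", "region": "eu"})

	fmt.Println(ix.lookup(map[string]string{"region": "us"}))
	fmt.Println(ix.lookup(map[string]string{"host": "a", "region": "eu"}))
}
```

The resulting SeriesKeys can then be used to scan TSM files as in Scenario 2. Because both maps grow with the number of series, their memory footprint is the concern that motivates moving the index to disk.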

However, in an actual production environment, the number of SeriesKeys can become very large, so an in-memory inverted index may use too much memory. That's why TSI files were introduced in InfluxDB.

The overall storage mechanism of TSI files is similar to that of TSM files. Like a TSM file, a TSI file is generated for each shard. The storage structure is not described here.

The preceding content is a brief analysis of the storage mechanism in InfluxDB. Since I have only examined standalone InfluxDB, I do not know whether the clustered version of InfluxDB differs in its storage design. However, we can still learn a lot from the storage mechanism of standalone InfluxDB.
