Multiline logs provide valuable information that helps developers resolve application problems. Stack traces are a typical example. A stack trace lists the method calls that the application was processing when an exception was thrown, including the exception itself and the line where it occurred. The following sample shows a Java stack trace:

```
Exception in thread "main" java.lang.NullPointerException
    at com.example.myproject.Book.getTitle(Book.java:16)
    at com.example.myproject.Author.getBookTitles(Author.java:25)
    at com.example.myproject.Bootstrap.main(Bootstrap.java:14)
```

When you use a logging tool such as the Elastic Stack, stack traces can be difficult to identify and search without proper configuration. If you ship application logs with a lightweight, open-source log shipper such as Filebeat, each line of a stack trace is sent as a separate event by default, so Kibana displays each line as its own document.

As a result, Kibana treats the preceding stack trace as four separate documents. Errors and exceptions are separated from their context, which makes them hard to search for and understand. You can avoid this problem by adding multiline options to the filebeat.yml file when you use Filebeat to collect application logs.

In the filebeat.inputs section of the filebeat.yml file, you can add multiline options to an input so that multiline logs, such as stack traces, are sent as a single document. For example, adding the following options to the input ensures that the preceding Java stack trace is sent as one document:

```yaml
multiline.pattern: '^[[:space:]]'
multiline.negate: false
multiline.match: after
```

Let's refer to the descriptions in Elastic's official documentation.

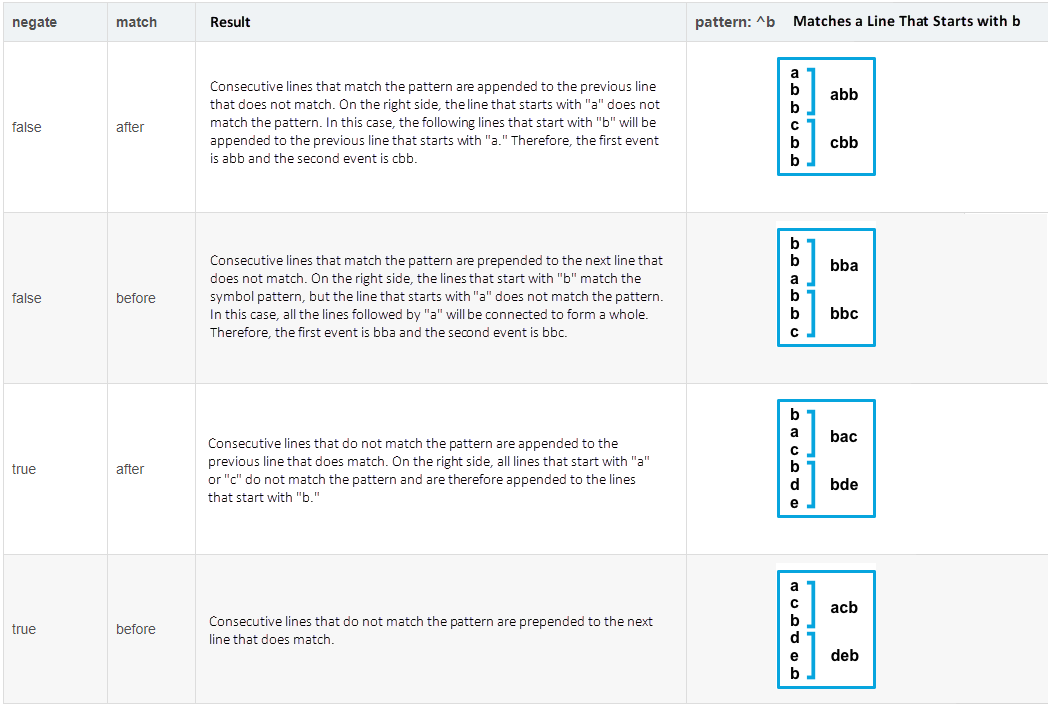

multiline.pattern: Specifies the regular expression (RegExp) pattern to match. Note that the RegExp patterns supported by Filebeat differ somewhat from those supported by Logstash. For the supported patterns, see RegExp support in the Filebeat documentation. Depending on how you configure the other multiline options, lines that match the specified pattern are considered either continuations of a previous line or the start of a new multiline event. You can set the negate option to negate the pattern.

multiline.negate: Defines whether the pattern is negated. The default is false.

multiline.match: Specifies how Filebeat combines matching lines into an event. Valid values are after and before. The behavior of these settings depends on the value of negate.
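The interaction between pattern, negate, and match is easiest to see in code. The following Python sketch is a simplified model written for illustration, not Filebeat's implementation (Filebeat is written in Go), and the helper name group_multiline is hypothetical. It does not model multiline.timeout, multiline.max_lines, or multiline.flush_pattern. Because Python's re module does not support POSIX classes such as [[:space:]], the sketch uses the equivalent \s.

```python
import re
from typing import List


def group_multiline(lines: List[str], pattern: str, negate: bool, match: str) -> List[str]:
    """Group raw log lines into events, loosely modeling Filebeat's
    multiline.pattern / multiline.negate / multiline.match options.

    Hypothetical helper for illustration only; not Filebeat's code.
    """
    if match not in ("after", "before"):
        raise ValueError("match must be 'after' or 'before'")
    regex = re.compile(pattern)
    events: List[List[str]] = []
    pending: List[str] = []  # lines waiting for the next event (match: before)

    for line in lines:
        # A line is a "continuation" when the pattern result, inverted if
        # negate is true, says it belongs with a neighboring line.
        is_continuation = bool(regex.search(line)) != negate
        if match == "after":
            if is_continuation and events:
                events[-1].append(line)  # append to the previous event
            else:
                events.append([line])    # this line starts a new event
        else:  # match == "before"
            pending.append(line)
            if not is_continuation:
                events.append(pending)   # this line completes the event
                pending = []

    if pending:
        events.append(pending)           # flush whatever is left at end of input
    return ["\n".join(event) for event in events]


java_trace = [
    'Exception in thread "main" java.lang.NullPointerException',
    "    at com.example.myproject.Book.getTitle(Book.java:16)",
    "    at com.example.myproject.Author.getBookTitles(Author.java:25)",
    "    at com.example.myproject.Bootstrap.main(Bootstrap.java:14)",
]

# Equivalent of multiline.pattern: '^[[:space:]]', negate: false, match: after
events = group_multiline(java_trace, r"^\s", negate=False, match="after")
print(len(events))  # 1
```

With match set to before, continuation lines are held back and prepended to the next line that is not a continuation, instead of being appended to the previous one.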

Let's use the preceding sample log as an example and create a file named multiline.log.

```
Exception in thread "main" java.lang.NullPointerException
    at com.example.myproject.Book.getTitle(Book.java:16)
    at com.example.myproject.Author.getBookTitles(Author.java:25)
    at com.example.myproject.Bootstrap.main(Bootstrap.java:14)
```

Create a Filebeat configuration file (filebeat.yml):

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /Users/liuxg/data/multiline/multiline.log
  multiline.pattern: '^[[:space:]]'
  multiline.negate: false
  multiline.match: after

output.elasticsearch:
  hosts: ["localhost:9200"]
  index: "multiline"

setup.ilm.enabled: false
setup.template.name: multiline
setup.template.pattern: multiline
```

Start Filebeat with this configuration. Then run the following command in Kibana Dev Tools to view the imported document:

```
GET multiline/_search
```

Output:

```
{
  "took" : 0,
  "timed_out" : false,
  "_shards" : {
    "total" : 1,
    "successful" : 1,
    "skipped" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : {
      "value" : 1,
      "relation" : "eq"
    },
    "max_score" : 1.0,
    "hits" : [
      {
        "_index" : "multiline",
        "_type" : "_doc",
        "_id" : "9FI7OnIBmMpX8h3C4Cx8",
        "_score" : 1.0,
        "_source" : {
          "@timestamp" : "2020-05-22T02:34:55.533Z",
          "ecs" : {
            "version" : "1.5.0"
          },
          "host" : {
            "name" : "liuxg"
          },
          "agent" : {
            "ephemeral_id" : "53a2f64e-c587-4f82-90bc-870274227c54",
            "hostname" : "liuxg",
            "id" : "be15712c-94be-41f4-9974-0b049dc95750",
            "version" : "7.7.0",
            "type" : "filebeat"
          },
          "log" : {
            "offset" : 0,
            "file" : {
              "path" : "/Users/liuxg/data/multiline/multiline.log"
            },
            "flags" : [
              "multiline"
            ]
          },
          "message" : """Exception in thread "main" java.lang.NullPointerException
    at com.example.myproject.Book.getTitle(Book.java:16)
    at com.example.myproject.Author.getBookTitles(Author.java:25)
    at com.example.myproject.Bootstrap.main(Bootstrap.java:14)""",
          "input" : {
            "type" : "log"
          }
        }
      }
    ]
  }
}
```

In the preceding output, the message field contains all four lines of the stack trace in a single document, and log.flags includes "multiline".

Another example is from Elastic's official documentation.

```yaml
multiline.pattern: '^\['
multiline.negate: true
multiline.match: after
```

The preceding configuration matches all lines that start with an opening bracket ([) and appends the lines that do not start with a bracket to the previous line that does. This way, Filebeat consolidates logs similar to the following sample log into a single event:

```
[beat-logstash-some-name-832-2015.11.28] IndexNotFoundException[no such index]
    at org.elasticsearch.cluster.metadata.IndexNameExpressionResolver$WildcardExpressionResolver.resolve(IndexNameExpressionResolver.java:566)
    at org.elasticsearch.cluster.metadata.IndexNameExpressionResolver.concreteIndices(IndexNameExpressionResolver.java:133)
    at org.elasticsearch.cluster.metadata.IndexNameExpressionResolver.concreteIndices(IndexNameExpressionResolver.java:77)
    at org.elasticsearch.action.admin.indices.delete.TransportDeleteIndexAction.checkBlock(TransportDeleteIndexAction.java:75)
```

Now assume that the log contains the following Java stack trace with a nested cause:

```
Exception in thread "main" java.lang.IllegalStateException: A book has a null property
    at com.example.myproject.Author.getBookIds(Author.java:38)
    at com.example.myproject.Bootstrap.main(Bootstrap.java:14)
Caused by: java.lang.NullPointerException
    at com.example.myproject.Book.getId(Book.java:22)
    at com.example.myproject.Author.getBookIds(Author.java:35)
    ... 1 more
```

You can specify the following configuration options:

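```yaml
multiline.pattern: '^[[:space:]]+(at|\.{3})[[:space:]]+\b|^Caused by:'
multiline.negate: false
multiline.match: after
```

In the preceding example, the pattern matches the following lines:

- Lines that begin with whitespace, followed by the word at or by an ellipsis (...), followed by more whitespace
- Lines that begin with Caused by:

Because negate is false and match is after, each matching line is appended to the previous line, so the entire stack trace, including the Caused by section, is sent as one event.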

For more information, see the official documentation at https://www.elastic.co/guide/en/beats/filebeat/current/multiline-examples.html
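To check which lines of the nested-cause sample the pattern actually matches, you can test it line by line. The following Python sketch does this. It rewrites [[:space:]] as \s because Python's re module lacks POSIX classes, which we assume is equivalent for these ASCII lines; it is an illustration, not how Filebeat evaluates the pattern. Lines reported as continuation are the ones appended to the preceding line under negate: false and match: after.

```python
import re

# Filebeat pattern: '^[[:space:]]+(at|\.{3})[[:space:]]+\b|^Caused by:'
# Python's re has no POSIX classes, so [[:space:]] is written as \s here.
CONTINUATION = re.compile(r"^\s+(at|\.{3})\s+\b|^Caused by:")

trace = [
    'Exception in thread "main" java.lang.IllegalStateException: A book has a null property',
    "    at com.example.myproject.Author.getBookIds(Author.java:38)",
    "    at com.example.myproject.Bootstrap.main(Bootstrap.java:14)",
    "Caused by: java.lang.NullPointerException",
    "    at com.example.myproject.Book.getId(Book.java:22)",
    "    at com.example.myproject.Author.getBookIds(Author.java:35)",
    "    ... 1 more",
]

for line in trace:
    # A match means "append this line to the previous event" (negate: false, match: after).
    verdict = "continuation" if CONTINUATION.search(line) else "new event"
    print(f"{verdict:>12}  {line}")
```

Only the first line starts a new event; the other six lines, including Caused by and ... 1 more, are continuations.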

In addition to the preceding options, Filebeat provides options that control when a multiline event is flushed from memory. multiline.max_lines specifies the maximum number of lines that can be combined into one event; any additional lines are discarded, and the default is 500. multiline.timeout specifies the timeout period, which defaults to 5 seconds. After the specified timeout, Filebeat sends the multiline event even if no new pattern is found to start a new event.
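The following Python sketch illustrates how max_lines and timeout bound a multiline event. It is a hypothetical model, not Filebeat's implementation: each input line carries a timestamp in seconds, lines beyond max_lines are dropped from the event, and we assume the timeout is measured as the gap since the previous line was read. Grouping follows the negate: false, match: after rule with the '^[[:space:]]' pattern written as \s.

```python
import re
from typing import List, Optional, Tuple

# Defaults mirroring the documented values (max_lines: 500, timeout: 5s).
MAX_LINES = 500
TIMEOUT_SECONDS = 5.0


def group_with_limits(
    lines: List[Tuple[float, str]],
    pattern: str = r"^\s",
    max_lines: int = MAX_LINES,
    timeout: float = TIMEOUT_SECONDS,
) -> List[str]:
    """Group (timestamp, line) pairs with negate: false / match: after semantics,
    capping each event at max_lines and flushing after `timeout` seconds without
    a new line. Hypothetical helper; simplified model for illustration only."""
    regex = re.compile(pattern)
    events: List[str] = []
    current: List[str] = []
    last_ts: Optional[float] = None

    def flush() -> None:
        nonlocal current
        if current:
            events.append("\n".join(current))
            current = []

    for ts, line in lines:
        # Assumption: a gap longer than `timeout` closes the pending event.
        if last_ts is not None and ts - last_ts > timeout:
            flush()
        last_ts = ts
        if regex.search(line) and current:
            if len(current) < max_lines:
                current.append(line)  # continuation within the limit
            # otherwise the line is discarded, as with multiline.max_lines
        else:
            flush()                   # a non-continuation line starts a new event
            current = [line]
    flush()
    return events


sample = [(0.0, "Exception A"), (0.1, "    at x"), (0.2, "    at y")]
print(group_with_limits(sample, max_lines=2))
```

Capping with max_lines protects memory when a pattern accidentally matches a long run of lines, while the timeout makes sure the last event in a quiet log file is eventually sent.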

Let's look at a use case of multiline.flush_pattern. This option is useful for multiline application logs in which each event has explicit start and end markers, as in the following example:

```
[2015-08-24 11:49:14,389] Start new event
[2015-08-24 11:49:14,395] Content of processing something
[2015-08-24 11:49:14,399] End event
```

If you need to display these lines as a single document in Kibana, you can use the following multiline configuration options in the filebeat.yml file:

```yaml
multiline.pattern: 'Start new event'
multiline.negate: true
multiline.match: after
multiline.flush_pattern: 'End event'
```

With this configuration, a line that matches the "Start new event" pattern begins a new event. Because negate is true and match is after, the subsequent lines that do not match the pattern are appended to that line. When Filebeat reads a line that matches "End event", the flush_pattern option ends the multiline event and flushes it from memory.
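Here is a minimal Python sketch of the flush_pattern behavior just described, combined with negate: true and match: after. It is a simplified model for illustration, not Filebeat's code, and the helper name group_with_flush is hypothetical. The sample log is simply repeated to simulate two consecutive events. The point it shows is that a line matching flush_pattern closes the current event immediately, instead of waiting for the next "Start new event" line.

```python
import re
from typing import List


def group_with_flush(lines: List[str], start: str, flush_pattern: str) -> List[str]:
    """Model of multiline.pattern=start with negate: true and match: after,
    plus multiline.flush_pattern. Hypothetical helper, illustration only."""
    start_re = re.compile(start)
    flush_re = re.compile(flush_pattern)
    events: List[str] = []
    current: List[str] = []

    for line in lines:
        if start_re.search(line) or not current:
            # A line matching the start pattern (or the first line seen) opens a new event.
            if current:
                events.append("\n".join(current))
            current = [line]
        else:
            # negate: true + match: after: non-matching lines are appended.
            current.append(line)
        if flush_re.search(line):
            # flush_pattern: emit the event now, including the matching line.
            events.append("\n".join(current))
            current = []

    if current:
        events.append("\n".join(current))
    return events


sample = [
    "[2015-08-24 11:49:14,389] Start new event",
    "[2015-08-24 11:49:14,395] Content of processing something",
    "[2015-08-24 11:49:14,399] End event",
]
events = group_with_flush(sample * 2, r"Start new event", r"End event")
print(len(events))  # 2
```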

Collecting application logs in a single location is an important first step toward resolving application problems. Making sure that multiline logs such as stack traces are collected and displayed as complete events in the tool helps teams reduce the average time required to resolve problems.


Declaration: This article is adapted from "Elastic Helper in the China Community" with the authorization of the author Liu Xiaoguo. We reserve the right to investigate unauthorized use. Source: https://elasticstack.blog.csdn.net/
