In the past, data enrichment was available only in Logstash. Since the enrich processor was introduced in Elasticsearch 7.5.0, data can also be enriched directly in Elasticsearch, without configuring a separate service or system.

The master data used for enrichment is usually maintained in CSV files. In this article, we explain step by step how to enrich data with the contents of a CSV file by using the enrich processor, which runs on an ingest node.

First, you can import the following sample master data in CSV format by using Kibana on Alibaba Cloud Elasticsearch or user-created Elasticsearch, Logstash, and Kibana (ELK) on Elastic Compute Service (ECS). Then, enrich the target document with the sample master data while ingesting the document into Elasticsearch. In this example, we store the master data in a file named test.csv. The data indicates information about devices in an organization's inventory.

"Device ID","Device Location","Device Owner","Device Type"

"device1","London","Engineering","Computer"

"device2","Toronto","Consulting","Mouse"

"device3","Winnipeg","Sales","Computer"

"device4","Barcelona","Engineering","Phone"

"device5","Toronto","Consulting","Computer"

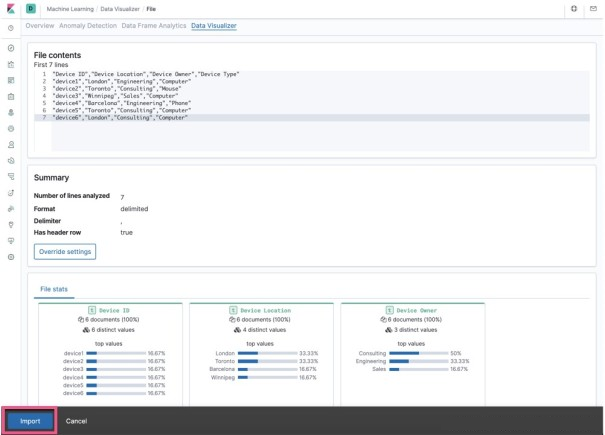

"device6","London","Consulting","Computer"Note that CSV data must not contain any spaces because the current version of Data Visualizer only accepts precisely formatted data. This issue is recorded in Github.

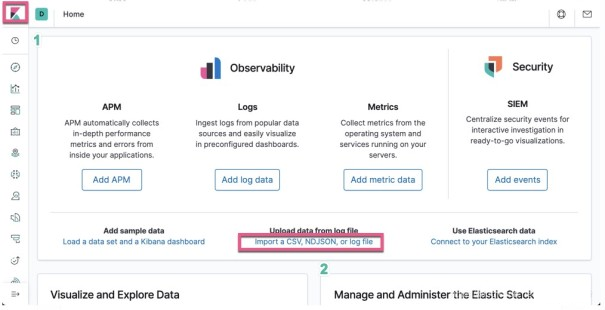
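The Data Visualizer is strict about whitespace, so it can help to check the file before uploading it. The following Python sketch is a hypothetical pre-upload check, not a Kibana feature. It parses the CSV with Python's standard csv module and reports every field that has leading or trailing spaces, such as a space typed after a comma. A space in the middle of a value, as in "Device ID", is not reported.

```python
import csv
import io


def find_extra_spaces(csv_text):
    """Return (line_number, field) pairs for fields with leading/trailing spaces.

    Hypothetical pre-upload check: a value such as "Device ID" is fine, but a
    space typed after a comma makes the csv module keep that space (and the
    following quote characters) as part of the field.
    """
    problems = []
    reader = csv.reader(io.StringIO(csv_text))  # default dialect: comma, double quotes
    for row in reader:
        for field in row:
            if field != field.strip():
                # line_num is the number of source lines read so far
                problems.append((reader.line_num, field))
    return problems


sample = (
    '"Device ID","Device Location","Device Owner","Device Type"\n'
    '"device1","London","Engineering","Computer"\n'
    '"device2", "Toronto","Consulting","Mouse"\n'  # stray space after a comma
)
print(find_extra_spaces(sample))  # [(3, ' "Toronto"')]
```

An empty list means that no field in the file has stray leading or trailing spaces.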

You can use Kibana to import the data into Elasticsearch. Open Kibana and complete the following steps.

Click Import a CSV, NDJSON, or log file.

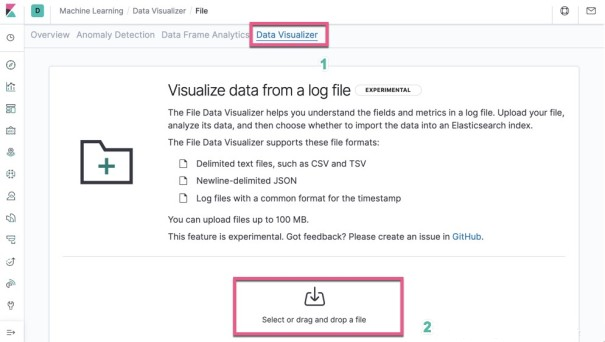

Click Select or drag and drop a file, and then select the test.csv file that we just created.

Click Import.

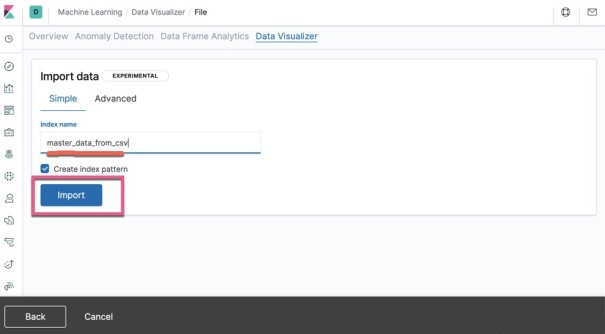

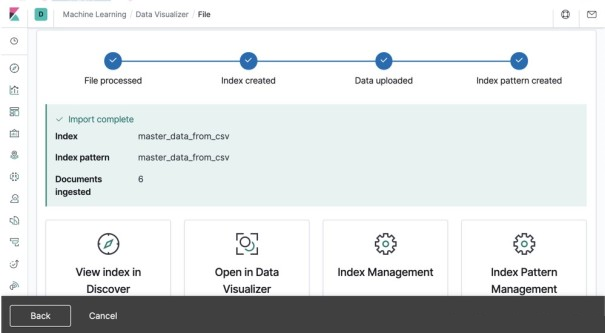

In this example, we name the imported index master_data_from_csv. Click Import.

At this point, the master_data_from_csv index has been created. To view the imported data, select any of the four options at the bottom of the page.
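If you would rather load the master data without the UI, the _bulk API is one alternative. The following Python sketch is a hypothetical helper that assumes the same test.csv contents. It turns each CSV row into a _bulk action line plus a source line. The field names in each source line are the CSV headers, such as Device ID, which the enrich policy refers to later. Unlike the Data Visualizer, this sketch does not create explicit mappings. Elasticsearch therefore maps the fields dynamically unless you create the index with a mapping first.

```python
import csv
import io
import json


def csv_to_bulk(csv_text, index_name):
    """Convert CSV text into an NDJSON request body for the _bulk API."""
    lines = []
    # DictReader uses the header row as the field names of every document.
    for row in csv.DictReader(io.StringIO(csv_text)):
        lines.append(json.dumps({"index": {"_index": index_name}}))  # action line
        lines.append(json.dumps(row))                                # document source
    # The _bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


csv_text = (
    '"Device ID","Device Location","Device Owner","Device Type"\n'
    '"device1","London","Engineering","Computer"\n'
    '"device2","Toronto","Consulting","Mouse"\n'
)
print(csv_to_bulk(csv_text, "master_data_from_csv"), end="")
```

You could then send the printed body with `POST /_bulk` and the `Content-Type: application/x-ndjson` header.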

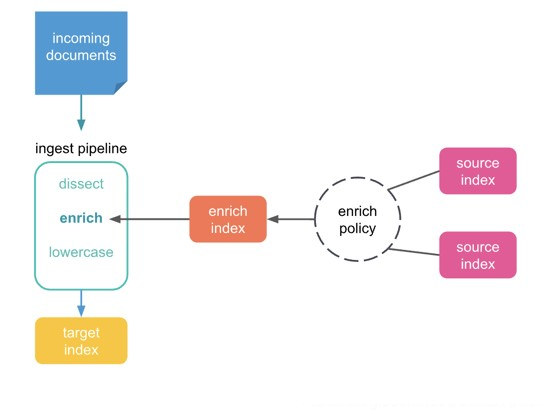

In this section, we will show you how to use the enrich processor to merge master data into a document from an input data stream. For information about the enrich processor, see my previous article "Elasticsearch: Enrich Processor - New Feature in Elasticsearch 7.5."
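Before creating the policy, it may help to picture what a match enrich policy produces when it is executed. The following Python sketch is a simplified, hypothetical in-memory model, not Elasticsearch's implementation. In Elasticsearch, executing the policy builds a read-only enrich index, and a plain dict stands in for that index here. The sketch groups the master documents by the match field and keeps only the match field plus the enrich fields. This is consistent with the enriched output later in this article, where Device ID appears alongside the three enrich fields.

```python
# Simplified, hypothetical model of executing a "match" enrich policy.
# Elasticsearch builds a read-only enrich index; a dict stands in for it here.
def execute_match_policy(source_docs, match_field, enrich_fields):
    """Group trimmed copies of the source docs by their match-field value."""
    lookup = {}
    for doc in source_docs:
        if match_field not in doc:
            continue  # this sketch simply skips docs that lack the match field
        # Keep the match field plus the enrich fields listed in the policy.
        entry = {match_field: doc[match_field]}
        entry.update({f: doc[f] for f in enrich_fields if f in doc})
        lookup.setdefault(doc[match_field], []).append(entry)
    return lookup


master_data = [
    {"Device ID": "device1", "Device Location": "London",
     "Device Owner": "Engineering", "Device Type": "Computer"},
    {"Device ID": "device2", "Device Location": "Toronto",
     "Device Owner": "Consulting", "Device Type": "Mouse"},
]
lookup = execute_match_policy(
    master_data,
    match_field="Device ID",
    enrich_fields=["Device Location", "Device Owner", "Device Type"],
)
print(lookup["device2"][0]["Device Type"])  # Mouse
```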

First, create an enrich policy that defines the field used to match the master data with the document from the input data stream. The following code is a sample policy applicable to our data:

```
PUT /_enrich/policy/enrich-devices-policy
{
  "match": {
    "indices": "master_data_from_csv",
    "match_field": "Device ID",
    "enrich_fields": [
      "Device Location",
      "Device Owner",
      "Device Type"
    ]
  }
}
```

Run the preceding request to create the policy. Then, call the execute enrich policy API to create an enrich index for the policy:

```
PUT /_enrich/policy/enrich-devices-policy/_execute
```

Next, create an ingest pipeline that uses the enrich policy:

```
PUT /_ingest/pipeline/device_lookup
{
  "description": "Enrich device information",
  "processors": [
    {
      "enrich": {
        "policy_name": "enrich-devices-policy",
        "field": "device_id",
        "target_field": "my_enriched_data",
        "max_matches": 1
      }
    }
  ]
}
```

Next, index a document and apply the ingest pipeline to it:

```
PUT /device_index/_doc/1?pipeline=device_lookup
{
  "device_id": "device1",
  "other_field": "some value"
}
```

You can call the get API to view the indexed document:

```
GET device_index/_doc/1
```

Output:

```
{
  "_index" : "device_index",
  "_type" : "_doc",
  "_id" : "1",
  "_version" : 1,
  "_seq_no" : 0,
  "_primary_term" : 1,
  "found" : true,
  "_source" : {
    "my_enriched_data" : {
      "Device Location" : "London",
      "Device Owner" : "Engineering",
      "Device ID" : "device1",
      "Device Type" : "Computer"
    },
    "device_id" : "device1",
    "other_field" : "some value"
  }
}
```

As shown in the preceding output, a field named my_enriched_data has been added to the document. It contains Device Location, Device Owner, Device ID, and Device Type, which come from the test.csv file that we imported earlier. Device ID is included even though it is not listed in enrich_fields, because it is the policy's match field. The enrich processor looked up the device_id value device1 in the enrich index. That index was built from master_data_from_csv when the policy was executed, so later changes to master_data_from_csv take effect only after the policy is executed again. In other words, the document now contains more information than was sent. This is the result of enrichment.
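The lookup described above can be modeled in a few lines of Python. This is a simplified, hypothetical sketch rather than the processor's actual code. It assumes an in-memory dict (LOOKUP) in place of the enrich index. It mirrors the documented behavior of the processor's field, target_field and max_matches options, plus ignore_missing, an option that this article does not use:

- When max_matches is 1, the target field holds an object.
- When max_matches is greater than 1, the target field holds an array.
- A document whose value has no match passes through unchanged.

```python
import copy

# Hypothetical stand-in for the enrich index: match value -> matching entries.
LOOKUP = {
    "device1": [{"Device ID": "device1", "Device Location": "London",
                 "Device Owner": "Engineering", "Device Type": "Computer"}],
    "device2": [{"Device ID": "device2", "Device Location": "Toronto",
                 "Device Owner": "Consulting", "Device Type": "Mouse"}],
}


def apply_enrich(doc, lookup, field, target_field, max_matches=1,
                 ignore_missing=False):
    """Simplified, hypothetical model of one enrich processor invocation."""
    if field not in doc:
        if ignore_missing:
            return doc  # quietly leave documents without the field unchanged
        # The real processor raises an error here; KeyError is this sketch's choice.
        raise KeyError(f"field [{field}] not present")
    matches = lookup.get(doc[field], [])[:max_matches]
    if not matches:
        return doc  # no match: the document passes through unchanged
    enriched = copy.deepcopy(doc)
    # max_matches == 1 -> a single object; > 1 -> an array, even for one hit.
    value = matches[0] if max_matches == 1 else matches
    enriched[target_field] = copy.deepcopy(value)
    return enriched


doc = {"device_id": "device1", "other_field": "some value"}
result = apply_enrich(doc, LOOKUP, "device_id", "my_enriched_data")
print(result["my_enriched_data"]["Device Owner"])  # Engineering
```

The input document is copied rather than modified in place, so the same LOOKUP can enrich many documents independently.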

In the preceding steps, we applied the enrich processor by specifying the pipeline in the request URL when indexing the document. In practice, it is usually more convenient to configure the pipeline in the index settings instead. You can do this by setting index.default_pipeline:

```
PUT device_index/_settings
{
  "index.default_pipeline": "device_lookup"
}
```

Now, all documents sent to device_index pass through the device_lookup pipeline, so you no longer need to add pipeline=device_lookup to the URL. Run the following PUT request to check whether the pipeline works as expected:

```
PUT /device_index/_doc/2
{
  "device_id": "device2",
  "other_field": "some value"
}
```

You can run the following command to view the document we just ingested:

```
GET device_index/_doc/2
```

The following output shows the document:

```
{
  "_index" : "device_index",
  "_type" : "_doc",
  "_id" : "2",
  "_version" : 1,
  "_seq_no" : 1,
  "_primary_term" : 1,
  "found" : true,
  "_source" : {
    "my_enriched_data" : {
      "Device Location" : "Toronto",
      "Device Owner" : "Consulting",
      "Device ID" : "device2",
      "Device Type" : "Mouse"
    },
    "device_id" : "device2",
    "other_field" : "some value"
  }
}
```

The my_enriched_data field is present even though the request did not specify a pipeline, which confirms that the default pipeline was applied.

In most cases, a document should be enriched at ingest time, so that the document stored in Elasticsearch already contains the information required to search or view it. In this article, we showed how the enrich processor running on an ingest node enriches documents by using master data imported from a CSV file. This feature is useful for merging master data into documents as they are ingested into Elasticsearch.

Declaration: This article is adapted from "Elastic Helper in the China Community" with the authorization of the author Liu Xiaoguo. We reserve the right to investigate unauthorized use. Source: https://elasticstack.blog.csdn.net/
