By Afzaal Ahmad Zeeshan, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

Ever wondered how we once had the patience to search through huge directories and lengthy files? Even for a small piece of information, we had to go through hundreds of pages containing thousands of words, which was tedious. Now, with the advent of powerful search engines (thanks to efficient and dynamic algorithms), information retrieval has become responsive and fast.

These search engines meet user expectations remarkably well, and the key to that is data organization: different types of data are indexed efficiently so that the engine can answer actual user queries. Whether it is a simple query to find users by name, employees by role, software products by category, or services available to a specific user, the data, its related keywords, and pointers to its relevant metadata are all compiled and organized in the database system using different indexing techniques. These techniques speed up search queries and help fetch the most relevant results.
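The keyword-to-document organization described above is usually an inverted index, the same kind of structure Elasticsearch builds through Lucene. The following is a minimal sketch of the idea, not Elasticsearch's implementation: the helper names (`tokenize`, `buildIndex`, `search`) are hypothetical, tokenization is a naive lowercase split, and there is no relevance ranking.

```javascript
// Naive tokenizer: lowercase the text and split on anything that is not a letter or digit.
function tokenize(text) {
  return text.toLowerCase().split(/[^a-z0-9]+/).filter(Boolean);
}

// Build an inverted index: keyword -> Set of IDs of the documents containing it.
function buildIndex(docs) {
  const index = new Map();
  for (const doc of docs) {
    for (const term of tokenize(doc.text)) {
      if (!index.has(term)) index.set(term, new Set());
      index.get(term).add(doc.id);
    }
  }
  return index;
}

// Return the IDs of documents containing every query term (AND semantics), sorted.
function search(index, query) {
  const terms = tokenize(query);
  if (terms.length === 0) return [];
  let result = null;
  for (const term of terms) {
    const ids = index.get(term) || new Set();
    result = result === null
      ? new Set(ids)
      : new Set([...result].filter((id) => ids.has(id)));
  }
  return [...result].sort((a, b) => a - b);
}

const docs = [
  { id: 1, text: 'Alice, software engineer' },
  { id: 2, text: 'Bob, sales manager' },
  { id: 3, text: 'Carol, senior software engineer' },
];
const index = buildIndex(docs);
console.log(search(index, 'software engineer')); // [ 1, 3 ]
```

A query only touches the entries for its own terms instead of scanning every document, which is why lookups stay fast as the collection grows.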

Enterprises often build their own search solutions because of data privacy and protection concerns. These solutions range from simple filtering and complex queries all the way to real-time analysis and analytics. This approach means that, apart from managing infrastructure, enterprises also have to hire engineers and operations teams to organize and manage the search services for their customers. It also means that as the user base grows, the infrastructure and its complexity grow too, so you have to redesign both the infrastructure and the algorithms that index and store results in order to keep query performance consistent for your users.

Indexing is closely tied to search engine spiders, also known as web crawlers. Search engines use these crawlers to skim through the most relevant details of resources and maintain indexes of their content, which are later used to answer user queries immediately. Indexing is the core of every search algorithm; however, it depends on other design decisions. It needs well-designed data structures and design factors to produce fast and accurate responses to user queries. For instance, a merge factor decides the strategy for updating and merging index content, and fault tolerance ensures the reliability of the chosen indexing scheme.
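To illustrate what a merge factor controls, here is a simplified sketch of a level-based merge policy, loosely modeled on the `mergeFactor` setting of Lucene's older log merge policy. The `SegmentedIndex` class and its methods are hypothetical and only track document counts: new documents are flushed as small segments, and whenever `mergeFactor` segments share a level, they are merged into one larger segment.

```javascript
// Hypothetical, simplified merge policy. Each flush creates a level-0 segment;
// when `mergeFactor` segments share a level, they merge into one segment at
// the next level. Not Lucene's actual implementation.
class SegmentedIndex {
  constructor(mergeFactor) {
    this.mergeFactor = mergeFactor;
    this.segments = []; // each segment: { level, docCount }
    this.mergeCount = 0;
  }

  // Flush a batch of newly indexed documents as a new small segment.
  flush(docCount) {
    this.segments.push({ level: 0, docCount });
    this.maybeMerge(0);
  }

  maybeMerge(level) {
    const sameLevel = this.segments.filter((s) => s.level === level);
    if (sameLevel.length < this.mergeFactor) return;
    // Replace all same-level segments with one merged segment one level up.
    const merged = {
      level: level + 1,
      docCount: sameLevel.reduce((sum, s) => sum + s.docCount, 0),
    };
    this.segments = this.segments.filter((s) => s.level !== level);
    this.segments.push(merged);
    this.mergeCount += 1;
    this.maybeMerge(level + 1); // a merge can cascade to the next level
  }
}

const idx = new SegmentedIndex(3);
for (let i = 0; i < 9; i++) idx.flush(10);
console.log(idx.segments);   // [ { level: 2, docCount: 90 } ]
console.log(idx.mergeCount); // 4
```

A smaller merge factor keeps fewer segments around, so a query has fewer segments to search, at the cost of merging more often while indexing; a larger merge factor makes the opposite trade-off.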

Moreover, the generated indexes might not be sufficient on their own to produce the search results. We might have to add other information to them, create multiple indexes, or create nested ones, and this keeps going as the data and queries grow.

In these cases, normal indexes, such as database indexes or file indexes, do not help. Instead, we need to create and manage services that handle multi-dimensional data, data coming from different sources, and different components requesting information. This can be solved by using solutions that other organizations have already built and battle-tested. A few of these open source solutions are:

Some databases also offer industry-leading built-in solutions, such as full-text search in PostgreSQL and SQL Server.

In these cases, you need to set up your own servers and infrastructure. If you are a new startup, an organization that does not yet know how much its infrastructure needs to scale, or you are just exploring, a cloud-based solution from Alibaba Cloud is what you are looking for!

Indexing is especially critical when searching across a variety of data types, such as images, audio files, and long documents in various formats and extensions, because the data must be collected, stored, and parsed to meet the requirements of each format. On Alibaba Cloud, you can ingest heterogeneous data and parse it as needed, which makes searching everything on the cloud simpler. For example, millions of new resources and their events (added, stopped, restarted, etc.) appear on the cloud regularly, and storing, managing, and retrieving information about these resources would not be easy without powerful backend indexing and caching algorithms. This is where Alibaba Cloud's search service comes into play.

Elasticsearch is an open source solution by Elastic, originally released under the Apache License 2.0. Alibaba Cloud Elasticsearch builds on the same open-source Elasticsearch engine and adds a variety of features that take the hassle of setup and configuration off your hands. These features include automatic integration, access control and monitoring, alarms, smart reporting, and the freedom to use whatever plug-ins your needs and dependencies call for. Alibaba Cloud also makes X-Pack, a package that gives enterprises more control over their clusters, available to customers of this service. Being distributed, Alibaba Cloud Elasticsearch supports real-time data ingestion, query search, and automatic clustering for structured and unstructured data, where every granular action is index-based.

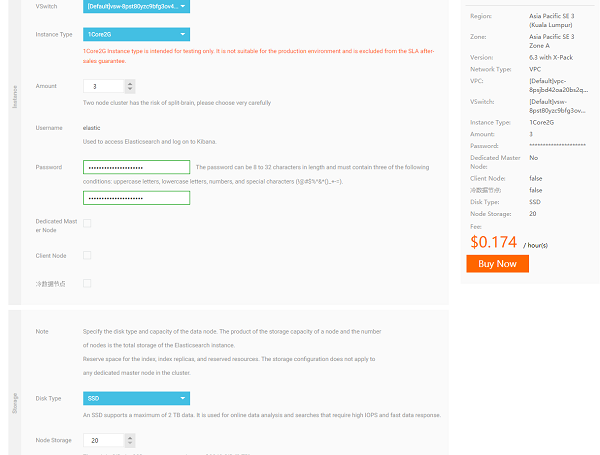

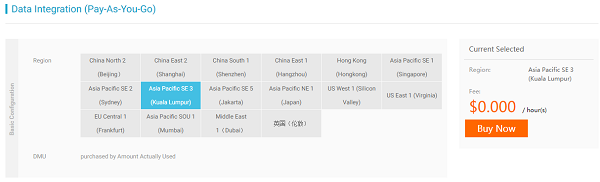

Creating the service in the Alibaba Cloud portal is simple: you create it like any other resource. You can use your credits, pay up front, or use the service on a pay-as-you-go basis.

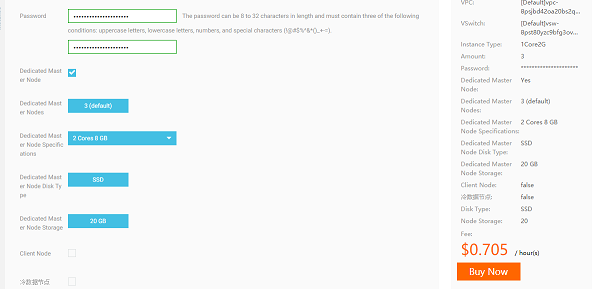

You can design the overall structure of your service, for example by adding a dedicated master node to the cluster.

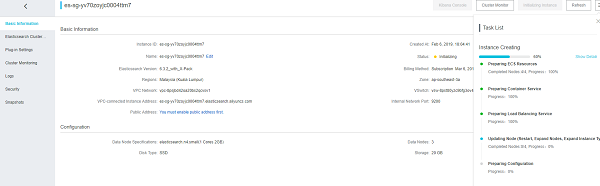

This change automatically updates the pricing information for your cluster. As you can see, adding the master node changes the price, which now includes the cost of that node. A dedicated master node improves your cluster's performance, but it costs more. Once the cluster is created, you can access its information inside the portal; you can also open the Kibana dashboards, which I will show in a minute.

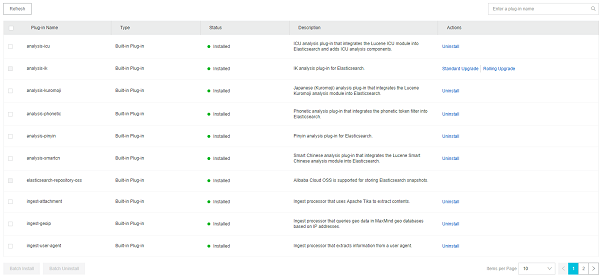

You can also check the status of your deployment to see what is currently happening; as you can see, the deployment is in progress. Any changes you require for the search service can be audited inside the portal. Once the service is up and running, we can explore the other offerings available inside it, such as the packages that Alibaba Cloud makes available as part of this purchase:

These are built-in plugins that Alibaba Cloud installs automatically along with your setup, so you can quickly set up your search cluster and ingest your data.

Most of the information you need to connect to the service is available on the resource's overview page, including the public and private endpoints and ports for connections. Modifying most of this information (apart from the name) requires the service to be redeployed on the servers.
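As a small illustration of turning the overview-page details into a connection setting, the hypothetical `buildNodeUrl` helper below assembles a node URL of the kind Elasticsearch clients commonly accept as a host setting; check your client's documentation for how it expects credentials. The endpoint and password are placeholders, and the `elastic` user name and port 9200 are assumptions to verify against your own instance's overview page.

```javascript
// Hypothetical helper: assemble a node URL from the overview-page values.
// The endpoint, user name, and password below are placeholders.
function buildNodeUrl({ endpoint, port = 9200, useHttps = false, username, password }) {
  const url = new URL(`${useHttps ? 'https' : 'http'}://${endpoint}`);
  url.port = String(port);
  if (username) {
    // The URL setters percent-encode special characters such as '@'.
    url.username = username;
    url.password = password || '';
  }
  return url.toString();
}

// Use the private (VPC) endpoint when your code runs inside the same network,
// and the public endpoint otherwise.
const privateUrl = buildNodeUrl({
  endpoint: 'es-xxxxxxxx.elasticsearch.aliyuncs.com',
  username: 'elastic',
  password: 'your-password',
});
console.log(privateUrl);
```

In real code, read the password from an environment variable or a secrets store rather than hard-coding it.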

To answer user queries quickly, Alibaba Cloud Elasticsearch exposes a RESTful API that follows Elasticsearch semantics, meaning you can connect to the service using your favorite runtime or SDK. Client SDKs are available on GitHub for Node.js, .NET Core, Java, Python, and more. Let me search (no pun intended) for a few of them for you:

Connect to the service from your own environment and try it out yourself. For Node.js (sample adapted from the package page at https://www.npmjs.com/package/elasticsearch), it can be as easy as:

```javascript
// Legacy Elasticsearch client for Node.js: npm install elasticsearch
var elasticsearch = require('elasticsearch');

var client = new elasticsearch.Client({
  // Replace with the endpoint and port shown on your instance's overview page
  host: 'localhost:9200',
  log: 'trace'
});

client.ping({
  // ping usually has a 3000ms timeout
  requestTimeout: 1000
}, function (error) {
  if (error) {
    console.trace('elasticsearch cluster is down!');
  } else {
    console.log('All is well');
  }
});

// You can use client.index() to add a few documents for try-outs.

// `await` is only valid inside an async function in a CommonJS module
async function run() {
  try {
    const response = await client.search({
      q: 'pants'
    });
    console.log(response.hits.hits);
  } catch (error) {
    console.trace(error.message);
  }
}

run();
```

Each SDK also exposes dedicated APIs for distinct operations. For instance, the Index API adds a JSON document to an index and makes it searchable, automatically creating the index and its mapping if they do not exist yet, and the Search API retrieves the matching documents. Then there are the Get, Delete, and Update APIs, which perform the remaining CRUD operations as needed.
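Under the hood, each of these SDK calls is a plain HTTP request. The sketch below builds (but does not send) the requests for the Search API and the single-document CRUD APIs, assuming Elasticsearch 7.x-style paths (`_doc`, `_update`); older versions that still use mapping types have different paths. The `products` index, its `name` field, and the helper names `searchRequest` and `crudRequests` are hypothetical. In the legacy Node.js client, `client.search()`, `client.index()`, `client.get()`, `client.update()`, and `client.delete()` issue these requests for you. Note that `q: 'pants'` in the sample above is a URI search across fields, while the `match` body below targets a single field.

```javascript
// Search API: a full-text `match` query on one field, sent as a JSON body.
function searchRequest(index, field, text, size = 10) {
  return {
    method: 'POST', // the Search API accepts GET or POST with a JSON body
    path: `/${encodeURIComponent(index)}/_search`,
    body: { size, query: { match: { [field]: text } } },
  };
}

// Single-document CRUD APIs, using 7.x-style paths.
function crudRequests(index, id, doc, partial) {
  const base = `/${encodeURIComponent(index)}`;
  const docId = encodeURIComponent(id);
  return {
    index: { method: 'PUT', path: `${base}/_doc/${docId}`, body: doc },
    get: { method: 'GET', path: `${base}/_doc/${docId}` },
    // A partial update wraps the changed fields in a `doc` object.
    update: { method: 'POST', path: `${base}/_update/${docId}`, body: { doc: partial } },
    delete: { method: 'DELETE', path: `${base}/_doc/${docId}` },
  };
}

const search = searchRequest('products', 'name', 'pants');
console.log(search.method, search.path, JSON.stringify(search.body));

const reqs = crudRequests('products', '42', { name: 'blue pants', price: 30 }, { price: 25 });
for (const [api, r] of Object.entries(reqs)) {
  console.log(api, r.method, r.path);
}
```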

The internal mechanics keep data organization smooth. The base Elasticsearch API also provides functions that work on many documents at once, namely the Multi Get and Bulk APIs. Multi Get retrieves multiple documents, identified by index and ID, in a single request, while the Bulk API improves speed and throughput by performing many index and delete operations in a single API call. At the time of writing, the Elasticsearch documentation listed Perl and Python as the official clients that provide helpers for bulk operations.
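A minimal sketch of what the Bulk and Multi Get request bodies look like, with hypothetical helper names (`buildBulkBody`, `buildMgetBody`) and a hypothetical `products` index. The Bulk API expects newline-delimited JSON sent to `POST /_bulk` with the `application/x-ndjson` content type: each operation is an action line, index operations are followed by a source line, and the body must end with a newline. Multi Get takes a `docs` array of index/ID pairs at `POST /_mget`.

```javascript
// Build an NDJSON body for the Bulk API from a list of index/delete operations.
function buildBulkBody(index, ops) {
  const lines = [];
  for (const op of ops) {
    if (op.type === 'index') {
      lines.push(JSON.stringify({ index: { _index: index, _id: op.id } })); // action line
      lines.push(JSON.stringify(op.doc)); // source line
    } else if (op.type === 'delete') {
      lines.push(JSON.stringify({ delete: { _index: index, _id: op.id } })); // no source line
    } else {
      throw new Error(`unsupported bulk operation: ${op.type}`);
    }
  }
  return lines.join('\n') + '\n'; // the body must end with a newline
}

// Build a Multi Get body: several documents by index and ID in one request.
function buildMgetBody(index, ids) {
  return { docs: ids.map((id) => ({ _index: index, _id: id })) };
}

const bulkBody = buildBulkBody('products', [
  { type: 'index', id: '1', doc: { name: 'blue pants' } },
  { type: 'index', id: '2', doc: { name: 'red shirt' } },
  { type: 'delete', id: '3' },
]);
console.log(bulkBody);
console.log(JSON.stringify(buildMgetBody('products', ['1', '2'])));
```

Three operations travel in one HTTP round trip here, which is where the Bulk API's speed advantage over separate index and delete calls comes from.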

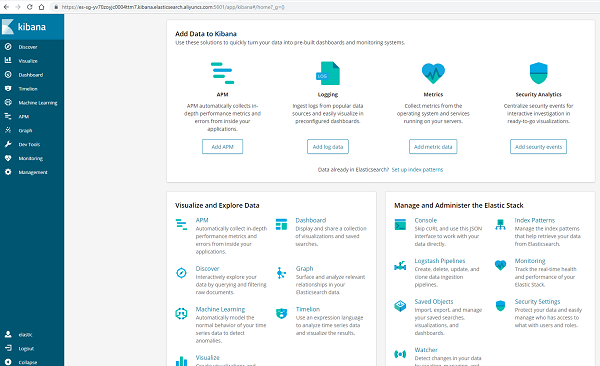

The Kibana dashboards are linked from the overview page, and you can visit them for most dashboard-oriented tasks:

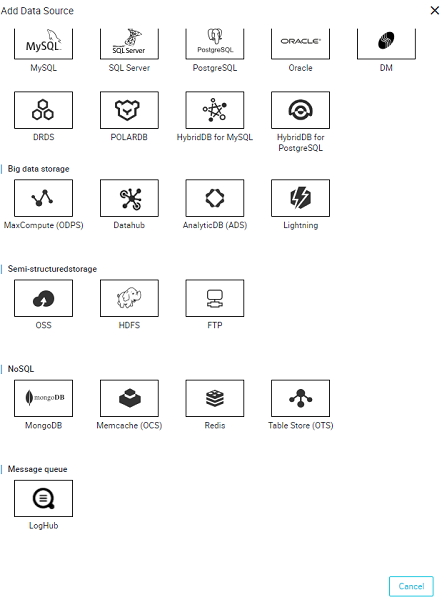

The dashboard supports many operations and provides a graphical interface on top of the native command-line interface, which you can use to perform actions quickly. Documents can also be ingested using the Data Integration service, which is a free service. You can use it to automatically ingest data from a pool of resources into your Elasticsearch service on Alibaba Cloud.

The Data Integration service lets you synchronize data from Alibaba Cloud resources to Alibaba Cloud Elasticsearch, and you can schedule when the data is synchronized between these services. You can always visit Alibaba Cloud's website to find out which services support this feature. More information on how to use the Data Integration tool for this purpose is also provided in the same article.
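Data Integration handles the scheduling for you. To illustrate the general idea behind a timed, incremental sync, here is a hypothetical sketch in which each run picks up only the rows changed since the previous run's watermark. This is not how Data Integration is implemented; the `syncStep` helper, the row shape, and the `updatedAt` field are all assumptions.

```javascript
// Hypothetical incremental sync step: select rows changed since the last
// watermark and turn them into index operations for Elasticsearch.
function syncStep(rows, lastWatermark) {
  const changed = rows
    .filter((r) => r.updatedAt > lastWatermark)
    .sort((a, b) => a.updatedAt - b.updatedAt);
  const ops = changed.map((r) => ({ type: 'index', id: String(r.id), doc: r.data }));
  // Advance the watermark to the newest change seen, or keep it if nothing changed.
  const newWatermark = changed.length ? changed[changed.length - 1].updatedAt : lastWatermark;
  return { ops, newWatermark };
}

const rows = [
  { id: 1, updatedAt: 100, data: { name: 'blue pants' } },
  { id: 2, updatedAt: 250, data: { name: 'red shirt' } },
  { id: 3, updatedAt: 300, data: { name: 'green hat' } },
];

// The first run picks up everything; the second run only sees rows updated after 300.
let { ops, newWatermark } = syncStep(rows, 0);
console.log(ops.length, newWatermark); // 3 300
rows.push({ id: 4, updatedAt: 420, data: { name: 'black shoes' } });
({ ops, newWatermark } = syncStep(rows, newWatermark));
console.log(ops.map((o) => o.id), newWatermark); // [ '4' ] 420
```

Using document IDs taken from the source rows makes re-running a step idempotent, because indexing the same ID again overwrites the document. A strict `>` comparison can miss rows committed late with a timestamp equal to the watermark, so real pipelines often re-read a small overlap window.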

The Data Integration service lets you connect multiple data sources and capture data from them. It works much like a data lake, where you ingest multiple sources into a single destination.

This way, you can quickly ingest data from multiple sources and connect them in a single search service for your users, or for internal use.

This is a brief overview of how to use this service. In the next couple of articles, we will explore how to connect caching services to return search results to users quickly.
