Stable Diffusion is a text-to-image latent diffusion model released in 2022 that generates images from text prompts. Like other diffusion models, it starts from random Gaussian noise and denoises it step by step to obtain the desired sample. Because pre-trained weights are publicly available, users can generate realistic, detailed images without training a model or collecting a large dataset themselves. The project is already used in a variety of fields, including computer vision, digital art, and video games.

This article describes how to quickly build a personal text-to-image generation service on an Alibaba Cloud AMD server with the OpenAnolis AI container service.

When you create an ECS instance, select an instance type based on the size of the model. Inference consumes substantial computing resources and a large amount of memory at run time. To keep the model running stably, select the ecs.g8a.16xlarge instance type. Stable Diffusion also needs to download several model files, which take up considerable storage, so allocate at least 100 GB of disk space when you create the instance. Finally, to speed up environment installation and model downloads, set the instance bandwidth to 100 Mbit/s.
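
Once the instance is up, it can help to confirm that it actually has the resources described above. The following is a minimal sketch and not part of the original procedure; the helper names `disk_total_gib` and `mem_total_gib` are hypothetical, and the sketch assumes a Linux system with `df`, `awk`, and `nproc` available.

```shell
# Print the total size, in whole GiB, of the filesystem that holds PATH ($1).
disk_total_gib() {
    df -Pk "$1" | awk 'NR == 2 { print int($2 / 1024 / 1024) }'
}

# Print total physical memory in whole GiB (reads /proc/meminfo, Linux only).
mem_total_gib() {
    awk '/^MemTotal:/ { print int($2 / 1024 / 1024) }' /proc/meminfo
}

echo "vCPUs:        $(nproc --all)"
echo "Memory (GiB): $(mem_total_gib)"
echo "Disk / (GiB): $(disk_total_gib /)"
```

The reported disk size may be slightly below the nominal size of the disk because of filesystem overhead.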

Alibaba Cloud Linux 3.2104 LTS 64-bit is chosen for the instance operating system.

For more information about how to install Docker on Alibaba Cloud Linux 3, see Install and use Docker (Linux). After the installation is completed, make sure that the Docker daemon has been enabled.

systemctl status docker

The OpenAnolis community provides a variety of container images based on Anolis OS, including AMD-optimized PyTorch images. You can use these images to create a PyTorch runtime environment.

docker pull registry.openanolis.cn/openanolis/pytorch-amd:1.13.1-23-zendnn4.1

docker run -d -it --name pytorch-amd --net host -v $HOME:/root registry.openanolis.cn/openanolis/pytorch-amd:1.13.1-23-zendnn4.1

The commands above first pull the container image, then use it to create a container named pytorch-amd that runs in detached mode. The user's home directory is mounted at /root in the container so that your work is preserved.
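
To confirm that the container started, you can ask Docker for its state. The following is a minimal sketch; `container_running` is a hypothetical helper name, and the check is skipped when the `docker` command is not installed.

```shell
# Succeed if Docker reports the container named $1 as running.
container_running() {
    [ "$(docker inspect --format '{{.State.Running}}' "$1" 2>/dev/null)" = "true" ]
}

# Only query Docker when the CLI is actually available.
if command -v docker >/dev/null 2>&1; then
    if container_running pytorch-amd; then
        echo "pytorch-amd is running"
    else
        echo "pytorch-amd is not running; inspect it with: docker logs pytorch-amd"
    fi
fi
```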

After the PyTorch container is created and run, run the following command to access the container environment:

docker exec -it -w /root pytorch-amd /bin/bash

You must run all subsequent commands in the container environment. If you exit unexpectedly, re-enter the container environment. To check whether the current environment is a container, run the following command:

cat /proc/1/cgroup | grep docker

# Any output indicates that you are in the container environment.

Before you deploy Stable Diffusion, you need to install some required software.

yum install -y git git-lfs wget mesa-libGL gperftools-libs

Downloading the pre-trained model later requires Git LFS, so enable it now.

git lfs install

Download the Stable Diffusion web UI source code.

git clone -b v1.5.2 https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

During deployment, Stable Diffusion web UI clones several code repositories, and these clones may fail because of network fluctuations. To avoid that, clone the repositories manually in advance.
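
Because a clone can fail on a transient network error, a small retry wrapper may be useful for the clone commands in this section. The following is a minimal sketch; `retry` and the `RETRY_DELAY` variable are hypothetical names introduced here, not part of the web UI or of Git.

```shell
# retry MAX_ATTEMPTS COMMAND [ARGS...]
# Runs COMMAND until it succeeds, giving up after MAX_ATTEMPTS tries.
# RETRY_DELAY (seconds between tries) defaults to 2.
retry() {
    _retry_max=$1
    shift
    _retry_n=1
    until "$@"; do
        if [ "$_retry_n" -ge "$_retry_max" ]; then
            echo "giving up after $_retry_n attempts: $*" >&2
            return 1
        fi
        _retry_n=$((_retry_n + 1))
        sleep "${RETRY_DELAY:-2}"
    done
}

# Example with one of the repositories used in this article:
# retry 3 git clone https://github.com/salesforce/BLIP.git BLIP
```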

mkdir stable-diffusion-webui/repositories && cd $_

git clone https://github.com/Stability-AI/stablediffusion.git stable-diffusion-stability-ai

git clone https://github.com/Stability-AI/generative-models.git generative-models

git clone https://github.com/crowsonkb/k-diffusion.git k-diffusion

git clone https://github.com/sczhou/CodeFormer.git CodeFormer

git clone https://github.com/salesforce/BLIP.git BLIP

After all code repositories are cloned, check out a specific commit in each repository to ensure stable generation results.

git -C stable-diffusion-stability-ai checkout cf1d67a6fd5ea1aa600c4df58e5b47da45f6bdbf

git -C generative-models checkout 5c10deee76adad0032b412294130090932317a87

git -C k-diffusion checkout c9fe758757e022f05ca5a53fa8fac28889e4f1cf

git -C CodeFormer checkout c5b4593074ba6214284d6acd5f1719b6c5d739af

git -C BLIP checkout 48211a1594f1321b00f14c9f7a5b4813144b2fb9

Stable Diffusion needs a pre-trained model at run time. Because the model files are large (about 11 GB), download them manually in this step.

cd ~ && mkdir -p stable-diffusion-webui/models/Stable-diffusion

wget "https://www.modelscope.cn/api/v1/models/AI-ModelScope/stable-diffusion-v1-5/repo?Revision=master&FilePath=v1-5-pruned-emaonly.safetensors" -O stable-diffusion-webui/models/Stable-diffusion/v1-5-pruned-emaonly.safetensors
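
An interrupted download can leave a truncated checkpoint that only fails later, when the model is loaded. The following is a minimal sketch of a sanity check; `file_size_bytes` and `looks_complete` are hypothetical helpers, and the 1 GB floor is an arbitrary assumption chosen only to catch obviously incomplete files, not the real size of the checkpoint.

```shell
# Print the size of file $1 in bytes.
file_size_bytes() {
    wc -c < "$1" | tr -d ' '
}

# Succeed if $1 is a regular file of at least $2 bytes.
looks_complete() {
    [ -f "$1" ] && [ "$(file_size_bytes "$1")" -ge "$2" ]
}

MODEL="$HOME/stable-diffusion-webui/models/Stable-diffusion/v1-5-pruned-emaonly.safetensors"
MIN_BYTES=1000000000   # assumed lower bound, not the exact file size

if looks_complete "$MODEL" "$MIN_BYTES"; then
    echo "checkpoint looks complete"
else
    echo "checkpoint is missing or truncated; download it again"
fi
```

If the server supports resuming, rerunning the `wget` command with the `-c` option continues a partial download instead of starting over.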

mkdir -p ~/stable-diffusion-webui/models/clip

git clone --depth=1 https://gitee.com/modelee/clip-vit-large-patch14.git ~/stable-diffusion-webui/models/clip/clip-vit-large-patch14

At run time, Stable Diffusion would otherwise download the ViT multimodal model from Hugging Face. Because the model has already been downloaded from a domestic mirror, modify the script so that it loads the model from the local path instead.

sed -i "s?openai/clip-vit-large-patch14?${HOME}/stable-diffusion-webui/models/clip/clip-vit-large-patch14?g" ~/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/modules/encoders/modules.py

Before you set up the Python environment, you can point pip at a mirror to speed up downloading the dependency packages.

mkdir -p ~/.config/pip && cat > ~/.config/pip/pip.conf <<EOF

[global]

index-url=http://mirrors.cloud.aliyuncs.com/pypi/simple/

[install]

trusted-host=mirrors.cloud.aliyuncs.com

EOF

Install the Python runtime dependencies.

pip install cython gfpgan open-clip-torch==2.8.0 httpx==0.24.1

pip install git+https://github.com/openai/CLIP.git@d50d76daa670286dd6cacf3bcd80b5e4823fc8e1

To let ZenDNN make full use of the CPU, set two environment variables: OMP_NUM_THREADS and GOMP_CPU_AFFINITY.

cat > /etc/profile.d/env.sh <<EOF

export OMP_NUM_THREADS=\$(nproc --all)

export GOMP_CPU_AFFINITY=0-\$(( \$(nproc --all) - 1 ))

EOF
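
The backslashes before each `$` keep the expressions unexpanded in the file, so they are evaluated whenever the profile is sourced rather than when the file is written. To preview the values on the current machine, something like the following sketch could be used; `cpu_affinity_range` is a hypothetical helper that mirrors the arithmetic above.

```shell
# Print the affinity range "0-(N-1)" for N logical CPUs.
cpu_affinity_range() {
    echo "0-$(( $1 - 1 ))"
}

threads=$(nproc --all)
echo "OMP_NUM_THREADS=$threads"
echo "GOMP_CPU_AFFINITY=$(cpu_affinity_range "$threads")"
```

With these settings, OpenMP uses one thread per logical CPU and each thread is bound to one CPU in the listed range.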

source /etc/profile

Finally, run the script to automate the deployment of the runtime environment for Stable Diffusion.

cd ~/stable-diffusion-webui

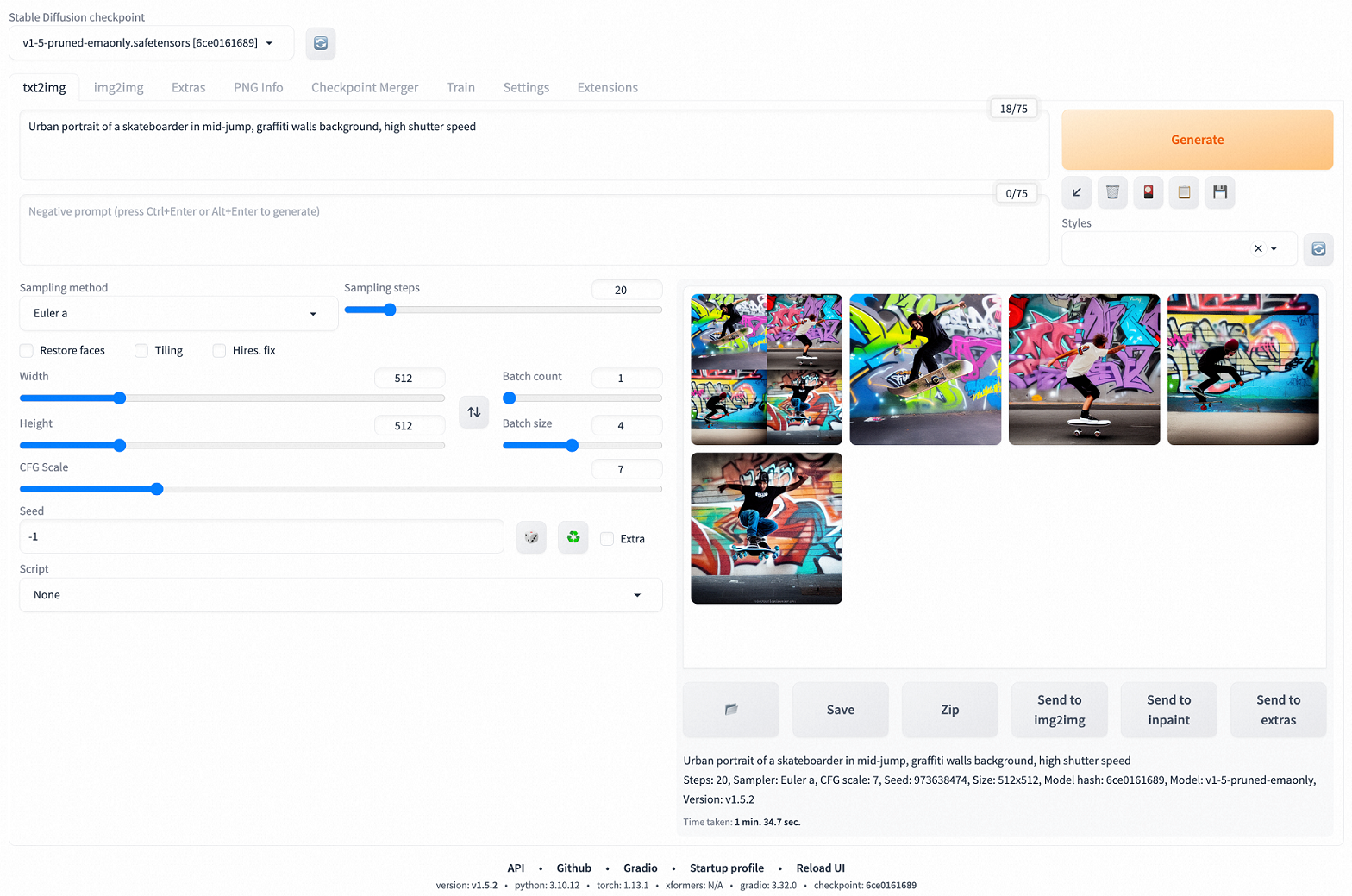

venv_dir="-" ./webui.sh -f --skip-torch-cuda-test --exit

The project source code includes a web demo that you can use to interact with Stable Diffusion in real time.

export LD_PRELOAD=/usr/lib64/libtcmalloc.so.4

export venv_dir="-"

python3 launch.py -f --skip-torch-cuda-test --skip-version-check --no-half --precision full --use-cpu all --listen

After the service is deployed, go to http://<ECS public IP address>:7860 to access the service.
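
The web UI may take some time to start accepting connections. From a second shell on the instance, a polling helper such as the following sketch could report when port 7860 starts answering; `wait_until` is a hypothetical helper, and the 300-second timeout in the example is an arbitrary assumption.

```shell
# wait_until TIMEOUT_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND about once per second until it succeeds
# or TIMEOUT_SECONDS have elapsed.
wait_until() {
    _wait_timeout=$1
    shift
    _wait_elapsed=0
    while ! "$@" >/dev/null 2>&1; do
        if [ "$_wait_elapsed" -ge "$_wait_timeout" ]; then
            return 1
        fi
        sleep 1
        _wait_elapsed=$((_wait_elapsed + 1))
    done
    return 0
}

# Example: poll the local web UI with wget, which was installed earlier.
# wait_until 300 wget -q -O /dev/null http://127.0.0.1:7860/ && echo "web UI is up"
```

If the page is reachable locally but not through the public IP address, check that the instance's security group allows inbound traffic on port 7860.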
