Generative AI (GenAI) models have revolutionized the way we create and experience content. These models possess the remarkable ability to generate text, images, music, and videos that closely resemble human-created content. They have become invaluable tools across industries, enabling creative expression, automation, and problem-solving.

In this article, we will explore how you can create your own GenAI model without costly investments or extensive time commitments. Training and running language models like BERT and GPT can be resource-intensive, so we will use a Stable Diffusion text-to-image generation solution as the example model. This approach offers a cost-effective alternative that still delivers impressive results.

The Diffusion Model stands as a powerful technique within the realm of generative AI specifically designed to generate lifelike, high-quality images. It operates on a probabilistic framework, iteratively refining an initial image over multiple steps or time intervals.

During training, noise is added to an image step by step, gradually degrading it until little of the original remains. The model learns to reverse this degradation one step at a time, so at generation time it can start from pure noise and recover a clean image.

The key idea is that learning to undo the noise at every noise level forces the model to capture the underlying structure and intricate patterns present in the training data. Once trained, the diffusion model can generate highly realistic and diverse images that closely resemble the training dataset.
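The noising half of this process can be sketched in a few lines. This is a minimal illustration, assuming the common DDPM-style formulation with Gaussian noise and a linear variance schedule; the article does not state which schedule the PAI solution uses, and the function names and the toy 4-pixel image are hypothetical.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Return per-step noise variances that grow linearly (an assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar[t] is the product of (1 - beta) up to step t: the share of signal kept."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, alpha_bar, rng):
    """Jump directly to a noisy image: sqrt(a) * x0 + sqrt(1 - a) * eps, eps ~ N(0, 1)."""
    signal, noise = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [signal * p + noise * rng.gauss(0.0, 1.0) for p in pixels]


rng = random.Random(0)
image = [0.5, -0.25, 1.0, 0.0]  # toy 4-pixel "image" scaled to [-1, 1]
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
slightly_noisy = add_noise(image, alpha_bars[10], rng)   # still close to the image
mostly_noise = add_noise(image, alpha_bars[-1], rng)     # almost pure noise
```

A real model is trained to predict the noise that was added at a randomly chosen step, which is what lets it run the process in reverse at generation time.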

This approach has gained significant popularity in domains such as computer vision, art, and creative applications. It empowers the creation of visually captivating images, artistic compositions, and even realistic deepfake content. The diffusion model offers a unique mechanism for generating images abundant in fine details, diverse textures, and coherent structures.

By harnessing the potential of the diffusion model, developers and artists can explore novel frontiers in image generation, content creation, and visual storytelling. It unlocks opportunities for innovative applications in areas like virtual reality, augmented reality, advertising, and entertainment.

The Diffusion text-to-image generation solution provided by Alibaba Cloud's Platform for AI (PAI) product offers a streamlined end-to-end construction process. It supports offline model training and inference, and it generates images directly from text inputs.

PAI provides an end-to-end, customizable white-box solution for text-to-image generation. Users can build text-to-image generation models tailored to their business scenarios. Alternatively, they can fine-tune the default models provided by PAI and deploy them online to generate diverse images from different text inputs.

The following components of PAI play a vital role in this process:

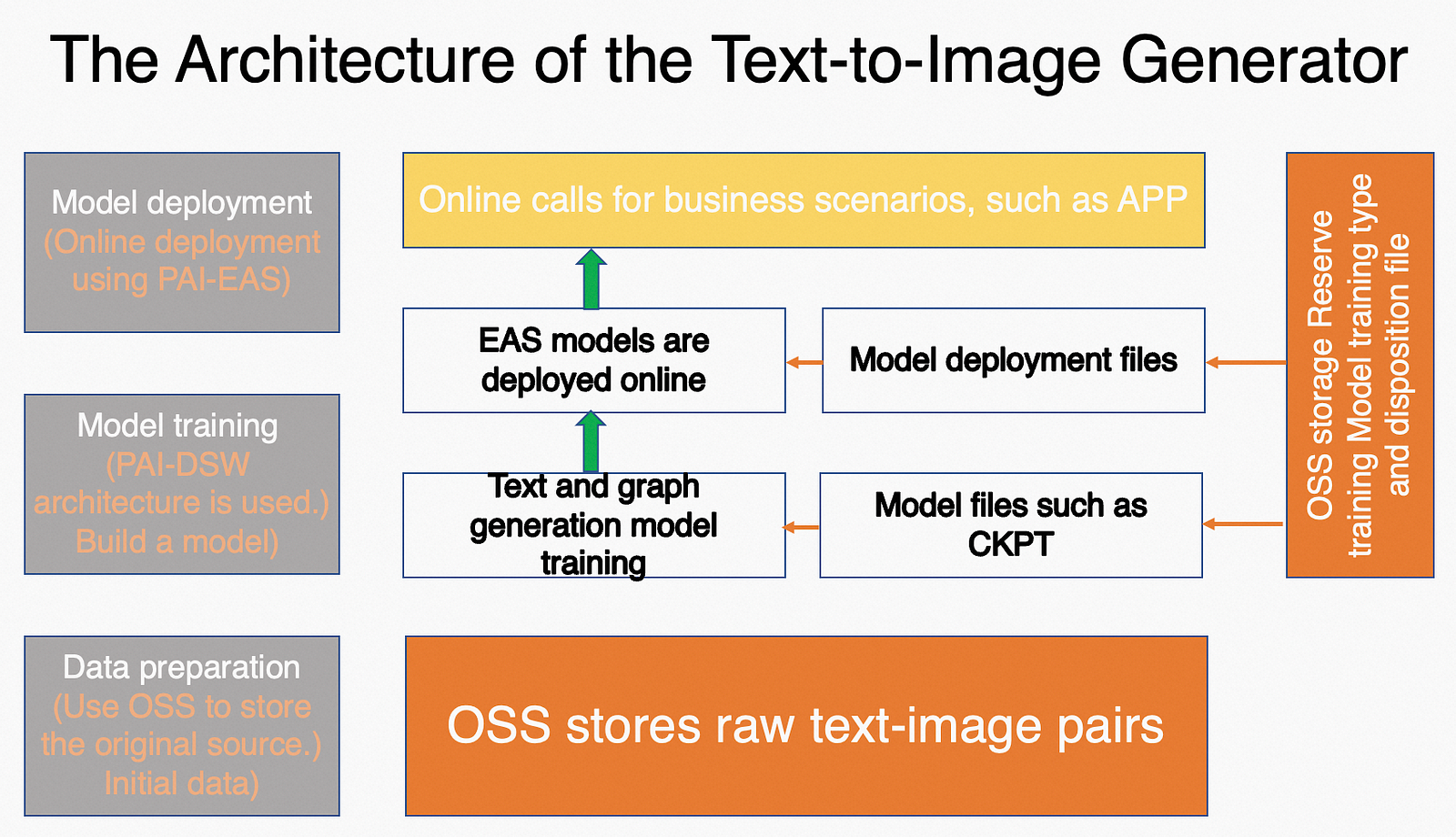

The overall architecture of text-to-image model development and deployment on PAI uses PAI-DSW for fine-tuning the model, PAI-EAS for online deployment and generation of images from text, and PAI-DLC for streamlined deep learning environment setup and management.


Before we dive into the deployment options, let's ensure we have everything ready for a smooth process. Here are the preparations you need to complete:

By completing these preparations, you'll be ready to explore the deployment options and choose the one that best suits your needs and preferences. Let's dive in!

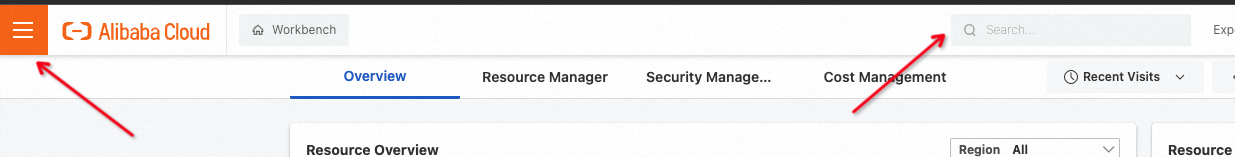

1. Log into the Alibaba Cloud Console, your gateway to powerful cloud computing solutions.

2. Navigate to the PAI page, where the magic of Machine Learning Platform for AI awaits. You can search for it or access it through the PAI console.

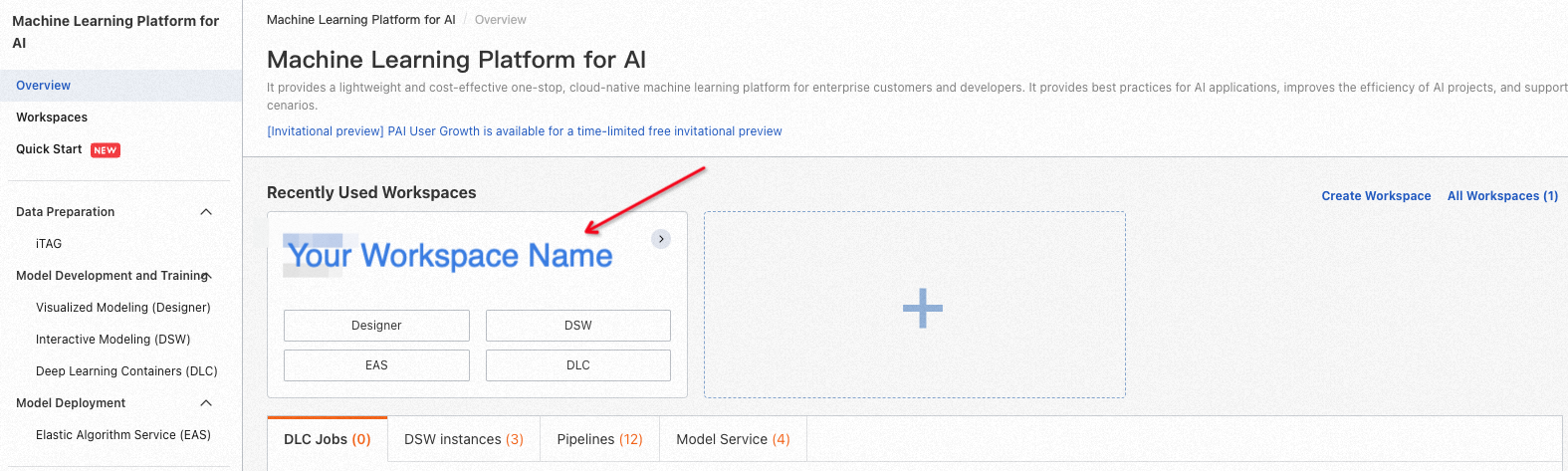

3. If you're new to PAI, fear not! Creating a workspace is a breeze. Just follow the documentation provided, and you'll be up and running in no time.

4. Immerse yourself in the world of workspaces as you click on the name of the specific workspace you wish to operate in. It's in the left-side navigation pane.

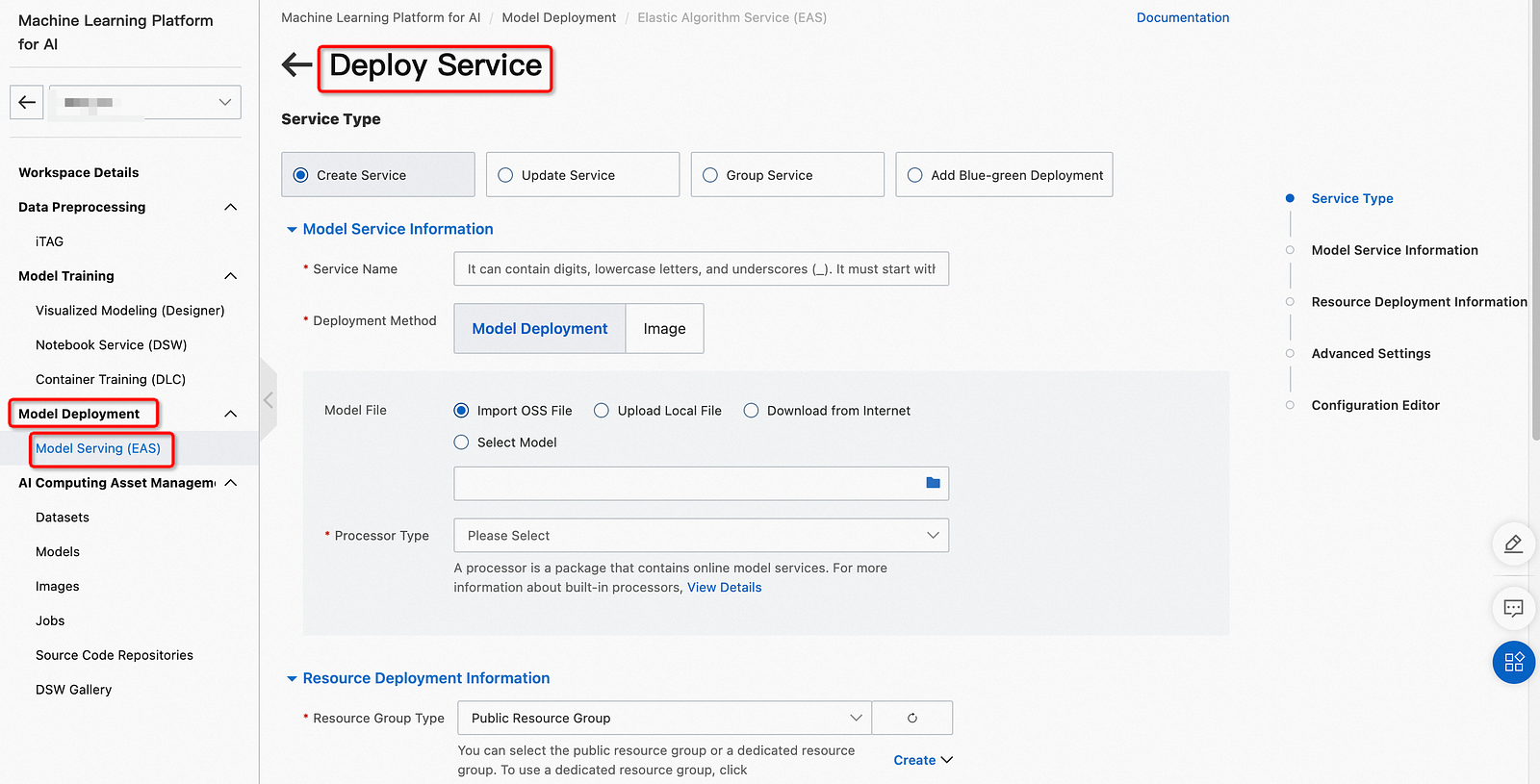

5. In the left navigation bar of the workspace page, select Model Deployment > Model Online Service (EAS) to enter the PAI EAS Model Online Service page.

6. On the Inference Service tab, click Deploy Service.

7. On the Deploy Service page, configure the parameters and click Deploy.

1. Paste the content of the following JSON file into the text box under the corresponding configuration editor.

    {
        "name": "<Replace with your own service name>",
        "processor_entry": "./app.py",
        "processor_path": "https://pai-vision-exp.oss-cn-zhangjiakou.aliyuncs.com/wzh-zhoulou/dl_eas_processor/ldm_0117/eas_code_230116_v3.tar.gz",
        "model_path": "https://pai-vision-exp.oss-cn-zhangjiakou.aliyuncs.com/wzh-zhoulou/dl_eas_processor/ldm_0117/eas_model_230116.tar.gz",
        "data_image": "registry.cn-shanghai.aliyuncs.com/pai-ai-test/eas-service:ch2en_ldm_v002",
        "metadata": {
            "resource": "<Select the resource group you use in the console>",
            "instance": 1,
            "memory": 50000,
            "cuda": "11.4",
            "gpu": 1,
            "rpc.worker_threads": 5,
            "rpc.keepalive": 50000
        },
        "processor_type": "python",
        "baseimage": "registry.cn-shanghai.aliyuncs.com/eas/eas-worker-amd64:0.6.8"
    }

The service provides a RESTful API. A Python call example is as follows.

import json

import sys

import requests

import base64

from io import BytesIO

from PIL import Image

import os

from PIL import PngImagePlugin

hosts = '<Replace with your service call address>' # Service address, which can be obtained in the call information under the service method column of the target service.

head = {

"Authorization": "<Replace with your service token>" # Service authentication information, which can be obtained in the call information under the service method column of the target service.

}

def decode_base64(image_base64, save_file):

img = Image.open(BytesIO(base64.urlsafe_b64decode(image_base64)))

img.save(save_file)

datas = json.dumps({

"text": "a cat playing the guitar",

"skip_translation": False,

"num_inference_steps": 20,

"num_images": 1,

"use_blade": True,

}

)

r = requests.post(hosts, data=datas, headers=head)

data = json.loads(r.content.decode('utf-8'))

text = data["text"]

images_base64 = data["images_base64"]

success = data["success"]

error = data["error"]

print("text: " + text)

print("num_images:" + str(len(images_base64)))

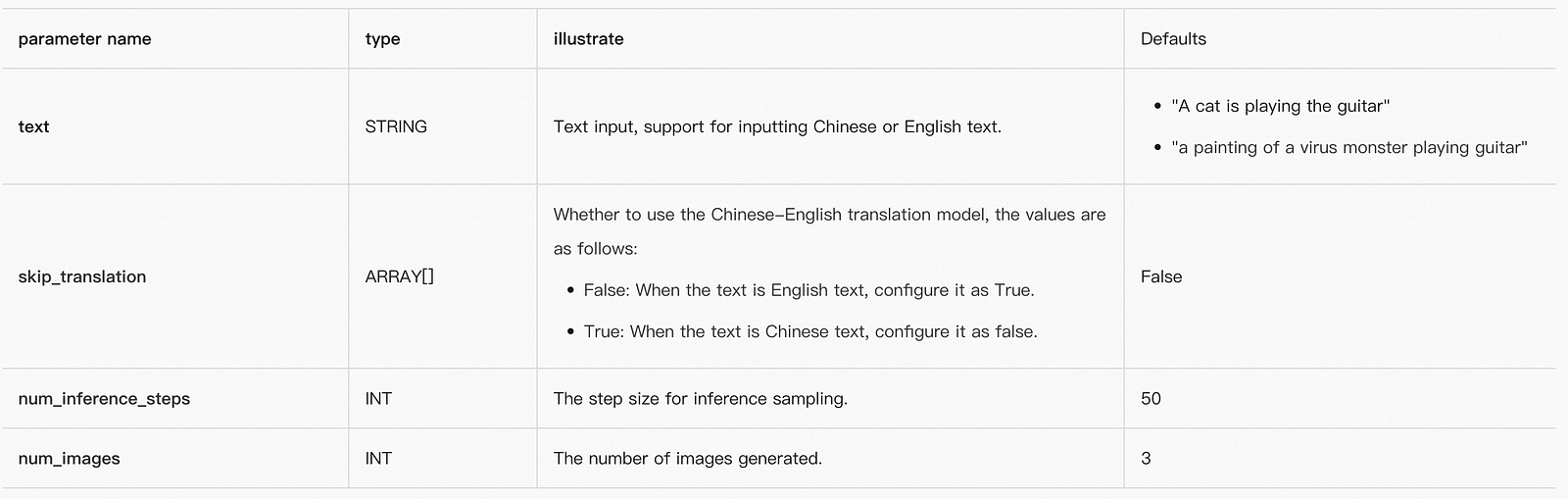

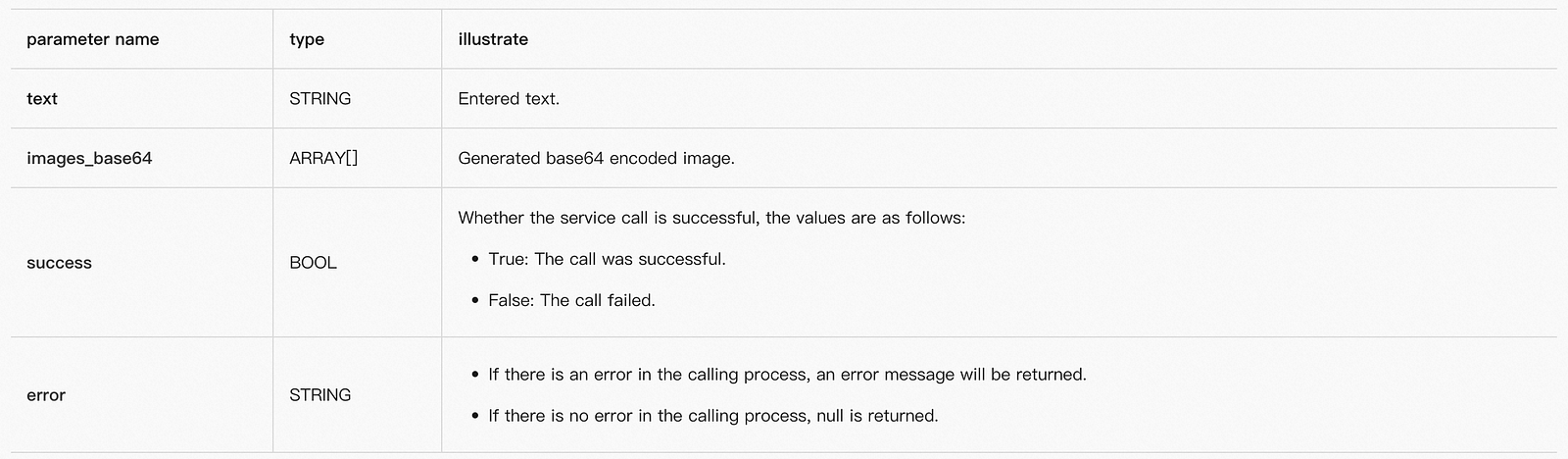

decode_base64(images_base64[0], "./decode_ldm_base64.png")The service call parameters are configured as follows.

The service return parameters are described as follows.
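When handling the response in practice, it can help to check the success flag before decoding and to save every returned image rather than only the first. The sketch below assumes the response fields used in the call example (`success`, `error`, `images_base64`) and that the images are PNG data; the function name and file naming are hypothetical.

```python
import base64
from pathlib import Path


def save_images(response, out_dir):
    """Decode every image in a parsed service response and return the written paths.

    Assumes `response` is the parsed JSON body with the fields "success",
    "error", and "images_base64", as read in the call example.
    """
    if not response.get("success"):
        raise RuntimeError(f"Generation failed: {response.get('error')}")

    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)

    paths = []
    for index, encoded in enumerate(response.get("images_base64", [])):
        # The call example decodes with the URL-safe alphabet, so do the same here.
        image_bytes = base64.urlsafe_b64decode(encoded)
        path = out_dir / f"image_{index}.png"  # hypothetical naming; PNG is assumed
        path.write_bytes(image_bytes)
        paths.append(path)
    return paths
```

With the variables from the call example, this would be used as `save_images(data, "./outputs")`.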

1. Prepare training and validation datasets.

This article uses a subset of a text and image dataset for model training. The format requirements for the training and validation datasets are as follows.

1) Data: Training dataset; Format: TSV; Columns: text column, image column (base64 encoded); File: T2I_train.tsv.

2) Data: Validation dataset; Format: TSV; Columns: text column, image column (base64 encoded); File: T2I_val.tsv.

2. Upload the dataset to the OSS Bucket. For details, see Uploading Files.
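To make the TSV layout concrete, here is a minimal sketch that writes caption and image pairs in that two-column form and reads them back. It assumes there is no header row, that the text column comes first, and that standard (not URL-safe) base64 is expected; none of these details are confirmed by the article, and the function names are hypothetical.

```python
import base64


def write_t2i_tsv(pairs, tsv_path):
    """Write (caption, image_bytes) pairs as rows of: text <TAB> base64 image."""
    with open(tsv_path, "w", encoding="utf-8", newline="\n") as handle:
        for caption, image_bytes in pairs:
            # Tabs or newlines inside a caption would break the two-column layout.
            clean_caption = " ".join(caption.split())
            encoded = base64.b64encode(image_bytes).decode("ascii")
            handle.write(f"{clean_caption}\t{encoded}\n")


def read_t2i_tsv(tsv_path):
    """Read the file back as (caption, image_bytes) pairs to check the round trip."""
    rows = []
    with open(tsv_path, encoding="utf-8") as handle:
        for line in handle:
            caption, encoded = line.rstrip("\n").split("\t", 1)
            rows.append((caption, base64.b64decode(encoded)))
    return rows
```

Reading the file back before uploading is a cheap way to catch rows where a stray tab or a truncated image would otherwise surface only during training.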

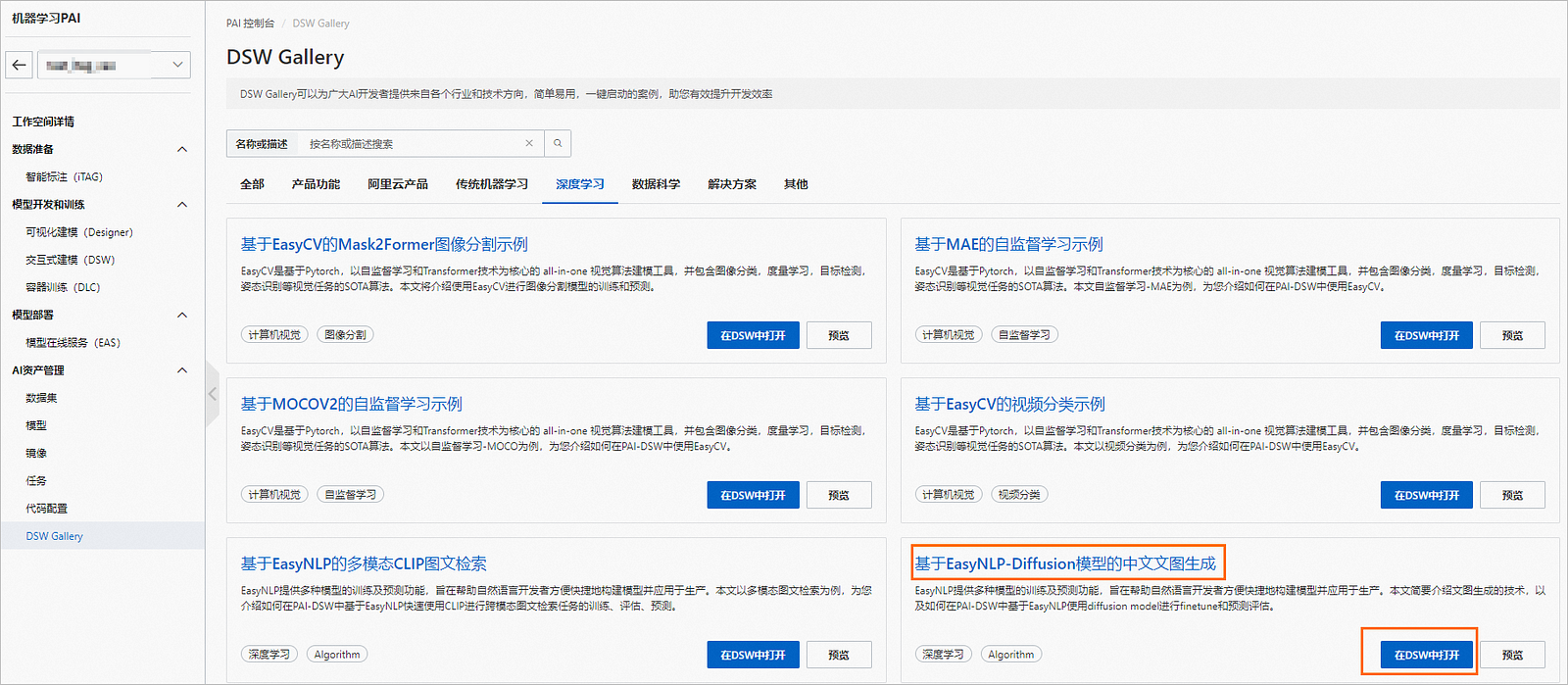

1. Open DSW Gallery. For details, see Function Trial: DSW Gallery.

2. On the Deep Learning tab, in the section for Chinese text-to-image generation based on the EasyNLP Diffusion model, click Open in DSW and follow the console instructions to build a text-to-image generation model, namely the Finetune model.

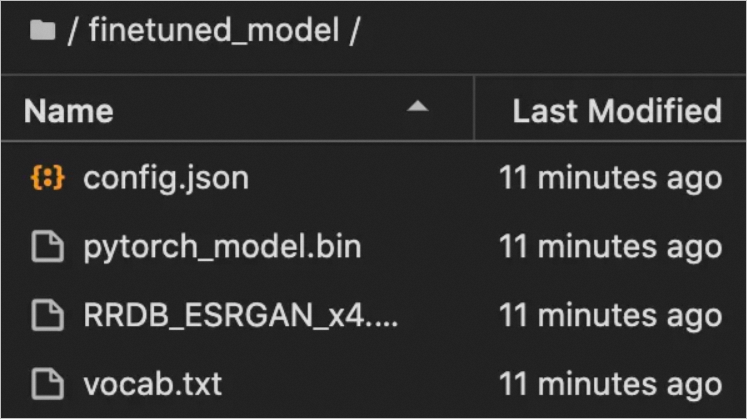

3. Package the trained model and related configuration files into tar.gz format, and upload the package to your OSS Bucket. The directory structure for the model and configuration files is shown in the figure.

You can use the following command to pack the directory into tar.gz format.

    cd finetuned_model/
    tar czf finetuned_model.tar.gz config.json pytorch_model.bin RRDB_ESRGAN_x4.pth vocab.txt

Here, finetuned_model.tar.gz is the packaged model file.
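If you prefer to script the packaging step, the sketch below does the same thing with Python's standard `tarfile` module and first checks that the four files named in the tar command are present. The function name is hypothetical, and the file list is taken only from that command.

```python
import tarfile
from pathlib import Path

# File names taken from the tar command above.
REQUIRED_FILES = ["config.json", "pytorch_model.bin", "RRDB_ESRGAN_x4.pth", "vocab.txt"]


def package_model(model_dir, archive_name="finetuned_model.tar.gz"):
    """Create the tar.gz package inside model_dir and return its path."""
    model_dir = Path(model_dir)
    missing = [name for name in REQUIRED_FILES if not (model_dir / name).is_file()]
    if missing:
        raise FileNotFoundError(f"Missing model files: {', '.join(missing)}")

    archive_path = model_dir / archive_name
    with tarfile.open(archive_path, "w:gz") as archive:
        for name in REQUIRED_FILES:
            # arcname keeps each file at the archive root, as `cd` + `tar czf` does.
            archive.add(model_dir / name, arcname=name)
    return archive_path
```

Failing early on a missing file avoids uploading an incomplete package and finding out only when the service fails to start.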

1. Deploy the model service.

2. Following Step 1: Deploy the service, paste the content of the following JSON file into the text box under the corresponding configuration editor, and deploy the Finetune model service.

    {
        "baseimage": "registry.cn-shanghai.aliyuncs.com/eas/eas-worker-amd64:0.6.8",
        "data_image": "registry.cn-shanghai.aliyuncs.com/pai-ai-test/eas-service:ch_ldm_v100",
        "metadata": {
            "cpu": 15,
            "gpu": 1,
            "instance": 1,
            "memory": 50000,
            "resource": "<Replace with your own resource group>",
            "rpc": {
                "keepalive": 50000,
                "worker_threads": 5
            }
        },
        "model_path": "<Replace with the fine-tuned model file that you packaged and uploaded to OSS>",
        "processor_entry": "./app.py",
        "processor_path": "http://pai-vision-exp.oss-cn-zhangjiakou.aliyuncs.com/wzh-zhoulou/dl_eas_processor/ch_ldm/ch_ldm_blade_220206/eas_processor_20230206.tar.gz",
        "processor_type": "python",
        "name": "<Replace with your own service name>"
    }

3. Call the model service.

Refer to Step 2: Invoke the service to call the Finetune model service. Note two differences: the text parameter only supports Chinese input, and the skip_translation call parameter is not supported.
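One way to keep the two request shapes straight is a small helper that builds the body for either service. This is a sketch under the assumption that the remaining parameters from the earlier call example (`num_inference_steps`, `num_images`, `use_blade`) carry over unchanged to the Finetune service; the helper name and its `finetuned` flag are hypothetical.

```python
import json


def build_payload(text, finetuned=False, num_inference_steps=20, num_images=1):
    """Return the JSON request body for the default or the Finetune service."""
    payload = {
        "text": text,
        "num_inference_steps": num_inference_steps,
        "num_images": num_images,
        "use_blade": True,
    }
    if not finetuned:
        # The Finetune service does not accept skip_translation, so it is
        # only sent to the default service.
        payload["skip_translation"] = False
    # json.dumps escapes non-ASCII characters by default, so Chinese prompts
    # are sent as plain ASCII and decoded by the JSON parser on the server.
    return json.dumps(payload)


default_body = build_payload("a cat playing the guitar")
finetuned_body = build_payload("一只弹吉他的猫", finetuned=True)
```

Either body can then be passed as `data=` to `requests.post`, as in the earlier call example.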

The GenAI-Diffusion Graphic Generation Solution offers a wide range of benefits and applications across industries. Built on Stable Diffusion with a web UI, it enables automated content creation for marketing and advertising, personalized recommendations, chatbots, and virtual environment generation.

One of its key benefits is the ability to automate content creation for marketing and advertising purposes. Marketers can easily generate high-quality graphics and visuals tailored to their specific needs, saving time and resources while ensuring consistency and enhancing brand messaging.

The solution also suits personalized recommendation and chatbot applications. By fine-tuning models through the web UI, businesses can provide personalized recommendations to customers, improving user experiences and increasing customer satisfaction. Additionally, chatbots powered by GenAI-Diffusion can generate visually engaging content, enhancing communication and creating a more interactive conversational experience.

Moreover, this solution is a valuable tool for creating virtual environments and generating visual content. Whether it's for gaming, virtual reality experiences, or architectural visualizations, GenAI-Diffusion generates realistic and immersive graphics. Fine-tuning through the web UI allows users to customize visuals to their specific requirements.

If you would like to learn more about the GenAI-Diffusion Graphic Generation Solution and its applications, we encourage you to contact Alibaba Cloud or visit our website at www.alibabacloud.com. Our team of experts is available to provide further information and discuss how this solution can benefit your specific needs. Together, let's explore the possibilities of automated content creation, personalized recommendations, chatbots, and virtual environments.


Farruh - November 23, 2023
