Note

To view the release notes for ack-koordinator, see Release notes.

What is ack-koordinator

ack-koordinator is a QoS-aware scheduling system. Based on the QoS classes of applications, it improves resource utilization and ensures resource supply, which prevents the performance degradation caused by resource contention. For example, ack-koordinator can colocate service-oriented applications and batch processing tasks on the same node, and it uses features such as CPU Burst and dynamic resource overcommitment to improve resource utilization while ensuring the performance of service-oriented applications. ack-koordinator is suitable for batch processing, high-performance computing (HPC), AI, and machine learning scenarios. It is the key component that Container Service for Kubernetes (ACK) uses to implement QoS-aware scheduling, and it is also required by the following features: CPU Suppress, topology-aware scheduling, dynamic resource overcommitment, load-aware scheduling, descheduling, and resource profiling.

To enable the preceding features, first install ack-koordinator in the ACK console. Then, configure a ConfigMap or add the relevant pod annotations to enable each feature.

Architecture

ack-koordinator consists of two control-plane components (Koordinator Manager and Koordinator Descheduler) and one DaemonSet component (Koordlet):

Koordinator Manager: Koordinator Manager is deployed as a Deployment and runs on a primary pod and a secondary pod to ensure high availability.

SLO Controller: SLO Controller manages resource overcommitment and dynamically adjusts the amount of resources that are overcommitted. SLO Controller also manages the SLO policies of each node.

Recommender: Recommender provides the resource profiling feature and estimates the peak resource demand of workloads. Recommender simplifies the configuration of resource requests and limits for containers.

Koordinator Descheduler: Koordinator Descheduler is deployed as a Deployment and performs descheduling.

Koordlet: Koordlet is deployed as a DaemonSet and is used to support dynamic resource overcommitment, load-aware scheduling, and QoS-aware scheduling in colocation scenarios.

Koord-Scheduler is not included in ack-koordinator. The relevant scheduling capabilities required by ack-koordinator are provided by the ACK scheduler.

Note

In an ACK Serverless cluster, ack-koordinator consists of only the Koordinator Manager component and supports the resource profiling feature.

Version description

In ack-koordinator v1.1.1-ack.1 and later, the version number uses the x.y.z-ackn format.

x.y.z: indicates the corresponding open source Koordinator version. This means that ack-koordinator supports all features provided by this open source version.

ackn: indicates feature enhancement and optimization based on the open source Koordinator version.
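To illustrate the x.y.z-ackn format, the following POSIX shell sketch splits a version string such as v1.5.0-ack1.14 into the open source Koordinator version and the ACK revision. The helper name split_ack_version is hypothetical and is not part of ack-koordinator. The sketch also accepts the older -ack.n spelling used by v1.1.1-ack.1 and v1.1.1-ack.2.

```shell
#!/bin/sh
# Hypothetical helper (not part of ack-koordinator): split a version in the
# x.y.z-ackn format into the open source Koordinator version and the ACK revision.
# Prints "x.y.z n"; returns non-zero for versions without an "-ack" suffix.
split_ack_version() (
  v="${1#v}"                 # drop an optional leading "v"
  upstream="${v%%-ack*}"     # x.y.z: open source Koordinator version
  if [ "$upstream" = "$v" ]; then
    echo "not an x.y.z-ackn version: $1" >&2
    exit 1                   # exits only the function's subshell
  fi
  ack="${v#*-ack}"           # n: ACK enhancement revision
  ack="${ack#.}"             # also accept the older "-ack.n" spelling
  echo "$upstream $ack"
)

split_ack_version v1.5.0-ack1.14   # prints: 1.5.0 1.14
split_ack_version v1.1.1-ack.2     # prints: 1.1.1 2
```

The sketch expects the component version, not a full image tag such as v1.5.0-ack1.14-4225002-aliyun; for an image tag, the commit ID and the -aliyun suffix would end up in the revision field.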

Terms

Kubernetes defines three QoS classes: Guaranteed, Burstable, and BestEffort. Koordinator is compatible with these classes and extends them with two Koordinator QoS classes: latency-sensitive (LS) and best-effort (BE). You can specify the Koordinator QoS class of a pod by using the koordinator.sh/qosClass label. Koordinator QoS classes enable fine-grained resource scheduling in colocation scenarios, which improves resource utilization while ensuring the service quality of your workloads.
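As a minimal sketch, assuming that the QoS class is set through the koordinator.sh/qosClass label in the pod metadata (as in open source Koordinator), the following hypothetical helper render_pod prints a pod manifest for either of the two classes described above. The pod name and the nginx image are placeholders; check the topic of the feature you use for any additional resource settings it requires.

```shell
#!/bin/sh
# Hypothetical helper: print a minimal pod manifest that sets the Koordinator
# QoS class through the koordinator.sh/qosClass label.
# Usage: render_pod <pod-name> <LS|BE>
render_pod() (
  name="$1"
  qos="$2"
  case "$qos" in
    LS|BE) ;;   # the two Koordinator QoS classes described in this topic
    *) echo "unsupported QoS class: $qos" >&2; exit 1 ;;
  esac
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
  labels:
    koordinator.sh/qosClass: ${qos}
spec:
  containers:
  - name: app
    image: nginx   # placeholder image
EOF
)

# Example: render a BE pod and submit it to the cluster.
#   render_pod batch-demo BE | kubectl apply -f -
```

Generating the manifest in a function keeps the class validation in one place; you can equally write the label directly into your existing workload YAML.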

Scheduling is the process of assigning pending pods to nodes. Descheduling is the process of moving pods that match eviction rules from their current node to another node. When hotspot nodes appear because resource usage is uneven across the cluster, or when pods no longer satisfy the scheduling policy because node attributes have changed, you can use descheduling to optimize resource usage and move pods to more suitable nodes. This helps keep the workloads in your cluster highly available and efficient.

Colocation is the practice of deploying workloads of different types, which have different QoS classes, on the same compute node. Colocation improves the utilization of compute and storage resources, reduces idle resources, and lowers cluster resource costs. It is suitable for workloads that must handle traffic fluctuations.

Features

The components of ack-koordinator support the features of the corresponding open source Koordinator version. When you install and configure the components of ack-koordinator, only basic feature gates are enabled by default. To use advanced features supported by open source Koordinator, you must manually enable the corresponding feature gates for ack-koordinator. For more information about the advanced features supported by open source Koordinator, see Koordinator official documentation.

Category | References | Description | Same as open source Koordinator |


Load-aware scheduling |  | This feature monitors node loads and schedules pods to nodes with lower loads to balance the load and prevent nodes from being overloaded. | Yes |

QoS-aware scheduling | Enable CPU Burst | This feature automatically detects CPU throttling events and adjusts the CPU limit to a proper value. When CPU demand spikes, containers can use CPU resources beyond their CPU limit, which ensures CPU supply for containers. | Yes |

QoS-aware scheduling | Enable topology-aware CPU scheduling | This feature pins pods to specific CPU cores on nodes. This prevents the performance degradation caused by frequent CPU context switching and by memory access across non-uniform memory access (NUMA) nodes. | No |

QoS-aware scheduling | Enable dynamic resource overcommitment | This feature monitors node loads in real time and reclaims resources that are allocated to pods but not in use so that they can be scheduled to BE workloads. This meets the resource requirements of BE workloads while ensuring fair memory scheduling among BE workloads. | Yes |

QoS-aware scheduling | Enable CPU Suppress | When dynamic resource overcommitment is enabled, this feature limits the CPU usage of BE pods on a node to a proper range to prioritize the CPU requests of LS pods. | Yes |

QoS-aware scheduling | Enable CPU QoS | This feature prevents resource contention among applications of different QoS classes and prioritizes the CPU requests of LS applications. | Yes |

QoS-aware scheduling | Enable memory QoS | This feature lets you assign different QoS classes to containers based on your business requirements, so that the memory requests of applications with higher QoS classes are prioritized while memory is still allocated fairly. | Yes |

QoS-aware scheduling | Enable resource isolation based on the L3 cache and MBA | This feature prevents contention for the L3 cache (last level cache) and memory bandwidth among applications of different QoS classes and prioritizes the memory requests of LS applications. | Yes |

QoS-aware scheduling | Dynamically modify the resource parameters of a pod | This feature lets you modify the resource parameters of pods through cgroup configuration files. You can temporarily adjust the CPU parameters, memory parameters, and disk IOPS limits of a pod or Deployment without restarting it. | No |

QoS-aware scheduling | Use DSA to accelerate data streaming | This feature uses Intel® Data Streaming Accelerator (DSA) on nodes to improve the data processing performance of data-intensive workloads, and it also improves the performance of the nearby memory access acceleration feature. | No |

QoS-aware scheduling | Enable nearby memory access acceleration | This feature safely migrates the memory that a CPU-bound application uses on a remote NUMA node to the local NUMA node. This improves the local memory hit ratio and the performance of memory-intensive applications. | No |

Descheduling | Enable the descheduling feature | Descheduling moves pods that match eviction rules on a node to other nodes. This feature is suitable for scenarios such as uneven cluster resource utilization, heavily loaded nodes, and the need to apply new scheduling policies. It helps maintain cluster health, optimize resource usage, and improve the QoS of workloads. | Yes |

Descheduling | Work with load-aware hotspot descheduling | This feature dynamically monitors node load changes and automatically relieves nodes whose loads exceed the threshold to prevent them from being overloaded. | Yes |

GPU topology awareness | Enable NUMA topology-aware scheduling | This feature schedules pods to optimal NUMA nodes. This reduces memory access across NUMA nodes to optimize the performance of your application. | No |

Resource profiling |  | This feature analyzes historical resource usage data and recommends resource specifications for containers, which simplifies the configuration of resource requests and limits for containers. | No |

Installation and management

ack-koordinator is available on the Add-ons page in the ACK console. On the Add-ons page, you can install, update, and uninstall ack-koordinator.

Install and manage ack-koordinator

You can install ack-koordinator on the Add-ons page in the ACK console. After ack-koordinator is installed, you can update ack-koordinator or modify the configurations of ack-koordinator on the Add-ons page.

If you use an ack-koordinator version earlier than 0.7, the component was installed from the Marketplace page. To migrate it to the Add-ons page, see Migrate ack-koordinator from the Marketplace page to the Add-ons page.

Install ack-koordinator: Perform the following steps to install ack-koordinator. You can check the installation progress on the Add-ons page.

Modify ack-koordinator configurations: After you modify the configurations of ack-koordinator, ACK automatically re-installs ack-koordinator based on the new configurations.

Update ack-koordinator: If you manually modified the modules of the deployed ack-koordinator component by using other methods, those modifications are overwritten when ack-koordinator is updated.

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane, choose .

On the Add-ons page, find and click ack-koordinator. In the ack-koordinator (ack-slo-manager) card, click Install.

In the Install ack-koordinator (ack-slo-manager) dialog box, configure the parameters and click OK.

Optional: In the left-side navigation pane of the cluster management page, choose to view the status of ack-koordinator.

If Deployed is displayed in the Status column, ack-koordinator is installed.

Uninstall ack-koordinator

Delete topology ConfigMaps on existing nodes

The topology-aware CPU scheduling feature creates a topology ConfigMap in the kube-system namespace for each node in an ACK cluster. ack-koordinator 0.5.1 and later automatically deletes the topology ConfigMaps of nodes that are removed from the cluster. However, when you uninstall ack-koordinator, the topology ConfigMaps of the existing nodes are retained. The retained ConfigMaps do not affect other features but occupy storage space, so we recommend that you delete them promptly.

On the Add-ons page, find ack-koordinator and follow the on-screen instructions to uninstall ack-koordinator.

Delete topology ConfigMaps.

In the left-side navigation pane, choose . On the page that appears, select kube-system from the Namespace drop-down list.

Enter -numa-info into the Name search box and select a ConfigMap that matches the ${NODENAME}-numa-info naming rule from the list. Then, click Delete in the Actions column to delete the ConfigMap.

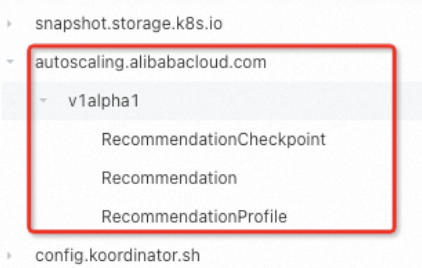

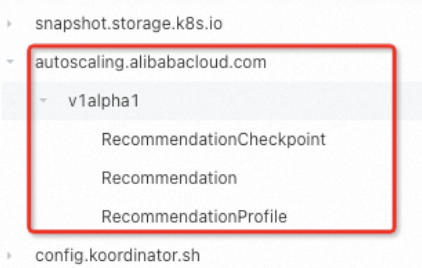
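If you prefer the command line to deleting the ConfigMaps one by one in the console, the following POSIX shell sketch filters the output of kubectl get cm -o name down to names that follow the ${NODENAME}-numa-info naming rule. The helper name filter_numa_info is hypothetical. Review the filtered list before you pass it to kubectl delete.

```shell
#!/bin/sh
# Keep only topology ConfigMaps that follow the ${NODENAME}-numa-info naming rule.
# Input: lines such as "configmap/<name>", as printed by `kubectl get cm -o name`.
filter_numa_info() {
  grep -e '-numa-info$'
}

# List the candidates first and review them:
#   kubectl get cm -n kube-system -o name | filter_numa_info
# Then delete them (xargs -r, a GNU option, skips the command on empty input):
#   kubectl get cm -n kube-system -o name | filter_numa_info | xargs -r kubectl delete -n kube-system
```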

Delete CRDs

After you uninstall ack-koordinator, some CustomResourceDefinitions (CRDs) used by ack-koordinator may be retained. The retained CRDs do not affect other features but occupy storage space. However, if you reinstall ack-koordinator, the retained CRDs may affect its features. Therefore, we recommend that you delete the retained CRDs promptly. The following table lists the CRDs.

autoscaling.alibabacloud.com | slo.koordinator.sh |

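To find retained CRDs from the command line, the following POSIX shell sketch filters the output of kubectl get crd -o name by the two API groups in the table. The helper name filter_koord_crds is hypothetical. The autoscaling.alibabacloud.com group may also contain CRDs that belong to other components, so treat the output as a list of candidates to review, not as a list to delete automatically.

```shell
#!/bin/sh
# Keep only CRDs whose API group is slo.koordinator.sh or autoscaling.alibabacloud.com.
# Input: lines as printed by `kubectl get crd -o name`, for example
# "customresourcedefinition.apiextensions.k8s.io/<plural>.<group>".
filter_koord_crds() {
  grep -E '\.(slo\.koordinator\.sh|autoscaling\.alibabacloud\.com)$'
}

# Review the list before deleting anything. Deleting a CRD also deletes all
# custom resources of that type.
#   kubectl get crd -o name | filter_koord_crds
#   kubectl delete <crd-name-you-confirmed>
```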

Billing

No fee is charged when you install or use the ack-koordinator component. However, fees may be charged in the following scenarios:

ack-koordinator is a non-managed component that occupies worker node resources after it is installed. You can specify the amount of resources requested by each module when you install the component.

By default, ack-koordinator exposes the monitoring metrics of features such as resource profiling and fine-grained scheduling as Prometheus metrics. If you enable Prometheus metrics for ack-koordinator and use Managed Service for Prometheus, these metrics are billed as custom metrics. The fees depend on factors such as the size of your cluster and the number of applications. Before you enable Prometheus metrics, we recommend that you read the Billing topic of Managed Service for Prometheus to learn about the free quota and billing rules for custom metrics. For more information about how to monitor and manage resource usage, see Query the amount of observable data and bills.

What to do next

Relationship between ack-koordinator and ack-slo-manager

ack-koordinator was formerly named ack-slo-manager, which served as the incubator of open source Koordinator. As Koordinator matured and stabilized, ack-slo-manager in turn adopted its technologies. Therefore, ack-koordinator supports the features of open source Koordinator and also provides additional features. To use the latest features and bug fixes of ack-koordinator, follow the steps described in Migrate ack-koordinator from the Marketplace page to the Add-ons page to update ack-koordinator to the latest version.

Migrate ack-koordinator from the Marketplace page to the Add-ons page

If your ack-koordinator version is earlier than 0.7, the component was installed from the Marketplace, and you must uninstall it from the Marketplace before you reinstall it from the Add-ons page. Perform the following steps to migrate ack-koordinator from the Marketplace to the Add-ons page.
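To decide whether this migration applies to a cluster, you can compare the installed version with 0.7. The following POSIX shell sketch does that with version sorting; the helper name needs_marketplace_migration is hypothetical, and the sketch assumes that you pass the version shown in the console and that sort -V (provided by GNU coreutils) is available.

```shell
#!/bin/sh
# Hypothetical helper: succeed if ack-koordinator version $1 is earlier than 0.7,
# that is, if the component was installed from the Marketplace and must be migrated.
# Relies on `sort -V` (version sort) from GNU coreutils.
needs_marketplace_migration() (
  v="${1#v}"                  # drop an optional leading "v"
  [ "$v" != "0.7" ] || exit 1 # 0.7 itself is not earlier than 0.7
  lowest="$(printf '%s\n%s\n' "$v" "0.7" | sort -V | head -n 1)"
  [ "$lowest" = "$v" ]        # true only if $v sorts before 0.7
)

needs_marketplace_migration v0.6.0 && echo "migrate"   # prints: migrate
```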

If you have modified the ConfigMap of ack-koordinator on the Marketplace page, back up the ConfigMap before you update ack-koordinator. If the ConfigMap is not modified, proceed to Step 2.

Back up the ConfigMap of ack-koordinator in the ACK console or by using kubectl.

Run the following command to save the ConfigMap to the slo-config.yaml file. In this example, the namespace of the ConfigMap is kube-system and the name of the ConfigMap is ack-slo-manager-config. Replace them with the actual values.

kubectl get cm -n kube-system ack-slo-manager-config -o yaml > slo-config.yaml

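Run the vim slo-config.yaml command to edit the file: set the namespace of the ConfigMap to kube-system, change the name of the ConfigMap to ack-slo-config, and delete all annotations and labels in the ConfigMap to prevent the update from automatically overwriting these settings. Also delete server-generated metadata fields such as resourceVersion and uid, because the API server rejects the creation of a new object that specifies resourceVersion.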

Run the following command to apply the modified ConfigMap to the cluster.

kubectl apply -f slo-config.yaml

Record the key-value pair in the ConfigMap.

In the left-side navigation pane, choose . In the top navigation bar of the page, select the namespace that you specified when you installed ack-koordinator from the Marketplace. The default namespace is kube-system.

Enter the ConfigMap name ack-slo-manager-config into the search box, click the name of the ConfigMap that is displayed, and then record the key-value pair.

Use the recorded key-value pair to create another ConfigMap.

In the left-side navigation pane, choose . In the top navigation bar, select All Namespaces.

In the upper-right corner of the ConfigMap page, click Create. In the panel that appears, enter ack-slo-config into the ConfigMap Name field, select the kube-system namespace, click + Add, enter the recorded key-value pair, and then click OK.

In the left-side navigation pane of the Clusters page, choose , and check the status of ack-slo-manager. Then, click Delete in the Actions column to uninstall the ack-slo-manager that was installed from the Marketplace.

On the Add-ons page, update ack-koordinator to the latest version.

Important

If you have modified the ConfigMap of ack-koordinator on the Marketplace page, you need to specify the name of the backup ConfigMap that is created in Step 1 in the ack-koordinator Parameters dialog box.

Upgrade from resource-controller to ack-koordinator

The resource-controller component has been phased out. ack-koordinator supports all the features of resource-controller. For example, you can use ack-koordinator to enable topology-aware CPU scheduling and dynamically modify the resource parameters of a pod. If your cluster uses resource-controller, perform the following steps to upgrade from resource-controller to ack-koordinator:

Update resource-controller to the latest version.

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane, choose .

On the Add-ons page, find resource-controller and follow the on-screen instructions to update resource-controller.

Install and configure ack-koordinator.

On the Add-ons page, find and click ack-koordinator. In the ack-koordinator card, click Install.

In the Install ack-koordinator (ack-slo-manager) dialog box, modify agentFeatures (the feature gate switch of ack-slo-agent) as needed and set other parameters. Then, click OK.

Check whether the cluster uses the CPU Burst feature described in Dynamically modify the resource parameters of a pod. This feature allows you to create a CRD or add a pod annotation to modify the cgroup configuration file named cpu.cfs_quota_us. If this feature is used, perform Step ii. If this feature is not used, perform Step c.

Run the following command to obtain the feature-gate configurations from the YAML file of the ack-koordlet DaemonSet:

kubectl get daemonset -n kube-system ack-koordlet -o yaml | grep feature-gates

Sample output:

- --feature-gates=AllAlpha=false,AllBeta=false,...,CPUBurst=true,....

Modify the --feature-gates argument of ack-koordlet to disable CPU Burst by setting CPUBurst=false. Separate multiple parameters with commas (,) and keep the other settings unchanged.

After CPU Burst is disabled, CPU Burst is unavailable for all containers in the cluster. This prevents the cgroup configuration file cpu.cfs_quota_us from being modified by two modules at the same time.

AllAlpha=false,AllBeta=false,...,CPUBurst=false,....

If you want to dynamically scale the CPU resources of containers, we recommend that you enable the CPU Burst feature. This feature can automatically adjust the CPU limit of pods. For more information, see Enable CPU Burst.
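If you prefer to compute the new --feature-gates value instead of editing it by hand, the following POSIX shell sketch rewrites one gate in a comma-separated feature-gates string and leaves the other gates unchanged. The helper name set_feature_gate is hypothetical, and the gate string in the example is illustrative rather than the actual ack-koordlet configuration.

```shell
#!/bin/sh
# Hypothetical helper: set one gate in a comma-separated --feature-gates value
# and keep every other gate unchanged. The gate is appended if it is missing.
# Usage: set_feature_gate <gates> <name> <true|false>
set_feature_gate() (
  gates="$1"; name="$2"; value="$3"
  out=""; found=0
  set -f          # disable globbing while splitting the list
  IFS=','         # split the gate list on commas
  for kv in $gates; do
    case "$kv" in
      "$name"=*) kv="$name=$value"; found=1 ;;
    esac
    out="${out:+$out,}$kv"
  done
  [ "$found" -eq 1 ] || out="${out:+$out,}$name=$value"
  printf '%s\n' "$out"
)

set_feature_gate "AllAlpha=false,AllBeta=false,CPUBurst=true" CPUBurst false
# prints: AllAlpha=false,AllBeta=false,CPUBurst=false
```

You can then apply the new value, for example by running kubectl edit daemonset -n kube-system ack-koordlet. Keep in mind that manual changes to the deployed component are overwritten when ack-koordinator is updated on the Add-ons page.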

In the left-side navigation pane of the cluster management page, choose to view the status of ack-koordinator.

If Deployed is displayed in the Status column, ack-koordinator is installed.

On the Add-ons page, find resource-controller and follow the on-screen instructions to uninstall resource-controller.

FAQ

Component installation error: no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1" ensure CRDs are installed first

This error occurs because Managed Service for Prometheus is not installed in the cluster. To resolve it, install the Prometheus component by following the steps in Use Managed Service for Prometheus, or clear Enable Prometheus Metrics for ack-koordinator when you install ack-koordinator on the Add-ons page in the ACK console.

Component installation error: task install-addons-xxx timeout, error install addons map[ack-slo-manager:Can't install release with errors: ... function "lookup" not defined

This error occurs because the cluster uses Helm V2. Update Helm to V3.0 or later. For more information, see [Component Updates] Update Helm V2 to V3.

Release notes

September 2024

Version | Image address | Release date | Description | Impact |


v1.5.0-ack1.14 | registry.cn-hangzhou.aliyuncs.com/acs/koord-manager:v1.5.0-ack1.14-4225002-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koordlet:v1.5.0-ack1.14-4225002-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koord-descheduler:v1.5.0-ack1.14-4225002-aliyun | 2024-09-12 | The computing logic of the maxUnavailablePerWorkload parameter during descheduling is optimized. An issue in the feature that uses cgroup configuration files to dynamically modify the resource parameters of a pod is fixed. Metrics related to resource profiling are renamed. Internal API operations are optimized. | No impact on workloads |

July 2024

Version | Image address | Release date | Description | Impact |


v1.5.0-ack1.12 | registry.cn-hangzhou.aliyuncs.com/acs/koord-manager:v1.5.0-ack1.12-a096ae3-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koordlet:v1.5.0-ack1.12-a096ae3-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koord-descheduler:v1.5.0-ack1.12-a096ae3-aliyun | 2024-07-29 | Internal API operations are optimized. | No impact on workloads |

January 2024

Version | Image address | Release date | Description | Impact |


v1.3.0-ack1.8 | registry.cn-hangzhou.aliyuncs.com/acs/koord-manager:v1.3.0-ack1.8-94730e0-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koordlet:v1.3.0-ack1.8-94730e0-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koord-descheduler:v1.3.0-ack1.8-94730e0-aliyun | 2024-01-24 | | No impact on workloads |

December 2023

Version | Image address | Release date | Description | Impact |


v1.3.0-ack1.7 | registry.cn-hangzhou.aliyuncs.com/acs/koord-manager:v1.3.0-ack1.7-3500ce9-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koordlet:v1.3.0-ack1.7-3500ce9-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koord-descheduler:v1.3.0-ack1.7-3500ce9-aliyun | 2023-12-21 | The ack-koordinator component can be installed in ACK Edge clusters. The component can be installed on worker nodes, but not on edge nodes. This version provides the same features as v1.3.0-ack1.6. | No impact on workloads |

October 2023

Version | Image address | Release date | Description | Impact |


v1.3.0-ack1.6 | registry.cn-hangzhou.aliyuncs.com/acs/koord-manager:v1.3.0-ack1.6-3500ce9-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koordlet:v1.3.0-ack1.6-3500ce9-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koord-descheduler:v1.3.0-ack1.6-3500ce9-aliyun | 2023-10-19 | Internal API operations are optimized. | No impact on workloads |

June 2023

Version | Image address | Release date | Description | Impact |


v1.2.0-ack1.3 | registry-cn-zhangjiakou.ack.aliyuncs.com/acs/koord-manager:v1.2.0-ack1.3-89a9730-aliyun, registry-cn-zhangjiakou.ack.aliyuncs.com/acs/koordlet:v1.2.0-ack1.3-89a9730-aliyun, registry-cn-zhangjiakou.ack.aliyuncs.com/acs/koord-descheduler:v1.2.0-ack1.3-89a9730-aliyun | 2023-06-09 | Internal API operations are optimized. | No impact on workloads |

April 2023

Version | Image address | Release date | Description | Impact |


v1.2.0-ack1.2 | registry.cn-hangzhou.aliyuncs.com/acs/koord-manager:v1.2.0-ack1.2-b675c9a8-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koordlet:v1.2.0-ack1.2-b675c9a8-aliyun, registry.cn-hangzhou.aliyuncs.com/acs/koord-descheduler:v1.2.0-ack1.2-b675c9a8-aliyun | 2023-04-25 | | No impact on workloads |

March 2023

Version | Image address | Release date | Description | Impact |


v1.1.1-ack.2 | registry.cn-hangzhou.aliyuncs.com/acs/koord-manager:v1.1.1-ack.2, registry.cn-hangzhou.aliyuncs.com/acs/koordlet:v1.1.1-ack.2, registry.cn-hangzhou.aliyuncs.com/acs/koord-descheduler:v1.1.1-ack.2 | 2023-03-23 | Internal API operations are optimized. | No impact on workloads |

January 2023

Version | Image address | Release date | Description | Impact |


v1.1.1-ack.1 | | 2023-01-11 | | No impact on workloads |

November 2022

Version | Image address | Release date | Description | Impact |


v0.8.0 | | 2022-11-17 | | If you want to use load-aware pod scheduling after ack-slo-manager is updated, you must update the Kubernetes version of your cluster to 1.22.15-ack-2.0. Other features are not affected. |

September 2022

Version | Image address | Release date | Description | Impact |


v0.7.2 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.7.2 | 2022-09-16 | The following issue induced by 0.7.1 is fixed: topology-aware scheduling does not take effect on pods. | No impact on workloads |

v0.7.1 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.7.1 | 2022-09-02 | The CPU throttling issue that occurs when topology-aware scheduling is used in a CentOS 3 kernel environment is fixed. Managed Service for Prometheus can be enabled and disabled. Resource profiling features are supported. ack-slo-manager can no longer be installed from the Marketplace in the ACK console. | No impact on workloads |

August 2022

Version | Image address | Release date | Description | Impact |


v0.7.0 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.7.0 | 2022-08-08 | ack-slo-manager is migrated from the Marketplace to the Add-ons page in the ACK console. If you want to install ack-slo-manager, go to the Add-ons page. | No impact on workloads |

July 2022

Version | Image address | Release date | Description | Impact |


v0.6.0 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.6.0 | 2022-07-26 | Internal API operations are optimized and component configurations are simplified. | No impact on workloads |

June 2022

Version | Image address | Release date | Description | Impact |


v0.5.2 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.5.2 | 2022-06-14 | Specific internal API operations are optimized. CPU QoS for containers is optimized. Automatic creation of the ack-slo-manager namespace can be disabled. | No impact on workloads |

v0.5.1 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.5.1 | 2022-06-02 | | No impact on workloads |

April 2022

Version | Image address | Release date | Description | Impact |


v0.5.0 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.5.0 | 2022-04-29 | | No impact on workloads |

v0.4.1 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.4.1 | 2022-04-14 | | No impact on workloads |

v0.4.0 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.4.0 | 2022-04-11 | The memory consumption of slo-agent is reduced. | No impact on workloads |

February 2022

Version | Image address | Release date | Description | Impact |


v0.3.0 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.3.0 | 2022-02-25 | | No impact on workloads |

December 2021

Version | Image address | Release date | Description | Impact |


v0.2.0 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.2.0 | 2021-12-10 | | No impact on workloads |

September 2021

Version | Image address | Release date | Description | Impact |


v0.1.1 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.1.1-c2ccefa | 2021-09-02 | Internal API operations are optimized. | No impact on workloads |

July 2021

Version | Image address | Release date | Description | Impact |


v0.1.0 | registry.cn-hangzhou.aliyuncs.com/acs/ack-slo-manager:v0.1.0-09766de | 2021-07-08 | Load-aware pod scheduling is supported. | No impact on workloads |
