This topic describes common issues that you may encounter when you deploy workloads in Alibaba Cloud Container Service for Kubernetes (ACK) clusters and provides the corresponding solutions.

What is the general process for running a containerized application in an ACK cluster?

You can deploy your application code on-premises or in the cloud. Code written in any programming language can be deployed, delivered, and run in a containerized manner. The process from code development to running a containerized application involves four main stages.

Write business code.

Use a Dockerfile to build an image.

Upload the image to an image repository. You can use Container Registry (ACR) to store the image and perform operations, such as version control, distribution, and pulling.

ACR is available in Personal and Enterprise editions, which are designed for individual developers and enterprise customers, respectively. For more information, see What is Container Registry (ACR)?.

Deploy a workload in an ACK cluster to run the containerized application. You can use the various containerized application management features provided by ACK. For more information, see Workloads.

Why is image pulling slow or failing?

Symptoms

A container takes too long to pull an image, or the image pull fails.

Causes

This issue may be caused by the following reasons:

When you pull an image over the public network, public network access is not enabled for the cluster, or the peak bandwidth of the public IP address is set too low.

When you pull an image from ACR, the image pull secret (imagePullSecrets) is incorrect, or the passwordless component is configured incorrectly.

Solutions

When you pull an image over the public network, both the cluster and the image repository must allow public network access. For more information, see Enable public network access for a cluster. For ACR, see Configure public access control for an ACR instance.

For more information about how to configure the passwordless component, see Pull an image within the same account and Pull an image across accounts.

For more information about how to configure an image pull secret, see How do I use imagePullSecrets?.

How do I troubleshoot ACK application issues?

Application failures in ACK are mainly caused by issues with pods, controllers (such as deployments, StatefulSets, or DaemonSets), and services. Check for the following types of issues.

Check pods

For more information about how to handle pod exceptions, see Troubleshoot abnormal pods.

Check deployments

If an issue occurs after you create a resource such as a deployment, DaemonSet, StatefulSet, or job, it may be caused by the pods that the resource manages. For more information about how to check for pod issues, see Troubleshoot abnormal pods.

You can locate the issue by viewing the events and logs related to the deployment:

This topic uses a deployment as an example. The operations for viewing events and logs for DaemonSets, StatefulSets, or jobs are similar.

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, click the name of the target cluster. In the navigation pane on the left, choose .

On the Stateless page, click the name of the target Deployment. Then, click the Event or Log tab to check for abnormal information and identify the problem.

Check services

A Service provides load balancing for a group of pods. The following sections describe how to locate several common issues related to services:

Check the Service Endpoints.

Obtain the cluster KubeConfig and use kubectl to connect to the cluster.

Run the following command to view the Service Endpoints. Replace <service_name> with the name of the target Service.

kubectl get endpoints <service_name>

Make sure that the number of addresses in the ENDPOINTS value equals the expected number of pods that match the Service. For example, if a deployment has 3 replicas, the ENDPOINTS value must contain 3 addresses.
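If you want to script this check, the following Python sketch counts the ready addresses in the JSON returned by `kubectl get endpoints <service_name> -o json` and compares the count with the expected number of replicas. It assumes the standard Endpoints object layout (`subsets[].addresses[]` for ready pods, `subsets[].notReadyAddresses[]` for pods that are not ready); the function names and the sample data are hypothetical. Reading the JSON avoids the table output, which abbreviates long address lists as `+ N more...`.

```python
import json


def ready_endpoint_ips(endpoints: dict) -> list[str]:
    """Return the IPs listed under subsets[].addresses[] (ready endpoints only)."""
    ips = []
    # A Service with no ready matching pods has no "subsets" key at all.
    for subset in endpoints.get("subsets") or []:
        for address in subset.get("addresses") or []:
            ips.append(address["ip"])
    return ips


def check_endpoints(raw_json: str, expected_replicas: int) -> tuple[bool, list[str]]:
    """Compare the number of ready endpoint IPs with the expected replica count."""
    ips = ready_endpoint_ips(json.loads(raw_json))
    return len(ips) == expected_replicas, ips


# Hypothetical output of: kubectl get endpoints my-svc -o json
sample = json.dumps({
    "kind": "Endpoints",
    "metadata": {"name": "my-svc", "namespace": "default"},
    "subsets": [{
        "addresses": [{"ip": "172.16.0.10"}, {"ip": "172.16.0.11"}],
        "notReadyAddresses": [{"ip": "172.16.0.12"}],
        "ports": [{"port": 80, "protocol": "TCP"}],
    }],
})

ok, ips = check_endpoints(sample, expected_replicas=3)
print(ok, ips)  # False ['172.16.0.10', '172.16.0.11']
```

In this sample, one of the three pods is listed under `notReadyAddresses`, so the check fails even though all three pods match the selector.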

Missing Endpoints in a Service

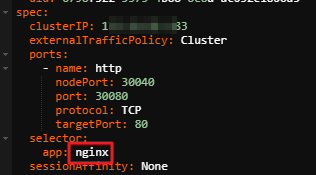

If a Service is missing Endpoints, you can use the Service selector to check whether the Service is associated with any pods. The following is an example:

Assume that the Service YAML file contains the following information.

Replace <app> and <namespace> in the following command, and check whether the returned pods are the pods that are associated with the Service.

kubectl get pods -l app=<app> -n <namespace>

Note: <app> is the value of the app label of the pods. <namespace> is the namespace where the Service is located. If the Service is in the default namespace, you can omit the -n parameter.
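The matching rule behind this check can be sketched in Python: a Service selector picks a pod when every key/value pair in the selector also appears in the pod's labels, the pod may carry extra labels, and only pods in the Service's namespace are considered. The pod records below are simplified, hypothetical dictionaries rather than real API objects.

```python
def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """True if every key/value pair in the Service selector is present in the pod labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def matching_pods(selector: dict[str, str], pods: list[dict]) -> list[str]:
    """Names of the pods (already filtered to the Service's namespace) that the selector picks."""
    if not selector:
        # A Service without a selector gets no automatically managed Endpoints.
        return []
    return [pod["name"] for pod in pods if selector_matches(selector, pod.get("labels", {}))]


# Hypothetical data: the Service selector and the pods in its namespace.
selector = {"app": "nginx"}
pods = [
    {"name": "nginx-7d9f8c6b5-abcde", "labels": {"app": "nginx", "pod-template-hash": "7d9f8c6b5"}},
    {"name": "nginx-canary-0", "labels": {"app": "nginx-canary"}},
    {"name": "api-0", "labels": {"app": "api"}},
]

print(matching_pods(selector, pods))  # ['nginx-7d9f8c6b5-abcde']
```

A near-miss such as `app: nginx-canary` does not match `app: nginx`, which is a common reason why `kubectl get pods -l app=<app>` returns fewer pods than expected.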

If the returned pods are the expected pods but the Service has no Endpoint addresses, check whether the pods are ready and whether the Service port is configured correctly. Only ready pods are listed in ENDPOINTS, so a pod whose readiness probe fails, for example because the application is not listening on the probed port, is not added to the list. Therefore, make sure that the container port specified by the Service (targetPort) is accessible in the pod. Run the following command.

curl <ip>:<port>

Note: <ip> is the clusterIP value in the Service YAML file from Step 1, and <port> is the port value in the same file. The specific test method depends on your environment.
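When curl is not available, a plain TCP connection attempt gives a similar first signal. The following Python sketch (the function name is hypothetical) only confirms that something accepts a TCP connection on `<ip>:<port>`; it does not check the HTTP response. Run it from a node or a pod inside the cluster, because a clusterIP is generally not reachable from outside the cluster.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts (socket.timeout is an OSError
        # subclass), and unreachable hosts.
        return False
```

For example, `port_is_open("<ip>", 80)` returns False both when nothing listens on the port and when traffic is dropped, so a False result still needs the checks described in the following sections.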

Network forwarding issues

If the client can connect to the Service and the addresses in the Endpoints are correct, but the connection drops quickly, traffic may not be forwarded to your pods. Typically, you need to perform the following checks:

Check whether the pods are working correctly.

Locate pod issues. For more information, see Troubleshoot abnormal pods.

Check whether the pod addresses are reachable.

Run the following command to obtain the IP addresses of the pods.

kubectl get pods -o wide

Log on to any node and use the `ping` command to test the pod IP addresses to confirm network connectivity.
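To collect the pod IP addresses for these tests in a script, you can read `status.podIP` from `kubectl get pods -o json` instead of parsing the wide table. The following Python sketch is a minimal example; the function name and the trimmed sample output are hypothetical.

```python
import json


def pod_ips(raw_json: str) -> dict[str, str]:
    """Map each pod name to its IP from `kubectl get pods -o json` output."""
    ips = {}
    for item in json.loads(raw_json).get("items", []):
        name = item["metadata"]["name"]
        # status.podIP is missing until the pod is scheduled and gets an IP; keep "" as a marker.
        ips[name] = item.get("status", {}).get("podIP", "")
    return ips


# Hypothetical, trimmed output of: kubectl get pods -o json
sample = json.dumps({
    "kind": "List",
    "items": [
        {"metadata": {"name": "nginx-7d9f8c6b5-abcde"}, "status": {"phase": "Running", "podIP": "172.16.0.10"}},
        {"metadata": {"name": "nginx-7d9f8c6b5-fghij"}, "status": {"phase": "Pending"}},
    ],
})

for name, ip in pod_ips(sample).items():
    print(name, ip or "<no IP yet>")
```

A pod without an IP has not been scheduled or started yet, so a connectivity test against it is not meaningful; check the pod itself first.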

Check whether the application is listening on the correct port.

If your application listens on port 80, you must set the container port (targetPort) of the Service to 80. On any node, run the following command to check whether the port in the pod responds. Replace <ip> with the pod IP address and <port> with the container port.

curl <ip>:<port>

How do I manually upgrade the Helm version?

The Helm v2 Tiller server-side component has known security vulnerabilities. An attacker can use Tiller to install unauthorized applications in the cluster. Therefore, we recommend that you upgrade to Helm v3. For more information, see Upgrade from Helm V2 to Helm V3.

How do I use images from a Container Registry Enterprise Edition instance in the Chinese mainland in an ACK cluster outside China?

In this scenario, you need to purchase a Standard or Premium Edition of Container Registry Enterprise Edition in the Chinese mainland and a Basic Edition of Container Registry Enterprise Edition outside the Chinese mainland.

After the purchase, use instance synchronization to sync images from the instance in the Chinese mainland to the instance outside the Chinese mainland. For more information, see Same-account instance synchronization. Obtain the image address from the Container Registry Enterprise Edition instance outside the Chinese mainland and use this address to create an application in the ACK cluster.

Synchronization is slow when you use ACR Personal Edition. If you use a self-built repository, you must purchase a Global Accelerator (GA) instance for acceleration, and the combined cost of a self-built repository and GA acceleration is high. Therefore, we recommend that you use Container Registry Enterprise Edition. For more information about the billing of Container Registry Enterprise Edition, see Billing.

How do I perform zero-downtime rolling updates for an application?

During a rolling update, old pods are deleted and new pods are created. Temporary 5XX errors may occur during this process because it takes several seconds for pod changes to be synchronized to Server Load Balancer (SLB). You can configure graceful shutdown and use other methods to achieve zero-downtime rolling updates in Kubernetes. For more information, see How to achieve zero-downtime rolling updates in K8s?.
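One common ingredient of graceful shutdown is to keep serving for a short drain window after the pod receives SIGTERM, while reporting "not ready" so that no new traffic is routed to it. The following Python sketch illustrates that idea with the standard library HTTP server. It is a generic example, not the method described in the referenced article; the `/healthz` path, port 8080, and the 15-second drain window are assumptions that must match your readiness probe and stay below `terminationGracePeriodSeconds` (30 seconds by default).

```python
import http.server
import signal
import threading

# Assumption: how long to keep serving after SIGTERM. It should exceed the few
# seconds SLB needs to stop sending traffic, and stay below the pod's
# terminationGracePeriodSeconds.
DRAIN_SECONDS = 15

draining = threading.Event()


def readiness_code(path: str) -> int:
    """Return the HTTP status code for a request path."""
    if path == "/healthz" and draining.is_set():
        # Fail the (hypothetical) readiness probe so the pod leaves rotation.
        return 503
    return 200


class Handler(http.server.BaseHTTPRequestHandler):
    def do_GET(self):
        self.send_response(readiness_code(self.path))
        self.send_header("Content-Length", "0")
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Run the server until SIGTERM plus the drain window has elapsed."""
    server = http.server.ThreadingHTTPServer(("", port), Handler)

    def on_sigterm(signum, frame):
        # Kubernetes sends SIGTERM when the pod is deleted. Keep handling
        # requests, then stop the serve_forever() loop from a timer thread.
        draining.set()
        threading.Timer(DRAIN_SECONDS, server.shutdown).start()

    signal.signal(signal.SIGTERM, on_sigterm)
    server.serve_forever()
    server.server_close()
```

Call `serve()` as the container entrypoint. `server.shutdown()` runs on a timer thread because it blocks until `serve_forever()` returns and therefore must not be called from the serving thread.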

How do I obtain container images?

For information about preparations and notes on pulling container images for workloads, see Pull an image.

How do I restart a container?

You cannot directly restart a single container. However, you can achieve the same effect by performing the following steps:

Run the following command to view the container status and identify the container that you want to restart.

kubectl get pods

Delete the pod. If the pod is managed by a controller, such as a deployment or DaemonSet, the controller creates a new pod to replace the deleted one, which effectively restarts the container. A standalone pod that is not managed by a controller is not recreated. Run the following command to delete a single pod:

kubectl delete pod <pod-name>

After you delete the pod, Kubernetes automatically creates a new pod to replace it based on the corresponding controller configuration.

Note: In a production environment, avoid manipulating pods directly. Instead, use controllers, such as ReplicaSets and Deployments, to manage and update containers. This ensures cluster state consistency and correctness.

Run the following command to verify the container status. Confirm that the container status is `Running`, which indicates a successful restart.

kubectl get pods
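If you check the result in a script, note that the replacement pod has a new name and that `Running` alone does not mean the containers are ready. The following Python sketch reads the NAME, READY, and STATUS columns from the default `kubectl get pods` table and lists pods that are not Running or not fully ready. The function names and the sample output are hypothetical; pods of completed jobs (`Completed`) are also reported by this check.

```python
def parse_pod_table(table: str) -> list[dict[str, str]]:
    """Read NAME, READY and STATUS from the default `kubectl get pods` table.

    Only columns up to STATUS are used, because later columns such as
    RESTARTS can contain spaces (for example "5 (30s ago)").
    """
    lines = [line for line in table.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    columns = {name: header.index(name) for name in ("NAME", "READY", "STATUS")}
    rows = []
    for line in lines[1:]:
        fields = line.split()
        rows.append({name: fields[i] for name, i in columns.items()})
    return rows


def pods_not_ready(table: str) -> list[str]:
    """Return pods that are not Running or whose containers are not all ready."""
    result = []
    for row in parse_pod_table(table):
        ready, total = row["READY"].split("/")
        if row["STATUS"] != "Running" or ready != total:
            result.append(row["NAME"])
    return result


# Hypothetical output of: kubectl get pods
sample = """\
NAME                    READY   STATUS             RESTARTS      AGE
nginx-7d9f8c6b5-abcde   1/1     Running            0             2d
nginx-7d9f8c6b5-fghij   0/1     CrashLoopBackOff   5 (30s ago)   10m
nginx-7d9f8c6b5-klmno   0/1     Running            0             5s
"""

print(pods_not_ready(sample))  # ['nginx-7d9f8c6b5-fghij', 'nginx-7d9f8c6b5-klmno']
```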

How do I change the namespace of a deployment?

The namespace of an existing deployment cannot be changed in place. To move a deployment from one namespace to another, create a copy of the deployment in the new namespace. You must also create the persistent volume claims, ConfigMaps, Secrets, and other resources that the deployment depends on in the new namespace to ensure that the deployment runs correctly.

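Use the kubectl get command to export the resource configuration in YAML format.

kubectl get deploy <deployment-name> -n <old-namespace> -o yaml > deployment.yaml

In deployment.yaml, replace the value of the namespace parameter with the new namespace. Also delete server-generated fields, such as metadata.resourceVersion, metadata.uid, and the status section, because the API server may reject a create request that sets resourceVersion. Then, save and exit.

apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    ...
  generation: 1
  labels:
    app: nginx
  name: nginx-deployment
  namespace: new-namespace # Specify the new namespace.
...

Use the kubectl apply command to create the deployment in the new namespace.

kubectl apply -f deployment.yaml

Use the kubectl get command to view the deployment in the new namespace.

kubectl get deploy -n new-namespace

The deployment in the old namespace is not deleted automatically. After you confirm that the new deployment runs as expected, you can delete the old one by running kubectl delete deploy <deployment-name> -n <old-namespace>.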
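If you move several resources, editing each exported file by hand is error-prone. The following Python sketch works on a JSON export (`kubectl get deploy <deployment-name> -n <old-namespace> -o json > deployment.json`, an assumption chosen so that only the standard library is needed). It sets the new namespace and removes fields that the API server fills in on a live object, including the `kubectl.kubernetes.io/last-applied-configuration` annotation, which still records the old namespace. The function names and file names are hypothetical.

```python
import copy
import json

# Fields that the API server fills in on a live object. An exported copy that
# keeps resourceVersion may be rejected when it is created in another namespace.
SERVER_SET_METADATA = ("uid", "resourceVersion", "creationTimestamp",
                       "generation", "managedFields", "selfLink")
LAST_APPLIED = "kubectl.kubernetes.io/last-applied-configuration"


def retarget_namespace(obj: dict, new_namespace: str) -> dict:
    """Return a copy of an exported object that can be created in new_namespace."""
    out = copy.deepcopy(obj)
    meta = out.setdefault("metadata", {})
    for field in SERVER_SET_METADATA:
        meta.pop(field, None)
    annotations = meta.get("annotations") or {}
    # This annotation still records the old namespace.
    annotations.pop(LAST_APPLIED, None)
    if annotations:
        meta["annotations"] = annotations
    else:
        meta.pop("annotations", None)
    meta["namespace"] = new_namespace
    out.pop("status", None)  # status is owned by the controller
    return out


def convert_file(src_path: str, dst_path: str, new_namespace: str) -> None:
    """Read a `kubectl get ... -o json` export and write a retargeted copy."""
    with open(src_path, encoding="utf-8") as f:
        obj = json.load(f)
    with open(dst_path, "w", encoding="utf-8") as f:
        json.dump(retarget_namespace(obj, new_namespace), f, indent=2)
```

For example, `convert_file("deployment.json", "deployment-new.json", "new-namespace")` followed by `kubectl apply -f deployment-new.json` creates the copy. The same function can be applied to exported ConfigMaps, Secrets, and persistent volume claims.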

How do I expose pod information to containers?

ACK is consistent with community Kubernetes and follows its specifications. You can use the Downward API to expose pod information to containers in two ways:

Environment variables: Pass pod information to a container by setting environment variables.

Files: Provide pod information to a container as files by mounting them.
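On the application side, both methods come down to reading an environment variable or a file. The following Python sketch assumes a pod spec that sets `POD_NAME` and `POD_NAMESPACE` through `fieldRef` (`metadata.name`, `metadata.namespace`) and mounts a downwardAPI volume with a `labels` file (`metadata.labels`) at `/etc/podinfo`. These names and the path are hypothetical and must match your own manifest. The labels file contains one `key="value"` line per label.

```python
import os
from pathlib import Path

# Hypothetical names: they must match the env entries and the downwardAPI
# volume mount path defined in your pod spec.
POD_NAME_ENV = "POD_NAME"
POD_NAMESPACE_ENV = "POD_NAMESPACE"
LABELS_FILE = Path("/etc/podinfo/labels")


def parse_labels(text: str) -> dict[str, str]:
    """Parse a Downward API labels file: one key="value" pair per line."""
    labels = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        key, _, value = line.partition("=")
        # Label values cannot contain quotes or escapes, so stripping the quotes is enough.
        labels[key] = value.strip().strip('"')
    return labels


def pod_info() -> dict:
    """Collect pod name, namespace, and labels exposed through the Downward API."""
    info = {
        "name": os.environ.get(POD_NAME_ENV, ""),
        "namespace": os.environ.get(POD_NAMESPACE_ENV, ""),
        "labels": {},
    }
    if LABELS_FILE.exists():
        info["labels"] = parse_labels(LABELS_FILE.read_text())
    return info
```

Environment variables are fixed when the container starts, whereas files in a downwardAPI volume are refreshed when the labels change, so the file method suits values that can change while the pod runs.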

How do I use imagePullSecrets?

ACR Personal Edition instances created on or after September 9, 2024, do not support the passwordless component. When you use a newly created ACR Personal Edition instance, we recommend that you save the username and logon password in a secret and reference the secret in the imagePullSecrets field.

Note: The passwordless component cannot be used at the same time as a manually configured imagePullSecrets field. The secret must be in the same namespace as the workload.

Run the following command to create a secret that contains the username and password. Replace the parameters with your actual values. If the workload is not in the default namespace, also add the -n <namespace> parameter so that the secret is created in the same namespace as the workload.

kubectl create secret docker-registry image-secret \
  --docker-server=<ACR-registry> \
  --docker-username=<username> \
  --docker-password=<password> \
  --docker-email=<email@example.com>

docker-server: The address of the ACR instance. Its network type (internal network or public network) must be the same as that of the image address.

docker-username: The username of the ACR access credential.

docker-password: The password of the ACR access credential.

docker-email: Optional.
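To see what such a secret contains, or to generate one in a pipeline, the following Python sketch builds an equivalent Secret manifest. It assumes the usual layout: a Secret of type `kubernetes.io/dockerconfigjson` whose `.dockerconfigjson` key holds `{"auths": {<server>: {...}}}`, where `auth` is the Base64 encoding of `username:password`. The field set may differ slightly from what kubectl writes, and the registry domain in the example is hypothetical.

```python
import base64
import json


def docker_config_json(server: str, username: str, password: str, email: str = "") -> str:
    """Build the .dockerconfigjson document for one registry."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    entry = {"username": username, "password": password, "auth": auth}
    if email:
        entry["email"] = email
    return json.dumps({"auths": {server: entry}})


def pull_secret_manifest(name: str, namespace: str, server: str,
                         username: str, password: str) -> dict:
    """Return a Secret of type kubernetes.io/dockerconfigjson as a Python dict."""
    config = docker_config_json(server, username, password)
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        # Values under "data" must be Base64-encoded.
        "data": {".dockerconfigjson": base64.b64encode(config.encode()).decode()},
    }
```

For example, write `json.dumps(pull_secret_manifest("image-secret", "default", "<ACR-registry>", "<username>", "<password>"))` to a file and run `kubectl apply -f` on it. Because the manifest contains the password in reversible Base64 form, treat the file like the credential itself.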

After you create the secret, you can use the username and password by referencing the secret in the imagePullSecrets field of a ServiceAccount or of a workload.

Use a ServiceAccount

Add the imagePullSecrets field to the ServiceAccount. The following example uses the ServiceAccount named default in the default namespace:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: default
...
imagePullSecrets:
- name: image-secret # The Secret that stores the ACR credentials.

When a workload uses this ServiceAccount, it can pull the image.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-test
  namespace: default
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      serviceAccountName: default # If you use the default ServiceAccount of the namespace, you can omit this field.
      containers:
      - name: nginx
        image: <acrID>.cr.aliyuncs.com/<repo>/nginx:latest # Replace with the image address of the ACR instance.

Use directly in a workload

You can use the secret in a workload by referencing it in the imagePullSecrets field.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-test
  namespace: default
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      imagePullSecrets:
      - name: image-secret # Use the Secret created in the previous step.
      containers:
      - name: nginx
        image: <acrID>.cr.aliyuncs.com/<repo>/nginx:latest # Replace with the image address of the ACR repository.
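Because the secret must be in the same namespace as the workload, a quick pre-deployment check can catch a missing or misplaced secret. The following Python sketch takes a workload manifest as a dictionary and a set of `(namespace, secret_name)` pairs, for example collected from `kubectl get secrets -A -o json`, and reports referenced secrets that do not exist in the workload's namespace. The function name and data shapes are hypothetical, and the sketch only inspects the workload-level field, not secrets inherited from a ServiceAccount.

```python
def missing_pull_secrets(workload: dict, existing: set[tuple[str, str]]) -> list[str]:
    """Return imagePullSecrets names that do not exist in the workload's namespace."""
    namespace = workload.get("metadata", {}).get("namespace", "default")
    # imagePullSecrets belongs to the pod template: spec.template.spec.
    pod_spec = workload.get("spec", {}).get("template", {}).get("spec", {})
    names = [ref["name"] for ref in pod_spec.get("imagePullSecrets", [])]
    return [name for name in names if (namespace, name) not in existing]


deploy = {
    "metadata": {"name": "nginx-test", "namespace": "default"},
    "spec": {"template": {"spec": {"imagePullSecrets": [{"name": "image-secret"}]}}},
}

# The secret exists, but in another namespace, so it is reported as missing.
print(missing_pull_secrets(deploy, {("other", "image-secret")}))  # ['image-secret']
```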

Why does image pulling still fail after I configure the passwordless component?

A possible reason is that the passwordless component is configured incorrectly. For example:

The instance information in the passwordless component does not match the ACR instance.

The image address used to pull the image does not match the domain name of the instance specified in the passwordless component configuration.
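The second cause is easy to check mechanically: extract the registry domain from the image address and compare it with the domains configured for the instance. The following Python sketch follows the usual Docker convention that the first path component is a registry only if it contains "." or ":" or is "localhost". The function names and the domains in the usage example are hypothetical; a frequent mismatch is pulling through an internal (VPC) domain while only the public domain is configured, or the other way around.

```python
def registry_host(image: str) -> str:
    """Return the registry domain of an image reference.

    The first path component is treated as a registry only if it contains
    "." or ":" or is "localhost"; otherwise Docker Hub is implied.
    """
    first, sep, _ = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"


def matches_configured_domains(image: str, domains: list[str]) -> bool:
    """Check whether the image's registry domain is one of the configured instance domains."""
    return registry_host(image) in domains


configured = ["example-registry.cn-hangzhou.cr.aliyuncs.com"]  # hypothetical
image = "example-registry-vpc.cn-hangzhou.cr.aliyuncs.com/ns/nginx:latest"
print(registry_host(image), matches_configured_domains(image, configured))
```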

Follow the steps in Pull an image within the same account to troubleshoot the issue.

If the image pull still fails after the component is correctly configured, the failure may be caused by a conflict between the passwordless component and an imagePullSecrets field that was manually entered in the workload YAML file. To resolve this issue, manually delete the imagePullSecrets field, and then delete and recreate the pod.

Why do containers fail to start on new nodes after I enable image acceleration for a node pool?

After you enable Container Image Acceleration for a node pool, containers fail to start and the following error is reported:

failed to create containerd container: failed to attach and mount for snapshot 46: failed to enable target for /sys/kernel/config/target/core/user_99999/dev_46, failed:failed to open remote file as tar file xxxx

This issue occurs because, after you enable Container Image Acceleration, you must also install the aliyun-acr-acceleration-suite component and configure pull credentials to pull private images. For more information, see Configure container image pull credentials.

If the issue is not resolved after you install the component, we recommend that you disable container image acceleration to restore your services. You can try to configure it again later.