In scenarios where CPUs and GPUs communicate frequently, memory access across non-uniform memory access (NUMA) nodes can increase latency and limit bandwidth, which degrades the overall performance of an application. To address this, Container Service for Kubernetes (ACK) supports NUMA topology-aware scheduling, which is built on the scheduling framework of Kubernetes and schedules pods to optimal NUMA nodes. This reduces cross-NUMA memory access and improves the performance of your application.

How it works

A NUMA node is the basic unit of a NUMA system. Multiple NUMA nodes on a single cluster node constitute a NUMA set, which is used to efficiently allocate computing resources and reduce competition for memory access between processors. For example, a high-performance server that has eight GPUs usually contains multiple NUMA nodes. If no CPU cores are bound to an application or CPUs and GPUs are not allocated to the same NUMA node, the performance of the application may be degraded due to CPU contention or cross-NUMA node communication between CPUs and GPUs. To maximize the performance of your application, you can allocate CPUs and GPUs to the same NUMA node.
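To see which CPUs and GPUs share a NUMA node on a given server, the layout can be read from the Linux sysfs files. The following is a minimal sketch, not part of ACK. It assumes a Linux node that exposes /sys/devices/system/node/node*/cpulist and /sys/bus/pci/devices/*/numa_node. The function names are hypothetical, and treating every PCI device whose class code starts with 0x03 as a GPU is an assumption.

```python
import glob
import os


def parse_cpulist(text):
    """Expand a sysfs CPU list such as "0-3,8-11" into a list of CPU IDs."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return cpus


def read_numa_cpus(sysfs_root="/sys"):
    """Return {numa_node_id: [cpu_ids]}; empty if the files do not exist."""
    nodes = {}
    pattern = os.path.join(sysfs_root, "devices/system/node/node[0-9]*/cpulist")
    for path in glob.glob(pattern):
        node_dir = os.path.basename(os.path.dirname(path))  # for example "node0"
        with open(path) as f:
            nodes[int(node_dir[len("node"):])] = parse_cpulist(f.read())
    return nodes


def read_gpu_numa_nodes(sysfs_root="/sys"):
    """Return {pci_address: numa_node_id} for display-class PCI devices."""
    gpus = {}
    for dev in glob.glob(os.path.join(sysfs_root, "bus/pci/devices/*")):
        try:
            with open(os.path.join(dev, "class")) as f:
                pci_class = f.read().strip()
            with open(os.path.join(dev, "numa_node")) as f:
                numa_node = int(f.read().strip())  # -1 means "not reported"
        except (OSError, ValueError):
            continue
        if pci_class.startswith("0x03"):  # assumption: display/3D controllers are GPUs
            gpus[os.path.basename(dev)] = numa_node
    return gpus


if __name__ == "__main__":
    print("CPUs per NUMA node:", read_numa_cpus())
    print("GPU to NUMA node:", read_gpu_numa_nodes())
```

If a workload's CPUs and its GPU appear under different NUMA node IDs in this output, traffic between them crosses NUMA nodes.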

The native solution based on the kubelet CPU policy and NUMA topology policy can help you allocate CPUs and GPUs to the same NUMA node on a single server. However, this solution may encounter the following difficulties in a cluster:

The scheduler is not aware of the specific CPUs and GPUs allocated to a NUMA node. In this case, the scheduler cannot determine whether the remaining CPU and GPU resources can meet the quality of service (QoS) requirements of pods. As a result, a large number of pods enter the AdmissionError state after scheduling. In severe cases, the cluster may stop working.

The allocation of CPUs and GPUs is uncontrollable at the upper layer. In native Kubernetes, the kubelet topology policy can be viewed only in the process startup parameters of nodes, but cannot be obtained at the cluster level by using methods such as node labels. Therefore, when you submit a job, you cannot specify that the workload is run on a NUMA node to which both CPUs and GPUs are allocated. As a result, the performance of your application is highly variable.

The topology policy is not easy to use. The NUMA topology policy is declared on nodes, so a single node can carry only one NUMA topology policy. Before you submit a job and use the topology policy, a cluster resource administrator must manually divide the nodes of the cluster by adding special labels to the nodes and configure nodeAffinity settings to schedule pods to the nodes with matching labels. In addition, pods with different policies cannot be scheduled to the same node even if they do not interfere with each other. This reduces the resource utilization of the cluster.
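The first difficulty can be illustrated with a toy model. The sketch below is neither kubelet nor scheduler code; the server layout and function names are hypothetical. It contrasts a scheduler that checks only node-level totals with an admission check that requires all requested CPUs and GPUs to fit within one NUMA node.

```python
from dataclasses import dataclass


@dataclass
class NumaNode:
    free_cpus: int
    free_gpus: int


def fits_node_totals(numa_nodes, cpus, gpus):
    """What a topology-unaware scheduler sees: aggregate free resources."""
    return (sum(n.free_cpus for n in numa_nodes) >= cpus
            and sum(n.free_gpus for n in numa_nodes) >= gpus)


def fits_single_numa_node(numa_nodes, cpus, gpus):
    """What a single-NUMA-node admission check requires."""
    return any(n.free_cpus >= cpus and n.free_gpus >= gpus for n in numa_nodes)


# Hypothetical server: two NUMA nodes, each with 16 free CPUs and 2 free GPUs.
server = [NumaNode(free_cpus=16, free_gpus=2), NumaNode(free_cpus=16, free_gpus=2)]

# A pod that requests 24 CPUs and 4 GPUs passes the aggregate check ...
scheduled = fits_node_totals(server, cpus=24, gpus=4)
# ... but no single NUMA node can host it, so admission on the node fails.
admitted = fits_single_numa_node(server, cpus=24, gpus=4)

print(scheduled, admitted)  # prints: True False
```

The gap between the two checks is where pods get bound to a node and are then rejected on it.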

To resolve the preceding issues of the native kubelet solution, ACK supports NUMA topology-aware scheduling based on the scheduling framework of Kubernetes. ACK uses the gputopo-device-plugin component and ack-koordlet of the ack-koordinator component to report the topology of CPUs and GPUs on a node. Both components are developed by Alibaba Cloud. ACK also supports the ability to declare the NUMA topology policy on workloads. The following figure shows the architecture of NUMA topology-aware scheduling.

Prerequisites

Cluster

An ACK Pro cluster that runs Kubernetes 1.24 or later is created. For more information, see Create an ACK managed cluster. For more information about how to update an ACK cluster, see Upgrade clusters.

Nodes

Elastic Compute Service (ECS) instances of the GPU-accelerated compute-optimized instance family sccgn7ex or Lingjun nodes are used. For more information, see Overview of instance families and Manage Lingjun clusters and nodes.

The ack.node.gpu.schedule=topology label is added to the nodes for which you want to enable NUMA topology-aware scheduling. For more information, see Enable scheduling features.

Components

The version of the kube-scheduler component is later than V6.4.4. For more information, see kube-scheduler. To update the kube-scheduler component, perform the following operations: Log on to the ACK console. On the Clusters page, click the name of the cluster that you want to manage. In the left-side navigation pane, choose . Find and update the component.

The ack-koordinator component is installed. This component is formerly known as ack-slo-manager. For more information, see ack-koordinator (FKA ack-slo-manager).

ACK Lingjun cluster: Install the ack-koordinator component.

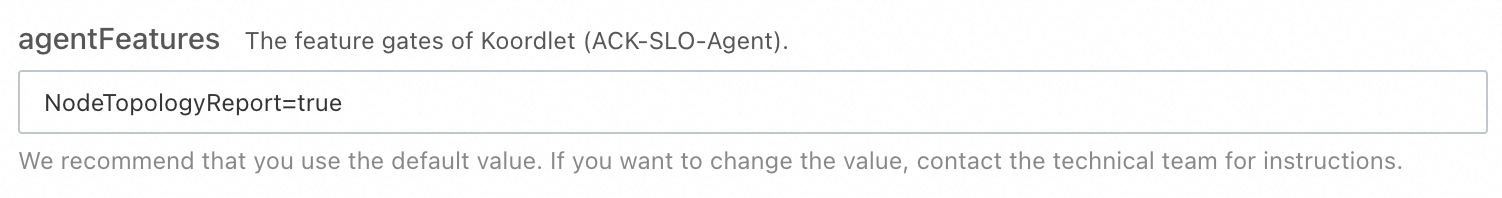

ACK Pro cluster: When you configure parameters for the ack-koordinator component, enter NodeTopologyReport=true for the agentFeatures parameter.

The topology-aware GPU scheduling component is installed. For more information, see Install the topology-aware GPU scheduling component.

Important: If you install the topology-aware GPU scheduling component before you install the ack-koordinator component, you must restart the topology-aware GPU scheduling component after the ack-koordinator component is installed.

Limits

You cannot use NUMA topology-aware scheduling together with topology-aware CPU scheduling or topology-aware GPU scheduling.

NUMA topology-aware scheduling applies only to scenarios in which both CPUs and GPUs are allocated to a NUMA node.

For every container in a pod, the CPU request must be an integer number of cores and must be equal to the CPU limit.

The GPU resources of all containers in a pod must be requested by using aliyun.com/gpu. The number of requested GPUs must be an integer.
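The CPU and GPU limits above can be checked before a pod is submitted. The following is a hedged sketch of such a pre-flight check. The function names are hypothetical, the pod is represented as a plain Python dictionary, and only the quantity forms "24", "1.5", and "500m" are handled.

```python
from decimal import Decimal, InvalidOperation

GPU_RESOURCE = "aliyun.com/gpu"


def cpu_cores(quantity):
    """Convert a CPU quantity such as "24", "1.5", or "500m" to cores."""
    text = str(quantity)
    if text.endswith("m"):  # millicores
        return Decimal(text[:-1]) / 1000
    return Decimal(text)


def is_whole(value):
    """Return True if the Decimal value has no fractional part."""
    return value == value.to_integral_value()


def check_pod_resources(pod):
    """Return a list of problems; an empty list means the checks pass."""
    problems = []
    for container in pod["spec"]["containers"]:
        name = container.get("name", "<unnamed>")
        resources = container.get("resources", {})
        requests = resources.get("requests", {})
        limits = resources.get("limits", {})
        try:
            cpu_request = cpu_cores(requests["cpu"])
            cpu_limit = cpu_cores(limits["cpu"])
        except (KeyError, InvalidOperation):
            problems.append(f"{name}: set valid CPU requests and limits")
            continue
        if not is_whole(cpu_request):
            problems.append(f"{name}: CPU request is not a whole number of cores")
        if cpu_request != cpu_limit:
            problems.append(f"{name}: CPU request and limit differ")
        gpu = requests.get(GPU_RESOURCE, limits.get(GPU_RESOURCE))
        if gpu is not None:
            try:
                gpu_ok = is_whole(Decimal(str(gpu)))
            except InvalidOperation:
                gpu_ok = False
            if not gpu_ok:
                problems.append(f"{name}: {GPU_RESOURCE} must be an integer")
    return problems


# A container that requests 24 CPU cores and 4 GPUs, with equal limits.
example_pod = {"spec": {"containers": [{
    "name": "example",
    "resources": {
        "limits": {GPU_RESOURCE: "4", "cpu": "24"},
        "requests": {GPU_RESOURCE: "4", "cpu": "24"},
    },
}]}}

print(check_pod_resources(example_pod))  # prints: []
```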

Billing

To use this feature, you must install the cloud-native AI suite, which may generate additional fees. For more information, see Billing of the cloud-native AI suite.

The ack-koordinator component is free to install and use, but additional fees may apply in the following scenarios:

ack-koordinator is a non-managed component and consumes worker node resources after installation. You can configure the resource requests for each module during installation.

By default, ack-koordinator exposes monitoring metrics for features such as resource profiling and fine-grained scheduling in Prometheus format. If you select the Enable Prometheus monitoring for ACK-Koordinator option and use Alibaba Cloud Prometheus, these metrics are considered custom metrics and will incur fees. The cost depends on your cluster size and the number of applications. Before you enable this feature, review the Prometheus billing information to understand the free quota and pricing for custom metrics. You can monitor and manage your resource usage by querying your usage data.

Configure NUMA topology-aware scheduling

You can add the following annotations to the YAML file of a pod to declare the requirements for NUMA topology-aware scheduling:

    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        cpuset-scheduler: required # Specifies whether to allocate both CPUs and GPUs to a NUMA node.
        scheduling.alibabacloud.com/numa-topology-spec: | # The requirements of the pod for the NUMA topology policy.
          {
            "numaTopologyPolicy": "SingleNUMANode",
            "singleNUMANodeExclusive": "Preferred"
          }
    spec:
      containers:
      - name: example
        resources:
          limits:
            aliyun.com/gpu: '4'
            cpu: '24'
          requests:
            aliyun.com/gpu: '4'
            cpu: '24'

The following table describes the parameters of NUMA topology-aware scheduling.

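| Parameter | Description |
| --- | --- |
| cpuset-scheduler | Specifies whether to allocate both CPUs and GPUs to a NUMA node. Set the value to required. |
| numaTopologyPolicy | The NUMA topology policy to be used during pod scheduling. Valid values include SingleNUMANode, which is used in the preceding example. |
| singleNUMANodeExclusive | Specifies whether to schedule the pod to specific NUMA nodes during pod scheduling. Valid values include Preferred, which is used in the preceding example. |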
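The value of scheduling.alibabacloud.com/numa-topology-spec is a JSON string embedded in YAML, so a stray comma or quote is easy to miss. As a hedged sketch, the annotations can be generated with the standard json module of Python instead of being typed by hand. The function name is hypothetical; the annotation keys and values are the ones used in the preceding example.

```python
import json


def numa_annotations(policy="SingleNUMANode", exclusive="Preferred"):
    """Build the two pod annotations used for NUMA topology-aware scheduling."""
    spec = {
        "numaTopologyPolicy": policy,
        "singleNUMANodeExclusive": exclusive,
    }
    return {
        "cpuset-scheduler": "required",
        # json.dumps always emits syntactically valid JSON.
        "scheduling.alibabacloud.com/numa-topology-spec": json.dumps(spec),
    }


annotations = numa_annotations()
for key, value in annotations.items():
    print(f"{key}: {value}")
```

The resulting dictionary can be placed under metadata.annotations of a pod manifest that is built in code.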

Compare the performance

In this example, the model loading process is used to test the performance improvement before and after NUMA topology-aware scheduling is enabled. The text-generation-inference tool is used to load a model on two GPUs. The NSight tool is used to compare the loading speed of the GPUs before and after NUMA topology-aware scheduling is enabled.

Lingjun nodes are used in this example. For more information about how to download the text-generation-inference tool, see Text Generation Inference. For more information about how to download the NSight tool, see Installation Guide.

The test results may vary based on the test tools that are used. The performance comparison data in this example is only the test results obtained by using the NSight tool. The actual data depends on your operating environment.

Before NUMA topology-aware scheduling is enabled

The following sample code shows the YAML file of an application in the test scenario before NUMA topology-aware scheduling is enabled:

Check the duration of the model loading process. The test results show that the process takes about 15.9 seconds.

After NUMA topology-aware scheduling is enabled

The following sample code shows the YAML file of an application in the test scenario after NUMA topology-aware scheduling is enabled:

Check the duration of the model loading process. The test results show that the process takes about 5.4 seconds. The duration is shortened by 66%.
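The 66% figure follows directly from the two measured durations, as this short calculation shows. The variable names are illustrative only.

```python
before_s = 15.9  # model loading time before NUMA topology-aware scheduling
after_s = 5.4    # model loading time after NUMA topology-aware scheduling

reduction = (before_s - after_s) / before_s  # fraction of time saved
speedup = before_s / after_s                 # how many times faster

print(f"{reduction:.0%} shorter, {speedup:.1f}x faster")  # prints: 66% shorter, 2.9x faster
```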