By Jian Shen.

To optimize queries with the Relational Cache of E-MapReduce Spark, you generally need to store a large volume of pre-computed data. An actual query, however, may need to read only a small part of that data. To achieve response times measured in seconds, you therefore have to locate your target data quickly within a large volume of data. But how do you do that exactly?

To answer this question, this article looks at how you can accelerate queries by using the Spark Relational Cache of Alibaba Cloud E-MapReduce (EMR). Specifically, it covers optimizations in storage format, data partitioning, file indexing, and data sorting that improve overall query performance.

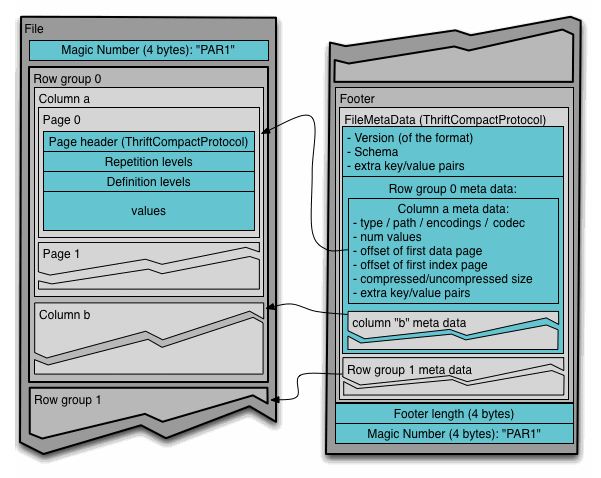

Storage formats and their related metadata are an important part of the optimization. Our solution uses Parquet, the default data storage format, together with the statistics it records and filters that take advantage of them. Let's discuss each in turn.

First, Parquet is the default data storage format for Spark and is fully supported by the Spark community. Parquet is a columnar storage format, which makes it easy to prune columns, that is, to read only the fields a query needs. Each Parquet data file consists of multiple row groups, and the footer of each data file records statistics for each row group, such as the maximum and minimum values.

Next, these statistics can help reduce I/O overhead when data is read during a query. In the current version of Spark, the Catalyst optimizer pushes some filters down to the Parquet reader, which uses the statistics in the Parquet files to decide which row groups to read. This reduces I/O overhead and shortens query response time. Note that most other columnar storage formats support this feature as well.

Now, let's talk about filters. Many filters for Relational Cache are known in advance and fixed. For query optimization, we push these fixed query filters down to the Parquet reader, which selects the target row groups accordingly. This filtering works well here because the data to be read usually accounts for only a small portion of the total data volume.
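The row-group selection described above can be sketched in a few lines of plain Python. This is only an illustration of the idea, not Spark's or Parquet's actual reader code: the `RowGroupStats` structure, the `row_groups_to_read` helper, and the sample values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RowGroupStats:
    """Min/max statistics of one column in one row group (hypothetical structure)."""
    index: int
    min_value: int
    max_value: int


def row_groups_to_read(stats, lower, upper):
    """Return the indexes of row groups whose [min, max] range overlaps [lower, upper]."""
    return [
        s.index
        for s in stats
        if s.max_value >= lower and s.min_value <= upper
    ]


# Assumed footer statistics for a single column across three row groups.
footer = [
    RowGroupStats(0, 1, 100),
    RowGroupStats(1, 101, 200),
    RowGroupStats(2, 201, 300),
]

# A filter such as "value BETWEEN 150 AND 180" only needs row group 1.
selected = row_groups_to_read(footer, 150, 180)
print(selected)  # [1]
```

Row groups 0 and 2 are skipped without reading any of their data pages, which is where the I/O saving comes from.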

Below is an illustration of how the metadata is filtered.

Another piece of optimizing queries is data partitioning. Spark uses the Expand operator when building cubes, so you first need to understand how the Relational Cache of Spark uses grouping IDs to distinguish different grouping sets.

Generally speaking, a subsequent query needs only the data that corresponds to one of the grouping IDs. The grouping ID is therefore a natural choice of partition key when optimizing queries through data partitioning.

In Spark and other big data analysis engines such as Hive, data partitioning is a common way to store the files of structured data in directories, where each directory corresponds to specific values of one or more fields of the data.

So now, to select the data for a grouping ID, you need to read only the data in the corresponding directory. Files whose grouping ID does not match are ignored, which greatly reduces the number of tasks to be started. This lowers the task scheduling overhead in Spark and improves overall query performance.
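As a rough sketch of directory-based pruning, the snippet below keeps only the files under the directory that matches one grouping ID. The `cache/grouping_id=N/` layout, the file names, and the `prune_partitions` helper are assumptions made for illustration. They follow the common `column=value` directory convention but are not taken from the EMR implementation.

```python
def prune_partitions(paths, column, value):
    """Keep only files that sit under a directory named `column=value`."""
    wanted = f"{column}={value}"
    # Compare whole directory segments so that grouping_id=1 does not match grouping_id=10.
    return [p for p in paths if wanted in p.split("/")[:-1]]


# Hypothetical file listing of a cube partitioned by grouping ID.
files = [
    "cache/grouping_id=0/part-00000.parquet",
    "cache/grouping_id=0/part-00001.parquet",
    "cache/grouping_id=1/part-00000.parquet",
    "cache/grouping_id=10/part-00000.parquet",
]

selected = prune_partitions(files, "grouping_id", 1)
print(selected)  # ['cache/grouping_id=1/part-00000.parquet']
```

Only one of the four files is scheduled for reading, so only the tasks for that file need to be started.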

In this section, we will look at how you can use file indexing to improve efficiency by narrowing the scope of queries. Following this, we will discuss what file indexing does and what it means for you.

If the total data volume is quite large, the number of files to be stored will also be high. In this case, even if we can get better filtering results by using the footers of Parquet, we may still need to start a number of tasks just to read these footers. In fact, in an actual implementation of Spark, the number of footer-reading tasks is normally similar to the number of files, so scheduling these tasks can be time-consuming, especially when cluster computing resources are limited. To tackle this issue, further reduce the scheduling overhead of Spark jobs, and improve execution efficiency, you can index files.

File indexes are similar to independent footers. With file indexes, you can collect the maximum and minimum data values of each field in each file in advance, and then store these values in a hidden data table. By doing this, you will only need to read the independent table and perform filtering at the file level to obtain the target file.

This is an independent stage. Because each file corresponds to only one record in this hidden table, reading the hidden table requires far fewer tasks than reading the footers of all data files, and the number of tasks in subsequent stages can also be significantly reduced. In access scenarios with Relational Cache, the overall acceleration from this solution is quite noticeable.
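The file index idea can be sketched as follows. This is a toy model under stated assumptions, not the EMR implementation: the `FileIndexEntry` record, the `build_index` and `candidate_files` helpers, and the file names are hypothetical, and the "hidden table" is represented by an in-memory list with one record per file.

```python
from typing import NamedTuple


class FileIndexEntry(NamedTuple):
    """One record of the index table: min/max of a column for one file."""
    path: str
    min_value: int
    max_value: int


def build_index(files):
    """Collect min/max per file in advance. `files` maps path -> column values."""
    return [FileIndexEntry(path, min(vals), max(vals)) for path, vals in files.items()]


def candidate_files(index, lower, upper):
    """Filter at the file level: keep files whose range overlaps [lower, upper]."""
    return [e.path for e in index if e.max_value >= lower and e.min_value <= upper]


# Hypothetical data files and the values of one column in each.
data_files = {
    "part-0.parquet": [3, 8, 15],
    "part-1.parquet": [20, 27, 31],
    "part-2.parquet": [40, 44, 59],
}

index = build_index(data_files)
hits = candidate_files(index, 25, 30)
print(hits)  # ['part-1.parquet']
```

The query touches three small index records instead of opening three footers, and only one data file is passed on to the later stages.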

In this section, we are going to look at ways in which you can filter data to make queries more efficient. First, we will look at filtering data and data sorting and discuss some of their problems. Then, we will look into why you may want to consider using space-filling curves as an even better way to increase your query speeds.

To make queries more efficient, we can filter data based on statistics such as the maximum and minimum values, whether they come from footers or file indexes. However, in extreme cases, for example when the maximum and minimum values of a key in every data file and row group equal the global maximum and minimum values, this filtering eliminates nothing. In such cases, data sorting may be the answer.

However, conventional data sorting has a problem. When you sort data by multiple fields, the filtering effect varies from field to field, because the sort fields have a primary-secondary relationship. That is, the fields at the front of the sort sequence filter well, but those further back usually filter poorly because their values are scattered across files. So we need a sorting method that takes the filtering effects of multiple fields into account.

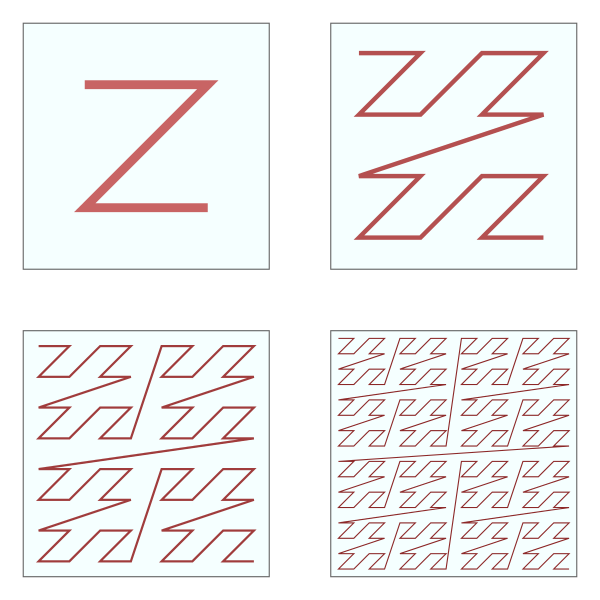

The answer to this involves the concept of a space-filling curve. Imagine the data as a bounded multi-dimensional space. If you sort the data along a space-filling curve and divide it into blocks, the values in each block fall into a small range in every dimension, so file indexing filters more effectively. For this, you can use the Z-order curve to sort the multi-dimensional data space, which balances the filtering effect across columns. The figure shows the Z-order curve in two-dimensional space.

Image Source: Wikipedia
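To make the balancing effect concrete, here is a minimal sketch that compares a conventional sort on `(x, y)` with a Z-order sort on an 8 x 8 grid of points, split into blocks of 8 rows. The helper names (`z_value`, `block_ranges`, `blocks_hit`), the grid, and the block size are assumptions chosen for illustration, and each "block" stands in for a file or row group with min/max statistics.

```python
def z_value(x, y, bits=4):
    """Interleave the low `bits` bits of x and y into one Z-order (Morton) code."""
    z = 0
    for i in range(bits):
        z |= ((x >> i) & 1) << (2 * i)       # bits of x go to even positions
        z |= ((y >> i) & 1) << (2 * i + 1)   # bits of y go to odd positions
    return z


def block_ranges(points, block_size, column):
    """Split sorted points into blocks and return (min, max) of one column per block."""
    ranges = []
    for start in range(0, len(points), block_size):
        values = [p[column] for p in points[start:start + block_size]]
        ranges.append((min(values), max(values)))
    return ranges


def blocks_hit(ranges, value):
    """Count blocks that min/max filtering cannot skip for `column == value`."""
    return sum(1 for lo, hi in ranges if lo <= value <= hi)


points = [(x, y) for x in range(8) for y in range(8)]
by_x_then_y = sorted(points)                                # conventional sort
by_z = sorted(points, key=lambda p: z_value(p[0], p[1]))    # Z-order sort

# Conventional sort: x filters perfectly, y cannot skip any block.
print(blocks_hit(block_ranges(by_x_then_y, 8, 0), 5))  # 1
print(blocks_hit(block_ranges(by_x_then_y, 8, 1), 5))  # 8

# Z-order sort: both columns skip a share of the 8 blocks.
print(blocks_hit(block_ranges(by_z, 8, 0), 5))  # 4
print(blocks_hit(block_ranges(by_z, 8, 1), 5))  # 2
```

In this toy setup the Z-order sort gives up some filtering power on `x` (4 blocks instead of 1) in exchange for a large gain on `y` (2 blocks instead of 8), which is the balance the text describes.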

However, it is important to note that the filtering effect for each individual column weakens as the number of sorting columns increases. Therefore, in actual use, you need to choose the columns to sort by carefully and make trade-offs to obtain the best overall result.

In this article, we have discussed how you can use EMR Spark Relational Cache to accelerate the query process by quickly extracting the target data from a cube that contains a large volume of data. Specifically, you can do so through columnar storage, data partitioning, file indexing, and Z-order sorting. With these, you can quickly identify your target data by filtering, which greatly reduces the actual I/O data volume, avoids I/O bottlenecks, and improves overall query performance.
