By Chen Yuzhao

This article describes how Flink Hudi's original mini-batch-based incremental computing model has been optimized and evolved through stream computing. Users can use Flink SQL to write CDC data to Hudi storage in real time, and the upcoming version 0.9 of Hudi natively supports the CDC format. The main contents are listed below:


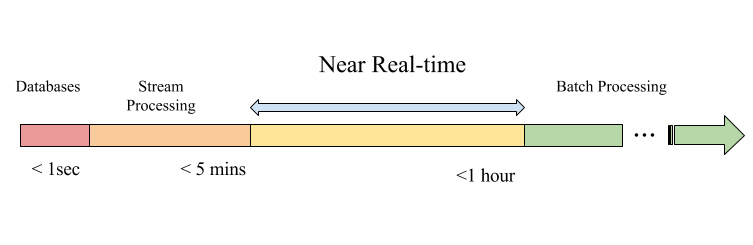

Since 2016, the Apache Hudi community has been exploring near-real-time use cases through Hudi's UPSERT capabilities [1]. The batch processing model of MR/Spark allows users to ingest data into HDFS/OSS at hour-level latency. In pure real-time scenarios, users can combine the stream computing engine Flink with KV/OLAP storage to implement end-to-end real-time analysis at second-level latency (up to about 5 minutes). However, a large number of use cases fall between that second level (about 5 minutes) and the hour level. We call these near-real-time.

In practice, there are a large number of cases that belong to the category of near-real-time:

Currently, the near-real-time solutions are relatively open.

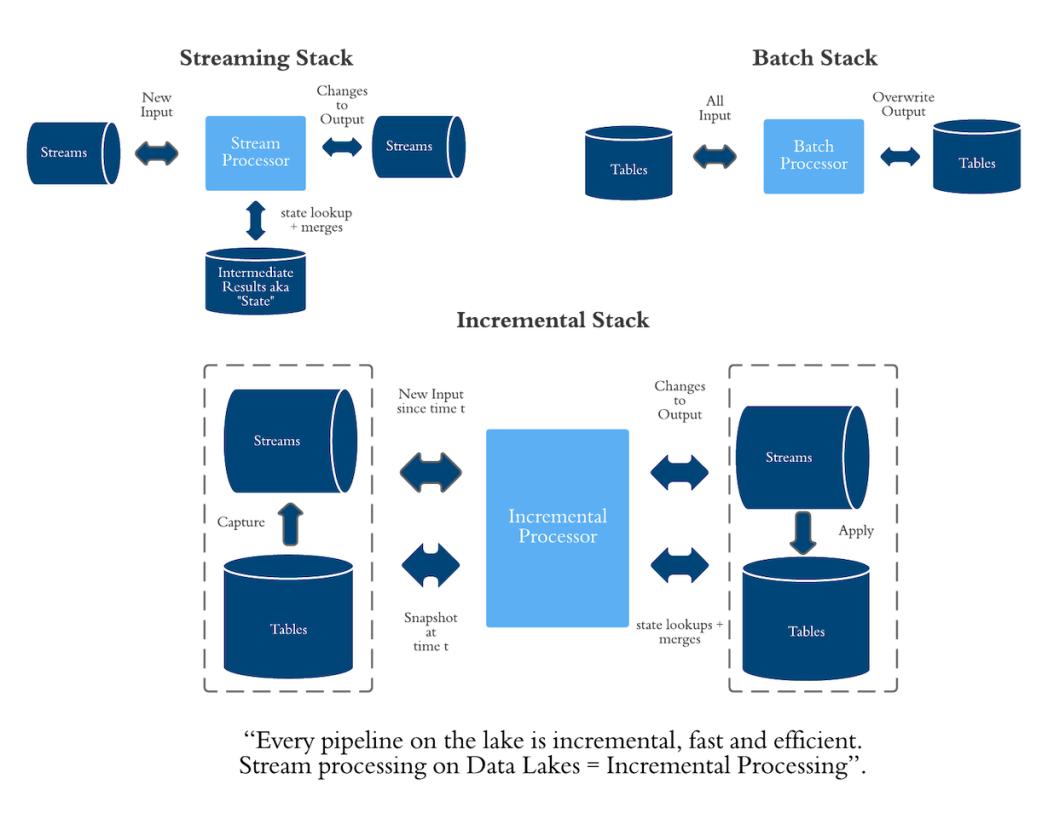

Therefore, the Hudi community proposed an incremental computing model based on mini-batch:

Incremental dataset → Incremental calculation result merged with the saved result → External storage

This model pulls incremental datasets (the data between two commits) from a snapshot stored in the lake, calculates incremental results (such as a simple count) with a batch processing framework (such as Spark or Hive), and merges them into the stored results.
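The pull, compute, and merge loop of this model can be sketched in a few lines. This is plain Python rather than Spark or Hive, and the commit times, the record layout, and the helpers `incremental_pull`, `count_by_city`, and `merge_counts` are all hypothetical names chosen for illustration.

```python
from typing import Dict, List, Tuple

Record = Dict[str, object]

# Hypothetical lake contents: (commit_time, record) pairs, ordered by commit.
LAKE: List[Tuple[str, Record]] = [
    ("001", {"id": 1, "city": "A"}),
    ("001", {"id": 2, "city": "B"}),
    ("002", {"id": 3, "city": "A"}),
    ("003", {"id": 4, "city": "A"}),
    ("003", {"id": 5, "city": "B"}),
]


def incremental_pull(begin: str, end: str) -> List[Record]:
    """Return records committed after `begin`, up to and including `end`."""
    return [rec for commit, rec in LAKE if begin < commit <= end]


def count_by_city(records: List[Record]) -> Dict[str, int]:
    """Batch job over one incremental dataset: a simple count per city."""
    counts: Dict[str, int] = {}
    for rec in records:
        city = str(rec["city"])
        counts[city] = counts.get(city, 0) + 1
    return counts


def merge_counts(stored: Dict[str, int], delta: Dict[str, int]) -> Dict[str, int]:
    """Merge the incremental result into the previously stored result."""
    merged = dict(stored)
    for city, n in delta.items():
        merged[city] = merged.get(city, 0) + n
    return merged


# Result already stored after commit "001".
stored_result = count_by_city(incremental_pull("000", "001"))
# Next mini-batch: only the data between commits "001" and "003" is read.
delta_result = count_by_city(incremental_pull("001", "003"))
stored_result = merge_counts(stored_result, delta_result)
print(stored_result)
```

Only the records of the two newer commits are scanned in the second run, which is where the latency and cost savings of the model come from.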

Core issues to be addressed by the Incremental data model:

The mini-batch-based incremental computing model can improve latency and save computing costs in some scenarios. However, it restricts the SQL patterns that can be used. Because the calculation runs in batches and batch computing does not maintain state, the calculated metrics must be easy to merge. Simple count and sum qualify, but avg and count distinct still need to pull the full data for recalculation.
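A small worked example shows why some metrics merge and others do not. The two batches below are made-up numbers, and the variable names are only illustrative.

```python
from typing import List

old_batch: List[int] = [10, 20, 30]  # data already folded into the stored result
new_batch: List[int] = [20, 40]      # incremental dataset from the latest commits

# count and sum merge directly: stored value + incremental value.
merged_count = len(old_batch) + len(new_batch)
merged_sum = sum(old_batch) + sum(new_batch)

# avg does not: combining two finished averages ignores the batch sizes.
old_avg = sum(old_batch) / len(old_batch)
new_avg = sum(new_batch) / len(new_batch)
naive_avg = (old_avg + new_avg) / 2
true_avg = sum(old_batch + new_batch) / len(old_batch + new_batch)

# count distinct does not either: the value 20 appears in both batches.
naive_distinct = len(set(old_batch)) + len(set(new_batch))
true_distinct = len(set(old_batch + new_batch))

print(merged_count, merged_sum)       # 5 120
print(naive_avg, true_avg)            # 25.0 24.0
print(naive_distinct, true_distinct)  # 5 4
```

With only the finished average or distinct count stored, a stateless batch job has no way to correct these numbers without reading the full data again.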

With the popularity of stream computing and real-time warehouses, the Hudi community is also actively embracing change. The original mini-batch-based incremental computing model is being continuously optimized and evolved through stream computing. Streaming ingestion into the lake was introduced in version 0.7, and version 0.9 supports the native CDC format.

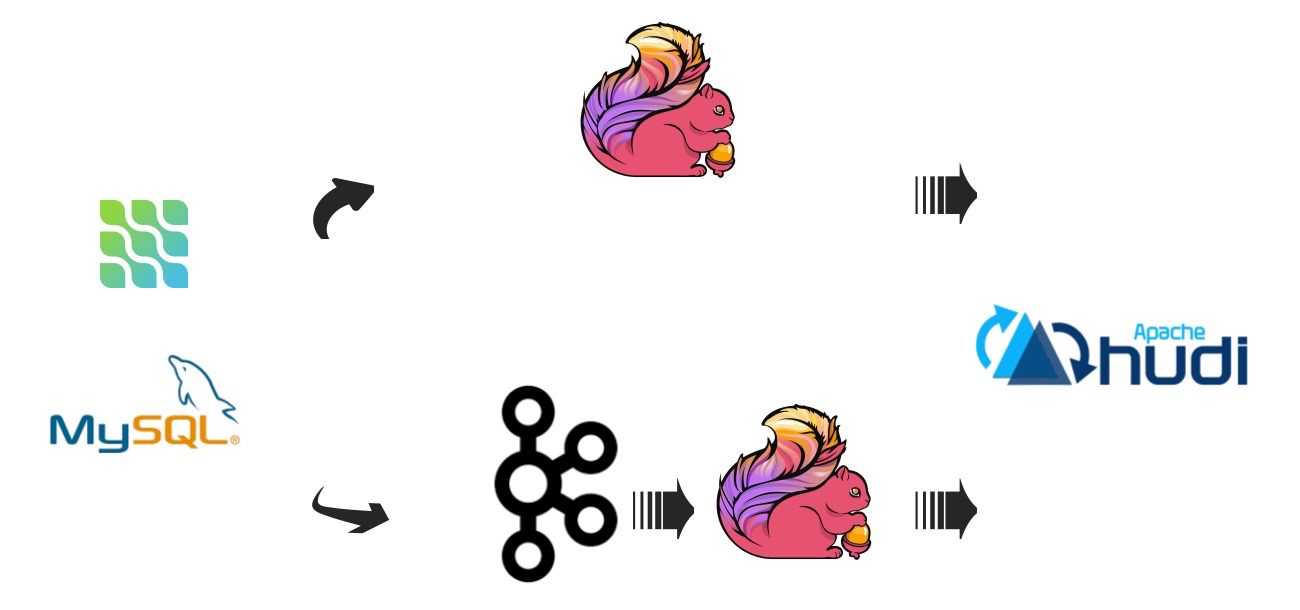

As CDC technology matures, CDC tools like Debezium are becoming more popular. The Hudi community has also integrated stream writing and stream reading capabilities in succession. Users can use Flink SQL to write CDC data into Hudi storage in real time.

The second scheme has better fault tolerance and extensibility.

In the upcoming version 0.9, Hudi natively supports the CDC format, so all changes to a record can be saved. Based on this, Hudi integrates more closely with stream computing systems and can be read as streaming CDC data. [2]
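As a rough illustration of what saving all changes to a record means, the sketch below expands Debezium-style events into change rows. The `to_change_rows` helper and the trimmed sample events are hypothetical. The tags +I, -U, +U, and -D stand for insert, update-before, update-after, and delete, as in Flink's changelog output. This is not the connector's actual code.

```python
import json
from typing import Any, Dict, List, Tuple

ChangeRow = Tuple[str, Dict[str, Any]]


def to_change_rows(event_json: str) -> List[ChangeRow]:
    """Map one Debezium-style event to change rows tagged +I, -U, +U, or -D."""
    event = json.loads(event_json)
    op, before, after = event["op"], event["before"], event["after"]
    if op in ("c", "r"):   # create, or snapshot read
        return [("+I", after)]
    if op == "u":          # update: retract the old image, emit the new one
        return [("-U", before), ("+U", after)]
    if op == "d":          # delete: retract the last image
        return [("-D", before)]
    raise ValueError(f"unsupported op: {op!r}")


# Trimmed, made-up events that keep only the fields the sketch needs.
create = '{"before": null, "after": {"id": 106, "ts": 6000}, "op": "c"}'
update = ('{"before": {"id": 106, "ts": 6000}, '
          '"after": {"id": 106, "ts": 10000}, "op": "u"}')
delete = '{"before": {"id": 106, "ts": 10000}, "after": null, "op": "d"}'

rows = [row for event in (create, update, delete) for row in to_change_rows(event)]
for tag, image in rows:
    print(tag, image)
```

One update event becomes two rows, which is why a stream of change events can yield more change rows than events.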

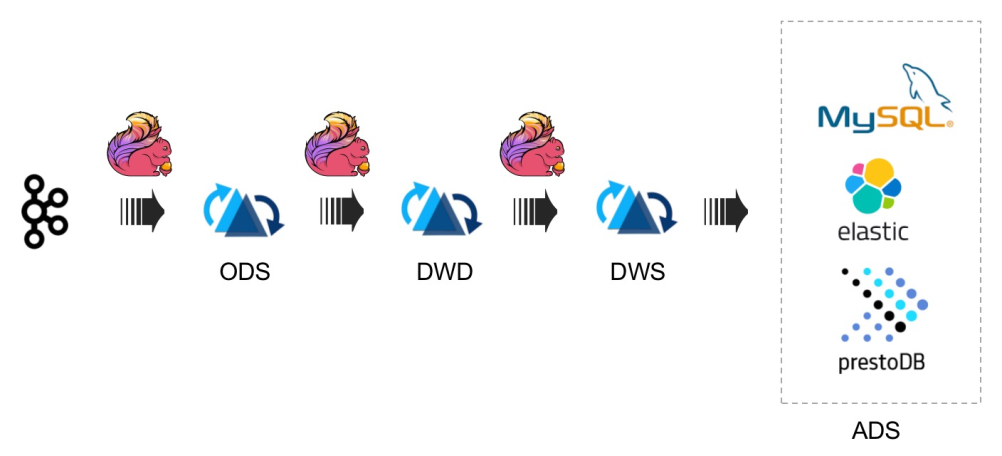

All change messages in the CDC stream are saved after entering the lake and can be consumed as a stream. Flink's stateful computing accumulates the results (state) in real time. Users can then use streaming writes to synchronize the computed changes to Hudi lake storage. After that, Flink can connect to the changelog of the Hudi storage for streaming consumption and implement stateful computing at the next layer. This is a near-real-time end-to-end ETL pipeline:

This architecture shortens the end-to-end ETL delay to the minute level. The storage of each layer can be compacted into columnar formats (Parquet and ORC) to provide OLAP analysis capability. Because the data lake is open, the compacted files can be read by various query engines, such as Flink, Spark, Presto, and Hive.
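The way a stateful operator accumulates a result from change messages, and emits its own changelog for the next layer, can be sketched as follows. This is plain Python, not Flink. The `running_count` helper is hypothetical, and the tags +I, -U, +U, and -D stand for insert, update-before, update-after, and delete rows. The sketch does not handle the count dropping back to zero.

```python
from typing import Iterable, List, Tuple

# Each input change row moves the row count by +1 or -1.
DELTA = {"+I": 1, "+U": 1, "-U": -1, "-D": -1}


def running_count(ops: Iterable[str]) -> List[Tuple[str, int]]:
    """Keep count(*) as state and emit its changelog for downstream use."""
    emitted: List[Tuple[str, int]] = []
    count = 0
    for op in ops:
        previous, count = count, count + DELTA[op]
        if not emitted:
            emitted.append(("+I", count))     # first result ever produced
        else:
            emitted.append(("-U", previous))  # retract the old result
            emitted.append(("+U", count))     # publish the new result
    return emitted


# Nine inserts, two updates, two inserts, two updates, one delete.
ops = ["+I"] * 9 + ["-U", "+U"] * 2 + ["+I"] * 2 + ["-U", "+U"] * 2 + ["-D"]
result = running_count(ops)
print(len(result), result[-1])  # 39 ('+U', 10)
```

Because the output is itself a changelog, it can be written to another table and consumed by the next stateful job in the same way.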

A Hudi data lake table has two forms:

We will show two forms of Hudi tables through a demo.

hudi-flink-bundle jar

Prepare a segment of CDC data in debezium-json format in advance:

{"before":null,"after":{"id":101,"ts":1000,"name":"scooter","description":"Small 2-wheel scooter","weight":3.140000104904175},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":0,"snapshot":"true","db":"inventory","table":"products","server_id":0,"gtid":null,"file":"mysql-bin.000003","pos":154,"row":0,"thread":null,"query":null},"op":"c","ts_ms":1589355606100,"transaction":null}

{"before":null,"after":{"id":102,"ts":2000,"name":"car battery","description":"12V car battery","weight":8.100000381469727},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":0,"snapshot":"true","db":"inventory","table":"products","server_id":0,"gtid":null,"file":"mysql-bin.000003","pos":154,"row":0,"thread":null,"query":null},"op":"c","ts_ms":1589355606101,"transaction":null}

{"before":null,"after":{"id":103,"ts":3000,"name":"12-pack drill bits","description":"12-pack of drill bits with sizes ranging from #40 to #3","weight":0.800000011920929},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":0,"snapshot":"true","db":"inventory","table":"products","server_id":0,"gtid":null,"file":"mysql-bin.000003","pos":154,"row":0,"thread":null,"query":null},"op":"c","ts_ms":1589355606101,"transaction":null}

{"before":null,"after":{"id":104,"ts":4000,"name":"hammer","description":"12oz carpenter's hammer","weight":0.75},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":0,"snapshot":"true","db":"inventory","table":"products","server_id":0,"gtid":null,"file":"mysql-bin.000003","pos":154,"row":0,"thread":null,"query":null},"op":"c","ts_ms":1589355606101,"transaction":null}

{"before":null,"after":{"id":105,"ts":5000,"name":"hammer","description":"14oz carpenter's hammer","weight":0.875},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":0,"snapshot":"true","db":"inventory","table":"products","server_id":0,"gtid":null,"file":"mysql-bin.000003","pos":154,"row":0,"thread":null,"query":null},"op":"c","ts_ms":1589355606101,"transaction":null}

{"before":null,"after":{"id":106,"ts":6000,"name":"hammer","description":"16oz carpenter's hammer","weight":1},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":0,"snapshot":"true","db":"inventory","table":"products","server_id":0,"gtid":null,"file":"mysql-bin.000003","pos":154,"row":0,"thread":null,"query":null},"op":"c","ts_ms":1589355606101,"transaction":null}

{"before":null,"after":{"id":107,"ts":7000,"name":"rocks","description":"box of assorted rocks","weight":5.300000190734863},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":0,"snapshot":"true","db":"inventory","table":"products","server_id":0,"gtid":null,"file":"mysql-bin.000003","pos":154,"row":0,"thread":null,"query":null},"op":"c","ts_ms":1589355606101,"transaction":null}

{"before":null,"after":{"id":108,"ts":8000,"name":"jacket","description":"water resistent black wind breaker","weight":0.10000000149011612},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":0,"snapshot":"true","db":"inventory","table":"products","server_id":0,"gtid":null,"file":"mysql-bin.000003","pos":154,"row":0,"thread":null,"query":null},"op":"c","ts_ms":1589355606101,"transaction":null}

{"before":null,"after":{"id":109,"ts":9000,"name":"spare tire","description":"24 inch spare tire","weight":22.200000762939453},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":0,"snapshot":"true","db":"inventory","table":"products","server_id":0,"gtid":null,"file":"mysql-bin.000003","pos":154,"row":0,"thread":null,"query":null},"op":"c","ts_ms":1589355606101,"transaction":null}

{"before":{"id":106,"ts":6000,"name":"hammer","description":"16oz carpenter's hammer","weight":1},"after":{"id":106,"ts":10000,"name":"hammer","description":"18oz carpenter hammer","weight":1},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":1589361987000,"snapshot":"false","db":"inventory","table":"products","server_id":223344,"gtid":null,"file":"mysql-bin.000003","pos":362,"row":0,"thread":2,"query":null},"op":"u","ts_ms":1589361987936,"transaction":null}

{"before":{"id":107,"ts":7000,"name":"rocks","description":"box of assorted rocks","weight":5.300000190734863},"after":{"id":107,"ts":11000,"name":"rocks","description":"box of assorted rocks","weight":5.099999904632568},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":1589362099000,"snapshot":"false","db":"inventory","table":"products","server_id":223344,"gtid":null,"file":"mysql-bin.000003","pos":717,"row":0,"thread":2,"query":null},"op":"u","ts_ms":1589362099505,"transaction":null}

{"before":null,"after":{"id":110,"ts":12000,"name":"jacket","description":"water resistent white wind breaker","weight":0.20000000298023224},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":1589362210000,"snapshot":"false","db":"inventory","table":"products","server_id":223344,"gtid":null,"file":"mysql-bin.000003","pos":1068,"row":0,"thread":2,"query":null},"op":"c","ts_ms":1589362210230,"transaction":null}

{"before":null,"after":{"id":111,"ts":13000,"name":"scooter","description":"Big 2-wheel scooter ","weight":5.179999828338623},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":1589362243000,"snapshot":"false","db":"inventory","table":"products","server_id":223344,"gtid":null,"file":"mysql-bin.000003","pos":1394,"row":0,"thread":2,"query":null},"op":"c","ts_ms":1589362243428,"transaction":null}

{"before":{"id":110,"ts":12000,"name":"jacket","description":"water resistent white wind breaker","weight":0.20000000298023224},"after":{"id":110,"ts":14000,"name":"jacket","description":"new water resistent white wind breaker","weight":0.5},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":1589362293000,"snapshot":"false","db":"inventory","table":"products","server_id":223344,"gtid":null,"file":"mysql-bin.000003","pos":1707,"row":0,"thread":2,"query":null},"op":"u","ts_ms":1589362293539,"transaction":null}

{"before":{"id":111,"ts":13000,"name":"scooter","description":"Big 2-wheel scooter ","weight":5.179999828338623},"after":{"id":111,"ts":15000,"name":"scooter","description":"Big 2-wheel scooter ","weight":5.170000076293945},"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":1589362330000,"snapshot":"false","db":"inventory","table":"products","server_id":223344,"gtid":null,"file":"mysql-bin.000003","pos":2090,"row":0,"thread":2,"query":null},"op":"u","ts_ms":1589362330904,"transaction":null}

{"before":{"id":111,"ts":16000,"name":"scooter","description":"Big 2-wheel scooter ","weight":5.170000076293945},"after":null,"source":{"version":"1.1.1.Final","connector":"mysql","name":"dbserver1","ts_ms":1589362344000,"snapshot":"false","db":"inventory","table":"products","server_id":223344,"gtid":null,"file":"mysql-bin.000003","pos":2443,"row":0,"thread":2,"query":null},"op":"d","ts_ms":1589362344455,"transaction":null}

Create a table from the Flink SQL Client to read the CDC data files:

Flink SQL> CREATE TABLE debezium_source(

> id INT NOT NULL,

> ts BIGINT,

> name STRING,

> description STRING,

> weight DOUBLE

> ) WITH (

> 'connector' = 'filesystem',

> 'path' = '/Users/chenyuzhao/workspace/hudi-demo/source.data',

> 'format' = 'debezium-json'

> );

[INFO] Execute statement succeed.

Execute SELECT to observe the results. There are a total of 20 records, with some UPDATEs in the middle. The last message is a DELETE:

Flink SQL> select * from debezium_source;

+----+-------------+----------------------+--------------------------------+--------------------------------+--------------------------------+

| op | id | ts | name | description | weight |

+----+-------------+----------------------+--------------------------------+--------------------------------+--------------------------------+

| +I | 101 | 1000 | scooter | Small 2-wheel scooter | 3.140000104904175 |

| +I | 102 | 2000 | car battery | 12V car battery | 8.100000381469727 |

| +I | 103 | 3000 | 12-pack drill bits | 12-pack of drill bits with ... | 0.800000011920929 |

| +I | 104 | 4000 | hammer | 12oz carpenter's hammer | 0.75 |

| +I | 105 | 5000 | hammer | 14oz carpenter's hammer | 0.875 |

| +I | 106 | 6000 | hammer | 16oz carpenter's hammer | 1.0 |

| +I | 107 | 7000 | rocks | box of assorted rocks | 5.300000190734863 |

| +I | 108 | 8000 | jacket | water resistent black wind ... | 0.10000000149011612 |

| +I | 109 | 9000 | spare tire | 24 inch spare tire | 22.200000762939453 |

| -U | 106 | 6000 | hammer | 16oz carpenter's hammer | 1.0 |

| +U | 106 | 10000 | hammer | 18oz carpenter hammer | 1.0 |

| -U | 107 | 7000 | rocks | box of assorted rocks | 5.300000190734863 |

| +U | 107 | 11000 | rocks | box of assorted rocks | 5.099999904632568 |

| +I | 110 | 12000 | jacket | water resistent white wind ... | 0.20000000298023224 |

| +I | 111 | 13000 | scooter | Big 2-wheel scooter | 5.179999828338623 |

| -U | 110 | 12000 | jacket | water resistent white wind ... | 0.20000000298023224 |

| +U | 110 | 14000 | jacket | new water resistent white w... | 0.5 |

| -U | 111 | 13000 | scooter | Big 2-wheel scooter | 5.179999828338623 |

| +U | 111 | 15000 | scooter | Big 2-wheel scooter | 5.170000076293945 |

| -D | 111 | 16000 | scooter | Big 2-wheel scooter | 5.170000076293945 |

+----+-------------+----------------------+--------------------------------+--------------------------------+--------------------------------+

Received a total of 20 rows

Create a Hudi table with the table type set to MERGE_ON_READ and the changelog mode property changelog.enabled turned on:

Flink SQL> CREATE TABLE hoodie_table(

> id INT NOT NULL PRIMARY KEY NOT ENFORCED,

> ts BIGINT,

> name STRING,

> description STRING,

> weight DOUBLE

> ) WITH (

> 'connector' = 'hudi',

> 'path' = '/Users/chenyuzhao/workspace/hudi-demo/t1',

> 'table.type' = 'MERGE_ON_READ',

> 'changelog.enabled' = 'true',

> 'compaction.async.enabled' = 'false'

> );

[INFO] Execute statement succeed.

Use an INSERT statement (for example, insert into hoodie_table select * from debezium_source;) to import the data into Hudi. Then enable the streaming read mode and execute the query to observe the results:

Flink SQL> select * from hoodie_table/*+ OPTIONS('read.streaming.enabled'='true')*/;

+----+-------------+----------------------+--------------------------------+--------------------------------+--------------------------------+

| op | id | ts | name | description | weight |

+----+-------------+----------------------+--------------------------------+--------------------------------+--------------------------------+

| +I | 101 | 1000 | scooter | Small 2-wheel scooter | 3.140000104904175 |

| +I | 102 | 2000 | car battery | 12V car battery | 8.100000381469727 |

| +I | 103 | 3000 | 12-pack drill bits | 12-pack of drill bits with ... | 0.800000011920929 |

| +I | 104 | 4000 | hammer | 12oz carpenter's hammer | 0.75 |

| +I | 105 | 5000 | hammer | 14oz carpenter's hammer | 0.875 |

| +I | 106 | 6000 | hammer | 16oz carpenter's hammer | 1.0 |

| +I | 107 | 7000 | rocks | box of assorted rocks | 5.300000190734863 |

| +I | 108 | 8000 | jacket | water resistent black wind ... | 0.10000000149011612 |

| +I | 109 | 9000 | spare tire | 24 inch spare tire | 22.200000762939453 |

| -U | 106 | 6000 | hammer | 16oz carpenter's hammer | 1.0 |

| +U | 106 | 10000 | hammer | 18oz carpenter hammer | 1.0 |

| -U | 107 | 7000 | rocks | box of assorted rocks | 5.300000190734863 |

| +U | 107 | 11000 | rocks | box of assorted rocks | 5.099999904632568 |

| +I | 110 | 12000 | jacket | water resistent white wind ... | 0.20000000298023224 |

| +I | 111 | 13000 | scooter | Big 2-wheel scooter | 5.179999828338623 |

| -U | 110 | 12000 | jacket | water resistent white wind ... | 0.20000000298023224 |

| +U | 110 | 14000 | jacket | new water resistent white w... | 0.5 |

| -U | 111 | 13000 | scooter | Big 2-wheel scooter | 5.179999828338623 |

| +U | 111 | 15000 | scooter | Big 2-wheel scooter | 5.170000076293945 |

| -D | 111 | 16000 | scooter | Big 2-wheel scooter | 5.170000076293945 |

Hudi keeps the change records of each row, including the operation type of each change. Here, we use table hints to set table parameters dynamically.
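To see how such change records relate to a merged view of the table, the sketch below replays the (op, id, ts) columns of the output above and keeps only the latest image per primary key. The `merge_changelog` helper is hypothetical and only illustrates the idea. It is not how Hudi merges records internally.

```python
from typing import Dict, List, Tuple

ChangeRow = Tuple[str, int, int]  # (op, id, ts)


def merge_changelog(rows: List[ChangeRow]) -> Dict[int, Tuple[str, int]]:
    """Fold change rows by primary key, keeping the latest (op, ts) per id."""
    snapshot: Dict[int, Tuple[str, int]] = {}
    for op, key, ts in rows:
        if op in ("+I", "+U"):
            snapshot[key] = (op, ts)   # new or updated image replaces the old one
        elif op == "-D":
            snapshot.pop(key, None)    # deleted keys leave the snapshot
        # "-U" rows are skipped: the matching "+U" overwrites the old image.
    return snapshot


# The op, id, and ts columns of the 20 change rows shown above.
changelog: List[ChangeRow] = [("+I", 100 + i, 1000 * i) for i in range(1, 10)]
changelog += [
    ("-U", 106, 6000), ("+U", 106, 10000),
    ("-U", 107, 7000), ("+U", 107, 11000),
    ("+I", 110, 12000), ("+I", 111, 13000),
    ("-U", 110, 12000), ("+U", 110, 14000),
    ("-U", 111, 13000), ("+U", 111, 15000),
    ("-D", 111, 16000),
]

snapshot = merge_changelog(changelog)
print(len(snapshot), sorted(snapshot))
```

In this sketch, ids 106, 107, and 110 end with their updated images, and id 111 disappears because its last change is a delete.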

Continue to use the batch read mode and execute the query to observe the output. We can see that the changes in the middle are merged:

Flink SQL> select * from hoodie_table;

2021-08-20 20:51:25,052 INFO org.apache.hadoop.conf.Configuration.deprecation [] - mapred.job.map.memory.mb is deprecated. Instead, use mapreduce.map.memory.mb

+----+-------------+----------------------+--------------------------------+--------------------------------+--------------------------------+

| op | id | ts | name | description | weight |

+----+-------------+----------------------+--------------------------------+--------------------------------+--------------------------------+

| +U | 110 | 14000 | jacket | new water resistent white w... | 0.5 |

| +I | 101 | 1000 | scooter | Small 2-wheel scooter | 3.140000104904175 |

| +I | 102 | 2000 | car battery | 12V car battery | 8.100000381469727 |

| +I | 103 | 3000 | 12-pack drill bits | 12-pack of drill bits with ... | 0.800000011920929 |

| +I | 104 | 4000 | hammer | 12oz carpenter's hammer | 0.75 |

| +I | 105 | 5000 | hammer | 14oz carpenter's hammer | 0.875 |

| +U | 106 | 10000 | hammer | 18oz carpenter hammer | 1.0 |

| +U | 107 | 11000 | rocks | box of assorted rocks | 5.099999904632568 |

| +I | 108 | 8000 | jacket | water resistent black wind ... | 0.10000000149011612 |

| +I | 109 | 9000 | spare tire | 24 inch spare tire | 22.200000762939453 |

+----+-------------+----------------------+--------------------------------+--------------------------------+--------------------------------+

Received a total of 10 rows

Use count(*) in bounded source read mode:

Flink SQL> select count (*) from hoodie_table;

+----+----------------------+

| op | EXPR$0 |

+----+----------------------+

| +I | 1 |

| -U | 1 |

| +U | 2 |

| -U | 2 |

| +U | 3 |

| -U | 3 |

| +U | 4 |

| -U | 4 |

| +U | 5 |

| -U | 5 |

| +U | 6 |

| -U | 6 |

| +U | 7 |

| -U | 7 |

| +U | 8 |

| -U | 8 |

| +U | 9 |

| -U | 9 |

| +U | 10 |

+----+----------------------+

Received a total of 19 rows

Use count(*) in streaming read mode:

Flink SQL> select count (*) from hoodie_table/*+OPTIONS('read.streaming.enabled'='true')*/;

+----+----------------------+

| op | EXPR$0 |

+----+----------------------+

| +I | 1 |

| -U | 1 |

| +U | 2 |

| -U | 2 |

| +U | 3 |

| -U | 3 |

| +U | 4 |

| -U | 4 |

| +U | 5 |

| -U | 5 |

| +U | 6 |

| -U | 6 |

| +U | 7 |

| -U | 7 |

| +U | 8 |

| -U | 8 |

| +U | 9 |

| -U | 9 |

| +U | 8 |

| -U | 8 |

| +U | 9 |

| -U | 9 |

| +U | 8 |

| -U | 8 |

| +U | 9 |

| -U | 9 |

| +U | 10 |

| -U | 10 |

| +U | 11 |

| -U | 11 |

| +U | 10 |

| -U | 10 |

| +U | 11 |

| -U | 11 |

| +U | 10 |

| -U | 10 |

| +U | 11 |

| -U | 11 |

| +U | 10 |

We can see that the calculation results in batch and streaming modes are the same. The current data lake CDC format is still iterating rapidly. Learn more:

[1] https://www.oreilly.com/content/ubers-case-for-incremental-processing-on-hadoop/

[2] https://hudi.apache.org/blog/2021/07/21/streaming-data-lake-platform
