By Xinyu

Compared to traditional data warehouses, data lakes offer more flexibility in handling various types of data and support highly scalable storage, making them ideal for big data analysis. To enable quasi-real-time or even real-time data processing, a data lake must efficiently ingest and store data while delivering low-latency query performance for analysis needs.

Apache Paimon and Apache Hudi are widely used data lake storage formats with high write throughput and low-latency query performance. This article compares the performance of Paimon and Hudi on Alibaba Cloud EMR and explores their respective roles in building quasi-real-time data warehouses.

The cluster environment for this article is Alibaba Cloud EMR version 5.16.0 with the following node attributes:

• master: 1 * ecs.g7.2xlarge 8 vCPU 32 GiB

• core: 4 * ecs.g7.6xlarge 24 vCPU 96 GiB

The following components and versions are used:

• Paimon: 0.7-SNAPSHOT(Paimon community 0.6 release)

• Hudi: 0.14.0

• Flink: 1.15

• Spark: 3.3.1

• OSS-HDFS: 1.0.0

This article consists of two parts: testing the real-time data ingestion performance of Paimon and Hudi using Flink, and constructing end-to-end quasi-real-time data warehouses using Paimon and Hudi with Flink and Spark. The test data is stored in the OSS-HDFS of EMR.

Real-time data ingestion is a crucial application scenario for data lake formats and serves as the first step in building real-time data lake warehouses. The tests in this section refer to the paimon-cluster-benchmark. The actual business scenarios include the upsert scenario (updating and revising data) and the pure append scenario. Paimon and Hudi's read and write capabilities are tested in these respective scenarios.

This section uses Flink to stream data into the lake. The deployment mode is Flink Standalone. The Flink configuration is as follows. As the TM memory size greatly affects the test results, the test results under 8 GB, 16 GB, and 20 GB are counted respectively. Additionally, because this test does not need to use the managed memory of TM, set it to 1 MB.

parallelism.default: 16

jobmanager.memory.process.size: 4g

taskmanager.numberOfTaskSlots: 1

taskmanager.memory.process.size: 8g/16g/20g

execution.checkpointing.interval: 2min

execution.checkpointing.max-concurrent-checkpoints: 3

taskmanager.memory.managed.size: 1m

state.backend: rocksdb

state.backend.incremental: true

table.exec.sink.upsert-materialize: NONEThe data lake upsert is used to update or insert new data. When performing an upsert, it checks whether the data to be written already exists in the data lake. If the data already exists, it will be updated; if the data does not exist, the new data will be inserted. Upsert is usually based on a unique identifier or a primary key to determine whether the data already exists.

The test data source in this section is generated by Flink datagen, which randomly generates data with primary keys ranging from 0 to 100,000,000. Then, Flink is used to stream the data into Paimon and Hudi tables respectively, and the total time consumed to write 500 million pieces of data is recorded (according to statistics, the total size of parquet files in a single bucket is within 2 GB). At the same time, we also use Flink to read the written Paimon and Hudi tables in batch mode and count the total duration.

For the upsert scenario, Paimon selects the primary-key table and Hudi selects the merge-on-read table. Both of them support compaction, so the test is further divided into two directions: compaction disabled and compaction enabled.

The configuration of the Paimon table is as follows. The number of buckets is the same as the parallelism of Flink and is set to 16. The default file format of Hudi is parquet. To be consistent with Hudi, the file output format is parquet and the compression method is set to snappy.

'bucket' = '16',

'file.format' = 'parquet',

'file.compression' = 'snappy',

'write-only' = 'true'The Hudi table is configured as follows. BUCKETindex is used and the number of buckets is 16, which is the same as the parallelism of Flink. Because the reading of the Hudi MOR table will be affected by the parameter compaction.max_memory, configure it to be half of the taskmanager.memory.process.size.

'table.type' = 'MERGE_ON_READ',

'metadata.enabled' = 'false',

'index.type' = 'BUCKET',

'hoodie.bucket.index.num.buckets' = '16',

'write.operation' = 'upsert',

'write.tasks' = '16',

'hoodie.parquet.compression.codec' = 'snappy',

'read.tasks' = '16',

'compaction.schedule.enabled' = 'false',

'compaction.async.enabled' = 'false',

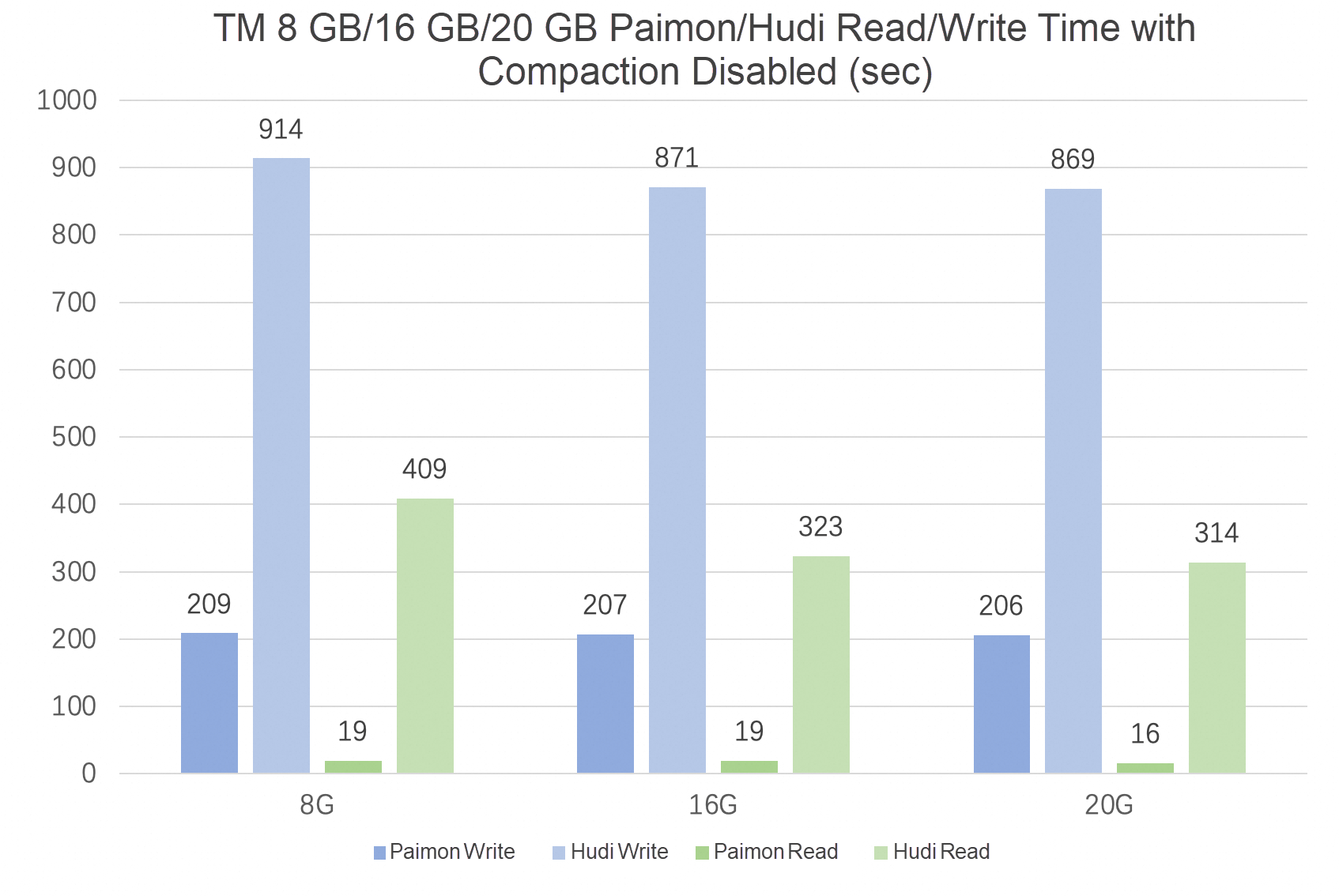

'compaction.max_memory' = '4096/8192/10240' -- Half of the TM process memoryThe test results are as follows:

It can be found that in the upsert scenario, when compaction is disabled, Paimon has better read and write performance than Hudi, and Hudi has higher requirements for TM memory.

Paimon Configuration:

'bucket' = '16',

'file.format' = 'parquet',

'file.compression' = 'snappy',

'num-sorted-run.compaction-trigger' = '5' -- default configurationHudi Configuration:

The total amount of time required for testing is small (the number of checkpoints is correspondingly small), and as the number of uncompacted log files increases, the compaction memory required by Hudi becomes larger. Therefore, the configuration of compaction.delta_commits should be set to 2 to ensure that compaction can be completed during writing.

'table.type' = 'MERGE_ON_READ',

'metadata.enabled' = 'false',

'index.type' = 'BUCKET',

'hoodie.bucket.index.num.buckets' = '16',

'write.operation' = 'upsert',

'write.tasks' = '16',

'hoodie.parquet.compression.codec' = 'snappy',

'read.tasks' = '16',

'compaction.schedule.enabled' = 'true',

'compaction.async.enabled' = 'true',

'compaction.tasks' = '16',

'compaction.delta_commits' = '2'

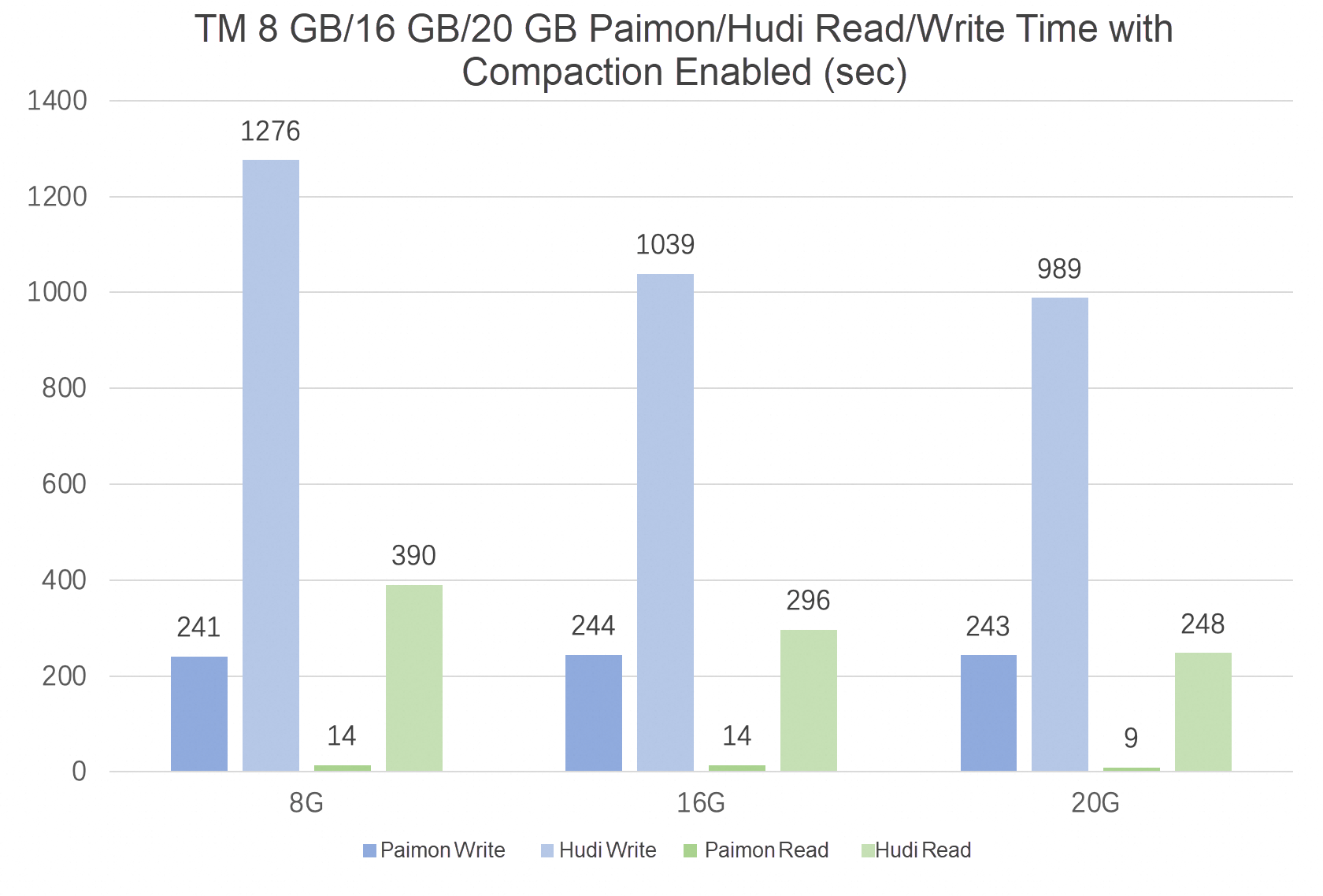

'compaction.max_memory' = '4096/8192/10240' -- Half of the TM process memoryThe test results are as follows:

In upsert scenarios, when compaction is enabled, Paimon has better read and write performance than Hudi. Compared with the previous test with compaction disabled, the write performance of Paimon and Hudi is reduced, but the read performance is improved.

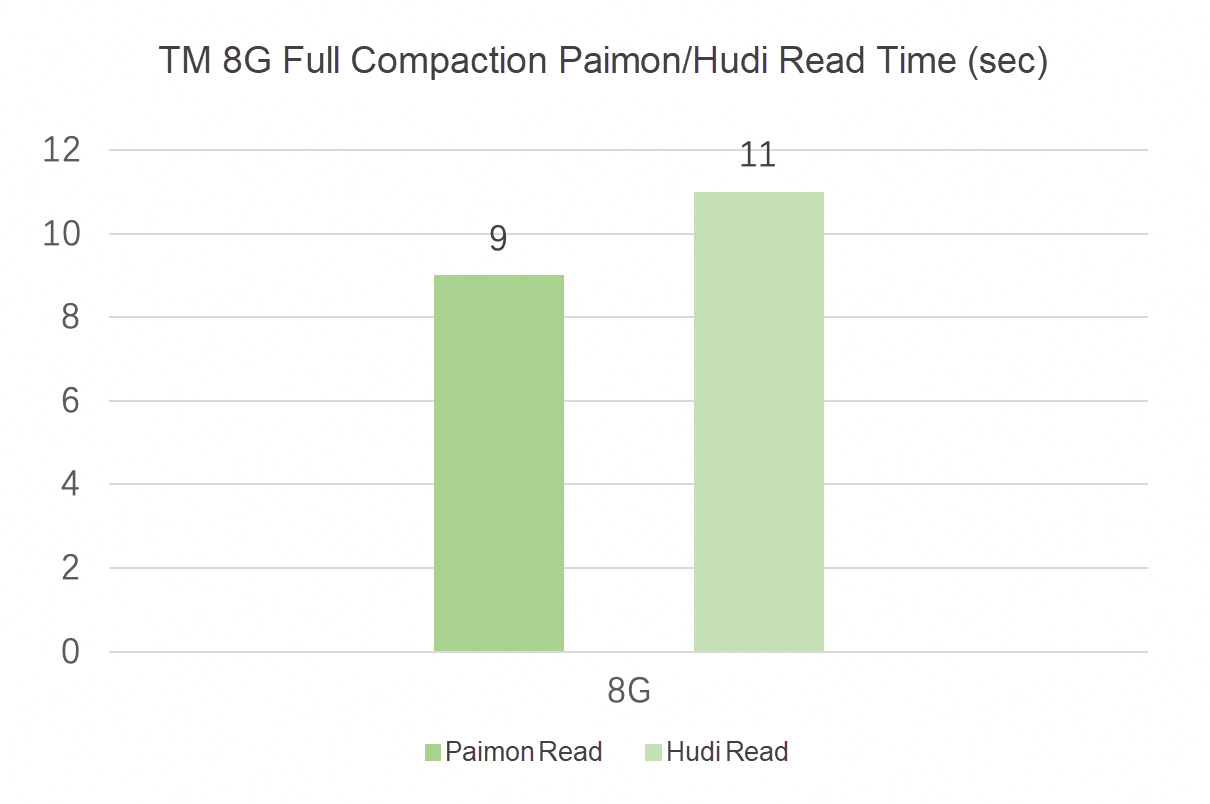

The compaction of Hudi consumes much memory, requires long running time, and is executed asynchronously. When the write task is completed, the unfinished compaction will not continue to execute. It is observed that when the TM memory reaches 20 GB, Hudi still has 4 delta commits that are not compacted (even if compaction.delta_commits=2 is configured). By default, Paimon compaction is not full compaction. Therefore, we also performed the following supplementary test to manually perform a full compaction on Paimon and Hudi, and then compare the time for reading data.

The results are as follows:

The other scenario of data ingestion is data append write, such as log ingestion.

The test data source in this section is also generated by Flink datagen, and then Flink is used to write data into Paimon and Hudi tables. Similarly, the total time consumed by Flink to write 500 million pieces of data is recorded (in the append scenario, Paimon and Hudi buckets are not required), and the total time consumed by Flink to batch read the written Paimon and Hudi tables is also used.

Configuration of the Paimon Table:

'bucket' = '-1',

'file.format' = 'parquet',

'file.compression' = 'snappy'Configuration of the Hudi Table:

Because the amount of data in a single batch is large enough, small file problems do not exist. Therefore, disable clustering:

'table.type' = 'COPY_ON_WRITE',

'metadata.enabled' = 'false',

'write.operation' = 'insert',

'write.tasks' = '16',

'hoodie.parquet.compression.codec' = 'snappy',

'read.tasks' = '16',

'write.insert.cluster' = 'false',

'clustering.schedule.enabled' = 'false',

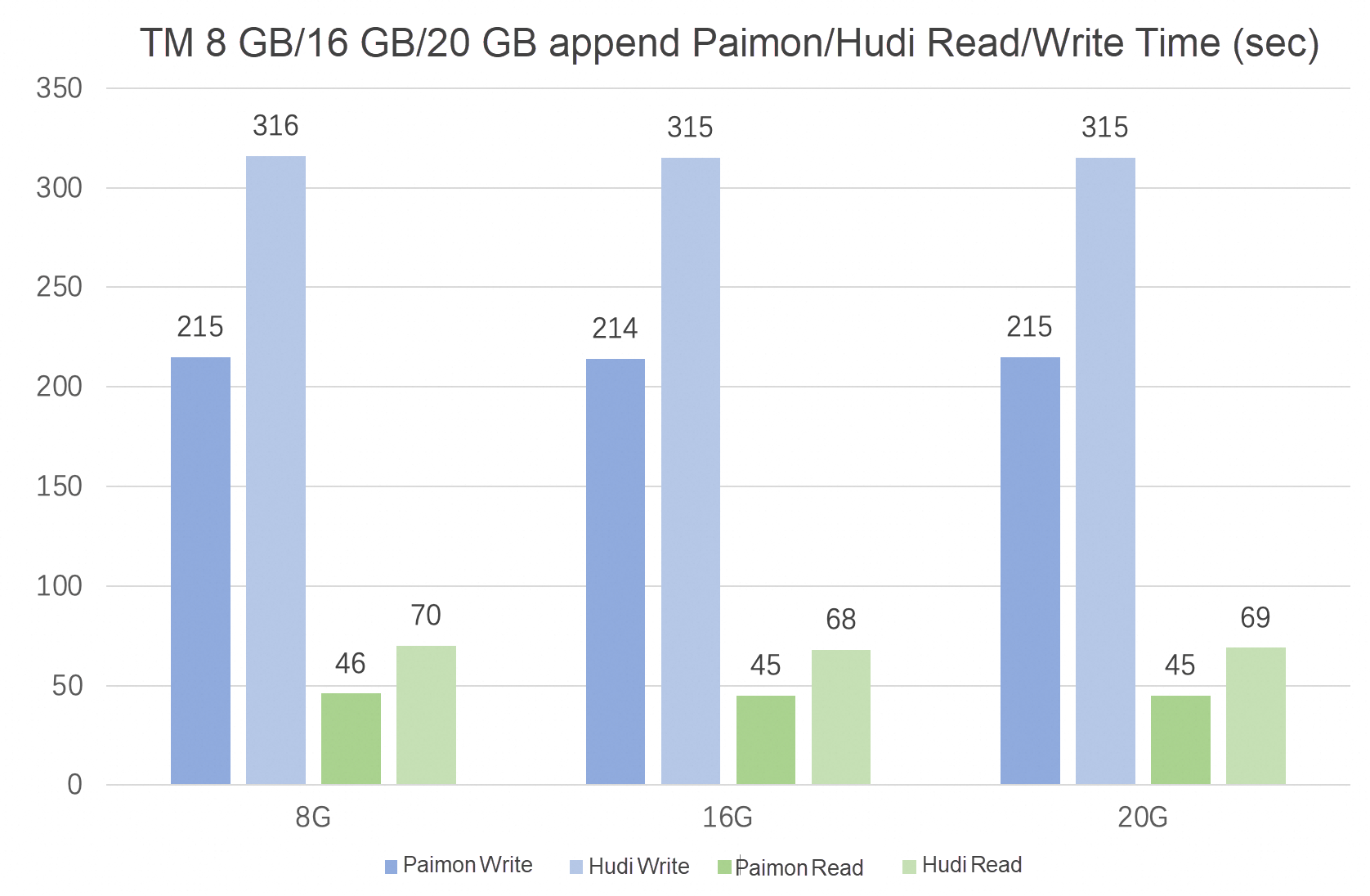

'clustering.async.enabled' = 'false'The test results are as follows:

In the append scenario, Paimon has better read and write performance than Hudi, and both of them do not require high TM memory.

After data ingestion into the lake, you can further build an integrated real-time data warehouse based on the powerful capabilities of the data lake format and streaming engine. This section uses Paimon and Hudi as unified storage to build a set of quasi-real-time data warehouses in classic e-commerce scenarios. The data warehouses have the following layers:

This layer uses Flink to write data into the lake in real time. To be closer to the production environment, Flink is started in Yarn Session mode. At the same time, due to the increase of data links, to reasonably allocate resources, the following adjustments are made to the memory and parallelism:

yarn-session.sh -Dparallelism.default=8 \

-Djobmanager.memory.process.size=2g \

-Dtaskmanager.numberOfTaskSlots=2 \

-Dtaskmanager.memory.process.size=8g \

-Dtaskmanager.memory.managed.size=1m \

-Dexecution.checkpointing.interval=2min \

-Dexecution.checkpointing.max-concurrent-checkpoints=3 \

-Dstate.backend=rocksdb \

-Dstate.backend.incremental=true \

-Dtable.exec.sink.upsert-materialize=NONE \

--detachedThe datagen table creation statement is as follows, and the rows-per-second is adjusted to 10,000.

CREATE TEMPORARY TABLE datagen_orders

(

order_name STRING

,order_user_id BIGINT

,order_shop_id BIGINT

,order_product_id BIGINT

,order_fee DECIMAL(20, 2)

,order_state INT

)

WITH (

'connector' = 'datagen'

,'rows-per-second' = '10000'

,'fields.order_user_id.kind' = 'random'

,'fields.order_user_id.min' = '1'

,'fields.order_user_id.max' = '10000'

,'fields.order_shop_id.kind' = 'random'

,'fields.order_shop_id.min' = '1'

,'fields.order_shop_id.max' = '10000'

,'fields.order_product_id.kind' = 'random'

,'fields.order_product_id.min' = '1'

,'fields.order_product_id.max' = '1000'

,'fields.order_fee.kind' = 'random'

,'fields.order_fee.min' = '0.1'

,'fields.order_fee.max' = '10.0'

,'fields.order_state.kind' = 'random'

,'fields.order_state.min' = '1'

,'fields.order_state.max' = '5'

);The following statements are used to create Paimon tables and write data:

CREATE TABLE IF NOT EXISTS paimon_catalog.order_dw.ods_orders

(

order_id STRING

,order_name STRING

,order_user_id BIGINT

,order_shop_id BIGINT

,order_product_id BIGINT

,order_fee DECIMAL(20, 2)

,order_create_time TIMESTAMP(3)

,order_update_time TIMESTAMP(3)

,order_state INT

)

WITH (

'bucket' = '-1',

'file.format' = 'parquet',

'file.compression' = 'snappy'

);

INSERT INTO paimon_catalog.order_dw.ods_orders

SELECT

UUID() AS order_id

,order_name

,order_user_id

,order_shop_id

,order_product_id

,order_fee

,NOW() AS order_create_time

,NOW() AS order_update_time

,order_state

FROM datagen_orders;The following statement is used to create Hudi tables and write data:

create TEMPORARY table ods_orders

(

order_id STRING

,order_name STRING

,order_user_id BIGINT

,order_shop_id BIGINT

,order_product_id BIGINT

,order_fee DECIMAL(20, 2)

,order_create_time TIMESTAMP(3)

,order_update_time TIMESTAMP(3)

,order_state INT

)

WITH (

'connector' = 'hudi'

,'path' = '/xxx/hudi/order_dw.db/ods_orders'

,'precombine.field' = 'order_update_time'

,'table.type' = 'COPY_ON_WRITE'

,'hoodie.database.name' = 'order_dw'

,'hoodie.table.name' = 'ods_orders'

,'hoodie.datasource.write.recordkey.field' = 'order_id'

,'metadata.enabled' = 'false'

,'write.operation' = 'insert'

,'write.tasks' = '8'

,'hoodie.parquet.compression.codec' = 'snappy'

,'write.insert.cluster' = 'false'

,'clustering.schedule.enabled' = 'false'

,'clustering.async.enabled' = 'false'

)

;

INSERT INTO ods_orders

SELECT

UUID() AS order_id

,order_name

,order_user_id

,order_shop_id

,order_product_id

,order_fee

,NOW() AS order_create_time

,NOW() AS order_update_time

,order_state

FROM datagen_orders;For Paimon tables, with their own aggregation engine capabilities, the consumption PV and total amount can be easily aggregated through simple configuration (merge-engine) to build a user-merchant aggregation intermediate table. The changelog-producer is configured as lookup because the downstream needs to read the changelog.

CREATE TABLE paimon_catalog.order_dw.dwm_shop_users

(

shop_id BIGINT

,user_id BIGINT

,ds STRING COMMENT 'hour'

,pv BIGINT COMMENT 'the user's consumption times in the shop within this hour'

,fee_sum DECIMAL(20, 2) COMMENT 'the user's total spend in the shop within this hour'

)

tblproperties (

'primary-key' = 'shop_id, user_id, ds'

,'bucket' = '8'

,'changelog-producer' = 'lookup'

,'file.format' = 'parquet'

,'file.compression' = 'snappy'

,'merge-engine' = 'aggregation'

,'fields.pv.aggregate-function' = 'sum'

,'fields.fee_sum.aggregate-function' = 'sum'

,'metadata.stats-mode' = 'none'

);The sample code of the Paimon Spark streaming job is as follows:

import org.apache.spark.sql.SparkSession

import org.apache.spark.sql.functions.{date_format, lit}

object PaimonOds2DwmJob {

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder().getOrCreate()

val sourceLocation = "/xxx/paimon/order_dw.db/ods_orders"

val targetLocation = "/xxx/paimon/order_dw.db/dwm_shop_users"

val checkpointDir = "/xxx/paimon/order_dw.db/dwm_shop_users_checkpoint"

import spark.implicits._

spark.readStream

.format("paimon")

.load(sourceLocation)

.select(

$"order_shop_id",

$"order_user_id",

date_format($"order_create_time", "yyyyMMddHH").alias("ds"),

lit(1L),

$"order_fee"

)

.writeStream

.format("paimon")

.option("checkpointLocation", checkpointDir)

.start(targetLocation)

spark.streams.awaitAnyTermination()

}

}For Hudi tables, if you want to implement similar aggregation operations, you need to use custom Payload or Merger. In this example, a custom Merger is used to aggregate the UV, PV, and fee_sum fields of records with the same key. The core logic is as follows:

public class OrdersLakeHouseMerger extends HoodieAvroRecordMerger {

@Override

public Option<Pair<HoodieRecord, Schema>> merge(HoodieRecord older, Schema oldSchema, HoodieRecord newer, Schema newSchema, TypedProperties props) throws IOException {

// ...

Object oldData = older.getData();

GenericData.Record oldRecord = (oldData instanceof HoodieRecordPayload)

? (GenericData.Record) ((HoodieRecordPayload) older.getData()).getInsertValue(oldSchema).get()

: (GenericData.Record) oldData;

Object newData = newer.getData();

GenericData.Record newRecord = (newData instanceof HoodieRecordPayload)

? (GenericData.Record) ((HoodieRecordPayload) newer.getData()).getInsertValue(newSchema).get()

: (GenericData.Record) newData;

// merge uv

if (HoodieAvroUtils.getFieldVal(newRecord, "uv") != null && HoodieAvroUtils.getFieldVal(oldRecord, "uv") != null) {

newRecord.put("uv", (Long) oldRecord.get("uv") + (Long) newRecord.get("uv"));

}

// merge pv

if (HoodieAvroUtils.getFieldVal(newRecord, "pv") != null && HoodieAvroUtils.getFieldVal(oldRecord, "pv") != null) {

newRecord.put("pv", (Long) oldRecord.get("pv") + (Long) newRecord.get("pv"));

}

// merge fee_sum

if (HoodieAvroUtils.getFieldVal(newRecord, "fee_sum") != null && HoodieAvroUtils.getFieldVal(oldRecord, "fee_sum") != null) {

BigDecimal l = new BigDecimal(new BigInteger(((GenericData.Fixed) oldRecord.get("fee_sum")).bytes()), 2);

BigDecimal r = new BigDecimal(new BigInteger(((GenericData.Fixed) newRecord.get("fee_sum")).bytes()), 2);

byte[] bytes = l.add(r).unscaledValue().toByteArray();

byte[] paddedBytes = new byte[9];

System.arraycopy(bytes, 0, paddedBytes, 9 - bytes.length, bytes.length);

newRecord.put("fee_sum", new GenericData.Fixed(((GenericData.Fixed) newRecord.get("fee_sum")).getSchema(), paddedBytes));

}

HoodieAvroIndexedRecord hoodieAvroIndexedRecord = new HoodieAvroIndexedRecord(newRecord);

return Option.of(Pair.of(hoodieAvroIndexedRecord, newSchema));

}

}The sample code of the Hudi Spark Streaming job is as follows. Configure hoodie.table.cdc.enabled as true because the downstream needs to read the changelog.

import org.apache.spark.sql.SparkSession

import org.apache.spark.sql.functions.{date_format, lit}

object Ods2DwmJob {

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder().getOrCreate()

val sourceLocation ="/xxx/hudi/order_dw.db/ods_orders"

val targetLocation = "/xxx/hudi/order_dw.db/dwm_shop_users"

val checkpointDir = "/xxx/hudi/order_dw.db/dwm_shop_users_checkpoint"

import spark.implicits._

spark.readStream

.format("hudi")

.load(sourceLocation)

.select(

$"order_shop_id".alias("shop_id"),

$"order_user_id".alias("user_id"),

date_format($"order_create_time", "yyyyMMddHH").alias("ds"),

lit(1L).alias("pv"),

$"order_fee".alias("fee_sum")

)

.writeStream

.format("hudi")

.option("hoodie.datasource.write.table.type", "COPY_ON_WRITE")

.option("hoodie.datasource.write.recordkey.field", "shop_id, user_id, ds")

.option("hoodie.datasource.write.precombine.field", "ds")

.option("hoodie.database.name", "order_dw")

.option("hoodie.table.name", "dwm_shop_users")

.option("hoodie.metadata.enable", "false")

.option("hoodie.index.type", "BUCKET")

.option("hoodie.bucket.index.num.buckets", "8")

.option("hoodie.datasource.write.operation", "upsert")

.option("hoodie.datasource.write.record.merger.impls", "org.apache.hudi.common.model.merger.OrdersLakeHouseMerger")

.option("hoodie.parquet.compression.codec", "snappy")

.option("hoodie.table.cdc.enabled", "true")

.option("hoodie.table.cdc.supplemental.logging.mode", "data_before_after")

.option("checkpointLocation", checkpointDir)

.start(targetLocation)

spark.streams.awaitAnyTermination()

}

}Finally, submit the jobs to YARN separately:

spark-submit --class Ods2DwmJob \

--master yarn \

--deploy-mode cluster \

--name PaimonOds2DwmJob \

--conf spark.driver.memory=2g \

--conf spark.driver.cores=2 \

--conf spark.executor.instances=4 \

--conf spark.executor.memory=16g \

--conf spark.executor.cores=2 \

--conf spark.yarn.submit.waitAppCompletion=false \

./paimon-spark-streaming-example.jar

spark-submit --class Ods2DwmJob \

--master yarn \

--deploy-mode cluster \

--name HudiOds2DwmJob \

--conf spark.driver.memory=2g \

--conf spark.driver.cores=2 \

--conf spark.executor.instances=4 \

--conf spark.executor.memory=16g \

--conf spark.executor.cores=2 \

--conf spark.yarn.submit.waitAppCompletion=false \

--conf spark.serializer=org.apache.spark.serializer.KryoSerializer \

--conf spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension \

--conf spark.sql.catalog.spark_catalog=org.apache.spark.sql.hudi.catalog.HoodieCatalog \

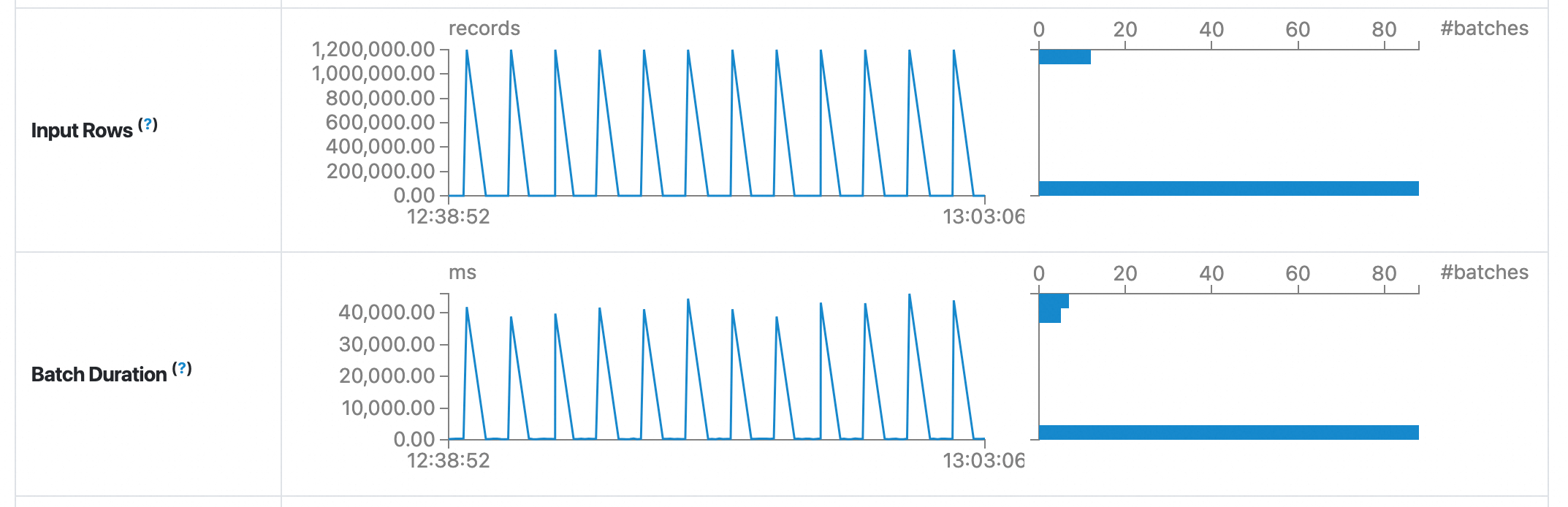

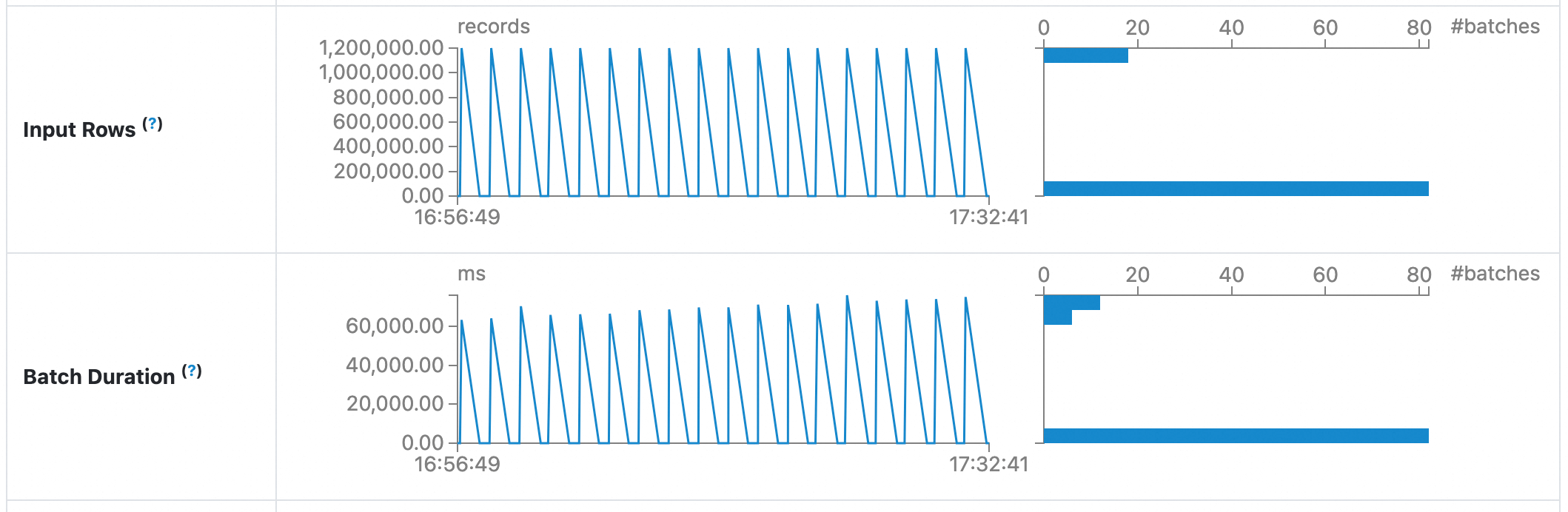

./hudi-spark-streaming-example.jarWith the preceding resources, after the job runs stably for 100 batches (about 3 hours), the UI of the streaming job is as follows:

In this case, Paimon takes about 40 seconds to write a single batch.

Hudi takes about 65 seconds to write a single batch.

The Paimon SparkSQL table creation statement is as follows. The aggregation engine is still configured to aggregate the specified fields:

CREATE TABLE paimon_catalog.order_dw.dws_users

(

user_id BIGINT

,ds STRING COMMENT 'hour'

,fee_sum DECIMAL(20, 2) COMMENT 'the user's total spend within this hour'

)

tblproperties (

'primary-key' = 'user_id, ds'

,'bucket' = '8'

,'merge-engine' = 'aggregation'

,'fields.fee_sum.aggregate-function' = 'sum'

);

CREATE TABLE paimon_catalog.order_dw.dws_shops

(

shop_id BIGINT

,ds STRING COMMENT 'hour'

,uv BIGINT COMMENT 'the total number of consumers in the shop within this hour'

,pv BIGINT COMMENT 'the total consumption times in the shop within this hour'

,fee_sum DECIMAL(20, 2) COMMENT 'the sum of consumption in the shop within this hour'

)

tblproperties (

'primary-key' = 'shop_id, ds'

,'bucket' = '8'

,'merge-engine' = 'aggregation'

,'fields.uv.aggregate-function' = 'sum'

,'fields.pv.aggregate-function' = 'sum'

,'fields.fee_sum.aggregate-function' = 'sum'

);The following Paimon Spark Streaming Dwm2DwsJob shows how to set read.changelog to true because the upstream changelog needs streaming read.

import org.apache.spark.sql.SparkSession

import org.apache.spark.sql.functions.{lit, when}

object Dwm2DwsJob {

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder().getOrCreate()

val sourceLocation = "/xxx/paimon/order_dw.db/dwm_shop_users"

val targetLocation1 = "/xxx/paimon/order_dw.db/dws_users"

val checkpointDir1 = "/xxx/paimon/order_dw.db/dws_users_checkpoint"

val targetLocation2 = "/xxx/paimon/order_dw.db/dws_shops"

val checkpointDir2 = "/xxx/paimon/order_dw.db/dws_shops_checkpoint"

import spark.implicits._

val df = spark.readStream

.format("paimon")

.option("read.changelog", "true")

.load(sourceLocation)

df.select(

$"user_id",

$"ds",

when($"_row_kind" === "+I" || $"_row_kind" === "+U", $"fee_sum")

.otherwise($"fee_sum" * -1)

.alias("fee_sum"))

.writeStream

.format("paimon")

.option("checkpointLocation", checkpointDir1)

.start(targetLocation1)

df.select(

$"shop_id",

$"ds",

when($"_row_kind" === "+I" || $"_row_kind" === "+U", lit(1L)).otherwise(lit(-1L)).alias("uv"),

when($"_row_kind" === "+I" || $"_row_kind" === "+U", $"pv").otherwise($"pv" * -1).alias("pv"),

when($"_row_kind" === "+I" || $"_row_kind" === "+U", $"fee_sum")

.otherwise($"fee_sum" * -1)

.alias("fee_sum")

.writeStream

.format("paimon")

.option("checkpointLocation", checkpointDir2)

.start(targetLocation2)

spark.streams.awaitAnyTermination()

}

}The following Hudi Spark Streaming Dwm2DwsJob allows you to reuse the Merger defined at the previous layer. Because Hudi also needs to streaming read changelog, the hoodie.datasource.query.type is configured as incremental and the hoodie.datasource.query.incremental.format is configurated as cdc. The changelog format of Hudi is different from that of Paimon, and the data processing logic is slightly different from Paimon.

import org.apache.spark.sql.SparkSession

import org.apache.spark.sql.functions.{col, get_json_object, lit, when}

import org.apache.spark.sql.types.{DecimalType, LongType}

object Dwm2DwsJob {

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder().getOrCreate()

val sourceLocation ="/xxx/hudi/order_dw.db/dwm_shop_users"

val targetLocation1 = "/xxx/hudi/order_dw.db/dws_users"

val checkpointDir1 = "/xxx/hudi/order_dw.db/dws_users_checkpoint"

val targetLocation2 = "/xxx/hudi/order_dw.db/dws_shops"

val checkpointDir2 = "/xxx/hudi/order_dw.db/dws_shops_checkpoint"

import spark.implicits._

val df = spark.readStream

.format("hudi")

.option("hoodie.datasource.query.type", "incremental")

.option("hoodie.datasource.query.incremental.format", "cdc")

.load(sourceLocation)

df.select(

get_json_object($"after", "$.user_id").cast(LongType).alias("user_id"),

get_json_object($"after", "$.ds").alias("ds"),

when(get_json_object($"before", "$.fee_sum").isNotNull, get_json_object($"after", "$.fee_sum").cast(DecimalType(20, 2)) - get_json_object($"before", "$.fee_sum").cast(DecimalType(20, 2)))

.otherwise(get_json_object($"after", "$.fee_sum").cast(DecimalType(20, 2)))

.alias("fee_sum"))

.writeStream

.format("hudi")

.option("hoodie.datasource.write.table.type", "COPY_ON_WRITE")

.option("hoodie.datasource.write.recordkey.field", "user_id, ds")

.option("hoodie.datasource.write.precombine.field", "ds")

.option("hoodie.database.name", "order_dw")

.option("hoodie.table.name", "dws_users")

.option("hoodie.metadata.enable", "false")

.option("hoodie.index.type", "BUCKET")

.option("hoodie.bucket.index.num.buckets", "8")

.option("hoodie.datasource.write.operation", "upsert")

.option("hoodie.datasource.write.record.merger.impls", "org.apache.hudi.common.model.merger.OrdersLakeHouseMerger")

.option("hoodie.parquet.compression.codec", "snappy")

.option("checkpointLocation", checkpointDir1)

.start(targetLocation1)

df.select(

get_json_object($"after", "$.shop_id").cast(LongType).alias("shop_id"),

get_json_object($"after", "$.ds").alias("ds"),

when(get_json_object($"before", "$.fee_sum").isNotNull, lit(0L)).otherwise(lit(1L)).alias("uv"),

when(get_json_object($"before", "$.fee_sum").isNotNull, get_json_object($"after", "$.pv").cast(LongType) - get_json_object($"before", "$.pv").cast(LongType))

.otherwise(get_json_object($"after", "$.pv").cast(LongType))

.alias("pv"),

when(get_json_object($"before", "$.fee_sum").isNotNull, get_json_object($"after", "$.fee_sum").cast(DecimalType(20, 2)) - get_json_object($"before", "$.fee_sum").cast(DecimalType(20, 2)))

.otherwise(get_json_object($"after", "$.fee_sum").cast(DecimalType(20, 2)))

.alias("fee_sum"))

.writeStream

.format("hudi")

.option("hoodie.datasource.write.table.type", "COPY_ON_WRITE")

.option("hoodie.datasource.write.recordkey.field", "shop_id, ds")

.option("hoodie.datasource.write.precombine.field", "ds")

.option("hoodie.database.name", "order_dw")

.option("hoodie.table.name", "dws_shops")

.option("hoodie.metadata.enable", "false")

.option("hoodie.index.type", "BUCKET")

.option("hoodie.bucket.index.num.buckets", "8")

.option("hoodie.datasource.write.operation", "upsert")

.option("hoodie.datasource.write.record.merger.impls", "org.apache.hudi.common.model.merger.OrdersLakeHouseMerger")

.option("hoodie.parquet.compression.codec", "snappy")

.option("checkpointLocation", checkpointDir2)

.start(targetLocation2)

spark.streams.awaitAnyTermination()

}

}Finally, submit the jobs to YARN separately:

spark-submit --class Dwm2DwsJob \

--master yarn \

--deploy-mode cluster \

--name PaimonDwm2DwsJob \

--conf spark.driver.memory=2g \

--conf spark.driver.cores=2 \

--conf spark.executor.instances=4 \

--conf spark.executor.memory=8g \

--conf spark.executor.cores=2 \

--conf spark.yarn.submit.waitAppCompletion=false \

./paimon-spark-streaming-example.jar

spark-submit --class Dwm2DwsJob \

--master yarn \

--deploy-mode cluster \

--name HudiDwm2DwsJob \

--conf spark.driver.memory=2g \

--conf spark.driver.cores=2 \

--conf spark.executor.instances=4 \

--conf spark.executor.memory=8g \

--conf spark.executor.cores=2 \

--conf spark.yarn.submit.waitAppCompletion=false \

--conf spark.serializer=org.apache.spark.serializer.KryoSerializer \

--conf spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension \

--conf spark.sql.catalog.spark_catalog=org.apache.spark.sql.hudi.catalog.HoodieCatalog \

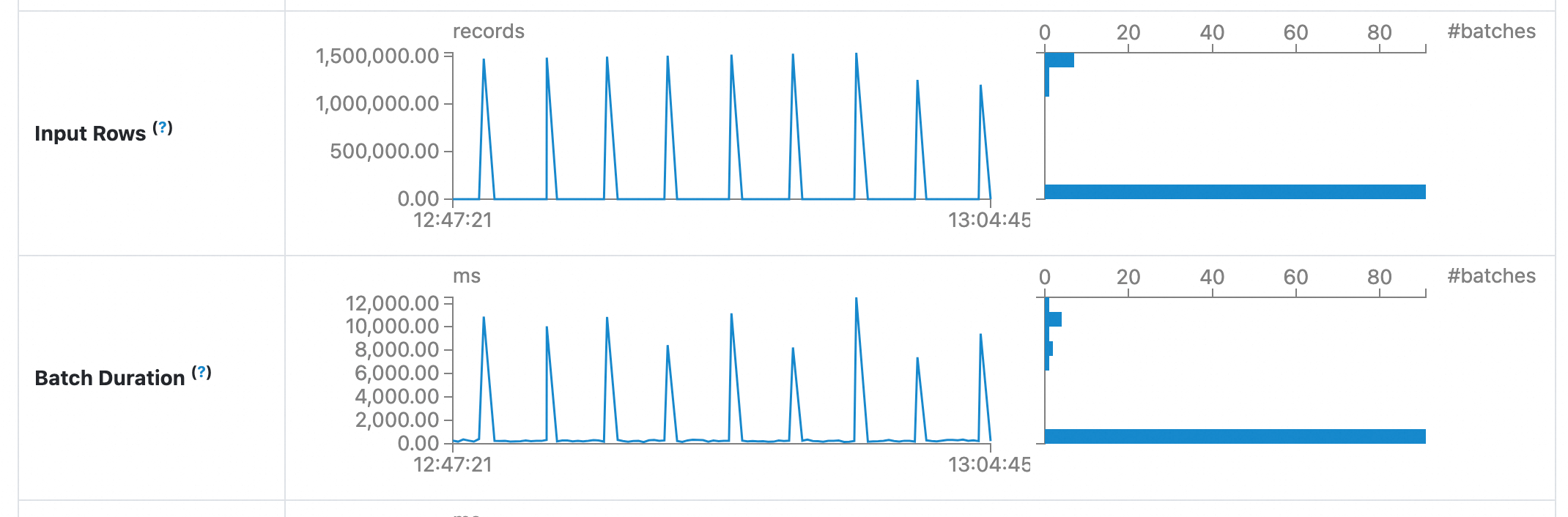

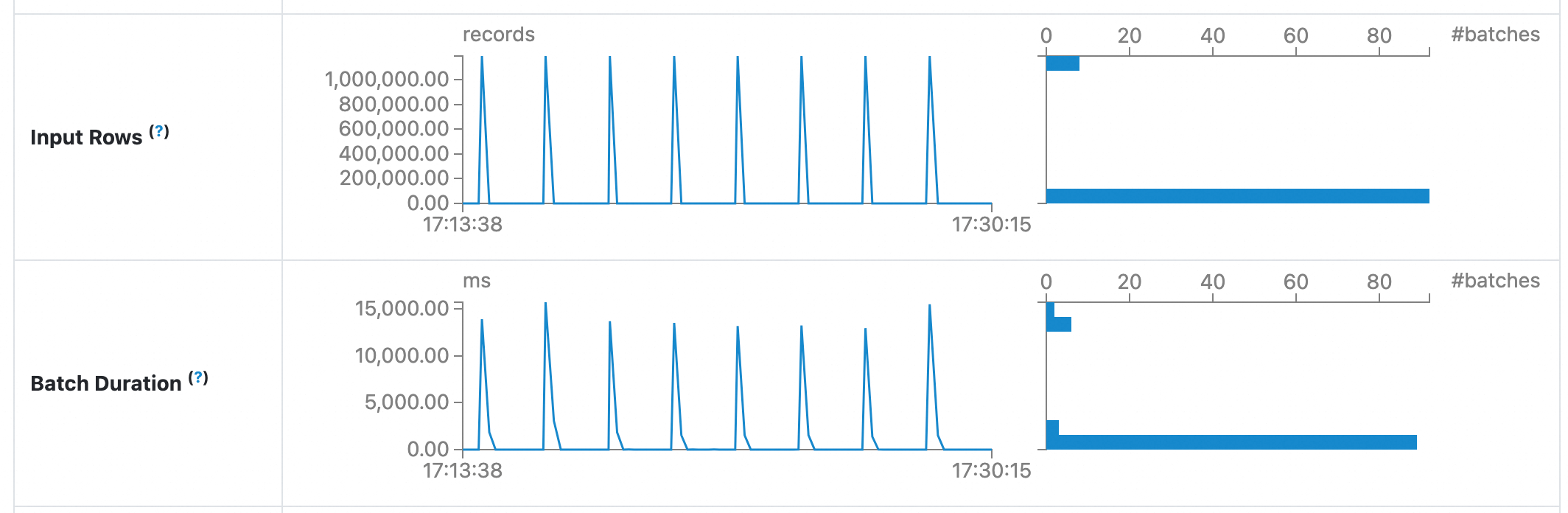

./hudi-spark-streaming-example.jarWith the preceding resources, after the job runs stably for 100 batches (about 3 hours), the UI of the streaming job (for example, the dws_shops table) is as follows:

In this case, Paimon takes about 10 seconds to write a single batch.

Hudi takes about 13 seconds to write a single batch.

In this scenario, you can use the following two SQL queries to query dwm_shop_users tables at the DWM layer as upstream tables in other business scenarios, or query data at the DWS layer directly for application analysis or report display:

-- SparkSQL queries ods_orders.

select order_id, order_user_id, order_shop_id, order_fee, order_create_time

from order_dw.ods_orders

order by order_create_time desc limit 10;

-- SparkSQL queries dws_shops.

select shop_id, ds, uv, pv, fee_sum

from order_dw.dws_shops

where ds = '2023120100' order by ds, shop_id limit 10;Paimon and Hudi have built real-time ETL connections that can process 40 million records per hour (10 GB after compression), satisfying the needs of minute-level production scenarios.

206 posts | 54 followers

FollowApache Flink Community - April 30, 2024

Apache Flink Community - May 10, 2024

Apache Flink Community - July 5, 2024

Apache Flink Community - June 11, 2024

Apache Flink Community - July 18, 2024

Apache Flink Community - September 30, 2025

206 posts | 54 followers

Follow Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn MoreMore Posts by Apache Flink Community