This article is compiled from a talk given by Chen Yuzhao (Yuzhao), Alibaba Technical Expert, and Liu Dalong (Fengli), Alibaba Development Engineer, at Flink Forward Asia 2021. The main contents include:

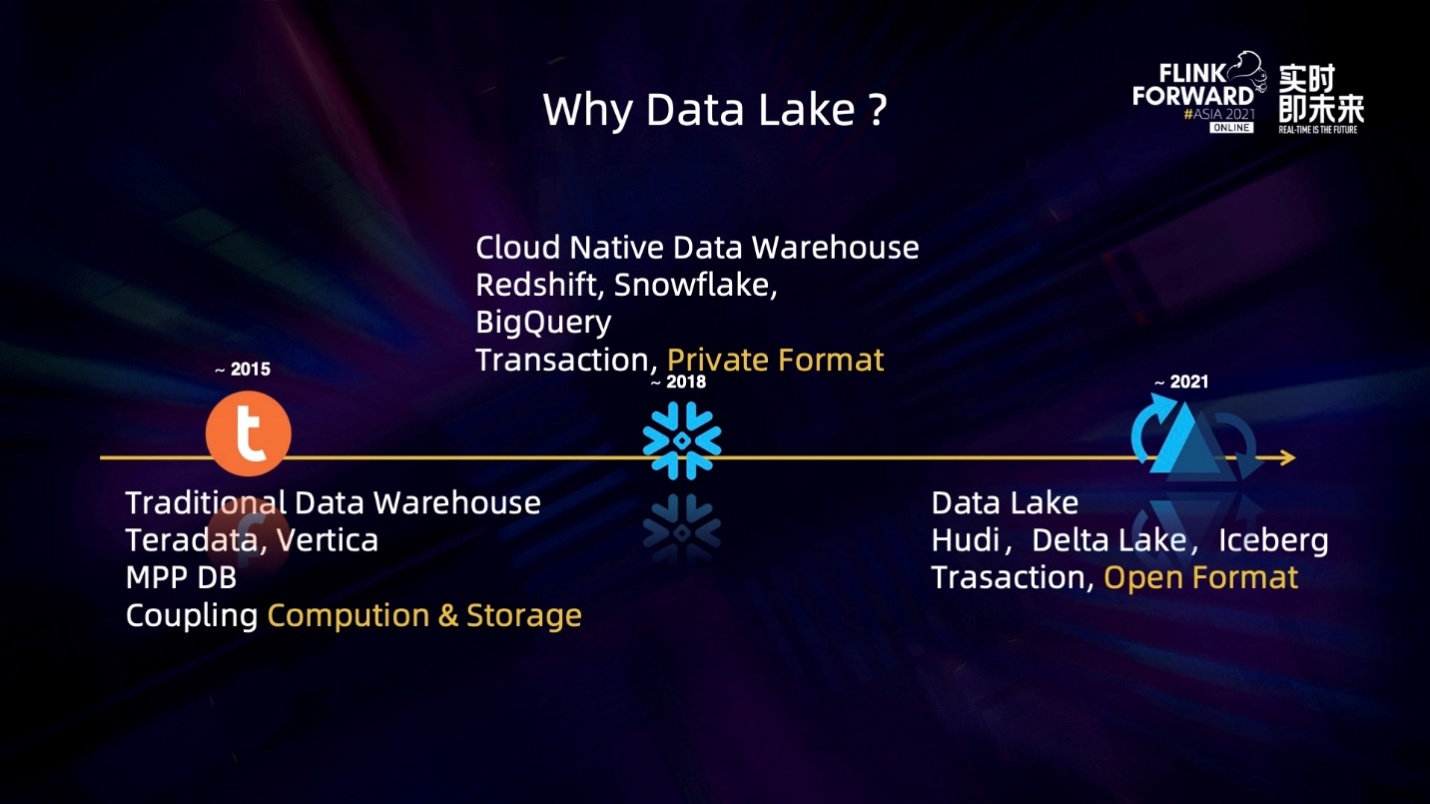

You may not be familiar with data lakes. What is a data lake, and why have data lakes been a hot topic over the past two years? Data lakes are not a new concept. The idea was proposed in the 1980s. At that time, a data lake was defined as a raw data layer that can store structured, semi-structured, and unstructured data. In many scenarios, such as machine learning and real-time analysis, the data schema is determined only at query time.

Data lake storage is low in cost and highly flexible, which makes it well suited to centralized storage for query scenarios. With the rise of cloud services in recent years, and especially the maturing of object storage services, more enterprises choose to build their storage on the cloud. The data lake's separation of storage and compute fits the current cloud service architecture well. Data lake storage provides basic ACID transactions through snapshot isolation and integrates with various analysis engines to serve different query scenarios. It has a clear advantage in cost and openness.

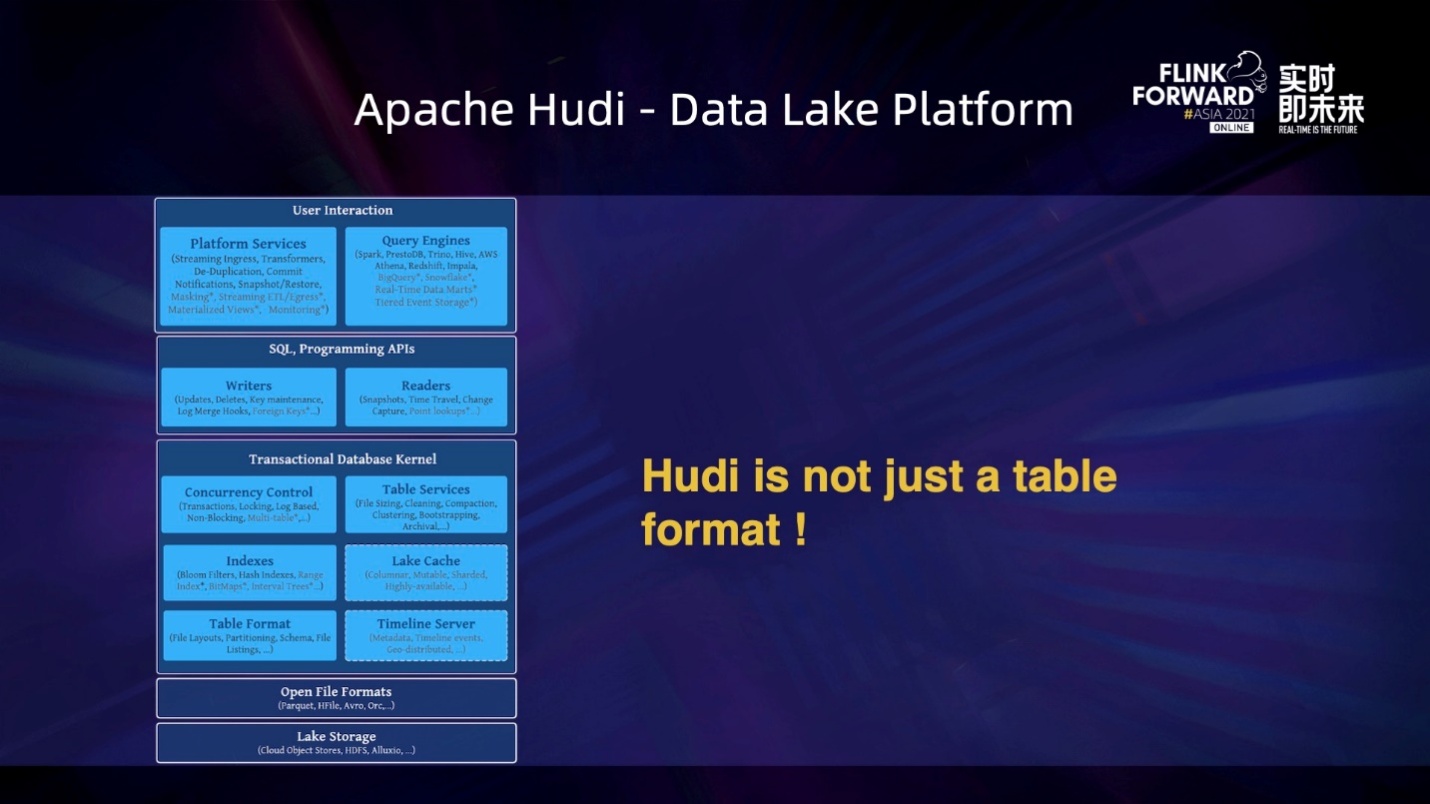

Data lake storage has begun to take on the functions of a data warehouse and, by connecting with compute engines, implements an integrated lakehouse architecture. Data lake storage is a table format: it encapsulates high-level table semantics on top of the raw data format. Hudi has put data lakes into practice since 2016. At that time, its goal was to solve the problem of updating data on file systems in big data scenarios. Hudi's LSM-like table format is currently unique among lake formats. It is friendly to near real-time updates and has relatively complete semantics.

The table format is the basic attribute shared by the three currently popular data lake formats. Hudi has been evolving toward a platform since the beginning of the project and offers relatively complete data governance and table services. For example, users can optimize the file layout concurrently with writing, and the metadata table, which is maintained during writes, makes file lookup on the query side more efficient.

The following introduces the basic concepts of Hudi:

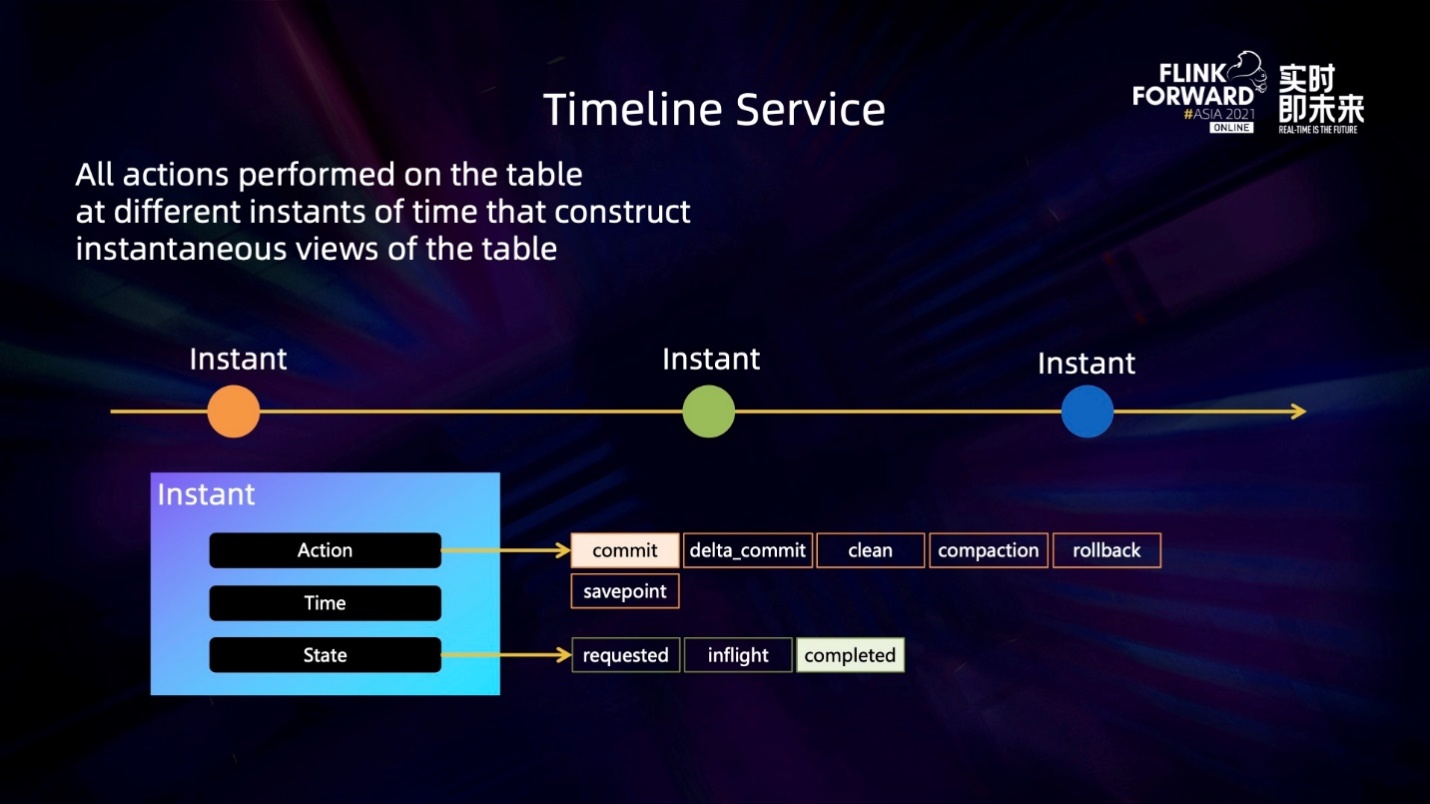

The timeline service is the core abstraction of the Hudi transaction layer. All data operations in Hudi are carried out around the timeline service. Each operation is bound to a specific timestamp through the instant abstraction, and the timeline service is composed of a series of instants. Each instant records the corresponding action and state, so Hudi can determine the status of the current table operations from the timeline service. By combining a set of file system views with the timeline service, Hudi can expose the file layout as of a specific timestamp to the current readers and writers of the table.
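The idea can be made concrete with a small model. The following is a minimal sketch, not Hudi's actual API: the class names `Instant` and `Timeline`, the method names, and the sample timestamps are all assumptions made for illustration. It shows how a list of instants, each carrying a timestamp, an action, and a state, is enough to answer "what is visible as of time T" and "what is still in progress".

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    REQUESTED = "requested"
    INFLIGHT = "inflight"
    COMPLETED = "completed"


@dataclass(frozen=True)
class Instant:
    timestamp: str  # fixed-width, so string order equals time order
    action: str     # e.g. "commit", "deltacommit", "compaction", "clean"
    state: State


class Timeline:
    """Hypothetical, simplified timeline: an ordered series of instants."""

    def __init__(self, instants):
        self.instants = sorted(instants, key=lambda i: i.timestamp)

    def completed_as_of(self, timestamp):
        """Instants that a reader pinned at `timestamp` is allowed to see."""
        return [i for i in self.instants
                if i.state is State.COMPLETED and i.timestamp <= timestamp]

    def pending(self):
        """Operations that are requested or still in flight."""
        return [i for i in self.instants if i.state is not State.COMPLETED]


# Sample data (made up for this sketch).
timeline = Timeline([
    Instant("20211201100500", "deltacommit", State.INFLIGHT),
    Instant("20211201100000", "commit", State.COMPLETED),
    Instant("20211201100300", "compaction", State.REQUESTED),
    Instant("20211201100100", "deltacommit", State.COMPLETED),
])
visible = [i.timestamp for i in timeline.completed_as_of("20211201100400")]
pending_actions = [i.action for i in timeline.pending()]
```

A reader pinned at `20211201100400` sees only the two completed instants, while the requested compaction and the in-flight delta commit stay invisible until they complete.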

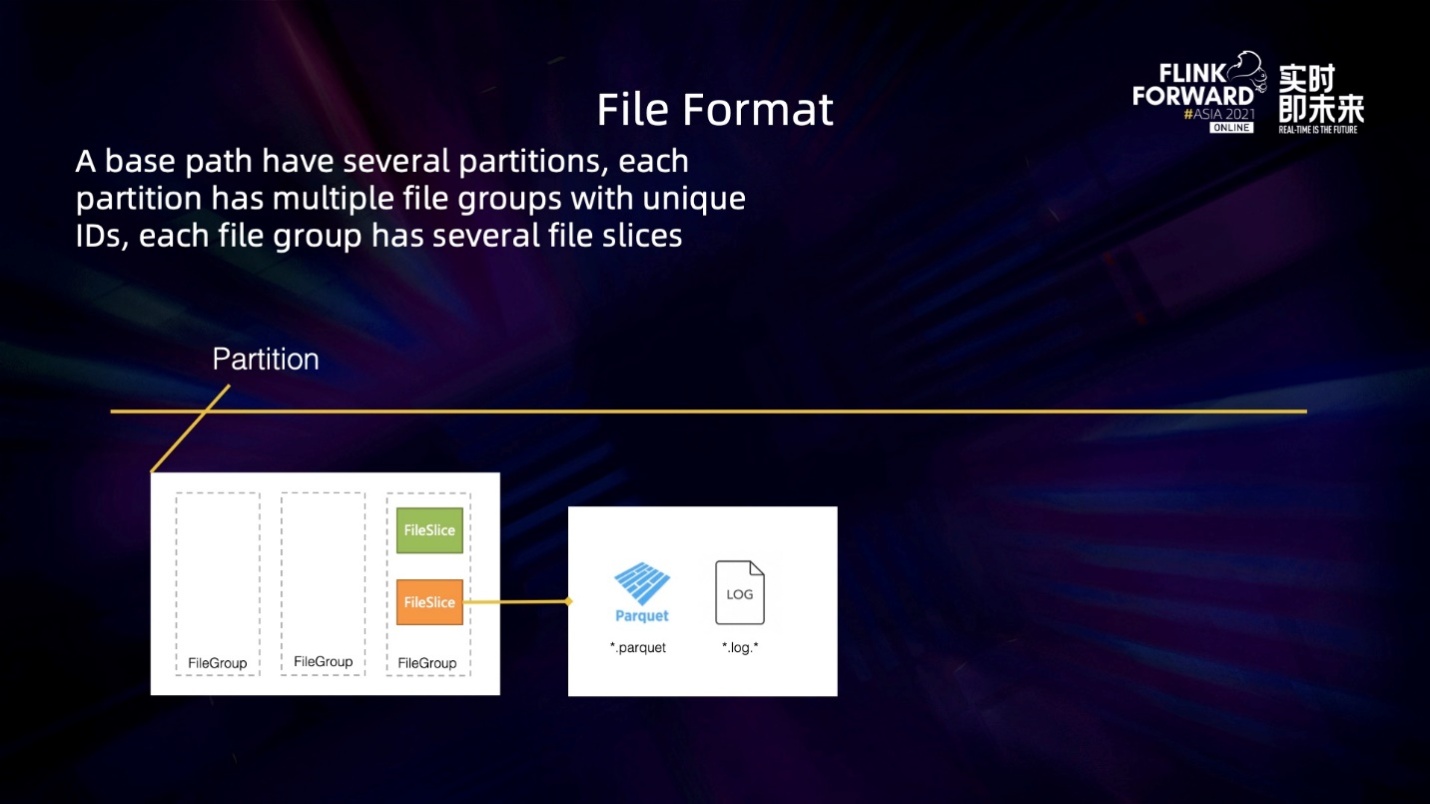

A file group is Hudi's core abstraction in the file layout layer. Each file group is equivalent to a bucket, and buckets are divided by file size. Each write to a file group generates a new version. A version is abstracted as a file slice, which maintains the data files of that version. When a file group reaches the configured file size, subsequent writes switch to a new file group.
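As a rough illustration of the relationship between file groups and file slices, here is a minimal sketch. It is a toy model, not Hudi code: `FileSlice`, `FileGroup`, `Partition`, the `fg-N` ids, and the sizes are hypothetical. Each write adds a new slice (version) to the current file group, and once the latest slice has reached the size limit, the next write opens a new file group.

```python
from dataclasses import dataclass, field


@dataclass
class FileSlice:
    instant_time: str  # timestamp of the write that produced this version
    size: int          # size of the version's data, in arbitrary units


@dataclass
class FileGroup:
    file_id: str
    slices: list = field(default_factory=list)

    def latest_slice(self, as_of=None):
        """Newest slice, optionally limited to versions written at or before `as_of`."""
        candidates = [s for s in self.slices
                      if as_of is None or s.instant_time <= as_of]
        return max(candidates, key=lambda s: s.instant_time, default=None)


class Partition:
    """Hypothetical container that rolls over to a new file group when one is full."""

    def __init__(self, max_file_size):
        self.max_file_size = max_file_size
        self.groups = [FileGroup("fg-0")]

    def write(self, instant_time, added):
        group = self.groups[-1]
        latest = group.latest_slice()
        current = latest.size if latest else 0
        if current >= self.max_file_size:
            # The current group has reached the limit: switch to a new one.
            group = FileGroup(f"fg-{len(self.groups)}")
            self.groups.append(group)
            current = 0
        group.slices.append(FileSlice(instant_time, current + added))
        return group.file_id


partition = Partition(max_file_size=120)
targets = [partition.write("001", 80),
           partition.write("002", 50),
           partition.write("003", 10)]
first_group = partition.groups[0]
```

The first two writes produce two versions of `fg-0`, and a reader pinned at instant `001` still resolves to the older slice. The third write lands in a new file group because `fg-0` is already past the limit.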

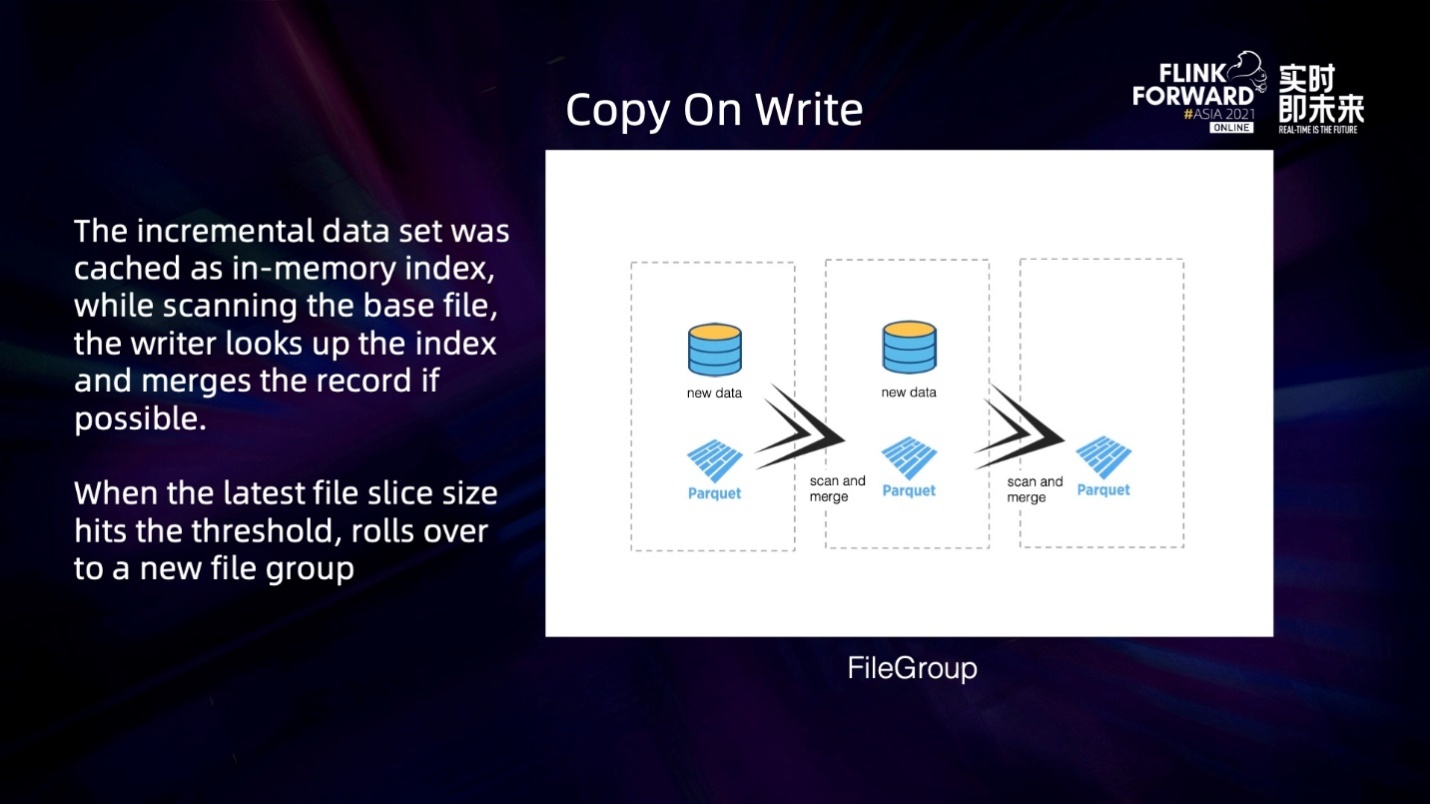

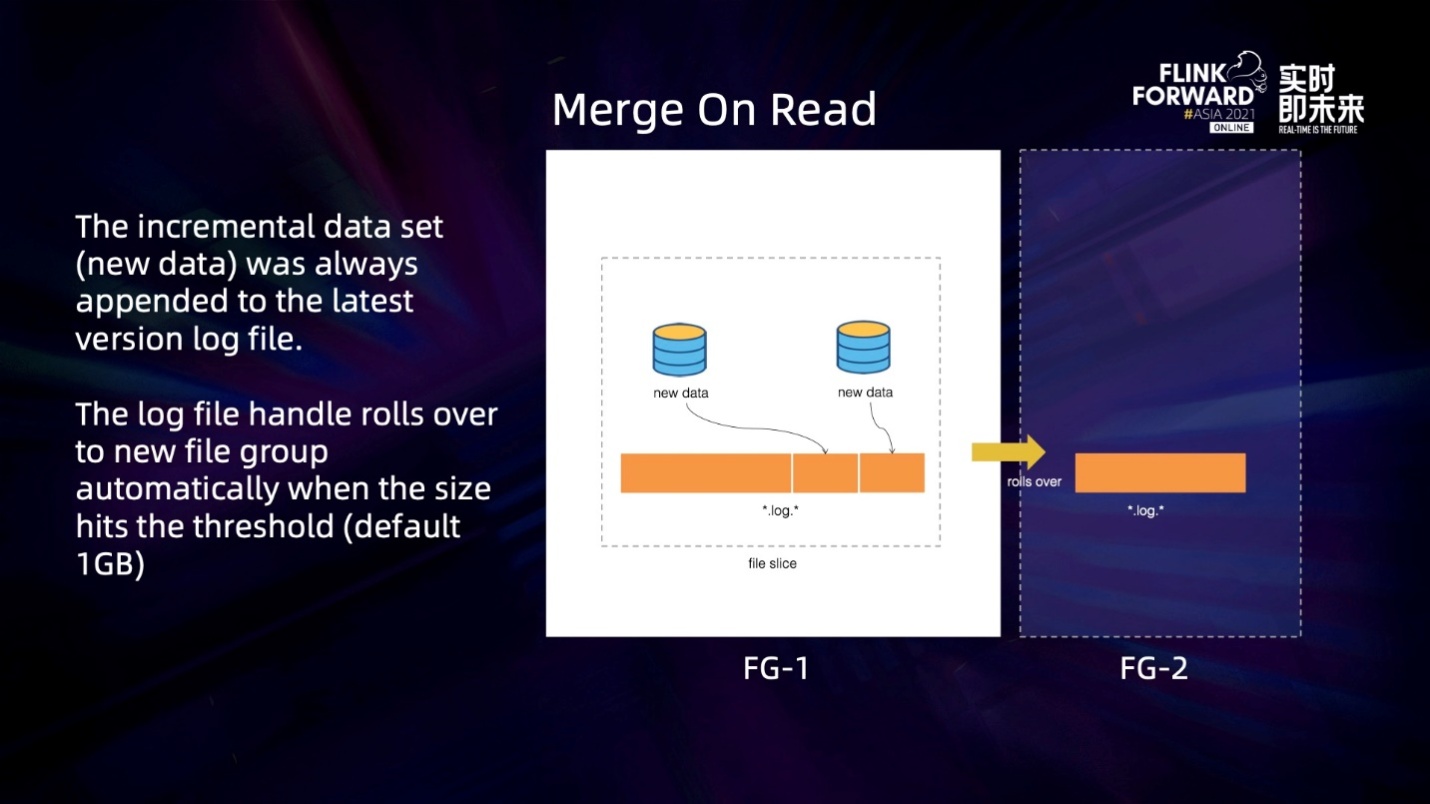

Hudi's writing behavior in file slices can be abstracted into two semantics: copy on write and merge on read.

Copy on write writes the full data each time. The new data is merged with the data of the previous file slice, and the result is written out as a new file slice, which produces a new bucket file.

Merge on read is more complicated. Its semantics are append-only writes: only incremental data is written each time, so a write does not normally create a new file slice. Instead, the writer tries to append to the previous file slice, and a new file slice is cut only after the written file slice has been included in a compaction plan.
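The difference between the two semantics can be sketched with plain dictionaries standing in for files. This is a simplified model under stated assumptions: records are key/value pairs, the names `cow_write` and `MorFileGroup` are hypothetical, and real Hudi stores base files and log files in columnar and row-oriented formats rather than in memory.

```python
def cow_write(base, updates):
    """Copy on write: merge with the previous slice and emit a full new base file."""
    merged = dict(base)
    merged.update(updates)
    return merged


class MorFileGroup:
    """Hypothetical merge-on-read file group: one base file plus appended logs."""

    def __init__(self, base):
        self.base = dict(base)  # base file of the current file slice
        self.logs = []          # incremental log blocks appended to that slice

    def write(self, updates):
        """Append only the incremental data; the base file is not rewritten."""
        self.logs.append(dict(updates))

    def read(self):
        """Readers merge the base file and the logs on the fly."""
        merged = dict(self.base)
        for block in self.logs:
            merged.update(block)
        return merged

    def compact(self):
        """Compaction folds the logs into a new base file, starting a new slice."""
        self.base = self.read()
        self.logs = []


base = {"k1": "a", "k2": "b"}

# Copy on write: every write produces a complete new version.
cow_result = cow_write(base, {"k2": "B", "k3": "c"})

# Merge on read: writes are cheap appends, merging is deferred.
mor = MorFileGroup(base)
mor.write({"k2": "B"})
mor.write({"k3": "c"})
logs_before_compaction = len(mor.logs)
mor_view = mor.read()
mor.compact()
```

Both paths end with the same logical data. Copy on write pays the merge cost on every write, while merge on read pays it at read time until compaction rewrites the base file.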

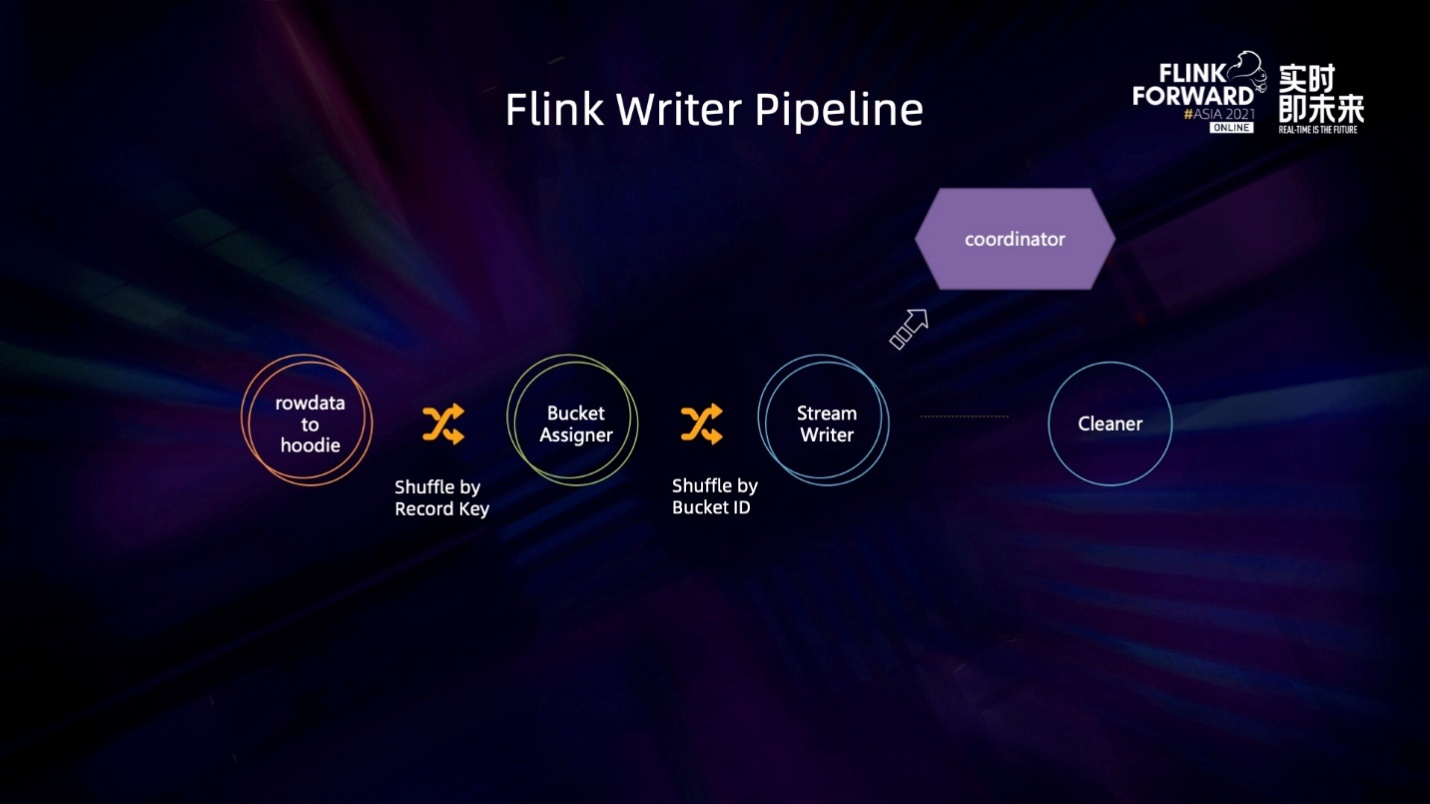

The write pipeline of Flink Hudi consists of several operators. The first operator converts the table-layer RowData into Hudi's message format, HoodieRecord. The second operator, the Bucket Assigner, assigns each converted HoodieRecord to a specific file group. Records tagged with a file group then flow into the Writer operator, which performs the actual file writes. Finally, there is a coordinator, which schedules the table services at the Hudi table layer and initiates and commits new transactions. In addition, some background cleaning roles are responsible for cleaning up old versions of the data.

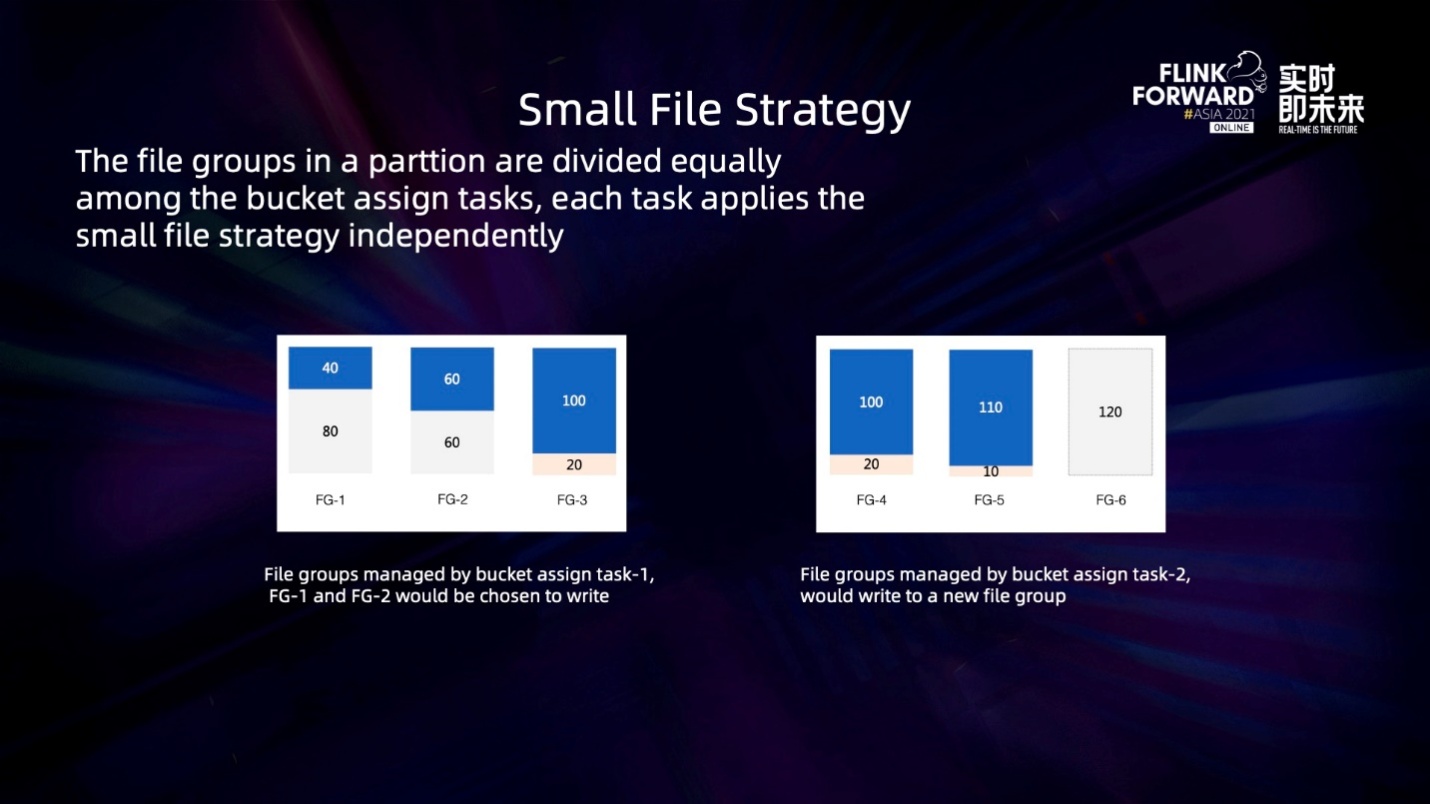

In the current design, each bucket assign task holds a bucket assigner, which independently maintains its own set of file groups. When new data or non-update insert data is written, the bucket assign task scans the file view and preferentially writes the batch to file groups that are identified as small buckets.

For example, in the figure above the default size of a file group is 120 MB. Task 1 in the left figure writes to file group 1 and file group 2 first. Note that file group 3 is not written here because it already holds 100 MB of data. Skipping buckets that are close to the target threshold avoids excessive write amplification. Task 2 in the right figure, however, writes directly to a new file group and does not append to the larger file groups that have already been written.
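The selection rule in this example can be sketched as follows. This is an illustration only: the function names, the 100 MB small-file limit, and the sizes of every file group except file group 3 are assumptions, and picking the smallest candidate first is one possible policy rather than Hudi's confirmed behavior.

```python
MB = 1024 * 1024


def small_file_groups(file_groups, small_file_limit):
    """Ids of file groups still considered small, smallest first.

    file_groups maps a file group id to its current size in bytes and
    contains only the groups owned by one bucket assign task.
    """
    small = [fid for fid, size in file_groups.items() if size < small_file_limit]
    return sorted(small, key=lambda fid: file_groups[fid])


def assign(file_groups, small_file_limit, new_group_id):
    """Prefer a small file group; otherwise open a new one."""
    candidates = small_file_groups(file_groups, small_file_limit)
    return candidates[0] if candidates else new_group_id


# Hypothetical sizes; only fg3 = 100 MB comes from the example above.
task1 = {"fg1": 30 * MB, "fg2": 60 * MB, "fg3": 100 * MB}
task2 = {"fg4": 110 * MB, "fg5": 105 * MB}
limit = 100 * MB  # assumed small-file limit, below the 120 MB target size

task1_candidates = small_file_groups(task1, limit)
task2_target = assign(task2, limit, "fg6")
```

With these assumed numbers, task 1 fills `fg1` and `fg2` and leaves `fg3` alone, while task 2 has no small file group left and opens `fg6`.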

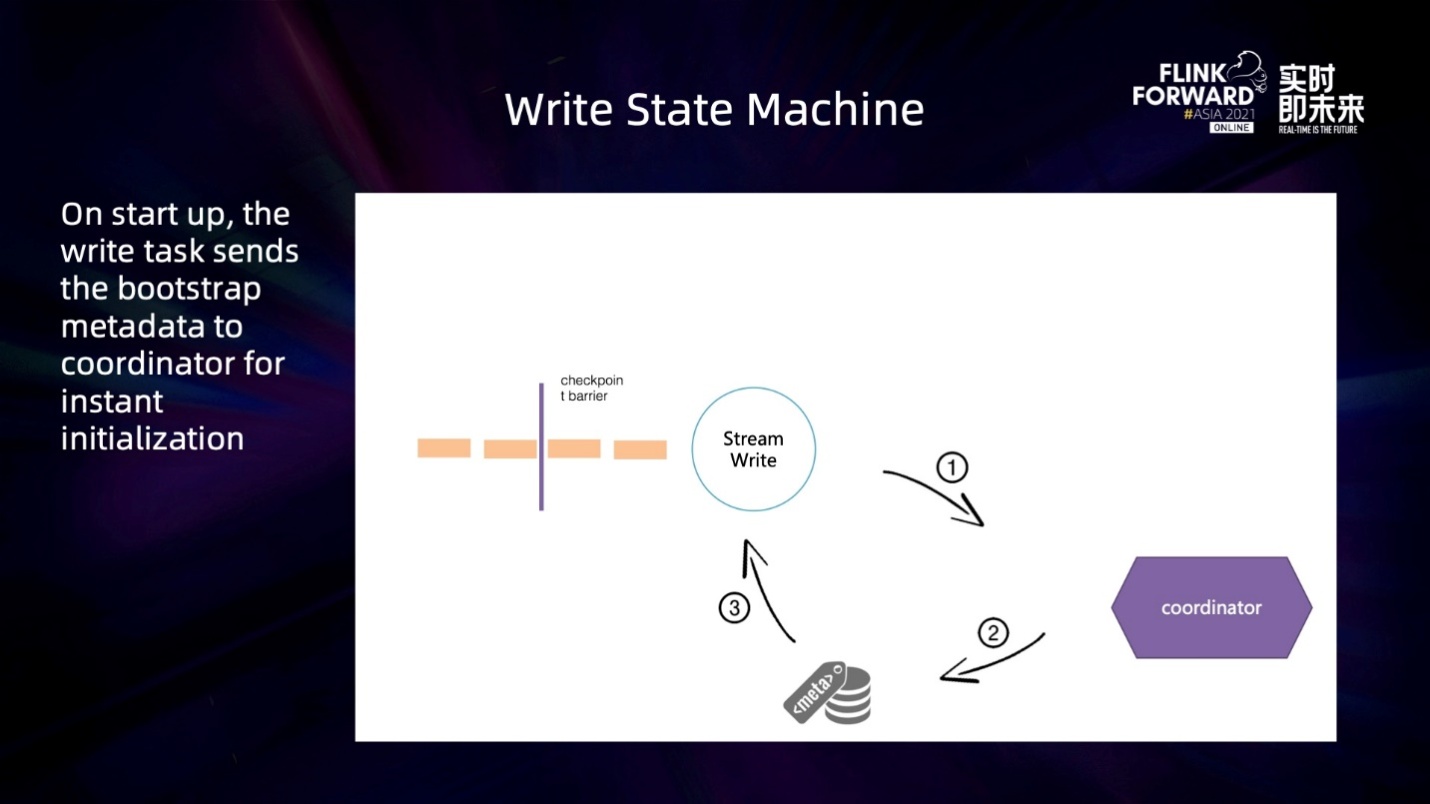

The following section describes the state switching mechanism of the Flink Hudi write process. When the job starts, the coordinator first tries to create the table on the file system. If the table does not exist, the coordinator writes some meta information to the file directory, which amounts to building the table. After receiving the initialization metadata of all tasks, the coordinator opens a new transaction. Once a write task sees that the transaction has been initiated, it unlocks flushing of its current data.

A write task first accumulates a batch of data. There are two flush strategies. In the first, the data in memory is flushed when the current data buffer reaches the specified size. In the second, when the upstream checkpoint barrier arrives and a snapshot must be taken, the task flushes all the data in memory to disk. After each flush, the meta information is sent to the coordinator. After the coordinator receives the checkpoint success event, it commits the corresponding transaction and initiates the next one. Once the write task sees the new transaction, it unlocks writing for that transaction. In this way, the entire write process is strung together.
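The interplay between the two flush strategies and the coordinator can be sketched as a small simulation. This is a hedged model, not the Flink Hudi implementation: `Coordinator`, `WriteTask`, and all method names are hypothetical, the buffer limit is expressed as a record count instead of a byte size, and checkpoint barriers are simulated with plain method calls.

```python
class Coordinator:
    """Hypothetical coordinator: opens and commits one transaction at a time."""

    def __init__(self):
        self.instant = 0     # id of the currently open transaction
        self.pending = []    # write metadata reported for that transaction
        self.committed = {}  # instant id -> metadata committed with it

    def start_instant(self):
        self.instant += 1
        self.pending = []
        return self.instant

    def on_write_metadata(self, meta):
        self.pending.append(meta)

    def on_checkpoint_complete(self):
        # Commit everything reported for the open transaction, then open the next.
        self.committed[self.instant] = self.pending
        return self.start_instant()


class WriteTask:
    """Hypothetical write task that buffers records and flushes in two cases."""

    def __init__(self, coordinator, max_buffer_records):
        self.coordinator = coordinator
        self.max_buffer_records = max_buffer_records
        self.buffer = []

    def process(self, record):
        self.buffer.append(record)
        if len(self.buffer) >= self.max_buffer_records:
            self.flush()  # strategy 1: the buffer reached its limit

    def on_checkpoint_barrier(self):
        self.flush()      # strategy 2: flush everything before the snapshot

    def flush(self):
        if self.buffer:
            # A real writer would write files here; the sketch only reports metadata.
            self.coordinator.on_write_metadata({"records": len(self.buffer)})
            self.buffer = []


coordinator = Coordinator()
first_instant = coordinator.start_instant()
task = WriteTask(coordinator, max_buffer_records=3)

for record in ["r1", "r2", "r3", "r4", "r5"]:
    task.process(record)      # the third record triggers a size-based flush
task.on_checkpoint_barrier()  # the remaining two records are flushed here
next_instant = coordinator.on_checkpoint_complete()
```

The first transaction is committed with two pieces of write metadata, one from each flush strategy, and the coordinator then opens the second transaction with an empty pending list.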

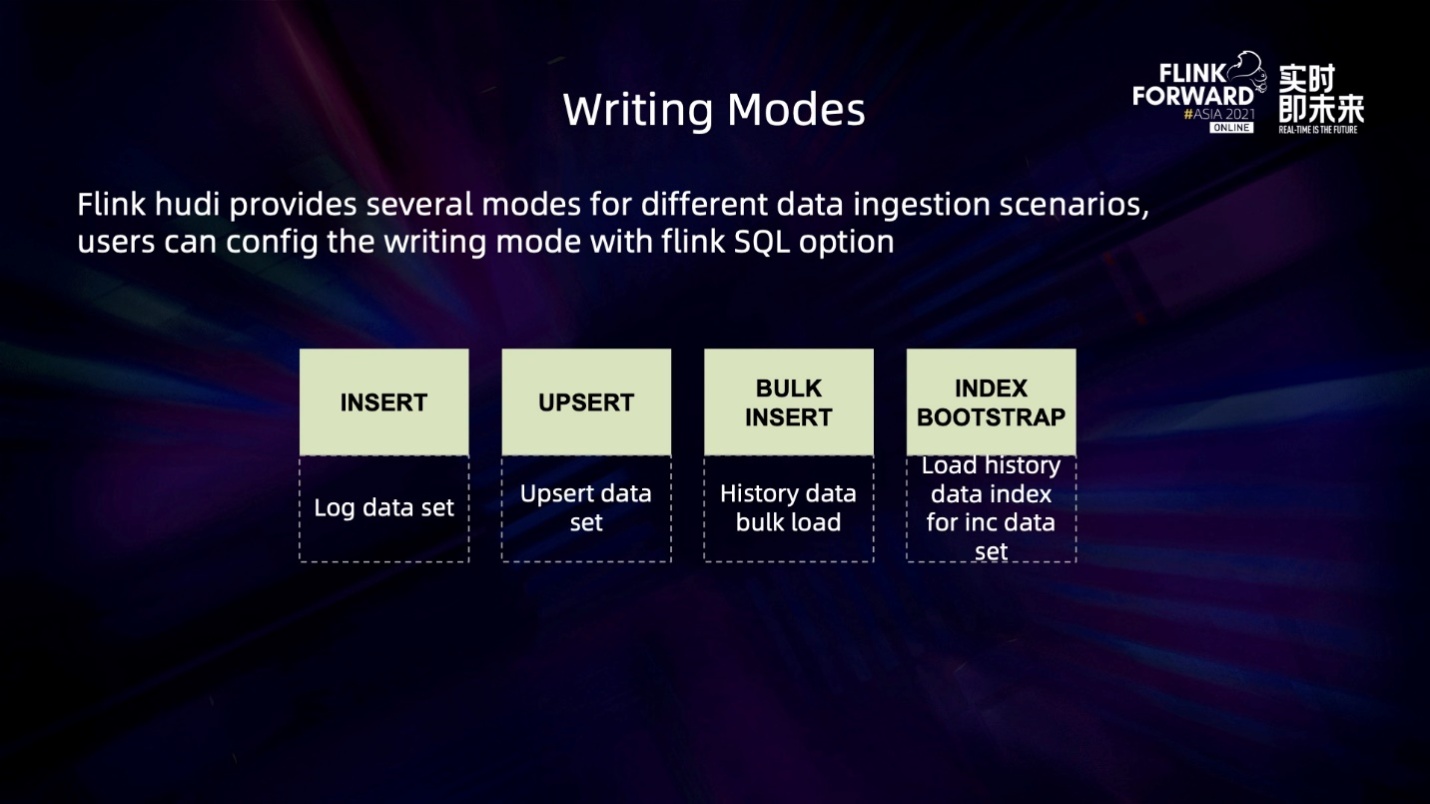

Flink Hudi supports a wide range of write scenarios. Currently, it can write log data and other non-update data while merging small files. In addition, Hudi supports the core write scenarios, such as update streams and CDC data. Flink Hudi also supports efficient batch import of historical data. In bulk insert mode, offline data in Hive or in a database can be efficiently imported into the Hudi format through a batch query. Flink Hudi also provides index loading for both full and incremental data. Users can import the batch data into the lake format in one pass and then attach a streaming write job to achieve combined full and incremental data import.

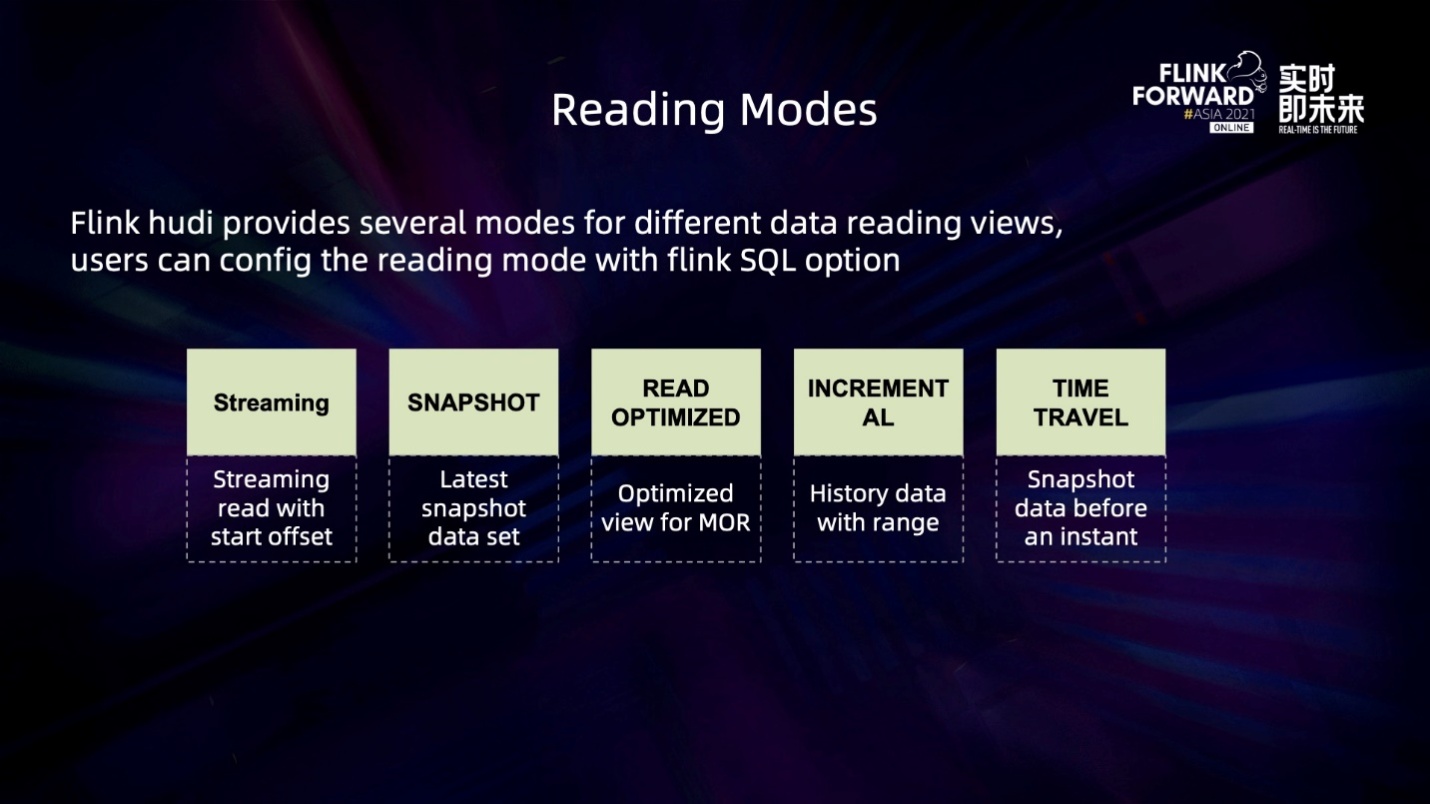

The Flink Hudi read side also supports a wide range of query views. Currently, it mainly supports full read, incremental read by historical time range, and streaming read.

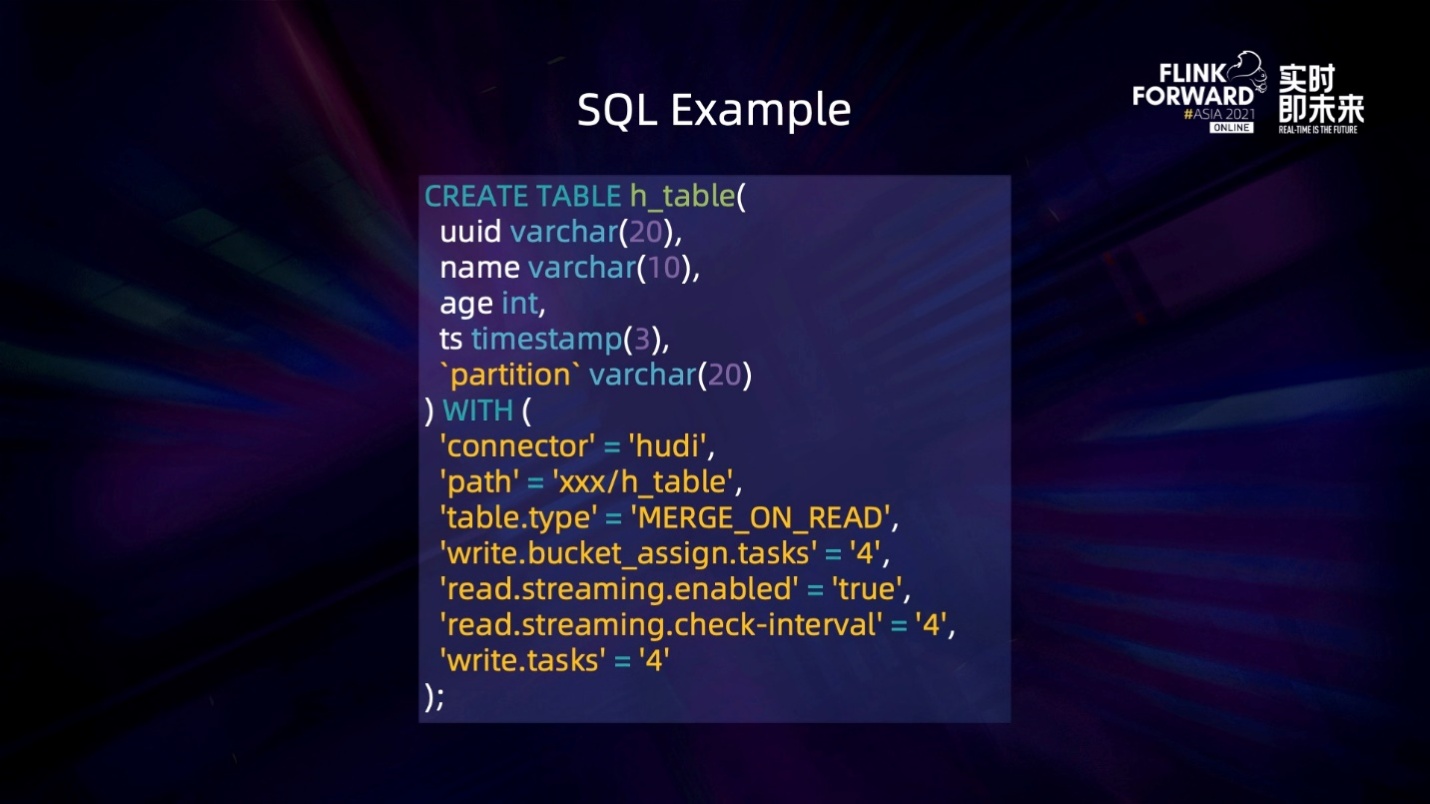

The preceding figure shows an example of using Flink SQL with Hudi. Hudi supports a wide range of use cases and keeps the number of parameters that users need to configure small. Users can write upstream data to the Hudi format by configuring only the table path, the parallelism, and the operation type.

The following describes the classic application scenarios of Flink Hudi:

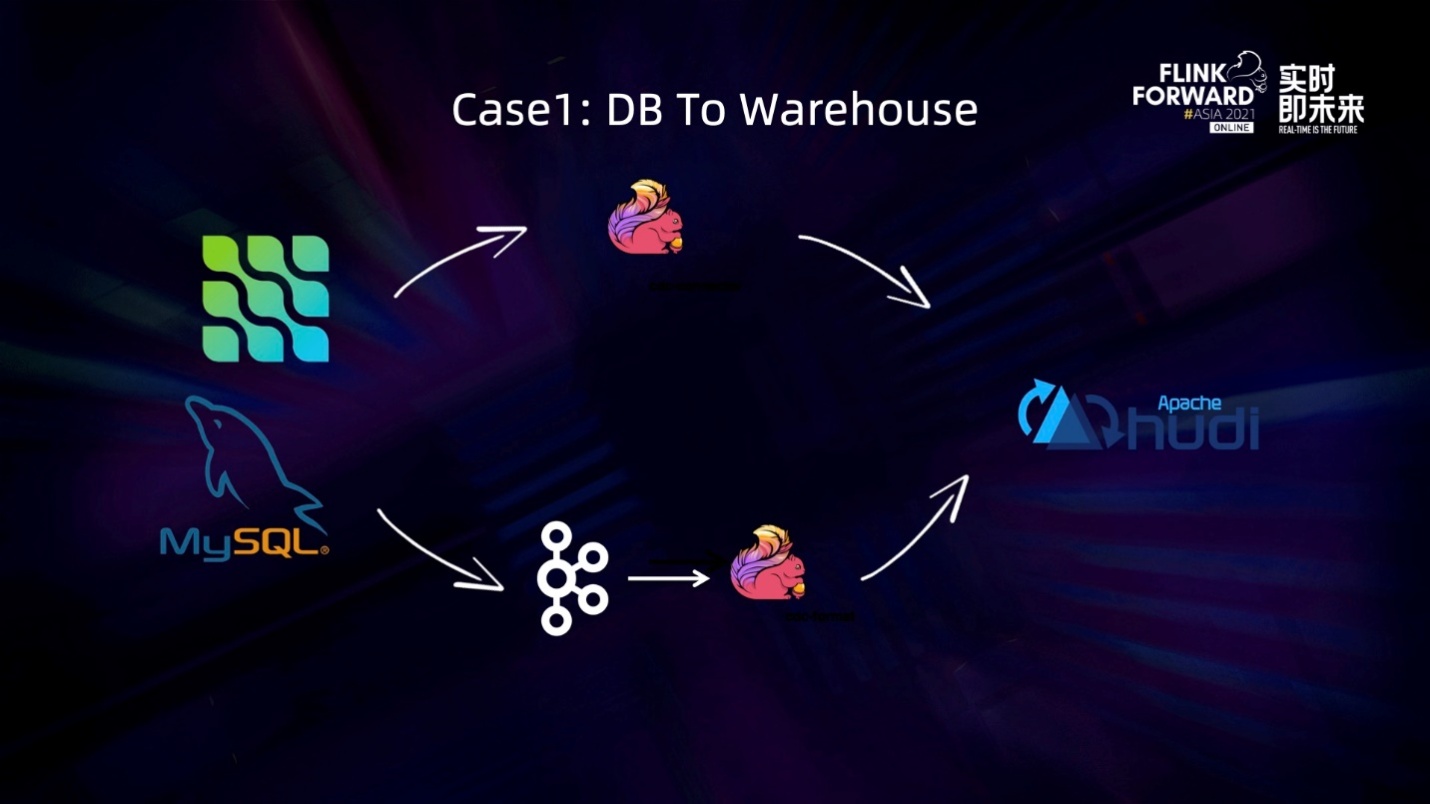

The first classic scenario is ingesting database data into the lake. Currently, there are two ways to import database data into the data lake. First, full and incremental data can be imported into the Hudi format in one pass through the CDC connector. Second, you can consume the CDC changelog from Kafka and import the data into the Hudi format through Flink's CDC format.

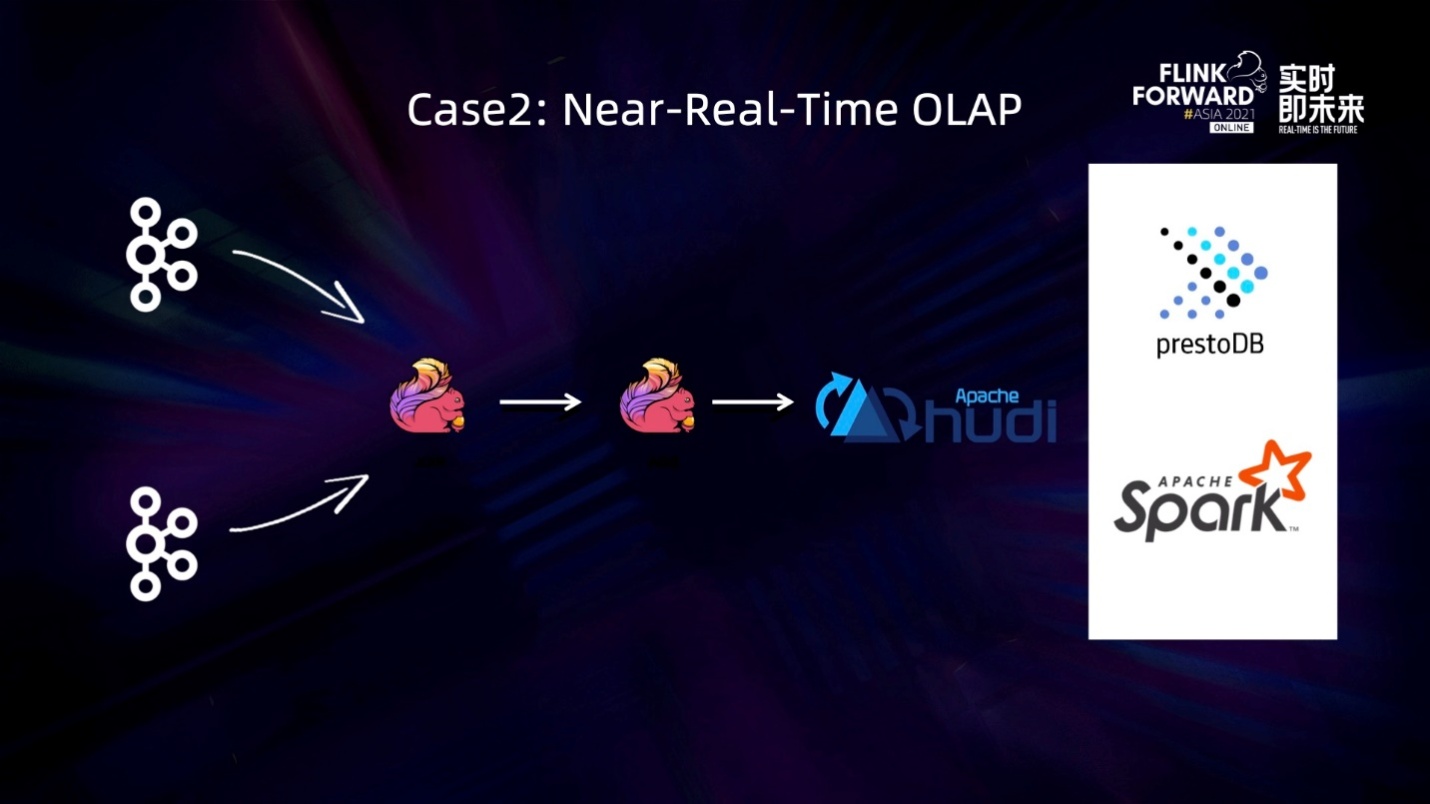

The second classic scenario is ETL for stream computing (near real-time OLAP analysis). Some simple ETL runs upstream, such as a JOIN of two data streams, or a JOIN of two data streams followed by an aggregation, and the resulting change stream is written directly into the Hudi format. The downstream read side can then connect classic OLAP engines (such as Presto and Spark) to run end-to-end near real-time queries.

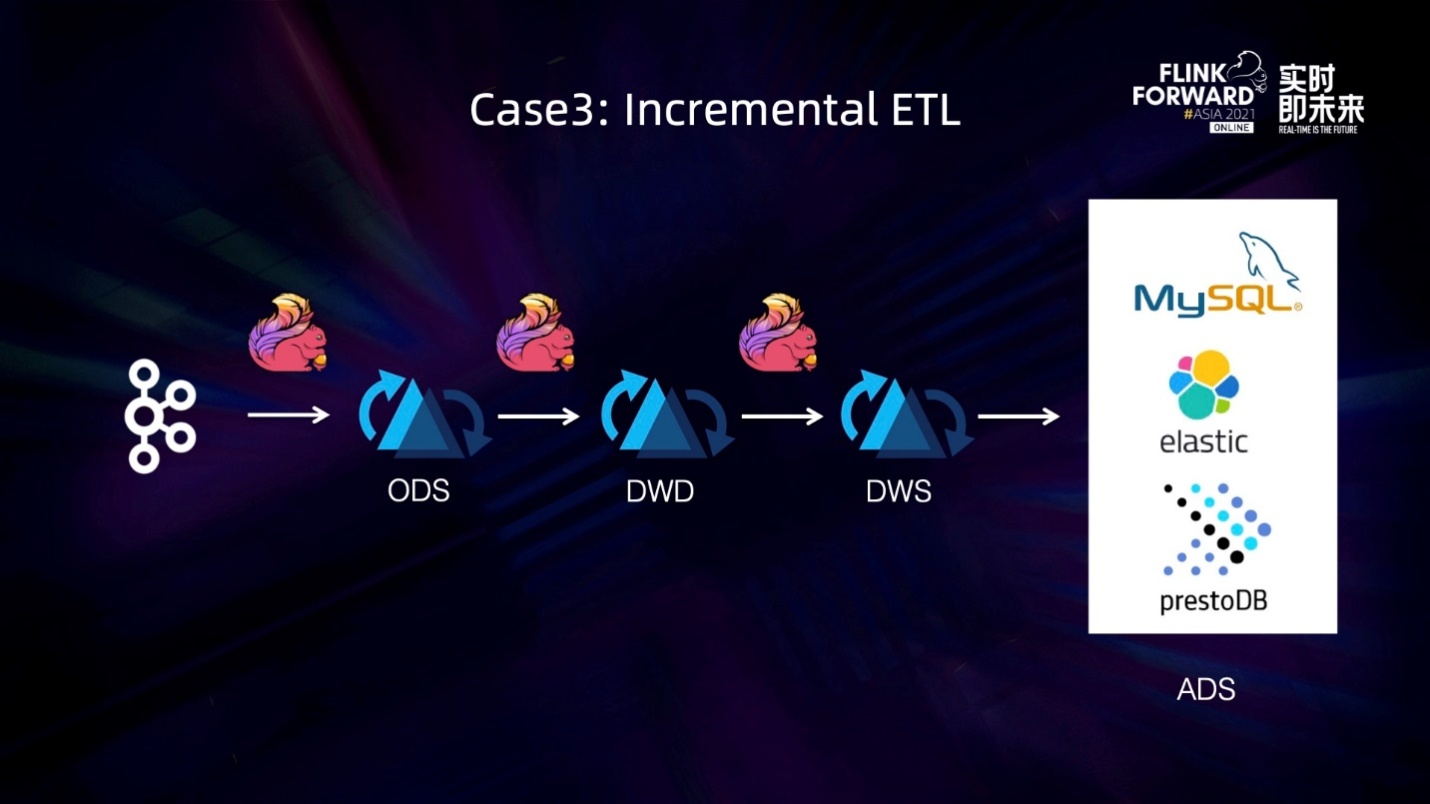

The third classic scenario is somewhat similar to the second. Hudi supports a native changelog, which means it can save the row-level changes produced by Flink computations. Based on this capability, downstream jobs can consume the changes through streaming reads to build end-to-end, near real-time ETL pipelines.

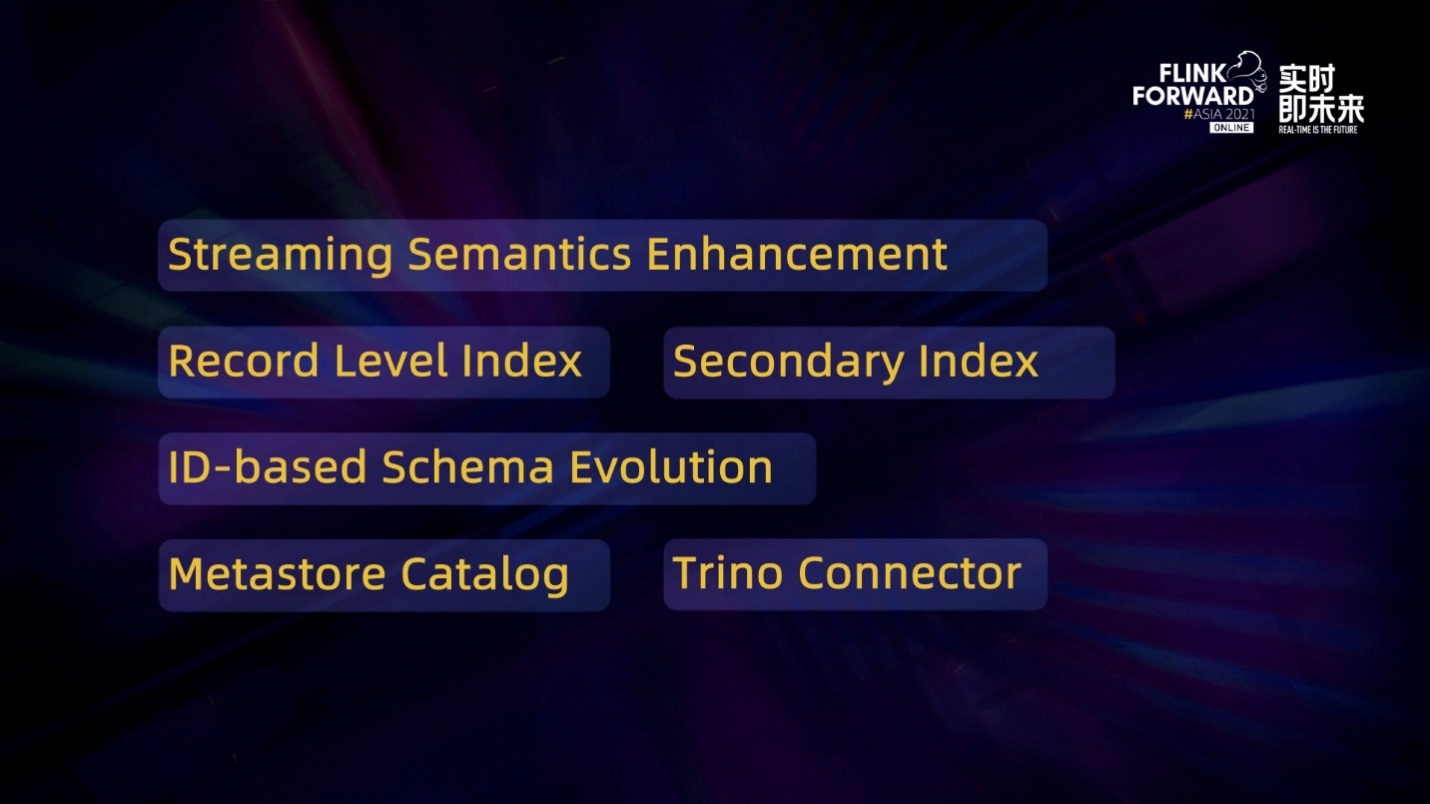

Over the next two major versions, the community will focus on stream reading and stream writing and will strengthen the semantics of stream reading. In addition, we will work on self-management of the catalog and metadata. We will also launch Trino connector support in the near future to replace the current approach of reading through Hive and to improve efficiency.

The following is a demonstration of ingesting thousands of MySQL tables into Hudi.

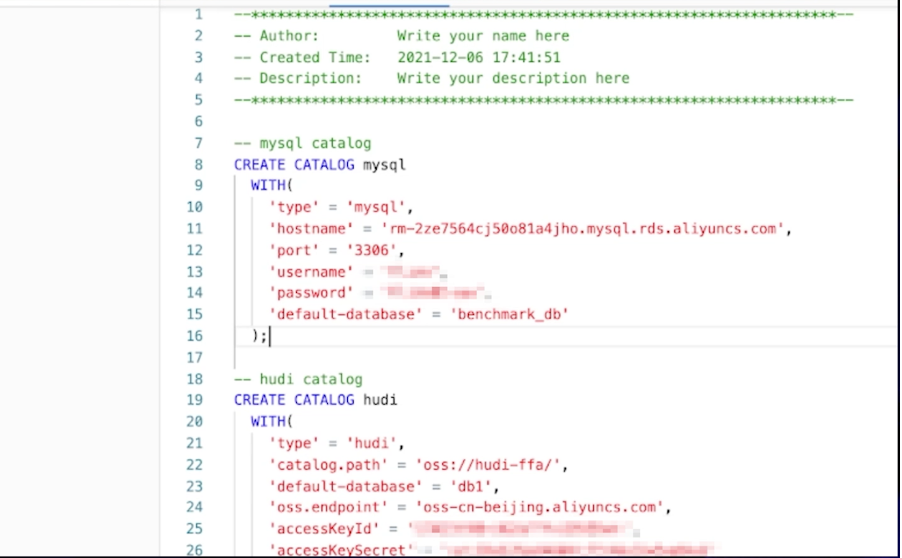

First, we prepared two databases: benchmark1 and benchmark2. There are 100 tables under benchmark1 and 1000 tables under benchmark2. Because ingesting thousands of tables depends heavily on catalogs, we create the catalogs first. A MySQL catalog is created for the data source, and a Hudi catalog is created for the target. The MySQL catalog is used to obtain information about all source tables, including table structures and table data. The Hudi catalog is used to create the target tables.

After executing the two SQL statements, two catalogs were successfully created.

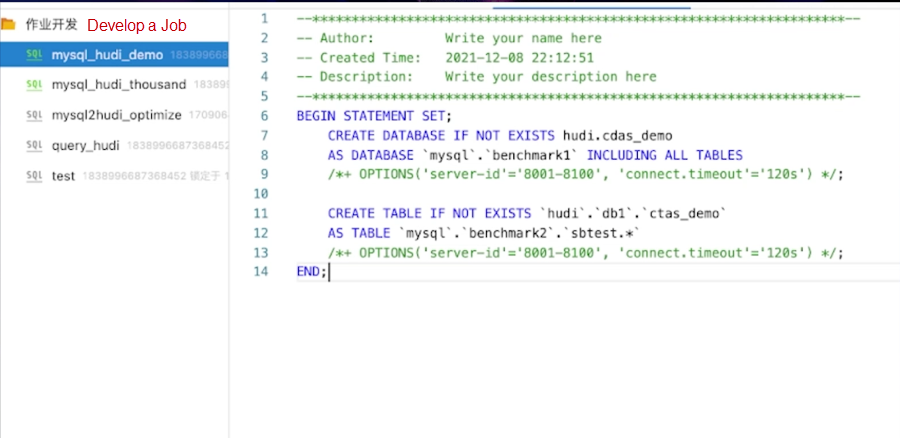

Next, create a job that ingests thousands of tables into the lake. Only nine lines of SQL are required. The first statement uses the CREATE DATABASE AS DATABASE (CDAS) syntax. It synchronizes all table structures and table data under the MySQL benchmark1 database to the Hudi cdas_demo database with one click. The relationship between source and target tables is a one-to-one mapping. The second statement uses the CREATE TABLE AS TABLE (CTAS) syntax. It synchronizes all tables in the MySQL benchmark2 database that match the regular expression sbtest. to the ctas_dema table under DB1 in Hudi. This is a many-to-one mapping, which merges sharded databases and tables.
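The two mapping styles can be illustrated with a short sketch. It only models how source table names are routed to targets and does not represent the actual SQL syntax or its implementation. The function names, the source table names, and the stricter pattern `sbtest\d+` are assumptions chosen for this example. The table counts come from the two benchmark databases described earlier.

```python
import re


def cdas_targets(source_tables, target_db):
    """One-to-one mapping: every source table gets its own target table."""
    return {table: f"{target_db}.{table}" for table in source_tables}


def ctas_targets(source_tables, pattern, target_table):
    """Many-to-one mapping: all tables matching the pattern share one target."""
    regex = re.compile(pattern)
    return {table: target_table
            for table in source_tables if regex.fullmatch(table)}


# Hypothetical table names: 100 tables in benchmark1, 1000 shards in benchmark2.
benchmark1 = [f"table{i}" for i in range(1, 101)]
benchmark2 = [f"sbtest{i}" for i in range(1, 1001)]

one_to_one = cdas_targets(benchmark1, "cdas_demo")
many_to_one = ctas_targets(benchmark2, r"sbtest\d+", "db1.ctas_dema")

source_count = len(one_to_one) + len(many_to_one)
target_count = len(set(one_to_one.values()) | set(many_to_one.values()))
```

Under these assumptions, the 1100 source tables collapse into 101 targets: 100 tables mapped one-to-one plus a single merged table for all shards.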

Then, we publish the job and open the job operations and maintenance page. The configuration information has been updated, which indicates that the job has been published again. Next, click the start button to start the job. After that, you can go to the job overview page to view the job's state information.

The preceding figure shows the topology of the job. It is very complex and includes 1100 source tables and 101 destination tables. Here, we have made an optimization called source merging, which merges all source tables into one node. As a result, the binlog needs to be pulled only once in the incremental binlog pull phase, which reduces the pressure on MySQL.

Refresh the OSS page, and an additional cdas_demo path appears. Enter the subtest1 path. You can see that metadata is being written, which indicates that data is being written.

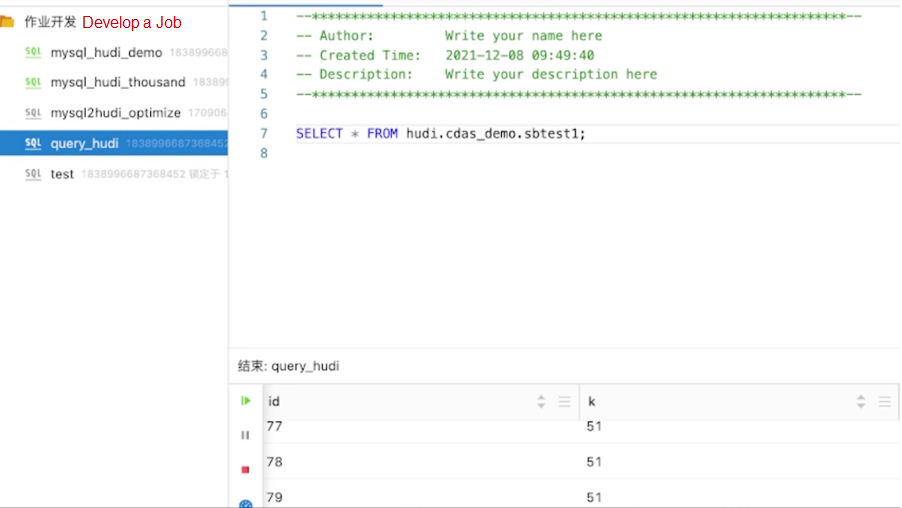

Then, on the job development page, write a simple SQL query against one of the tables to verify that the data is actually being written. Execute the SQL statement in the preceding figure. The data can be queried, and it is consistent with the inserted data.

By combining the metadata capabilities provided by the catalog with the CDAS and CTAS syntax, we can ingest thousands of tables with only a few lines of SQL. This simplifies the data ingestion process and significantly reduces the development and operations workload.
