This article is compiled from a talk given by Guo Yangze (Tianling), Senior Development Engineer at Alibaba, at Flink Forward Asia 2021. The main contents include:

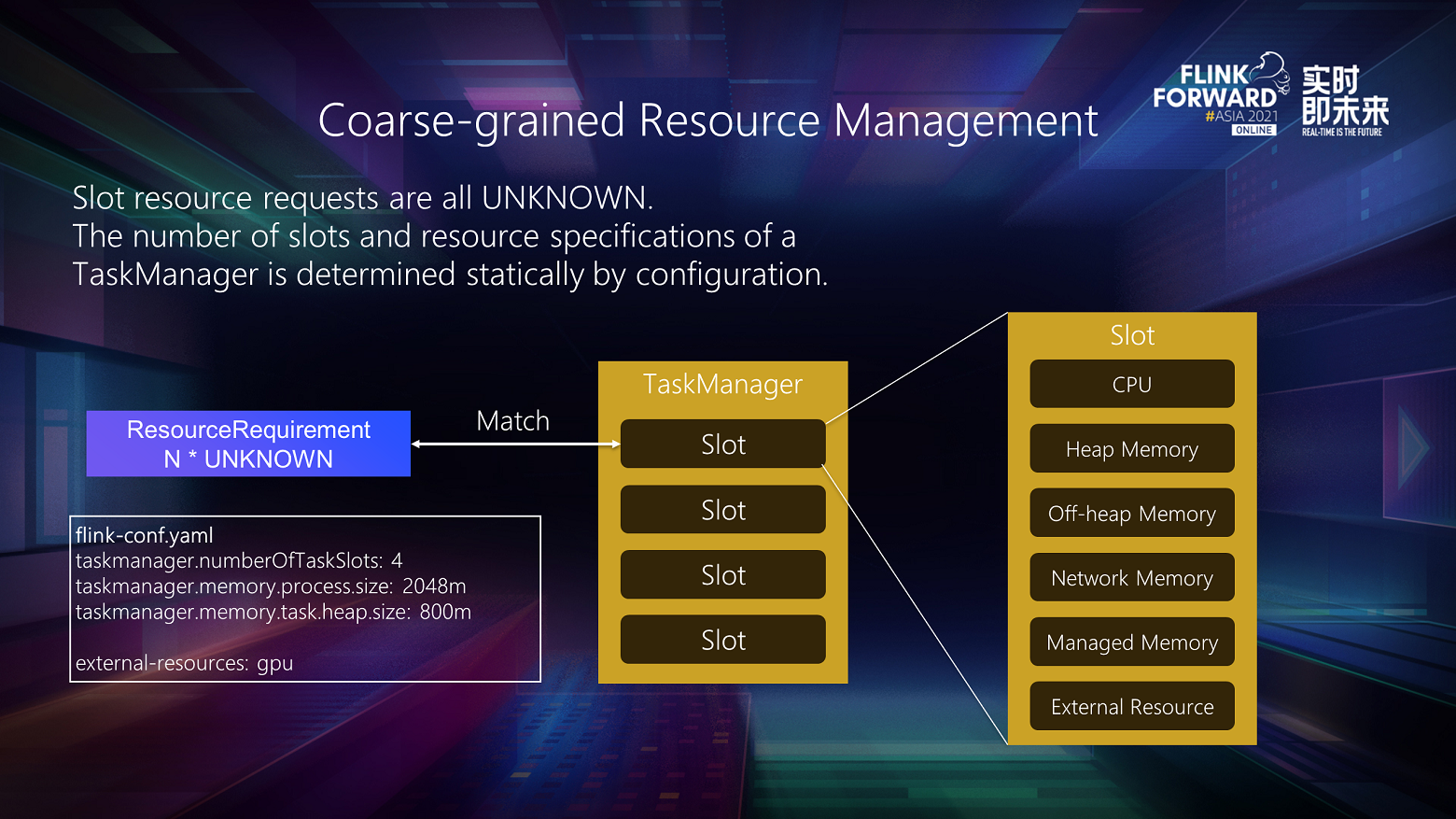

Before Flink 1.14, Flink used coarse-grained resource management. The resources required by each operator's slot request are unknown and are represented by the special value UNKNOWN, which can match a physical slot of any resource specification. From the perspective of the TaskManager (TM), the number of slots and the resource dimensions of each slot are determined statically by the Flink configuration.

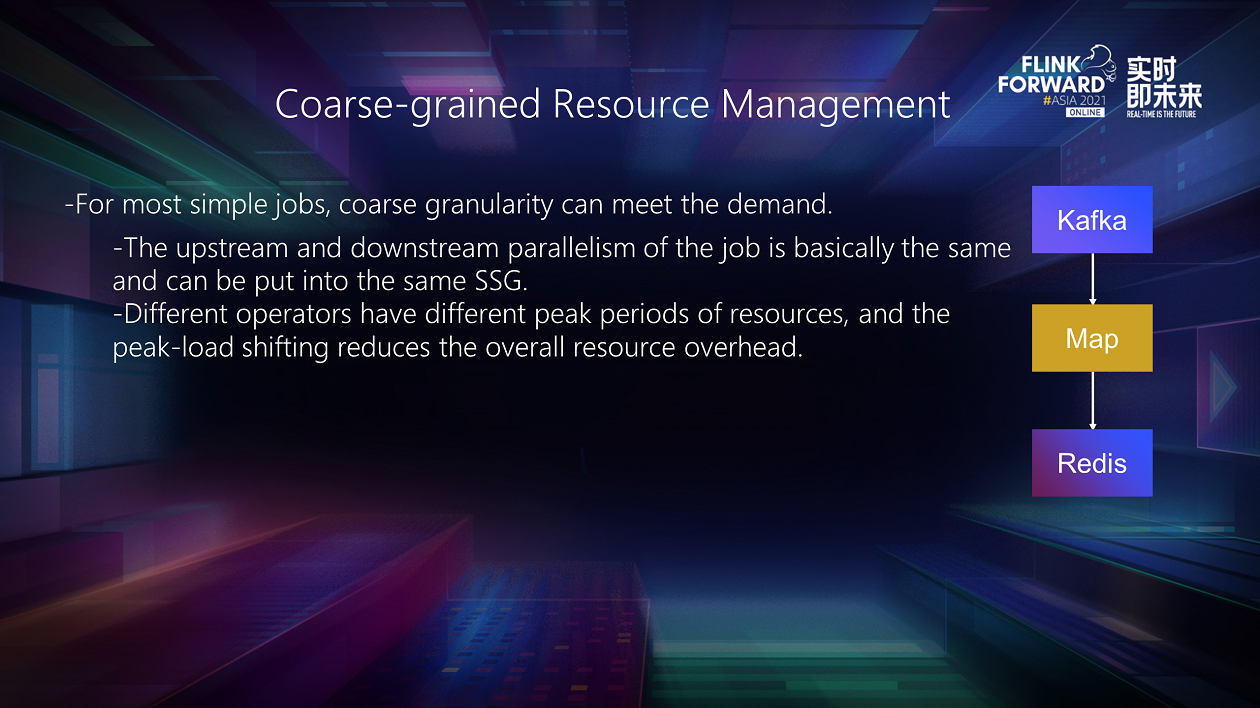

The existing coarse-grained resource management meets the resource efficiency requirements of most simple jobs. For example, the job in the preceding figure reads data from Kafka and writes it to Redis after some simple processing. For this kind of job, it is easy to keep the upstream and downstream concurrency (parallelism) consistent and put the entire pipeline into one SlotSharingGroup (SSG). In this case, the resource requirements of all slots are virtually the same, and users can achieve high resource utilization by adjusting the default slot configuration. In addition, because different tasks do not necessarily peak at the same time, placing them in one large slot lets them shift peak loads against each other, which reduces the overall resource overhead.

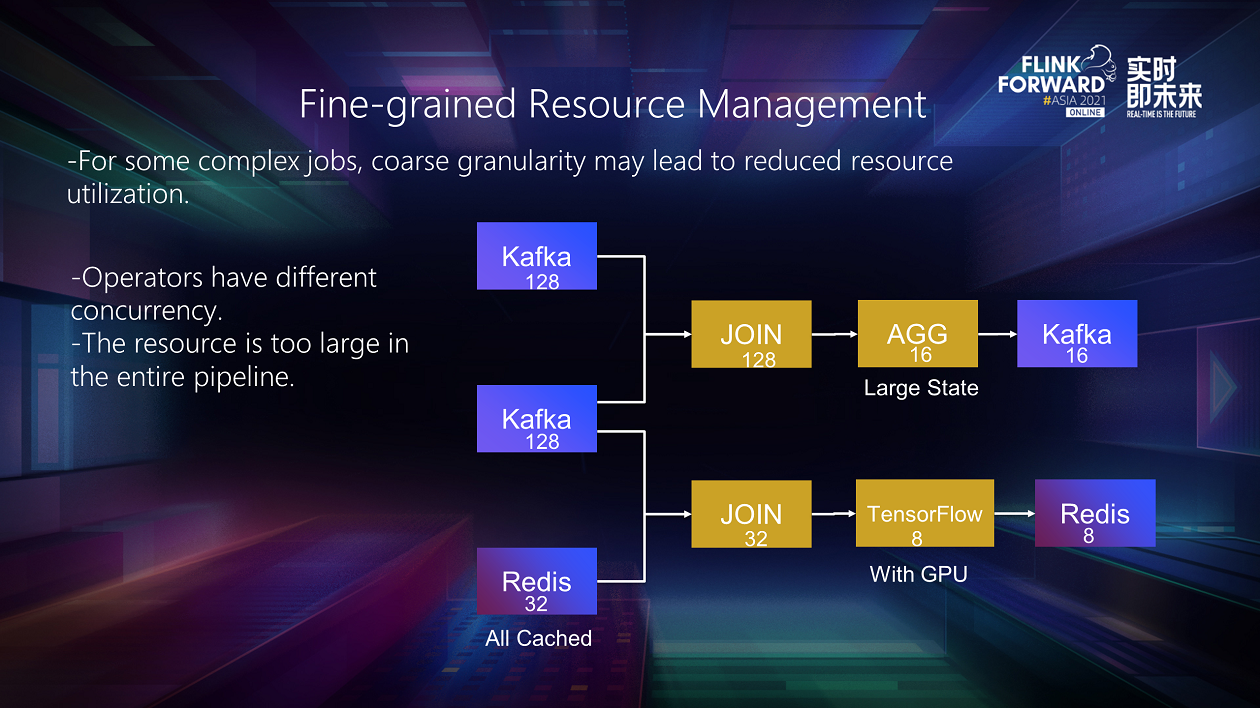

However, coarse-grained resource management does not serve some complex production jobs well.

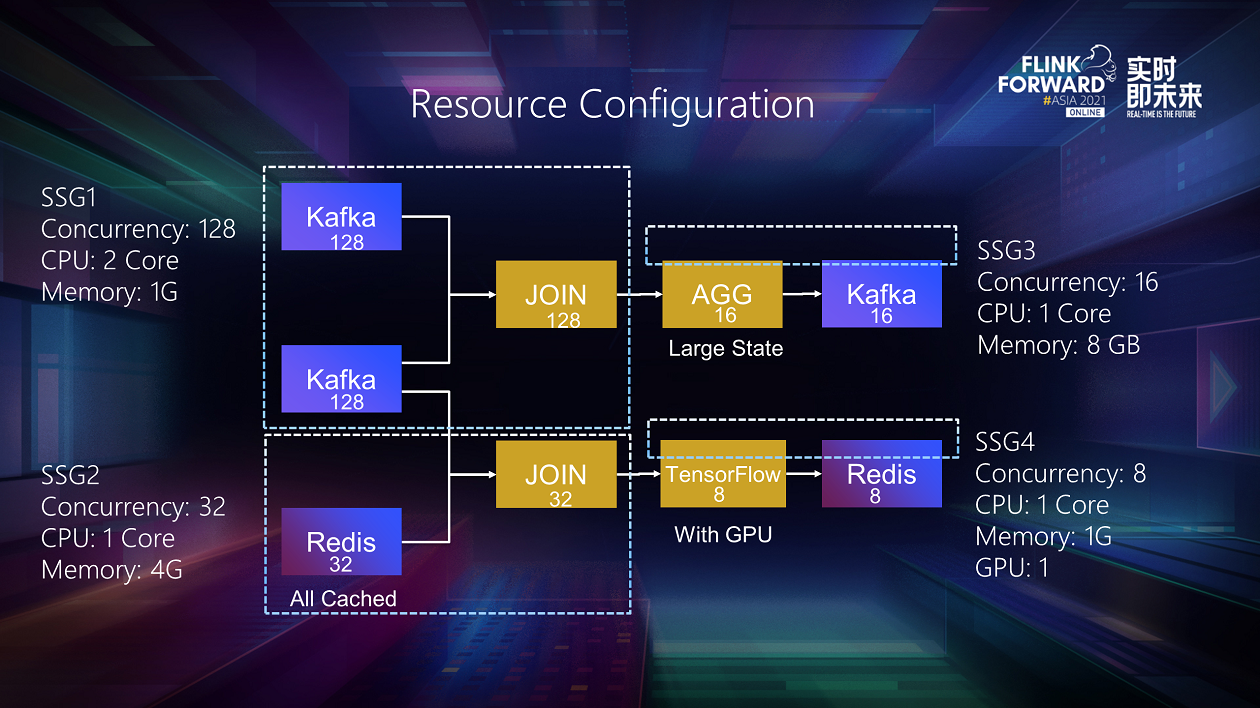

For example, the job in the figure above has two Kafka sources with a concurrency of 128 and a Redis dimension table with a concurrency of 32. The data is processed along two paths. On the first path, the two Kafka sources are joined, some aggregation operations are performed, and the result is written to a third Kafka sink with a concurrency of 16. On the other path, Kafka is joined with the Redis dimension table. The result flows into a TensorFlow-based online inference module and is finally stored in Redis.

For this job, coarse-grained resource management may reduce resource utilization efficiency.

First, the upstream and downstream concurrency of the job is inconsistent. To put the entire job into one slot, every operator must be aligned with the highest concurrency, 128. This alignment is not a big problem for lightweight operators, but it wastes a large amount of resources for heavy ones. For example, the Redis dimension table in the figure caches all data in memory to improve performance, and the aggregation operator requires a large amount of managed memory to store its state. These two operators need only 32 and 16 copies of their resources, respectively, but after concurrency alignment, each of them needs 128.
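The cost of this alignment can be made concrete with a small calculation. The sketch below only uses the concurrency values from the example job; the function name `overprovision_factor` is hypothetical and is not part of any Flink API.

```python
# Illustration of the alignment overhead described above (not Flink code).
ALIGNED_CONCURRENCY = 128  # highest concurrency in the job (the Kafka sources)

# Concurrency each heavy operator actually needs, taken from the example job.
needed = {"redis_dimension_table": 32, "aggregation": 16}


def overprovision_factor(required: int, aligned: int) -> float:
    """How many times more instances are requested than are actually needed."""
    return aligned / required


for name, required in needed.items():
    factor = overprovision_factor(required, ALIGNED_CONCURRENCY)
    print(f"{name}: needs {required}, aligned to {ALIGNED_CONCURRENCY} ({factor:.0f}x)")
```

Under these numbers, the memory-heavy dimension table is requested four times more often than needed, and the aggregation operator eight times.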

In addition, the pipeline of the entire job may not fit into a single slot or TM because it requires too many resources, such as the memory of the preceding operators. The TensorFlow module also requires a GPU to ensure computing efficiency. GPUs are very expensive, so the cluster may not have enough of them. As a result, the job cannot acquire sufficient resources after concurrency alignment and ultimately cannot be executed.

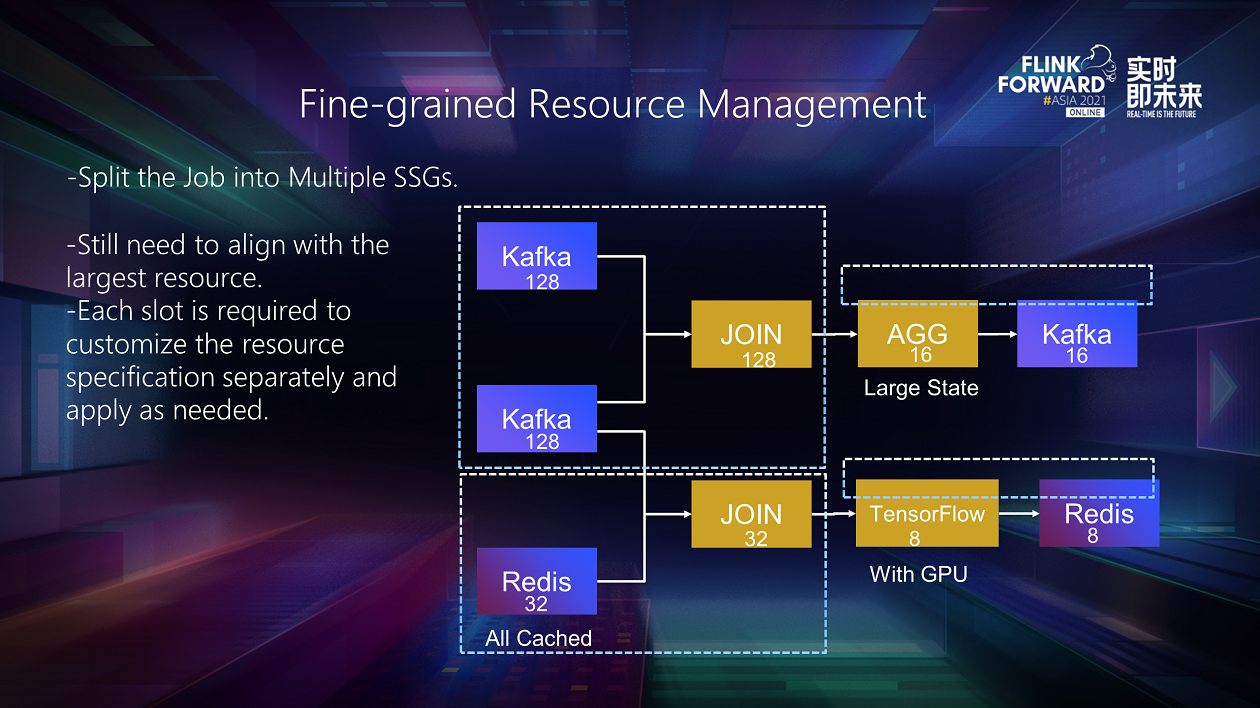

The entire job can be split into multiple SSGs. As shown in the figure, the operators are divided into four SSGs according to concurrency so that the concurrency inside each SSG is aligned. However, because there is only one default slot specification, every resource dimension of the slot must still be aligned to the maximum value across all SSGs. For example, memory must be aligned with the requirements of the Redis dimension table, managed memory must be aligned with the aggregation operator, and even a GPU must be added to the extended resources. This still does not solve the problem of resource waste.

We propose fine-grained resource management to solve this problem. The basic idea is that the resource specification of each slot can be customized individually, so users can request resources on demand to maximize resource utilization efficiency.

In summary, fine-grained resource management aims to improve overall resource utilization by letting each module of a job request and use resources on demand. It applies to the following scenarios. First, the concurrency of upstream and downstream tasks in a job differs significantly, or the pipeline requires too many resources or contains expensive extended resources. In these cases, the job needs to be split into multiple SSGs with different resource requirements, and fine-grained resource management reduces the resulting waste. Second, a batch job may contain one or more stages whose resource consumption varies significantly. Fine-grained resource management likewise reduces resource overhead here.

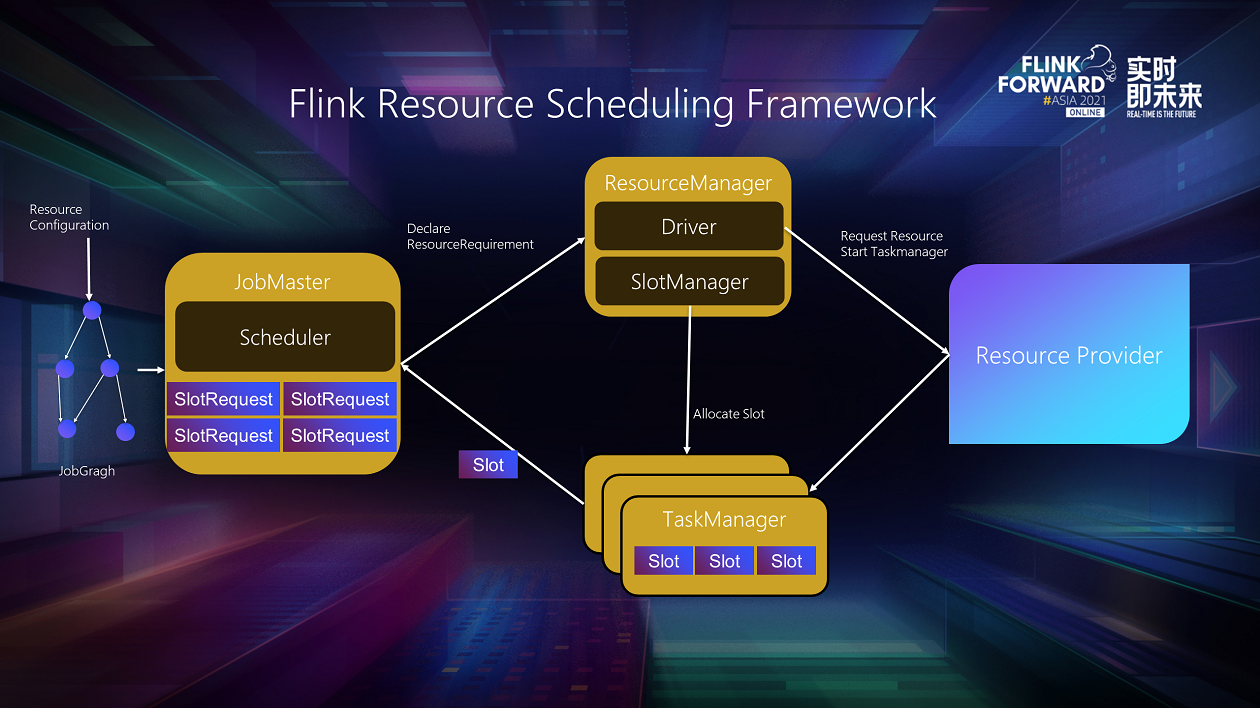

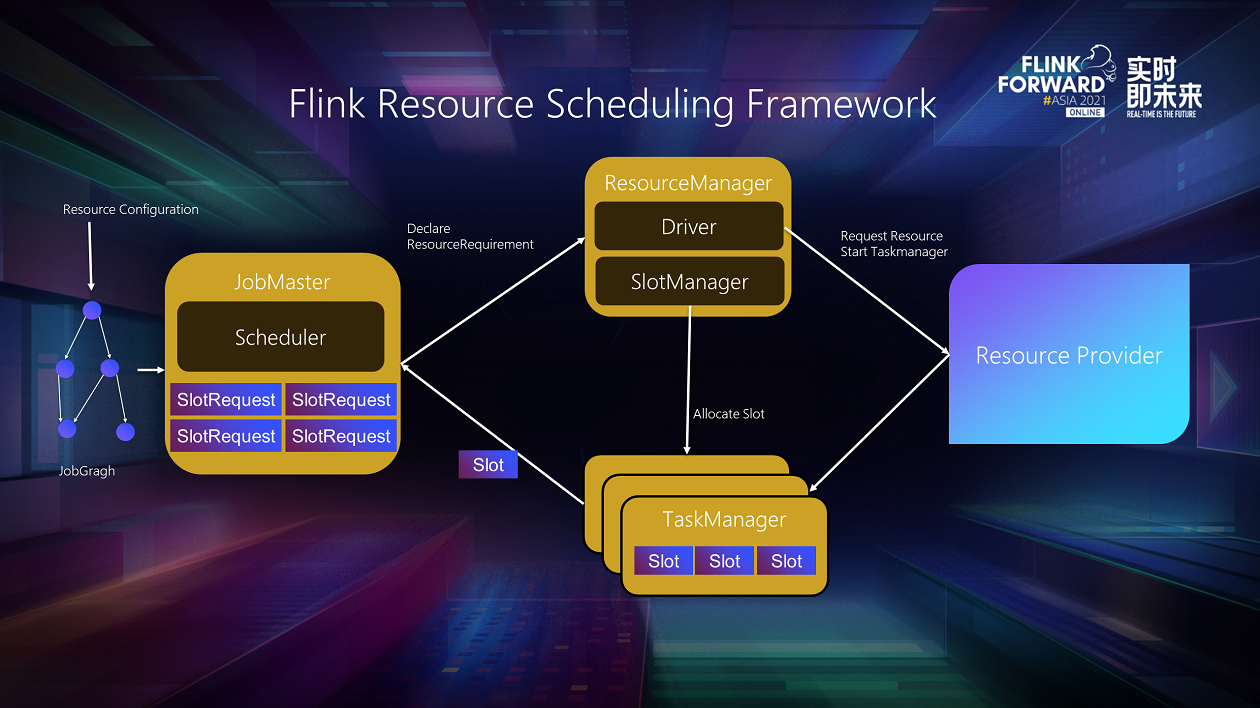

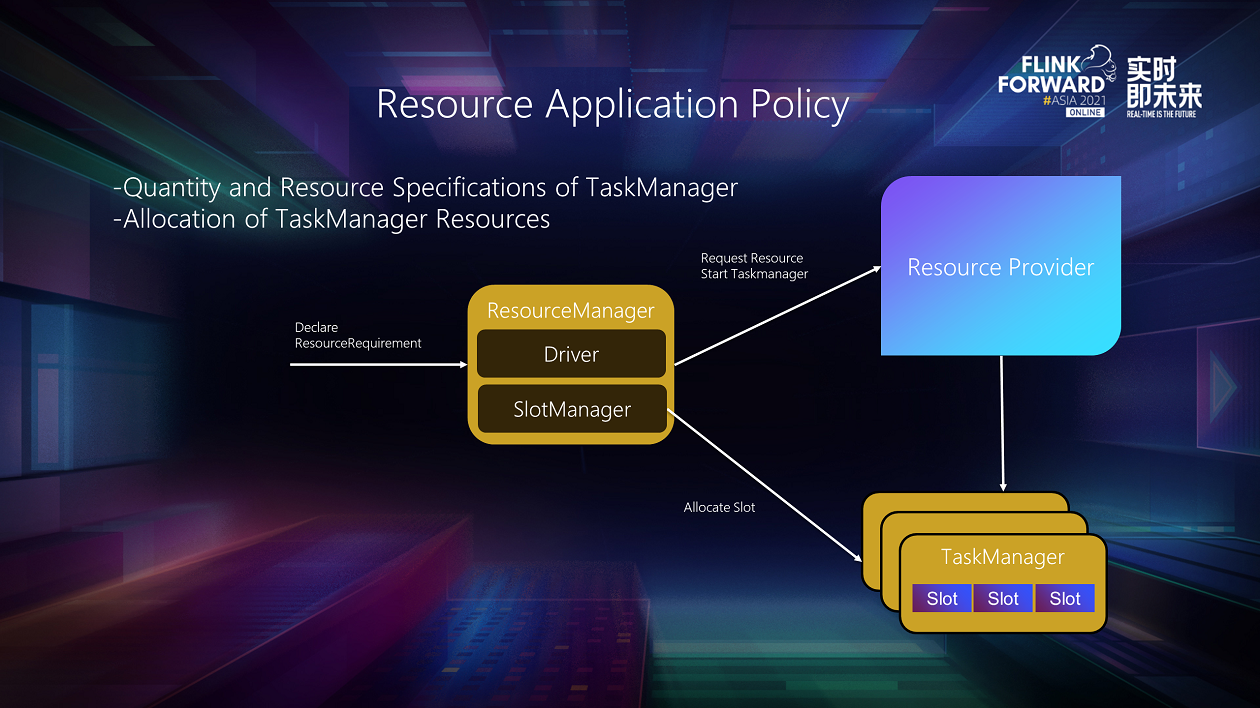

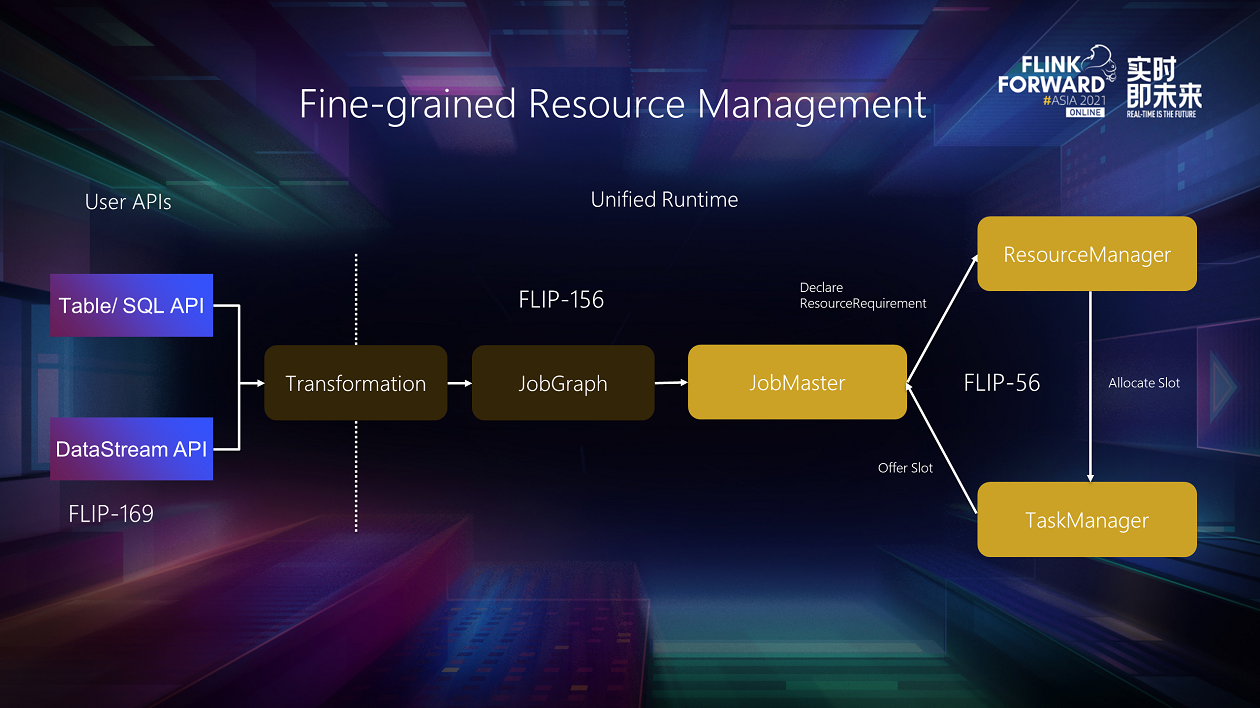

Flink's resource scheduling framework has three roles: JobMaster (JM), ResourceManager (RM), and TaskManager (TM). A job written by the user is compiled into a JobGraph, annotated with resource configurations, and submitted to the JM. The JM manages the resource requests of the JobGraph and deploys its tasks.

The scheduling-related component in the JM is the Scheduler. It generates a series of SlotRequests from the JobGraph, aggregates them into ResourceRequirements, and sends these to the RM. After the RM receives the resource declaration, it checks whether the existing resources in the cluster can meet the requirements. If they can, the RM asks a TM to offer slots to the corresponding JM. (Slot allocation is handled by the SlotManager component.) If the existing resources are insufficient, the RM requests new resources from the external Kubernetes or YARN cluster through its internal driver. After the JM has received enough slots, it deploys the operators and the job starts running.
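The aggregation step can be pictured as counting identical slot requests. The following is a minimal sketch under that assumption: the `ResourceProfile` dataclass here is a simplified stand-in with only two dimensions, and `aggregate` is a hypothetical helper, not the Scheduler's actual implementation.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ResourceProfile:
    """Simplified stand-in for a slot's resource specification."""
    cpu_cores: float
    memory_mb: int


def aggregate(slot_requests: List[ResourceProfile]) -> Counter:
    """Collapse individual slot requests into {profile: number_of_slots}."""
    return Counter(slot_requests)


small = ResourceProfile(0.25, 1024)
large = ResourceProfile(0.5, 2048)

# Three individual requests become two requirement entries.
requirements = aggregate([small, small, large])
for profile, count in requirements.items():
    print(f"{count} x {profile}")
```

Declaring counts per profile, rather than sending each request separately, lets the RM compare the whole demand against what the cluster currently offers.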

The following sections use this framework to explain the implementation details and design choices of fine-grained resource management.

At the entry point to Flink, resource configurations are injected into the JobGraph. This is the SSG-based resource configuration interface proposed in FLIP-156. The main design question for the resource configuration interface is its granularity.

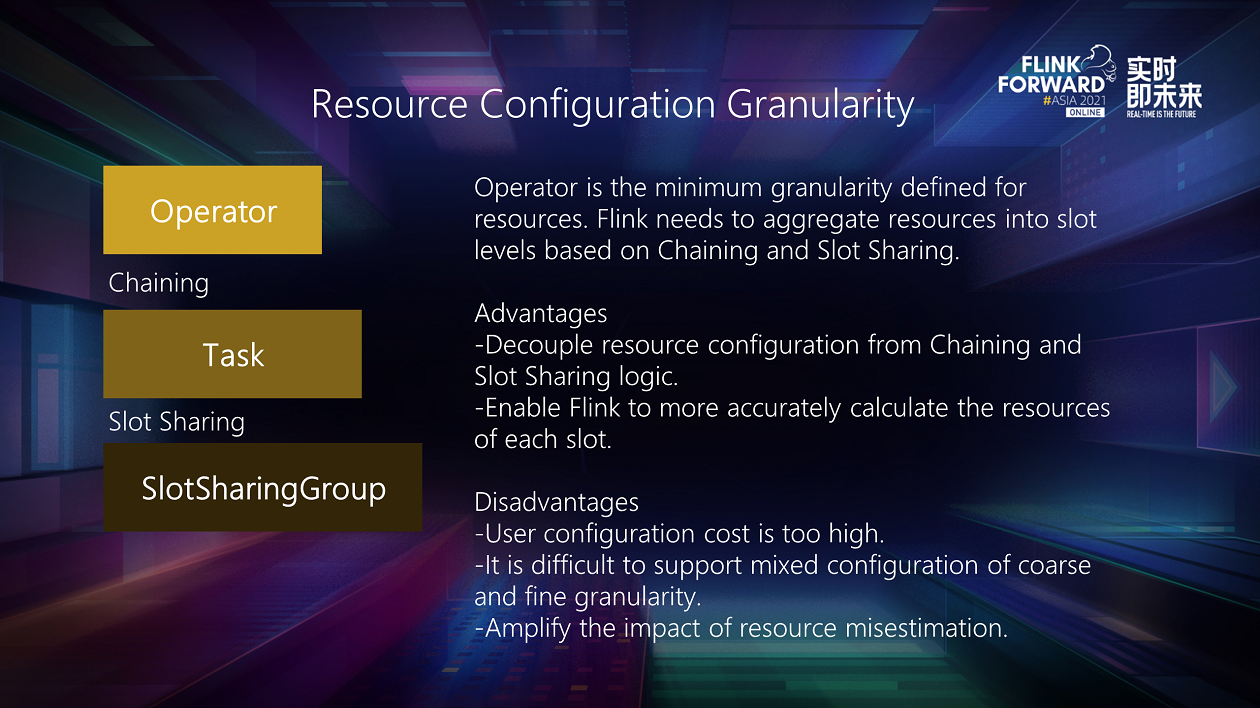

The first option is the operator, which is the smallest granularity. If users configure resources on operators, Flink needs to aggregate them to the slot level based on chaining and slot sharing before scheduling.

The advantage of this granularity is that resource configuration is decoupled from the logic of chaining and slot sharing. Users only need to consider the requirements of the current operator, regardless of whether it is chained with other operators or which slot it is scheduled to. It also enables Flink to calculate the resources of each slot more accurately. If the upstream and downstream operators in an SSG have different concurrency, the physical slots corresponding to that SSG may require different resources. If Flink knows the resources of each operator, it has the opportunity to further optimize resource efficiency.
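To illustrate why slots of the same SSG may need different resources, here is a rough sketch of operator-level aggregation. It assumes a simplified placement rule (slot `i` hosts subtask `i` of every operator whose concurrency exceeds `i`); the real mapping is decided by the scheduler. The `Operator` class, the `slot_resources` helper, and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Operator:
    name: str
    concurrency: int
    cpu_cores: float
    memory_mb: int


def slot_resources(operators: List[Operator]) -> List[Tuple[float, int]]:
    """Return (cpu, memory_mb) for each slot of one slot sharing group.

    Simplifying assumption: slot i hosts subtask i of every operator
    whose concurrency is greater than i.
    """
    slots = []
    for i in range(max(op.concurrency for op in operators)):
        hosted = [op for op in operators if op.concurrency > i]
        slots.append((sum(op.cpu_cores for op in hosted),
                      sum(op.memory_mb for op in hosted)))
    return slots


group = [Operator("source", 4, 0.25, 256), Operator("aggregate", 2, 0.5, 1024)]
slots = slot_resources(group)
print(slots)  # [(0.75, 1280), (0.75, 1280), (0.25, 256), (0.25, 256)]
```

Only the first two slots host the heavier operator, so sizing all four slots identically would overprovision the last two.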

However, it also has shortcomings. First, the configuration cost for users is too high. Complex production jobs contain a large number of operators, which are difficult to configure one by one. Second, it is difficult to support mixing coarse-grained and fine-grained resource configurations. If an SSG contains both coarse-grained and fine-grained operators, Flink cannot determine how many resources it needs. Finally, users' resource estimates always deviate to some degree, and these deviations accumulate across operators, so peak-load shifting between operators cannot be used effectively.

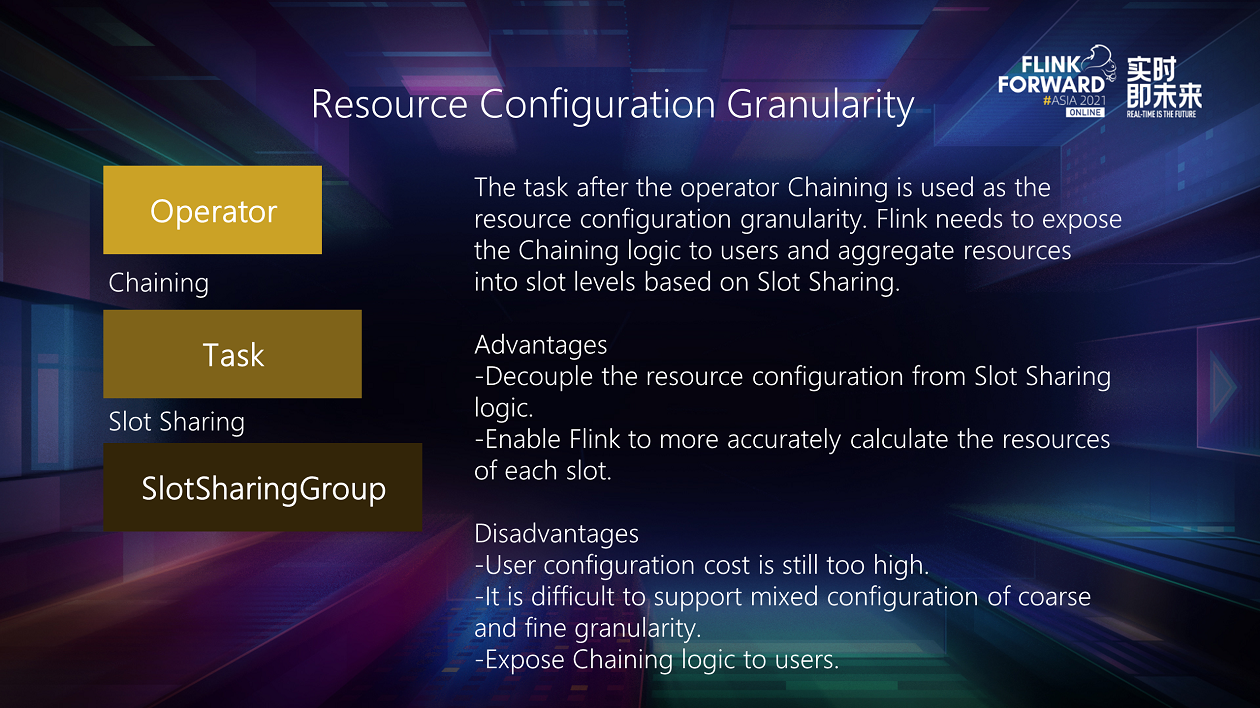

The second option is to use the task formed by operator chaining as the granularity of resource configuration. In this case, the chaining logic inside Flink must be exposed to users. The Flink runtime still needs to aggregate resources to the slot level according to the slot sharing configuration of the tasks before scheduling.

Its advantages and disadvantages are roughly the same as those of operator granularity. Compared with operators, it reduces the configuration cost somewhat, but this is still a pain point. Moreover, resource configuration cannot be decoupled from chaining, and Flink's internal chaining logic is exposed to users, which limits potential internal optimization. Once a user has configured the resources of a task, a change in the chaining logic may split the task into two or three tasks, making the user's configuration incompatible.

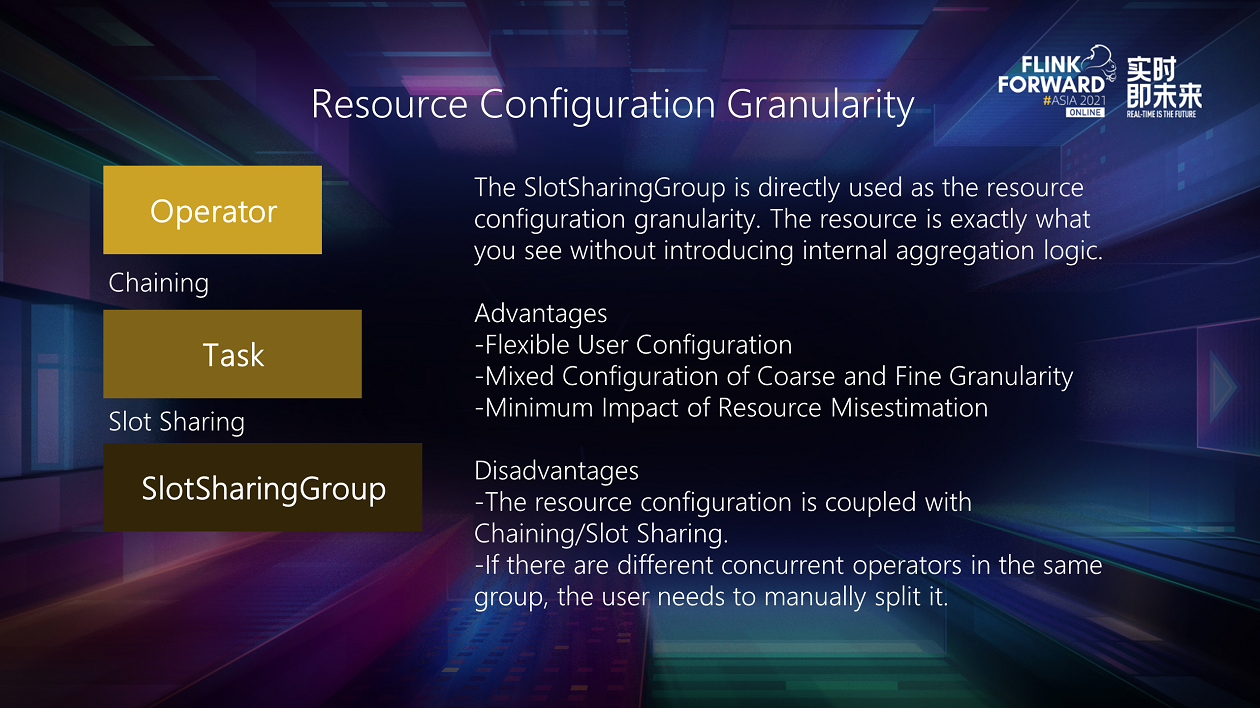

The third option is to use the SSG directly as the granularity of resource configuration. For Flink, the resource configuration is then what-you-see-is-what-you-get, and the resource aggregation logic described above is no longer needed.

At the same time, this choice has the following advantages:

Of course, this choice also introduces some restrictions: resource configuration becomes coupled with chaining and slot sharing. In addition, if operators in an SSG differ in concurrency, users may need to split the group manually to maximize resource utilization efficiency.

Therefore, we finally chose the SSG-based resource configuration interface in FLIP-156. In addition to the advantages mentioned above, the most important reason is visible in the resource scheduling framework: the slot is the most basic unit of resource scheduling. From the Scheduler to the RM and TM, resources are requested and scheduled in units of slots. Using this granularity directly avoids adding complexity to the system.

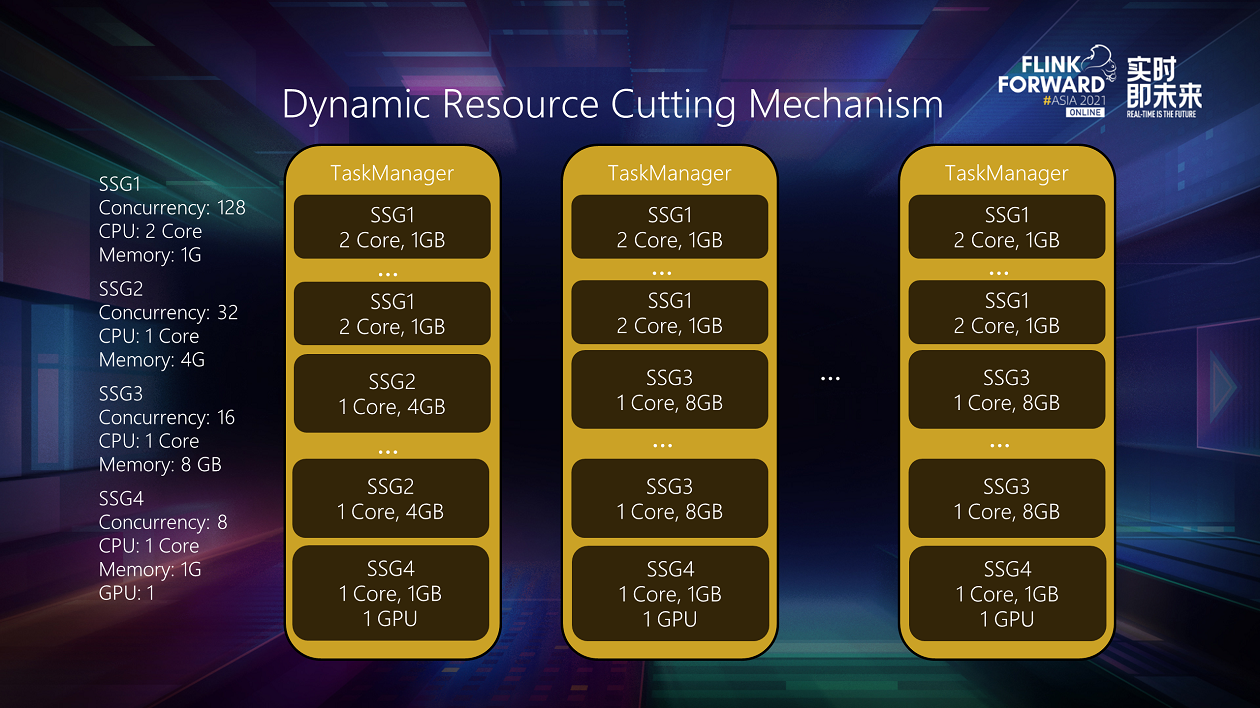

Back to the sample job: with the fine-grained resource management interface, we can configure different resources for the four SSGs, as shown in the preceding figure. As long as the scheduling framework matches slots strictly against these specifications, resource utilization efficiency can be maximized.
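As a rough illustration of the difference, the sketch below compares the total resources declared with per-SSG specifications against a single default slot aligned to the maximum of every dimension. The per-slot values are hypothetical (the actual ones appear only in the figure), and the slot count of 8 for SSG4 is an assumption; only the concurrency values 128, 32, and 16 come from the example job.

```python
# name -> (slots, cpu_cores, memory_mb, gpus); per-slot values are hypothetical.
ssgs = {
    "SSG1": (128, 1.0, 1024, 0),  # CPU-intensive
    "SSG2": (32, 0.25, 4096, 0),  # memory-intensive
    "SSG3": (16, 0.25, 4096, 0),  # memory-intensive
    "SSG4": (8, 1.0, 2048, 1),    # needs a GPU; 8 slots is an assumption
}


def fine_grained_total(groups):
    """Total demand when every SSG gets its own slot specification."""
    cpu = sum(n * c for n, c, _, _ in groups.values())
    mem = sum(n * m for n, _, m, _ in groups.values())
    gpu = sum(n * g for n, _, _, g in groups.values())
    return cpu, mem, gpu


def coarse_grained_total(groups):
    """Total demand when every slot is sized to the maximum of each dimension."""
    slots = sum(n for n, _, _, _ in groups.values())
    max_cpu = max(c for _, c, _, _ in groups.values())
    max_mem = max(m for _, _, m, _ in groups.values())
    max_gpu = max(g for _, _, _, g in groups.values())
    return slots * max_cpu, slots * max_mem, slots * max_gpu


fine = fine_grained_total(ssgs)
coarse = coarse_grained_total(ssgs)
print("fine-grained  :", fine)    # (148.0, 344064, 8)
print("coarse-grained:", coarse)  # (184.0, 753664, 184)
```

With these made-up numbers, the aligned configuration asks for more than twice the memory and 184 GPUs instead of 8, which is the kind of waste the per-SSG configuration avoids.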

With resource configuration solved, the next step is to request slots for these resources. This step relies on the dynamic resource cutting mechanism proposed in FLIP-56.

In this picture, the JobGraph on the left already carries resource configurations, and the right side shows resource scheduling across the JM, RM, and TM. Under coarse-grained resource management, TM slots have a fixed size determined by the startup configuration, so the RM cannot satisfy slot requests of different specifications. Therefore, the way slots are created needs to change.

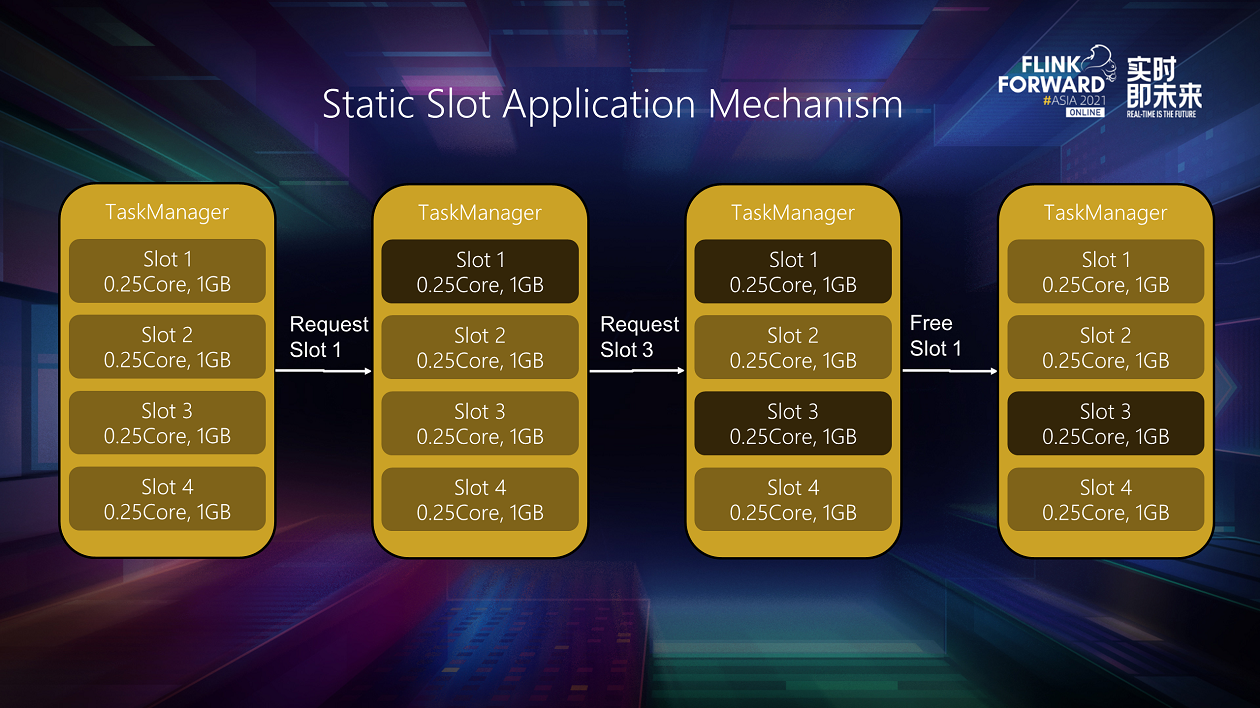

First, look at the existing static slot allocation mechanism. The slots are already divided and numbered when the TM starts, and the TM reports them to the Slot Manager. When slot requests arrive, the Slot Manager decides to allocate slot 1 and slot 3. Later, slot 1 is released after its task finishes, so only slot 3 is occupied. Although the TM now has 0.75 cores and 3 GB of free resources, it cannot satisfy a job's request for a slot of a different size because the slots were divided in advance.
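This limitation can be reproduced with a toy model. The sketch assumes a TM with four pre-divided slots of 0.25 cores and 1 GB each, which is consistent with the free resources quoted above; the class `StaticTaskManager` and the 0.5-core, 2 GB request are hypothetical and do not mirror Flink's internal classes.

```python
class StaticTaskManager:
    """Toy model of a TM whose slots are divided at startup (not Flink code)."""

    def __init__(self, num_slots, slot_cpu, slot_memory_mb):
        self.slot_cpu = slot_cpu
        self.slot_memory_mb = slot_memory_mb
        # Slots are numbered from 1 and start out free.
        self.occupied = {slot_id: False for slot_id in range(1, num_slots + 1)}

    def free_resources(self):
        free_slots = sum(1 for used in self.occupied.values() if not used)
        return free_slots * self.slot_cpu, free_slots * self.slot_memory_mb

    def can_serve(self, cpu, memory_mb):
        """A request fits only if it fits inside one pre-divided free slot."""
        has_free_slot = not all(self.occupied.values())
        return (has_free_slot
                and cpu <= self.slot_cpu
                and memory_mb <= self.slot_memory_mb)


tm = StaticTaskManager(num_slots=4, slot_cpu=0.25, slot_memory_mb=1024)
tm.occupied[1] = tm.occupied[3] = True  # Slot Manager allocates slots 1 and 3
tm.occupied[1] = False                  # slot 1 is released when its task ends

print(tm.free_resources())      # (0.75, 3072)
print(tm.can_serve(0.5, 2048))  # False: no single slot is large enough
```

The TM has enough free resources in total, but no individual slot can hold the larger request.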

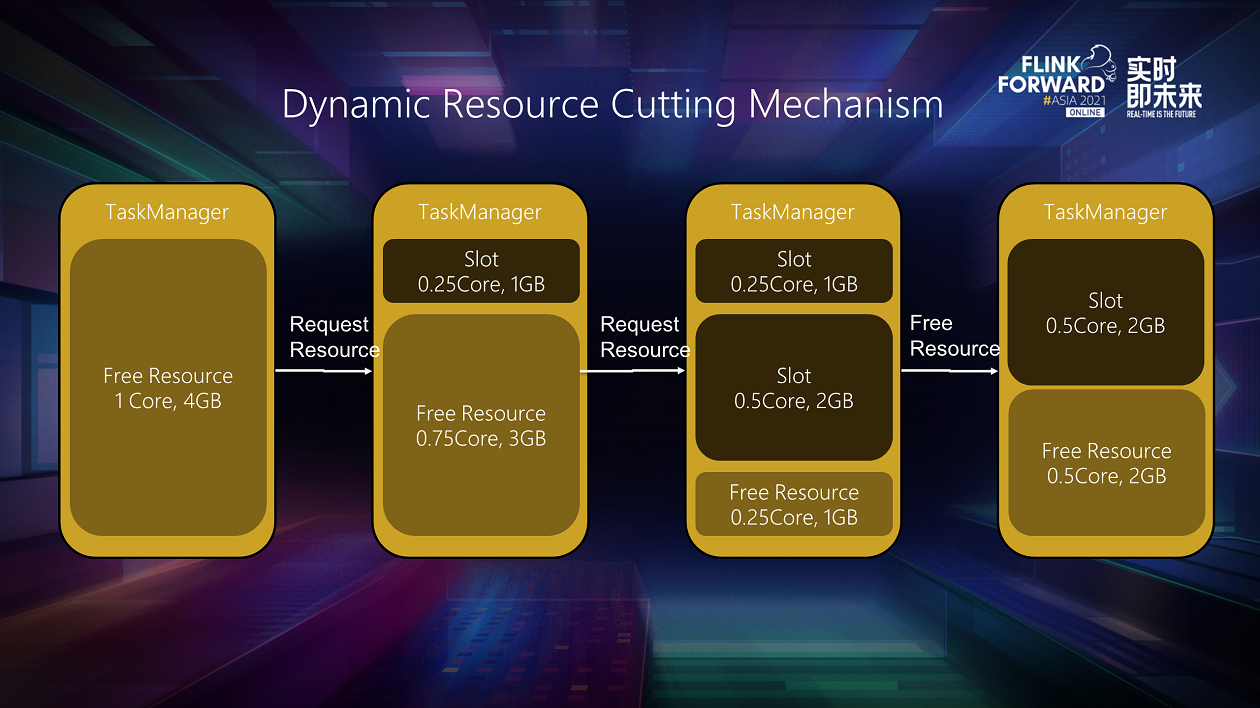

Therefore, a dynamic resource cutting mechanism is proposed. Slots are no longer created at TM startup and fixed afterwards. Instead, they are cut dynamically from the TM according to actual slot requests. When the TM starts, all resources that can be allocated to slots are regarded as one resource pool. In the figure above, for example, the TM has 1 core and 4 GB of memory. When a fine-grained job arrives, the Slot Manager asks the TM for a slot with 0.25 cores and 1 GB. The TM checks whether its resource pool can provide this slot, then dynamically creates the slot and offers it to the JM. Next, the job requests a slot with 0.5 cores and 2 GB. The Slot Manager can still request it from the same TM as long as the request does not exceed the idle resources. When a slot is no longer needed, it is destroyed and its resources are returned to the idle resource pool.
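The walkthrough above can be sketched as a small resource pool. This is a minimal model assuming only two resource dimensions; `DynamicTaskManager`, `allocate`, and `free` are hypothetical names and not Flink's actual classes or methods.

```python
class DynamicTaskManager:
    """Toy model of a TM that cuts slots from one resource pool on demand."""

    def __init__(self, cpu, memory_mb):
        self.free_cpu = cpu
        self.free_memory_mb = memory_mb
        self.slots = {}      # slot id -> (cpu, memory_mb)
        self._next_id = 0

    def allocate(self, cpu, memory_mb):
        """Cut a slot from the pool; return its id, or None if it does not fit."""
        if cpu > self.free_cpu or memory_mb > self.free_memory_mb:
            return None
        self.free_cpu -= cpu
        self.free_memory_mb -= memory_mb
        slot_id = self._next_id
        self._next_id += 1
        self.slots[slot_id] = (cpu, memory_mb)
        return slot_id

    def free(self, slot_id):
        """Destroy a slot and return its resources to the idle pool."""
        cpu, memory_mb = self.slots.pop(slot_id)
        self.free_cpu += cpu
        self.free_memory_mb += memory_mb


tm = DynamicTaskManager(cpu=1.0, memory_mb=4096)
first = tm.allocate(0.25, 1024)    # first request from the walkthrough
second = tm.allocate(0.5, 2048)    # second request fits in the same TM
too_big = tm.allocate(0.5, 2048)   # only 0.25 cores / 1024 MB remain
tm.free(first)                     # resources go back to the pool
print(too_big, tm.free_cpu, tm.free_memory_mb)  # None 0.5 2048
```

A third request for 0.5 cores and 2 GB is rejected until enough resources have been returned to the pool.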

The problem of how to satisfy fine-grained resource requests is solved through this mechanism.

Returning to the sample job, we only need eight TMs of the same specification to schedule the job, each with a GPU to satisfy SSG4. The CPU-intensive SSG1 and the memory-intensive SSG2 and SSG3 are then mixed on the TMs to match each TM's overall CPU-to-memory ratio.

What is the resource application policy? It covers two decisions the RM makes when interacting with the Resource Provider and the TMs. One is which TM resource specifications to request from the Resource Provider and how many TMs of each specification are needed. The other is how to place slots into the TMs. Both decisions are made within the Slot Manager component.

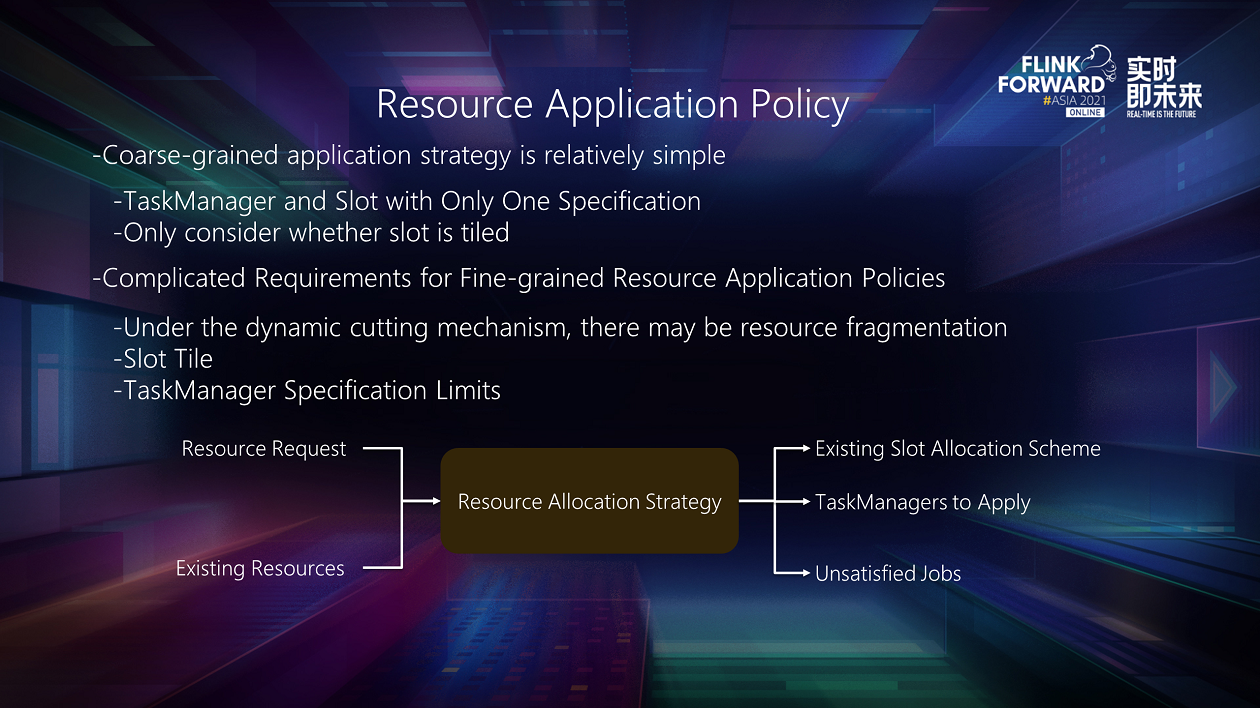

The coarse-grained resource application policy is relatively simple because there is only one TM specification and all slots have the same specification. The allocation strategy only needs to consider whether to spread slots across TMs as evenly as possible. Strategies under fine-grained resource management, however, need to take different requirements into account.

First, because the dynamic resource cutting mechanism is introduced, slot scheduling can be regarded as a multi-dimensional bin packing problem. The strategy has to reduce resource fragmentation while keeping resource scheduling efficient. In addition, the slot may need to be evaluated, and the cluster may impose requirements on the resource specifications of TMs. For example, a TM must not be too small: on Kubernetes, a TM with too few resources starts too slowly and may eventually hit the registration timeout. It must not be too large either, because that affects the scheduling efficiency of Kubernetes.

To handle this complexity, we abstract the resource application policy into a Resource Allocation Strategy. The Slot Manager passes the current resource requests and the available resources in the cluster to the strategy. The strategy makes the decisions and tells the Slot Manager how to allocate existing resources, how many new TMs to request and with which specifications, and whether any jobs' requirements cannot be met.

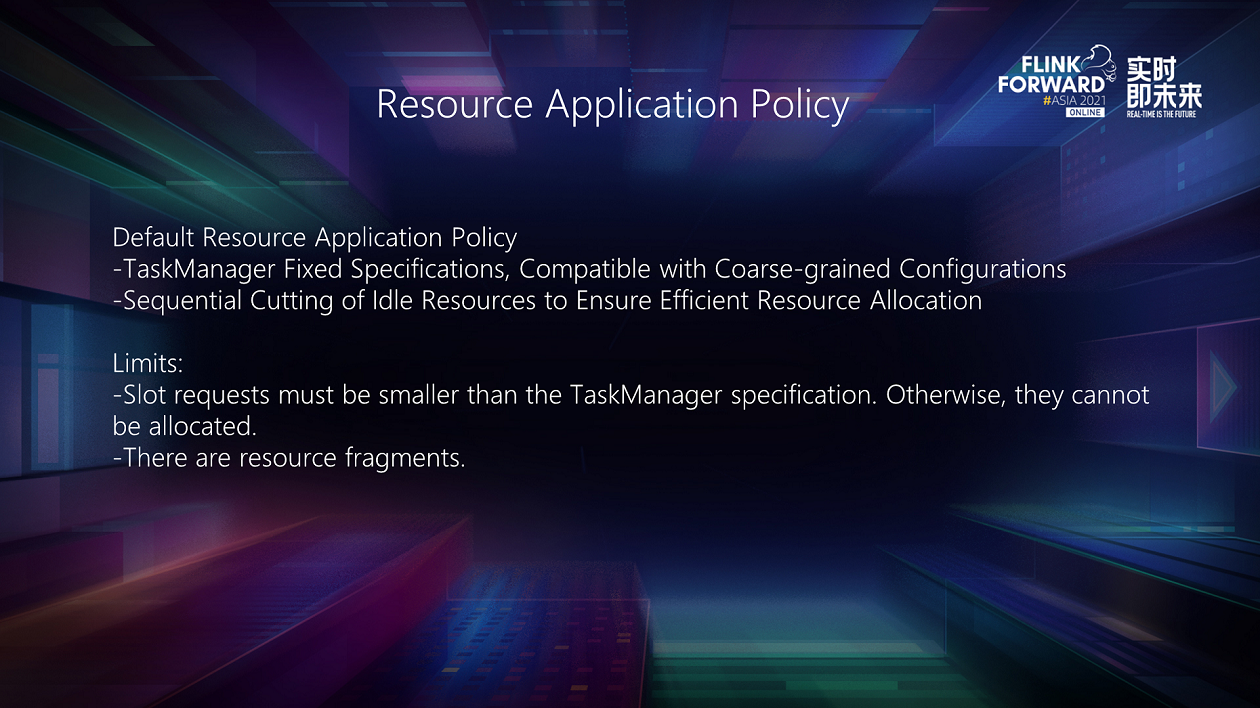

Currently, fine-grained resource management is still in beta, and the community has built in a simple default resource management policy. Under this policy, the TM specification is fixed and determined by the coarse-grained configuration. Its limitation is that a slot request larger than this TM specification may not be allocated. For allocation, the policy scans the currently idle TMs sequentially and cuts a slot from the first TM that can satisfy the request. This ensures that resource scheduling does not become a bottleneck even for large-scale jobs, at the cost of unavoidable resource fragmentation.
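The behavior described for the default policy resembles a first-fit scan. The sketch below is an approximation under that reading, not the community implementation: `first_fit` is a hypothetical function, resources are reduced to two dimensions, and it returns the three outputs a strategy reports (placements, number of new TMs, and requests that cannot be met).

```python
def first_fit(requests, free_tms, default_tm):
    """Place each request on the first TM with room; add default TMs if needed.

    requests:   list of (cpu, memory_mb) slot requests
    free_tms:   list of [cpu, memory_mb] free resources per TM (mutated)
    default_tm: (cpu, memory_mb) of the single fixed TM specification
    Returns (placements, new_tm_count, unfulfillable).
    """
    placements, unfulfillable = [], []
    registered = len(free_tms)
    for cpu, mem in requests:
        if cpu > default_tm[0] or mem > default_tm[1]:
            unfulfillable.append((cpu, mem))  # larger than a whole TM
            continue
        for index, tm in enumerate(free_tms):
            if cpu <= tm[0] and mem <= tm[1]:
                break  # first TM that fits wins
        else:
            free_tms.append(list(default_tm))  # request one more default TM
            index, tm = len(free_tms) - 1, free_tms[-1]
        tm[0] -= cpu
        tm[1] -= mem
        placements.append(index)
    return placements, len(free_tms) - registered, unfulfillable


tms = [[1.0, 4096]]
result = first_fit(
    [(0.5, 1024), (0.25, 3072), (0.5, 1024), (2.0, 1024)], tms, (1.0, 4096))
print(result)  # ([0, 0, 1], 1, [(2.0, 1024)])
print(tms)     # [[0.25, 0], [0.5, 3072]]
```

The single pass keeps each decision cheap, but the first TM ends up with 0.25 cores and no memory left, a fragment that no further slot can use.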

Fine-grained resource management is currently only available as a beta feature in Flink. As shown in the preceding figure, the runtime work of FLIP-56 and FLIP-156 is mostly complete. On the user interface side, FLIP-169 already exposes fine-grained resource configuration on the DataStream API. Please refer to the community user documentation for details on how to configure it.

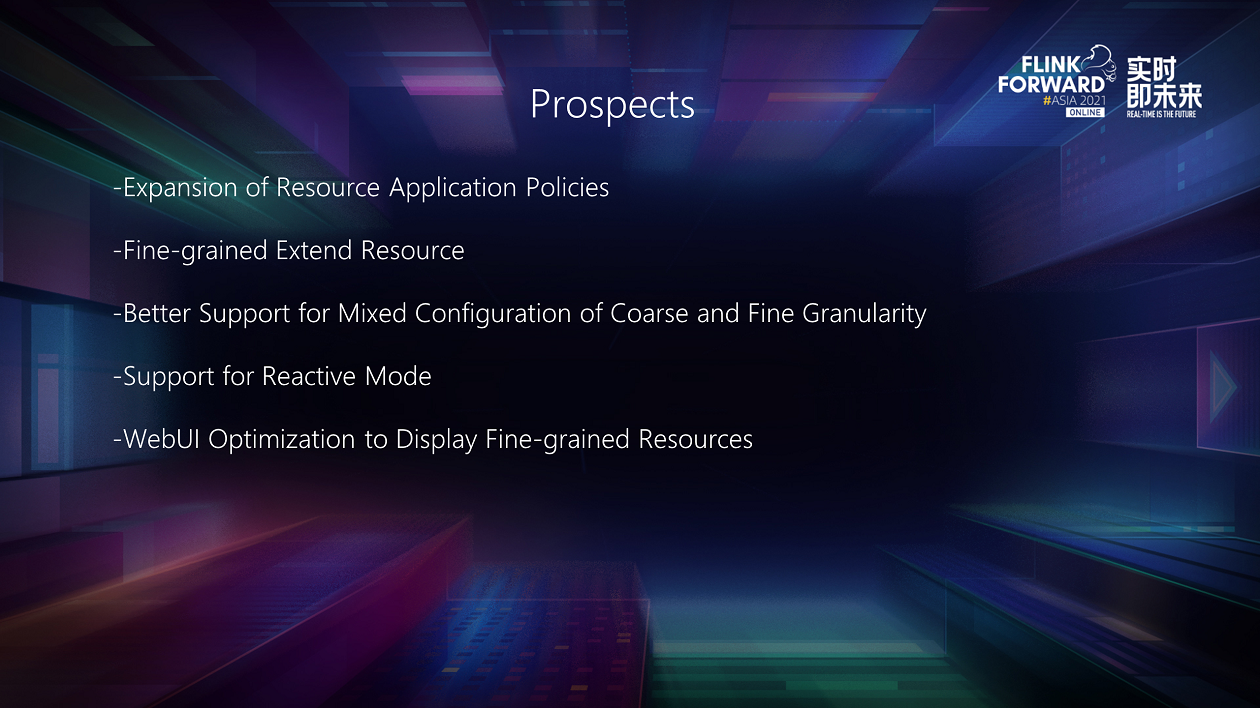

In the future, our development will mainly focus on the following aspects:
