By Zhao Yihao (Suhe), director of the Sentinel open source project

Released by Alibaba Developer

In recent years, developers have paid increasing attention to the stability of microservices. As businesses evolve from monolithic to distributed architectures and adopt new deployment modes, the dependencies between services become increasingly complex, which makes it challenging to keep systems highly available.

For example, in a production environment, you may have encountered a variety of unstable situations, such as:

• During big promotions, an instantaneous traffic peak can push the system past its maximum load, causing it to crash so that users cannot place orders.

• Traffic to unexpected hotspot items penetrates the cache and overwhelms the database, crowding out normal traffic.

• A caller is dragged down by an unstable third-party service: its thread pool fills up, calls pile up, and the entire call chain gets stuck.

These unstable scenarios can have serious consequences, yet high-availability protection for traffic and dependencies is easily overlooked. You may ask: How can we prevent the impact of these unstable factors? How do we implement high-availability protection for traffic? How can we keep our services stable? This is where Sentinel comes in: it is the high-availability protection middleware used during Alibaba's Double 11. During this year's Tmall Double 11 Shopping Festival, Sentinel ensured the stability of tens of thousands of Alibaba services during peak traffic, and Sentinel Go 1.0 GA was officially announced recently. Let's take a look at the core scenarios of Sentinel Go and what the community has explored in the cloud native area.

Sentinel is an open-source traffic throttling component from Alibaba for distributed service architectures. Taking traffic as its entry point, Sentinel helps developers ensure the stability of microservices through traffic throttling, traffic shaping, circuit breaking, and adaptive system protection. Over the past 10 years, Sentinel has supported the core traffic scenarios of Alibaba's Double 11 Shopping Festivals, such as flash sales, cold start, load shifting, cluster flow control, and real-time circuit breaking of unavailable downstream services. It is a powerful tool for ensuring the high availability of microservices. It supports Java, Go, C++, and other languages, and it also provides global traffic throttling support for Istio/Envoy to give Service Mesh high-availability protection.

Earlier this year, the Sentinel community announced the launch of Sentinel Go, which provides native high-availability protection and fault tolerance for microservices and basic components written in Go. This marks a new step for Sentinel towards diversification and cloud native. In the past six months, the community has released nearly 10 versions, gradually bringing the core high-availability protection and fault tolerance capabilities in line with the Java version. Meanwhile, the community continues to expand the open-source ecosystem by working with dubbo-go and other open-source communities, such as the Ant Financial Modular Open Smart Network (MOSN) community.

Recently, Sentinel Go 1.0 GA was officially released, marking its official entry into the production-ready phase. Sentinel Go 1.0 matches the high-availability protection and fault tolerance capabilities of the Java version, including traffic throttling, traffic shaping, concurrency control, circuit breaking, adaptive system protection, and hotspot protection. This version also covers the mainstream open-source ecosystem, providing adapters for common microservice frameworks such as Gin, gRPC, go-micro, and dubbo-go, as well as extensions for dynamic data sources such as etcd, Nacos, and Consul. Sentinel Go is evolving in the cloud native direction, and v1.0 already explores several cloud native areas, including a Kubernetes Custom Resource Definition (CRD) data source and Kubernetes Horizontal Pod Autoscaler (HPA) integration. Sentinel Go is not meant only for microservice applications themselves. Go accounts for a large share of cloud native infrastructure components, yet these components often lack fine-grained, adaptive protection and fault tolerance mechanisms. For such components, you can combine their extension mechanisms with Sentinel Go to protect their stability.

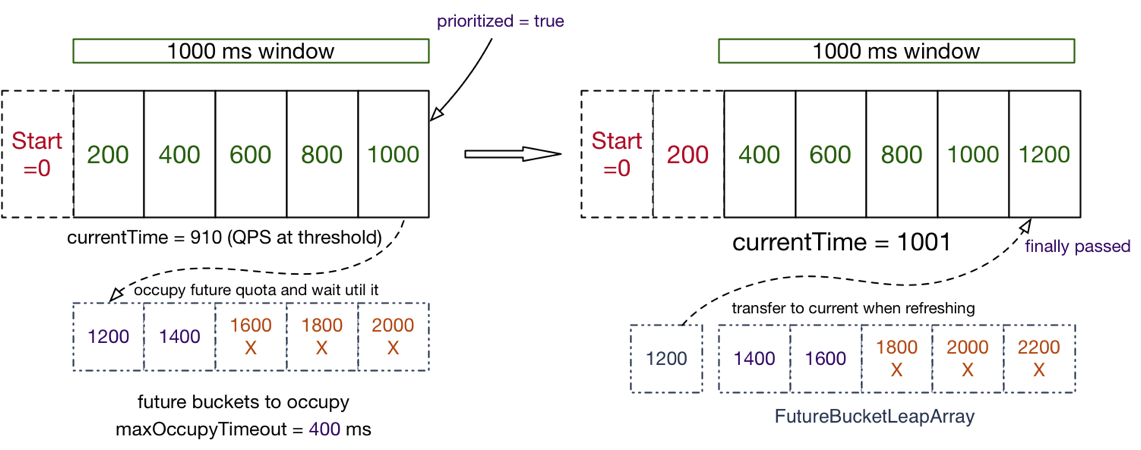

Under the hood, Sentinel uses a high-performance sliding window to collect second-level metrics, and combines token bucket, leaky bucket, and adaptive flow control algorithms to deliver its core high-availability protection capabilities.

So, how can we use Sentinel Go to ensure the stability of our microservices? Let us look at several typical application scenarios.

Traffic is random and unpredictable, and a peak can arrive at any second. This is exactly what we face at 00:00 on Double 11 every year. However, the capacity of our system is always limited. If a sudden burst exceeds that capacity, requests cannot be processed in time, the backlog is processed ever more slowly, CPU usage and load climb, and the system eventually crashes. Therefore, we need to limit such traffic spikes so that services keep handling as many requests as possible without crashing. Traffic throttling is suitable for scenarios such as pulse traffic.

For web entry points and service providers, the service must be protected from being overwhelmed by traffic peaks. In these cases, throttling is usually configured based on the service capacity of the provider, or per specific service consumer. We can estimate the capacity of core interfaces through stress testing in advance and configure traffic throttling rules in queries per second (QPS) mode. When the number of requests per second exceeds the configured threshold, excess requests are rejected automatically.

The following is a minimal configuration example of a Sentinel traffic throttling rule:

_, err = flow.LoadRules([]*flow.Rule{

{

Resource: "some-service", // Tracking point resource name

Count: 10, // If the threshold is 10, it is the second-level dimension statistics by default, which indicates that the request cannot exceed 10 times per second.

ControlBehavior: flow.Reject, // The control effect is direct rejection, the time interval between requests is not controlled, and the requests are not queued

},

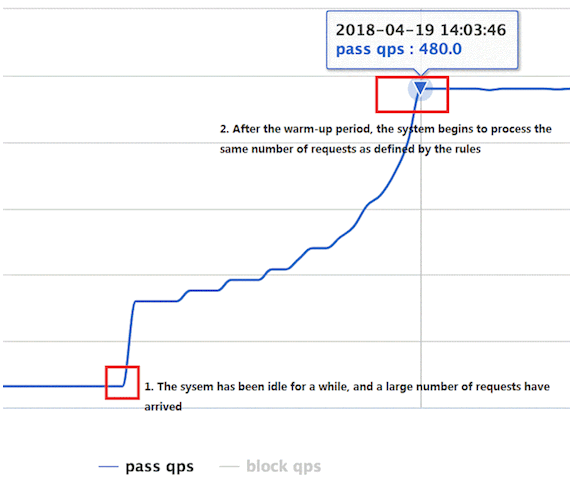

})If the system uses a low amount of resources for a long time and experiences a traffic spike, the system may immediately crash when the amount of resources used by the system is directly pushed to a high level. For example, for a newly started service, the database connection pool may not be initialized and the cache is also empty. The service can easily be crashed by the spikes in traffic. If the traditional traffic throttling mode is adopted without traffic smoothing and shifting limitation, the system is also at risk of being suspended. (For example, the concurrency is very high in a moment.) For this scenario, we can use the traffic throttling mode of Sentinel to control the traffic, making the traffic increase slowly and gradually to the upper threshold in a certain period of time, instead of allowing all traffic in an instant. At the same time, this is combined with the control effect of request interval control and queuing to prevent a large number of requests from being processed at the same time. This allows the cold system to warm up, preventing it from being overwhelmed.

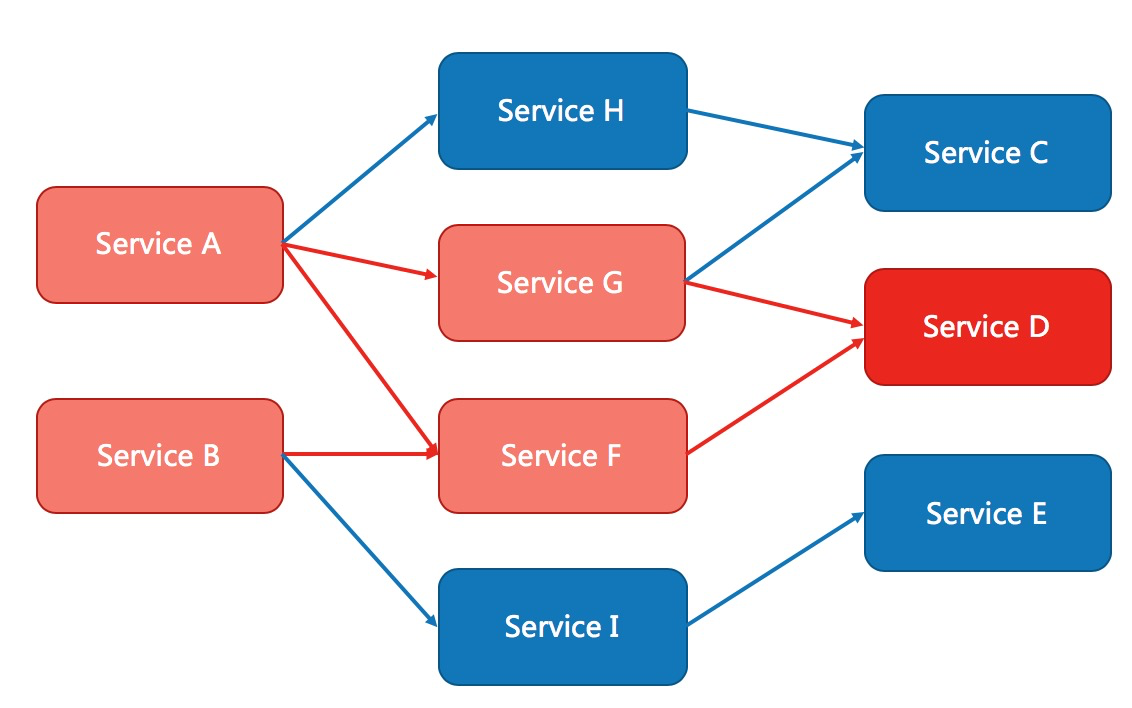

Services often call other modules, which may be other remote services, databases, or third-party APIs. For example, when you make a payment, the API provided by UnionPay may need to be called. When you query the price of an item, a database may need to be queried. However, the stability of the dependent service cannot be guaranteed. If the dependent service is unstable, the response time for the request will become longer, the response time for the method that calls the service will also become longer, and threads will build up. Ultimately, this may exhaust the thread pool of the business, resulting in service unavailability.

Modern microservice architectures are distributed and consist of many services that call each other, forming complex call chains. The problems above can be magnified along these chains: if one link becomes unstable, the failure can cascade until the entire chain is unavailable. Sentinel Go provides the following capabilities to prevent unavailability caused by unstable factors such as slow calls:

• Concurrency control (isolation module): A lightweight isolation method that limits the number of concurrent (that is, ongoing) calls, preventing too many slow calls from crowding out normal calls.

• Circuit breaking (circuit breaker module): Automatically breaks the circuit for unstable weak-dependency calls, temporarily cutting them off so that local instability does not bring down the whole system.
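Concurrency control can be sketched with a buffered channel used as a semaphore. The `concurrencyLimiter` type below is hypothetical and not part of Sentinel Go; it caps the number of in-flight calls and rejects extra calls immediately rather than queuing them, so slow calls cannot pile up without bound.

```go
package main

import (
	"fmt"
	"sync"
)

// concurrencyLimiter is a hypothetical concurrency isolator: the buffered
// channel's capacity is the maximum number of ongoing calls.
type concurrencyLimiter struct {
	slots chan struct{}
}

func newConcurrencyLimiter(max int) *concurrencyLimiter {
	return &concurrencyLimiter{slots: make(chan struct{}, max)}
}

// tryAcquire takes a slot without blocking; it returns false when the
// number of ongoing calls has already reached the limit.
func (c *concurrencyLimiter) tryAcquire() bool {
	select {
	case c.slots <- struct{}{}:
		return true
	default:
		return false
	}
}

// release frees a slot once the call has finished.
func (c *concurrencyLimiter) release() { <-c.slots }

func main() {
	lim := newConcurrencyLimiter(2)
	block := make(chan struct{})
	var wg sync.WaitGroup
	for i := 0; i < 2; i++ { // two slow calls occupy both slots
		if lim.tryAcquire() {
			wg.Add(1)
			go func() {
				defer wg.Done()
				defer lim.release()
				<-block // simulate a slow downstream call
			}()
		}
	}
	fmt.Println("third call admitted:", lim.tryAcquire()) // false: both slots are busy
	close(block)
	wg.Wait()
	fmt.Println("call after slow calls finished admitted:", lim.tryAcquire())
}
```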

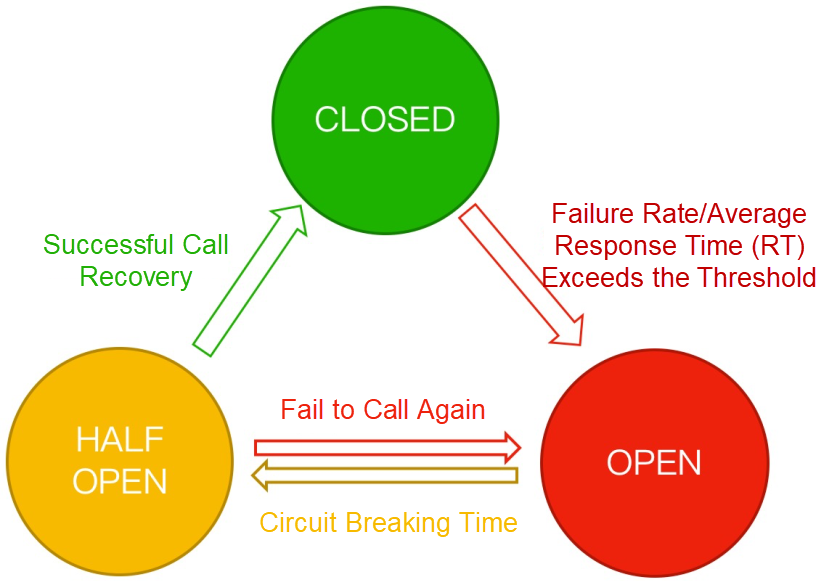

Sentinel Go's fault tolerance feature is based on the circuit breaker pattern: when a service becomes unstable (for example, its response time grows or its error rate rises), calls to it are temporarily cut off and retried after a while. This avoids adding further load to the unstable service and also protects its callers from crashing. Sentinel supports two kinds of circuit breaking strategies, one based on response time (slow call ratio) and one based on errors (error ratio or error count), which together protect against a wide range of unstable scenarios.

Note that the circuit breaker pattern generally applies to weak dependency calls, that is, calls whose circuit breaking does not affect the main business flow, and developers need to design the fallback logic and return value used after the circuit breaks. In addition, even when callers use circuit breaking, we still need to configure request timeouts on the HTTP or Remote Procedure Call (RPC) client for additional protection.

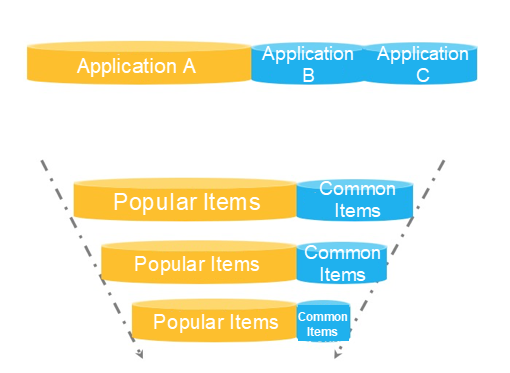

Traffic is random and unpredictable. To prevent system crashes caused by heavy traffic, we usually configure traffic throttling rules for core APIs. However, ordinary throttling rules are not enough in some scenarios. For example, many popular items receive extremely high transient page views (PVs) at peak hours during big promotions. Generally, we can predict some popular items and cache their information in advance, so that product information is returned quickly from the preloaded cache without accessing the database. However, a promotion may also produce unexpected popular items whose information was not cached beforehand. When PVs for these items surge, a large number of requests are forwarded to the database. As a result, database access slows down, the resource pools for ordinary item requests become occupied, and the system may crash. In this scenario, we can use Sentinel's hotspot parameter traffic throttling feature to automatically identify hotspot parameter values and control the QPS or concurrency of each value. This effectively prevents requests carrying hotspot parameters from occupying the resources needed by normal requests.

Let's look at another example. To limit how often each user calls an API, it is not a good idea to build the tracking point resource name from the API name plus the userId. Instead, we can use WithArgs(xxx) to pass the userId as a parameter to the API's tracking point and configure hotspot rules that limit the call frequency of each user. Sentinel also supports separate thresholds for specific parameter values, allowing more precise throttling. Like other rules, hotspot traffic throttling rules can be configured dynamically through dynamic data sources.

The RPC framework integration modules provided by Sentinel Go, such as those for Dubbo and gRPC, automatically attach the parameter list of each RPC call to the tracking point, so we can configure hotspot traffic throttling rules for the corresponding parameter positions. Note that, due to type system restrictions, throttling on specific parameter values currently supports only basic types and strings.

Sentinel Go hotspot traffic throttling is implemented with a cache eviction mechanism and a token bucket mechanism. Sentinel uses a cache eviction policy, such as Least Recently Used (LRU), Least Frequently Used (LFU), or Adaptive Replacement Cache (ARC), to identify hotspot parameters, and a token bucket to control the number of requests for each hotspot parameter value. The current Sentinel Go version uses the LRU policy to collect hotspot parameters, and some community contributors have submitted a pull request (PR) to optimize the eviction mechanism. In subsequent versions, the community will introduce more cache eviction policies to meet the requirements of different scenarios.

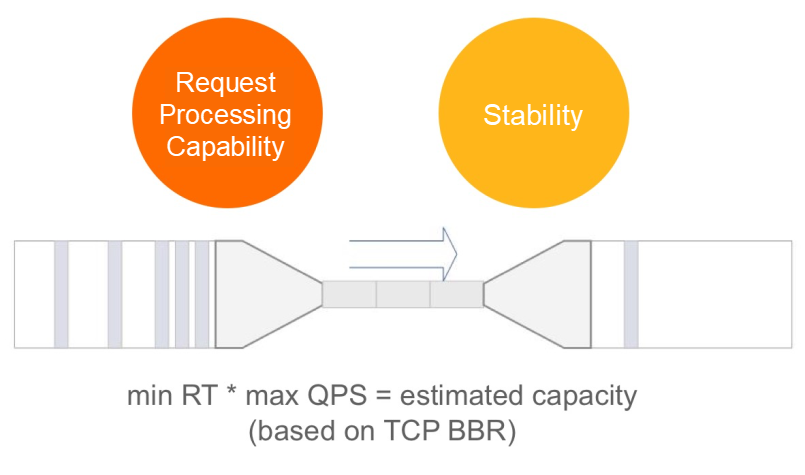

With the traffic protection scenarios above in place, are we all set? Not quite. In many cases, we cannot accurately estimate an interface's capacity in advance, or even predict the traffic characteristics of a core interface (such as whether it receives pulse traffic). Pre-configured rules may then fail to protect the current service node effectively. Sometimes we suddenly notice machine load and CPU usage climbing sharply but cannot quickly determine the cause, and by the time we do, it is too late to respond. What we need at that moment is to stop the bleeding quickly: first "save" the microservice on the verge of collapse through automatic protection measures. To address these problems, Sentinel Go provides adaptive system protection rules that combine system metrics and service capacity to adjust traffic dynamically.

Sentinel's adaptive system protection strategy borrows the idea of the TCP Bottleneck Bandwidth and Round-trip propagation time (BBR) congestion control algorithm and combines several monitoring metrics, such as system load, CPU usage, service entry QPS, response time, and concurrency. It balances inbound traffic against system load, keeping the system stable while maximizing throughput. System rules can serve as a backstop protection policy for the entire service to keep it from going down, and this approach is particularly effective in CPU-intensive scenarios. At the same time, the community continues to improve the effectiveness and applicability of adaptive traffic throttling by drawing on control theory and reinforcement learning. Future versions will also introduce more experimental adaptive traffic throttling policies to cover more availability scenarios.
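The BBR-inspired idea can be sketched as an admission check: when load exceeds a threshold, a request is admitted only while the number of in-flight requests stays within the estimated capacity, maxSuccessQPS * minRT (by Little's law, the concurrency the system sustains at its best throughput and lowest latency). The struct, field names, and exact comparison below are assumptions for illustration, not Sentinel Go's system rule API.

```go
package main

import "fmt"

// systemStats is a hypothetical snapshot of the metrics described above.
type systemStats struct {
	load1         float64 // 1-minute system load average
	concurrency   float64 // requests currently in flight at the entry
	maxSuccessQPS float64 // highest completed QPS observed recently
	minRTSeconds  float64 // lowest response time observed recently, in seconds
}

// admit lets every request through while load is below loadThreshold.
// Under high load, it admits a request only while in-flight requests stay
// within the estimated capacity maxSuccessQPS * minRT.
func admit(s systemStats, loadThreshold float64) bool {
	if s.load1 <= loadThreshold {
		return true // the system is not under pressure
	}
	capacity := s.maxSuccessQPS * s.minRTSeconds
	return s.concurrency <= 1 || s.concurrency <= capacity
}

func main() {
	stats := systemStats{load1: 8, concurrency: 30, maxSuccessQPS: 1000, minRTSeconds: 0.02}
	fmt.Println("admit under high load:", admit(stats, 4)) // capacity is about 20 in-flight requests
	stats.concurrency = 10
	fmt.Println("admit with fewer in-flight requests:", admit(stats, 4))
}
```

Because the check compares concurrency with what the system has recently proven it can complete, it throttles only the excess that would otherwise queue up, rather than cutting traffic to a fixed number.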

Cloud native is the most important direction in the evolution of Sentinel Go. During the development of Sentinel Go 1.0 GA, the community also explored scenarios such as Kubernetes and Service Mesh.

In a production environment, rule configurations are usually managed dynamically through a configuration center. In a Kubernetes cluster, we can naturally use Kubernetes CRDs to manage the Sentinel rules of applications. In Sentinel Go 1.0.0, the community provides CRD abstractions for the basic Sentinel rules and the corresponding data source implementation. You only need to import the CRD definition file for Sentinel rules and register the corresponding data source when integrating Sentinel. Then, write a YAML configuration in the format defined by the CRD and apply it to the corresponding namespace with kubectl to configure rules dynamically. The following is an example of flow control rules:

```yaml
apiVersion: datasource.sentinel.io/v1alpha1
kind: FlowRules
metadata:
  name: foo-sentinel-flow-rules
spec:
  rules:
    - resource: simple-resource
      threshold: 500
    - resource: something-to-smooth
      threshold: 100
      controlBehavior: Throttling
      maxQueueingTimeMs: 500
    - resource: something-to-warmup
      threshold: 200
      tokenCalculateStrategy: WarmUp
      controlBehavior: Reject
      warmUpPeriodSec: 30
      warmUpColdFactor: 3
```

Kubernetes CRD data-source module: https://github.com/sentinel-group/sentinel-go-datasource-k8s-crd

In the future, the community will further improve the definition of CRD files and discuss the abstraction of standards related to high-availability protection with other communities.

Service Mesh is one of the trends in the evolution of microservices toward the cloud native era. In the Service Mesh architecture, some service governance and policy control capabilities gradually move down to the data plane. Last year, the Sentinel community made some attempts in Sentinel 1.7.0 (Java): it provided Sentinel RLS token server, an implementation of the Envoy global rate limiting gRPC service built on the Sentinel token server, to bring cluster flow control to Envoy-based service meshes. This year, with the launch of Sentinel Go, the community has collaborated and integrated with more service mesh products. Working with the Ant Financial MOSN community, we added native support for Sentinel Go flow control in the MOSN mesh, which is already in use at Ant Financial. The community is also exploring more general solutions. For example, it uses the WebAssembly (WASM) extension mechanism of Istio/Envoy to implement a Sentinel plug-in, so that an Istio/Envoy Service Mesh can protect the stability of services across the entire cluster with Sentinel's native flow control and adaptive protection capabilities.

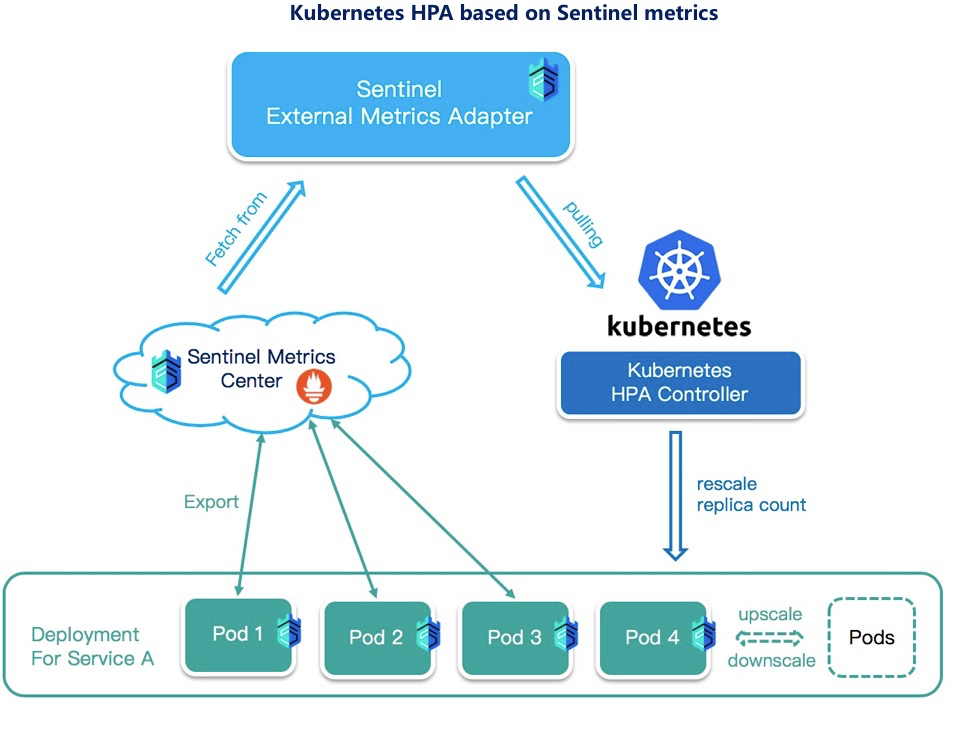

There are many ways to ensure service stability. Besides "controlling" traffic with various rules, "elasticity" is another approach. For applications deployed in Kubernetes, you can use Kubernetes HPA to scale services horizontally. HPA supports system metrics by default and also supports custom metrics. We have already combined Application High Availability Service (AHAS) Sentinel with Alibaba Cloud Container Service for Kubernetes to support auto scaling based on the average QPS and response time of a service. The community is also working in this area, exposing Sentinel's service-level metrics (including throughput, rejected traffic, and response time) through standard methods such as Prometheus or OpenTelemetry and adapting them to Kubernetes HPA.
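On the HPA side, scaling follows the rule documented for Kubernetes: desired replicas = ceil(current replicas * current metric / target metric), with no change when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below assumes the metric is the average QPS per pod exported from Sentinel; the function name and parameters are hypothetical.

```go
package main

import (
	"fmt"
	"math"
)

// desiredReplicas applies the HPA scaling rule to an average-QPS-per-pod
// metric, skipping changes that fall within the tolerance band.
func desiredReplicas(current int, currentQPS, targetQPS, tolerance float64) int {
	ratio := currentQPS / targetQPS
	if math.Abs(ratio-1.0) <= tolerance {
		return current // close enough to the target: do not scale
	}
	return int(math.Ceil(float64(current) * ratio))
}

func main() {
	// 4 pods averaging 150 QPS each, against a target of 100 QPS per pod.
	fmt.Println(desiredReplicas(4, 150, 100, 0.1))
}
```

The tolerance band keeps small metric fluctuations from causing constant scale-up and scale-down churn.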

Of course, the Sentinel-based elastic solution is not a panacea. It only applies to specific scenarios, such as Serverless services that start quickly. It cannot solve stability problems for services that start slowly, or in scenarios where the bottleneck is not the service's own capacity, for example when a dependent database lacks capacity. In such cases, scaling out may even make things worse.

Having walked through these high-availability protection scenarios and Sentinel's explorations in the cloud native field, you should now have a fresh understanding of fault tolerance and stability for microservices. You are welcome to connect your microservices to Sentinel for high-availability protection and fault tolerance to keep your services stable. The release of Sentinel Go 1.0 GA would not have been possible without the community, and we thank everyone who contributed.

During the development of this version, we welcomed two new committers, @sanxu0325 and @luckyxiaoqiang, who contributed the warm-up traffic throttling mode, the Nacos dynamic data source, and a series of feature improvements and performance optimizations. They are also very active in answering community questions and reviewing code. Congratulations! In future versions, the community will continue to evolve toward cloud native and adaptive, intelligent protection. Everyone is welcome to join the contributor group, take part in the future evolution of Sentinel, and help create new possibilities. We welcome contributions in any form, including but not limited to:

• Bug fixes

• New features/improvements

• Dashboards

• Documents/websites

• Test cases

Developers can pick interesting issues from the good first issue list on GitHub to join the discussion and contribute. We pay close attention to developers who contribute actively, and core contributors will be nominated as committers to lead the development of the community together. You are welcome to share your questions and suggestions through GitHub issues or Gitter.

• Sentinel Go repo: https://github.com/alibaba/sentinel-golang

• Enterprise users are welcome to register: https://github.com/alibaba/Sentinel/issues/18

• Alibaba Cloud Sentinel Enterprise Edition: https://ahas.console.aliyun.com/
