By Xie Yuning (Yuqi)

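This article describes how to observe DNS failures and diagnose hard-to-find DNS problems in Kubernetes clusters. It covers how DNS resolution works in a cluster, the common causes of DNS failures, how to observe exceptions on the CoreDNS server and on the client side, and how to handle a failure in a production environment.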

DNS is everywhere in Kubernetes clusters:

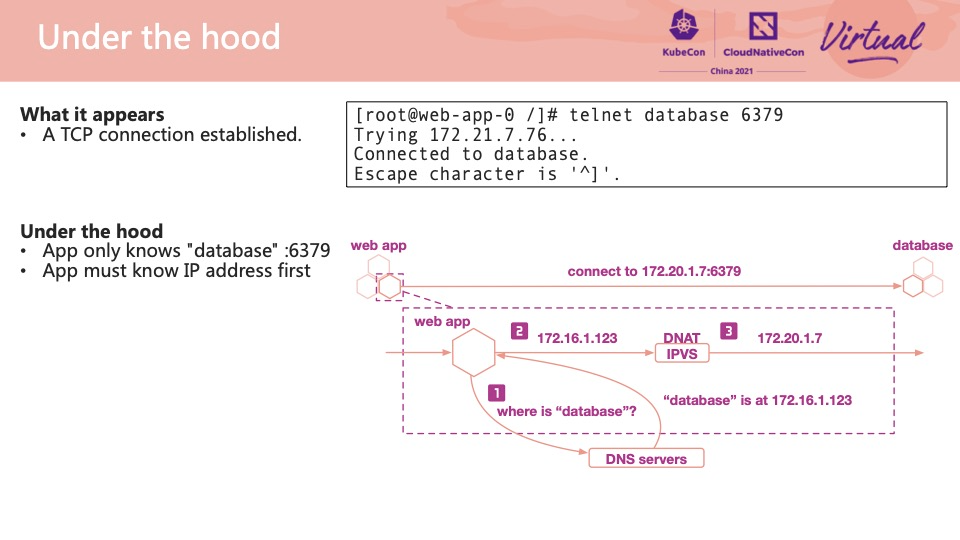

Let's take a web application that accesses a database as an example. On the surface, the application simply establishes a database connection, but many things happen under the hood:

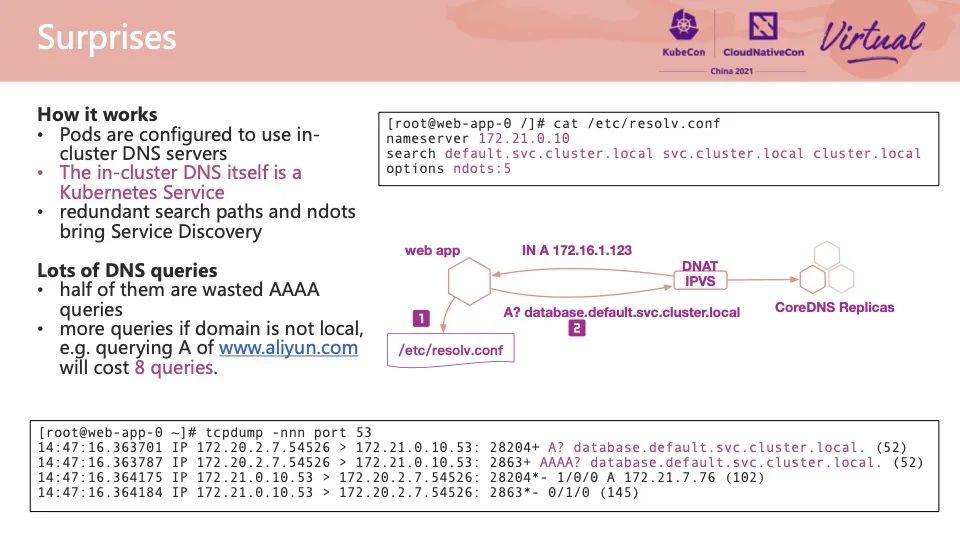

How does the web application find a DNS server? The /etc/resolv.conf file in the Pod container configures a DNS server with the IP address 172.21.0.10. This address is the ClusterIP of the kube-dns Service in the kube-system namespace, and CoreDNS provides DNS resolution for the cluster through this Service. As the figure shows, multiple CoreDNS replicas are deployed in the cluster. The replicas are equivalent peers, and client requests are load-balanced across them by IPVS.

In addition to the DNS server address, /etc/resolv.conf configures a search path that consists of several domain name suffixes. When an application requests a domain name that contains fewer dots than the ndots value, the resolver appends each suffix in the search path to form a fully qualified domain name (FQDN) before it sends the query. In this example, the web application requests the name database. The name contains 0 dots, which is less than the configured ndots value of 5, so the resolver appends the suffix default.svc.cluster.local and queries the FQDN database.default.svc.cluster.local. Configure the search path and ndots with care: an unsuitable configuration can multiply the time that applications spend resolving domain names.
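The expansion described above can be sketched in a few lines of Python. This is a simplified model of resolver behavior, not the code of any particular C library. The function names `parse_resolv_conf` and `candidate_names` are hypothetical, and the file content is assumed from the values mentioned in this article.

```python
def parse_resolv_conf(text):
    """Extract nameservers, search suffixes, and ndots from resolv.conf text."""
    config = {"nameservers": [], "search": [], "ndots": 1}  # resolver default for ndots is 1
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        keyword, *values = line.split()
        if keyword == "nameserver":
            config["nameservers"].extend(values)
        elif keyword == "search":
            config["search"] = values
        elif keyword == "options":
            for option in values:
                if option.startswith("ndots:"):
                    config["ndots"] = int(option.split(":", 1)[1])
    return config


def candidate_names(name, search, ndots):
    """Return the names a resolver would try, in order (simplified model)."""
    if name.endswith("."):
        return [name]  # a trailing dot marks the name as already fully qualified
    expanded = [f"{name}.{suffix}" for suffix in search]
    if name.count(".") < ndots:
        return expanded + [name]  # search path first, the name as-is last
    return [name] + expanded      # enough dots: the name as-is first


# Assumed file content, built from the values mentioned in this article.
RESOLV_CONF = """\
nameserver 172.21.0.10
search default.svc.cluster.local svc.cluster.local cluster.local
options ndots:5
"""

config = parse_resolv_conf(RESOLV_CONF)
names = candidate_names("database", config["search"], config["ndots"])
print(names[0])  # database.default.svc.cluster.local
```

The first candidate already exists as a Service name, so in this model the lookup for database succeeds on the first name tried.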

In a real Kubernetes cluster, applications often access external domain names. Take www.aliyun.com as an example. The name contains only two dots, so to resolve it the application requests www.aliyun.com.default.svc.cluster.local, www.aliyun.com.svc.cluster.local, www.aliyun.com.cluster.local, and www.aliyun.com in sequence. The first three names do not exist, so CoreDNS returns NXDOMAIN (the domain name does not exist) for each of them. Only the last request returns the correct result (NOERROR).

In addition, most modern applications support IPv6 and request A (IPv4) and AAAA (IPv6) records concurrently during resolution. Resolving one domain name outside the cluster therefore often takes eight DNS queries in a Pod container (four candidate names, two record types each), which multiplies the load on DNS resolution.
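The query amplification can be estimated with a small calculation. The sketch below assumes the name exists only outside the cluster, so every search suffix fails before the name itself resolves. The function name `query_count` is hypothetical, and the search list is the one used in this article's example.

```python
SEARCH = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]


def query_count(name, search, ndots, record_types=("A", "AAAA")):
    """Estimate the DNS queries needed to resolve an external name.

    Assumption: the name only exists outside the cluster, so every
    search-path candidate returns NXDOMAIN.
    """
    if name.endswith(".") or name.count(".") >= ndots:
        names_tried = 1                # the name is tried as-is first and resolves
    else:
        names_tried = len(search) + 1  # all suffixes fail, then the name as-is
    return names_tried * len(record_types)


print(query_count("www.aliyun.com", SEARCH, ndots=5))   # 8
print(query_count("www.aliyun.com.", SEARCH, ndots=5))  # 2
```

The second call shows why a trailing dot (a fully qualified name) reduces the load in this model: the search path is skipped entirely.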

DNS has a long history, and the protocol is simple and reliable. However, as the preceding introduction shows, DNS service discovery in Kubernetes is not that simple.

During our daily operation and maintenance and ticket processing, we found that DNS failures often occur, and the reasons are different each time.

The QPS that a CoreDNS server can sustain is positively correlated with the CPU available to it. When the CPU becomes a bottleneck, for example during business peak hours, CoreDNS responds to DNS requests with delays or resolution failures. Therefore, keep the number of replicas at a suitable level. We recommend keeping the peak CPU usage of each replica below one core. If the peak CPU usage of a replica exceeds one core during peak hours, we recommend scaling out CoreDNS. By default, the ratio of CoreDNS Pods to nodes in a cluster is 1:8, which means that one CoreDNS Pod is created for every eight nodes added to the cluster.
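As a rough illustration of these two sizing rules, the sketch below combines the 1:8 node ratio with the one-core-per-replica guideline. The function name `suggested_replicas`, the minimum of two replicas, and the assumption that CPU demand spreads evenly across replicas are not from the recommendations above. Treat the result as a starting point rather than a sizing formula.

```python
import math


def suggested_replicas(node_count, current_replicas, peak_cpu_cores,
                       nodes_per_replica=8, cpu_per_replica=1.0, min_replicas=2):
    """Suggest a CoreDNS replica count from node count and observed peak CPU.

    peak_cpu_cores is the peak CPU usage of a single replica, in cores.
    min_replicas=2 is an assumption made here for availability.
    """
    by_nodes = math.ceil(node_count / nodes_per_replica)
    # Total peak demand, assuming load is spread evenly across replicas.
    total_demand = current_replicas * peak_cpu_cores
    by_cpu = math.ceil(total_demand / cpu_per_replica)
    return max(min_replicas, by_nodes, by_cpu)


# 24 nodes, 4 replicas that each peak at 1.5 cores: the CPU rule dominates.
print(suggested_replicas(node_count=24, current_replicas=4, peak_cpu_cores=1.5))  # 6
```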

Another common exception is a full Conntrack table. By default, each TCP and UDP connection in Linux occupies one Conntrack table entry, and the number of entries is limited. When a service issues a large number of short-lived connections, the table fills up easily and the kernel reports that the Conntrack table is full. To handle this exception, enlarge the Conntrack table or use long-lived connections wherever possible. Besides the Conntrack limit, the ARP table and socket limits can cause similar problems.
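One way to watch for this condition is to compare the current number of Conntrack entries with the configured maximum. The sketch below reads the two counters that Linux exposes under /proc/sys/net/netfilter when the nf_conntrack module is loaded. The function name `conntrack_usage` is hypothetical, and the directory is a parameter so that the function can be pointed at a different location.

```python
from pathlib import Path


def conntrack_usage(proc_dir="/proc/sys/net/netfilter"):
    """Return (entries in use, table limit, usage ratio) for the Conntrack table."""
    base = Path(proc_dir)
    count = int((base / "nf_conntrack_count").read_text())
    limit = int((base / "nf_conntrack_max").read_text())
    return count, limit, count / limit
```

On a node, calling `conntrack_usage()` and alerting when the ratio stays above a chosen threshold (for example 0.8, an arbitrary value) gives an early warning before packets are dropped.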

Some failures result from source port conflicts. In high-QPS scenarios, a client often reuses the same source port for DNS requests. For example, when Alpine is used as the container base image, its built-in musl runtime library sends the A and AAAA queries concurrently from the same source port. This concurrency happens to trigger a Conntrack race condition in the kernel, which causes the DNS packet that arrives later to be dropped.

Because DNS mostly runs over UDP, which has no built-in retransmission, it is particularly sensitive to packet loss in the kernel network stack. Another example is the IPVS scenario. When the cluster uses IPVS for load balancing and a CoreDNS replica restarts or the replicas are scaled, a request that reuses a source port used just a few minutes earlier is dropped, which delays domain name resolution. Both of the preceding source port conflicts can be resolved by upgrading the kernel.

Earlier versions of CoreDNS have many problems, such as panics and restarts when the connection to the API server is lost and crashes in the AutoPath plug-in, which lead to occasional resolution failures. If the API server stays unavailable, domain names in the entire cluster may become unresolvable. We recommend upgrading CoreDNS to the latest stable version to avoid these issues.

We now know the common causes of DNS failures in Kubernetes clusters, but how can we detect them? Fortunately, CoreDNS is a DNS server built around plug-ins, and many of them provide observability. The following section describes several commonly used plug-ins and how to use them.

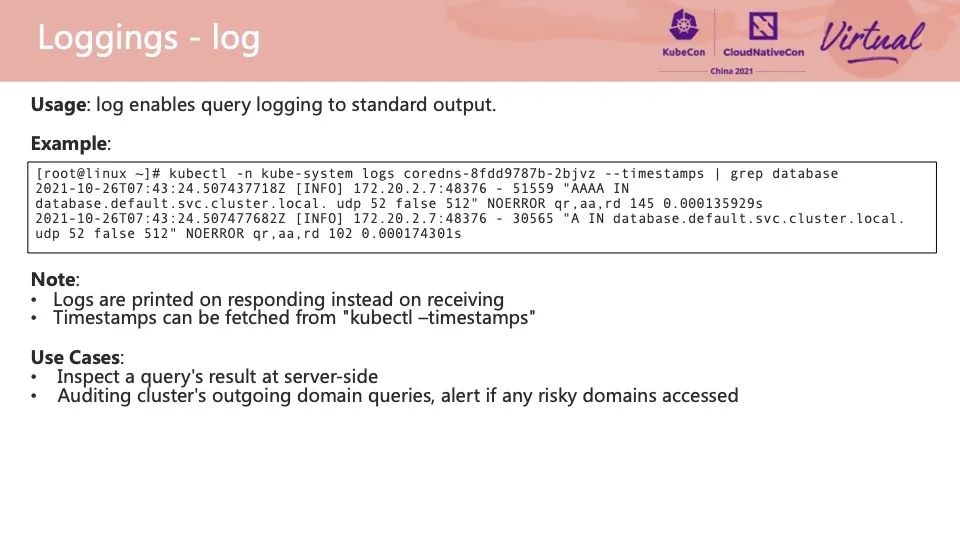

With the Log plug-in enabled, every time CoreDNS receives a request and completes the response, it prints one log line that includes the requested domain name, the source IP address, and the response status code. These logs are similar to the access log of Nginx. They help us quickly locate where a problem occurs and narrow down its scope.
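Because each log line has a regular structure, it can be parsed and aggregated with a short script. The sketch below assumes the default output format of the Log plug-in. The two sample lines are illustrative and were not captured from a real cluster, and the names `LOG_LINE` and `parse_log_line` are hypothetical.

```python
import re
from collections import Counter

# Assumed layout: client:port - id "type class name proto size do bufsize" rcode flags rsize duration
LOG_LINE = re.compile(
    r'(?P<client_ip>\S+):(?P<client_port>\d+) - (?P<id>\d+) '
    r'"(?P<qtype>\S+) (?P<qclass>\S+) (?P<name>\S+) (?P<proto>\S+) [^"]*" '
    r'(?P<rcode>\S+) (?P<flags>\S+) (?P<rsize>\d+) (?P<duration>[\d.]+)s'
)


def parse_log_line(line):
    """Return the fields of one query log line as a dict, or None if it does not match."""
    match = LOG_LINE.search(line)
    return match.groupdict() if match else None


# Illustrative sample lines.
SAMPLE = [
    '[INFO] 172.20.1.15:48251 - 4210 "A IN www.aliyun.com.default.svc.cluster.local. '
    'udp 63 false 512" NXDOMAIN qr,aa,rd 156 0.000211s',
    '[INFO] 172.20.1.15:48251 - 4211 "A IN www.aliyun.com. '
    'udp 32 false 512" NOERROR qr,rd,ra 62 0.003s',
    '[INFO] plugin/reload: Running configuration unchanged',  # not a query log line
]

records = [r for r in map(parse_log_line, SAMPLE) if r is not None]
rcode_counts = Counter(r["rcode"] for r in records)
print(dict(rcode_counts))
```

Grouping the same records by `client_ip` or `name` instead of `rcode` shows which Pod or which domain name produces the failures.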

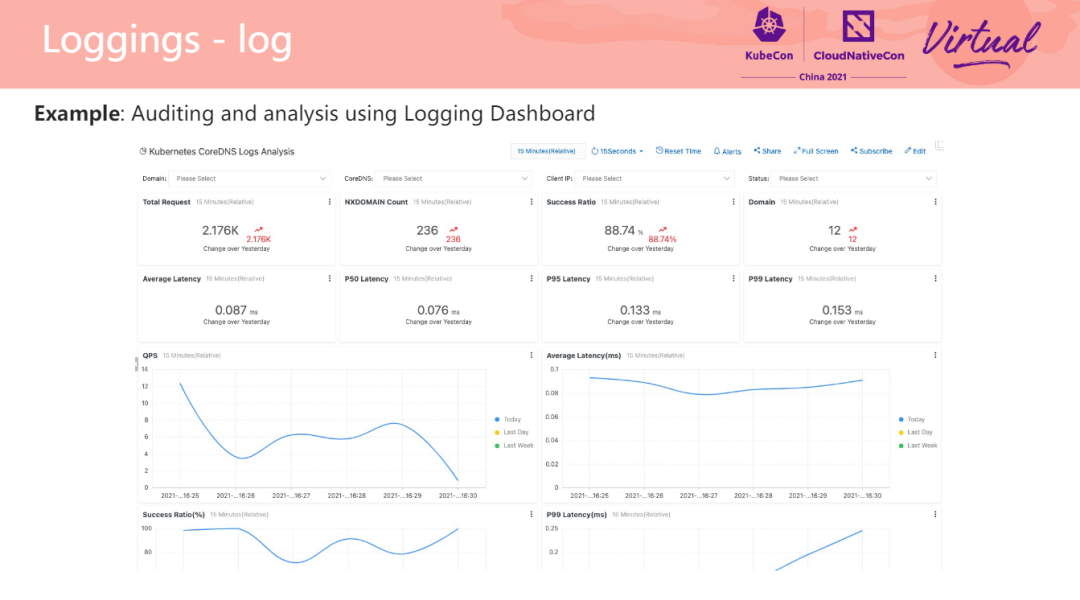

In addition, we can upload the logs to the cloud to persist them and draw trend charts. We can even audit domain name access, for example to identify that a Pod in the cluster has accessed a prohibited domain name. In the example on the right, a dashboard in Log Service (SLS) visualizes the daily trend and the CoreDNS response status codes to facilitate problem analysis. In addition to visualization, the log dashboard supports alert rule configuration [1].

The Dump plug-in is similar to the Log plug-in and also prints one log line per request. The difference is that it prints the line as soon as CoreDNS receives the client request. If you have confirmed that a resolution request reached CoreDNS but the Log plug-in prints nothing, enable the Dump plug-in for troubleshooting.

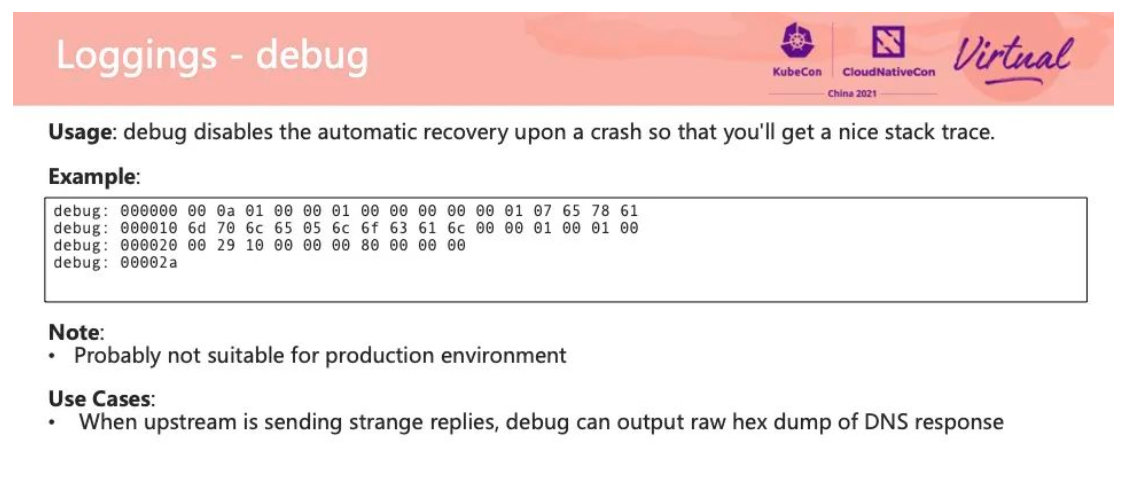

The Debug plug-in is used to troubleshoot errors. When the network link is abnormal or the upstream DNS server returns an unexpected response, CoreDNS may receive a malformed message. In this case, the Debug plug-in prints the complete message in hexadecimal. You can then analyze the message with a tool such as Wireshark to see what is wrong with it.

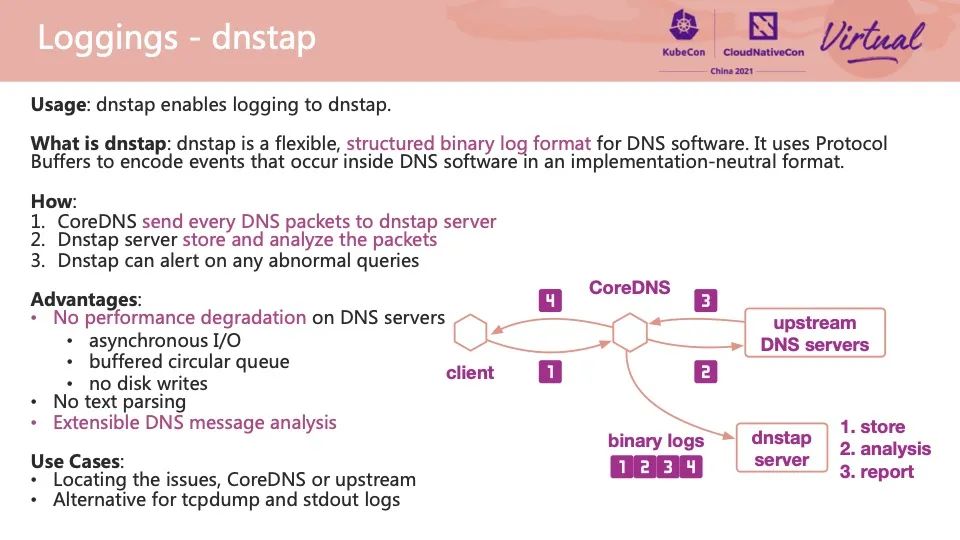

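DNSTap is an interesting plug-in. DNSTap is a flexible binary log protocol designed specifically for DNS messages. The figure on the left shows four kinds of messages: the query from the client to CoreDNS (ClientQuery), the query that CoreDNS forwards to the upstream (ForwarderQuery), the response from the upstream (ForwarderResponse), and the response from CoreDNS to the client (ClientResponse).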

The DNSTap plug-in of CoreDNS can deliver all four kinds of messages to a remote DNSTap server as binary logs. The DNSTap server can then store and analyze the messages and report exceptions. DNSTap sends these messages with asynchronous I/O, which avoids writing logs to the local disk and deserializing them in later processing. This makes message collection and exception diagnosis efficient with low performance overhead.

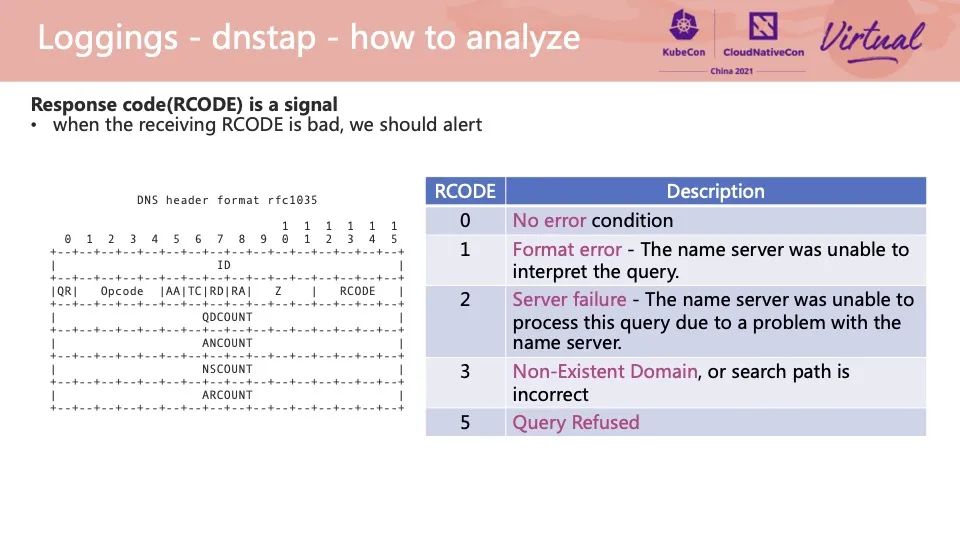

How do we diagnose message exceptions in the DNSTap server? The DNSTap server can parse the original DNS message and extract the response status code from the RCODE field shown in the figure. Different status codes indicate different types of exceptions. During troubleshooting, you can locate the problem by using the table on the right.
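To make the RCODE extraction concrete, the sketch below decodes the fixed 12-byte header of a raw DNS message as defined in RFC 1035. The RCODE occupies the low four bits of the flags word. The sample bytes are hand-built for illustration, and the function name `parse_dns_header` is hypothetical.

```python
import struct

# Basic response codes defined in RFC 1035.
RCODE_NAMES = {0: "NOERROR", 1: "FORMERR", 2: "SERVFAIL",
               3: "NXDOMAIN", 4: "NOTIMP", 5: "REFUSED"}


def parse_dns_header(message: bytes):
    """Decode the fixed 12-byte DNS header at the start of a raw message."""
    if len(message) < 12:
        raise ValueError("a DNS message must be at least 12 bytes long")
    # Six unsigned 16-bit fields in network byte order.
    msg_id, flags, qdcount, ancount, _nscount, _arcount = struct.unpack("!6H", message[:12])
    rcode = flags & 0x000F
    return {
        "id": msg_id,
        "is_response": bool(flags & 0x8000),  # QR bit
        "rcode": RCODE_NAMES.get(rcode, f"RCODE{rcode}"),
        "questions": qdcount,
        "answers": ancount,
    }


# Hand-built header: ID 0x1a2b, flags 0x8183 (response, RD, RA, RCODE 3), one question.
header = parse_dns_header(bytes.fromhex("1a2b81830001000000000000"))
print(header["rcode"])  # NXDOMAIN
```

The same function works on the hexadecimal output of the Debug plug-in once the hex string is converted to bytes.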

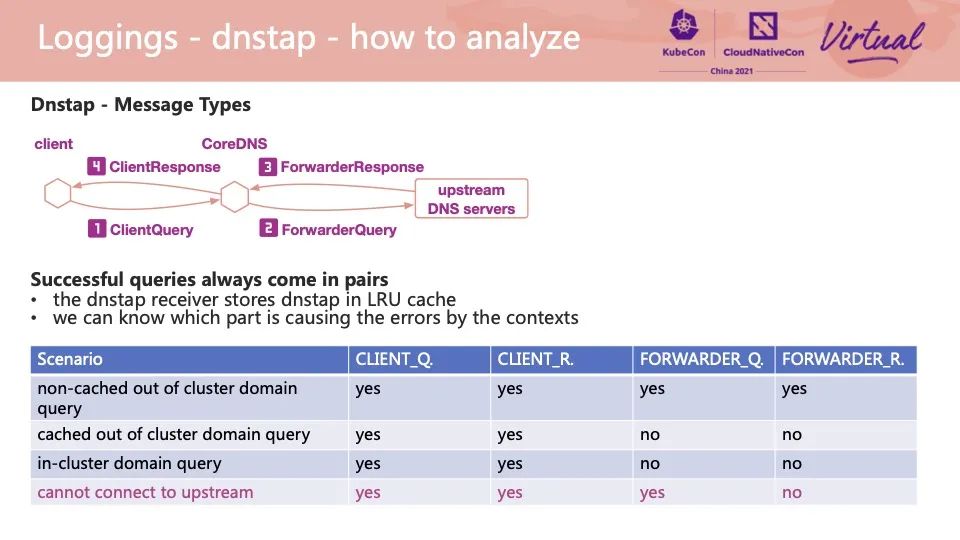

In addition to the RCODE field, we can detect some exceptions based on the DNSTap message types. If only ClientQuery and ForwarderQuery messages are recorded for a given DNS message ID, the upstream did not respond to the DNS request, so CoreDNS did not respond to the client. Different combinations of message types point to different failure scenarios. Alibaba Cloud ACK provides an out-of-the-box solution based on this idea, which can quickly narrow down some problems [2].
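The combination check can be sketched as a grouping step over the collected messages. The code below is only an illustration of the idea and is not the ACK solution. It assumes that a DNSTap server has already decoded each message into a (message ID, message type) pair, and it uses the type names ClientQuery, ForwarderQuery, ForwarderResponse, and ClientResponse. Combinations that the article does not discuss are left unclassified rather than guessed.

```python
from collections import defaultdict

ALL_TYPES = {"ClientQuery", "ForwarderQuery", "ForwarderResponse", "ClientResponse"}


def diagnose(events):
    """Map each DNS message ID to a diagnosis based on the message types seen for it."""
    seen = defaultdict(set)
    for message_id, message_type in events:
        seen[message_id].add(message_type)

    diagnosis = {}
    for message_id, types in seen.items():
        if types == ALL_TYPES:
            diagnosis[message_id] = "complete exchange"
        elif types == {"ClientQuery", "ForwarderQuery"}:
            diagnosis[message_id] = "upstream did not respond"
        else:
            diagnosis[message_id] = "unclassified: " + ", ".join(sorted(types))
    return diagnosis


# Illustrative events: ID 101 completes, ID 102 never gets an upstream answer.
events = [
    (101, "ClientQuery"), (101, "ForwarderQuery"),
    (101, "ForwarderResponse"), (101, "ClientResponse"),
    (102, "ClientQuery"), (102, "ForwarderQuery"),
]
result = diagnose(events)
print(result[102])  # upstream did not respond
```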

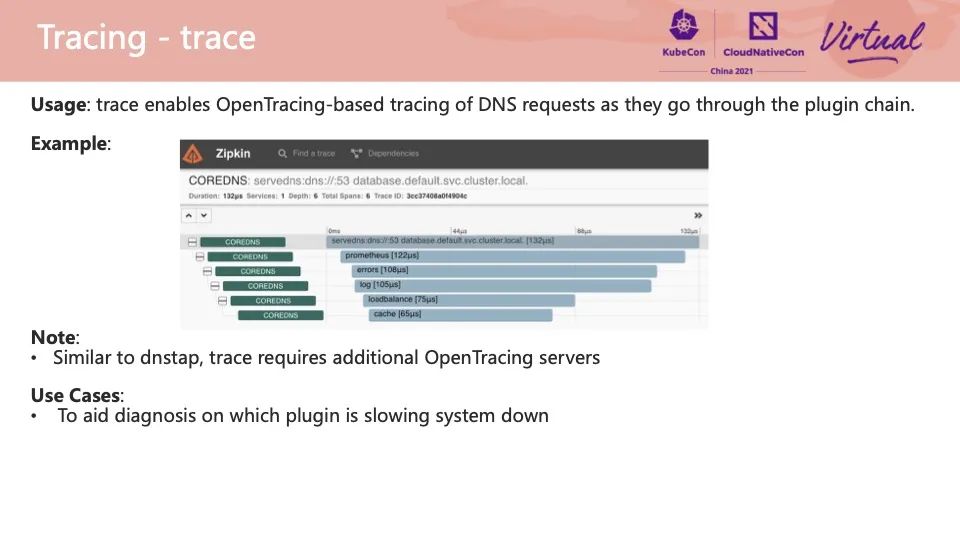

The Trace plug-in implements OpenTracing support. It records, in an external OpenTracing server, when a request is received and when each plug-in starts and finishes processing it. When you suspect that a DNS request takes too long and want to locate where the delay occurs, this plug-in helps you find the bottleneck quickly.

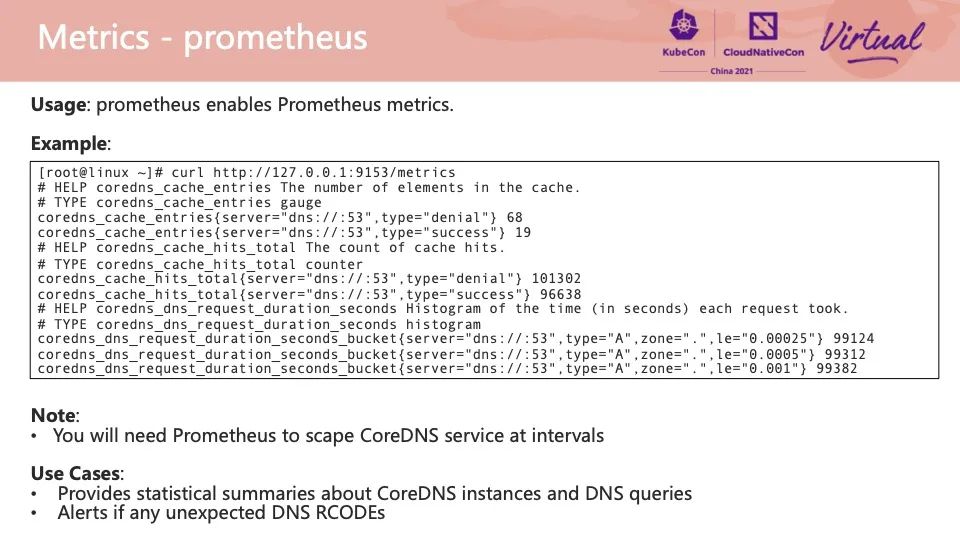

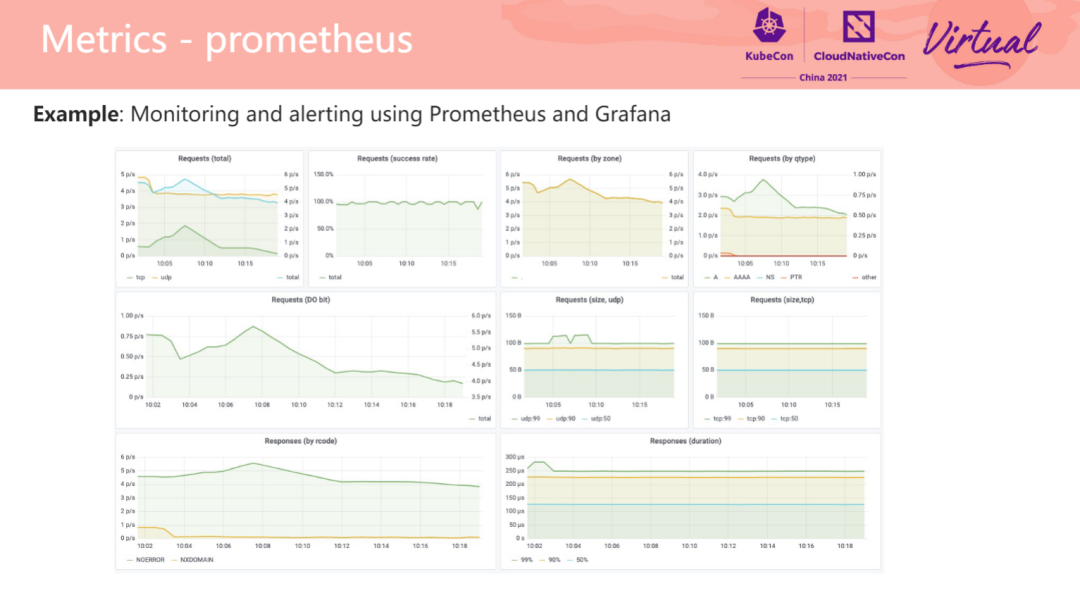

The Prometheus plug-in exposes various runtime metrics of the CoreDNS server. Generally, we add the metrics endpoint to the scrape targets of Prometheus, which then collects the CoreDNS metrics at regular intervals and stores them in its time series database. You can display these metrics on a Grafana dashboard and observe the trends of the different RCODE status codes and of the request QPS. Setting reasonable thresholds on these trends provides real-time alerts. The figure on the right is the CoreDNS monitoring dashboard that the ARMS Prometheus service configures by default in an Alibaba Cloud ACK cluster.
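As an example of turning these metrics into an alert condition, the sketch below computes the share of each RCODE from the text that a metrics endpoint returns. It assumes the counter is named `coredns_dns_responses_total` and carries an `rcode` label, which should be checked against the CoreDNS version in use. The sample values are invented, and the function name `rcode_ratios` is hypothetical.

```python
import re
from collections import defaultdict

# Assumed metric name and label. Verify both against your CoreDNS version.
SAMPLE_LINE = re.compile(r'^coredns_dns_responses_total\{([^}]*)\}\s+(\S+)$', re.MULTILINE)
RCODE_LABEL = re.compile(r'rcode="([^"]*)"')


def rcode_ratios(metrics_text):
    """Return {rcode: share of all responses} from Prometheus exposition text."""
    totals = defaultdict(float)
    for labels, value in SAMPLE_LINE.findall(metrics_text):
        label = RCODE_LABEL.search(labels)
        if label:
            totals[label.group(1)] += float(value)  # sum across other labels
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {rcode: count / grand_total for rcode, count in totals.items()}


# Invented sample values for illustration.
METRICS = """\
# TYPE coredns_dns_responses_total counter
coredns_dns_responses_total{plugin="forward",rcode="NOERROR",server="dns://:53",zone="."} 9000
coredns_dns_responses_total{plugin="forward",rcode="SERVFAIL",server="dns://:53",zone="."} 500
coredns_dns_responses_total{plugin="kubernetes",rcode="NOERROR",server="dns://:53",zone="."} 500
"""

ratios = rcode_ratios(METRICS)
print(ratios["SERVFAIL"])  # 0.05
```

In practice, the same ratio is usually computed in Prometheus itself over a time window, because raw counters only ever increase. The script form is mainly useful for a quick check against a single scrape.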

Many DNS exceptions occur on the client side, and CoreDNS does not receive the request at all. The cause of this kind of problem is difficult to locate. In addition to observing kernel logs and CPU load, we often capture packets with tcpdump to analyze the path of the messages and where they are lost. When exceptions occur only rarely, capturing packets with tcpdump long enough to catch one becomes expensive.

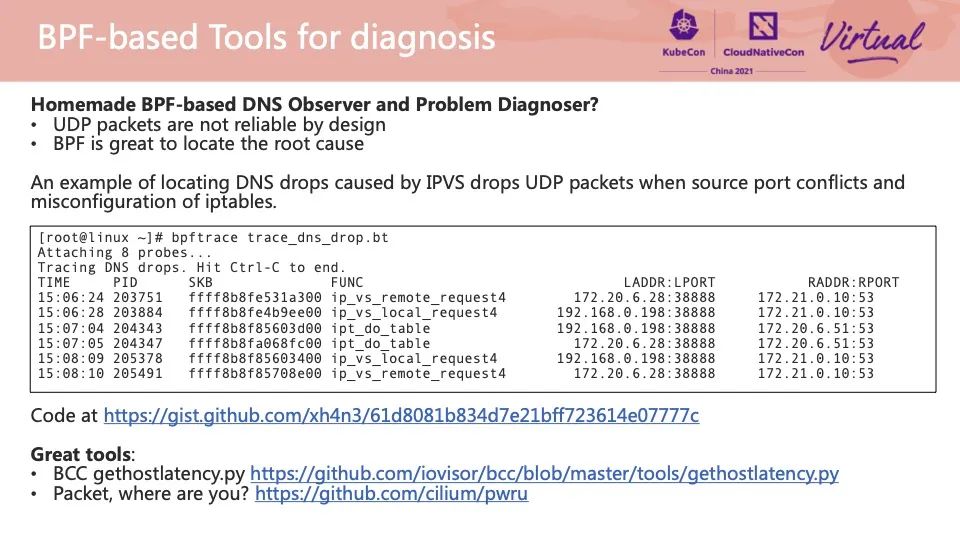

The good news is that we can use BPF tools to observe some common packet exceptions on the kernel side. The figure shows trace_dns_drops.bt [3], a sample BPF tool. After the tool starts, it monitors two kernel functions in which DNS packets are commonly dropped, determines from each function's return value whether the packet is dropped, and if so prints the source IP address and source port of the packet. This example simulates two scenarios: packet loss caused by a source port conflict when the IPVS backend changes, and DNS packets dropped by incorrect iptables rules. Beyond the source IP address and source port, the tool can be modified to extract DNS fields from the packet, such as the record type and the requested domain name.

BPF technology helps us trace the path of a packet through the kernel. By extracting the timestamp, the packet 5-tuple, and the kernel functions that the packet passes through, we can find the specific cause of packet loss and optimize the configuration accordingly. The open-source community also offers useful tools for locating problems, such as gethostlatency.py from BCC and the pwru project from Cilium.

We have described how to observe DNS server or client-side exceptions, but what should we do when there is a DNS failure in the real online environment?

In the preceding steps, from collecting information to verifying conjecture, we often need to ask ourselves several questions:

The answers to these questions point to a large number of possible causes. To assist in troubleshooting, we provide a guide to troubleshooting Kubernetes domain name resolution exceptions [4] in the Alibaba Cloud ACK product documentation.

This article introduced the common DNS exceptions on the CoreDNS server and on the client side, their causes, the ways to observe them, and the process for handling failures. We hope it helps with problem diagnosis. The DNS service is important for Kubernetes clusters. In addition to observing exceptions, we should consider the stability of the DNS service from the start of architecture design and adopt best practices such as a local DNS cache. Thank you!

[1] DNS Best Practices

https://www.alibabacloud.com/help/en/doc-detail/172339.html

[2] PPT Download Address for This Article

https://kccncosschn21.sched.com/event/pcag/huan-daepkubernetes-zhong-shi-dns-dun-zha-qi-tizhong-best-practice-dns-failure-observability-and-diagnosis-in-kubernetes-yuning-xie-alibaba

[3] Linux /etc/resolv.conf Configuration Documentation

https://man7.org/linux/man-pages/man5/resolv.conf.5.html

[1] Log Dashboard

https://www.alibabacloud.com/help/en/doc-detail/213461.html

[2] Delimitation of the Problem

https://www.alibabacloud.com/help/en/doc-detail/268638.html

[3] BPF Sample Tool trace_dns_drops.bt

https://gist.github.com/xh4n3/61d8081b834d7e21bff723614e07777c

[4] Exception Troubleshooting

https://www.alibabacloud.com/help/en/doc-detail/404754.html
