By Huming

This article examines the concept of a stream, compares several common stream libraries in Java, and introduces in detail the Java 22 Stream Gather API. It also briefly discusses how virtual threads can simplify the implementation of the Stream mapConcurrent operator.

Since its introduction in Java 8 (1.8), Java Stream has rapidly become a go-to tool for developers and a frequent topic in daily work. However, its built-in operator set is small, so developers regularly run into its limits and turn to libraries with a richer set of operators. Because Stream is part of the Java standard library, Oracle's Java architects have been exploring how to meet growing user needs while keeping the language and its libraries maintainable. The Stream Gather API, introduced as a preview feature in Java 22, improves this situation significantly. Based on my own experience, I would like to share some insights into it.

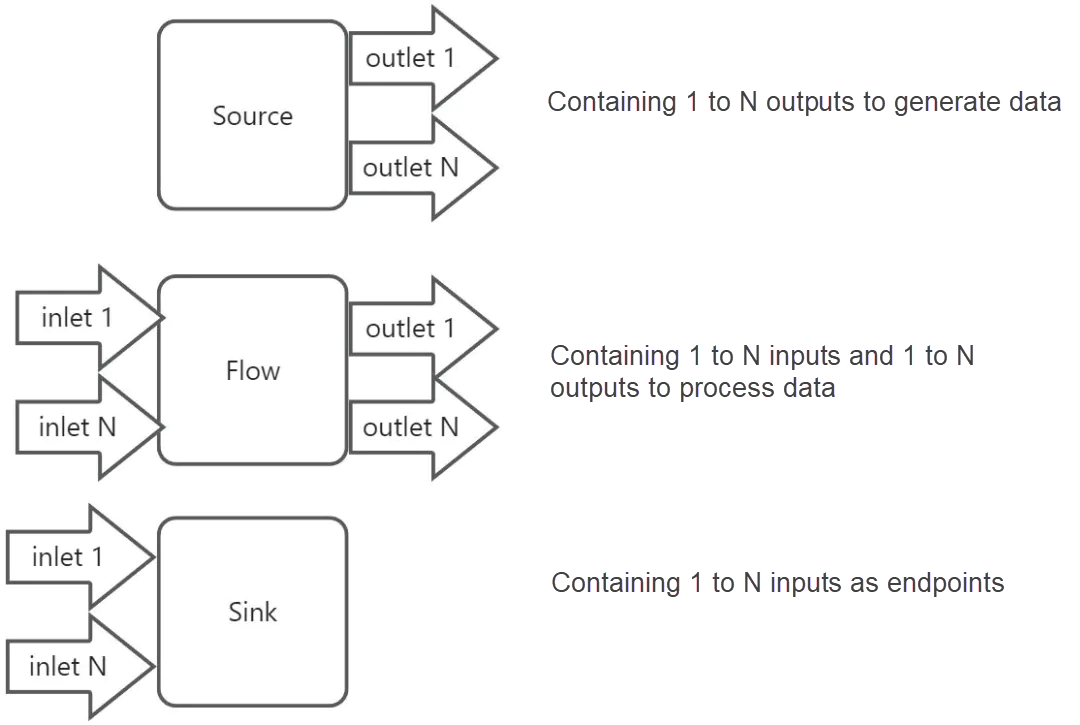

In programming, a stream is an abstraction that represents a series of continuous operations for processing data, much like an automobile production line. Streams are generally categorized as finite or infinite. For instance, the Yangtze River, with its endless flow, can be considered an infinite stream, while the hydropower stations along its course can be seen as intermediate processing nodes. A carefully designed library typically splits a stream into Source, Flow, and Sink, although this split is not necessarily visible at the API layer. The three parts are shown in the following figure:

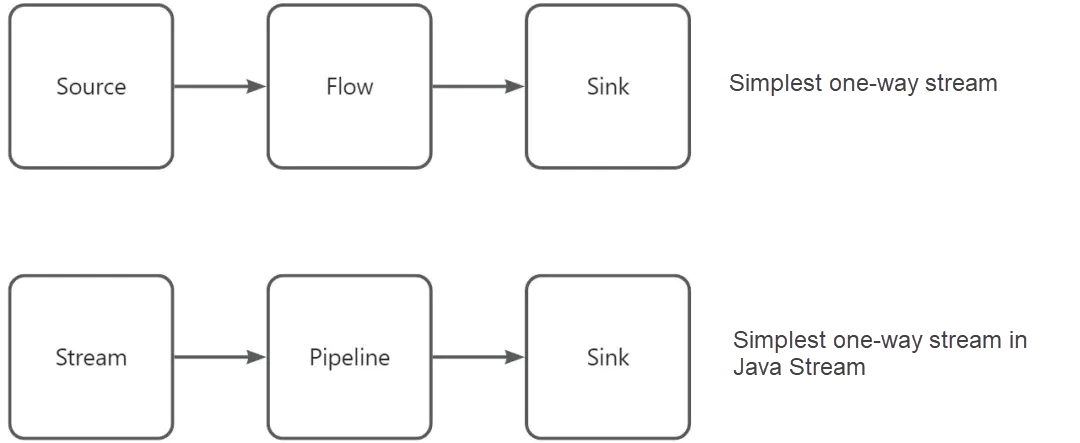

Combining Sources, Flows, and Sinks produces an arbitrarily complex processing graph, and that graph is the business logic of our code. In Java, the Stream in the standard library can be regarded as the simplest case, a one-way stream, as shown in the following figure:

We now have a clear picture of what a stream is. Unless we develop Java Stream operators ourselves, we rarely come into contact with the internal AbstractPipeline and Sink classes. And as of Java 22, Java Stream still does not offer enough extension points.

As Java developers, we may use a variety of stream-oriented toolkits in our daily work. A brief comparison follows:

| Library | Supported languages | Reactive | Complex stream graphs (Directed Acyclic Graph, DAG) | Cross-node support | Rich operators | Extensibility |
|---|---|---|---|---|---|---|
| Java Stream | Java / Kotlin / Scala | No | No | No | No | Average |
| Kotlin Flow | Kotlin | No | No | No | Average | Excellent |
| RxJava / Reactor / Mutiny | Java / Kotlin / Scala | Yes | No | No | Excellent | Excellent |
| Akka / Pekko Stream | Java / Kotlin / Scala | Yes | Yes | Yes | Excellent | Excellent |

As the comparison shows, although the Stream API of the Java standard library is available out of the box, many scenarios still force us to choose other libraries. To solve this problem, Viktor Klang proposed the Stream Gather API as a second extension point, alongside Stream Collector, to make up for the shortcomings of Java Stream.

At present, the main gap in the Java Stream API is its small operator set. Adding as many operators as other libraries offer would create a heavy maintenance burden. To solve most problems with only a limited addition, the new extension point needs to support the following features:

1. Support for multiple types of data conversion:
   - 1:1, such as map
   - 1:N (including 1:0..1), such as filter and flatMap
   - N:1, such as buffer
   - N:M, such as intersperse
2. Support for finite streams and infinite streams
3. Support for stateful and stateless operations
4. Support for early termination and full consumption
5. Support for single-thread sequential processing and multi-thread parallel processing
6. Support for sensing termination, such as implementing a fold operator

Java Stream currently provides only one extension point: Collector<T, A, R>, which contains the following parts:

| Method | Description |
|---|---|
| `Supplier<A> supplier()` | Used to create aggregation state A |
| `BiConsumer<A, T> accumulator()` | Used to apply each input element T to the aggregate state A |
| `BinaryOperator<A> combiner()` | Used to merge aggregate state A during parallel processing |
| `Function<A, R> finisher()` | Used to generate the final result R based on the aggregate state A at the end |

In this process, only the BiConsumer returned by accumulator() consumes elements and only the Function<A, R> returned by finisher() produces output, so a Collector can generate just one value (N:1), at the end of the stream. For example, Collectors.toList() only produces its list once the stream ends. A Collector therefore cannot be used to implement operators such as map, filter, buffer, scan, and zipWithIndex.
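
To make the N:1 limitation concrete, here is a minimal sketch of a hand-written Collector. The class name `CollectorSketch` and the `averaging` and `average` helpers are hypothetical names chosen for illustration; only `Collector.of` and the four functions it takes come from the JDK.

```java
import java.util.List;
import java.util.stream.Collector;

final class CollectorSketch {

    // Hypothetical collector: averages integers using the four Collector parts.
    static Collector<Integer, long[], Double> averaging() {
        return Collector.of(
            () -> new long[2],                                    // supplier: state A = {sum, count}
            (acc, x) -> { acc[0] += x; acc[1]++; },               // accumulator: apply one T to A
            (a, b) -> { a[0] += b[0]; a[1] += b[1]; return a; },  // combiner: only used in parallel
            acc -> acc[1] == 0 ? 0.0 : (double) acc[0] / acc[1]); // finisher: A -> exactly one R
    }

    static double average(List<Integer> xs) {
        return xs.stream().collect(averaging());
    }

    public static void main(String[] args) {
        System.out.println(average(List.of(1, 2, 3, 4))); // prints 2.5
    }
}
```

However many elements flow in, the accumulator can only update the state, and the single result appears after the last element. Nothing in this shape can emit an element per input (map) or stop early.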

For this reason, JEP 461 (Stream Gatherers) came into being. The Gather API and Stream Collector are somewhat similar. The specific comparison is as follows:

| Collector | Gatherer | Comparison |
|---|---|---|
| `Supplier<A> supplier()` | `Supplier<A> initializer()` | Used to create aggregation state A |
| `BiConsumer<A, T> accumulator()` | `Integrator<A, T, R> integrator()` | The integrator generates zero or more R elements by combining the current state A and the input element T, and supports early termination |
| `BinaryOperator<A> combiner()` | `BinaryOperator<A> combiner()` | Used to merge aggregate state A during parallel processing |
| `Function<A, R> finisher()` | `BiConsumer<A, Downstream<? super R>> finisher()` | Used to generate zero or more R elements based on the aggregate state A at the end |

The biggest change is that both the integrator and the finisher can generate R elements in a 1:N fashion. These 0..N elements are passed on through Downstream<R>. If they were instead returned as the method's return value, only the 0..1 case could be expressed. Downstream<R> defines two core methods, which resemble the Emitter of Reactor-core:

| Method | Description |
|---|---|
| `boolean push(R element)` | Pushes a value R downstream, and returns false if the downstream wants no more elements (early termination). |
| `boolean isRejecting()` | Returns true if the downstream no longer accepts values, so unnecessary calculations can be skipped. |

The integrator combines the current state A with the input element T and uses Downstream<R> to generate zero or more R elements. The core method is as follows:

| Method | Description |
|---|---|
| `boolean integrate(A state, T element, Downstream<? super R> downstream)` | For each input element T, generates 0..N R elements by combining the current state A and using the downstream. Returns false if early termination is required. |
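
The contract of `integrate` and `push` can be sketched with two small gatherers. This is an illustration rather than JDK code: `IntegratorSketch`, `repeatEach`, `takeUntilInclusive`, and `demo` are hypothetical names, and the example assumes JDK 24 or later (or JDK 22/23 with `--enable-preview`), where `java.util.stream.Gatherer` is available.

```java
import java.util.List;
import java.util.function.Predicate;
import java.util.stream.Gatherer;
import java.util.stream.Stream;

final class IntegratorSketch {

    // Hypothetical 1:N operator: emits every element `times` times.
    static <T> Gatherer<T, ?, T> repeatEach(int times) {
        return Gatherer.of((unused, element, downstream) -> {
            for (int i = 0; i < times; i++) {
                // push returns false once the downstream wants no more elements
                if (!downstream.push(element)) {
                    return false;
                }
            }
            return true;
        });
    }

    // Hypothetical early-terminating operator: emits elements up to and
    // including the first one matching `stop`, then asks for no more input.
    static <T> Gatherer<T, ?, T> takeUntilInclusive(Predicate<? super T> stop) {
        return Gatherer.ofSequential((unused, element, downstream) ->
            downstream.push(element) && !stop.test(element));
    }

    static List<String> demo() {
        Predicate<String> isB = "b"::equals;
        return Stream.of("a", "b", "c")
            .gather(IntegratorSketch.<String>repeatEach(2))
            .gather(takeUntilInclusive(isB))
            .toList();
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [a, a, b]
    }
}
```

Returning false from the integrator is what signals early termination. In `demo`, the second gatherer stops at the first "b", and `repeatEach` notices this through the false return value of `push`, so it does not emit the second copy.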

Taken together, the design of the Gather API is quite complex: it introduces many intermediate interface names, supports both single-threaded and multi-threaded evaluation, and carries some hidden constraints. Let's analyze it in detail.

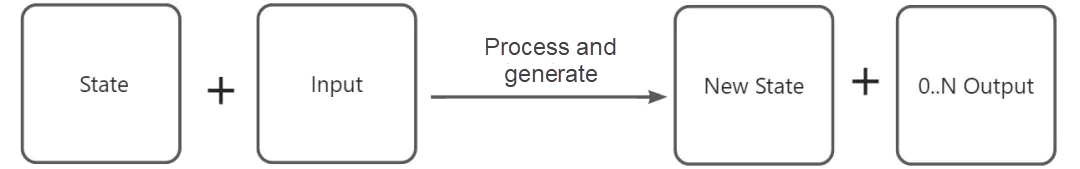

From the above, and from experience with other libraries, we know that any intermediate stage of a stream can be regarded as a function, as shown in the following figure:

The whole Gather API is a variation on this basis. Specifically:

The finisher mainly handles the termination signal, and the combiner is mainly used for parallel streams. This keeps the API manageable: when implementing a specific operator, we only need to pay attention to the parts it uses. For example, the stateless map and filter operators are implemented below.

- The map operator is stateless and generates elements 1:1.
- The filter operator is stateless and generates elements 1:0..1.

```java
import java.util.function.Function;
import java.util.function.Predicate;
import java.util.stream.Gatherer;

public static <T, R> Gatherer<T, ?, R> map(final Function<T, R> mapper) {
    return Gatherer.of((notused, element, downstream) -> downstream.push(mapper.apply(element)));
}

public static <T> Gatherer<T, ?, T> filter(final Predicate<T> predicate) {
    return Gatherer.of((notused, element, downstream) -> {
        if (predicate.test(element)) {
            return downstream.push(element);
        }
        return true; // element dropped, keep consuming
    });
}
```

Operators like gather also exist in other libraries, such as Reactor-core, Mutiny, and Pekko-Stream. Taking Pekko-Stream as an example, the operator most similar to gather is statefulMap, which is generally called mapAccumulate in other functional stream libraries. Its definition is as follows:

```scala
def statefulMap[S, T](
    create: function.Creator[S],
    f: function.Function2[S, Out, Pair[S, T]],
    onComplete: function.Function[S, Optional[T]]): javadsl.Flow[In, T, Mat]
```

Operator address:

https://nightlies.apache.org/pekko/docs/pekko/1.0.2/docs/stream/operators/Source-or-Flow/statefulMap.html

A simple comparison with Stream Gather:

| Pekko statefulMap | Gatherer | Comparison |
|---|---|---|
| `create` | `Supplier<A> initializer()` | They both use a function to create the initial state. |
| `f` | `Integrator<A, T, R> integrator()` | In statefulMap, the new state and the output value are returned together as the function's result. To make an output optional, you need to return an Optional type and filter it later, so the memory overhead is relatively high. |
| N/A | `BinaryOperator<A> combiner()` | Pekko natively supports parallel streams, so a single operator does not need to consider concurrency and state merging. |
| `onComplete` | `BiConsumer<A, Downstream<? super R>> finisher()` | To generate an optional final value, statefulMap returns an `Optional`, while a Gatherer decides whether to call `push`. |

The implementations of zipWithIndex with statefulMap and with gather are shown below, in that order:

```java
import java.util.Optional;
import org.apache.pekko.NotUsed;
import org.apache.pekko.japi.Pair;
import org.apache.pekko.stream.javadsl.Source;

public static <T> Source<Pair<T, Integer>, NotUsed> zipWithIndex(final Source<T, NotUsed> source) {
    return source.statefulMap(
        () -> 0,
        (state, element) -> Pair.create(state + 1, Pair.create(element, state)),
        notused -> Optional.empty()
    );
}
```

```java
import java.util.stream.Gatherer;
import java.util.stream.Stream;

public static <T> Stream<Pair<T, Integer>> zipWithIndex(final Stream<T> stream) {
    // Mutable holder for the running index
    class State {
        int index;
    }
    return stream.gather(Gatherer.ofSequential(
        State::new,
        (state, element, downstream) -> downstream.push(Pair.create(element, state.index++))
    ));
}
```

Note that in the Gather API design, the state A returned by the initializer is used for the whole lifecycle of the evaluation. A stateful operator therefore has to keep its state in a mutable class, like class State in the implementation above. This differs from the functional style, in which each step returns a new immutable state. One advantage is that no small Pair(State, Output) object is created per element. The disadvantage is that code ends up with many such local classes. Two other important constraints apply: do not pass State objects to multiple threads for concurrent modification, and the State passed to the integrator must be the State returned by the initializer. Support for parallelization is not expanded on here. In short, Java Stream achieves it by merging aggregate states with the combiner. The PR for statefulMapAsync follows a similar idea.
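
The lifecycle of the mutable state can be checked with a self-contained variant of zipWithIndex. This is a sketch under a few assumptions: the `Indexed` record is a hypothetical stand-in for Pekko's Pair, the class name `ZipWithIndexSketch` is invented, and the code needs JDK 24 or later (or JDK 22/23 with `--enable-preview`).

```java
import java.util.List;
import java.util.stream.Gatherer;
import java.util.stream.Stream;

final class ZipWithIndexSketch {

    // Hypothetical stand-in for the Pair type used above.
    record Indexed<T>(T value, long index) {}

    static <T> Gatherer<T, ?, Indexed<T>> zipWithIndex() {
        // Mutable holder; the initializer creates a fresh one per stream evaluation.
        class State {
            long index;
        }
        return Gatherer.ofSequential(
            State::new,
            (state, element, downstream) ->
                downstream.push(new Indexed<T>(element, state.index++)));
    }

    public static void main(String[] args) {
        Gatherer<String, ?, Indexed<String>> indexer = zipWithIndex();
        // The same Gatherer instance is reused; each run starts again at index 0.
        List<Indexed<String>> first = Stream.of("a", "b").gather(indexer).toList();
        List<Indexed<String>> second = Stream.of("x").gather(indexer).toList();
        System.out.println(first);  // prints [Indexed[value=a, index=0], Indexed[value=b, index=1]]
        System.out.println(second); // prints [Indexed[value=x, index=0]]
    }
}
```

Because the state is created by the initializer and not captured in the lambda, the Gatherer object itself stays stateless and can be shared safely between streams.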

Currently, Stream Gather provides several built-in Gatherer implementations in the Gatherers class, such as fold, scan, windowFixed, and mapConcurrent. Among these, fold is relatively simple and is the counterpart of Collectors.reducing. The most interesting is mapConcurrent, which performs asynchronous conversions with a specified maximum degree of parallelism, similar to mapAsync in Pekko-Stream. Note that this implementation uses virtual threads and a semaphore rather than CompletableFuture, which makes it simpler while achieving the same feature.

```java
public static <T, R> Gatherer<T,?,R> mapConcurrent(
        final int maxConcurrency, // Limit: the maximum degree of parallelism
        final Function<? super T, ? extends R> mapper) // Conversion executed in the virtual thread
```

The whole implementation is very simple:

```java
public static <T, R> Gatherer<T,?,R> mapConcurrent(
        final int maxConcurrency,
        final Function<? super T, ? extends R> mapper) {
    class State {
        final ArrayDeque<Future<R>> window =
                new ArrayDeque<>(Math.min(maxConcurrency, 16));
        // Use a semaphore instead of complex judgment logic.
        final Semaphore windowLock = new Semaphore(maxConcurrency);

        final boolean integrate(T element,
                                Downstream<? super R> downstream) {
            if (!downstream.isRejecting())
                createTaskFor(element);
            return flush(0, downstream);
        }

        final void createTaskFor(T element) {
            // Blocks the calling thread until a permit is available.
            // The caller here is not a virtual thread.
            windowLock.acquireUninterruptibly();
            var task = new FutureTask<R>(() -> {
                try {
                    return mapper.apply(element);
                } finally {
                    // Release the semaphore permit after the processing is completed.
                    windowLock.release();
                }
            });
            var wasAddedToWindow = window.add(task);
            // Use a virtual thread to run the task.
            Thread.startVirtualThread(task);
        }

        final boolean flush(long atLeastN,
                            Downstream<? super R> downstream) {
            // ... A lot of code is omitted here. Push the result value to the next processing node.
            downstream.push(current.get());
        }
    }
    return Gatherer.ofSequential(
            State::new,
            Integrator.<State, T, R>ofGreedy(State::integrate),
            (state, downstream) -> state.flush(Long.MAX_VALUE, downstream)
    );
}
```

We can see that virtual threads execute the mapper concurrently, and a semaphore enforces the maxConcurrency limit. The whole implementation is very simple. If you are interested, compare it with the flatMap implementation in Reactor-core and the mapAsync implementation in Pekko-Stream.
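
From the caller's side, using the operator could look like the sketch below. The `fetch` method is a hypothetical slow lookup standing in for a blocking I/O call, and `MapConcurrentSketch` and `fetchAll` are invented names. Only `Gatherers.mapConcurrent` is JDK API, and it requires JDK 24 or later (or JDK 22/23 with `--enable-preview`).

```java
import java.util.List;
import java.util.function.Function;
import java.util.stream.Gatherers;

final class MapConcurrentSketch {

    // Hypothetical blocking call, e.g. a remote lookup.
    static String fetch(int id) {
        try {
            Thread.sleep(20);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while fetching " + id, e);
        }
        return "item-" + id;
    }

    // Runs at most maxConcurrency fetches at a time, each on a virtual thread.
    static List<String> fetchAll(List<Integer> ids, int maxConcurrency) {
        Function<Integer, String> mapper = MapConcurrentSketch::fetch;
        return ids.stream()
                  .gather(Gatherers.mapConcurrent(maxConcurrency, mapper))
                  .toList();
    }

    public static void main(String[] args) {
        System.out.println(fetchAll(List.of(3, 1, 2), 2)); // prints [item-3, item-1, item-2]
    }
}
```

Results arrive downstream in encounter order even though the calls overlap, which is what the window of futures in the implementation is for.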

By comparison, the implementation of fold is simpler still:

```java
public static <T, R> Gatherer<T, ?, R> fold(
        Supplier<R> initial,
        BiFunction<? super R, ? super T, ? extends R> folder) {
    class State {
        R value = initial.get(); // Initial state to record the aggregation result value.
        State() {}
    }
    return Gatherer.ofSequential(
            State::new,
            Integrator.ofGreedy((state, element, downstream) -> {
                state.value = folder.apply(state.value, element);
                return true;
            }),
            // After the stream processing ends, the result value is pushed to the next processing node of the stream.
            (state, downstream) -> downstream.push(state.value)
    );
}
```

The state is again kept in a local State class, and the function returned by the finisher is called at the end to push the final value to the next processing node of the stream. Because the folding function does not necessarily satisfy the associative law, the implementation uses Gatherer.ofSequential to ensure serial execution. The Stream Gather API also supports composing multiple gatherers with andThen, which is equivalent to operator fusion in other libraries and improves performance.
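
A short sketch of how the built-in gatherers and their composition look from user code. The class and method names (`BuiltInGatherersSketch`, `total`, `runningSumsInPairs`) are hypothetical. `Gatherers.fold`, `Gatherers.scan`, `Gatherers.windowFixed`, and `Gatherer.andThen` are JDK APIs that require JDK 24 or later (or JDK 22/23 with `--enable-preview`).

```java
import java.util.List;
import java.util.Optional;
import java.util.stream.Gatherer;
import java.util.stream.Gatherers;

final class BuiltInGatherersSketch {

    // fold is N:1: a single value is pushed downstream when the stream ends.
    static Optional<Integer> total(List<Integer> xs) {
        Gatherer<Integer, ?, Integer> sum = Gatherers.fold(() -> 0, Integer::sum);
        return xs.stream().gather(sum).findFirst();
    }

    // scan emits every intermediate sum; windowFixed groups them in pairs.
    // andThen composes both into one Gatherer.
    static List<List<Integer>> runningSumsInPairs(List<Integer> xs) {
        Gatherer<Integer, ?, Integer> runningSum = Gatherers.scan(() -> 0, Integer::sum);
        Gatherer<Integer, ?, List<Integer>> pairs = Gatherers.windowFixed(2);
        Gatherer<Integer, ?, List<Integer>> composed = runningSum.andThen(pairs);
        return xs.stream().gather(composed).toList();
    }

    public static void main(String[] args) {
        List<Integer> xs = List.of(1, 2, 3, 4, 5);
        System.out.println(total(xs));              // prints Optional[15]
        System.out.println(runningSumsInPairs(xs)); // prints [[1, 3], [6, 10], [15]]
    }
}
```

The last window holds a single element because windowFixed flushes its partially filled buffer in the finisher, the same termination hook that fold uses to emit its result.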

With the introduction of the Stream Gather API and virtual threads, the extensibility gaps of the Stream API are filled and the implementation complexity is reduced. In the future, there will be an open-source library that extends it with various Gather operators, such as zipWithIndex, zipWithNext, mapConcat, and throttle. Unfortunately, since Java does not currently support Kotlin-like extension methods, the final form of the Stream API DSL cannot be Stream.zipWithIndex but only Stream.gather(MyGathers.zipWithIndex). I hope Java adds extension methods before the next LTS release so that we can design DSL extensions more gracefully.

In this article, we have examined what a stream is, compared several common stream libraries available to Java, and introduced the Stream Gather API in Java 22 in detail. We have also briefly shown how virtual threads simplify the implementation of the mapConcurrent operator of Stream Gather. I wanted to share these new features with you so that we can make progress together, and I hope you will upgrade to a newer JDK to better empower your business.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
