By Jiangsheng

In daily development, scenarios such as object transformation, list deduplication, and batch service calls can lead to lengthy, error-prone, and inefficient code when written with for loops and if-else statements. By reviewing project code and with guidance from senior developers, I have learned several new ways to use Streams. This article summarizes what I have learned about them.

In Java, when working with elements in arrays or Collection classes, we typically process them one by one using loops or handle them using Stream.

Suppose we have a requirement: from a given sentence, return the words longer than five characters, sorted by length in descending order, with no more than three words in the result.

Before learning about Streams (for example, at school), we might write a function like this:

```java
public List<String> sortGetTop3LongWords(@NotNull String sentence) {
    // Split the sentence to obtain the individual words
    String[] words = sentence.split(" ");
    List<String> wordList = new ArrayList<>();
    // Loop over the words and keep those that meet the length requirement
    for (String word : words) {
        if (word.length() > 5) {
            wordList.add(word);
        }
    }
    // Sort the qualifying words by length in descending order
    wordList.sort((o1, o2) -> o2.length() - o1.length());
    // If more than three words remain, keep only the first three
    if (wordList.size() > 3) {
        wordList = wordList.subList(0, 3);
    }
    return wordList;
}
```

However, with Streams:

```java
public List<String> sortGetTop3LongWordsByStream(@NotNull String sentence) {
    return Arrays.stream(sentence.split(" "))
            .filter(word -> word.length() > 5)
            .sorted((o1, o2) -> o2.length() - o1.length())
            .limit(3)
            .collect(Collectors.toList());
}
```

The Stream version is nearly half as long, and each step in the pipeline maps directly to one part of the requirement. Understanding and using Streams effectively can therefore make code much clearer and more readable.
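One optional refinement, offered as a suggestion and not part of the original code: the subtraction comparator is safe here because string lengths cannot overflow, but `Comparator.comparingInt(...).reversed()` states the intent more directly. The class name `TopLongWords` and the sample sentence are made up for illustration.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

public class TopLongWords {

    // Same pipeline as sortGetTop3LongWordsByStream, but the comparator is built
    // with comparingInt(...).reversed() instead of a hand-written subtraction
    public static List<String> top3LongWords(String sentence) {
        return Arrays.stream(sentence.split(" "))
                .filter(word -> word.length() > 5)                           // words longer than five characters
                .sorted(Comparator.comparingInt(String::length).reversed()) // longest first
                .limit(3)                                                    // at most three words
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(top3LongWords("streams make collection processing remarkably readable and concise"));
    }
}
```

`sorted()` is stable for ordered streams, so words of equal length keep their original order. The sample sentence therefore yields `[collection, processing, remarkably]`.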

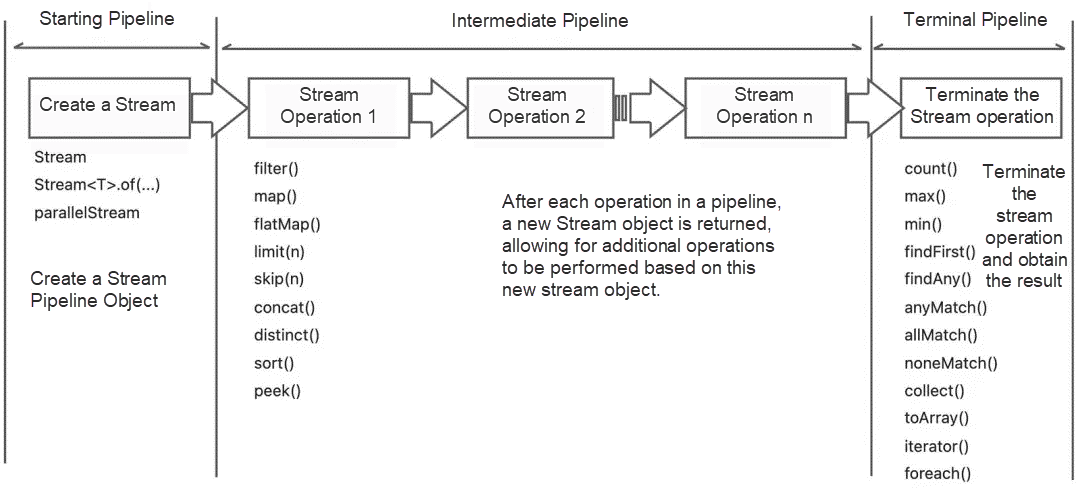

In general, Stream operations fall into three categories:

• Creating a Stream

• Intermediate Stream processing

• Terminating a Stream

Each stage of a Stream pipeline offers several methods. The main API methods for each stage are listed below.

The starting stage of a pipeline is responsible for creating a new Stream, either directly from a series of elements or from an existing array or collection-type object such as a List, Set, or Map (a Map is streamed through its entrySet(), keySet(), or values() view).

| API | Function Description |
|---|---|
| stream() | Create a new sequential Stream object |
| parallelStream() | Create a Stream object that can be executed in parallel |
| Stream.of() | Create a new sequential Stream object from a given series of elements |
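The sketch below shows the starting stage, including `Arrays.stream()` and the usual way to stream a `Map` through one of its views. Neither of these appears in the table. The class and sample data are illustrative only.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class CreateStreams {

    // Builds streams in several common ways and summarizes what each produced
    public static String describe() {
        List<String> list = Arrays.asList("a", "b", "c");
        String[] array = {"x", "y"};
        Map<String, Integer> map = new HashMap<>();
        map.put("k", 1);

        // stream(): sequential stream over a Collection
        long fromList = list.stream().count();
        // Arrays.stream(): sequential stream over an array
        long fromArray = Arrays.stream(array).count();
        // Stream.of(): stream over explicitly listed elements
        long fromValues = Stream.of(1, 2, 3, 4).count();
        // A Map is not a Collection, so stream one of its views instead
        String fromMap = map.entrySet().stream()
                .map(e -> e.getKey() + "=" + e.getValue())
                .collect(Collectors.joining(","));
        // parallelStream(): a stream that may be processed in parallel
        boolean parallel = list.parallelStream().isParallel();

        return fromList + " " + fromArray + " " + fromValues + " " + fromMap + " " + parallel;
    }

    public static void main(String[] args) {
        System.out.println(describe()); // 3 2 4 k=1 true
    }
}
```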

The intermediate stage is responsible for processing the Stream and returning a new Stream object. Intermediate operations can be chained one after another.

| API | Function Description |
|---|---|
| filter() | Keep only the elements that meet a condition, and return a new stream. |
| map() | Convert each element into another object (one-to-one), and return a new stream. |
| flatMap() | Convert each element into zero or more new elements, possibly of a different type (one-to-many), and return a new stream. |
| limit() | Keep only the specified number of elements from the beginning of the stream, and return a new stream. |
| skip() | Skip the specified number of elements from the beginning of the stream, and return a new stream. |
| concat() | Merge two streams into one new stream (a static method, called as Stream.concat(a, b)), and return the new stream. |
| distinct() | Remove duplicate elements (as determined by equals()), and return a new stream. |
| sorted() | Sort the elements in natural order or by a given Comparator, and return a new stream. |
| peek() | Perform an action on each element as it flows through, and return a stream with the same elements. |
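Several of these intermediate operations can be chained in one pipeline. The sketch below uses made-up numbers, and the comment on each line shows the elements remaining after that step.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class IntermediateOps {

    // Chains several intermediate operations; nothing runs until collect() is called
    public static List<Integer> pipeline() {
        Stream<Integer> first = Stream.of(5, 3, 5, 1);
        Stream<Integer> second = Stream.of(4, 3, 2);
        return Stream.concat(first, second)  // 5, 3, 5, 1, 4, 3, 2
                .distinct()                   // 5, 3, 1, 4, 2 (first occurrences kept)
                .sorted()                     // 1, 2, 3, 4, 5 (natural order)
                .skip(1)                      // 2, 3, 4, 5
                .limit(3)                     // 2, 3, 4
                .map(n -> n * 10)             // 20, 30, 40
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(pipeline()); // [20, 30, 40]
    }
}
```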

As the name suggests, once a terminal operation is performed, the Stream ends. A terminal operation either performs some processing on the elements (such as printing them) or returns a result computed by the pipeline.

| API | Function Description |
|---|---|
| count() | Return the number of elements remaining after stream processing. |
| max() | Return the maximum element according to a given Comparator, wrapped in an Optional. |
| min() | Return the minimum element according to a given Comparator, wrapped in an Optional. |
| findFirst() | Return the first element of the stream, wrapped in an Optional, and stop processing. After filter(), this finds the first element that meets the condition. |
| findAny() | Return any element of the stream, wrapped in an Optional, and stop processing. For sequential streams this usually returns the same element as findFirst, but it is more efficient for parallel streams, because processing can stop as soon as any shard finds a matching element. |
| anyMatch() | Return a boolean indicating whether any element meets the condition, similar to contains(). |
| allMatch() | Return a boolean indicating whether all elements meet the condition. |
| noneMatch() | Return a boolean indicating whether no element meets the condition. |
| collect() | Collect the stream into a result such as a List, Set, Map, or String, as specified by a Collector (typically one from Collectors). |
| toArray() | Convert the stream to an array. |
| iterator() | Convert the stream to an Iterator object. |
| forEach() | Execute the specified logic on each element of the stream without returning a value. |
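The sketch below shows some of the terminal operations that the later examples do not use. The method names and sample numbers are hypothetical. Note that `max()` and `min()` need a Comparator and return an `Optional`, which is empty when the stream has no elements.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.Iterator;
import java.util.List;
import java.util.Optional;

public class TerminalOps {

    // max()/min() take a Comparator and return an Optional
    public static Optional<Integer> largest(List<Integer> nums) {
        return nums.stream().max(Comparator.naturalOrder());
    }

    public static Optional<Integer> smallest(List<Integer> nums) {
        return nums.stream().min(Comparator.naturalOrder());
    }

    // allMatch()/noneMatch() return a boolean and may stop early
    public static boolean allPositive(List<Integer> nums) {
        return nums.stream().allMatch(n -> n > 0);
    }

    public static boolean noneAbove(List<Integer> nums, int limit) {
        return nums.stream().noneMatch(n -> n > limit);
    }

    // toArray() with a generator produces a typed array instead of Object[]
    public static Integer[] asArray(List<Integer> nums) {
        return nums.stream().toArray(Integer[]::new);
    }

    public static void main(String[] args) {
        List<Integer> nums = Arrays.asList(3, 8, 1, 6);
        System.out.println(largest(nums));        // Optional[8]
        System.out.println(smallest(nums));       // Optional[1]
        System.out.println(allPositive(nums));    // true
        System.out.println(noneAbove(nums, 10));  // true
        System.out.println(asArray(nums).length); // 4
        // iterator() hands the elements to ordinary loop code
        Iterator<Integer> it = nums.stream().iterator();
        while (it.hasNext()) {
            System.out.print(it.next() + " ");
        }
        System.out.println();
        // forEach() runs an action per element and returns nothing
        nums.stream().forEach(n -> System.out.print(n * 2 + " "));
        System.out.println();
    }
}
```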

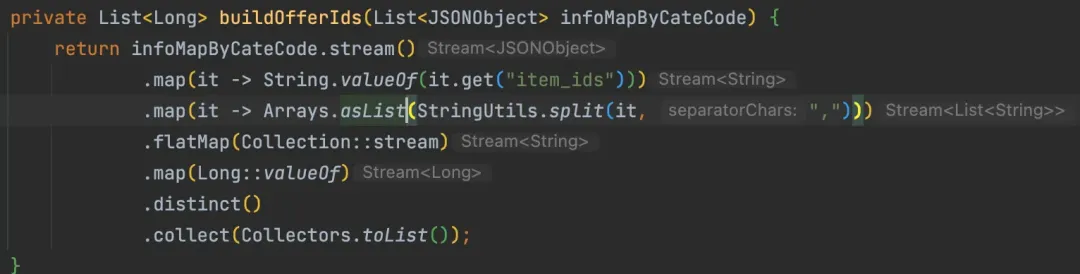

map and flatMap appear frequently in projects, for example in the following code:

What are the similarities and differences between the two?

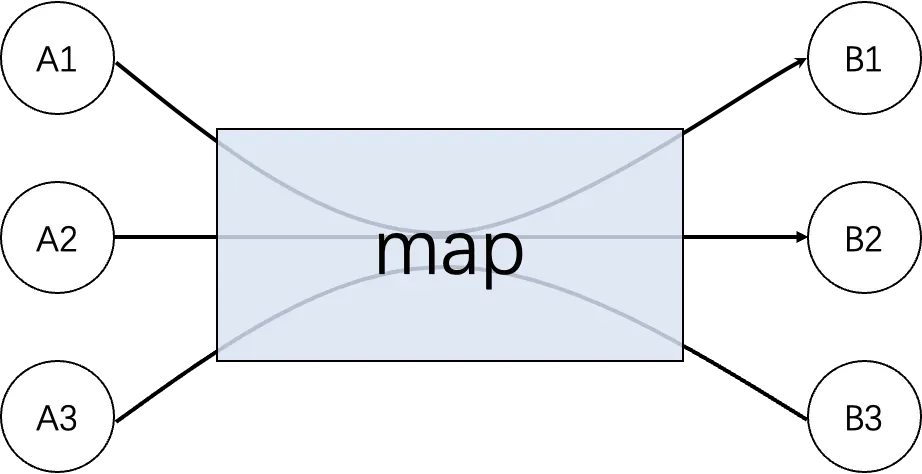

Both map and flatMap are used to convert existing elements into other elements. The difference is:

• map must be one-to-one, meaning each element is converted into exactly one new element.

• flatMap can be one-to-many, meaning each element can be converted into zero, one, or multiple new elements.
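flatMap's "one-to-many" also covers zero: if the function returns an empty stream for an element, that element is dropped. The following is a minimal sketch with made-up comma-separated input lines. The class and method names are illustrative.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class FlatMapZeroOrMore {

    // Each comma-separated line becomes zero or more tokens; a blank line contributes nothing
    public static List<String> tokens(List<String> lines) {
        return lines.stream()
                .flatMap(line -> line.trim().isEmpty()
                        ? Stream.<String>empty()             // zero new elements
                        : Arrays.stream(line.split(",")))   // one or more new elements
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(tokens(Arrays.asList("a,b", "", "c"))); // [a, b, c]
    }
}
```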

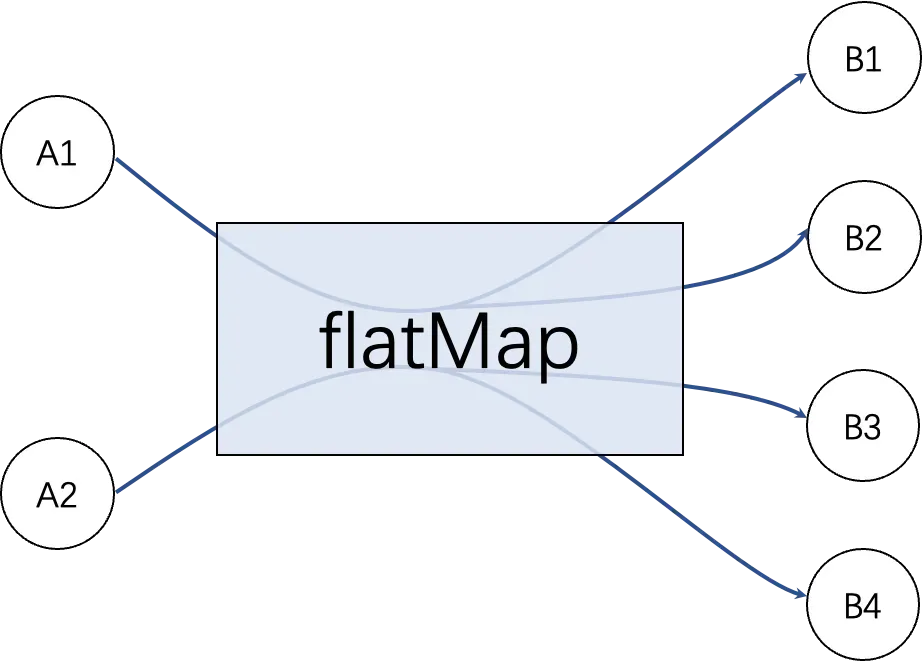

The following two figures illustrate the difference between the two:

map:

flatMap:

Example of map: we have a list of string IDs that needs to be converted into a list of model objects.

```java
/**
 * Purpose of map: one-to-one conversion
 */
List<String> ids = Arrays.asList("205", "105", "308", "469", "627", "193", "111");
// Use stream operations
List<NormalOfferModel> results = ids.stream()
        .map(id -> {
            NormalOfferModel model = new NormalOfferModel();
            model.setCate1LevelId(id);
            return model;
        })
        .collect(Collectors.toList());
System.out.println(results);
```

After execution, each element has been converted into a corresponding new element, and the total number of elements is the same before and after the conversion:

```
[
NormalOfferModel{cate1LevelId='205', imgUrl='null', linkUrl='null', ohterurl='null'},
NormalOfferModel{cate1LevelId='105', imgUrl='null', linkUrl='null', ohterurl='null'},
NormalOfferModel{cate1LevelId='308', imgUrl='null', linkUrl='null', ohterurl='null'},
NormalOfferModel{cate1LevelId='469', imgUrl='null', linkUrl='null', ohterurl='null'},
NormalOfferModel{cate1LevelId='627', imgUrl='null', linkUrl='null', ohterurl='null'},
NormalOfferModel{cate1LevelId='193', imgUrl='null', linkUrl='null', ohterurl='null'},
NormalOfferModel{cate1LevelId='111', imgUrl='null', linkUrl='null', ohterurl='null'}
]
```

Example of flatMap: given a list of sentences, we need to extract every word from them to get a single list of all words:

```java
List<String> sentences = Arrays.asList("hello world","Hello Price Info The First Version");
// Use stream operations
List<String> results2 = sentences.stream()
        .flatMap(sentence -> Arrays.stream(sentence.split(" ")))
        .collect(Collectors.toList());
System.out.println(results2);
```

Output:

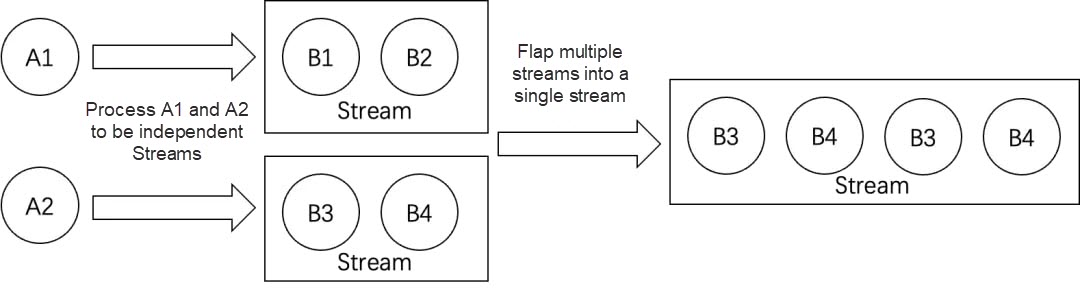

[hello, world, Hello, Price, Info, The, First, Version]It is worth noting that during the flatMap operation, each element is processed and a new Stream is returned first, and then multiple Streams are expanded and merged into a complete new Stream, as shown below:
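The difference is easiest to see in the result types. With the same sentences, `map` produces one `String[]` per sentence, while `flatMap` merges the per-sentence streams into one stream of words. The following is a small sketch. The class and method names are illustrative.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

public class MapVsFlatMap {

    // map: each sentence becomes ONE String[], so the result is a list of arrays
    public static List<String[]> mapped(List<String> sentences) {
        return sentences.stream()
                .map(sentence -> sentence.split(" "))
                .collect(Collectors.toList());
    }

    // flatMap: each sentence becomes a Stream<String>, and those streams are merged into one
    public static List<String> flattened(List<String> sentences) {
        return sentences.stream()
                .flatMap(sentence -> Arrays.stream(sentence.split(" ")))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> sentences = Arrays.asList("hello world", "Hello Price Info The First Version");
        System.out.println(mapped(sentences).size());    // 2 (one array per sentence)
        System.out.println(flattened(sentences).size()); // 8 (one entry per word)
    }
}
```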

Both peek and foreach can be used to traverse elements and then process them one by one.

However, as mentioned earlier, peek is an intermediate operation while forEach is a terminal operation. peek can only be a step in the middle of a pipeline and produces no result on its own. Because Streams are evaluated lazily, its action runs only when a terminal operation follows it. forEach, being a terminal operation with no return value, executes its action directly.
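Laziness also means that elements are pulled through the pipeline one at a time. The sketch below records what `peek` sees, first with no terminal operation and then with a short-circuiting `findFirst()`. The class and method names are illustrative.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class PeekLaziness {

    // Without a terminal operation the pipeline never runs, so peek records nothing
    public static List<String> peekedWithoutTerminal(List<String> sentences) {
        List<String> seen = new ArrayList<>();
        sentences.stream().peek(seen::add);
        return seen;
    }

    // findFirst stops after the first match, so peek only sees the elements actually pulled
    public static List<String> peekedBeforeFindFirst(List<String> sentences) {
        List<String> seen = new ArrayList<>();
        sentences.stream()
                .peek(seen::add)
                .filter(s -> s.startsWith("hello"))
                .findFirst();
        return seen;
    }

    public static void main(String[] args) {
        List<String> sentences = Arrays.asList("hello world", "Jia Gou Wu Dao");
        System.out.println(peekedWithoutTerminal(sentences)); // []
        System.out.println(peekedBeforeFindFirst(sentences)); // [hello world]
    }
}
```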

```java
public void testPeekAndforeach() {
    List<String> sentences = Arrays.asList("hello world","Jia Gou Wu Dao");
    // Demo point 1: Only peek operation, which will not be executed in the end
    System.out.println("----before peek----");
    sentences.stream().peek(sentence -> System.out.println(sentence));
    System.out.println("----after peek----");
    // Demo point 2: Only foreach operation, which will be executed in the end
    System.out.println("----before foreach----");
    sentences.stream().forEach(sentence -> System.out.println(sentence));
    System.out.println("----after foreach----");
    // Demo point 3: Add a terminal operation after peek, and peek will be executed
    System.out.println("----before peek and count----");
    sentences.stream().peek(sentence -> System.out.println(sentence)).count();
    System.out.println("----after peek and count----");
}
```

The output shows that peek on its own is never executed, whereas peek followed by a terminal operation is. forEach, by contrast, executes immediately:

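```
----before peek----
----after peek----
----before foreach----
hello world
Jia Gou Wu Dao
----after foreach----
----before peek and count----
hello world
Jia Gou Wu Dao
----after peek and count----
```

The last part of this output depends on the JDK version. Since JDK 9, count() may skip executing the pipeline when it can compute the count directly from the source (as it can for a List). In that case peek prints nothing. A terminal operation that must visit every element, such as collect() or forEach(), avoids this.

filter, distinct, map, sorted, and limit are the commonly used intermediate methods, and their meanings are explained in the table above. You can use one or more of them as needed, or apply the same method several times in one pipeline: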

```java
public void testGetTargetUsers() {
    List<String> ids = Arrays.asList("205","10","308","49","627","193","111", "193");
    // Use stream operations
    List<OfferModel> results = ids.stream()
            .filter(s -> s.length() > 2)
            .distinct()
            .map(Integer::valueOf)
            .sorted(Comparator.comparingInt(o -> o))
            .limit(3)
            .map(id -> new OfferModel(id))
            .collect(Collectors.toList());
    System.out.println(results);
}
```

The processing logic of the above code is as follows:

1. Use filter to remove elements that do not meet the condition (IDs shorter than three characters).

2. Use distinct to eliminate duplicate elements.

3. Use map to convert the strings to integers.

4. Use sorted to sort the numbers in ascending order.

5. Use limit to keep the first three elements.

6. Use map again to convert each ID to an OfferModel object.

7. Use collect to terminate the pipeline and collect the processed data into a list.

Output:

```
[OfferModel{id=111}, OfferModel{id=193}, OfferModel{id=205}]
```

As described earlier, count, max, min, findAny, findFirst, anyMatch, allMatch, and noneMatch are terminal operations with simple results. "Simple" here means that they return a number, a Boolean value, or an Optional.

```java
public void testSimpleStopOptions() {
    List<String> ids = Arrays.asList("205", "10", "308", "49", "627", "193", "111", "193");
    // Count the remaining elements after stream operations
    System.out.println(ids.stream().filter(s -> s.length() > 2).count());
    // Check if there is an element with the value of 205
    System.out.println(ids.stream().filter(s -> s.length() > 2).anyMatch("205"::equals));
    // The findFirst operation
    ids.stream().filter(s -> s.length() > 2)
            .findFirst()
            .ifPresent(s -> System.out.println("findFirst:" + s));
}
```

Output:

```
6
true
findFirst:205
```

Once a Stream has been terminated, it cannot be used for any further operations. Attempting to do so throws an exception, as the following example shows:

```java
public void testHandleStreamAfterClosed() {
    List<String> ids = Arrays.asList("205", "10", "308", "49", "627", "193", "111", "193");
    Stream<String> stream = ids.stream().filter(s -> s.length() > 2);
    // Count the remaining elements after stream operations
    System.out.println(stream.count());
    System.out.println("----- An error will be reported below -----");
    // Check if there is an element with the value of 205
    try {
        System.out.println(stream.anyMatch("205"::equals));
    } catch (Exception e) {
        e.printStackTrace();
        System.out.println(e.toString());
    }
    System.out.println("----- An error will be reported above -----");
}
```

Output:

```
----- An error will be reported below -----
java.lang.IllegalStateException: stream has already been operated upon or closed
----- An error will be reported above -----
java.lang.IllegalStateException: stream has already been operated upon or closed
at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:229)
at java.util.stream.ReferencePipeline.anyMatch(ReferencePipeline.java:516)
at Solution_0908.main(Solution_0908.java:55)
```

Because the stream was already terminated by count(), calling anyMatch() on the same stream throws an IllegalStateException with the message "stream has already been operated upon or closed". Keep this in mind when working with Streams: each Stream can be consumed by only one terminal operation.
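Several terminal operations sometimes need the same filtered data. One common workaround, sketched here and not taken from the original article, is to wrap the pipeline in a `Supplier` so that each call builds a new stream from the same source collection. Collecting the data into a List once and streaming that list repeatedly also works. The class and method names are illustrative.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Supplier;
import java.util.stream.Stream;

public class ReuseWithSupplier {

    // Returns a factory: every get() call builds a brand-new pipeline over the same source
    public static Supplier<Stream<String>> longIds(List<String> ids) {
        return () -> ids.stream().filter(s -> s.length() > 2);
    }

    public static void main(String[] args) {
        List<String> ids = Arrays.asList("205", "10", "308", "49", "627", "193", "111", "193");
        Supplier<Stream<String>> streams = longIds(ids);
        // Each terminal operation consumes its own fresh stream, so no IllegalStateException
        System.out.println(streams.get().count());                 // 6
        System.out.println(streams.get().anyMatch("205"::equals)); // true
    }
}
```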

Since Streams are mainly used to process collection data, a more common need than the simple-result terminal methods above is to obtain a collection-type result such as a List, Set, or HashMap.

This is where the collect method comes in. It can generate several types of result data, including collections, concatenated strings, and numeric statistics:

Generating collection objects is perhaps the most common use case for collect:

```java
List<NormalOfferModel> normalOfferModelList = Arrays.asList(new NormalOfferModel("11"),
        new NormalOfferModel("22"),
        new NormalOfferModel("33"));
// Collect to a List
List<NormalOfferModel> collectList = normalOfferModelList
        .stream()
        .filter(offer -> offer.getCate1LevelId().equals("11"))
        .collect(Collectors.toList());
System.out.println("collectList:" + collectList);
// Collect to a Set
Set<NormalOfferModel> collectSet = normalOfferModelList
        .stream()
        .filter(offer -> offer.getCate1LevelId().equals("22"))
        .collect(Collectors.toSet());
System.out.println("collectSet:" + collectSet);
// Collect to a Map, with the ID as the key and the NormalOfferModel object as the value;
// the merge function keeps the later value if two elements share the same key
Map<String, NormalOfferModel> collectMap = normalOfferModelList
        .stream()
        .filter(offer -> offer.getCate1LevelId().equals("33"))
        .collect(Collectors.toMap(NormalOfferModel::getCate1LevelId, Function.identity(), (v1, v2) -> v2));
System.out.println("collectMap:" + collectMap);
```

Output:

```
collectList:[NormalOfferModel{cate1LevelId='11', imgUrl='null', linkUrl='null', ohterurl='null'}]
collectSet:[NormalOfferModel{cate1LevelId='22', imgUrl='null', linkUrl='null', ohterurl='null'}]
collectMap:{33=NormalOfferModel{cate1LevelId='33', imgUrl='null', linkUrl='null', ohterurl='null'}}
```

Concatenating the values of a List or an array into a single comma-separated string is a familiar task. With a for loop and a StringBuilder, you also have to deal with the trailing comma, which is cumbersome:

```java
public void testForJoinStrings() {
    List<String> ids = Arrays.asList("205", "10", "308", "49", "627", "193", "111", "193");
    StringBuilder builder = new StringBuilder();
    for (String id : ids) {
        builder.append(id).append(',');
    }
    // Remove the extra comma at the end (guarding against an empty list)
    if (builder.length() > 0) {
        builder.deleteCharAt(builder.length() - 1);
    }
    System.out.println("Concatenated:" + builder.toString());
}
```

With Streams, collect makes this task much simpler:

```java
public void testCollectJoinStrings() {
    List<String> ids = Arrays.asList("205", "10", "308", "49", "627", "193", "111", "193");
    String joinResult = ids.stream().collect(Collectors.joining(","));
    System.out.println("Concatenated:" + joinResult);
}
```

Both approaches produce the same result, but the Stream version is more concise:

```
Concatenated:205,10,308,49,627,193,111,193
```

Another scenario, perhaps less common in practice, is using collect to compute summary statistics over numeric data:

```java
public void testNumberCalculate() {
    List<Integer> ids = Arrays.asList(10, 20, 30, 40, 50);
    // Calculate the average
    Double average = ids.stream().collect(Collectors.averagingInt(value -> value));
    System.out.println("Average:" + average);
    // Summary statistics
    IntSummaryStatistics summary = ids.stream().collect(Collectors.summarizingInt(value -> value));
    System.out.println("Summary Statistics:" + summary);
}
```

In the example above, collect performs calculations on the elements of the list. The results are as follows:

Average: 30.0

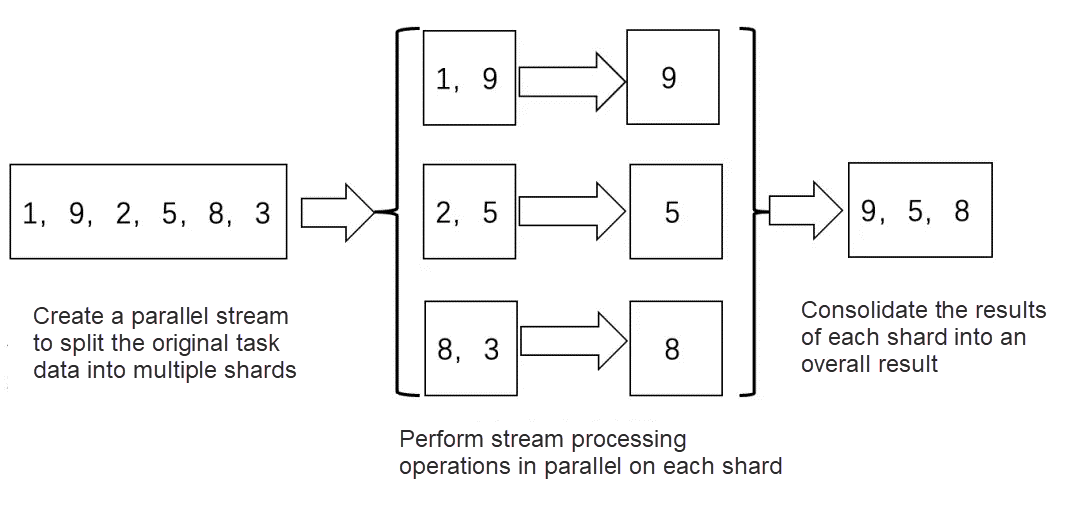

Summary Statistics: IntSummaryStatistics{count=5, sum=150, min=10, average=30.000000, max=50}A parallel stream can effectively leverage multi-CPU hardware to speed up the execution of logic. The parallel stream works by dividing the entire stream into multiple shards, processing each shard in parallel, and then aggregating the results of each shard into a single overall stream.
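The following is a minimal sketch of the same computation done sequentially and in parallel. Summing a range is a good fit for parallel execution because the range splits evenly and addition is associative, so the per-shard sums can be combined in any grouping. Whether the parallel version is actually faster depends on the hardware and the data size. This sketch only shows that the results match. The class name is illustrative.

```java
import java.util.stream.LongStream;

public class ParallelSum {

    // Sequential version: one thread walks the whole range
    public static long sequentialSum(long n) {
        return LongStream.rangeClosed(1, n).sum();
    }

    // Parallel version: the range is split into shards that are summed on
    // different threads, and the partial sums are then combined
    public static long parallelSum(long n) {
        return LongStream.rangeClosed(1, n).parallel().sum();
    }

    public static void main(String[] args) {
        long n = 1_000_000L;
        System.out.println(sequentialSum(n)); // 500000500000
        System.out.println(parallelSum(n));   // 500000500000
    }
}
```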

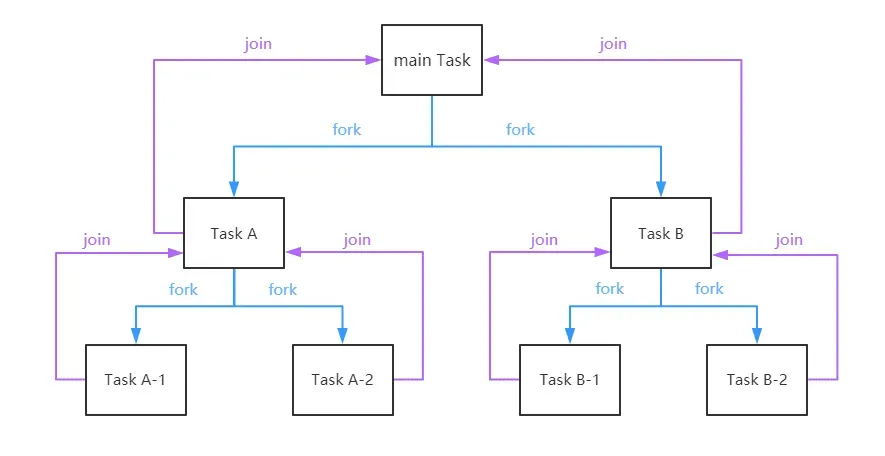

The source code of parallelStream shows that parallel streams split the work into subtasks and delegate them to the global common ForkJoinPool (ForkJoinPool.commonPool()) introduced in JDK 8. By default, its parallelism is typically one less than the number of available processors. Each worker thread in a ForkJoinPool has its own task queue, and subtasks forked from a large task are pushed onto these queues. When a thread runs out of work, it steals tasks from the queues of other threads. As a result, a thread that finishes quickly ends up executing more tasks, and a thread becomes idle only when no tasks remain. This is known as work stealing.
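To make the fork/join model concrete, here is a hand-written `RecursiveTask` that sums an array by splitting it in half until the pieces are small. It illustrates the same divide-and-conquer idea on the common pool. It is not the code that parallel streams use internally. The class name and the threshold of 1,000 elements are arbitrary choices.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

public class ForkJoinSum extends RecursiveTask<Long> {

    private static final int THRESHOLD = 1_000; // arbitrary cutoff below which we just loop

    private final long[] numbers;
    private final int from; // inclusive
    private final int to;   // exclusive

    ForkJoinSum(long[] numbers, int from, int to) {
        this.numbers = numbers;
        this.from = from;
        this.to = to;
    }

    @Override
    protected Long compute() {
        if (to - from <= THRESHOLD) {
            long sum = 0;
            for (int i = from; i < to; i++) {
                sum += numbers[i];
            }
            return sum;
        }
        int mid = (from + to) >>> 1;
        ForkJoinSum left = new ForkJoinSum(numbers, from, mid);
        ForkJoinSum right = new ForkJoinSum(numbers, mid, to);
        left.fork();                     // queue the left half; an idle worker may steal it
        long rightSum = right.compute(); // process the right half in the current thread
        long leftSum = left.join();      // wait for the left half (or run it here if not stolen)
        return leftSum + rightSum;
    }

    public static long sum(long[] numbers) {
        return ForkJoinPool.commonPool().invoke(new ForkJoinSum(numbers, 0, numbers.length));
    }

    public static void main(String[] args) {
        long[] numbers = new long[100_000];
        for (int i = 0; i < numbers.length; i++) {
            numbers[i] = i + 1;
        }
        System.out.println(sum(numbers)); // 5000050000
        System.out.println("Common pool parallelism: " + ForkJoinPool.commonPool().getParallelism());
    }
}
```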

The following figure better illustrates the "divide and conquer" strategy:

1. In parallelStream(), the operation performed in forEach() must be thread-safe.

Many developers have grown so used to streams that they replace every for loop with forEach(). This is not always appropriate: if the loop body is simple, there is no need for a stream, because the machinery behind streams adds overhead and may offer no performance advantage.
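The following sketch illustrates the thread-safety point in item 1. The method names are hypothetical. Adding to a plain `ArrayList` from `parallelStream().forEach()` is a data race that can lose elements or throw. Either let the stream assemble the result with `collect()`, or, if `forEach()` is really needed, write into a thread-safe container.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

public class ParallelForEachSafety {

    // Preferred: collect() merges per-thread results safely and keeps encounter order
    public static List<Integer> squaresCollected(int n) {
        return IntStream.rangeClosed(1, n).parallel()
                .map(i -> i * i)
                .boxed()
                .collect(Collectors.toList());
    }

    // If forEach() is required, the shared container must be thread-safe;
    // the order of elements is then not guaranteed
    public static List<Integer> squaresViaForEach(int n) {
        List<Integer> out = Collections.synchronizedList(new ArrayList<>());
        IntStream.rangeClosed(1, n).parallel().forEach(i -> out.add(i * i));
        return out;
    }

    public static void main(String[] args) {
        System.out.println(squaresCollected(1_000).size());  // 1000
        System.out.println(squaresViaForEach(1_000).size()); // 1000
    }
}
```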

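2. In parallelStream(), avoid running forEach() directly on the default thread pool. Submit the work to a custom ForkJoinPool instead:

```java
// n is the desired parallelism; customerList is the collection being processed
ForkJoinPool customerPool = new ForkJoinPool(n);
customerPool.submit(
        () -> customerList.parallelStream().forEach(customer -> {
            // Specific operations
        }));
```

3. Try to avoid time-consuming operations when using parallelStream().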

If the operations are time-consuming, or involve heavy I/O or thread sleeps, avoid parallel streams.

By default, parallelStream().forEach() runs on the global common ForkJoinPool. Every parallel stream in the program therefore shares the same threads, along with other users of the common pool such as CompletableFuture async tasks started without an explicit executor. If those threads are tied up by slow tasks, work queues up and blocks every other part of the program that relies on the common pool.
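The following is a runnable version of the custom-pool idea from item 2. The method name, the pool size, and the list of IDs are hypothetical. In current JDKs, running the parallel stream from a task submitted to a dedicated `ForkJoinPool` keeps its work off the common pool. This relies on implementation behavior rather than a documented guarantee. The pool is shut down afterwards so its threads do not linger.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ForkJoinPool;

public class CustomPoolParallelStream {

    // Runs a parallel stream inside a dedicated pool instead of the shared common pool
    public static long sumOfSquares(List<Integer> customerIds, int parallelism) {
        ForkJoinPool customPool = new ForkJoinPool(parallelism);
        try {
            Callable<Long> task = () -> customerIds.parallelStream()
                    .mapToLong(id -> (long) id * id)
                    .sum();
            return customPool.submit(task).join(); // block until the pool finishes the work
        } finally {
            customPool.shutdown(); // release the pool's threads
        }
    }

    public static void main(String[] args) {
        List<Integer> customerIds = Arrays.asList(1, 2, 3, 4);
        System.out.println(sumOfSquares(customerIds, 2)); // 30
    }
}
```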

A parallel stream runs part of its work on ForkJoinPool worker threads rather than only on the calling thread. ThreadLocal values belong to a single thread and are not inherited by these pool threads, so code running on a worker thread cannot see the ThreadLocal data set by the calling thread.

When converting a Stream to a Map, watch out for duplicate keys: Collectors.toMap() without a merge function throws an IllegalStateException when two elements map to the same key.
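Here is a small sketch of the duplicate-key pitfall, using made-up words keyed by their length. Without a merge function, `Collectors.toMap()` fails on the second word of the same length. With a merge function, you decide which value wins. Here it keeps the later value, like the merge function in the earlier toMap example. The class and method names are illustrative.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

public class ToMapDuplicates {

    // No merge function: throws IllegalStateException if two words have the same length
    public static Map<Integer, String> byLengthStrict(List<String> words) {
        return words.stream()
                .collect(Collectors.toMap(String::length, Function.identity()));
    }

    // Merge function: when two words share a length, keep the later one
    public static Map<Integer, String> byLengthKeepLast(List<String> words) {
        return words.stream()
                .collect(Collectors.toMap(String::length, Function.identity(),
                        (existing, replacement) -> replacement));
    }

    public static void main(String[] args) {
        List<String> words = Arrays.asList("ab", "cd", "xyz");
        try {
            byLengthStrict(words);
        } catch (IllegalStateException e) {
            System.out.println("Duplicate key: " + e.getMessage());
        }
        System.out.println(byLengthKeepLast(words)); // {2=cd, 3=xyz}
    }
}
```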

Debugging can be challenging.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
