By Wuge

We first looked into tiered compilation because every time our service was released or restarted, its CPU usage and thread pool would briefly max out and then recover on their own. After troubleshooting, we found that the JVM was compiling hot spot code into machine code during startup, and the JIT compiler consumed a large amount of CPU in the process.

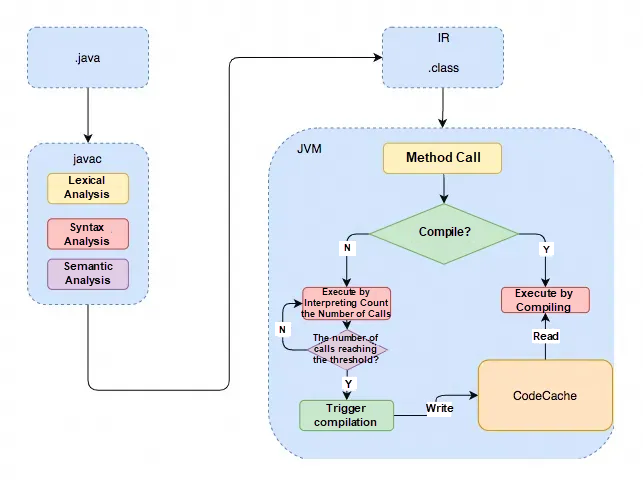

For a Java source code file to become directly executable machine instructions, it goes through two stages of compilation. The first stage converts the .java file into a .class file. The second stage converts the .class file into machine instructions.

The first stage of compilation is relatively fixed: Java code is translated into bytecode and packaged into a jar file. The bytecode can then be executed in two ways: it can be interpreted immediately by the interpreter, or it can be compiled by the JIT compiler. Which one is used depends on whether the code is hot spot code: when the JVM finds that a method or code block runs particularly frequently, it treats it as hot spot code. The JIT compiler then compiles that hot spot code directly into machine code specific to the local machine, optimizes it, and caches the translated machine code in the Code Cache for future use. Because the JIT compiler relies heavily on profiling information gathered at runtime and compiles code only when it is needed, this approach is called Just-in-time Compilation.

The Code Cache grows much like an ArrayList: it starts with an initial allocation, and as more and more code is compiled into machine code and space runs out, it expands. By default, the initial size is 2,496 KB and the maximum size is 240 MB; they can be configured with the JVM parameters -XX:InitialCodeCacheSize=N and -XX:ReservedCodeCacheSize=N respectively.
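To check which Code Cache sizes a running JVM actually uses, HotSpot exposes its VM options through the JDK-specific com.sun.management.HotSpotDiagnosticMXBean. The sketch below assumes a HotSpot-based JDK; the class name CodeCacheFlags is illustrative only. The same values can also be listed from the command line with `java -XX:+PrintFlagsFinal -version`.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Illustrative helper (hypothetical name); assumes a HotSpot-based JVM.
final class CodeCacheFlags {

    // Returns the current value of a VM option, or null if this JVM has no such option.
    static String vmOption(String name) {
        HotSpotDiagnosticMXBean hotspot =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        if (hotspot == null) {
            return null; // management interface not available on this JVM
        }
        try {
            return hotspot.getVMOption(name).getValue();
        } catch (IllegalArgumentException e) {
            return null; // option not known to this JVM
        }
    }

    public static void main(String[] args) {
        // Values are reported in bytes; try running with -XX:ReservedCodeCacheSize=256m.
        System.out.println("InitialCodeCacheSize  = " + vmOption("InitialCodeCacheSize"));
        System.out.println("ReservedCodeCacheSize = " + vmOption("ReservedCodeCacheSize"));
    }
}
```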

Since Java 9, Code Cache management has been divided into three segments:

• non-method segment: stores internal JVM code; its size is configured with the -XX:NonNMethodCodeHeapSize parameter.

• profiled-code segment: contains code compiled by C1, which has a short lifecycle (since it may be recompiled by C2 at any time); its size is configured with the -XX:ProfiledCodeHeapSize parameter.

• non-profiled segment: contains code compiled by C2, which has a longer lifecycle (because deoptimization is rare); its size is configured with the -XX:NonProfiledCodeHeapSize parameter.

Partitioning the Code Cache reduces memory fragmentation and improves execution efficiency. Generally, if the maximum size of the Code Cache is set to N, the non-method segment is reserved first, since it holds internal JVM code of a relatively fixed size. The remaining space is then split evenly between the profiled-code segment and the non-profiled segment.
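The split can be both observed and approximated in code. The sketch below, a hypothetical CodeHeapSegments class, prints the maximum size of each Code Cache memory pool reported by the standard java.lang.management API (on a segmented cache these pools are named CodeHeap '...'), and then applies the simplified split rule described above. The 8 MB non-method size is an assumed input for illustration, not a JVM default.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;

final class CodeHeapSegments {

    static final long MB = 1024L * 1024L;

    // Simplified model of the split described above: reserve the non-method segment
    // first, then divide the rest evenly. Returns {nonMethod, profiled, nonProfiled}.
    static long[] modelSplit(long reservedBytes, long nonMethodBytes) {
        long remaining = reservedBytes - nonMethodBytes;
        long profiled = remaining / 2;
        return new long[] {nonMethodBytes, profiled, remaining - profiled};
    }

    public static void main(String[] args) {
        // Actual segments of this JVM: Code Cache pool names start with "Code".
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage();
            if (pool.getName().startsWith("Code") && usage != null) {
                System.out.printf("%-32s max=%d MB%n", pool.getName(), usage.getMax() / MB);
            }
        }
        // Model: 240 MB reserved, hypothetical 8 MB non-method segment.
        long[] s = modelSplit(240 * MB, 8 * MB);
        System.out.printf("model: non-method=%d MB, profiled=%d MB, non-profiled=%d MB%n",
                s[0] / MB, s[1] / MB, s[2] / MB);
    }
}
```

With these inputs the model gives 116 MB each to the profiled and non-profiled segments, since (240 - 8) / 2 = 116.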

The JVM integrates two types of JIT compilers: the Client Compiler and the Server Compiler. The names come from their intended use cases. The Client Compiler is designed for client-side programs that run on personal computers, such as IntelliJ IDEA, where fast startup and quickly reaching decent performance matter most, because such programs are not expected to run for long periods. The Server Compiler, by contrast, is designed for server-side programs such as a Spring backend application, which run continuously and can gather usage information about the application through long-term profiling. With this runtime profiling data, the compiler can perform more global and aggressive optimizations to achieve the highest performance.

HotSpot VM comes with a Client Compiler known as C1. It offers fast startup but produces slower code than the Server Compiler: code optimized by the Server Compiler performs more than 30% better than code from the Client Compiler. HotSpot VM includes two Server Compilers: the default C2 and Graal. Graal is a newer Server Compiler introduced in JDK 9. It can be enabled with the JVM parameters -XX:+UnlockExperimentalVMOptions -XX:+UseJVMCICompiler.

Before Java 7, developers had to choose a compiler manually based on the nature of the service: the C1 compiler, with its faster compilation, for services that need quick startup or do not run for long, and the C2 compiler, with its better optimization, for backend services that demand peak performance. Java 7 introduced Tiered Compilation to strike a balance between the two options.

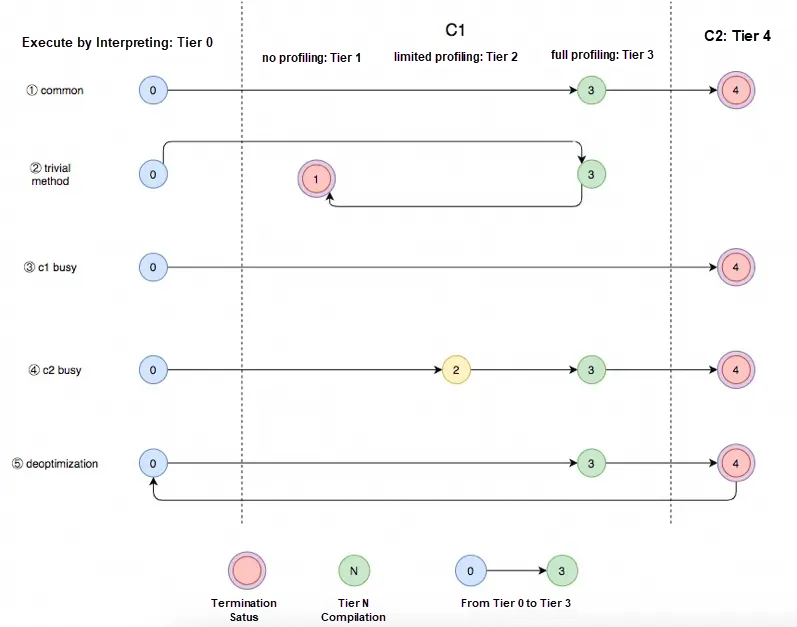

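The JVM divides tiered compilation into five levels:

• Tier 0: interpreted execution.

• Tier 1: C1 compilation with full optimization but no profiling.

• Tier 2: C1 compilation with limited profiling (invocation and back-edge counters only).

• Tier 3: C1 compilation with full profiling.

• Tier 4: C2 compilation.

Profiling also takes time, so in terms of execution speed, Tier 1 > Tier 2 > Tier 3.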

The common compilation path starts with interpretation (Tier 0), then moves to C1 with full profiling (Tier 3), and finally to C2 (Tier 4). However,

• If the method is trivial (small, or not worth profiling), it moves from Tier 3 to Tier 1, runs C1-compiled code without profiling, and stays there.

• If the C1 compiler is busy, the method can go directly to C2. Conversely, if the C2 compiler is busy, the method is first compiled at Tier 2 and later moves to Tier 3, where it waits until C2 is available. Going to Tier 2 first reduces the time spent in Tier 3, which executes more slowly than Tier 2. A busy C2 also indicates that many methods are already in Tier 3, queuing for C2 compilation.

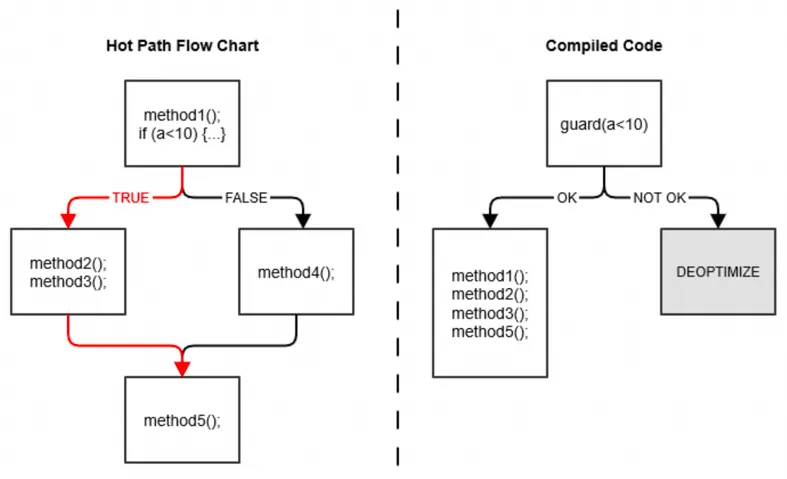

• If C2 performs an aggressive optimization, such as speculating on which branch will be taken, and the speculation turns out to be wrong during actual execution, it performs "deoptimization" and falls back to interpretation. In the example shown in the figure, the compiler observes at runtime that a is always less than 10, so the left (red) branch is always taken. Assuming this will continue, it optimizes the code by removing the if check and merging the relevant code. If a ≥ 10 ever occurs, the code is executed by the interpreter again.
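The transitions above can be summarized as a small state machine. The following toy model is a hypothetical sketch, not HotSpot's real policy, which decides based on invocation and back-edge counters, thresholds, and compiler queue lengths; the boolean inputs simply stand for the conditions named in the list.

```java
// Toy model of the tier transitions described above (hypothetical class).
final class TierModel {

    enum Tier { TIER0_INTERPRETER, TIER1_C1_NO_PROFILE, TIER2_C1_LIMITED_PROFILE,
                TIER3_C1_FULL_PROFILE, TIER4_C2 }

    static Tier next(Tier current, boolean trivial, boolean c1Busy, boolean c2Busy,
                     boolean speculationFailed) {
        switch (current) {
            case TIER0_INTERPRETER:
                if (c1Busy) return Tier.TIER4_C2;                 // skip C1 entirely
                if (c2Busy) return Tier.TIER2_C1_LIMITED_PROFILE; // avoid the slower Tier 3
                return Tier.TIER3_C1_FULL_PROFILE;                // the common path
            case TIER2_C1_LIMITED_PROFILE:
                return c2Busy ? current : Tier.TIER3_C1_FULL_PROFILE;
            case TIER3_C1_FULL_PROFILE:
                if (trivial) return Tier.TIER1_C1_NO_PROFILE;     // stays there for good
                return c2Busy ? current : Tier.TIER4_C2;          // wait in the C2 queue
            case TIER4_C2:
                return speculationFailed ? Tier.TIER0_INTERPRETER : current; // deoptimization
            default:
                return current;                                   // Tier 1 is terminal
        }
    }

    public static void main(String[] args) {
        // Walk the common path: Tier 0 -> Tier 3 -> Tier 4.
        Tier t = Tier.TIER0_INTERPRETER;
        for (int step = 0; step < 3; step++) {
            System.out.println(t);
            t = next(t, false, false, false, false);
        }
    }
}
```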

How do the results from C1, C2, and tiered compilation differ in terms of performance?

This section uses the conclusions of the article Startup, containers & Tiered Compilation, which also describes how to measure JIT compilation time with JDK Mission Control.

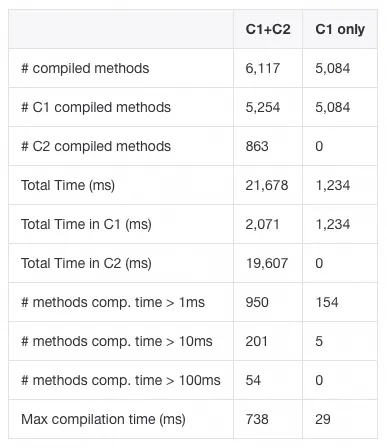

The author used Spring PetClinic, an official test project provided by Spring, to measure the compilation times of the C1 and C2 compilers. The results are as follows:

As shown in the above table, C2 compiled 863 methods in 19.6 seconds, while C1 compiled 5,254 methods in only 2.1 seconds, indicating a significant difference in compilation speed.
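Besides JDK Mission Control, the standard java.lang.management API offers a rough view of JIT activity: CompilationMXBean reports the compiler's name and the accumulated compilation time. The sketch below (the class name JitTime is illustrative) prints how that time grows during some hot work. Running it with -XX:TieredStopAtLevel=1 (C1 only) or -XX:-TieredCompilation (C2 only) gives a crude comparison, though it is no substitute for the measurements in the article.

```java
import java.lang.management.CompilationMXBean;
import java.lang.management.ManagementFactory;

final class JitTime {

    // Accumulated JIT compilation time in ms, or -1 if the JVM does not report it.
    static long totalCompilationMillis() {
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        if (jit == null || !jit.isCompilationTimeMonitoringSupported()) {
            return -1;
        }
        return jit.getTotalCompilationTime();
    }

    public static void main(String[] args) {
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        System.out.println("JIT: " + (jit == null ? "none" : jit.getName()));
        long before = totalCompilationMillis();
        long sink = 0;
        for (int i = 0; i < 5_000_000; i++) {   // some hot work to trigger compilation
            sink += Integer.toString(i).length();
        }
        long after = totalCompilationMillis();
        System.out.println("sink=" + sink + ", compilation time grew by " + (after - before) + " ms");
    }
}
```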

This section directly references the conclusions of Chapter 4, "Working with the JIT Compiler", of the book Java Performance: The Definitive Guide, specifically the section "Basic Tunings: Client or Server (or Both)".

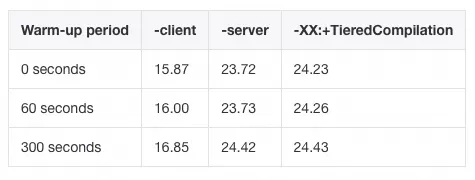

The author tested with a servlet application to measure its throughput per second under different compilation modes and warm-up periods. The results are as follows:

As the warm-up period increases, the compiler collects more comprehensive profiling information, leading to more thorough optimization, and thus the throughput of the service gradually rises.

At the same time, the performance of code compiled by C2 is significantly better than that of code compiled by C1. With tiered compilation enabled, performance tends towards the optimal performance of C2 over time.

As we all know, object-oriented design requires encapsulation, which means getter and setter methods are also needed:

```java
public class Point {

    private int x, y;

    public int getX() { return x; }

    public void setX(int i) { x = i; }
}
```

The cost of invoking a method is relatively high because it involves switching stack frames.

```java
Point p = getPoint();
p.setX(p.getX() * 2);
```

In the above example, when p.getX() is executed, the program must save the execution location of the current method, create and push the getter's stack frame, read p.x within that frame, return the value, and then pop the frame to resume the current method. The same process applies when executing p.setX(...).

Thus, the compiler can directly inline the getX() and setX() methods to improve the execution efficiency of the compiled code. After optimization, the actual executed code is as follows:

```java
Point p = getPoint();
p.x = p.x * 2;
```

In this article, the performance loss after disabling inlining optimization through -XX:-Inline exceeds 50%.

The compiler decides whether a method is worth inlining mainly based on its size. As mentioned earlier, the compiler profiles code at runtime. For hot spot code, a method whose bytecode is smaller than 325 bytes (configurable with -XX:MaxFreqInlineSize=N) is inlined. For non-hot code, a method whose bytecode is smaller than 35 bytes (configurable with -XX:MaxInlineSize=N) is inlined.

Generally, the more methods are inlined, the more efficient the generated code. However, inlining more methods also makes compilation take longer, so the program reaches its peak performance later.
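To see inlining decisions for the Point example, one option is HotSpot's diagnostic -XX:+PrintInlining flag, which must be unlocked with -XX:+UnlockDiagnosticVMOptions, for example `java -XX:+UnlockDiagnosticVMOptions -XX:+PrintInlining InlineDemo`. The following is a sketch with a hypothetical InlineDemo class; the PrintInlining output format varies between JDK versions, and the elapsed time it prints is only a rough comparison against a run with -XX:-Inline.

```java
// Hypothetical demo class for observing inlining of tiny accessors.
final class InlineDemo {

    static final class Point {
        private int x;
        int getX() { return x; }
        void setX(int i) { x = i; }
    }

    // Hot loop calling two tiny accessors, the typical inlining candidates.
    static long run(Point p, int rounds) {
        long checksum = 0;
        for (int r = 0; r < rounds; r++) {
            p.setX(p.getX() * 2 % 1000 + 1); // keeps x small
            checksum += p.getX();
        }
        return checksum;
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        long checksum = run(new Point(), 100_000_000);
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println("checksum=" + checksum + ", elapsed=" + elapsedMs + " ms");
    }
}
```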

The CPU executes instructions in a pipeline, similar to workers on an assembly line. When a method starts running, the CPU loads the instructions from memory into the CPU cache in the proper order. Then, the pipeline starts moving, and the CPU executes each instruction as it comes through. When instructions are executed in sequence, the efficiency is highest, but loops disrupt this sequence.

Consider the following Java code:

```java
public class LoopUnroll {

    public static void main(String[] args) {
        int MAX = 1000000;
        long[] data = new long[MAX];
        java.util.Random random = new java.util.Random();
        for (int i = 0; i < MAX; i++) {
            data[i] = random.nextLong();
        }
    }
}
```

The compiled bytecode represents the pipeline when the CPU executes instructions:

```
public static void main(java.lang.String[]);
  Code:
     0: ldc           #2                  // int 1000000
     2: istore_1
     3: iload_1
     4: newarray       long
     6: astore_2
     7: new           #3                  // class java/util/Random
    10: dup
    11: invokespecial #4                  // Method java/util/Random."<init>":()V
    14: astore_3
    15: iconst_0
    16: istore        4
    18: iload         4
    20: iload_1
    21: if_icmpge     38
    24: aload_2
    25: iload         4
    27: aload_3
    28: invokevirtual #5                  // Method java/util/Random.nextLong:()J
    31: lastore
    32: iinc          4, 1
    35: goto          18
    38: return
```

The pipeline moves from top to bottom, and the CPU executes the code accordingly. However, because of the loop, when execution reaches offset 35, the goto instruction sends the CPU back to offset 18, as if the pipeline's direction were reversed. Reversing the pipeline is more costly than keeping it running in one direction, because the pipeline must first be stopped, then reversed, and finally restarted. When such a reversal (a backward jump, or back branch) occurs, the CPU needs to preserve the current pipeline state, remember where it reversed from (offset 35), reread the following instructions from main memory, and load them into the pipeline for execution. This process incurs a performance loss comparable to a CPU cache miss.

To minimize these reversals, the JIT compiler performs loop unrolling, which reduces the number of iterations and therefore the number of back branches. In the example above, the optimized code might look like this:

```java
public class LoopUnroll {

    public static void main(String[] args) {
        int MAX = 1000000;
        long[] data = new long[MAX];
        java.util.Random random = new java.util.Random();
        // Unrolled 5 times: valid here because MAX is a multiple of 5; otherwise a
        // remainder loop would be needed for the last MAX % 5 elements.
        for (int i = 0; i < MAX; i += 5) {
            data[i] = random.nextLong();
            data[i + 1] = random.nextLong();
            data[i + 2] = random.nextLong();
            data[i + 3] = random.nextLong();
            data[i + 4] = random.nextLong();
        }
    }
}
```

This explanation is primarily based on the article: Optimize loops with long variables in Java.

However, if the loop counter i in the above example is changed from int to long, loop unrolling does not occur. This is mainly because the JVM has historically lacked support for optimizing loops with a long counter. Some JVM optimizations must handle int overflow, which they typically do by internally promoting the int counter to a long. Supporting the same optimizations for a long loop counter would require handling long overflow, and there is no larger integer type than long that can do this cheaply.

Additionally, using a long loop counter introduces heuristic safepoint checks and full array range checks, further reducing loop efficiency. The article points out that for the same logic, using the long loop counter is about 64% slower than using the int loop counter.
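The int-versus-long difference can be tried with the two loops below. This is a crude sketch (the class LoopCounters is hypothetical) timed with System.nanoTime over a few rounds; a harness such as JMH is needed for trustworthy numbers, and results may vary across JDK versions.

```java
final class LoopCounters {

    // int counter: eligible to be treated as a counted loop.
    static long sumIntCounter(long[] data) {
        long sum = 0;
        for (int i = 0; i < data.length; i++) {
            sum += data[i];
        }
        return sum;
    }

    // long counter: same logic, but the loop is harder for the JIT to optimize.
    static long sumLongCounter(long[] data) {
        long sum = 0;
        for (long l = 0; l < data.length; l++) {
            sum += data[(int) l];
        }
        return sum;
    }

    public static void main(String[] args) {
        long[] data = new long[1_000_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i;
        }
        for (int round = 0; round < 5; round++) {   // early rounds double as warm-up
            long t0 = System.nanoTime();
            long a = sumIntCounter(data);
            long t1 = System.nanoTime();
            long b = sumLongCounter(data);
            long t2 = System.nanoTime();
            System.out.printf("int: %d us, long: %d us (sums equal: %b)%n",
                    (t1 - t0) / 1000, (t2 - t1) / 1000, a == b);
        }
    }
}
```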

A safepoint is a special location that the JVM inserts into program code. When a thread reaches this location, its state is consistent: all changes to the variables in the current code block have been completed. At that point, the JVM can safely pause the thread and perform additional work, such as garbage collection or any other operation that requires STW (Stop-The-World), for example a full GC, the deoptimization described above, or a thread dump.

During interpretation, safepoints can naturally be placed between bytecodes: right after one bytecode finishes and before the next one starts. For JIT-compiled code, however, the compiler must use heuristics to decide where to place safepoints, and the ideal location is at the back branch (the backward jump) of a loop.

Consider the following code:

```java
// data is a long[] field and MAX is a constant (1,000,000 in the assembly below)
private long longStride1()
{
    long sum = 0;
    for (long l = 0; l < MAX; l++)
    {
        sum += data[(int) l];
    }
    return sum;
}
```

In the compiled code, you can see a safepoint check in every iteration of the loop:

```
// ARRAY LENGTH INTO r9d
0x00007fefb0a4bb7b: mov r9d,DWORD PTR [r11+0x10]
// JUMP TO END OF LOOP TO CHECK COUNTER AGAINST LIMIT
0x00007fefb0a4bb7f: jmp 0x00007fefb0a4bb90
// BACK BRANCH TARGET - SUM ACCUMULATES IN r14
0x00007fefb0a4bb81: add r14,QWORD PTR [r11+r10*8+0x18]
// INCREMENT LOOP COUNTER IN rbx
0x00007fefb0a4bb86: add rbx,0x1
// SAFEPOINT POLL
0x00007fefb0a4bb8a: test DWORD PTR [rip+0x9f39470],eax
// IF LOOP COUNTER >= 1_000_000 THEN JUMP TO EXIT CODE
0x00007fefb0a4bb90: cmp rbx,0xf4240
0x00007fefb0a4bb97: jge 0x00007fefb0a4bbc9
// MOVE LOW 32 BITS OF LOOP COUNTER INTO r10d
0x00007fefb0a4bb99: mov r10d,ebx
// ARRAY BOUNDS CHECK AND BRANCH BACK TO LOOP START
0x00007fefb0a4bb9c: cmp r10d,r9d
0x00007fefb0a4bb9f: jb 0x00007fefb0a4bb81
```

So why is there no safepoint check when the loop counter is an int? Because the JVM optimizes it away for the sake of loop performance. If you want to force the JVM to keep the safepoint check, you can make the loop's step size a variable:

```java
private long intStrideVariable(int stride)
{
    long sum = 0;
    for (int i = 0; i < MAX; i += stride)
    {
        sum += data[i];
    }
    return sum;
}
```

When the JVM needs a global pause, each thread of a multi-threaded program runs until it reaches its next safepoint. When Thread 1 reaches its safepoint, it pauses and waits for Threads 2 and 3 to reach theirs. Once all threads have reached a safepoint, there is a window in which every thread is safely paused, allowing the JVM to perform global operations such as GC; afterwards, the threads proceed together.

However, as mentioned above, for int loops the JVM optimizes away the safepoint check at the back branch. If such a loop runs for a long time, all the other threads that have already reached their safepoints end up waiting for this single looping thread.

This shows up as an exceptionally long STW pause, or as a program that has clearly started multiple threads whose tasks are all unfinished, yet after a while only one thread is still running.

Bug examples:

• An exceptionally long STW duration.

• A multi-threaded program becomes effectively single-threaded after running for a while.

To solve this problem, you can use the JVM parameter -XX:+UseCountedLoopSafepoints. With this parameter, the JVM forcibly inserts a safepoint every N iterations. However, according to Oracle's documentation, programs benefit from this approach only in rare scenarios.

If you suspect that an extremely long STW pause is caused by waiting for a looping thread to reach a safepoint, you can add the JVM parameters -XX:+SafepointTimeout -XX:SafepointTimeoutDelay=1000. With them, the JVM prints a timeout log whenever reaching a safepoint takes longer than 1,000 ms, as in the following example:

```
# SafepointSynchronize::begin: Timeout detected:
# SafepointSynchronize::begin: Timed out while spinning to reach a safepoint.
# SafepointSynchronize::begin: Threads which did not reach the safepoint:
# "pool-1-thread-2" #12 prio=5 os_prio=0 tid=0x0000000019004800 nid=0x1480 runnable [0x0000000000000000]
   java.lang.Thread.State: RUNNABLE
# SafepointSynchronize::begin: (End of list)
```

Note that this safepoint-check elimination applies only to counted loops, that is, loops with an int counter, a constant stride, and a loop-invariant bound. For non-counted loops, a safepoint check is kept in every iteration.

Additionally, if your JVM is running in debug mode, it does not eliminate safepoint checks. This means that even if your production program has this bug, you will not be able to reproduce it in debug mode.

To understand this section, first read the previous section carefully. Loop strip mining is a technique introduced in Java 10.

In the previous section, we discussed that for counted loops with int loop counters, the JIT compiler eliminates the safepoint check at the back branch. In a multi-threaded program, this can leave all threads waiting for a single looping thread to reach its next safepoint. Occasional long STW pauses like this are particularly harmful for low-latency GCs, because they make the GC appear slow, and they also force fast threads to wait at a safepoint. On the other hand, forcibly inserting safepoints every N iterations with -XX:+UseCountedLoopSafepoints hurts loop performance.

To strike a balance, Java 10 introduces Loop Strip Mining, which is essentially a loop partitioning technique. Instead of checking for a safepoint in every iteration, the JIT compiler splits a long counted loop into strips: an inner loop that runs up to LoopStripMiningIter iterations (configurable with -XX:LoopStripMiningIter=N) without a safepoint check, and an outer loop that performs one safepoint check after each strip. This bounds how long a thread can run without reaching a safepoint, so a long loop in a single thread no longer drags down all the other threads, while the cost of safepoint checks stays small.

```java
for (int i = start; i < stop; i += stride) {
    // body
}
```

The optimized pseudocode is as follows:

```
i = start;
if (i < stop) {
    do {
        // LoopStripMiningIter: the number of iterations in each strip
        int next = MIN(stop, i + LoopStripMiningIter * stride);
        do {
            // body
            i += stride;
        } while (i < next);
        // Semantically equivalent to inserting a safepoint in the middle of the original loop
        safepoint();
    } while (i < stop);
}
```

The purpose of array range checks is to determine whether an array access is out of bounds. If it is, an ArrayIndexOutOfBoundsException is thrown.

Consider accessing an array within a loop:

```java
for (int index = Start; index < Limit; index++) {
    Array[index] = 0;
}
```

Each iteration of the loop has to check whether index is within the bounds of Array, which is time-consuming. To mitigate this, the JIT compiler minimizes range checks inside loops.

This technique divides a loop into three parts: the pre-loop, the main loop, and the post-loop. Based on the loop's stride and the array length, the compiler works out the range of iterations in which every array access is guaranteed to be in bounds. The iterations before that range run with range checks and form the pre-loop; the iterations inside the range run without range checks and form the main loop; any iterations after it run with range checks again and form the post-loop.

The optimized version of the above code might look like this:

```java
// Clamp to Limit so the pre-loop never runs more iterations than the original loop
int MidStart = Math.min(Math.max(Start, 0), Limit);
int MidLimit = Math.min(Limit, Array.length);
int index = Start;
for (; index < MidStart; index++) { // PRE-LOOP
    if (index < 0 || index >= Array.length) { // RANGE CHECK
        throw new ArrayIndexOutOfBoundsException();
    }
    Array[index] = 0;
}
for (; index < MidLimit; index++) { // MAIN LOOP
    Array[index] = 0; // NO RANGE CHECK
}
for (; index < Limit; index++) { // POST-LOOP
    if (index < 0 || index >= Array.length) { // RANGE CHECK
        throw new ArrayIndexOutOfBoundsException();
    }
    Array[index] = 0;
}
```

This optimization has several prerequisites:

• The array accessed by the loop does not change during the loop.

• The step size of the loop remains constant during the loop.

• When accessed, the array subscript is linearly related to the loop counter.

```java
for (int x = Start; x < Limit; x++) {
    Array[k * x + b] = 0;
}
```

In this example, k and b are constants that do not change during the loop, x is the loop counter, and the access of k * x + b is an access that is linearly related to the loop counter.

• The current loop is identified as a hot loop during JIT profiling.
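The bounds of the main loop follow from the linear form of the index. For Array[k * x + b] with k > 0, an access is in bounds exactly when ceil(-b / k) <= x < ceil((length - b) / k). The sketch below (a hypothetical RangeCheckSplit class) computes that range and splits a loop into pre-, main, and post-loops accordingly. It is a source-level illustration only: it assumes k > 0 and no int overflow in k * x + b, and the JVM itself still range-checks the main-loop accesses in this Java code.

```java
// Hypothetical helper illustrating pre-/main-/post-loop splitting for Array[k * x + b].
final class RangeCheckSplit {

    // Ceiling of a / k for a positive divisor k.
    static long ceilDiv(long a, long k) {
        return -Math.floorDiv(-a, k);
    }

    // Returns {mainStart, mainEnd}: the x values in [start, limit) with 0 <= k * x + b < length.
    static int[] mainLoopRange(int start, int limit, int k, int b, int length) {
        if (k <= 0) {
            throw new IllegalArgumentException("this sketch assumes k > 0");
        }
        long lo = ceilDiv(-(long) b, k);          // smallest x with k * x + b >= 0
        long hi = ceilDiv((long) length - b, k);  // smallest x with k * x + b >= length
        long mainStart = Math.min(Math.max(start, lo), limit);
        long mainEnd = Math.max(mainStart, Math.min(limit, hi));
        return new int[] {(int) mainStart, (int) mainEnd};
    }

    static void checkedStore(int[] array, int index, int value) {
        if (index < 0 || index >= array.length) {
            throw new ArrayIndexOutOfBoundsException(index);
        }
        array[index] = value;
    }

    // Same effect as: for (x = start; x < limit; x++) array[k * x + b] = value;
    static void fill(int[] array, int start, int limit, int k, int b, int value) {
        int[] range = mainLoopRange(start, limit, k, b, array.length);
        int x = start;
        for (; x < range[0]; x++) {                // pre-loop: explicit range check
            checkedStore(array, k * x + b, value);
        }
        for (; x < range[1]; x++) {                // main loop: index proven in bounds
            array[k * x + b] = value;
        }
        for (; x < limit; x++) {                   // post-loop: explicit range check
            checkedStore(array, k * x + b, value);
        }
    }
}
```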

Loop unswitching is primarily used to reduce the number of conditional checks inside loops:

```java
for (i = 0; i < N; i++) {
    if (x) {
        a[i] = 0;
    } else {
        b[i] = 0;
    }
}
```

The code above can be optimized by the compiler to:

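```java
if (x) {
    for (i = 0; i < N; i++) {
        a[i] = 0;
    }
} else {
    for (i = 0; i < N; i++) {
        b[i] = 0;
    }
}
```

This optimization only needs static analysis showing that x does not change inside the loop, so it does not rely on runtime profiling. Note, however, that javac itself performs almost no optimizations of this kind; in HotSpot, loop unswitching is carried out by the C2 JIT compiler.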

Escape Analysis (EA) is a code analysis process that, based on the analysis results, enables various interesting optimizations, such as scalar replacement and lock elision.

The following article explains in detail how the JIT compiler utilizes the results of escape analysis to perform various optimizations, but the content is quite theoretical and can be difficult to understand. If you are interested, you can take a look:

SEEING ESCAPE ANALYSIS WORKING

For a brief overview, read the explanation below.

If an object cannot be accessed from outside the method and can be broken down into its fields, the program does not create the object at all when it runs; instead, it creates the object's member variables directly in its place. This is called scalar replacement. Once the object is split up, its member variables can be kept on the stack or in registers, so no memory needs to be allocated for the original object.

```java
public void foo() {
    MyObject object = new MyObject();
    object.x = 1;
    ... // do something
}
```

After optimization by the compiler:

```java
public void foo() {
    int x = 1;
    ... // do something
}
```

In this example, MyObject object = new MyObject(); in the code before optimization allocates memory on the heap, and that memory is later reclaimed by GC. In contrast, int x = 1; in the optimized code is allocated directly on the stack of the current method, and stack memory is released automatically when the method's stack frame finishes execution. There is therefore no garbage for GC to reclaim, and stack memory access is faster than heap memory access. Together, these two benefits improve the program's execution efficiency.
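One way to observe scalar replacement without a benchmark harness is to count the bytes the current thread allocates. The sketch below relies on the HotSpot-specific com.sun.management.ThreadMXBean.getThreadAllocatedBytes; the class name and loop sizes are illustrative assumptions. After warm-up, the measured loop should typically allocate far less than one object per call, while running with -XX:-DoEscapeAnalysis should make the allocations reappear. Exact numbers depend on the JVM and on when compilation happens.

```java
import java.lang.management.ManagementFactory;

final class ScalarReplacementDemo {

    static final class MyObject {
        int x;
        MyObject(int x) { this.x = x; }
    }

    // The MyObject instance never leaves this method, so C2 may scalar-replace it.
    static int nonEscaping(int v) {
        MyObject o = new MyObject(v);
        return o.x + 1;
    }

    // Bytes allocated so far by the current thread, or -1 if unsupported (HotSpot-specific API).
    static long allocatedBytes() {
        java.lang.management.ThreadMXBean base = ManagementFactory.getThreadMXBean();
        if (!(base instanceof com.sun.management.ThreadMXBean)) {
            return -1;
        }
        com.sun.management.ThreadMXBean bean = (com.sun.management.ThreadMXBean) base;
        if (!bean.isThreadAllocatedMemorySupported()) {
            return -1;
        }
        return bean.getThreadAllocatedBytes(Thread.currentThread().getId());
    }

    public static void main(String[] args) {
        long sink = 0;
        for (int i = 0; i < 1_000_000; i++) {      // warm-up so the JIT compiles nonEscaping
            sink += nonEscaping(i);
        }
        long before = allocatedBytes();
        for (int i = 0; i < 10_000_000; i++) {
            sink += nonEscaping(i);
        }
        long after = allocatedBytes();
        System.out.println("sink=" + sink + ", bytes allocated in measured loop: " + (after - before));
    }
}
```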

During optimization, the compiler performs escape analysis at runtime to determine whether the object can be accessed outside the scope of the current method (that is, whether it escapes): for instance, whether it is returned by the current method, passed as an argument to another method, or accessed by multiple threads. If the compiler determines that the object does not escape, the optimization above is applied.

Due to limitations of the current EA implementation, if an object reaches the point where it is used through different control-flow paths, the compiler does not optimize it, even if it appears to be non-escaping from our perspective. In the following example, the o object does not escape, yet it is not optimized in practice.

```java
public void foo(boolean flag) {
    MyObject o;
    if (flag) {
        o = new MyObject(x);
    } else {
        o = new MyObject(x);
    }
    ... // do something
}
```

How much of a performance boost can scalar replacement provide?

```
Benchmark                                      Mode  Cnt     Score    Error   Units
ScalarReplacement.single                       avgt   15     1.919 ±  0.002   ns/op
ScalarReplacement.single:·gc.alloc.rate        avgt   15    ≈ 10⁻⁴           MB/sec
ScalarReplacement.single:·gc.alloc.rate.norm   avgt   15    ≈ 10⁻⁶             B/op
ScalarReplacement.single:·gc.count             avgt   15       ≈ 0           counts
ScalarReplacement.split                        avgt   15     3.781 ±  0.116   ns/op
ScalarReplacement.split:·gc.alloc.rate         avgt   15  2691.543 ± 81.183  MB/sec
ScalarReplacement.split:·gc.alloc.rate.norm    avgt   15    16.000 ±  0.001    B/op
ScalarReplacement.split:·gc.count              avgt   15  1460.000           counts
ScalarReplacement.split:·gc.time               avgt   15   929.000               ms
```

This article provides a comparative test of the above two examples. ScalarReplacement.single is the performance result after scalar replacement and ScalarReplacement.split is the performance result after adding control flow to cheat EA without scalar replacement.

Personally, I think the above ns/op value is meaningless because it is not a comparative experiment under the same code environment, and the ScalarReplacement.split results are affected by the performance impact of the added if-else judgment. Therefore, it cannot be used to compare the performance improvement brought by scalar replacement.

Instead, the improvements in gc.count and gc.time are more meaningful, since the ultimate benefit of scalar replacement is eliminating heap memory allocation and the associated GC work.

Scalar Replacement and Allocation on the Stack

As we all know, in terms of access speed, register > stack memory > heap memory. Therefore, another EA-based compilation optimization strategy is allocation on the stack, which involves storing entire objects on the stack for those that do not escape. However, the JVM does not implement this strategy; instead, it uses scalar replacement as an alternative. This mainly takes into account two factors:

• In Java's memory model, the stack holds only primitive values and object references, and a reference points to the object itself in heap memory. Allocating whole objects on the stack would break this pattern.

• The storage of an object includes not only the storage of its member variables but also the header structure of the object itself. Regardless of whether an object is allocated on the stack or the heap, allocating such an object always includes storing its header structure information. However, with scalar replacement, the header structure is eliminated, and only the member variables are allocated.

A classic Java interview question is the difference between StringBuffer and StringBuilder: the former is thread-safe, whereas the latter is not, because StringBuffer's append method is declared with the synchronized keyword and therefore locks the object.

In practice, however, in the following code, StringBuffer and StringBuilder perform virtually the same. This is because an object created inside a method and never shared can only be accessed by the current thread, so there is no contention for reading and writing it. In this case, the JIT compiler can perform lock elision and remove the object's lock.

```java
public static String getString(String s1, String s2) {
    StringBuffer sb = new StringBuffer();
    sb.append(s1);
    sb.append(s2);
    return sb.toString();
}
```

Lock elision is based on escape analysis, and a necessary condition for non-escaping is that the current object is visible only to one thread. Consequently, for internal operations on objects that do not escape, lock elision can be performed, removing synchronization logic to improve efficiency.
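The StringBuffer/StringBuilder comparison can be reproduced roughly as follows. This is a sketch (the class name LockElisionDemo is hypothetical) with naive System.nanoTime timing; comparing a normal run with one using -XX:-EliminateLocks shows whether lock elision makes a difference on a given JVM. For reliable numbers, a harness such as JMH, as used in the article cited below, is the better tool.

```java
import java.util.function.BinaryOperator;

final class LockElisionDemo {

    // sb never escapes, so the JIT may elide StringBuffer's synchronized locking.
    static String withBuffer(String s1, String s2) {
        StringBuffer sb = new StringBuffer();
        sb.append(s1);
        sb.append(s2);
        return sb.toString();
    }

    // Unsynchronized baseline.
    static String withBuilder(String s1, String s2) {
        StringBuilder sb = new StringBuilder();
        sb.append(s1);
        sb.append(s2);
        return sb.toString();
    }

    // Crude timing loop; the length check keeps the results from being discarded.
    static long timeMillis(BinaryOperator<String> f, int iterations) {
        long start = System.nanoTime();
        long totalLength = 0;
        for (int i = 0; i < iterations; i++) {
            totalLength += f.apply("a", "b").length();
        }
        if (totalLength != 2L * iterations) {
            throw new IllegalStateException("unexpected length");
        }
        return (System.nanoTime() - start) / 1_000_000;
    }

    public static void main(String[] args) {
        for (int round = 0; round < 5; round++) {   // repeat so the JIT has time to compile
            System.out.printf("StringBuffer: %d ms, StringBuilder: %d ms%n",
                    timeMillis(LockElisionDemo::withBuffer, 10_000_000),
                    timeMillis(LockElisionDemo::withBuilder, 10_000_000));
        }
    }
}
```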

Lock elision can be disabled with the JVM parameter -XX:-EliminateLocks. This article demonstrates experimentally that performance with lock elision is almost identical to that of the version without locking, whereas disabling lock elision makes the locked version tens of times slower than the unlocked one.

Peephole optimization is a late-stage optimization strategy employed by the compiler aimed at reducing the computational intensity of instructions within local code blocks. These optimizations do not require profiling and can be accomplished directly through static analysis of the code by the compiler.

Note that peephole optimization is machine-dependent. Compilers tailor optimizations based on the characteristics of different processors' instruction sets, meaning that the results of peephole optimization can vary across different processors.

For example, Intel introduced the AVX (Advanced Vector Extensions) instruction set with its Sandy Bridge architecture in 2011 and later extended it with the AVX-512 instruction set in 2016. Starting from JDK 9, the JIT compiler includes specific optimizations for these instructions, although they were initially disabled by default; from JDK 11 onwards, they are enabled by default. You can control this with the JVM parameter -XX:UseAVX=N, where N can be:

• 0: Disable AVX instruction set optimizations

• 1: Use AVX level 1 instructions for optimization (supported only on Sandy Bridge and newer CPUs)

• 2: Use AVX level 2 instructions for optimization (supported only on Haswell and newer CPUs)

• 3: Use AVX level 3 instructions for optimization (supported only on Knights Landing and newer CPUs)

At runtime, the JVM automatically detects the current CPU architecture and selects the highest version of the instruction set supported by the CPU for optimization, that is, effectively setting the value of N in -XX:UseAVX=N.

Use the laws of algebra or discrete mathematics for optimization

```
y = x * 3
=> y = (x << 1) + x;

A && (A || B)
=> A;

A || (A && B)
=> A;
```

Replace slower instructions with faster ones

For example, for the squaring of x:

```java
x * x
```

Directly compiled bytecode:

```
iload x
iload x
imul
```

Here iload x loads the int variable x and pushes it onto the operand stack, and imul multiplies the top two operands. After peephole optimization, the result can be:

```
iload x
dup
imul
```

The dup instruction duplicates the value on top of the stack, which is cheaper than loading the variable again with iload.
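A peephole rewrite is only legal if it gives the same result for every input. The small checker below (a hypothetical class) tests the algebraic rewrites listed earlier under Java semantics: x * 3 and (x << 1) + x agree for all int values, even when the multiplication overflows, because both wrap modulo 2^32. The absorption rewrites additionally assume that evaluating A has no side effects, since A appears twice in the original expressions; thanks to short-circuit evaluation, B is never evaluated in either original expression.

```java
// Hypothetical checker for the algebraic peephole rewrites above.
final class PeepholeIdentities {

    // x * 3 == (x << 1) + x, including when the multiplication overflows.
    static boolean timesThreeHolds(int x) {
        return x * 3 == (x << 1) + x;
    }

    // Absorption laws: A && (A || B) == A and A || (A && B) == A, for all inputs.
    static boolean absorptionHolds() {
        boolean[] values = {false, true};
        for (boolean a : values) {
            for (boolean b : values) {
                if ((a && (a || b)) != a || (a || (a && b)) != a) {
                    return false;
                }
            }
        }
        return true;
    }

    public static void main(String[] args) {
        int[] samples = {0, 1, -1, 7, Integer.MAX_VALUE, Integer.MIN_VALUE, 715_827_883};
        for (int x : samples) {
            System.out.println(x + ": " + timesThreeHolds(x));
        }
        System.out.println("absorption: " + absorptionHolds());
    }
}
```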

As the name suggests, dead code elimination means removing inaccessible or useless parts of the code.

```java
int dead()
{
    int a=10;
    int z=50;
    int c=z*5;
    a=20;
    a=a*10;
    return c;
}
```

After optimization:

```java
int dead()
{
    int z=50;
    int c=z*5;
    return c;
}
```

• Principle Analysis and Practice of Java Just-in-time Compiler:

https://tech.meituan.com/2020/10/22/java-jit-practice-in-meituan.html

• 21 Deep Dive Into the JVM Just-in-time Compiler JIT to Optimize Java Compilation:

https://learn.lianglianglee.com/专栏/Java并发编程实战/21%20%20深入JVM即时编译器JIT,优化Java编译.md

• Startup, containers & Tiered Compilation:

https://jpbempel.github.io/2020/05/22/startup-containers-tieredcompilation.html

• Chapter 4. Working with the JIT Compiler:

https://www.oreilly.com/library/view/java-performance-the/9781449363512/ch04.html

• Deep Dive Into the New Java JIT Compiler – Graal:

https://www.baeldung.com/graal-java-jit-compiler

• Tiered Compilation in JVM:

https://www.baeldung.com/jvm-tiered-compilation

• Loop Unrolling:

https://blogs.oracle.com/javamagazine/post/loop-unrolling

• Optimize loops with long variables in Java:

https://developers.redhat.com/articles/2022/08/25/optimize-loops-long-variables-java

• JVM Source Code Analysis – Safepoint:

https://www.jianshu.com/p/c79c5e02ebe6

• java code execution yields to different results in debug without breakpoints and normal run. Is ExecutorService broken?:

https://stackoverflow.com/a/38427546

• The situation that the ParNew application pauses occasionally for several seconds:

https://hllvm-group.iteye.com/group/topic/38836

• Loop Strip Mining in C2:

https://cr.openjdk.org/~roland/loop_strip_mining.pdf

• RangeCheckElimination:

https://wiki.openjdk.org/display/HotSpot/RangeCheckElimination

• SEEING ESCAPE ANALYSIS WORKING:

https://www.javaadvent.com/2020/12/seeing-escape-analysis-working.html

• JVM Anatomy Quark #18: Scalar Replacement:

https://shipilev.net/jvm/anatomy-quarks/18-scalar-replacement/

• JVM Anatomy Quark #19: Lock Elision:

https://shipilev.net/jvm/anatomy-quarks/19-lock-elision/

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
