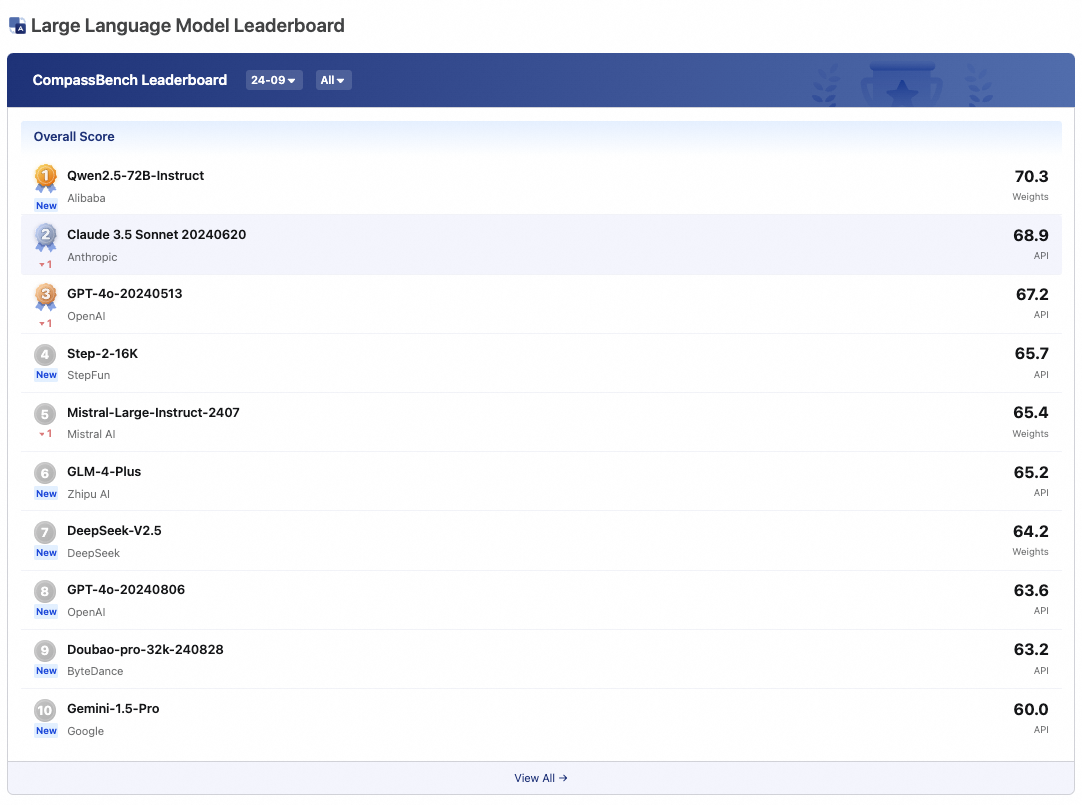

Qwen 2.5 tops OpenCompass LLM Leaderboard in its September update

Alibaba Cloud’s open-source Qwen 2.5-72B-Instruct has claimed the top spot on the OpenCompass large language model leaderboard in the leaderboard's latest September update. In various benchmarks, it surpasses even closed-source SOTA models such as Claude 3.5 and GPT-4o.

Qwen 2.5-72B-Instruct showcased strong overall capabilities, achieving the highest score of 74.2 in coding and an impressive 77 in mathematics, outperforming Claude 3.5 (72.1) and GPT-4o (70.6). In a recent article, OpenCompass commended Qwen 2.5 as its first-ever open-source champion, reflecting the rapid progress in the open-source LLM community.

The bi-monthly leaderboard, established by the Shanghai Artificial Intelligence Laboratory, assesses LLM performance across language, knowledge, reasoning, mathematics, coding, instruction, and agent capabilities.

During the Apsara Conference 2024, Alibaba Cloud introduced over 100 open-source Qwen2.5 multimodal models to the global open-source community. These models range from 0.5 to 72 billion parameters in size and support over 29 languages.

Learn more about the over 100 open-source Qwen2.5 multimodal models introduced at the Apsara Conference 2024.

Watch the replay of the Apsara Conference 2024 at this link!

