By Li Bo (Aohai), an AI product expert at Alibaba Cloud who has worked in the AI industry for five years and is responsible for AI platform products

This article provides an overview of the DataScience node of E-MapReduce. It covers the DataScience deep learning framework, the PAI-Alink machine learning algorithm platform for unified streaming and batch processing, and the DataScience atomic components AutoML, FaissServer, and PAI-EAS.

This article was adapted from a PowerPoint presentation and can be divided into two main parts:

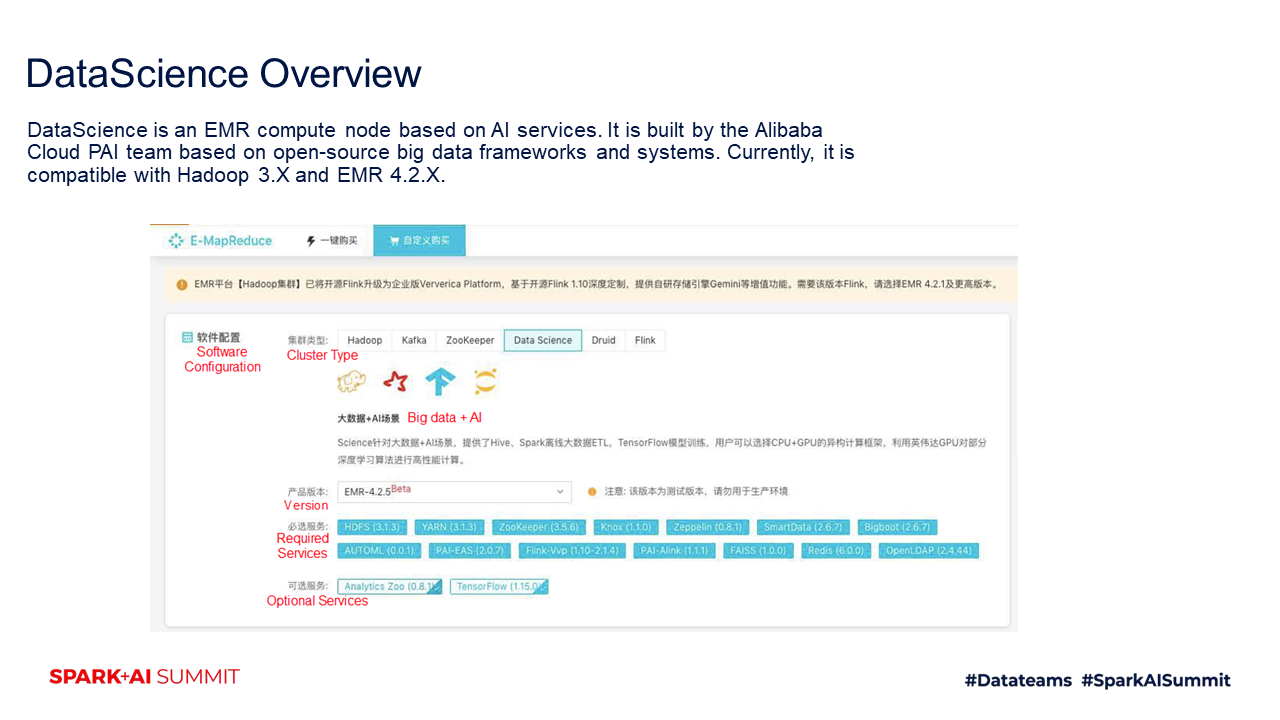

DataScience is an E-MapReduce (EMR) compute node that provides AI services. The Alibaba Cloud Platform for AI (PAI) team built it on top of open-source big data frameworks and systems. The node is being made available ahead of the Spark "Digital Body" AI Challenge. To use it, go to the EMR product, select the DataScience node, and select all the components you want to use. DataScience is compatible with Hadoop 3.x and EMR 4.2.x.

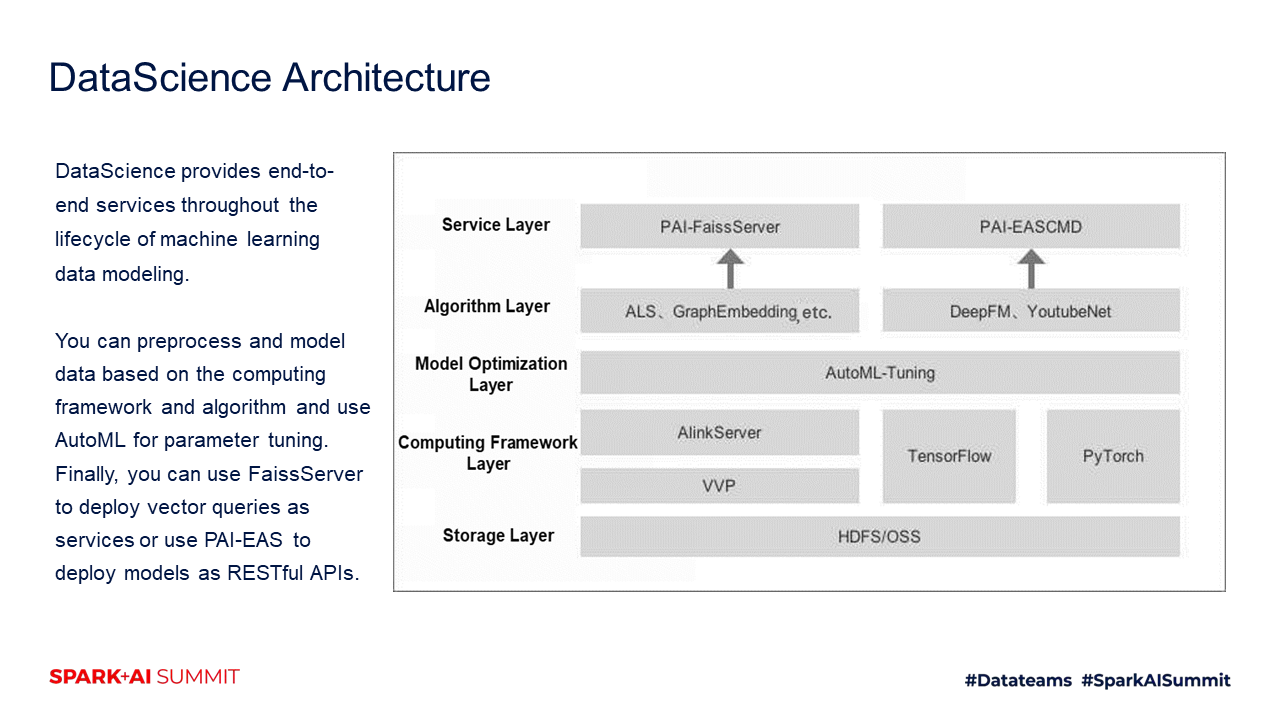

The following figure shows the capabilities that DataScience provides. DataScience offers end-to-end services across the lifecycle of machine learning modeling. The underlying storage layer reads data from HDFS and OSS. The computing framework layer has two parts. The first is a traditional machine learning framework that is served through AlinkServer and built on Ververica Platform (VVP), the commercial Flink platform. The second is a deep learning framework layer that includes TensorFlow and PyTorch. You can use AlinkServer to build traditional machine learning models, or use TensorFlow and PyTorch to build deep learning models. Because this AI Challenge focuses on images, TensorFlow and PyTorch are the more frequently used options. After you preprocess your data and build a model with the computing framework and algorithms, you need to tune its parameters, and the Alibaba Cloud PAI team provides the AutoML tool for this. At the algorithm layer, you can bring your own algorithms. At the service layer, where the trained model is put online in a production environment, you can use PAI-EAS CMD or PAI-FaissServer.

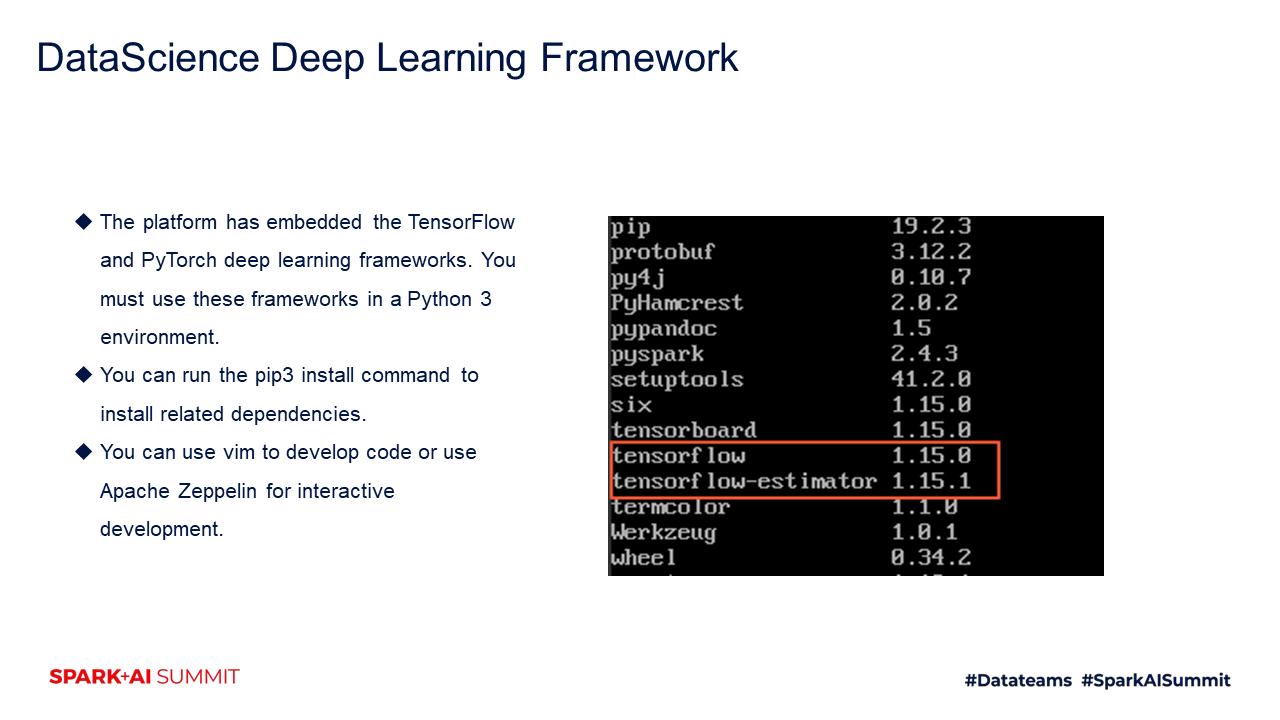

The platform currently has the TensorFlow and PyTorch deep learning frameworks built in for contestants, and you can write code against them in a Python 3 environment. Deep learning modeling depends on many third-party libraries, which you can install by running the pip3 install command. Contestants can develop code in vim, and those who are unfamiliar with vim can use Apache Zeppelin for interactive development instead. Apache Zeppelin also supports shell operations.

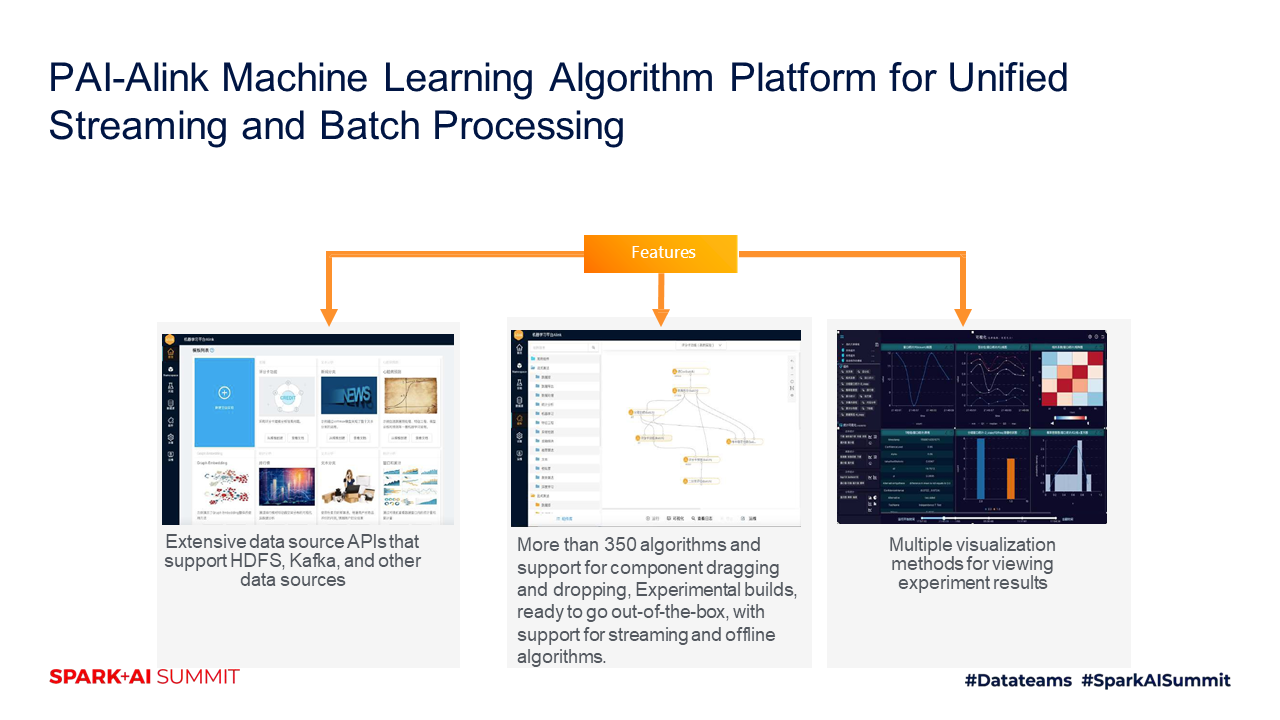

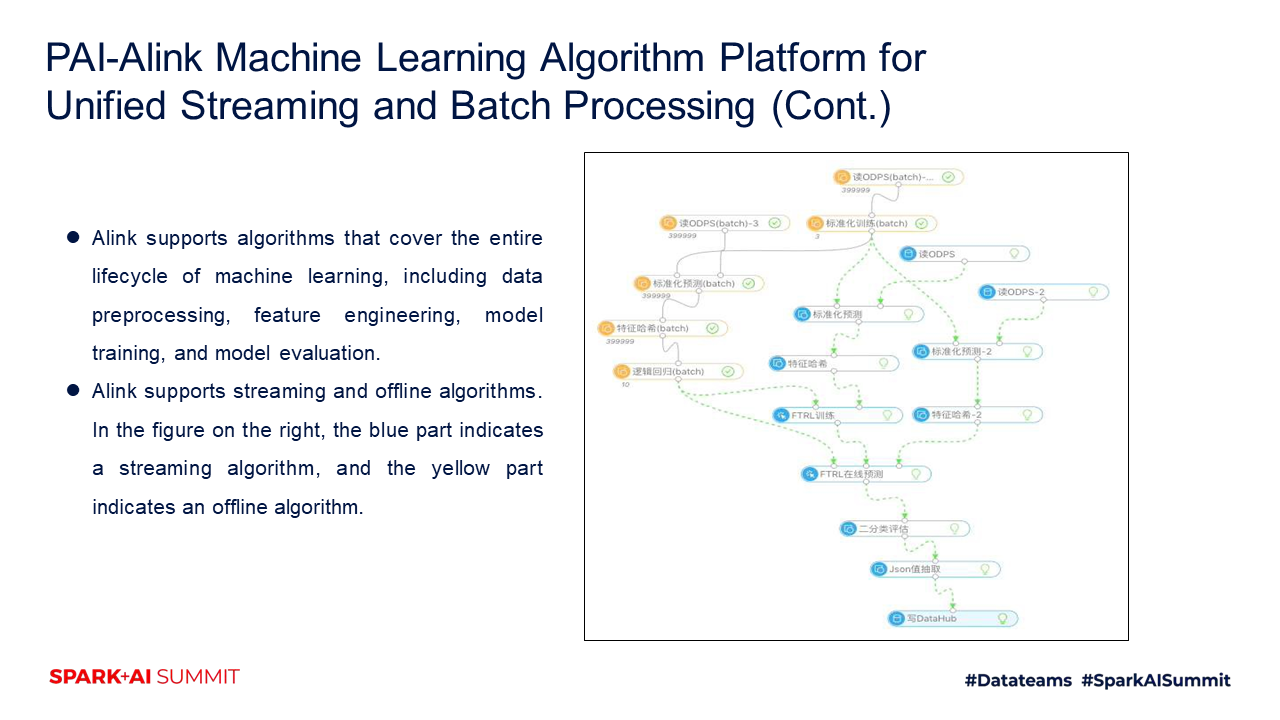
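Before writing model code, it can help to confirm which of the built-in frameworks the Python 3 interpreter can actually see. The following is a minimal standard-library sketch; the helper name `available_frameworks` is hypothetical, and `tensorflow` and `torch` are simply the usual import names for TensorFlow and PyTorch.

```python
import importlib.util
import sys


def available_frameworks(names=("tensorflow", "torch")):
    """Map each top-level module name to whether this interpreter can import it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for name, ok in available_frameworks().items():
        # The pip package names happen to match these import names.
        hint = "" if ok else f" (install with: pip3 install {name})"
        print(f"{name}: {'found' if ok else 'missing'}{hint}")
```

`find_spec` only locates the module without importing it, so the check stays fast even when the frameworks are large.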

Although most contestants will not use traditional machine learning algorithms, those who need them can use Alink. Alink provides more than 350 traditional machine learning algorithms that cover the entire machine learning lifecycle, including data preprocessing, feature engineering, model training, and model evaluation. Examples include K-Means and random forest. Alink supports both streaming and offline (batch) algorithms, lets you build experiments by dragging and dropping components, and offers multiple visualization methods for viewing experiment results.
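To make "traditional machine learning algorithm" concrete, here is a plain-Python sketch of K-Means (Lloyd's algorithm), one of the algorithms named above. This is not Alink's API or implementation; it only illustrates the assign-then-update loop that a K-Means component runs.

```python
import random


def kmeans(points, k, iterations=20, seed=0):
    """Lloyd's K-Means on a list of equal-length numeric tuples.

    Returns the list of k centroids as tuples.
    """
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct input points
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            dists = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            clusters[dists.index(min(dists))].append(p)
        # Update step: move each centroid to the mean of its members.
        new_centroids = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else c
            for c, members in zip(centroids, clusters)
        ]
        if new_centroids == centroids:  # converged: centroids stopped moving
            break
        centroids = new_centroids
    return centroids


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
print(sorted(kmeans(points, 2)))
```

With two well-separated groups, the centroids settle at the mean of each group.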

The following figure shows an Alink experiment demo. The blue part indicates a streaming algorithm, and the yellow part indicates an offline algorithm.

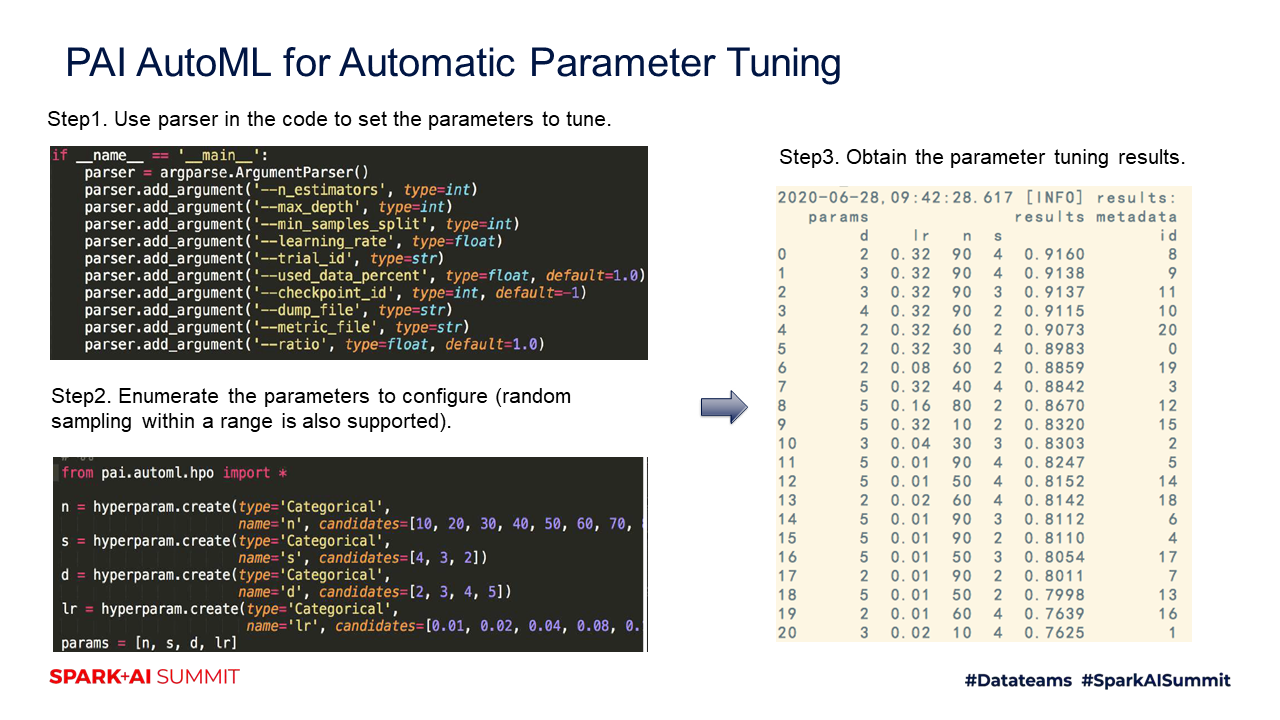
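The difference between the offline and streaming parts of such an experiment is whether a component needs the whole dataset up front or updates as records arrive. As a generic illustration of that idea (not Alink code), the sketch below computes the mean and population variance once in batch mode and once incrementally with Welford's online algorithm. Both paths produce the same statistics, but only the streaming one can keep up with unbounded input.

```python
import statistics


class RunningStats:
    """Welford's online algorithm: update mean/variance one record at a time."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self):
        # Population variance, to match statistics.pvariance below.
        return self._m2 / self.n if self.n else 0.0


def batch_stats(values):
    """Offline (batch) version: needs the whole dataset at once."""
    return statistics.mean(values), statistics.pvariance(values)


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
stream = RunningStats()
for x in data:  # records arriving one by one
    stream.update(x)
print(batch_stats(data), (stream.mean, stream.variance))
```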

AutoML is a common component in competitions. To get good results, building a model is not enough: you also need to tune its parameters to find the right parameter combination. Alibaba seldom tunes parameters manually, and it has embedded AutoML into DataScience for this AI Challenge. To use AutoML, first create a modeling script. The script exposes the parameters to tune, such as max_depth, learning_rate, and train_id, which you can declare with an argument parser in the code. Next, create a parameter tuning script that imports pai.automl.hop, maps the preceding parameters, and enumerates the values to try for each. If you do not want to enumerate values, you can use random sampling instead: after you specify a range, the platform samples randomly within that range. In Step 2 in the following figure, in addition to enumerating the parameters, you need to set a metric, such as accuracy or recall, as the evaluation standard. You can also define a custom metric. The right side of the figure shows the final tuning results for different parameter combinations. With AutoML, you no longer need to tune parameters manually.

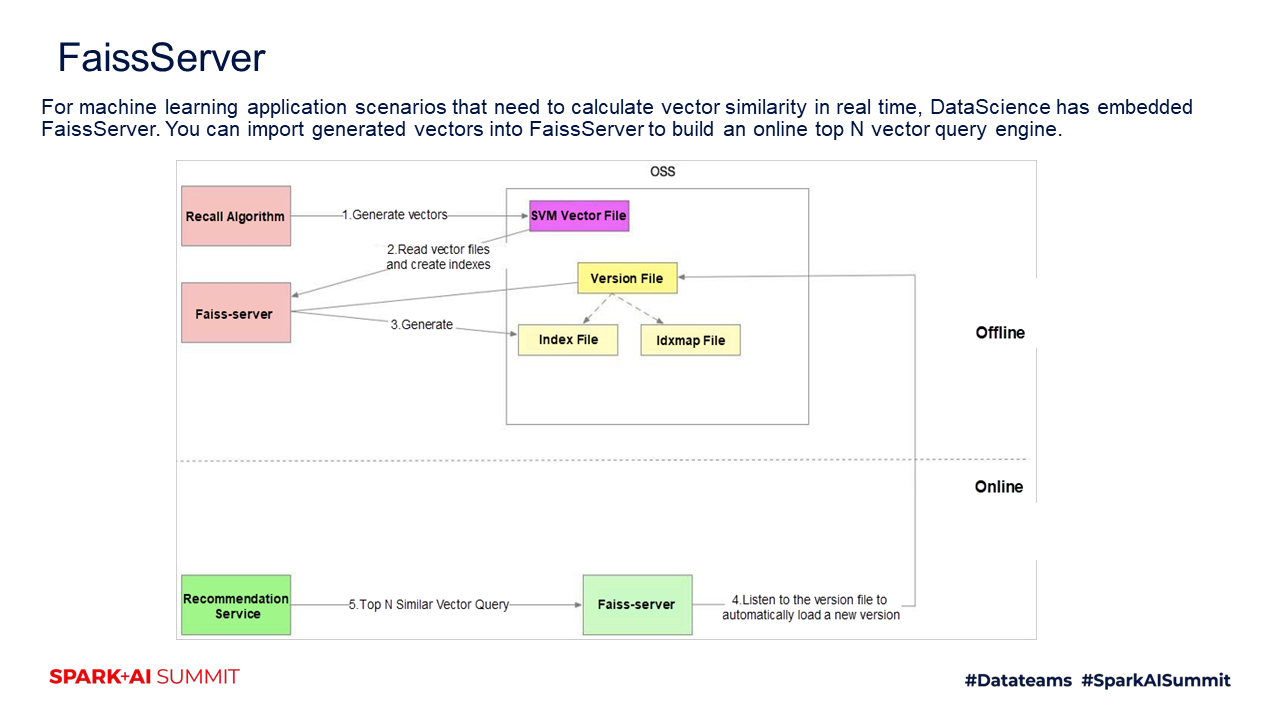
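The article does not show the pai.automl.hop interface, so the following sketch does not use it. It only mirrors the two-script workflow with the standard library: a modeling side that reads `--max_depth` and `--learning_rate` through `argparse`, and a tuning side that either enumerates every combination (grid search) or samples randomly within ranges, keeping the trial with the best metric. `train_and_evaluate` is a hypothetical stand-in whose toy metric peaks at `max_depth=5`, `learning_rate=0.05`.

```python
import argparse
import itertools
import random


def parse_args(argv=None):
    """Modeling-script side: expose tunable hyperparameters as CLI flags."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--max_depth", type=int, default=6)
    parser.add_argument("--learning_rate", type=float, default=0.1)
    return parser.parse_args(argv)


def train_and_evaluate(args):
    """Hypothetical placeholder for real training; returns a metric to maximize."""
    return -((args.max_depth - 5) ** 2) - 100 * (args.learning_rate - 0.05) ** 2


def run_trial(params):
    # Hand the candidate values to the modeling script as command-line flags.
    argv = [f"--{key}={value}" for key, value in params.items()]
    return train_and_evaluate(parse_args(argv))


def grid_search(space):
    """Enumeration: try every combination of the listed values."""
    keys = list(space)
    trials = [dict(zip(keys, combo)) for combo in itertools.product(*space.values())]
    return max(trials, key=run_trial)


def random_search(ranges, n_trials=50, seed=0):
    """Random sampling: draw each parameter uniformly inside its (low, high) range."""
    rng = random.Random(seed)
    trials = [
        {
            key: rng.randint(lo, hi) if isinstance(lo, int) else rng.uniform(lo, hi)
            for key, (lo, hi) in ranges.items()
        }
        for _ in range(n_trials)
    ]
    return max(trials, key=run_trial)


print(grid_search({"max_depth": [3, 5, 7], "learning_rate": [0.01, 0.05, 0.1]}))
print(random_search({"max_depth": (1, 10), "learning_rate": (0.001, 0.3)}))
```

Grid search is exhaustive but grows multiplicatively with each added parameter; random search keeps a fixed trial budget, which is why a range-based option is useful when the space is large.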

In machine learning scenarios that need to compute vector similarity in real time, FaissServer can quickly calculate the distances between a given vector and other vectors. After you load all vectors into FaissServer and send a gRPC query, FaissServer returns the top N nearest vectors. FaissServer is built into DataScience, so you can import the vectors you generate into it to build an online top-N vector query engine. FaissServer is frequently used for image similarity analysis and search.

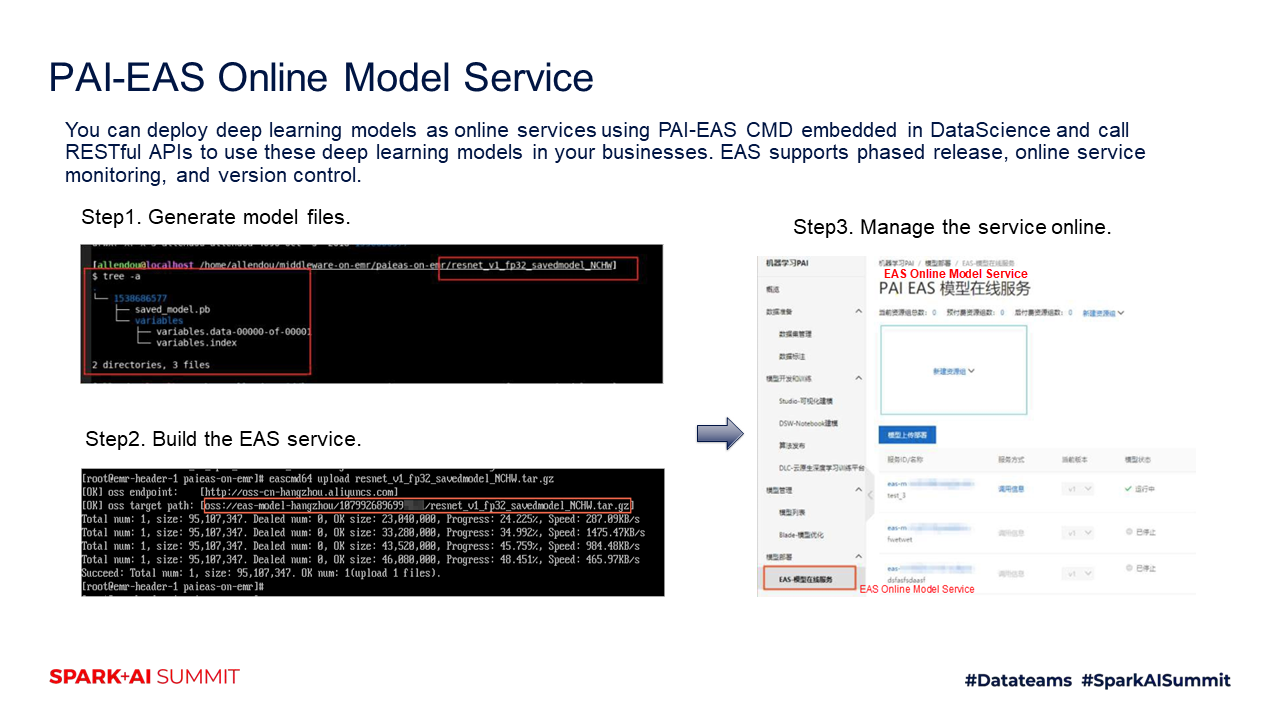
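FaissServer's gRPC request format is not described here, so the following sketch shows only the semantics of a top-N query: exact (brute-force) nearest-neighbor search by L2 distance over a small in-memory table of vectors. Faiss's flat index (`IndexFlatL2`) performs the same exhaustive comparison far more efficiently, and its other index types trade some exactness for speed. The vector IDs and values below are made up for illustration.

```python
import heapq


def squared_l2(a, b):
    """Squared Euclidean distance; same ranking as L2 without the square root."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def top_n(query, vectors, n):
    """Return the n (id, squared_distance) pairs closest to query, nearest first."""
    scored = ((vid, squared_l2(query, vec)) for vid, vec in vectors.items())
    return heapq.nsmallest(n, scored, key=lambda pair: pair[1])


vectors = {"a": (0.0, 0.0), "b": (1.0, 1.0), "c": (5.0, 5.0), "d": (0.5, 0.0)}
print(top_n((0.0, 0.0), vectors, 2))  # prints [('a', 0.0), ('d', 0.25)]
```

In an image-similarity setting, the vectors would be embeddings produced by a model and the IDs would point back to images.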

EAS may be used in the finals. It focuses on serving models to business endpoints, such as mobile phones and Internet of Things (IoT) devices. With PAI-EAS CMD, which is embedded in DataScience, you can deploy deep learning models as online services and call them through RESTful APIs from your business applications. EAS also supports phased release, online service monitoring, version control, and other features.
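The article does not give EAS's request format, so the following is not EAS code. It is a standard-library sketch of the same pattern: a model wrapped as an HTTP service and a client that calls it with a JSON POST. The `/predict` path, the `features`/`score` JSON fields, and the toy `predict` function are all hypothetical. A real EAS deployment adds authentication, phased release, and monitoring on top of this basic request/response loop.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical toy 'model': a fixed linear scorer."""
    weights = [0.5, -0.25, 1.0]
    return sum(w * x for w, x in zip(weights, features))


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        body = json.dumps({"score": predict(payload["features"])}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def call_service(url, features):
    """Client side: POST JSON features and read back the JSON prediction."""
    req = urllib.request.Request(
        url,
        data=json.dumps({"features": features}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), PredictHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_address[1]}/predict"
    print(call_service(url, [2.0, 4.0, 1.0]))  # prints {'score': 1.0}
    server.shutdown()
    server.server_close()
```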

Learn more about Alibaba Cloud E-MapReduce at https://www.alibabacloud.com/products/emapreduce
