By Che Yang (Senior Technical Expert at Alibaba Cloud) and Gu Rong (Research Associate at Nanjing University and Core Developer of Alluxio)

In recent years, artificial intelligence (AI) technologies such as deep learning have rapidly developed and are being used in a wide range of industries. With the widespread application of deep learning, an increasing number of fields are demanding efficient and convenient AI model training capabilities. Besides, in the era of cloud computing, containers and container orchestration technologies such as Docker and Kubernetes have made great progress in the automated deployment of application services during software development and O&M. The Kubernetes community's support for accelerated computing device resources such as Graphics Processing Units (GPUs) is increasing. Due to the advantages of cloud environments in computing costs and scaling and the advantages of containerization in optimal deployment and agile iteration, distributed deep learning model training based on containerized elastic infrastructure and cloud-based GPU instances is now a major AI model generation trend in the industry.

To ensure the flexibility of resource scaling, most cloud applications adopt a basic architecture that separates computing and storage. Object storage is often used to store and manage massive training data because it greatly reduces storage costs and improves scalability. In addition to storing data in the cloud, many cloud platform users store large amounts of data in private data centers due to considerations of security compliance, data ownership, or legacy architectures. Such users want to build AI training platforms using hybrid clouds and use the elastic computing capabilities of the cloud platform for AI business model training. However, the combination of local storage and off-premises training intensifies the impact of computing-storage separation on remote data access performance. The basic computing-storage separation architecture makes the configuration and scaling of computing and storage resources more flexible. However, in terms of data access efficiency, model training performance may degrade due to limited network transmission bandwidth if the architecture is used without any optimization.

Currently, the standard solution for off-premises deep learning model training is to manually prepare data. Specifically, data is copied and distributed to off-premises standalone storage such as a Non-Volatile Memory Express (NVMe) Solid State Drive (SSD) or to distributed high-performance storage such as GlusterFS or Cloud Parallel File System (CPFS). You may encounter the following problems when you prepare data manually or by using scripts:

1) Higher costs for data synchronization and management: The continuous update of data requires periodic data synchronization from the underlying storage, which incurs higher management costs.

2) Higher cloud storage costs: You need to pay additional fees for off-premises standalone storage or distributed high-performance storage.

3) Higher complexity for large-scale scaling: As the volume of data grows, it is difficult to copy all data to the off-premises standalone storage. It also takes a long time to copy all data to distributed storage such as GlusterFS.

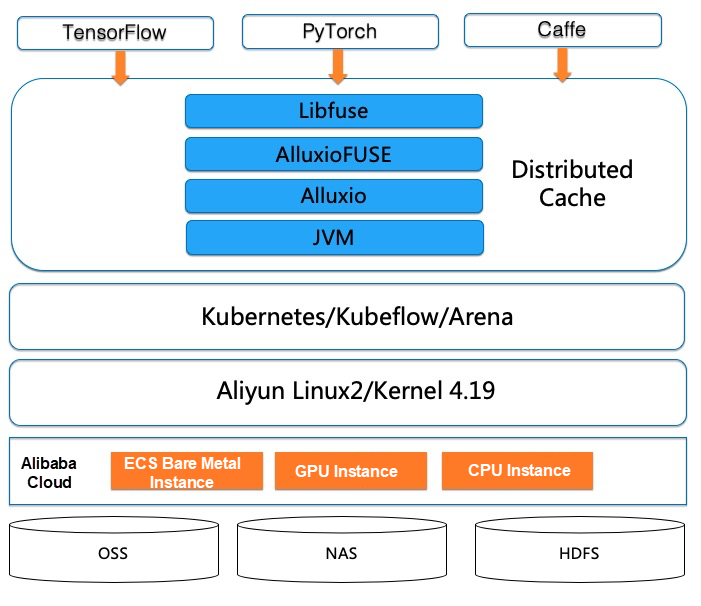

To solve the preceding problems, we have designed and implemented a model training architecture based on containers and data orchestration. Figure 1 shows the system architecture.

1) Kubernetes is a popular container cluster management platform for deep neural network training. It enables the flexible use of different machine learning frameworks through containers and agile on-demand scaling. Alibaba Cloud Container Service for Kubernetes (ACK) is a Kubernetes service provided by Alibaba Cloud. Use it to run Kubernetes workloads on Alibaba Cloud's CPUs, GPUs, Neural-network Processing Units (NPUs) (Hanguang 800 chip), and ECS Bare Metal Instances.

2) Kubeflow is an open-source Kubernetes-based cloud-native AI platform for developing, orchestrating, deploying, and running scalable and portable machine learning workloads. Kubeflow supports distributed training in two TensorFlow frameworks: parameter server and AllReduce. With Arena, a tool developed by the Alibaba Cloud Container Service team, you can submit distributed training workloads that use either of these two frameworks.

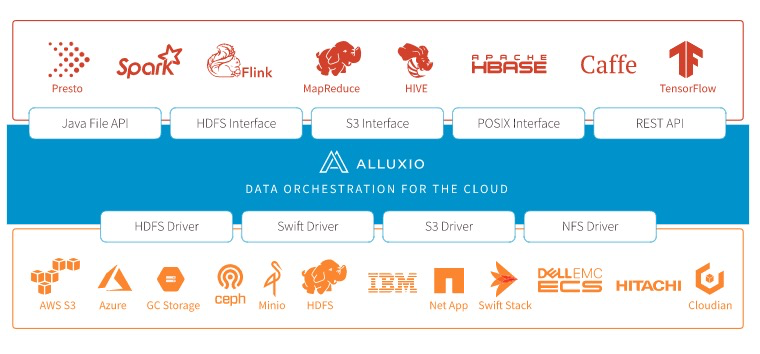

3) Alluxio is an open-source data orchestration and storage system designed for hybrid clouds. A data abstraction layer is added between the storage system and computing framework to provide a unified mount namespace, hierarchical caches, and various data access interfaces. It supports efficient access to large-scale data in various complex environments, such as private cloud clusters, hybrid clouds, and public clouds.

Alluxio originated in the big data era and was created at UC Berkeley's AMPLab, the lab where Apache Spark also originated. The Alluxio system was designed to solve the analysis performance bottleneck and the I/O operation problems that arise when different computing frameworks exchange data through a disk file system such as Hadoop Distributed File System (HDFS) in the big data processing pipeline. Alluxio was made open source in 2013. After seven years of continuous development and iteration, it has become a mature solution applied in big data processing scenarios. With the rise of deep learning in recent years, Alluxio's distributed cache technology is becoming the mainstream solution for off-premises I/O performance problems in the industry. Furthermore, Alluxio launched FUSE-based POSIX file system interfaces to enable efficient data access for off-premises AI model training.

To better integrate Alluxio into the Kubernetes ecosystem and combine the advantages of both, the Alluxio team and the Alibaba Cloud Container Service team worked together to develop the Helm Chart solution, which greatly simplifies Alluxio deployment and use in Kubernetes.

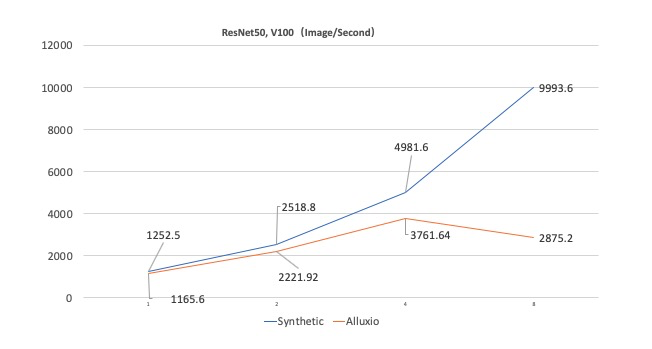

According to our performance evaluation, the training speed for a single GPU is improved by more than 300% after the GPU is upgraded from NVIDIA P100 to NVIDIA V100. However, this significant improvement in computing performance puts increased pressure on data storage and access. This also poses a new challenge to the I/O performance of Alluxio.

The following figure compares the performance of synthetic data and Alluxio caches. The horizontal axis represents the number of GPUs, while the vertical axis represents the number of images processed per second. Synthetic data refers to the data generated and read by the training program without I/O overhead. This specifies the upper limit of model training performance. Alluxio caches refer to the data read by the training program from the Alluxio system. When the number of GPUs is 1 or 2, the performance difference between synthetic data and Alluxio caches is tolerable. However, when the number of GPUs increases to 4, their performance difference is obvious. The number of images processed by Alluxio per second falls from 4,981 to 3,762. When the number of GPUs is 8, the performance of model training on Alluxio is less than 30% that of synthetic data. According to the system monitoring results, the computing, memory, and network performance of the system are far below their limits, indicating that Alluxio cannot efficiently support training with eight NVIDIA V100 GPUs on a single host.

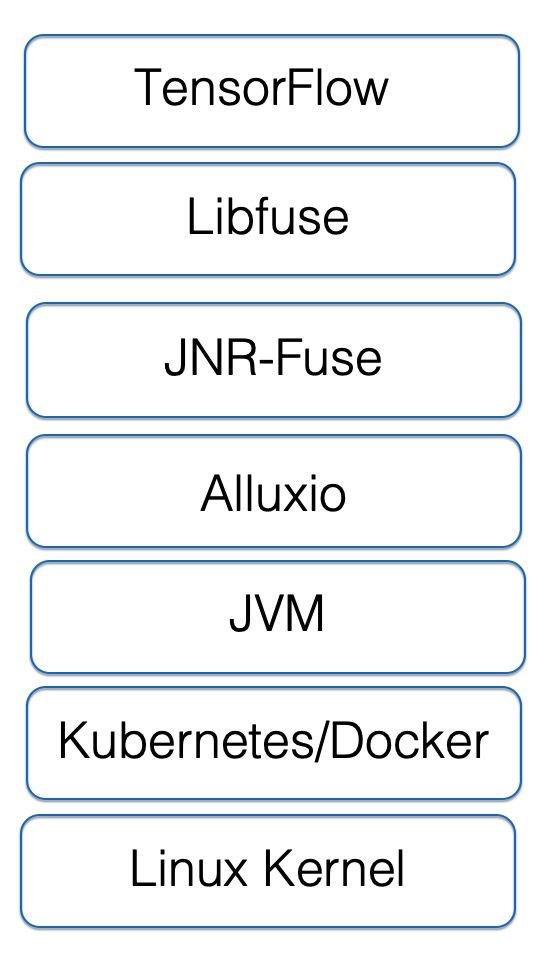

To deeply understand the factors that affect performance and why optimization is needed, we need to analyze the Alluxio technology stack that supports FUSE in Kubernetes, as shown in the following figure.

Through an in-depth analysis of the technology stack and Alluxio kernel, we summarize the reasons for the performance impact as follows:

1) Alluxio introduces multiple Remote Procedure Calls (RPCs) in file operations and creates performance overhead in training scenarios.

Alluxio is more than a simple cache service. It is a distributed virtual file system that provides comprehensive metadata management, block data management, underlying file system (UFS) management, and health check mechanisms. Its metadata management mechanism is more powerful than that of many UFSs. These are the advantages and features of Alluxio, but they also constitute the overhead of a distributed system. For example, when the Alluxio client reads a file with the default settings, it makes many RPCs to the primary node to obtain file metadata and ensure data consistency, even if the file data has already been cached in the local Alluxio worker node. The overhead of the entire read operation is not significant in conventional big data scenarios. However, it makes it hard to meet the high-throughput and low-latency requirements of deep learning scenarios.

2) Alluxio's data caching and eviction policies frequently trigger cache jitter on the nodes.

In deep learning scenarios, hot and cold data is not clearly distinguished. Therefore, each Alluxio worker node needs to read all the data. However, by default, Alluxio reads data locally first. Even if the data is stored in the Alluxio cluster, Alluxio pulls data from other cache nodes and keeps a local replica. This feature incurs additional overhead in our scenario:

The performance overhead is huge when the caches of a node are almost full.

3) It is easy to develop, deploy, and use a file system based on FUSE, but the default performance is not ideal for the following reasons:

Thread creation and deletion operations are frequently triggered because of max_idle_threads, which affects read performance. In FUSE, the default value of max_idle_threads is 10.

4) The integration of Alluxio and FUSE (AlluxioFUSE) needs to be optimized and profoundly customized for the common multi-thread and high-concurrency scenarios of deep learning.

Alluxio currently supports only the direct_io mode in FUSE and cannot use the kernel_cache mode to further improve I/O efficiency through page caching. This is because Alluxio requires that each thread use its own file input handle (FileInputStream) in a multi-thread scenario. However, if page caching is enabled, AlluxioFUSE may read ahead concurrently, causing an error.

5) Kubernetes affects the thread pool of Alluxio.

Alluxio is implemented based on Java 1.8, in which some thread pools are calculated based on Runtime.getRuntime().availableProcessors(). However, in the Kubernetes environment, the value of cpu_shares is 2 by default, and the number of CPU cores in the Java Virtual Machine (JVM) is calculated as 1 according to cpu_shares()/1024. This affects the concurrency of the Java process in a container.

After analyzing the preceding performance problems and factors, we designed a series of performance optimization policies to improve the performance of off-premises model training. First, it is hard to ensure the speed, quality, and cost-efficiency of data access at the same time. Instead, we only focus on the acceleration of data access to read-only datasets in model training. The basic idea of optimization is to ensure high performance and data consistency, at the cost of some adaptability, such as for simultaneous reads and writes and continuous data updates.

Based on this, we have designed specific performance optimization policies that comply with the following core principles:

We will describe these optimization policies from the perspective of component optimization at each layer.

FUSE is implemented at two layers: libfuse runs in user mode and the FUSE kernel module runs in kernel mode. Recent versions of the Linux kernel include many optimizations to FUSE. We found that the read performance of Linux kernel 4.19 was improved by 20% compared with Linux kernel 3.10.

1) Extend the validity period of FUSE metadata.

Each file in Linux has two types of metadata: struct dentry and struct inode. They are the basis of the file in the kernel, and both structs must be obtained before any operation on the file. Therefore, each time the inode and dentry of a file or directory are obtained, the FUSE kernel module performs a complete operation through libfuse and the Alluxio file system, which puts great pressure on the Alluxio primary node in scenarios with high-latency and high-concurrency data access. You can set -o entry_timeout=T -o attr_timeout=T to extend the validity period of this metadata.
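As a rough illustration, the following snippet assembles the two timeout options into an argument list. The helper name `fuse_timeout_options` and the 7200-second value are assumptions made for this example; the text above only says that both timeouts can be set to some value T.

```python
from typing import List


def fuse_timeout_options(timeout_seconds: int) -> List[str]:
    """Build the -o arguments that extend dentry and inode attribute caching."""
    if timeout_seconds < 0:
        raise ValueError("timeout_seconds must be non-negative")
    return [
        "-o", f"entry_timeout={timeout_seconds}",  # validity of cached dentries
        "-o", f"attr_timeout={timeout_seconds}",   # validity of cached inode attributes
    ]


# 7200 is an illustrative value, not a recommendation from the article.
options = fuse_timeout_options(7200)
print(" ".join(options))
```

A long timeout suits the read-only datasets discussed here, because cached metadata cannot become stale while the files do not change.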

2) Configure max_idle_threads to avoid frequent thread creation and deletion operations, which produce CPU overhead.

In multi-thread mode, FUSE starts with one thread. When there are more than two available requests, FUSE automatically creates additional threads. Each thread processes one request at a time. After processing a request, each thread checks whether the number of threads exceeds the value of max_idle_threads (10 by default). If so, the excess threads are recycled. This setting is related to the number of active I/O threads in user processes and can be set to the number of read threads. However, max_idle_threads is supported only by libfuse3, while AlluxioFUSE only supports libfuse2. Therefore, we modified the code of libfuse2 to support the configuration of max_idle_threads.
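The cost of a low idle-thread cap can be seen in a toy model. This is not libfuse code: it assumes that requests arrive in equal bursts, that each request in a burst needs its own thread, and that threads above the cap are destroyed between bursts. The function name, the burst size of 32, and the 100 bursts are all hypothetical.

```python
def count_thread_creations(burst_size: int, bursts: int, max_idle_threads: int) -> int:
    """Count threads created when idle threads above the cap are recycled."""
    alive = 0
    created = 0
    for _ in range(bursts):
        # Each concurrent request in the burst needs its own thread.
        if alive < burst_size:
            created += burst_size - alive
            alive = burst_size
        # Once the burst is served, threads beyond the idle cap are destroyed.
        alive = min(alive, max_idle_threads)
    return created


default_cap = count_thread_creations(burst_size=32, bursts=100, max_idle_threads=10)
tuned_cap = count_thread_creations(burst_size=32, bursts=100, max_idle_threads=32)
print(default_cap, tuned_cap)
```

In this model the default cap of 10 forces 22 threads to be recreated on every burst after the first, while a cap equal to the number of read threads creates each thread only once.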

Alluxio and FUSE are integrated through the AlluxioFuse process. This process calls the embedded Alluxio client to interact with the running Alluxio primary and worker nodes during runtime. For deep learning scenarios, we have customized the Alluxio attributes of AlluxioFuse to optimize performance.

In deep learning training scenarios, each training iteration is an iteration of a full dataset. It is unlikely that any node would have sufficient space to cache a dataset of several terabytes. Alluxio's default cache policy is designed for big data processing scenarios (such as queries) with clearly differentiated hot and cold data. Data caches are stored in the local node of the Alluxio client to ensure optimal performance for the next read. The configurations are as follows:

1) alluxio.user.ufs.block.read.location.policy: The default value is alluxio.client.block.policy.LocalFirstPolicy. This means that Alluxio will continuously save data to the local node where the Alluxio client is located. In this case, caches on the node constantly experience jitter when the cache is close to full. This significantly reduces throughput, increases latency, and imposes high pressure on the primary node. Therefore, this property needs to be set to alluxio.client.block.policy.LocalFirstAvoidEvictionPolicy and the alluxio.user.block.avoid.eviction.policy.reserved.size.bytes parameter must be set. This parameter specifies the data volume to be reserved to prevent the local cache from being evicted. Typically, the value of this parameter must be greater than the upper limit of node cache × (100% - maximum percentage of node evictions).

2) alluxio.user.file.passive.cache.enabled: This specifies whether to cache additional replicas of data on the local node of Alluxio. This attribute is enabled by default. Therefore, when the Alluxio client requests data, the node where the client is located caches data from other worker nodes. You can set this attribute to false to avoid unnecessary local caching.

3) alluxio.user.file.readtype.default: The default value is CACHE_PROMOTE. This configuration has two potential issues. First, data may move between different cache layers on the same node. Second, most operations on data blocks require a lock, and many lock operations are quite heavyweight in the source code of Alluxio. A large number of locking and unlocking operations produces a lot of overhead under high concurrency, even if no data is migrated. Therefore, you can set this parameter to CACHE instead of the default value CACHE_PROMOTE to avoid the locking overhead caused by the moveBlock operation.
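The three settings above can be gathered into one set of client properties, as in the following sketch. The property names and policy values come from the list above. The 100 GiB cache size, the 25% maximum eviction share, the 1 GiB margin, and the helper name `eviction_reserve_lower_bound` are assumptions for illustration, and writing the reserved size as a plain byte count is also an assumption.

```python
GIB = 1024 ** 3


def eviction_reserve_lower_bound(cache_capacity_bytes: int, max_eviction_fraction: float) -> int:
    """Lower bound from the text: node cache limit x (100% - maximum eviction share)."""
    if not 0.0 <= max_eviction_fraction <= 1.0:
        raise ValueError("max_eviction_fraction must be between 0 and 1")
    return int(cache_capacity_bytes * (1.0 - max_eviction_fraction))


# Hypothetical node: 100 GiB of cache, at most 25% of it evicted at a time.
bound = eviction_reserve_lower_bound(100 * GIB, 0.25)

client_properties = {
    "alluxio.user.ufs.block.read.location.policy":
        "alluxio.client.block.policy.LocalFirstAvoidEvictionPolicy",
    # The value must exceed the bound; the 1 GiB margin is an arbitrary choice.
    "alluxio.user.block.avoid.eviction.policy.reserved.size.bytes": str(bound + GIB),
    "alluxio.user.file.passive.cache.enabled": "false",
    "alluxio.user.file.readtype.default": "CACHE",
}

for key, value in client_properties.items():
    print(f"{key}={value}")
```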

In deep learning training scenarios, all training data files are listed and metadata is read before each training task starts. The process of running a training task further reads the training data files. On accessing a file through Alluxio, the following operations are completed by default: File metadata and block metadata are obtained from the primary node, the location of the block metadata is obtained from the worker nodes, and then the block data is read from the obtained location. The complete operation includes the overhead of multiple RPCs and causes significant file access latency. If you cache the block information of the data file into the client memory, the file access performance will be significantly improved.

1) When you set alluxio.user.metadata.cache.enabled to true, file and directory metadata caching is enabled on the Alluxio client so that you do not need to access metadata through RPCs a second time. Based on the size of the heap which is allocated to AlluxioFUSE, set alluxio.user.metadata.cache.max.size to specify the maximum amount of metadata of cached files and directories. Also set alluxio.user.metadata.cache.expiration.time to adjust the validity period of the metadata cache. In addition, when you select worker nodes to read data, the Alluxio primary node constantly queries the statuses of all worker nodes, which incurs additional overhead in high-concurrency scenarios.

2) Set alluxio.user.worker.list.refresh.interval to 2 minutes or longer.

3) The last access time is constantly updated when files are read, which puts great pressure on the Alluxio primary node in high-concurrency scenarios. Therefore, we added a switch to the Alluxio code to disable the update of the last access time.
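To show why client-side metadata caching removes repeated RPCs, here is a minimal sketch of a bounded cache with an expiration time. It is not Alluxio's implementation. The class `MetadataCache`, the stand-in function `fake_get_status`, and all values are hypothetical; `max_size` and `ttl_seconds` only play the roles that alluxio.user.metadata.cache.max.size and alluxio.user.metadata.cache.expiration.time play in the text.

```python
import time
from collections import OrderedDict


class MetadataCache:
    """Bounded, time-limited cache placed in front of a metadata lookup."""

    def __init__(self, fetch, max_size, ttl_seconds, clock=time.monotonic):
        self._fetch = fetch            # stands in for an RPC to the primary node
        self._max_size = max_size
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = OrderedDict()  # path -> (expiry time, metadata)

    def get(self, path):
        now = self._clock()
        entry = self._entries.get(path)
        if entry is not None and entry[0] > now:
            self._entries.move_to_end(path)  # mark as recently used
            return entry[1]
        metadata = self._fetch(path)         # cache miss or expired entry
        self._entries[path] = (now + self._ttl, metadata)
        self._entries.move_to_end(path)
        while len(self._entries) > self._max_size:
            self._entries.popitem(last=False)  # evict the least recently used path
        return metadata


rpc_calls = []


def fake_get_status(path):
    rpc_calls.append(path)
    return {"path": path, "length": 1024}


cache = MetadataCache(fake_get_status, max_size=2, ttl_seconds=600.0)
for _ in range(3):
    cache.get("/train/part-0")
print(len(rpc_calls))
```

Three reads of the same path reach the stand-in RPC only once. A longer expiration time is acceptable here because the optimization targets read-only datasets.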

Data locality indicates that data computing is performed on the node where data is located to avoid data transmission over the network. In a distributed parallel computing environment, data locality is very important. Two short-circuit read and write modes are supported in the container environment: UNIX socket and direct file access.

Currently, we prefer the latter solution.

ActiveProcessorCount

This parameter is controlled by Runtime.getRuntime().availableProcessors(). If you use Kubernetes to deploy a container without specifying the number of CPU requests, the Java process reads two CPU shares from the proc filesystem (procfs) in the container. In this case, availableProcessors() is calculated as 1 according to cpu_shares()/1024. This limits the number of concurrent threads for Alluxio in the container. The Alluxio client is an I/O-intensive application. Therefore, set -XX:ActiveProcessorCount to specify the number of processors. The basic principle is to set ActiveProcessorCount to a larger value when possible.
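The effect of CPU shares on the processor count can be sketched as follows. This is a simplified model rather than the JVM's actual code: it assumes the share-based count is rounded up and clamped between 1 and the host CPU count, and the function name and the 64-core host are hypothetical.

```python
import math


def estimated_available_processors(cpu_shares: int, host_cpus: int) -> int:
    """Approximate the processor count a container-aware JVM derives from CPU shares."""
    from_shares = math.ceil(cpu_shares / 1024)  # 1024 shares correspond to one CPU
    return max(1, min(from_shares, host_cpus))


# No CPU request set: the container sees 2 shares, so the JVM sees one processor.
print(estimated_available_processors(2, 64))
# A request of 8 CPUs corresponds to 8192 shares.
print(estimated_available_processors(8192, 64))
```

With one visible processor, thread pools sized from availableProcessors() stay at their minimum regardless of how many cores the host has, which is why the count is overridden explicitly.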

-XX:ActiveProcessorCount also determines the default number of GC and just-in-time (JIT) compilation threads of the JVM. However, you can set these to smaller values by using the -XX:ParallelGCThreads, -XX:ConcGCThreads, and -XX:CICompilerCount parameters. This avoids frequent preemption and switching of these threads and the resulting performance degradation.
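A sketch of how these flags might be combined is shown below. The four flag names are the ones mentioned above. The helper name `jvm_cpu_flags` and the numeric values are assumptions for illustration, not settings reported by the authors.

```python
from typing import List


def jvm_cpu_flags(active_processors: int, parallel_gc_threads: int,
                  conc_gc_threads: int, ci_compiler_count: int) -> List[str]:
    """Raise the processor count while capping GC and JIT compiler threads."""
    values = (active_processors, parallel_gc_threads, conc_gc_threads, ci_compiler_count)
    if any(value < 1 for value in values):
        raise ValueError("all thread and processor counts must be at least 1")
    return [
        f"-XX:ActiveProcessorCount={active_processors}",
        f"-XX:ParallelGCThreads={parallel_gc_threads}",
        f"-XX:ConcGCThreads={conc_gc_threads}",
        f"-XX:CICompilerCount={ci_compiler_count}",
    ]


# Illustrative values only: a large processor count with modest GC and JIT pools.
flags = jvm_cpu_flags(active_processors=32, parallel_gc_threads=8,
                      conc_gc_threads=2, ci_compiler_count=4)
print(" ".join(flags))
```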

After Alluxio is optimized, the training performance of ResNet-50 with eight GPUs on a single host improves by 236.1%, and the scalability problem is solved. Training can then be scaled out to eight GPUs on four hosts. In addition, the performance loss compared with synthetic data is only 3.29% (31068.8 images per second versus 30044.8 images per second). It takes 63 minutes to complete training with eight GPUs on four hosts in synthetic data scenarios, and 65 minutes with Alluxio.

In this article, we summarize the challenges in applying Alluxio in high-performance distributed deep learning model training scenarios and our practices in optimizing Alluxio. We also discuss multiple ways to improve the AlluxioFUSE performance in high-concurrency read scenarios. Finally, we implement a distributed model training solution based on our Alluxio optimizations to verify the performance on ResNet-50 using eight GPUs on four hosts. In this test, our system achieved good results.

Alluxio is working to support page caching and ensure FUSE stability in high-throughput scenarios with a large number of small files and high-concurrency read scenarios. The Alibaba Cloud Container Service team will continue to work with the Alluxio open-source community and professors from Nanjing University, such as Dai Haipeng and Gu Rong, to make further improvements. We believe that, through the joint efforts and innovative ideas of the industry, open-source community, and academia, we can gradually reduce the data access cost and complexity of deep learning training in computing-storage separation scenarios, further facilitating off-premises AI model training.

Fan Bin, Qiu Lu, Calvin Jia, and Chang Cheng of the Alluxio team have provided great help in the design and optimization of this solution. They have made significant improvements to the metadata caching system through Alluxio's own capabilities, making it possible to apply Alluxio in AI scenarios. Thank you all.
