As an academic, my research interests include deep learning and the Internet of Things. I have worked extensively with deep learning frameworks such as TensorFlow and Keras in Jupyter notebooks powered by GPUs and cloud-based TPUs. With this background, I explored the cloud AI services available on Alibaba Cloud. The Machine Learning Platform for AI looks promising for anyone who wants to kick-start a cloud AI project.

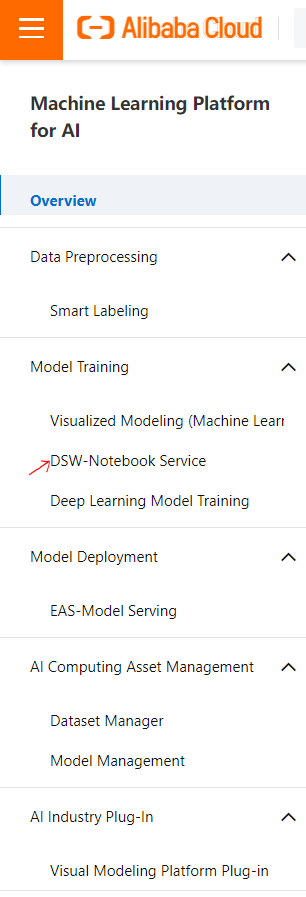

The platform offers many options, including a visual modelling environment for those who are not comfortable with programming. I chose the DSW Notebook Service and quickly became comfortable with it.

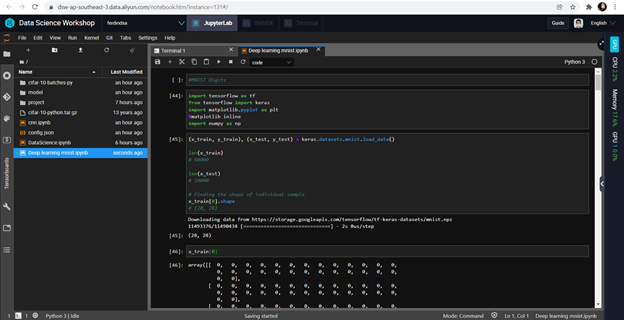

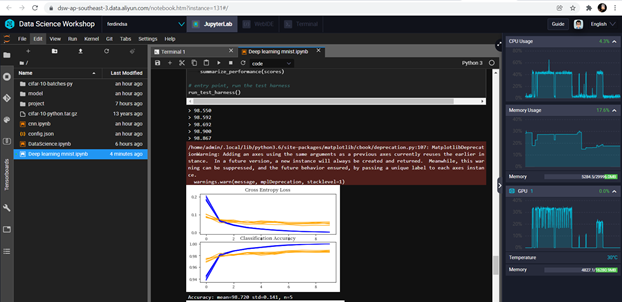

I read Jeremy's earlier blog article on developing a deep learning model to classify images. It helps anyone understand the DSW environment and start coding for deep learning. I created a DSW instance in the Kuala Lumpur region, in Alibaba Cloud's Malaysian data center. The instance uses a Pay-As-You-Go GPU configuration with TensorFlow support and 2 vCPUs. Starting or stopping a DSW instance takes a couple of minutes.

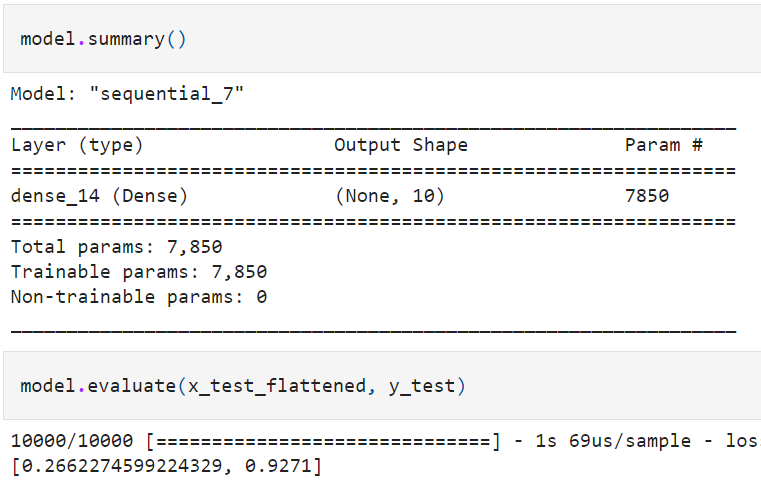
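Once the instance is running, it can be worth confirming from inside the notebook that TensorFlow actually sees the GPU. The snippet below is a minimal sketch of such a check; the helper name `describe_devices` is hypothetical and not part of the original code.

```python
import tensorflow as tf


def describe_devices():
    """Summarise the TensorFlow version and the GPUs visible to it."""
    # list_physical_devices returns an empty list when no GPU is attached.
    gpus = tf.config.list_physical_devices("GPU")
    return {
        "tensorflow": tf.__version__,
        "gpu_count": len(gpus),
        "gpu_names": [gpu.name for gpu in gpus],
    }
```

Calling `describe_devices()` in a notebook cell should report at least one GPU on a GPU-backed instance; a count of zero suggests the instance type or the TensorFlow build needs another look.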

The source code used in this demonstration can be found in the GitHub link. The MNIST digits dataset consists of 70,000 grayscale images of handwritten digits from 0 to 9. A basic single-layer network was tried first: one dense layer with a sigmoid activation function. The test accuracy of this simple architecture was around 92%.
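A single dense layer of this kind can be sketched in Keras as follows. This is an illustration and not the code from the linked repository: the function name `build_single_dense`, the Adam optimizer, and the sparse categorical cross-entropy loss are all assumptions, since the post does not state them for this model.

```python
import tensorflow as tf


def build_single_dense(num_classes: int = 10) -> tf.keras.Model:
    """One sigmoid dense layer applied to flattened 28x28 MNIST images."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28)),
        tf.keras.layers.Flatten(),  # 28 * 28 = 784 input features
        tf.keras.layers.Dense(num_classes, activation="sigmoid"),
    ])
    # Assumed training setup: optimizer and loss are not given in the post.
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

To train it, the images from `tf.keras.datasets.mnist.load_data()` would typically be scaled to the range 0 to 1 (dividing by 255) before calling `model.fit(x_train, y_train, epochs=...)`, with the epoch count chosen by the reader.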

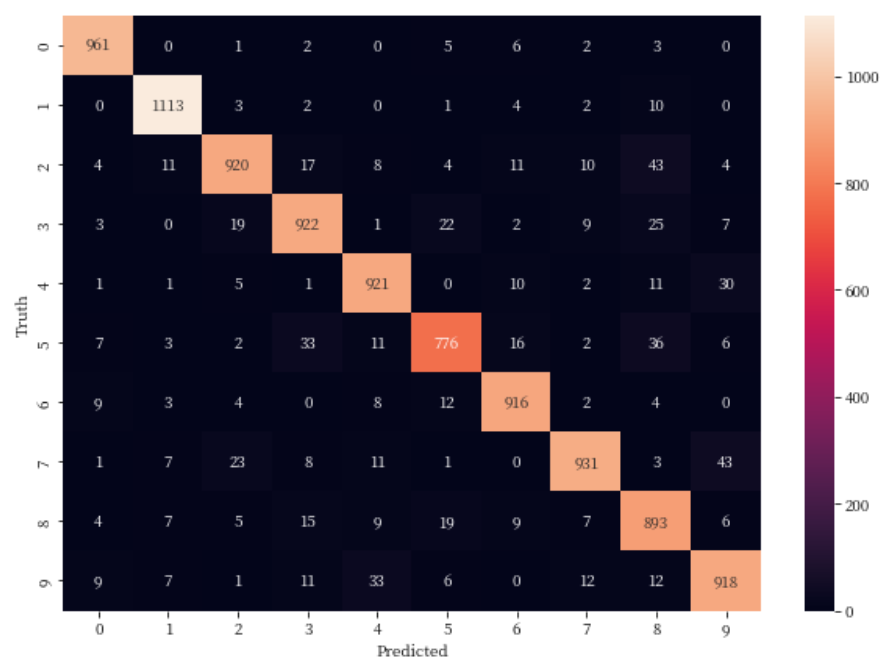

The confusion matrix, generated with the Seaborn library in Python, is given below.

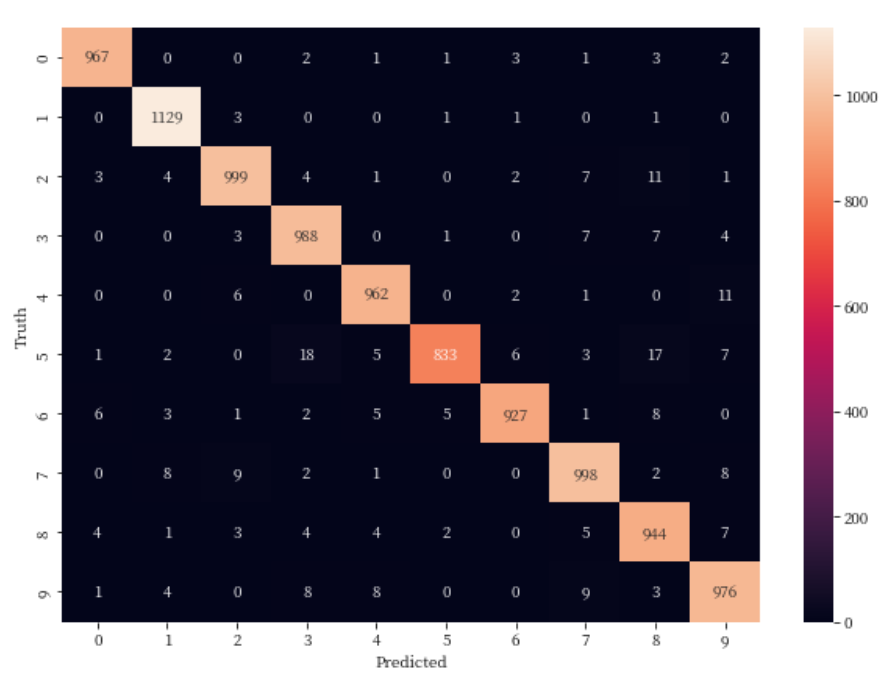

After observing this performance, the architecture was modified to have two dense layers, the first with ReLU activation and the second with sigmoid activation. Trained for the same number of epochs, this configuration improved the test accuracy on the handwritten digits to about 97%.

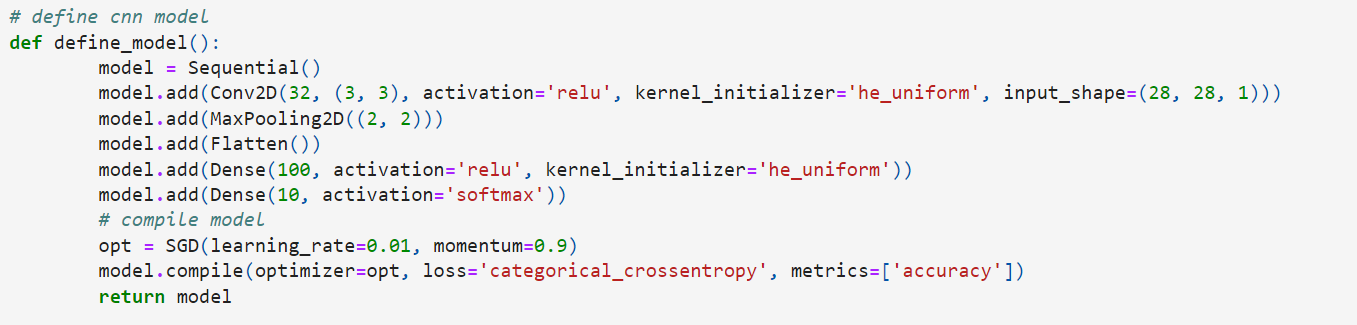
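The two-layer variant might look like the sketch below. The hidden layer width of 128 units, the optimizer, and the loss are assumptions chosen for illustration, and `build_double_dense` is a hypothetical name; only the ReLU and sigmoid activations come from the description above.

```python
import tensorflow as tf


def build_double_dense(hidden_units: int = 128, num_classes: int = 10) -> tf.keras.Model:
    """ReLU hidden layer followed by a sigmoid output layer."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28)),
        tf.keras.layers.Flatten(),
        # hidden_units is an assumed width; the post does not give one.
        tf.keras.layers.Dense(hidden_units, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="sigmoid"),
    ])
    # Assumed training setup: optimizer and loss are not given in the post.
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

The hidden layer lets the network learn non-linear combinations of pixels, which is a plausible reason for the jump in accuracy over a single dense layer.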

With accuracy improving, a deeper architecture, VGG 16, was tried on this dataset. This architecture has roughly 16 layers, as shown in the figure below.

This architecture uses stochastic gradient descent with a learning rate of 0.01 and the he_uniform kernel initializer. Categorical cross-entropy was used as the loss, and the model was trained for the same number of epochs as the architectures above. The test accuracy increased to about 99%. The cross-entropy loss decreased significantly, which allowed the test accuracy to increase further.
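The training configuration described here can be sketched on a small VGG-style network. This is deliberately not the full 16-layer model: it contains a single convolutional block, and the filter counts, the dense width, and the name `build_vgg_style` are assumptions. Only the SGD learning rate of 0.01, the `he_uniform` initializer, and the categorical cross-entropy loss are taken from the text.

```python
import tensorflow as tf


def build_vgg_style(num_classes: int = 10) -> tf.keras.Model:
    """One VGG-style block (two 3x3 convolutions + pooling) with a dense head."""
    layers = tf.keras.layers
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28, 1)),
        # Filter counts below are illustrative, not the original values.
        layers.Conv2D(32, 3, padding="same", activation="relu",
                      kernel_initializer="he_uniform"),
        layers.Conv2D(32, 3, padding="same", activation="relu",
                      kernel_initializer="he_uniform"),
        layers.MaxPooling2D(2),
        layers.Flatten(),
        layers.Dense(128, activation="relu", kernel_initializer="he_uniform"),
        layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer=tf.keras.optimizers.SGD(learning_rate=0.01),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Because the loss is categorical rather than sparse, the integer labels need to be one-hot encoded first, for example with `tf.keras.utils.to_categorical(y_train, 10)`, and the images need a trailing channel axis so that each has shape `(28, 28, 1)`.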

| S.No | Architecture | Testing Accuracy |
|---|---|---|
| 1 | Single Dense | 92% |
| 2 | Double Dense | 97% |
| 3 | VGG 16 | 99% |

Overall, while using the DSW notebook service for deep learning, the GPU felt dedicated and was faster than any other system I have worked with. The right pane of the DSW notebook shows real-time monitoring of CPU and GPU performance, which is impressive.
