Neural networks and deep learning underpin most of today's advanced intelligent applications. In this article, Dr. Sun Fei (Danfeng), a senior algorithm expert from Alibaba's Search Department, gives a brief overview of the evolution of neural networks and discusses the latest approaches in the field. The article is organized around five topics.

In Part 1 of this article, we gave a brief overview of neural networks and deep learning. In particular, we talked about perceptrons, feed-forward neural networks, and back-propagation. In this part, we take a closer look at deep learning, particularly convolutional neural networks (CNN) and recurrent neural networks (RNN).

After a second lull in the 1990s, neural networks returned to wide attention in 2006, this time with even more force than before. A landmark event in this revival was the publication of two papers on multi-layer neural networks (now called “deep learning”) by Hinton and Salakhutdinov, among others.

One of these papers addressed the problem of choosing initial weight values for a neural network. The solution, put simply, is to treat the input as x and train the network to output a decoded reconstruction of x; the weights learned this way give a better initialization point. The other paper proposed a method for quickly training a deep neural network. Other factors also contributed to the modern popularity of neural networks, notably the enormous growth in computing resources and in the availability of data. In the 1980s, it was very difficult to train a large-scale neural network because both data and computing resources were lacking.

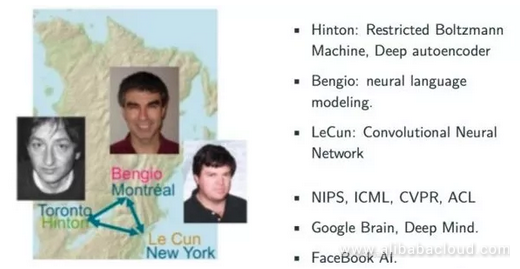

The rise of deep learning was driven by three leading figures, namely Hinton, Bengio, and LeCun. Hinton's main accomplishments were the Restricted Boltzmann Machine and the deep autoencoder. Bengio's major contribution was a series of breakthroughs in applying neural networks to language modeling. This was also the first field in which deep learning experienced a major breakthrough.

In 2013, neural language models were already capable of outperforming the most effective method at the time, the probabilistic model. LeCun's main accomplishment was his research on CNNs. Deep learning featured prominently at major conferences such as NIPS, ICML, CVPR, and ACL, where it attracted considerable attention. Industry groups such as Google Brain, DeepMind, and Facebook AI also emerged, all of which centered their research on deep learning.

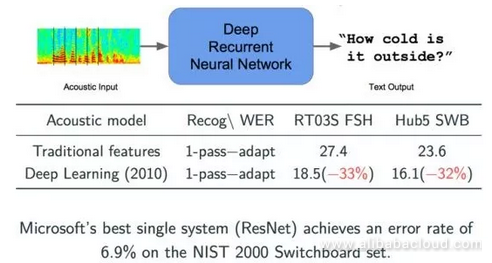

The first breakthrough after deep learning gained wide attention came in speech recognition. Before deep learning, speech recognition systems were based on predefined statistical models. In 2010, Microsoft used a deep neural network for speech recognition. We can see from the figure below that two error metrics both dropped by 2/3, a clear improvement. Based on the newest ResNet technology, Microsoft has since reduced this metric to 6.9%, with improvements coming year by year.

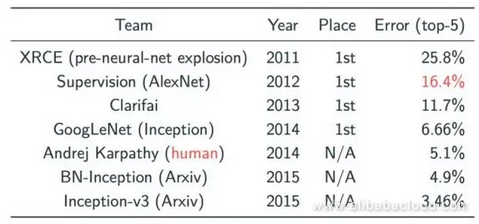

In image classification, CNNs achieved a major breakthrough on ImageNet in 2012. ImageNet evaluates image classification on a massive data set whose images are sorted into 1,000 classes. Before the application of deep learning, the best error rate for an image classification system was 25.8% (in 2011). In 2012, Hinton and his students used a CNN to bring it down by about 10 percentage points.

From the graph, we can see that since 2012 this error rate has dropped substantially each year, in every case through the use of CNN models.

These achievements owe in large part to the multi-layered structure of modern networks, which lets them learn features on their own and represent data through layers of abstraction. The abstracted features can be applied to a variety of tasks, which has contributed significantly to the current popularity of deep learning.

Next we will introduce two classic and common types of deep neural networks: the Convolutional Neural Network (CNN) and the Recurrent Neural Network (RNN).

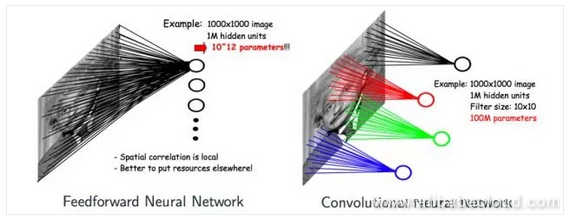

There are two core concepts in convolutional neural networks: convolution and pooling. At this point, some may ask why we don't simply use a feed-forward neural network rather than a CNN. Take a 1000x1000 image as an example. With 1 million nodes in the hidden layer, a fully connected feed-forward network would have 10^12 parameters. At that scale it is nearly impossible for the system to learn, since it would have to estimate an enormous number of parameters.

However, images have a useful property: their meaningful features tend to be local. If we use a CNN to classify images, then thanks to convolution, each node in the hidden layer only needs to connect to one local region of the image and scan its features. If each hidden node connects to a 10x10 patch, the total number of parameters is 100 million, and if the hidden nodes share the same local weights, the number of parameters decreases much further.
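The arithmetic above can be checked with a few lines of Python. This is only a sketch of the counts quoted in the text; the variable names are illustrative, and bias terms are ignored.

```python
# Parameter counts for a 1000x1000 input image, using the figures from the text.
inputs = 1000 * 1000        # one input value per pixel
hidden_nodes = 1_000_000    # hidden-layer size assumed in the text

# Fully connected: every hidden node is wired to every pixel.
fully_connected = inputs * hidden_nodes

# Locally connected: every hidden node sees only its own 10x10 patch.
patch = 10 * 10
locally_connected = hidden_nodes * patch

# Convolution: all hidden nodes reuse one shared 10x10 kernel.
shared_kernel = patch

print(fully_connected, locally_connected, shared_kernel)
```

With a single shared kernel the layer needs only 100 weights. Practical CNNs use many kernels per layer, so the real count is 100 times the number of kernels, which is still far below 100 million.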

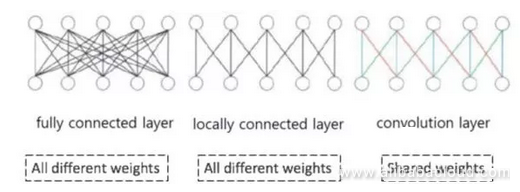

Looking at the image below, the difference between feed-forward neural networks and CNNs is striking. The models in the image are, from left to right, a fully connected network, a regular feed-forward network, a fully connected feed-forward network, and a CNN. We can see that the connection weights of the hidden-layer nodes in a CNN can be shared.

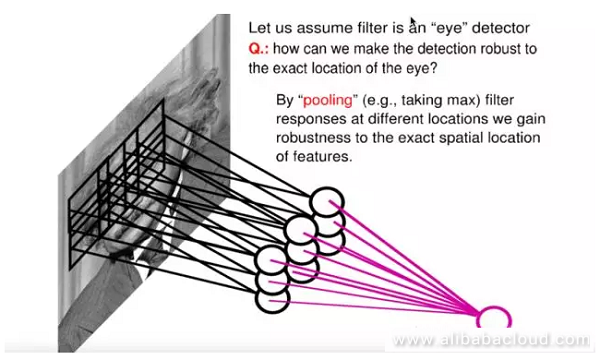
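To make weight sharing concrete, here is a minimal pure-Python sketch of a 2D convolution (strictly speaking, the cross-correlation that most CNN libraries compute). The function name `conv2d`, the toy image, and the kernel values are illustrative assumptions and do not come from the talk.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation on nested lists."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # The same kernel weights are reused at every position (i, j).
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical 2x2 kernel that responds to a dark-to-bright step from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)
```

Here four kernel weights produce all nine outputs, and the response is strongest in the column where the edge sits. A fully connected layer mapping the same 16 inputs to 9 outputs would need 144 separate weights.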

The other operation is pooling. Building on convolution, a CNN adds a further hidden layer in the middle, namely the pooling layer. The most common pooling method is max pooling, in which each node in the pooling layer outputs the largest value among its inputs. Because multiple kernels are pooled, we get multiple hidden-layer nodes in the middle.

What is the benefit? First, pooling further reduces the number of parameters. Second, it provides a certain amount of translation invariance. As shown in the image, if the activation of one of the nine nodes shifts to a neighboring position, the output of the node on the pooling layer remains unchanged.

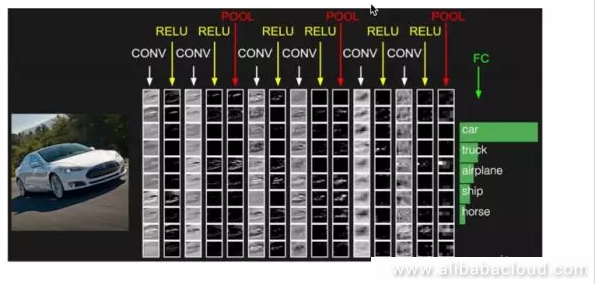
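The translation invariance described above can be illustrated with a small max pooling sketch in plain Python. The function name `max_pool2d` and the 3x3 toy inputs are assumptions chosen to mirror the nine-node example.

```python
def max_pool2d(feature_map, size):
    """Non-overlapping max pooling with a square window of side `size`."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Nine nodes with one strong activation in the center.
original = [
    [0, 0, 0],
    [0, 9, 0],
    [0, 0, 0],
]

# The same activation shifted one position to the right.
shifted = [
    [0, 0, 0],
    [0, 0, 9],
    [0, 0, 0],
]

print(max_pool2d(original, 3), max_pool2d(shifted, 3))
```

Both inputs pool to the same single value. The invariance is only local: it holds as long as the shifted activation stays inside the same pooling window.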

These two characteristics have made CNNs popular in image processing, where they have become a standard tool. The visualized car below is a good example of how a CNN is applied to image classification. After the original image of the car is fed into the CNN, the early convolution and ReLU activation layers pick up simple, rough features such as edges and points. We can see intuitively that the closer a layer is to the final output layer, the more its feature maps resemble the contours of a car. The process ends with a hidden-layer representation that is passed to a classification layer, which assigns the image a class such as car, truck, airplane, ship, or horse, as shown in the image.

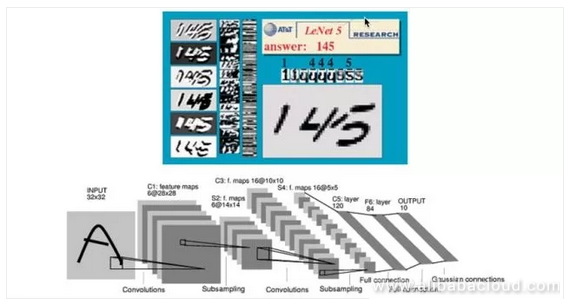

The image below shows a neural network used in the early days by LeCun and other researchers for handwriting recognition. This network was deployed in the US postal system in the 1990s. Interested readers can visit LeCun's website to see the dynamic process of handwriting recognition.

While CNNs have become incredibly popular in image recognition, they have also become instrumental in text processing over the past two years. For example, CNNs currently underlie some of the best-performing solutions for text classification. To determine the class of a piece of text, it is often enough to look for indicative keywords in the text, a task that is well suited to the CNN model.

CNNs have widespread real-world applications, for example in object detection, self-driving cars, segmentation, and neural style transfer. Neural style transfer is a fascinating application. For example, there is a popular app in the App Store called Prisma, which allows users to upload an image and convert it into a different style, such as the style of Van Gogh's Starry Night. This process relies heavily on CNNs.
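The keyword intuition can be sketched as a one-dimensional convolution over token vectors followed by max-over-time pooling. This is a toy illustration, not the method from the talk: the embeddings and the filter are hand-set, hypothetical values, whereas a real model would learn both.

```python
def conv1d_max_over_time(embeddings, filt):
    """Slide a filter over consecutive token vectors and keep the strongest response."""
    width = len(filt)
    responses = []
    for start in range(len(embeddings) - width + 1):
        score = 0.0
        for k in range(width):
            score += sum(w * x for w, x in zip(filt[k], embeddings[start + k]))
        responses.append(score)
    return max(responses)


# Hypothetical 2-dimensional embeddings; unknown words map to the zero vector.
EMBEDDING = {"not": [1.0, 0.0], "good": [0.0, 1.0]}


def embed(sentence):
    return [EMBEDDING.get(token, [0.0, 0.0]) for token in sentence.split()]


# Hand-set filter of width 2 that responds most strongly to the phrase "not good".
not_good_filter = [[1.0, 0.0], [0.0, 1.0]]

score_end = conv1d_max_over_time(embed("the movie was not good"), not_good_filter)
score_start = conv1d_max_over_time(embed("not good at all"), not_good_filter)
score_absent = conv1d_max_over_time(embed("the movie was good"), not_good_filter)
print(score_end, score_start, score_absent)
```

The filter fires equally strongly wherever the phrase occurs, and more weakly when only part of it is present. That position independence is what makes the convolution-plus-pooling pattern a natural fit for keyword-driven classification.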

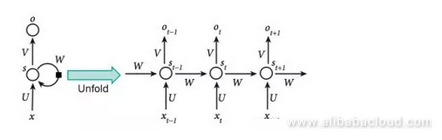

As for the foundational principles behind recurrent neural networks, we can see from the image below that the output of such a network depends not only on the current input x but also on the state of the hidden layer, which is updated according to the previous inputs. The unrolled image shows the entire process. The hidden state produced by the earlier input is S(t-1), which influences how the next input, X(t), is processed. The main advantage of the recurrent neural network model is that we can use it on sequential data such as text, language, and speech, where the current state of the data is influenced by previous states. This type of data is very difficult to handle with a feed-forward neural network.

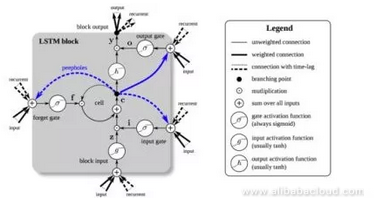
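The recurrence can be written down in a few lines. The sketch below assumes a scalar Elman-style update, S(t) = tanh(w_x * X(t) + w_s * S(t-1) + b). The tanh activation and the weight values are assumptions for illustration, since the talk does not specify them.

```python
import math


def rnn_step(x_t, s_prev, w_x, w_s, b):
    """One recurrent step: the new state mixes the current input with the previous state."""
    return math.tanh(w_x * x_t + w_s * s_prev + b)


def run_rnn(inputs, w_x=1.0, w_s=0.5, b=0.0):
    """Feed a sequence through the recurrence and return every hidden state."""
    s = 0.0  # initial hidden state
    states = []
    for x_t in inputs:
        s = rnn_step(x_t, s, w_x, w_s, b)
        states.append(s)
    return states


# Two sequences that differ only in their first element.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
print(states_a)
print(states_b)
```

Although the last two inputs are identical, the final hidden states differ because the first input is still carried forward through S(t-1). A feed-forward network that sees each input in isolation has no such memory.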

Speaking of recurrent neural networks, we would be remiss not to bring up the LSTM model we mentioned earlier. An LSTM is not actually a complete neural network. Simply put, it is an RNN node with a more elaborate internal structure. An LSTM has three gates, namely the input gate, the forget gate, and the output gate.

Each of these gates controls the data in a cell and determines whether that data should be written, forgotten, or output.

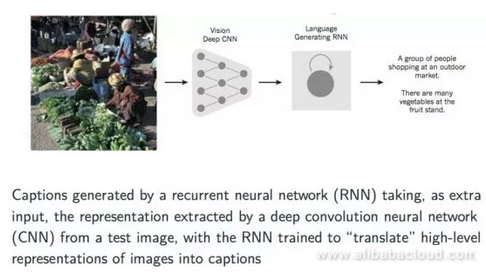
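A single LSTM step can be sketched as follows. The code assumes the widely used standard formulation with scalar inputs and states; the parameter layout (one `(w, u, b)` triple per gate) and all numeric values are hypothetical and chosen only to show the forget gate, the gate that decides what the cell discards, at work.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, params):
    """One scalar LSTM step; params maps each gate name to a (w, u, b) triple."""

    def pre(name):
        w, u, b = params[name]
        return w * x_t + u * h_prev + b

    i = sigmoid(pre("i"))    # input gate: how much new content to write
    f = sigmoid(pre("f"))    # forget gate: how much old cell content to keep
    o = sigmoid(pre("o"))    # output gate: how much of the cell to expose
    g = math.tanh(pre("g"))  # candidate content computed from the current input
    c = f * c_prev + i * g   # updated cell state
    h = o * math.tanh(c)     # updated hidden state
    return h, c


# Hypothetical parameters: input gate nearly closed, forget gate nearly open.
keep = {
    "i": (0.0, 0.0, -10.0),
    "f": (0.0, 0.0, 10.0),
    "o": (0.0, 0.0, 10.0),
    "g": (1.0, 0.0, 0.0),
}
# Same parameters, but with the forget gate nearly closed.
forget = dict(keep, f=(0.0, 0.0, -10.0))

_, c_keep = lstm_step(0.5, 0.0, 1.0, keep)
_, c_forget = lstm_step(0.5, 0.0, 1.0, forget)
print(c_keep, c_forget)
```

With the forget gate open, the cell carries its previous value of 1.0 forward almost untouched. With the gate closed, the stored value is wiped to nearly zero. In a trained network the gates are computed from the data rather than fixed by hand.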

Finally, let's look at a cross-discipline application of neural networks that is gaining widespread acceptance: converting an image into a text description or a title for it. The implementation works in two stages. First, a CNN extracts information from the image and produces a vector representation. That vector is then passed as input to an already trained recurrent neural network, which generates the description of the image.

In this article, we talked about the evolution of neural networks and introduced several basic concepts and approaches in this field. The above article is based on a speech delivered by Dr. Sun Fei at the annual Alibaba Cloud Computing Conference (speech in Chinese). He is currently involved in researching recommendation systems and methods of text generation.

Read similar articles and learn more about Alibaba Cloud's products and solutions at www.alibabacloud.com/blog.
